Hyper-YOLO: When Visual Object Detection Meets Hypergraph Computation

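Overview:

Year: 2025

Journal: TPAMI

Authors: Yifan Feng, Jiangang Huang, Shaoyi Du, Shihui Ying, Jun-Hai Yong

Abstract:

① Hyper-YOLO introduces a new object detection method that uses hypergraph computation to capture complex high-order correlations among visual features.

② To address the limitations of the Neck in traditional YOLO models, it proposes the HGC-SCS framework.

Contributions:

① The HGC-SCS framework: combines hypergraph computation with high-order information modeling to obtain stronger feature representations.

② HyperC2Net (an improved YOLO Neck): removes the traditional YOLO restriction of fusing only adjacent-level features, enabling more efficient information propagation.

③ MANet (an improved Backbone): combines different types of convolution (standard convolution, depthwise separable convolution, and the C2f module) to improve feature extraction.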

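Method:

HGC-SCS framework:

HGC-SCS (Hypergraph Computation Empowered Semantic Collecting and Scattering) is the core framework of Hyper-YOLO. It has three stages: semantic collecting, hypergraph computation, and semantic scattering.

Process:

① A hypergraph construction function estimates the potential high-order correlations among feature points in the semantic space.

② Spectral or spatial hypergraph convolution propagates high-order information among the feature points through the hypergraph structure, generating the high-order features.

③ The high-order relational information is integrated into the collected features, producing a hybrid feature map that fuses the two.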

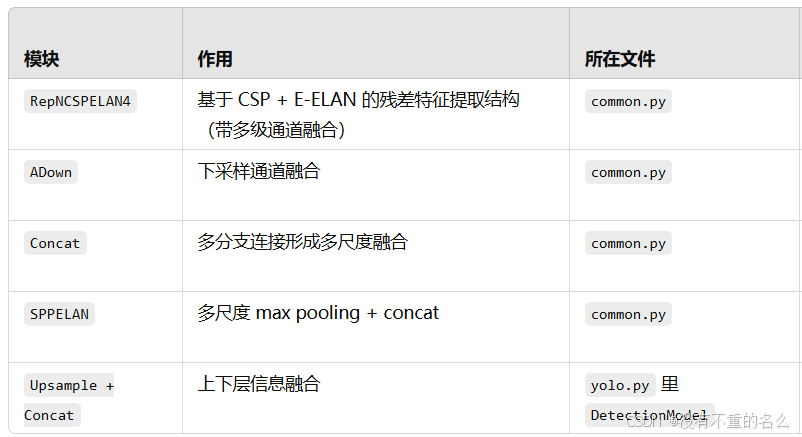

Semantic collecting:

Collect the features extracted by the Backbone across multiple levels (B1, B2, B3, B4, B5).

Concatenate them to form the mixed feature representation.
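The collecting step can be sketched in plain Python. This is only an illustration under stated assumptions: feature maps are nested lists of shape [C][H][W], `resize_nearest`, `collect`, and `make_level` are hypothetical helpers, nearest-neighbor resizing stands in for whatever resampling the real model uses, and aligning every level to the B3 resolution is a choice made for this sketch.

```python
def resize_nearest(fmap, out_h, out_w):
    """Resize a [C][H][W] nested-list feature map by nearest-neighbor sampling."""
    in_h, in_w = len(fmap[0]), len(fmap[0][0])
    return [
        [[ch[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
         for r in range(out_h)]
        for ch in fmap
    ]


def collect(features, out_h, out_w):
    """Bring every level to (out_h, out_w) and concatenate along the channel axis."""
    mixed = []
    for fmap in features:
        mixed.extend(resize_nearest(fmap, out_h, out_w))
    return mixed


def make_level(channels, size, value):
    """Hypothetical constant-valued feature map, used only for this demo."""
    return [[[value] * size for _ in range(size)] for _ in range(channels)]


# Five toy levels B1..B5: resolution halves while the channel count grows.
specs = [(1, 16), (2, 8), (3, 4), (4, 2), (5, 1)]
levels = [make_level(c, s, float(i)) for i, (c, s) in enumerate(specs, start=1)]

x_mixed = collect(levels, 4, 4)  # align everything to the (assumed) B3 resolution
print(len(x_mixed), len(x_mixed[0]), len(x_mixed[0][0]))  # 15 4 4
```

The channel count of the mixed representation is simply the sum of the per-level channel counts (1 + 2 + 3 + 4 + 5 = 15 in this toy setup).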

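Hypergraph computation:

① Generate the hypergraph structure through distance-based hypergraph construction.

The process starts with a channel-wise concatenation of the five base features, which synthesizes the cross-level visual features.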

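Hyperedge construction:

Let V denote the set of all feature points. Using a distance threshold ε, an ε-ball is built around each feature point, so every vertex v has one corresponding hyperedge. This ε-ball serves as the hyperedge and contains all feature points whose distance to the center point is within the threshold.

The neighbor set of a feature point v consists of all points whose distance to v is less than ε (i.e., highly similar points); all of them are included in the hyperedge.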
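The ε-ball construction described above can be sketched as follows. This is a minimal illustration, not the repository's implementation: `build_epsilon_ball_hyperedges` and `incidence_matrix` are hypothetical names, points are plain tuples, and Euclidean distance is assumed as the distance measure.

```python
import math


def build_epsilon_ball_hyperedges(points, eps):
    """Return one hyperedge per vertex: indices of all points closer than eps."""
    hyperedges = []
    for center in points:
        ball = [j for j, p in enumerate(points) if math.dist(center, p) < eps]
        hyperedges.append(ball)
    return hyperedges


def incidence_matrix(num_vertices, hyperedges):
    """H[v][e] = 1 if vertex v belongs to hyperedge e, else 0."""
    H = [[0] * len(hyperedges) for _ in range(num_vertices)]
    for e, members in enumerate(hyperedges):
        for v in members:
            H[v][e] = 1
    return H


# Three nearby points and one far-away point.
points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.2), (5.0, 5.0)]
edges = build_epsilon_ball_hyperedges(points, eps=0.5)
H = incidence_matrix(len(points), edges)
print(edges)  # [[0, 1, 2], [0, 1, 2], [0, 1, 2], [3]]
print(H)
```

Because every vertex is the center of its own ball, each hyperedge always contains at least its center, and the incidence matrix is square (one column per vertex).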

② Propagate high-order information with hypergraph convolution to generate the enhanced features.

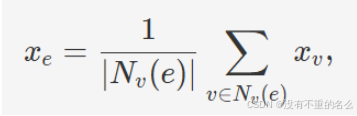

Spatial-domain hypergraph convolution:

Matrix expression of the two-stage hypergraph message passing:
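The expression itself is not reproduced in these notes. As a hedged sketch, the code below assumes a common mean-aggregation form with a residual connection, X + D_v^{-1} H D_e^{-1} H^T X, where H is the incidence matrix and D_v, D_e are the vertex and hyperedge degree matrices. Any learnable projection is omitted, and the function name is hypothetical.

```python
def hypergraph_message_passing(X, H):
    """Two-stage mean aggregation: vertices -> hyperedges -> vertices, plus residual.

    X: list of per-vertex feature vectors; H: incidence matrix with H[v][e] in {0, 1}.
    Assumes every vertex lies in at least one hyperedge and no hyperedge is empty.
    """
    n_v, n_e, dim = len(X), len(H[0]), len(X[0])

    # Stage 1: each hyperedge averages the features of its member vertices.
    edge_feats = []
    for e in range(n_e):
        members = [v for v in range(n_v) if H[v][e]]
        edge_feats.append([sum(X[v][d] for v in members) / len(members)
                           for d in range(dim)])

    # Stage 2: each vertex averages its incident hyperedges, then adds itself back.
    out = []
    for v in range(n_v):
        incident = [e for e in range(n_e) if H[v][e]]
        agg = [sum(edge_feats[e][d] for e in incident) / len(incident)
               for d in range(dim)]
        out.append([X[v][d] + agg[d] for d in range(dim)])
    return out


# Three vertices, two hyperedges: e0 = {v0, v1}, e1 = {v1, v2}.
H = [[1, 0],
     [1, 1],
     [0, 1]]
X = [[0.0], [2.0], [4.0]]
result = hypergraph_message_passing(X, H)
print(result)  # [[1.0], [4.0], [7.0]]
```

In the toy example, v1 belongs to both hyperedges and therefore receives information from v0 and v2 in a single pass, which is the high-order propagation the notes describe.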

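Semantic scattering:

The grid features are decomposed into a set of feature points in the semantic space, and hyperedges are built from their distances, so that high-order messages can pass between vertices at different positions in the point set. The hypergraph features are then fused with the Backbone features of the different levels to obtain the final detection features.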
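A rough sketch of the scattering step, under stated assumptions: the high-order features are resized to each backbone level's resolution and concatenated with that level along the channel axis. The helper names, the nearest-neighbor resizing, and the use of plain concatenation as the fusion are all assumptions made for illustration; the actual fusion in the model may differ.

```python
def resize_nearest(fmap, out_h, out_w):
    """Resize a [C][H][W] nested-list feature map by nearest-neighbor sampling."""
    in_h, in_w = len(fmap[0]), len(fmap[0][0])
    return [[[ch[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
             for r in range(out_h)] for ch in fmap]


def scatter(x_hyper, backbone_levels):
    """For each level, resize x_hyper to that level's size and append its channels."""
    fused = []
    for fmap in backbone_levels:
        h, w = len(fmap[0]), len(fmap[0][0])
        fused.append(fmap + resize_nearest(x_hyper, h, w))
    return fused


def constant_map(channels, size, value):
    """Hypothetical constant-valued feature map, used only for this demo."""
    return [[[value] * size for _ in range(size)] for _ in range(channels)]


x_hyper = constant_map(2, 4, 9.0)       # toy high-order features: 2 channels at 4x4
levels = [constant_map(3, 8, 1.0),      # a higher-resolution level
          constant_map(4, 4, 2.0),      # same resolution as x_hyper
          constant_map(5, 2, 3.0)]      # a lower-resolution level

shapes = [(len(f), len(f[0]), len(f[0][0])) for f in scatter(x_hyper, levels)]
print(shapes)  # [(5, 8, 8), (6, 4, 4), (7, 2, 2)]
```

Each level keeps its own resolution and gains the channels of the high-order features, so every detection scale sees the cross-level information.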

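MANet:

MANet is the improved Backbone module of Hyper-YOLO; its goal is to strengthen feature extraction.

Features:

Combines a 1×1 channel-recalibration convolution, depthwise separable convolution (DSConv), and the C2f module.

Mixes different types of convolution to improve information flow.

Uses skip connections to keep gradient flow stable.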
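To see why a depthwise separable branch is cheap next to a standard convolution, the weight counts can be compared directly. The sketch below uses the standard formulas (bias and normalization parameters ignored); the function names and the 128-channel example are chosen only for illustration and are not taken from the MANet code.

```python
def conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def dsconv_params(c_in, c_out, k):
    """Depthwise k x k conv (one filter per input channel) plus a 1x1 pointwise conv."""
    return k * k * c_in + c_in * c_out


c_in, c_out, k = 128, 128, 3
standard = conv_params(c_in, c_out, k)
separable = dsconv_params(c_in, c_out, k)
print(standard, separable, round(standard / separable, 2))  # 147456 17536 8.41
```

For a 3×3 kernel with 128 input and output channels, the separable variant needs roughly one eighth of the weights, which leaves room to run several convolution types in parallel.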

Flow:

The branch outputs are concatenated to produce the final feature:

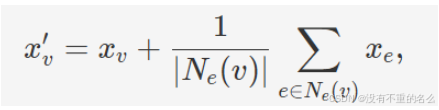

Corresponding code:

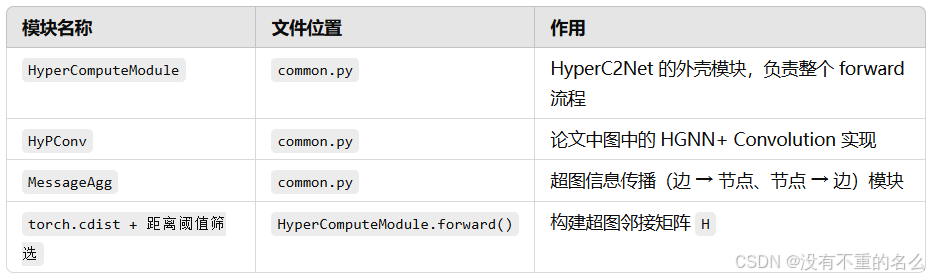

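HyperC2Net:

HyperC2Net handles cross-level and cross-position information propagation.

Main features:

Uses hypergraph construction to model high-order feature relations.

Propagates information in the semantic space through hypergraph convolution.

Uses bottom-up information fusion so that the feature information is more complete.

Hypergraph-based cross-level and cross-position representation network:

Here, || denotes the matrix concatenation operation, applied together with a fusion function.

Corresponding code: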

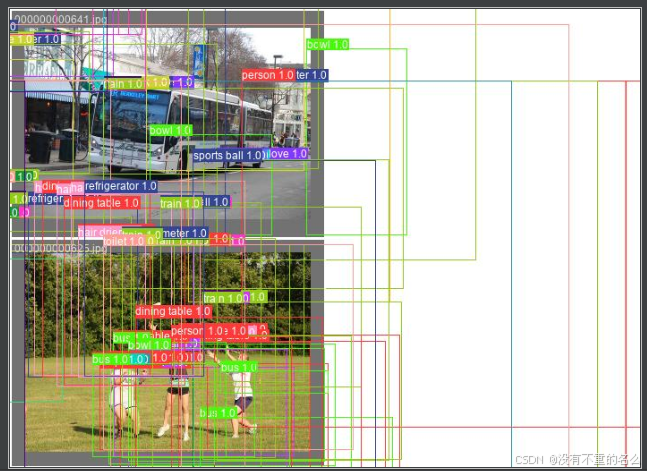

Code debugging:

Network structure:

The original authors provide YAML files for other YOLO networks; the configuration based on yolov9.yaml is used here.

Loading the model:

model = DetectMultiBackend(weights, device=device, dnn=dnn, data=data, fp16=half)

model = attempt_load(weights if isinstance(weights, list) else w, device=device, inplace=True, fuse=fuse)

Importing the model:

def attempt_load(weights, device=None, inplace=True, fuse=True):

    # Loads an ensemble of models weights=[a,b,c] or a single model weights=[a] or weights=a

    from models.yolo import Detect, Model

Overall network architecture:

DetectionModel(

(model): Sequential(

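# backbone

# input layer

# ***Silence() is an identity layer that returns its input unchanged, so that later layers (e.g. layer 26) can take the raw image from index 0***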

(0): Silence()

(1): Conv(

(conv): Conv2d(3, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(2): Conv(

(conv): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

# main backbone

# ***RepNCSPELAN4: an improved CSP block that uses re-parameterization***

(3): RepNCSPELAN4(

(cv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv3): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv4): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(4): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(5): RepNCSPELAN4(

(cv1): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv3): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv4): Conv(

(conv): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(6): Conv(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(7): RepNCSPELAN4(

(cv1): Conv(

(conv): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv3): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv4): Conv(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(8): Conv(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(9): RepNCSPELAN4(

(cv1): Conv(

(conv): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv3): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv4): Conv(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

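# neck (feature fusion layers)

# ***SPPELAN is an SPP variant that applies three 5×5 max-pooling layers and concatenates their outputs, enlarging the receptive field***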

(10): SPPELAN(

(cv1): Conv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): SP(

(m): MaxPool2d(kernel_size=5, stride=1, padding=2, dilation=1, ceil_mode=False)

)

(cv3): SP(

(m): MaxPool2d(kernel_size=5, stride=1, padding=2, dilation=1, ceil_mode=False)

)

(cv4): SP(

(m): MaxPool2d(kernel_size=5, stride=1, padding=2, dilation=1, ceil_mode=False)

)

(cv5): Conv(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(11): Upsample(scale_factor=2.0, mode=nearest)

(12): Concat()

# FPN structure

(13): RepNCSPELAN4(

(cv1): Conv(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv3): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv4): Conv(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(14): Upsample(scale_factor=2.0, mode=nearest)

(15): Concat()

(16): RepNCSPELAN4(

(cv1): Conv(

(conv): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv3): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv4): Conv(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(17): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(18): Concat()

(19): RepNCSPELAN4(

(cv1): Conv(

(conv): Conv2d(768, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv3): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv4): Conv(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(20): Conv(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(21): Concat()

(22): RepNCSPELAN4(

(cv1): Conv(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv3): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv4): Conv(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(23): CBLinear(

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1))

)

(24): CBLinear(

(conv): Conv2d(512, 768, kernel_size=(1, 1), stride=(1, 1))

)

(25): CBLinear(

(conv): Conv2d(512, 1280, kernel_size=(1, 1), stride=(1, 1))

)

(26): Conv(

(conv): Conv2d(3, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(27): Conv(

(conv): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(28): RepNCSPELAN4(

(cv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv3): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv4): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(29): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(30): CBFuse()

(31): RepNCSPELAN4(

(cv1): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv3): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv4): Conv(

(conv): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(32): Conv(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(33): CBFuse()

(34): RepNCSPELAN4(

(cv1): Conv(

(conv): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv3): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv4): Conv(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(35): Conv(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(36): CBFuse()

(37): RepNCSPELAN4(

(cv1): Conv(

(conv): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv3): Sequential(

(0): RepNCSP(

(cv1): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv2): Conv(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(cv3): Conv(

(conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): ReLU()

(conv1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

(conv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): Identity()

)

)

(cv2): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

)

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

(cv4): Conv(

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

)

# Detection head

# ***Key innovation***

(38): DualDDetect(

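# ***cv2: box-regression branch for the first group of input feature maps; uses grouped convolutions and outputs 64 = 4 × reg_max channels***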

(cv2): ModuleList(

(0): Sequential(

(0): Conv(

(conv): Conv2d(512, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=4, bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(2): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), groups=4)

)

(1): Sequential(

(0): Conv(

(conv): Conv2d(512, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=4, bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(2): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), groups=4)

)

(2): Sequential(

(0): Conv(

(conv): Conv2d(512, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=4, bias=False)

(bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(2): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), groups=4)

)

)

# ***cv3: classification branch for the first group of input feature maps; outputs 80 channels, one per class***

(cv3): ModuleList(

(0): Sequential(

(0): Conv(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(1): Conv(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(2): Conv2d(512, 80, kernel_size=(1, 1), stride=(1, 1))

)

(1): Sequential(

(0): Conv(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(1): Conv(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(2): Conv2d(512, 80, kernel_size=(1, 1), stride=(1, 1))

)

(2): Sequential(

(0): Conv(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(1): Conv(

(conv): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(2): Conv2d(512, 80, kernel_size=(1, 1), stride=(1, 1))

)

)

# ***cv4: box-regression branch for the second group of input feature maps; same layout as cv2***

(cv4): ModuleList(

(0): Sequential(

(0): Conv(

(conv): Conv2d(256, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=4, bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(2): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), groups=4)

)

(1): Sequential(

(0): Conv(

(conv): Conv2d(512, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=4, bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(2): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), groups=4)

)

(2): Sequential(

(0): Conv(

(conv): Conv2d(512, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=4, bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(2): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), groups=4)

)

)

# ***cv5: classification branch for the second group of input feature maps; same layout as cv3***

(cv5): ModuleList(

(0): Sequential(

(0): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(2): Conv2d(256, 80, kernel_size=(1, 1), stride=(1, 1))

)

(1): Sequential(

(0): Conv(

(conv): Conv2d(512, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(2): Conv2d(256, 80, kernel_size=(1, 1), stride=(1, 1))

)

(2): Sequential(

(0): Conv(

(conv): Conv2d(512, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(1): Conv(

(conv): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): ReLU()

)

(2): Conv2d(256, 80, kernel_size=(1, 1), stride=(1, 1))

)

)

# ***DFL is used for bounding-box regression to improve localization accuracy***

(dfl): DFL(

(conv): Conv2d(16, 1, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

(dfl2): DFL(

(conv): Conv2d(16, 1, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

)

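)

Module innovations:

parse_model() reads the module names (as strings) from the YAML file:

self.model, self.save = parse_model(deepcopy(self.yaml), ch=[ch])

Each name is then passed to eval(), which evaluates the string as a Python expression and returns its value, that is, the class of the same name defined in Python:

m = eval(m) if isinstance(m, str) else m # eval strings

Finally, the corresponding neural-network layer modules are built.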
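As a rough illustration of this lookup, the sketch below resolves module names to classes and chains the channel counts from one layer to the next. It is a simplified stand-in, not the repository's parse_model(): `Conv` and `RepNCSPELAN4` are empty placeholder classes, and `build_layers`, `NAMESPACE` and the `specs` rows are hypothetical names chosen for this example. The real function also has to handle the `from` indices of the YAML file and other details.

```python
# Placeholder classes standing in for the real layers (assumption: only the
# channel arguments matter for this illustration).
class Conv:
    def __init__(self, c1, c2, k=1, s=1):
        self.c1, self.c2, self.k, self.s = c1, c2, k, s


class RepNCSPELAN4:
    def __init__(self, c1, c2, c3, c4, c5=1):
        self.c1, self.c2 = c1, c2


# Names that eval() is allowed to resolve (hypothetical registry).
NAMESPACE = {"Conv": Conv, "RepNCSPELAN4": RepNCSPELAN4}


def build_layers(layer_specs, ch_in, namespace):
    """Turn (module_name, args) rows into layer objects, tracking channels."""
    layers, c1 = [], ch_in
    for name, args in layer_specs:
        # Same idea as `m = eval(m) if isinstance(m, str) else m`:
        # the string is evaluated and yields the class with that name.
        m = eval(name, dict(namespace)) if isinstance(name, str) else name
        layer = m(c1, *args)  # the input channel count is prepended
        layers.append(layer)
        c1 = layer.c2         # the next layer consumes this layer's output
    return layers


specs = [
    ("Conv", [64, 3, 2]),
    ("Conv", [128, 3, 2]),
    ("RepNCSPELAN4", [256, 128, 64, 1]),
]
layers = build_layers(specs, ch_in=3, namespace=NAMESPACE)
print([type(layer).__name__ for layer in layers])
print([(layer.c1, layer.c2) for layer in layers])
```

Passing an explicit namespace to eval() is a choice made for this sketch so that only known layer names can be resolved; the channel pairs it prints follow the same 3 → 64 → 128 → 256 progression as layers (26) to (28) in the printout.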

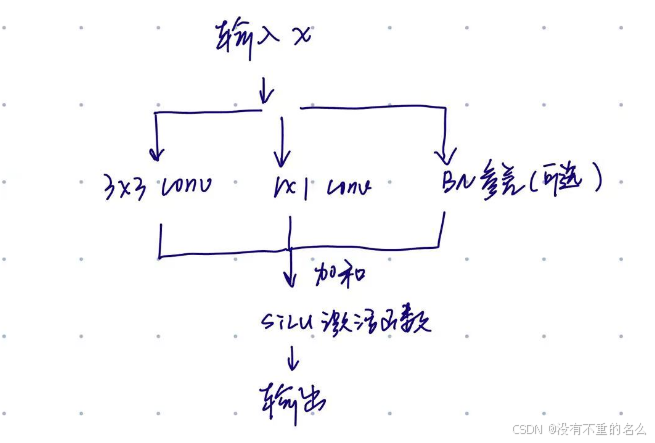

Rep:

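RepConvN:

RepConvN is a re-parameterizable convolution block: during training it uses several parallel convolution branches (plus an optional identity/residual branch), and at inference they are fused into a single convolution.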
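The equivalence behind this fusion can be checked numerically. The following is a minimal single-channel sketch in plain Python, assuming inference-mode BatchNorm with fixed running statistics; the helper names (`conv2d`, `batchnorm`, `fuse_bn`, `pad_1x1_to_3x3`) and all numeric values are made up for the illustration and are not part of the Hyper-YOLO code. It folds each BatchNorm into its kernel, pads the 1x1 kernel to 3x3, and compares the fused convolution against the two-branch sum.

```python
import math
import random


def conv2d(x, k, bias=0.0):
    """Single-channel 2D cross-correlation with zero padding (same output size)."""
    n, p = len(k), len(k) // 2
    h, w = len(x), len(x[0])
    out = [[bias] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for a in range(n):
                for b in range(n):
                    ii, jj = i + a - p, j + b - p
                    if 0 <= ii < h and 0 <= jj < w:
                        out[i][j] += k[a][b] * x[ii][jj]
    return out


def batchnorm(y, mean, var, gamma, beta, eps=1e-5):
    """Inference-mode BatchNorm using fixed running statistics."""
    std = math.sqrt(var + eps)
    return [[gamma * (v - mean) / std + beta for v in row] for row in y]


def fuse_bn(k, mean, var, gamma, beta, eps=1e-5):
    """Fold BatchNorm into a scaled kernel and a bias term."""
    t = gamma / math.sqrt(var + eps)
    return [[v * t for v in row] for row in k], beta - mean * t


def pad_1x1_to_3x3(k1):
    """Place the 1x1 weight at the center of an all-zero 3x3 kernel."""
    return [[0.0, 0.0, 0.0], [0.0, k1[0][0], 0.0], [0.0, 0.0, 0.0]]


random.seed(0)
x = [[random.uniform(-1, 1) for _ in range(5)] for _ in range(5)]
k3 = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
k1 = [[random.uniform(-1, 1)]]
bn3 = dict(mean=0.2, var=1.5, gamma=0.8, beta=-0.1)   # illustrative values
bn1 = dict(mean=-0.3, var=0.7, gamma=1.2, beta=0.4)   # illustrative values

# Training-time form: two conv+BN branches, summed before the activation.
y3 = batchnorm(conv2d(x, k3), **bn3)
y1 = batchnorm(conv2d(x, k1), **bn1)
multi_branch = [[a + b for a, b in zip(r3, r1)] for r3, r1 in zip(y3, y1)]

# Inference-time form: one 3x3 convolution with the merged kernel and bias.
f3, b3 = fuse_bn(k3, **bn3)
f1, b1 = fuse_bn(k1, **bn1)
p1 = pad_1x1_to_3x3(f1)
kernel = [[f3[a][b] + p1[a][b] for b in range(3)] for a in range(3)]
single = conv2d(x, kernel, bias=b3 + b1)

max_diff = max(abs(a - b) for rm, rs in zip(multi_branch, single) for a, b in zip(rm, rs))
print(max_diff < 1e-9)
```

Because convolution and inference-mode BatchNorm are both linear, the two forms agree up to floating-point rounding, which is why the extra branches can be dropped at inference.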

# ***Re-parameterizable convolution block***

class RepConvN(nn.Module):

default_act = nn.SiLU() # default activation

def __init__(self, c1, c2, k=3, s=1, p=1, g=1, d=1, act=True, bn=False, deploy=False):

super().__init__()

assert k == 3 and p == 1

self.g = g

self.c1 = c1

self.c2 = c2

self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

self.bn = None # if self.bn were assigned, an additional identity branch would be added

self.conv1 = Conv(c1, c2, k, s, p=p, g=g, act=False) # 3x3 convolution branch

self.conv2 = Conv(c1, c2, 1, s, p=(p - k // 2), g=g, act=False) # 1x1 convolution branch (adds center-pixel features)

...

def forward(self, x):

"""Forward process"""

id_out = 0 if self.bn is None else self.bn(x)

# sum the branches, apply the activation, then return

return self.act(self.conv1(x) + self.conv2(x) + id_out)

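# ***Structural re-parameterization: convert the parameters of the multiple branches into one equivalent convolution kernel***

def get_equivalent_kernel_bias(self):

# _fuse_bn_tensor() folds the BatchNorm parameters into the weights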

kernel3x3, bias3x3 = self._fuse_bn_tensor(self.conv1)

kernel1x1, bias1x1 = self._fuse_bn_tensor(self.conv2)

kernelid, biasid = self._fuse_bn_tensor(self.bn)

return kernel3x3 + self._pad_1x1_to_3x3_tensor(kernel1x1) + kernelid, bias3x3 + bias1x1 + biasid

def _avg_to_3x3_tensor(self, avgp):

channels = self.c1

groups = self.g

kernel_size = avgp.kernel_size

input_dim = channels // groups

k = torch.zeros((channels, input_dim, kernel_size, kernel_size))

k[np.arange(channels), np.tile(np.arange(input_dim), groups), :, :] = 1.0 / kernel_size ** 2

return k

def _pad_1x1_to_3x3_tensor(self, kernel1x1):

if kernel1x1 is None:

return 0

else:

return torch.nn.functional.pad(kernel1x1, [1, 1, 1, 1])

def _fuse_bn_tensor(self, branch):

if branch is None:

return 0, 0

if isinstance(branch, Conv):

kernel = branch.conv.weight

running_mean = branch.bn.running_mean

running_var = branch.bn.running_var

gamma = branch.bn.weight

beta = branch.bn.bias

eps = branch.bn.eps

elif isinstance(branch, nn.BatchNorm2d):

if not hasattr(self, 'id_tensor'):

input_dim = self.c1 // self.g

kernel_value = np.zeros((self.c1, input_dim, 3, 3), dtype=np.float32)

for i in range(self.c1):

kernel_value[i, i % input_dim, 1, 1] = 1

self.id_tensor = torch.from_numpy(kernel_value).to(branch.weight.device)

kernel = self.id_tensor

running_mean = branch.running_mean

running_var = branch.running_var

gamma = branch.weight

beta = branch.bias

eps = branch.eps

std = (running_var + eps).sqrt()

t = (gamma / std).reshape(-1, 1, 1, 1)

return kernel * t, beta - running_mean * gamma / std

# fuse the convolutions into one equivalent nn.Conv2d and delete the modules that are no longer needed

def fuse_convs(self):

if hasattr(self, 'conv'):

return

kernel, bias = self.get_equivalent_kernel_bias()

self.conv = nn.Conv2d(in_channels=self.conv1.conv.in_channels,

out_channels=self.conv1.conv.out_channels,

kernel_size=self.conv1.conv.kernel_size,

stride=self.conv1.conv.stride,

padding=self.conv1.conv.padding,

dilation=self.conv1.conv.dilation,

groups=self.conv1.conv.groups,

bias=True).requires_grad_(False)

self.conv.weight.data = kernel

self.conv.bias.data = bias

for para in self.parameters():

para.detach_()

self.__delattr__('conv1')

self.__delattr__('conv2')

if hasattr(self, 'nm'):

self.__delattr__('nm')

if hasattr(self, 'bn'):

self.__delattr__('bn')

if hasattr(self, 'id_tensor'):

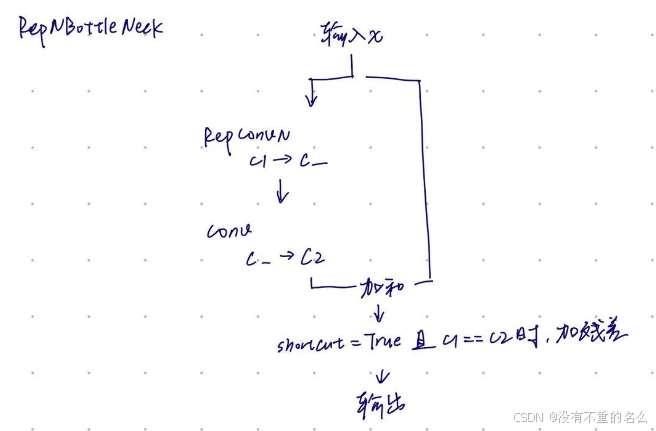

self.__delattr__('id_tensor')RepNCSP:

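Its prototype is the C3 module of YOLOv5. It combines CSP with the re-parameterizable convolution (RepConvN), replacing the ordinary bottleneck with the structurally re-parameterized RepNBottleneck to speed up inference; the computation cost drops while the advantages of dense connections are kept.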

Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): SiLU(inplace=True)

(conv): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(cv2): Conv(

(conv): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(act): SiLU(inplace=True)

)

)

)

class RepNCSP(nn.Module):

# CSP Bottleneck with 3 convolutions

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansion

super().__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c1, c_, 1, 1)

self.cv3 = Conv(2 * c_, c2, 1) # optional act=FReLU(c2)

self.m = nn.Sequential(*(RepNBottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)))

def forward(self, x):

return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), 1))

RepNCSP(

(cv1): Conv(

(conv): Conv2d(32, 16, kernel_size=(1, 1), stride=(1, 1))

(act): SiLU(inplace=True)

)

(cv2): Conv(

(conv): Conv2d(32, 16, kernel_size=(1, 1), stride=(1, 1))

(act): SiLU(inplace=True)

)

(cv3): Conv(

(conv): Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1))

(act): SiLU(inplace=True)

)

(m): Sequential(

(0): RepNBottleneck(

(cv1): RepConvN(

(act): SiLU(inplace=True)

(conv): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

)

(cv2): Conv(

(conv): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(act): SiLU(inplace=True)

)

)

)

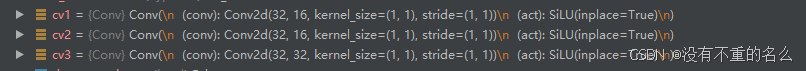

)RepNCSPELAN4:

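Based on ELAN and CSP-ELAN from YOLOv7: on top of ELAN's multi-branch, multi-layer aggregation, it adds structural re-parameterization and channel splitting.

# ***CSP-ELAN combined with re-parameterizable convolution blocks***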

class RepNCSPELAN4(nn.Module):

# csp-elan

def __init__(self, c1, c2, c3, c4, c5=1): # ch_in, ch_out, number, shortcut, groups, expansion

super().__init__()

self.c = c3 // 2

self.cv1 = Conv(c1, c3, 1, 1)

self.cv2 = nn.Sequential(RepNCSP(c3 // 2, c4, c5), Conv(c4, c4, 3, 1)) # main path 1

self.cv3 = nn.Sequential(RepNCSP(c4, c4, c5), Conv(c4, c4, 3, 1)) # main path 2

# concatenate the output of cv1 (c3 channels), of cv2 (c4 channels) and of cv3 (c4 channels)

self.cv4 = Conv(c3 + (2 * c4), c2, 1, 1) # 1x1 convolution that fuses them into c2 output channels

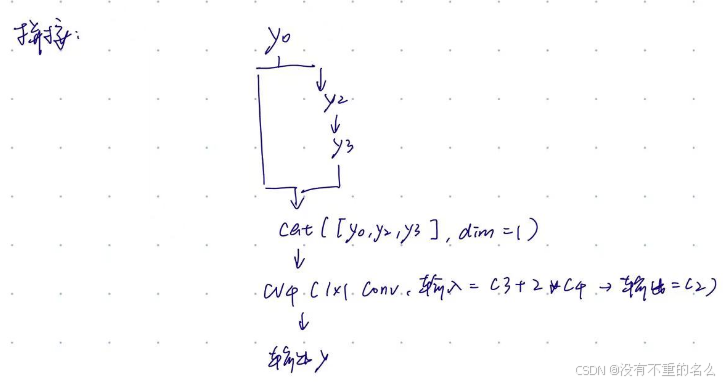

Key part:

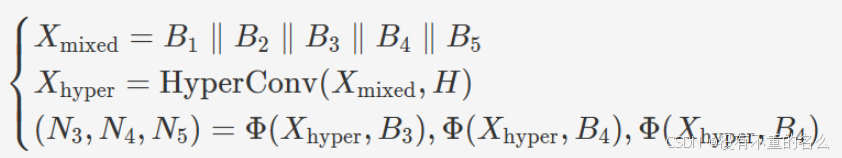

def forward(self, x):

y = list(self.cv1(x).chunk(2, 1))

y.extend((m(y[-1])) for m in [self.cv2, self.cv3])

return self.cv4(torch.cat(y, 1))

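① Apply one convolution (cv1) and split the result into two halves along the channel dimension

② The second half then passes through cv2 and cv3 in sequence

③ Concatenate all of these features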

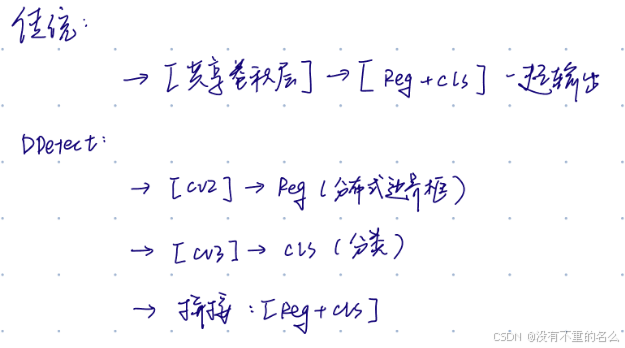
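The channel bookkeeping of these three steps can be traced with a small shapes-only sketch. It is an illustration under assumptions, not the module itself: `elan_channels` is a hypothetical helper, the stand-ins for `cv2` and `cv3` only return channel counts, and the second call uses made-up widths (c3=128, c4=96) so that the two branch inputs differ.

```python
def elan_channels(c3, c4):
    """Track channel counts through RepNCSPELAN4.forward (shapes only)."""
    # cv1 outputs c3 channels; chunk(2, 1) splits them into two halves.
    y = [c3 // 2, c3 // 2]

    # Stand-ins for cv2 and cv3: each maps its input to c4 channels.
    def cv2(c_in):
        return c4

    def cv3(c_in):
        return c4

    seen_inputs = []

    def apply(m):
        seen_inputs.append(y[-1])  # record what each branch actually receives
        return m(y[-1])

    # extend() consumes the generator lazily, so y[-1] is re-read after each
    # append: cv2 receives the second half, cv3 receives cv2's output.
    y.extend(apply(m) for m in [cv2, cv3])
    return y, seen_inputs, sum(y)


# Values matching the printout of layer (34): cv1 -> 512, cv4 input 1024.
print(elan_channels(512, 256))
# Hypothetical widths that make the chaining visible.
print(elan_channels(128, 96))
```

The concatenated width is always c3 + 2 * c4, which is the input size given to `cv4` in the constructor; for c3=512 and c4=256 this gives the 1024 input channels seen in the `(cv4): Conv2d(1024, 512, ...)` lines of the printout.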

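DDetect detection head:

DDetect is the most basic decoupled detection head in Hyper-YOLO. It decouples the classification and regression branches and adds grouped convolutions and lightweight channel adjustment, improving accuracy and inference efficiency; it is the basis of the later DualDetect / DualDDetect / TripleDetect heads.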
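As a rough check of the channel adjustment and of what the grouped convolutions save, the branch widths can be recomputed by hand from the formula used in `DDetect.__init__`. This is a sketch under assumptions: `make_divisible` is assumed to round up to the nearest multiple of the divisor, and `head_widths` and `conv_weights` are hypothetical helpers written for this example.

```python
import math


def make_divisible(x, divisor):
    """Round x up to the nearest multiple of divisor (assumed helper behaviour)."""
    return math.ceil(x / divisor) * divisor


def head_widths(ch0, nc=80, reg_max=16):
    """Hidden widths of the regression (c2) and classification (c3) branches."""
    c2 = make_divisible(max(ch0 // 4, reg_max * 4, 16), 4)
    c3 = max(ch0, min(nc * 2, 128))
    return c2, c3


def conv_weights(c_in, c_out, k, groups=1):
    """Number of weights in a k x k convolution without bias."""
    return c_out * (c_in // groups) * k * k


print(head_widths(128))  # (64, 128): first level of the DDetect printout
print(head_widths(512))  # (128, 512): first level of the DualDDetect printout
# Dense vs. groups=4 for the 64 -> 64, 3x3 convolution of the regression branch.
print(conv_weights(64, 64, 3), conv_weights(64, 64, 3, groups=4))  # 36864 9216
```

With `groups=4` each output channel only sees a quarter of the input channels, so the 3x3 convolution in the regression branch needs a quarter of the weights of its dense counterpart.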

DDetect(

(cv2): ModuleList(

(0): Sequential(

(0): Conv(

(conv): Conv2d(128, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(act): SiLU(inplace=True)

)

(1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=4)

(act): SiLU(inplace=True)

)

(2): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), groups=4)

)

(1): Sequential(

(0): Conv(

(conv): Conv2d(256, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(act): SiLU(inplace=True)

)

(1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=4)

(act): SiLU(inplace=True)

)

(2): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), groups=4)

)

(2): Sequential(

(0): Conv(

(conv): Conv2d(256, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(act): SiLU(inplace=True)

)

(1): Conv(

(conv): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=4)

(act): SiLU(inplace=True)

)

(2): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), groups=4)

)

)

(cv3): ModuleList(

(0): Sequential(

(0): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(act): SiLU(inplace=True)

)

(1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(act): SiLU(inplace=True)

)

(2): Conv2d(128, 80, kernel_size=(1, 1), stride=(1, 1))

)

(1): Sequential(

(0): Conv(

(conv): Conv2d(256, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(act): SiLU(inplace=True)

)

(1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(act): SiLU(inplace=True)

)

(2): Conv2d(128, 80, kernel_size=(1, 1), stride=(1, 1))

)

(2): Sequential(

(0): Conv(

(conv): Conv2d(256, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(act): SiLU(inplace=True)

)

(1): Conv(

(conv): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(act): SiLU(inplace=True)

)

(2): Conv2d(128, 80, kernel_size=(1, 1), stride=(1, 1))

)

)

(dfl): DFL(

(conv): Conv2d(16, 1, kernel_size=(1, 1), stride=(1, 1), bias=False)

)

)

# ***Basic two-branch design***

class DDetect(nn.Module):

# YOLO Detect head for detection models

dynamic = False # force grid reconstruction

export = False # export mode

shape = None

anchors = torch.empty(0) # init

strides = torch.empty(0) # init

def __init__(self, nc=80, ch=(), inplace=True): # detection layer

super().__init__()

self.nc = nc # number of classes

self.nl = len(ch) # number of detection layers

self.reg_max = 16

self.no = nc + self.reg_max * 4 # number of outputs per anchor

self.inplace = inplace # use inplace ops (e.g. slice assignment)

self.stride = torch.zeros(self.nl) # strides computed during build

# the channel width is automatically adjusted to a multiple of 4 (e.g. 64, 128), which helps quantized deployment

c2, c3 = make_divisible(max((ch[0] // 4, self.reg_max * 4, 16)), 4), max(

(ch[0], min((self.nc * 2, 128)))) # channels

self.cv2 = nn.ModuleList( # regression branch

nn.Sequential(Conv(x, c2, 3), Conv(c2, c2, 3, g=4), nn.Conv2d(c2, 4 * self.reg_max, 1, groups=4)) for x in

ch)

self.cv3 = nn.ModuleList( # classification branch

nn.Sequential(Conv(x, c3, 3), Conv(c3, c3, 3), nn.Conv2d(c3, self.nc, 1)) for x in ch)

self.dfl = DFL(self.reg_max) if self.reg_max > 1 else nn.Identity()

def forward(self, x):

shape = x[0].shape # BCHW

for i in range(self.nl):

# concatenate the regression (cv2) and classification (cv3) outputs of each scale along the channel dimension

x[i] = torch.cat((self.cv2[i](x[i]), self.cv3[i](x[i])), 1)

if self.training:

return x

elif self.dynamic or self.shape != shape:

# dynamically regenerate the multi-scale anchors

self.anchors, self.strides = (x.transpose(0, 1) for x in make_anchors(x, self.stride, 0.5))

self.shape = shape

box, cls = torch.cat([xi.view(shape[0], self.no, -1) for xi in x], 2).split((self.reg_max * 4, self.nc), 1)

# decode the target boxes from the distribution-based box predictions

dbox = dist2bbox(self.dfl(box), self.anchors.unsqueeze(0), xywh=True, dim=1) * self.strides

y = torch.cat((dbox, cls.sigmoid()), 1)

return y if self.export else (y, x)

def bias_init(self):

# Initialize Detect() biases, WARNING: requires stride availability

m = self # self.model[-1] # Detect() module

# cf = torch.bincount(torch.tensor(np.concatenate(dataset.labels, 0)[:, 0]).long(), minlength=nc) + 1

# ncf = math.log(0.6 / (m.nc - 0.999999)) if cf is None else torch.log(cf / cf.sum()) # nominal class frequency

for a, b, s in zip(m.cv2, m.cv3, m.stride): # from

a[-1].bias.data[:] = 1.0 # box

b[-1].bias.data[:m.nc] = math.log(5 / m.nc / (640 / s) ** 2) # cls (5 objects and 80 classes per 640 image)①提取输入特征图尺寸

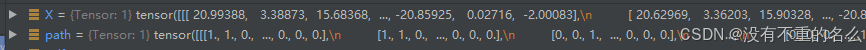

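② Concatenate the regression (box) and classification features of each scale

③ Update the anchors (optional)

If dynamic mode is enabled, or the feature map shape has changed, the anchors have to be regenerated.

Call make_anchors() to build, from the shapes of the output feature maps, the anchor center point and stride of every grid cell.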

def make_anchors(feats, strides, grid_cell_offset=0.5):
    """Generate anchors from features."""
    anchor_points, stride_tensor = [], []
    assert feats is not None
    dtype, device = feats[0].dtype, feats[0].device
    for i, stride in enumerate(strides):
        _, _, h, w = feats[i].shape
        sx = torch.arange(end=w, device=device, dtype=dtype) + grid_cell_offset  # shift x
        sy = torch.arange(end=h, device=device, dtype=dtype) + grid_cell_offset  # shift y
        sy, sx = torch.meshgrid(sy, sx, indexing='ij') if TORCH_1_10 else torch.meshgrid(sy, sx)
        anchor_points.append(torch.stack((sx, sy), -1).view(-1, 2))
        stride_tensor.append(torch.full((h * w, 1), stride, dtype=dtype, device=device))
    return torch.cat(anchor_points), torch.cat(stride_tensor)
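To make the anchor layout concrete, here is a minimal dependency-free sketch of what make_anchors() produces for a single feature map. The helper name `anchor_points_for` and the 2×3 map with stride 8 are hypothetical choices for illustration; the real function does the same thing with tensors and concatenates all detection levels.

```python
def anchor_points_for(h, w, stride, offset=0.5):
    """Return (points, strides) for one h x w feature map.

    Points are (x, y) cell centres in grid units, in row-major order,
    matching torch.stack((sx, sy), -1).view(-1, 2) in the tensor version.
    """
    points = [(x + offset, y + offset) for y in range(h) for x in range(w)]
    strides = [stride] * (h * w)
    return points, strides


points, strides = anchor_points_for(h=2, w=3, stride=8)
print(points)
# [(0.5, 0.5), (1.5, 0.5), (2.5, 0.5), (0.5, 1.5), (1.5, 1.5), (2.5, 1.5)]

# Multiplying by the stride gives the cell centres in input-image pixels.
pixel_centres = [(px * s, py * s) for (px, py), s in zip(points, strides)]
print(pixel_centres)
# [(4.0, 4.0), (12.0, 4.0), (20.0, 4.0), (4.0, 12.0), (12.0, 12.0), (20.0, 12.0)]
```

The 0.5 offset places each anchor at the centre of its grid cell rather than at its top-left corner.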

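④ Decode the predictions

Each [B, C, H, W] prediction map is flattened to [B, C, H*W] and all scales are concatenated along the last dimension, giving [B, C, N] (N is the total number of anchor points). The channel dimension is then split into a regression part (4 × reg_max channels) and a classification part (nc channels).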
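As a quick check of the sizes involved, the arithmetic below uses nc = 80 and reg_max = 16 from the class above. The 640×640 input and the strides 8, 16 and 32 are assumptions (typical YOLO values that this post does not state explicitly).

```python
nc, reg_max = 80, 16
no = nc + 4 * reg_max          # output channels per anchor point

img_size = 640                 # assumed input resolution
strides = (8, 16, 32)          # assumed strides of the three detection layers

cells_per_level = [(img_size // s) ** 2 for s in strides]
num_anchors = sum(cells_per_level)

print(no)                  # 144
print(cells_per_level)     # [6400, 1600, 400]
print(num_anchors)         # 8400
print((4 * reg_max, nc))   # (64, 80): sizes passed to split() for box and cls
```

Under these assumptions the concatenated tensor has shape [B, 144, 8400] before the split.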

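⑤ Turn the regressed distributions into coordinates

DFL (Distribution Focal Loss) converts the predicted distribution for each of the four sides of a box (left, top, right, bottom) into a distance from the anchor point. dist2bbox then turns these distances into box coordinates, which are multiplied by the stride to return to input-image scale.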
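The decoding step can be sketched in plain Python for a single anchor point. This is an illustration under assumptions, not the repository code: it assumes DFL is a softmax over the 16 bins followed by the expected bin index, and that dist2bbox with xywh=True follows the usual YOLOv8/YOLOv9 definition. The function names `dfl_expectation` and `dist2bbox_xywh` are hypothetical.

```python
import math


def dfl_expectation(logits):
    """Softmax over the bins, then the expected bin index."""
    m = max(logits)                              # subtract the max for stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return sum(i * e / total for i, e in enumerate(exps))


def dist2bbox_xywh(ltrb, anchor, stride):
    """Convert (left, top, right, bottom) distances to (cx, cy, w, h) in pixels."""
    left, top, right, bottom = ltrb
    ax, ay = anchor
    x1, y1 = ax - left, ay - top
    x2, y2 = ax + right, ay + bottom
    return ((x1 + x2) / 2 * stride, (y1 + y2) / 2 * stride,
            (x2 - x1) * stride, (y2 - y1) * stride)


# A flat distribution over 16 bins has its mean in the middle of the range.
print(dfl_expectation([0.0] * 16))   # 7.5

# A sharply peaked distribution decodes to (almost exactly) the peak index.
peaked = [0.0] * 16
peaked[3] = 50.0
print(round(dfl_expectation(peaked), 6))   # 3.0

# Distances of 1, 2, 3, 4 cells around the anchor at (10.5, 10.5), stride 8.
print(dist2bbox_xywh((1.0, 2.0, 3.0, 4.0), (10.5, 10.5), 8))
# (92.0, 92.0, 32.0, 48.0)
```

Predicting a distribution instead of a single number lets the head express uncertainty about each side; the expectation collapses it back to one distance at inference time.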

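⑥ Concatenate the boxes and the class scores (after a sigmoid)

⑦ Return the final prediction: y alone in export mode, otherwise (y, x)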

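HyperGraph module:

This part performs high-order feature modeling and message propagation over a hypergraph. It consists of three modules.

High-order feature modeling module: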

HyperComputeModule(

(hgconv): HyPConv(

(fc): Linear(in_features=256, out_features=256, bias=True)

(v2e): MessageAgg()

(e2v): MessageAgg()

)

(bn): BatchNorm2d(256, eps=0.001, momentum=0.03, affine=True, track_running_stats=True)

(act): SiLU(inplace=True)

)# ***高阶特征建模模块***

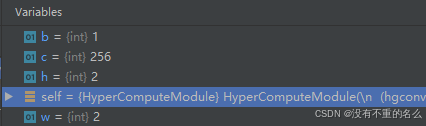

class HyperComputeModule(nn.Module):

def __init__(self, c1, c2):

super().__init__()

self.threshold = 11

# 使用高阶图卷积(HyperGraph Conv)

self.hgconv = HyPConv(c1, c2) # c1=c2

self.bn = nn.BatchNorm2d(c2)

self.act = nn.SiLU()

def forward(self, x):

b, c, h, w = x.shape[0], x.shape[1], x.shape[2], x.shape[3]

# 特征展平为序列结构

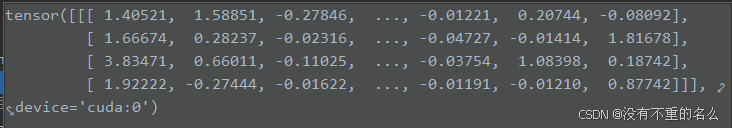

x = x.view(b, c, -1).transpose(1, 2).contiguous() # [B, N, C]

feature = x.clone()

# 计算token间欧氏距离,构建每个token与其他token的相似度

distance = torch.cdist(feature, feature)

# 构建超图邻接矩阵

hg = distance < self.threshold # 通过设定阈值,保留“邻近点对”,超图邻接关系矩阵

hg = hg.float().to(x.device).to(x.dtype)

# 高阶消息传递(图卷积)

x = self.hgconv(x, hg).to(x.device).to(x.dtype) + x # 高阶建模+残差连接

# 恢复形状+归一化+激活

x = x.transpose(1, 2).contiguous().view(b, c, h, w)

x = self.act(self.bn(x))

return x

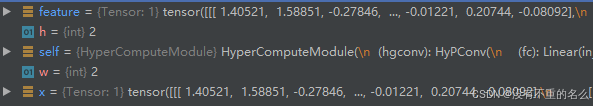

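① Read the dimensions of the input feature map

② Flatten it into a [B, N, C] sequence, where N = H*W

③ Clone the current features; the copy is used to measure the similarity between features

④ Compute the Euclidean distance between every pair of tokens

⑤ Apply a threshold to build the adjacency matrix

⑥ Convert the Boolean matrix to a floating-point matrix

⑦ High-order modeling plus residual connection

⑧ Restore the flattened features to their spatial layout

⑨ Normalization and activation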

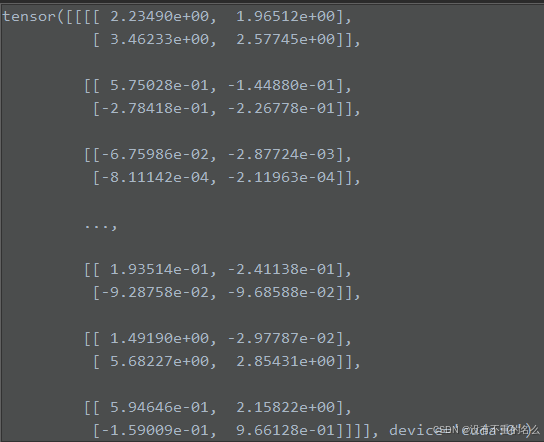
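The distance-threshold construction in steps ④ to ⑥ can be illustrated on a handful of points. The sketch below is plain Python rather than the batched torch.cdist call, and the four 2-D points and the threshold of 2.0 are made-up values chosen so the result is easy to read; the module itself uses a threshold of 11 on C-dimensional tokens.

```python
import math


def epsilon_ball_incidence(points, eps):
    """Return H where H[v][e] = 1.0 if point v lies within eps of point e.

    Point e acts as the centre of hyperedge e, so there is one hyperedge per point.
    """
    n = len(points)
    return [
        [1.0 if math.dist(points[v], points[e]) < eps else 0.0 for e in range(n)]
        for v in range(n)
    ]


# Four hypothetical feature points forming two well-separated groups.
points = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0), (5.5, 0.0)]
H = epsilon_ball_incidence(points, eps=2.0)
for row in H:
    print(row)
# [1.0, 1.0, 0.0, 0.0]
# [1.0, 1.0, 0.0, 0.0]
# [0.0, 0.0, 1.0, 1.0]
# [0.0, 0.0, 1.0, 1.0]
```

Every point is at distance 0 from itself, so each hyperedge contains at least its own centre and no row or column of H is empty. Because the test is symmetric, H equals its transpose here.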

Message-passing aggregation:

# ***Message-passing aggregation***

class MessageAgg(nn.Module):
    def __init__(self, agg_method="mean"):
        super().__init__()
        self.agg_method = agg_method

    def forward(self, X, path):
        """
        X: [B, n_node, dim]  # input node features (one row per token)
        path: col(source) -> row(target)  # matrix defining which nodes pass messages to which
        """
        # Core aggregation step
        X = torch.matmul(path, X)  # path x node features: gathers source-node features at each target node
        # Normalization (mean aggregation)
        if self.agg_method == "mean":
            norm_out = 1 / torch.sum(path, dim=2, keepdim=True)
            norm_out[torch.isinf(norm_out)] = 0  # targets with no sources get zeros
            X = norm_out * X
            return X
        elif self.agg_method == "sum":
            pass
        return X

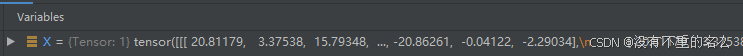

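① Core aggregation:

X: [N, d] (per batch element)

path: [N, N] (per batch element)

Each source node's features are weighted by the corresponding entry of path and summed into the target node

② Check the aggregation method; for mean aggregation, compute the normalization coefficients (the alternative branch is sum)

③ Apply the normalization: multiply each row of the aggregated X by its coefficient to obtain the mean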
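The three steps above amount to a row-normalized matrix product. A minimal plain-Python sketch for one batch element follows; the function name `mean_aggregate` and the small matrices are hypothetical, chosen so the means can be checked by hand.

```python
def mean_aggregate(path, X):
    """Row i of the result is the mean of the rows of X selected by path[i]."""
    dim = len(X[0])
    out = []
    for weights in path:
        total = [sum(w * x[d] for w, x in zip(weights, X)) for d in range(dim)]
        degree = sum(weights)
        # Mirrors the inf -> 0 guard: a target with no sources gets zeros.
        out.append([t / degree if degree else 0.0 for t in total])
    return out


X = [[2.0, 0.0], [4.0, 2.0], [10.0, 10.0]]
path = [
    [1.0, 1.0, 0.0],  # target 0 receives from sources 0 and 1
    [0.0, 0.0, 1.0],  # target 1 receives from source 2
    [0.0, 0.0, 0.0],  # target 2 receives nothing
]
print(mean_aggregate(path, X))
# [[3.0, 1.0], [10.0, 10.0], [0.0, 0.0]]
```

With the "sum" method the division is simply skipped, so targets with many sources would receive proportionally larger features.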

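Lightweight hypergraph convolution module:

# ***Lightweight hypergraph convolution module***

class HyPConv(nn.Module):
    def __init__(self, c1, c2):
        super().__init__()
        self.fc = nn.Linear(c1, c2)
        self.v2e = MessageAgg(agg_method="mean")  # vertex-to-hyperedge (v -> e) aggregation
        self.e2v = MessageAgg(agg_method="mean")  # hyperedge-to-vertex (e -> v) aggregation

    def forward(self, x, H):
        x = self.fc(x)
        # v -> e
        E = self.v2e(x, H.transpose(1, 2).contiguous())
        # e -> v
        x = self.e2v(E, H)
        return x

Inputs:

x: shape [B, N, C]; each image in the batch has N tokens, and each token is a C-dimensional feature

H: shape [B, N, N]; the dense matrix that defines the relations between tokens. In this code it is the binary matrix produced by the distance threshold, so each entry states whether two tokens are related (1) or not (0)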

① Apply one linear projection to the input features to adjust the dimension

② Vertices to hyperedges

③ Hyperedges to vertices
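Reading the forward pass as matrices, the two mean aggregations compute D_v^{-1} H D_e^{-1} H^T (XΘ), where D_e and D_v hold the hyperedge and vertex degrees and Θ is the linear layer. The sketch below walks through both stages in plain Python. It is an illustration under assumptions: the linear layer is omitted (treated as the identity), and the 3×2 incidence matrix is a hypothetical non-square example chosen to show why the transpose is needed, whereas H is square in the module.

```python
def mean_aggregate(path, X):
    """Row i of the result is the mean of the rows of X selected by path[i]."""
    dim = len(X[0])
    out = []
    for weights in path:
        total = [sum(w * x[d] for w, x in zip(weights, X)) for d in range(dim)]
        degree = sum(weights)
        out.append([t / degree if degree else 0.0 for t in total])
    return out


def transpose(M):
    return [list(col) for col in zip(*M)]


# H[v][e] = 1.0 if vertex v belongs to hyperedge e (3 vertices, 2 hyperedges).
H = [
    [1.0, 0.0],
    [1.0, 1.0],
    [0.0, 1.0],
]
X = [[0.0], [6.0], [12.0]]  # one scalar feature per vertex

E = mean_aggregate(transpose(H), X)   # stage 1: vertices -> hyperedges
X_new = mean_aggregate(H, E)          # stage 2: hyperedges -> vertices

print(E)      # [[3.0], [9.0]]
print(X_new)  # [[3.0], [6.0], [9.0]]
```

Vertex 1 sits in both hyperedges, so it receives the average of the two hyperedge features, while vertices 0 and 2 are pulled toward the mean of the single hyperedge they belong to.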

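Conclusion:

By introducing hypergraph computation and high-order feature modeling, Hyper-YOLO strengthens the detection ability of YOLO models: it removes the restriction of traditional YOLO to fusing features from adjacent layers only, improves cross-level and cross-position information propagation, and reaches state-of-the-art-level performance on the COCO dataset.

In the Hyper-YOLO backbone, the C2f module is replaced by the MANet module, which uses depthwise separable convolutions and increases the number of channels.

HyperC2Net uses a different distance threshold for each model scale, adjusting the threshold to the model size and the distribution of the feature points.

Results: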
