PyTorch Study Notes 2 (Convolution, Linear Layers, Loss)

Go! Time to turn the tide!


1. Using the linear layer

import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Compose expects an ordered list of transforms, not a set.
dataset_transform = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor()
])

# train=False loads the 10,000-image CIFAR10 test split; set download=True if ./dataset is empty.
train_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=dataset_transform, download=False)

# drop_last=True discards the final partial batch (16 images), which would not flatten to 196608 values.
test_load = DataLoader(dataset=train_set, batch_size=64, shuffle=True, num_workers=0, drop_last=True)

class yu(nn.Module):
    def __init__(self, *args, **kwargs) -> None:
        super(yu, self).__init__(*args, **kwargs)
        # The input is a whole batch of images flattened into one long vector.
        # The linear layer maps its 196608 input features to 10 output features.
        self.linear = nn.Linear(196608, 10)

    def forward(self, x):
        x = self.linear(x)
        return x

hh = yu()
# print(hh)

# Raw string so the backslashes in the Windows path are not read as escape sequences.
writer = SummaryWriter(r"C:\yolo\yolov5-5.0\logs")
step = 0

for data in test_load:
    imgs, targets = data
    print(imgs.shape)  # torch.Size([64, 3, 32, 32])
    output = torch.reshape(imgs, (1, 1, 1, -1))
    # This flattens the batch; output = torch.flatten(imgs) also works.
    print(output.shape)  # this is where 196608 comes from: 64 * 3 * 32 * 32
    output = hh(output)
    print(output.shape)

2. Revisiting the convolution layer

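self.conv1 = Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2)

# in_channels is the number of channels in the input image (assumed to be 3 here).

# out_channels is the number of channels in the output, which is also the number of convolution kernels.

# Each kernel convolves the 3-channel input and produces one single-channel feature map. That feature map is the result of sliding the kernel over all input channels and taking a weighted sum of each local region. Because the kernel operates on every channel at once, it can capture cross-channel features.

With 32 such kernels, each one produces a single-channel feature map, and these maps are stacked into a 32-channel output. Each of the 32 channels represents a different feature extracted from the input image by a different kernel.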
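To make the kernel picture concrete, here is a minimal sketch that builds the same layer and inspects its weight tensor. The random input `x` is an assumption standing in for one CIFAR10-sized image; it does not appear in the original code.

```python
import torch
from torch.nn import Conv2d

conv1 = Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2)

# One weight slab per kernel: 32 kernels, each covering 3 input channels and a 5x5 window.
weight_shape = tuple(conv1.weight.shape)
print(weight_shape)

# Hypothetical stand-in for a single 3-channel 32x32 image.
x = torch.randn(1, 3, 32, 32)
y = conv1(x)

# 32 output channels, one per kernel; padding=2 keeps the 32x32 spatial size.
output_shape = tuple(y.shape)
print(output_shape)
```

The first dimension of the weight is the kernel count (`out_channels`), and the second is the number of input channels each kernel spans.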

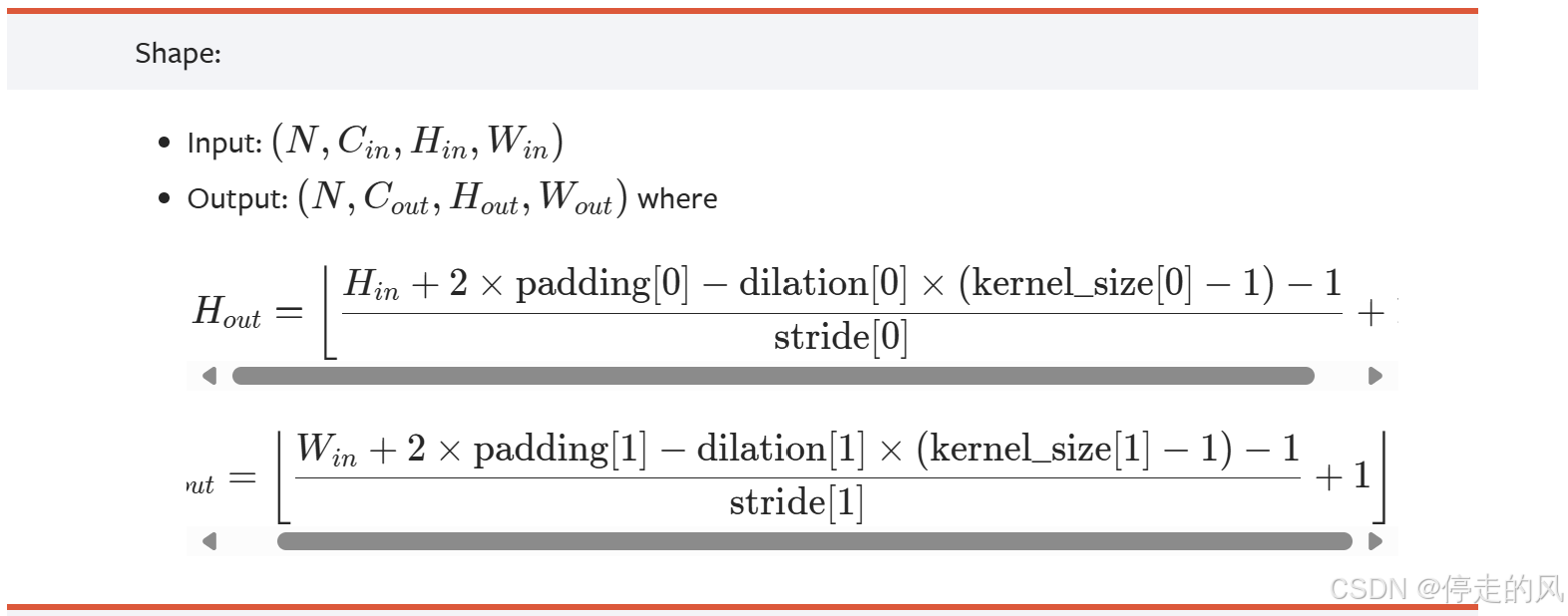

# padding is worked out from the formula below.

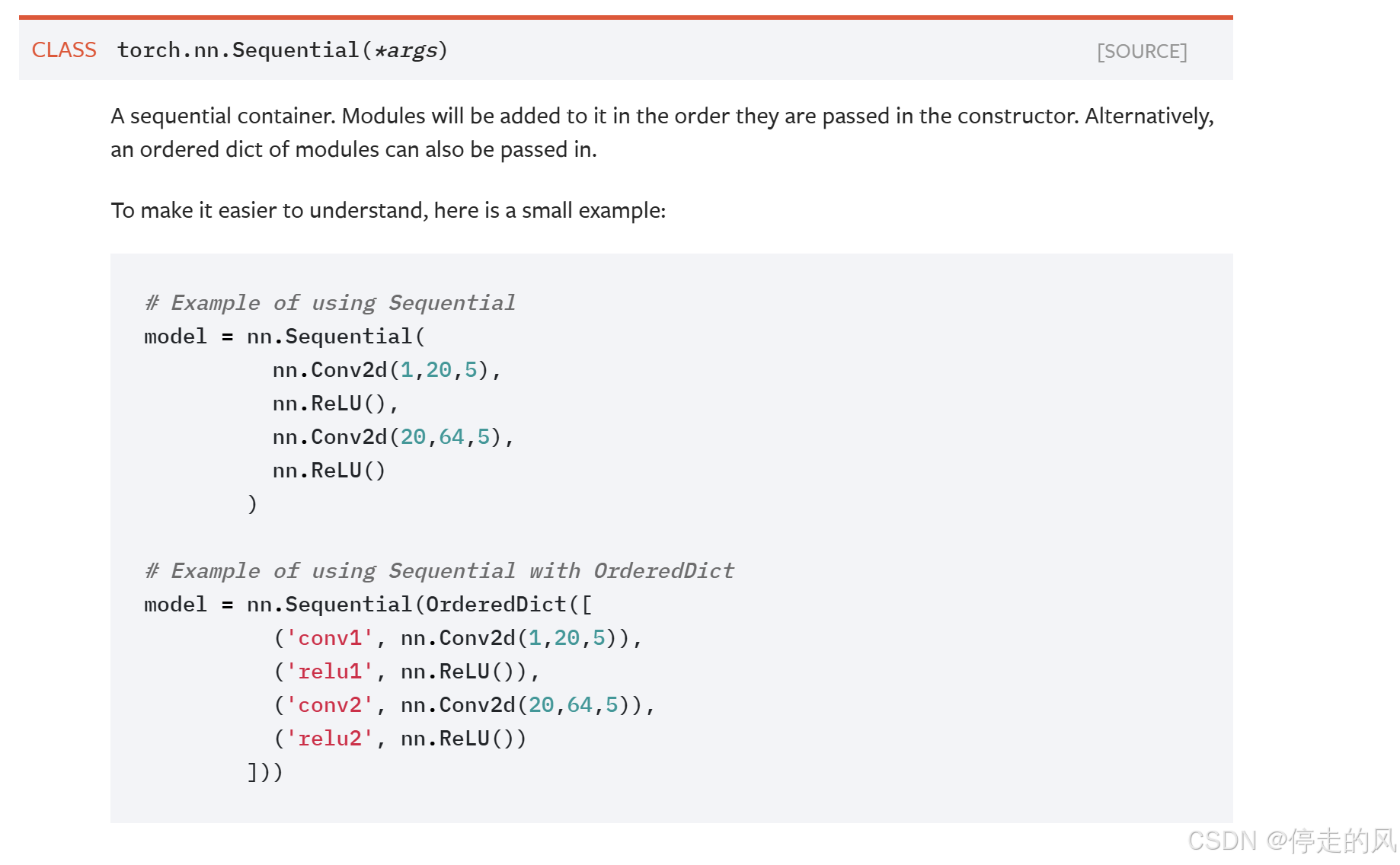
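As a worked check of the padding choice, the sketch below uses the output-size formula given in the torch.nn.Conv2d documentation. The helper names `conv_output_size` and `same_padding` are made up for this illustration and are not part of PyTorch.

```python
import math

def conv_output_size(size_in, kernel_size, stride=1, padding=0, dilation=1):
    """Output height or width of a 2D convolution (shape formula from the Conv2d docs)."""
    return math.floor(
        (size_in + 2 * padding - dilation * (kernel_size - 1) - 1) / stride + 1
    )

def same_padding(kernel_size, dilation=1):
    """Padding that preserves the size when stride is 1 and the kernel size is odd."""
    return dilation * (kernel_size - 1) // 2

# A 32x32 input with a 5x5 kernel, stride 1, padding 2 stays 32x32.
kept = conv_output_size(32, kernel_size=5, stride=1, padding=2)
# Without padding the same kernel shrinks the map to 28x28.
shrunk = conv_output_size(32, kernel_size=5, stride=1, padding=0)
print(kept, shrunk, same_padding(5))
```

Solving the formula for a 32 to 32 mapping with kernel size 5 and stride 1 gives 2 * padding = 4, which is why padding=2 is used.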

3. Using Sequential (a modular container)

Location: listed under Containers in torch.nn

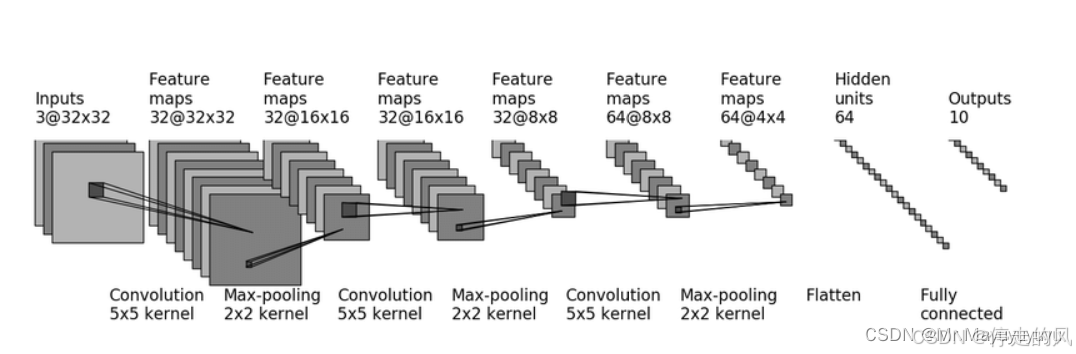
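A minimal sketch of what the container does, assuming a tiny made-up stack (Flatten, Linear, ReLU) and a random input chosen only for illustration: calling a Sequential runs its layers in the order they were listed.

```python
import torch
from torch import nn

model = nn.Sequential(
    nn.Flatten(),
    nn.Linear(12, 4),
    nn.ReLU(),
)

# Hypothetical batch: 2 samples, each with 3 * 2 * 2 = 12 values.
x = torch.randn(2, 3, 2, 2)

# Calling the container applies every layer in order.
out_container = model(x)

# The same computation written out by hand, one layer at a time.
out_manual = x
for layer in model:
    out_manual = layer(out_manual)

same_result = torch.equal(out_container, out_manual)
print(out_container.shape, same_result)

# Layers can also be accessed by index.
print(model[1])
```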

4. Building a neural network for the CIFAR10 dataset

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Compose expects an ordered list of transforms, not a set.
dataset_transform = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor()
])

# train=False loads the CIFAR10 test split; set download=True if ./dataset is empty.
train_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=dataset_transform, download=False)
test_load = DataLoader(dataset=train_set, batch_size=64, shuffle=True, num_workers=0, drop_last=False)

class yu(nn.Module):
    def __init__(self, *args, **kwargs) -> None:
        super(yu, self).__init__(*args, **kwargs)
        # Layers declared one by one (only used by the commented-out steps in forward).
        self.conv1 = Conv2d(3, 32, 5, padding=2)
        self.pool1 = MaxPool2d(2)
        self.conv2 = Conv2d(32, 32, 5, padding=2)
        self.pool2 = MaxPool2d(2)
        self.conv3 = Conv2d(32, 64, 5, padding=2)
        self.pool3 = MaxPool2d(2)
        self.flatten = Flatten()
        self.linear1 = Linear(1024, 64)
        self.linear2 = Linear(64, 10)
        # The same stack written once with Sequential.
        self.model = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10),
        )

    def forward(self, x):
        # x = self.conv1(x)
        # x = self.pool1(x)
        # x = self.conv2(x)
        # x = self.pool2(x)
        # x = self.conv3(x)
        # x = self.pool3(x)
        # x = self.flatten(x)
        # x = self.linear1(x)
        # x = self.linear2(x)
        x = self.model(x)
        return x

hh = yu()
input = torch.ones((64, 3, 32, 32))
output = hh(input)
print(output.shape)  # torch.Size([64, 10])

# Raw string so the backslashes in the Windows path are not read as escape sequences.
writer = SummaryWriter(r"C:\yolo\yolov5-5.0\logs")
writer.add_graph(hh, input)
writer.close()
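To see where the 1024 in Linear(1024, 64) comes from, the sketch below pushes a dummy batch through the same Sequential stack one layer at a time and records each output shape. The `shapes` list and the loop are additions for illustration only.

```python
import torch
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

model = Sequential(
    Conv2d(3, 32, 5, padding=2),
    MaxPool2d(2),
    Conv2d(32, 32, 5, padding=2),
    MaxPool2d(2),
    Conv2d(32, 64, 5, padding=2),
    MaxPool2d(2),
    Flatten(),
    Linear(1024, 64),
    Linear(64, 10),
)

x = torch.ones((64, 3, 32, 32))
shapes = []
for layer in model:
    x = layer(x)
    # Record the layer type together with the shape it produced.
    shapes.append((type(layer).__name__, tuple(x.shape)))

for name, shape in shapes:
    print(f"{name:10s} {shape}")
```

Each pooling step halves the spatial size (32, 16, 8, 4), so Flatten sees 64 channels of 4x4 maps, which is 64 * 4 * 4 = 1024 features per image.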

5. Loss functions and backpropagation

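import torch
from torch import nn
from torch.nn import L1Loss   # mean of the absolute differences
from torch.nn import MSELoss  # mean of the squared differences

# The prediction and the target it is compared against.
input = torch.tensor([1, 2, 3], dtype=torch.float32)
output = torch.tensor([1, 2, 5], dtype=torch.float32)

# Using L1Loss
loss = L1Loss()  # pass reduction="sum" to sum the differences instead of averaging them
result = loss(input, output)
print(result)  # tensor(0.6667)

# Using MSELoss
mse = MSELoss()
result2 = mse(input, output)
print(result2)  # tensor(1.3333)

# Using CrossEntropyLoss
x = torch.tensor([0.1, 0.2, 0.3])
print(x)
y = torch.tensor([1])
# Reshape to (batch_size, num_classes) = (1, 3) so the scores pair up with the batch of one target in y.
x = torch.reshape(x, (1, 3))
loss_cross = nn.CrossEntropyLoss()
hh = loss_cross(x, y)
print(hh)  # tensor(1.1019)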
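The L1 and MSE values can be checked by hand. The sketch below redoes the arithmetic in plain Python for the same two vectors; the variable names are chosen for this illustration.

```python
inputs = [1.0, 2.0, 3.0]
targets = [1.0, 2.0, 5.0]

# Element-wise absolute differences: [0.0, 0.0, 2.0]
abs_diffs = [abs(a - b) for a, b in zip(inputs, targets)]

# L1Loss with the default reduction averages the absolute differences.
l1_mean = sum(abs_diffs) / len(abs_diffs)

# L1Loss(reduction="sum") adds them up instead.
l1_sum = sum(abs_diffs)

# MSELoss averages the squared differences: (0 + 0 + 4) / 3.
mse_mean = sum(d ** 2 for d in abs_diffs) / len(abs_diffs)

print(l1_mean, l1_sum, mse_mean)
```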

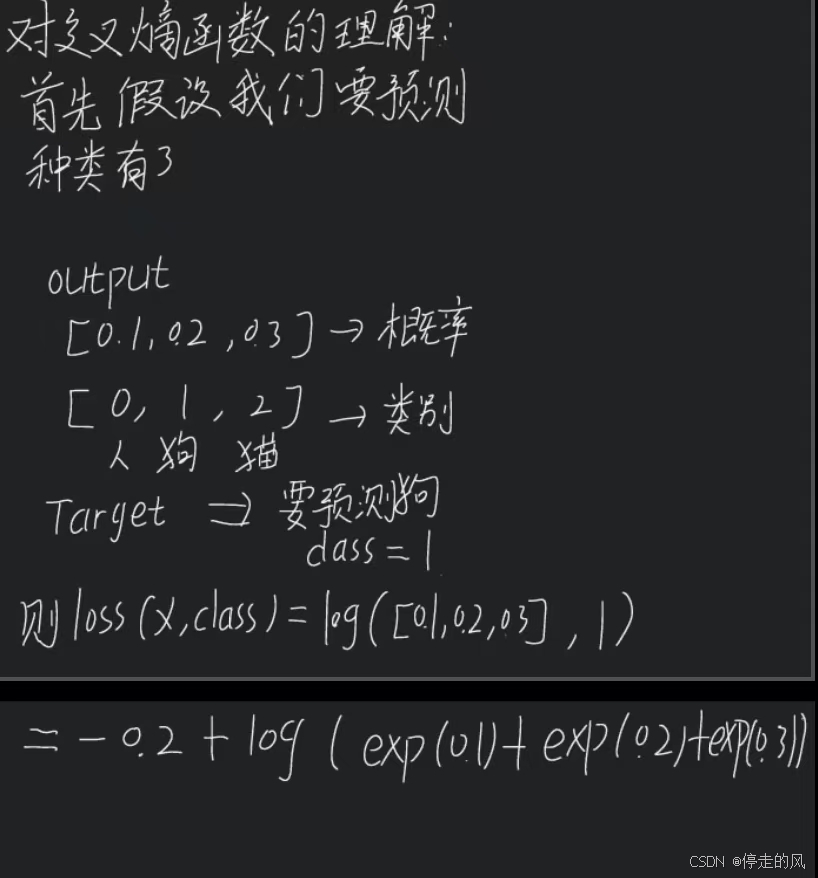

5.1 Understanding the CrossEntropyLoss function
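One way to read the loss for a single sample is loss(x, class) = -x[class] + log(sum_j exp(x[j])), which is the same as taking the negative log of the softmax probability of the target class. The sketch below, written in plain Python for illustration, reproduces the value for x = [0.1, 0.2, 0.3] and target class 1.

```python
import math

logits = [0.1, 0.2, 0.3]
target_class = 1

# Direct form: minus the target score plus the log of the summed exponentials.
log_sum_exp = math.log(sum(math.exp(v) for v in logits))
loss_direct = -logits[target_class] + log_sum_exp

# Equivalent form: negative log of the softmax probability of the target class.
softmax = [math.exp(v) / sum(math.exp(u) for u in logits) for v in logits]
loss_softmax = -math.log(softmax[target_class])

print(round(loss_direct, 4), round(loss_softmax, 4))
```

The loss shrinks as the score of the target class rises relative to the other scores, which is the behaviour wanted for classification.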

5.2 A neural network with a loss function added

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Compose expects an ordered list of transforms, not a set.
dataset_transform = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor()
])

# train=False loads the CIFAR10 test split; set download=True if ./dataset is empty.
train_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=dataset_transform, download=False)
test_load = DataLoader(dataset=train_set, batch_size=1, shuffle=True, num_workers=0, drop_last=False)

class yu(nn.Module):
    def __init__(self, *args, **kwargs) -> None:
        super(yu, self).__init__(*args, **kwargs)
        # Layers declared one by one (only used by the commented-out steps in forward).
        self.conv1 = Conv2d(3, 32, 5, padding=2)
        self.pool1 = MaxPool2d(2)
        self.conv2 = Conv2d(32, 32, 5, padding=2)
        self.pool2 = MaxPool2d(2)
        self.conv3 = Conv2d(32, 64, 5, padding=2)
        self.pool3 = MaxPool2d(2)
        self.flatten = Flatten()
        self.linear1 = Linear(1024, 64)
        self.linear2 = Linear(64, 10)
        # The same stack written once with Sequential.
        self.model = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10),
        )

    def forward(self, x):
        # x = self.conv1(x)
        # x = self.pool1(x)
        # x = self.conv2(x)
        # x = self.pool2(x)
        # x = self.conv3(x)
        # x = self.pool3(x)
        # x = self.flatten(x)
        # x = self.linear1(x)
        # x = self.linear2(x)
        x = self.model(x)
        return x

hh = yu()
loss = nn.CrossEntropyLoss()

for data in test_load:
    imgs, targets = data
    outputs = hh(imgs)  # shape (1, 10): one score per class
    result = loss(outputs, targets)  # targets has shape (1,): the class index
    print(result)

# writer = SummaryWriter(r"C:\yolo\yolov5-5.0\logs")
# writer.close()
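The section title mentions backpropagation, while the loop above only prints the loss. As a minimal sketch of the missing step, the code below calls backward() on a loss value and checks that gradients appear on the parameters. The small Flatten plus Linear model, the random image, and the target class 3 are assumptions used to keep the example short; they are not the network or data from the notes.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical small stand-in for the network above.
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))
loss_fn = nn.CrossEntropyLoss()

# Hypothetical single image and label in place of a DataLoader batch.
imgs = torch.randn(1, 3, 32, 32)
targets = torch.tensor([3])

outputs = model(imgs)
result = loss_fn(outputs, targets)

weight = model[1].weight
grad_before = weight.grad  # None: nothing has been backpropagated yet

# Backpropagation: fills .grad on every parameter that contributed to the loss.
result.backward()

grad_shape = tuple(weight.grad.shape)
print(grad_before, grad_shape)
```

An optimizer would then read these `.grad` values to update the weights; that step is outside the scope of these notes.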
