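Context Engineering from Scratch: The Core Technique for Fixing AI Agents That "Go on Strike"

This article examines why AI Agents "drop the ball" and argues that the root cause is usually a failure of context engineering rather than a lack of model capability. It walks through the concept of context engineering, its four core techniques, system architecture, applied practice, design principles, and future trends. Its thesis is that "model capability + context engineering" will be the twin engines of competitive AI systems: an AI only delivers on its potential when it works in the right context.

You may have run into this: you painstakingly build an AI Agent, and after a while it keeps "dropping the ball". It misreads what you mean, gives baffling answers, or simply "goes on strike" and stops working.

You might wonder: is the model not strong enough? Do we need a more advanced GPT-5?

In practice, most AI Agent failures are failures of context engineering, not of model capability.

We pay too much attention to the model itself and overlook a more critical question: how to make the AI work in the right context. This article takes a systems-engineering view of context engineering and covers its core principles, implementation methods, and best practices, so that you can understand the technique that decides whether an AI Agent succeeds or fails.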

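I. The Root Problem: Why Are AI Agents So "Unreliable"?

What we observe: advanced technology, "dumb" experience

Have you run into situations like these?

Scenario 1: Customer-service Agent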


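User: "I'd like to look up my order from 3 p.m. yesterday."

AI: "Sure, I can help you look that up. What do you need help with?"

User: "......"

Scenario 2: Code-assistant Agent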


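User: "Help me optimize the performance of this Python code."

AI: "I'd be happy to help! But I need to see your code before I can make suggestions."

User: "I just pasted the code for you!"

AI: "Sorry, I don't see any code."

Scenario 3: Data-analysis Agent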


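User: "Based on the sales data I just uploaded, analyze the Q3 trend."

AI: "Please upload the data file first and I'll analyze it for you."

User: "I uploaded the data 10 minutes ago. Are you kidding me?"

Root cause: not an IQ problem but a "memory" problem

What do these failures have in common? The AI is not short on intelligence; it fails to manage and use context effectively.

The conventional view:

- AI failure = insufficient model capability
- Solution = a more powerful model

What actually happens:

- AI failure = context-management failure
- Solution = better context engineering

Three core challenges of context management:

Challenge 1: Information loss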


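Dialogue turn: 1 → 2 → 3 → 4 → 5 → 6

Context retained (illustrative figures): 100% → 90% → 70% → 40% → 20% → 5%

As the conversation goes on, important early information is gradually lost and the AI starts to "forget".

Challenge 2: Information clutter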


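Current task: analyze sales data

Context contains:
├─ User's personal information
├─ Chat history
├─ Sales data file
├─ Previous analysis results
├─ System configuration
└─ Unrelated small talk

Result: the AI cannot tell which information is relevant

Challenge 3: Information overload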


Input token limit: 4096 tokens

Context actually needed: 8000+ tokens

Result: either important information is truncated or the task is refused
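To make the overload problem concrete, here is a minimal sketch of the naive approach: keep only the newest messages that fit a token budget. The function names and the "about 4 characters per token" estimate are assumptions for illustration, not a real tokenizer.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Very rough token estimate (assumption: about 4 characters per token)."""
    return max(1, len(text) // 4)


def fit_to_budget(messages: List[str], max_tokens: int) -> List[str]:
    """Keep the newest messages whose combined estimate fits within max_tokens."""
    kept: List[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = ["a" * 40, "b" * 40, "c" * 40]  # 10 estimated tokens each
print(fit_to_budget(history, max_tokens=25))
```

With a budget of 25, the oldest message is dropped regardless of how important it was. That is exactly the truncation failure described above, and it is why selection and compression are needed rather than a plain sliding window.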


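II. Concepts: What Exactly Is Context Engineering?

Definition and scope: more than "multi-turn dialogue"

A formal definition of Context Engineering:

Context engineering is a systematic engineering discipline focused on designing, building, and optimizing the context-management mechanisms of AI systems, so that the AI can make accurate decisions and responses in the right information environment.

This definition has three key elements:

- Systematic: a complete engineering system, not an ad hoc patch
- Information environment: not only text, but also state, history, configuration, and so on
- Accurate decisions: the end goal is to improve the AI's real-world performance

How it relates to neighboring techniques

Context engineering is easily confused with related techniques, so let's sort out how they relate.

Context engineering vs. prompt engineering

Prompt Engineering: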


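# Optimizes a single interaction
prompt = """
You are a professional data analyst.
Please analyze the following sales data:
[data...]
Requirements:
1. Compute the growth rate
2. Identify outliers
3. Suggest improvements
"""

Context engineering: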


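# Manages multi-turn interaction
class ContextManager:
    def __init__(self):
        self.conversation_history = []
        self.user_profile = {}
        self.current_task_state = {}
        self.relevant_documents = []

    def update_context(self, new_info):
        # Intelligently select and integrate information
        # Compress the conversation history
        # Maintain task state
        # Dynamically load relevant documents
        ...  # outline only; the body is left unimplemented in this sketch

Key difference:

- Prompt engineering is about "what to say"
- Context engineering is about "what to remember"

Context engineering vs. RAG (Retrieval-Augmented Generation)

How RAG works: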


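# Retrieve relevant documents for a query
# (embed, vector_db, and llm are placeholders for your own components)
def rag_process(query):
    # 1. Convert the query to a vector
    query_embedding = embed(query)
    # 2. Retrieve similar documents
    relevant_docs = vector_db.search(query_embedding, top_k=5)
    # 3. Concatenate the context
    context = "\n".join(relevant_docs)
    # 4. Generate the answer
    return llm.generate(f"{context}\n\nQuestion: {query}")

How context engineering works: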


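# Manage multiple context sources together
# (the add_* methods and llm are illustrative; user_id and current_task
# are passed in explicitly so the function does not rely on globals)
def context_engineering_process(current_input, user_id, current_task):
    context = ContextManager()
    # 1. Conversation-history context
    context.add_conversation_history(filter_relevant=True)
    # 2. Task-state context
    context.add_task_state(current_task)
    # 3. User-profile context
    context.add_user_profile(user_id)
    # 4. Dynamically retrieved context (similar to RAG)
    context.add_retrieved_info(query=current_input)
    # 5. Intelligent compression and selection
    optimized_context = context.optimize_for_model()
    return llm.generate_with_context(optimized_context, current_input)

Key difference:

- RAG focuses on retrieving external knowledge
- Context engineering manages every type of context information

Context engineering vs. MCP (Model Context Protocol)

What MCP focuses on:

- A standardized protocol for passing context
- Context compatibility across different systems
- Technical standards and specifications

What context engineering focuses on:

- Lifecycle management of context
- Intelligent selection and optimization of information
- Improving real business outcomes

Relationship:

- MCP is infrastructure for context engineering
- Context engineering is MCP applied in practice

The four core techniques of context engineering

In current practice, context engineering centers on four core techniques:

1. Context Writing

Goal: collect and record context information effectively

Key technique: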


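import uuid
from datetime import datetime

class ContextWriter:
    def __init__(self):
        self.structured_memory = {}
        self.semantic_index = {}

    def write_interaction(self, user_input, ai_response, metadata):
        # Structured storage. extract_intent, extract_entities, evaluate_success,
        # and embed are assumed helper methods that are not shown here.
        interaction = {
            'id': str(uuid.uuid4()),  # unique key shared by both stores
            'timestamp': datetime.now(),
            'user_intent': self.extract_intent(user_input),
            'entities': self.extract_entities(user_input),
            'task_state': metadata.get('task_state'),
            'success_flag': self.evaluate_success(ai_response)
        }
        self.structured_memory[interaction['id']] = interaction
        # Semantic index
        embedding = self.embed(user_input + ai_response)
        self.semantic_index[interaction['id']] = embedding
        return interaction['id']

2. Context Selection

Goal: pick the most relevant content out of a large body of history

Key technique: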


class ContextSelector:
    def select_relevant_context(self, current_query, max_tokens=2000):
        # load_candidates and the scoring helpers are assumed methods; each
        # scoring helper returns one score per candidate as a numeric array
        candidates = self.load_candidates()
        # 1. Semantic-similarity filtering
        query_embedding = self.embed(current_query)
        semantic_scores = self.compute_similarity(query_embedding, candidates)
        # 2. Time-weight adjustment
        time_weights = self.compute_time_decay(candidates)
        # 3. Task-relevance scoring
        task_relevance = self.compute_task_relevance(current_query, candidates)
        # 4. Combined score and ranking
        final_scores = (semantic_scores * 0.4 +
                        time_weights * 0.3 +
                        task_relevance * 0.3)
        return self.select_top_k_within_limit(candidates, final_scores, max_tokens)
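The scoring idea above can be sketched as a small runnable example. This is an illustration under assumptions rather than the article's implementation: the `Candidate` fields, the exponential decay with a 24-hour half-life, and the greedy budget fill are all hypothetical choices; only the 0.4 / 0.3 / 0.3 weights come from the outline above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    text: str
    semantic: float        # similarity to the query, in [0, 1]
    age_hours: float       # time since the item was recorded
    task_relevance: float  # relevance to the active task, in [0, 1]
    tokens: int            # token cost of including the item


def time_decay(age_hours: float, half_life_hours: float = 24.0) -> float:
    """Exponential decay: 1.0 for a brand-new item, 0.5 after one half-life."""
    return 0.5 ** (age_hours / half_life_hours)


def score(c: Candidate) -> float:
    """Weighted sum using the 0.4 / 0.3 / 0.3 split from the outline."""
    return 0.4 * c.semantic + 0.3 * time_decay(c.age_hours) + 0.3 * c.task_relevance


def select_within_limit(candidates: List[Candidate], max_tokens: int) -> List[Candidate]:
    """Greedily take the highest-scoring candidates that still fit the budget."""
    selected: List[Candidate] = []
    used = 0
    for c in sorted(candidates, key=score, reverse=True):
        if used + c.tokens <= max_tokens:
            selected.append(c)
            used += c.tokens
    return selected


items = [
    Candidate("order #1 details", semantic=0.9, age_hours=1, task_relevance=0.8, tokens=120),
    Candidate("small talk", semantic=0.1, age_hours=0.5, task_relevance=0.0, tokens=80),
    Candidate("last week's refund", semantic=0.6, age_hours=168, task_relevance=0.5, tokens=150),
]
print([c.text for c in select_within_limit(items, max_tokens=300)])
```

The week-old refund record still outranks the fresh small talk because semantic and task relevance outweigh recency, which is the point of combining several signals instead of sorting by time alone.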

3. Context Compression

Goal: reduce token consumption while preserving key information

Key technique:


class ContextCompressor:
    def compress_conversation_history(self, history, target_length):
        compressed = []
        for interaction in history:
            # Extract the key points
            summary = self.extract_key_points(interaction)
            # Remove redundant wording
            compressed_text = self.remove_redundancy(summary)
            # Preserve important entities and relations
            entities = self.preserve_entities(interaction)
            compressed.append({
                'summary': compressed_text,
                'entities': entities,
                'importance_score': self.calculate_importance(interaction)
            })
        return self.optimize_for_target_length(compressed, target_length)

4. Context Isolation

Goal: prevent context contamination across tasks or users

Key technique:


class ContextIsolator:
    def __init__(self):
        self.session_contexts = {}
        self.user_contexts = {}
        self.task_contexts = {}

    def get_isolated_context(self, user_id, session_id, task_id):
        # User-level isolation
        user_context = self.user_contexts.get(user_id, {})
        # Session-level isolation
        session_context = self.session_contexts.get(session_id, {})
        # Task-level isolation
        task_context = self.task_contexts.get(task_id, {})
        # Merge safely to avoid leaks
        return self.secure_merge(user_context, session_context, task_context)

    def secure_merge(self, *contexts):
        # Apply access control and information filtering
        # Ensure sensitive information does not leak across scopes
        pass
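Since `secure_merge` is left as a stub above, here is one possible shape for it: a minimal sketch that assumes a per-scope allow-list of keys. The `ALLOWED_KEYS` table, the key names, and the `scope.key` namespacing are all hypothetical; a real system would load its access rules from policy configuration.

```python
from typing import Any, Dict, Set

# Hypothetical allow-list: which keys each scope is permitted to contribute.
ALLOWED_KEYS: Dict[str, Set[str]] = {
    "user": {"language", "tier"},
    "session": {"recent_topics"},
    "task": {"task_id", "status"},
}


def secure_merge(user: Dict[str, Any], session: Dict[str, Any],
                 task: Dict[str, Any]) -> Dict[str, Any]:
    """Merge scopes under namespaced keys, dropping anything not allow-listed."""
    merged: Dict[str, Any] = {}
    for scope, context in (("user", user), ("session", session), ("task", task)):
        for key, value in context.items():
            if key in ALLOWED_KEYS[scope]:
                merged[f"{scope}.{key}"] = value
    return merged


result = secure_merge(
    {"language": "en", "payment_card": "4111-xxxx"},
    {"recent_topics": ["shipping"]},
    {"task_id": "t-42", "status": "open"},
)
print(result)
```

Namespacing the keys keeps a task-level `status` from silently overwriting a user-level field of the same name, and the allow-list means new sensitive fields are excluded by default rather than leaked by default.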

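III. Implementation: Building an Efficient Context Management System

System architecture: layered context management

A complete context engineering system should use a layered architecture so that each component has a clear responsibility and the system stays extensible.

Architecture layers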


┌─────────────────────────────────────────┐
│          Application API layer          │
│   (Chat API, Agent API, Webhook API)    │
├─────────────────────────────────────────┤
│          Context engine layer           │
│   (Context Manager, Selection Engine)   │
├─────────────────────────────────────────┤
│        Storage abstraction layer        │
│  (Memory Interface, Vector Interface)   │
├─────────────────────────────────────────┤
│          Infrastructure layer           │
│   (Redis, Vector DB, Object Storage)    │
└─────────────────────────────────────────┘

Core components

1. Context Manager


class AdvancedContextManager:
    def __init__(self, config):
        self.config = config
        self.memory_store = self._init_memory_store()
        self.vector_store = self._init_vector_store()
        self.compressor = ContextCompressor()
        # IntelligentSelector (defined below) is the class that provides select_relevant()
        self.selector = IntelligentSelector()

    async def process_interaction(self, user_id, message, metadata=None):
        """Handle one complete interaction."""
        # 1. Load the user's context
        user_context = await self.load_user_context(user_id)
        # 2. Select relevant history
        relevant_history = await self.selector.select_relevant(
            query=message,
            user_context=user_context,
            max_tokens=self.config.max_context_tokens
        )
        # 3. Build the full context
        full_context = self._build_full_context(
            current_message=message,
            user_profile=user_context.profile,
            conversation_history=relevant_history,
            task_state=user_context.current_task,
            metadata=metadata
        )
        # 4. Compress (assumes the compressor also exposes an async compress() method)
        optimized_context = await self.compressor.compress(
            context=full_context,
            target_tokens=self.config.target_context_size
        )
        return optimized_context

    async def update_context(self, user_id, interaction_result):
        """Update the stored context."""
        # Extract key information
        extracted_info = self._extract_information(interaction_result)
        # Update the vector index
        await self.vector_store.upsert(
            id=f"{user_id}_{interaction_result.timestamp}",
            vector=extracted_info.embedding,
            metadata=extracted_info.metadata
        )
        # Update structured storage
        await self.memory_store.update_user_context(
            user_id=user_id,
            new_info=extracted_info
        )

2. Selection Engine


class IntelligentSelector:
    def __init__(self):
        self.similarity_threshold = 0.7
        self.recency_weight = 0.3
        self.importance_weight = 0.4
        self.relevance_weight = 0.3
        self.task_weight = 0.2  # task-specific weight

    async def select_relevant(self, query, user_context, max_tokens):
        """Select the relevant context."""
        # 1. Generate candidates
        candidates = await self._generate_candidates(user_context)
        # 2. Score along several dimensions
        scores = await self._calculate_multi_dimensional_scores(
            query=query,
            candidates=candidates
        )
        # 3. Adjust the threshold dynamically
        adaptive_threshold = self._calculate_adaptive_threshold(scores)
        # 4. Pick the best subset
        selected = self._select_optimal_subset(
            candidates=candidates,
            scores=scores,
            threshold=adaptive_threshold,
            max_tokens=max_tokens
        )
        return selected

    async def _calculate_multi_dimensional_scores(self, query, candidates):
        """Compute a multi-dimensional score for each candidate."""
        scores = {}
        # The four weights sum to 1.2, so divide by the total to keep the
        # final score in [0, 1] (assuming each sub-score is in [0, 1])
        total_weight = (self.relevance_weight + self.recency_weight +
                        self.importance_weight + self.task_weight)
        for candidate in candidates:
            # Semantic similarity
            semantic_score = await self._semantic_similarity(query, candidate)
            # Recency
            recency_score = self._calculate_recency_score(candidate.timestamp)
            # Importance
            importance_score = self._calculate_importance_score(candidate)
            # Task relevance
            task_relevance = self._calculate_task_relevance(query, candidate)
            # Weighted combination
            final_score = (
                semantic_score * self.relevance_weight +
                recency_score * self.recency_weight +
                importance_score * self.importance_weight +
                task_relevance * self.task_weight
            ) / total_weight
            scores[candidate.id] = final_score
        return scores

Performance optimization strategies

Cache design


class ContextCache:
    def __init__(self):
        # Redis() and Database() are placeholders for async client wrappers
        self.l1_cache = {}          # in-memory cache, most recently accessed
        self.l2_cache = Redis()     # Redis cache, session level
        self.l3_cache = Database()  # database, persistent storage

    async def get_context(self, cache_key):
        # L1 hit
        if cache_key in self.l1_cache:
            return self.l1_cache[cache_key]
        # L2 hit: promote to L1
        l2_result = await self.l2_cache.get(cache_key)
        if l2_result is not None:
            self.l1_cache[cache_key] = l2_result
            return l2_result
        # L3 hit: promote to L2 and L1
        l3_result = await self.l3_cache.get(cache_key)
        if l3_result is not None:
            await self.l2_cache.set(cache_key, l3_result, ttl=3600)
            self.l1_cache[cache_key] = l3_result
            return l3_result
        return None
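The lookup-and-promote flow above can be exercised without any external services. The sketch below swaps Redis and the database for a hypothetical in-memory `DictStore`, so it only demonstrates the promotion logic and ignores TTLs, eviction, and serialization.

```python
import asyncio
from typing import Any, Dict, Optional


class DictStore:
    """In-memory stand-in for a remote store such as Redis or a database."""

    def __init__(self, data: Optional[Dict[str, Any]] = None):
        self.data = dict(data or {})

    async def get(self, key: str) -> Optional[Any]:
        return self.data.get(key)

    async def set(self, key: str, value: Any) -> None:
        self.data[key] = value


class TieredCache:
    def __init__(self, l2: DictStore, l3: DictStore):
        self.l1: Dict[str, Any] = {}
        self.l2 = l2
        self.l3 = l3

    async def get(self, key: str) -> Optional[Any]:
        if key in self.l1:
            return self.l1[key]
        value = await self.l2.get(key)
        if value is None:
            value = await self.l3.get(key)
            if value is None:
                return None                # miss at every tier
            await self.l2.set(key, value)  # promote from L3 to L2
        self.l1[key] = value               # promote to L1
        return value


cache = TieredCache(l2=DictStore(), l3=DictStore({"ctx:u1": {"name": "Ada"}}))
first = asyncio.run(cache.get("ctx:u1"))
print(first, "ctx:u1" in cache.l1, "ctx:u1" in cache.l2.data)
```

After the first read the entry lives in all three tiers, so later reads for the same key never leave process memory. The unbounded `l1` dict is a simplification; a production L1 would need a size limit such as an LRU policy.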

Asynchronous processing


import asyncio
import logging
from datetime import datetime

logger = logging.getLogger(__name__)

class AsyncContextProcessor:
    def __init__(self):
        self.background_tasks = asyncio.Queue()
        self.worker_pool = []

    async def process_context_async(self, user_id, interaction):
        """Handle context updates that are off the critical path asynchronously."""
        # Return the context that is needed right away
        immediate_context = await self._get_immediate_context(user_id)
        # Push the slow work onto the background queue
        background_task = {
            'type': 'context_update',
            'user_id': user_id,
            'interaction': interaction,
            'timestamp': datetime.now()
        }
        await self.background_tasks.put(background_task)
        return immediate_context

    async def background_worker(self):
        """Background worker coroutine."""
        while True:
            task = await self.background_tasks.get()
            try:
                if task['type'] == 'context_update':
                    await self._process_context_update(task)
                elif task['type'] == 'embedding_generation':
                    await self._process_embedding_generation(task)
            except Exception as e:
                logger.error(f"Background task failed: {e}")
            finally:
                # Always mark the item done so queue.join() cannot hang on a failed task
                self.background_tasks.task_done()

Monitoring and diagnostics

Context quality monitoring


class ContextQualityMonitor:
    def __init__(self):
        self.metrics_collector = MetricsCollector()

    async def monitor_context_quality(self, context, interaction_result):
        """Monitor context quality."""
        metrics = {
            # Completeness
            'completeness_score': self._calculate_completeness(context),
            # Relevance
            'relevance_score': self._calculate_relevance(context, interaction_result),
            # Consistency
            'consistency_score': self._calculate_consistency(context),
            # Performance
            'context_size': len(context.tokens),
            'selection_time': context.selection_time,
            'compression_ratio': context.compression_ratio,
            # Business metrics
            'task_success_rate': interaction_result.success_rate,
            'user_satisfaction': interaction_result.user_rating
        }
        await self.metrics_collector.record(metrics)
        # Quality alert
        if metrics['relevance_score'] < 0.6:
            await self._trigger_quality_alert(context, metrics)
        return metrics


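IV. In Practice: Context Engineering in Different Scenarios

Customer-service Agent: making support actually "smart"

The biggest pain point of a traditional customer-service Agent is that it answers a question other than the one asked, which is exactly where context engineering helps most.

Scenario: a customer asks about an e-commerce order

The traditional implementation: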


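# Simple intent classification plus canned replies
def handle_customer_query(query):
    intent = classify_intent(query)
    if intent == "order_inquiry":
        return "Please provide your order number and I'll look it up."
    elif intent == "refund_request":
        return "Please tell us why you are requesting a refund."
    else:
        return "Sorry, I didn't understand your question."

Problem: every turn is handled independently, so nothing the user said earlier is remembered.

The context-engineering implementation: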


class SmartCustomerServiceAgent:
    def __init__(self):
        self.context_manager = ContextManager()
        self.knowledge_base = CustomerKnowledgeBase()

    async def handle_customer_query(self, customer_id, query, session_id):
        # 1. Load the customer's full context
        customer_context = await self.context_manager.load_customer_context(
            customer_id=customer_id,
            include_history=True,
            include_orders=True,
            include_preferences=True
        )
        # 2. Work out which context the current query depends on
        context_dependencies = self._analyze_context_dependencies(query)
        # 3. Build the reply
        if context_dependencies.requires_order_info:
            # Check whether an order is already in context
            if customer_context.current_order:
                order_info = customer_context.current_order
                response = await self._handle_order_query_with_context(
                    query, order_info, customer_context
                )
            else:
                # Infer which orders the customer might mean
                potential_orders = await self._infer_potential_orders(
                    customer_context, query
                )
                if len(potential_orders) == 1:
                    # Lock onto the single matching order
                    order_info = potential_orders[0]
                    answer = await self._handle_order_query_with_context(
                        query, order_info, customer_context
                    )
                    response = f"I see your most recent order is {order_info.id}. {answer}"
                else:
                    response = await self._request_order_clarification(potential_orders)
        else:
            # Queries that need no order data; _handle_general_query is an
            # assumed helper so that response is always defined
            response = await self._handle_general_query(query, customer_context)
        # 4. Update the conversation context
        await self.context_manager.update_conversation_context(
            customer_id=customer_id,
            session_id=session_id,
            query=query,
            response=response,
            resolved_entities=self._extract_entities(query, response)
        )
        return response

Comparison:

A conversation with the traditional approach:


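User: "When will my order arrive?"

Agent: "Please provide your order number."

User: "It's the iPhone I bought yesterday afternoon."

Agent: "Please provide the exact order number."

User: "How am I supposed to know where the order number is?"

Agent: "You can find it on the My Orders page."

After applying context engineering: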


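User: "When will my order arrive?"

Agent: "I can see that you bought an iPhone 15 Pro yesterday afternoon, order number XXX123. It is expected to arrive tomorrow between 2 and 6 p.m. Would you like me to arrange a specific delivery time?"

User: "Great! Can it come after 4 p.m.?"

Agent: "No problem, I've added a note for delivery after 4 p.m. Is there anything else I can help with?"

Code-assistant Agent: actually understanding developer intent

Developers using a code assistant often work through many turns inside a single project, which puts heavy demands on context management.

Project context management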


class CodeAssistantAgent:
    def __init__(self, llm):
        self.project_context = ProjectContextManager()
        self.code_analyzer = CodeAnalyzer()
        self.llm = llm  # injected LLM client, assumed to expose generate_code()

    async def handle_code_request(self, developer_id, request, project_id=None):
        # 1. Load the project context
        if project_id:
            project_context = await self.project_context.load_project(project_id)
        else:
            # Infer the project context
            project_context = await self._infer_project_context(developer_id, request)
        # 2. Analyze code dependencies
        code_dependencies = await self.code_analyzer.analyze_dependencies(
            request=request,
            project_context=project_context
        )
        # 3. Generate context-aware code
        code_solution = await self._generate_contextual_code(
            request=request,
            project_context=project_context,
            dependencies=code_dependencies
        )
        return code_solution

    async def _generate_contextual_code(self, request, project_context, dependencies):
        """Generate context-aware code."""
        # Build the code-generation context
        generation_context = {
            'project_structure': project_context.structure,
            'existing_functions': project_context.functions,
            'coding_style': project_context.style_guide,
            'dependencies': dependencies,
            'recent_changes': project_context.recent_commits
        }
        # Generate the code
        prompt = self._build_code_generation_prompt(request, generation_context)
        code = await self.llm.generate_code(prompt)
        # Check that the code is compatible with the project
        compatibility_check = await self._verify_code_compatibility(
            code, project_context
        )
        if not compatibility_check.is_compatible:
            # Repair compatibility issues automatically
            code = await self._fix_compatibility_issues(
                code, compatibility_check.issues
            )
        return code

Context-aware code generation example

Scenario: a developer is adding a feature to a React project

Conversation flow:


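Developer: "Write me a user login component."

Context the assistant works out:

- Project type: React + TypeScript
- State management: Redux Toolkit
- UI library: Material-UI
- Authentication: JWT token
- API style: RESTful

Generated code: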

```typescript
import React, { useState } from 'react';
import { useDispatch } from 'react-redux';
import { TextField, Button, Box, Alert } from '@mui/material';
import { loginUser } from '../store/authSlice'; // inferred from the project structure
import { LoginCredentials } from '../types/auth'; // reuses the project's existing type
import type { AppDispatch } from '../store'; // assumed: the store exports its dispatch type

const LoginComponent: React.FC = () => {
  const [credentials, setCredentials] = useState<LoginCredentials>({
    email: '',
    password: ''
  });
  const [error, setError] = useState<string>('');
  // Typed dispatch so that dispatching the thunk and calling unwrap() type-check
  const dispatch = useDispatch<AppDispatch>();

  const handleSubmit = async (e: React.FormEvent) => {
    e.preventDefault();
    try {
      await dispatch(loginUser(credentials)).unwrap();
      // On success, routing is handled in authSlice
    } catch (err) {
      setError('Login failed. Please check your email and password.');
    }
  };

  return (
    <Box component="form" onSubmit={handleSubmit} sx={{ mt: 1 }}>
      {error && <Alert severity="error" sx={{ mb: 2 }}>{error}</Alert>}
      <TextField
        margin="normal"
        required
        fullWidth
        id="email"
        label="Email address"
        name="email"
        autoComplete="email"
        autoFocus
        value={credentials.email}
        onChange={(e) => setCredentials({...credentials, email: e.target.value})}
      />
      <TextField
        margin="normal"
        required
        fullWidth
        name="password"
        label="Password"
        type="password"
        id="password"
        autoComplete="current-password"
        value={credentials.password}
        onChange={(e) => setCredentials({...credentials, password: e.target.value})}
      />
      <Button
        type="submit"
        fullWidth
        variant="contained"
        sx={{ mt: 3, mb: 2 }}
      >
        Sign in
      </Button>
    </Box>
  );
};

export default LoginComponent;
```

The conversation continues:


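Developer: "This component needs a way to remember the login state."

A: "I'll add a 'Remember me' option, building on the localStorage utility class already in your project:"

// The added code will automatically:
// 1. Import the project's existing localStorage utility
// 2. Add a checkbox component
// 3. Update the login logic to support persistence
// 4. Stay consistent with the existing code style

Data-analysis Agent: context-driven analysis

Data analysis places especially high demands on context management, because analysis is usually a multi-step, iterative process.

Analysis context management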


class DataAnalysisAgent:
    def __init__(self):
        self.analysis_context = AnalysisContextManager()
        self.data_processor = DataProcessor()

    async def handle_analysis_request(self, user_id, request, session_id):
        # 1. Load the analysis session context
        session_context = await self.analysis_context.load_session(
            user_id=user_id,
            session_id=session_id
        )
        # 2. Understand the analysis intent
        analysis_intent = await self._parse_analysis_intent(
            request=request,
            context=session_context
        )
        # 3. Run the context-aware analysis
        if analysis_intent.requires_previous_results:
            # Continue from earlier analysis results
            previous_results = session_context.analysis_results
            analysis_result = await self._continue_analysis(
                request, previous_results, session_context
            )
        else:
            # Start a new analysis
            analysis_result = await self._start_new_analysis(
                request, session_context
            )
        # 4. Update the analysis context
        await self.analysis_context.update_session(
            session_id=session_id,
            new_result=analysis_result,
            analysis_chain=session_context.analysis_chain + [analysis_intent]
        )
        return analysis_result

A worked analysis scenario

User: a data scientist analyzing e-commerce sales data

Analysis conversation:


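Turn 1:

User: "Analyze the Q3 sales trend."

System: [loads the Q3 sales data automatically, generates a trend chart and key metrics]

Reply: "Q3 sales trended upward overall, with total sales of 320 million, up 15% year over year. September stood out in particular, up 22% month over month."

Turn 2:

User: "Why did September grow so fast?"

How the system reads the context:

- The user is asking about the cause of September's growth
- September's data needs a deeper look
- A comparison with other months may be needed

Reply: "September's growth was driven by three factors: 1) the new iPhone launch lifted electronics sales by 45%; 2) the Mid-Autumn promotion raised the conversion rate by 30%; 3) new-user acquisition grew by 28%."

Turn 3:

User: "Can this trend continue into Q4?"

How the system reads the context:

- The user is asking for a trend forecast
- The forecast must build on the Q3 analysis
- Q4-specific factors should be taken into account

Reply: "Based on the current trend and historical data, Q4 sales are expected to keep growing, but the growth rate may slow to around 12%. Keep an eye on the Double 11 and Double 12 promotions, which could add another 20%."

Turn 4:

User: "Generate an analysis report for my boss."

How the system reads the context:

- The whole analysis so far needs to be summarized
- The format should suit an executive audience
- It should include the key conclusions and recommendations

Reply: [generates an executive report covering all of the analysis results, highlighting key findings and actionable insights]

V. Best Practices: Building a Production-Grade Context Engineering System

Design principles: seven core guidelines

Drawing on experience from real projects, we distill seven core design principles for building a high-quality context engineering system:

1. Progressive Information Loading

Principle: load more detailed context step by step as the conversation deepens.

Implementation: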


class ProgressiveContextLoader:
    def __init__(self):
        self.context_levels = {
            'basic': ['user_profile', 'current_session'],
            'intermediate': ['recent_history', 'task_state', 'preferences'],
            'advanced': ['full_history', 'related_documents', 'behavioral_patterns']
        }

    async def load_context_by_depth(self, user_id, conversation_depth):
        # conversation_depth is the number of turns so far
        if conversation_depth <= 2:
            level = 'basic'
        elif conversation_depth <= 5:
            level = 'intermediate'
        else:
            level = 'advanced'
        context_components = self.context_levels[level]
        return await self._load_components(user_id, context_components)

Benefits:

- Lower initial latency
- Less compute spent
- No information overload

2. Intelligent Context Expiration

Principle: different kinds of context should expire under different policies.

Implementation:


from datetime import timedelta

class ContextExpirationManager:
    def __init__(self):
        self.expiration_rules = {
            'user_preferences': timedelta(days=30),            # preferences are relatively stable
            'task_state': timedelta(hours=2),                  # task state is short-lived
            'conversation_history': self._dynamic_expiration,  # computed per item
            'temporary_context': timedelta(minutes=15)         # temporary data expires quickly
        }

    def _dynamic_expiration(self, context_item):
        """Compute the TTL from importance and access frequency."""
        importance_score = context_item.importance_score
        last_access = context_item.last_access
        base_ttl = timedelta(days=7)
        # Importance above 0.5 lengthens the TTL, below 0.5 shortens it
        importance_multiplier = 1 + (importance_score - 0.5)
        # Frequent access lengthens the TTL, capped at 3x
        access_multiplier = 1 + min(context_item.access_count / 10, 2)
        return base_ttl * importance_multiplier * access_multiplier
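A quick worked example makes the dynamic TTL formula easier to reason about. The standalone `dynamic_ttl` function below is a hypothetical extraction of the same arithmetic, and it assumes `importance_score` lies in [0, 1], which the original does not state.

```python
from datetime import timedelta


def dynamic_ttl(importance_score: float, access_count: int,
                base_ttl: timedelta = timedelta(days=7)) -> timedelta:
    """Same arithmetic as _dynamic_expiration, taken out of the class."""
    importance_multiplier = 1 + (importance_score - 0.5)  # 0.5x to 1.5x
    access_multiplier = 1 + min(access_count / 10, 2)     # 1x to 3x
    return base_ttl * importance_multiplier * access_multiplier


print(dynamic_ttl(0.5, 0))   # neutral importance, never accessed
print(dynamic_ttl(1.0, 20))  # maximum importance, heavily accessed
print(dynamic_ttl(0.0, 0))   # minimum importance, never accessed
```

Under that assumption the TTL ranges from 3.5 days (importance 0, no accesses) to 31.5 days (importance 1, 20 or more accesses), so even the least important history survives a few days and nothing lives much longer than a month.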

3. Context Consistency Validation

Principle: make sure context from different sources stays logically consistent.

Implementation:


class ContextConsistencyValidator:
    async def validate_context_consistency(self, context_bundle):
        inconsistencies = []
        # Check temporal consistency
        time_conflicts = self._check_temporal_consistency(context_bundle)
        if time_conflicts:
            inconsistencies.extend(time_conflicts)
        # Check logical consistency
        logic_conflicts = self._check_logical_consistency(context_bundle)
        if logic_conflicts:
            inconsistencies.extend(logic_conflicts)
        # Check entity consistency
        entity_conflicts = self._check_entity_consistency(context_bundle)
        if entity_conflicts:
            inconsistencies.extend(entity_conflicts)
        if inconsistencies:
            return await self._resolve_inconsistencies(inconsistencies)
        return context_bundle

    async def _resolve_inconsistencies(self, inconsistencies):
        """Resolve context conflicts."""
        for conflict in inconsistencies:
            if conflict.type == 'temporal':
                # Prefer the most recent information
                resolved = self._select_most_recent(conflict.conflicting_items)
            elif conflict.type == 'logical':
                # Prefer the information with the highest confidence
                resolved = self._select_highest_confidence(conflict.conflicting_items)
            else:
                # Flag for human review
                resolved = self._mark_for_review(conflict)
            conflict.resolved_value = resolved
        return self._apply_resolutions(inconsistencies)
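The two automatic resolution strategies above can be sketched in a few lines. The `ContextItem` fields and the sample values are hypothetical; the point is only to show that "most recent" and "highest confidence" can pick different winners for the same conflict.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class ContextItem:
    key: str
    value: str
    updated_at: datetime
    confidence: float


def select_most_recent(items: List[ContextItem]) -> ContextItem:
    """Temporal conflicts: the latest write wins."""
    return max(items, key=lambda item: item.updated_at)


def select_highest_confidence(items: List[ContextItem]) -> ContextItem:
    """Logical conflicts: the most trusted source wins."""
    return max(items, key=lambda item: item.confidence)


conflict = [
    ContextItem("shipping_address", "12 Old Road", datetime(2024, 1, 5), 0.9),
    ContextItem("shipping_address", "7 New Street", datetime(2024, 3, 1), 0.7),
]
print(select_most_recent(conflict).value)
print(select_highest_confidence(conflict).value)
```

Because the strategies disagree here, classifying the conflict type correctly matters as much as the resolution rule itself, which is a reasonable argument for routing ambiguous cases to human review.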

4. Multi-modal Context Fusion

Principle: integrate context from multiple modalities such as text, images, and audio.

Implementation:


class MultimodalContextFusion:
    def __init__(self):
        self.text_processor = TextContextProcessor()
        self.image_processor = ImageContextProcessor()
        self.audio_processor = AudioContextProcessor()

    async def fuse_multimodal_context(self, context_inputs):
        fused_context = {}
        # Process each modality that is present
        if 'text' in context_inputs:
            text_features = await self.text_processor.extract_features(
                context_inputs['text']
            )
            fused_context['text'] = text_features
        if 'images' in context_inputs:
            image_features = await self.image_processor.extract_features(
                context_inputs['images']
            )
            fused_context['visual'] = image_features
        if 'audio' in context_inputs:
            audio_features = await self.audio_processor.extract_features(
                context_inputs['audio']
            )
            fused_context['audio'] = audio_features
        # Analyze relations across modalities
        cross_modal_relations = await self._analyze_cross_modal_relations(
            fused_context
        )
        # Build a unified context representation
        unified_context = await self._create_unified_representation(
            fused_context, cross_modal_relations
        )
        return unified_context

Performance optimization: key considerations in production

1. Distributed context storage

Architecture:


from datetime import datetime, timedelta


class DistributedContextStorage:
    def __init__(self):
        # Redis, MongoDB and S3 are placeholders for async storage clients,
        # not real driver classes.
        self.hot_storage = Redis()      # hot data: last hour
        self.warm_storage = MongoDB()   # warm data: last month
        self.cold_storage = S3()        # cold data: historical archive

    async def get_context(self, user_id, context_type):
        hot_key = f"hot:{user_id}:{context_type}"

        # Try hot storage first
        hot_data = await self.hot_storage.get(hot_key)
        if hot_data:
            return hot_data

        # Fall back to warm storage
        warm_data = await self.warm_storage.find_one({
            'user_id': user_id,
            'context_type': context_type,
            'last_access': {'$gte': datetime.now() - timedelta(days=30)}
        })
        if warm_data:
            # Promote to hot storage with a one-hour TTL
            # (serialization is assumed to be handled by the client wrapper)
            await self.hot_storage.setex(hot_key, 3600, warm_data)
            return warm_data

        # Finally load from cold storage. Awaiting the coroutine directly is
        # equivalent to creating a task and awaiting it immediately.
        return await self._load_from_cold_storage(user_id, context_type)
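
To make the hot/warm/cold lookup order concrete, here is a minimal in-memory sketch of the same idea. It is only an illustration under stated assumptions: plain dicts stand in for Redis, MongoDB and S3, and `InMemoryTieredStore`, `hot_ttl_seconds` and the `now` parameter are hypothetical names that do not appear in the original design.

```python
import asyncio
import time


class InMemoryTieredStore:
    """Toy hot/warm/cold lookup with promotion; dicts replace real backends."""

    def __init__(self, hot_ttl_seconds=3600):
        self.hot_ttl_seconds = hot_ttl_seconds
        self.hot = {}    # key -> (expires_at, value)
        self.warm = {}   # key -> value
        self.cold = {}   # key -> value

    async def get_context(self, user_id, context_type, now=None):
        now = time.time() if now is None else now
        key = f"{user_id}:{context_type}"

        # 1. Hot tier: honor the TTL and drop expired entries.
        entry = self.hot.get(key)
        if entry is not None:
            expires_at, value = entry
            if expires_at > now:
                return value, "hot"
            del self.hot[key]

        # 2. Warm tier: on a hit, promote the value to the hot tier.
        if key in self.warm:
            value = self.warm[key]
            self.hot[key] = (now + self.hot_ttl_seconds, value)
            return value, "warm"

        # 3. Cold tier: last resort; a miss returns None.
        if key in self.cold:
            return self.cold[key], "cold"
        return None, "miss"
```

Returning the tier name alongside the value is a choice made for this sketch; it makes it easy to see which tier served a request and to feed a cache-hit-rate metric.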

2. Context precomputation and caching

Strategy implementation:

class ContextPrecomputation:
    def __init__(self):
        # AsyncScheduler is a placeholder for whatever task scheduler is in use
        self.precompute_scheduler = AsyncScheduler()

    async def schedule_precomputation(self, user_id):
        """Precompute context for an active user."""
        # Analyze the user's behavior patterns
        user_patterns = await self._analyze_user_patterns(user_id)

        # Predict which contexts are likely to be needed
        predicted_contexts = await self._predict_required_contexts(
            user_patterns
        )

        # Schedule one precomputation task per predicted context
        for context_type, prediction in predicted_contexts.items():
            await self.precompute_scheduler.schedule(
                task_type='context_precompute',
                user_id=user_id,
                context_type=context_type,
                priority=prediction.priority
            )

    async def precompute_context(self, user_id, context_type):
        """Run the precomputation for one context type."""
        # Collect the raw data
        raw_data = await self._collect_raw_context_data(user_id, context_type)

        # Process and compress it
        processed_context = await self._process_and_compress(raw_data)

        # Cache the result
        await self._cache_precomputed_context(
            user_id, context_type, processed_context
        )
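
The scheduler itself is not shown above. One simple way to order precomputation jobs by predicted priority is a heap-based queue, sketched below. `PrecomputeQueue` and its methods are hypothetical names for this illustration, and the de-duplication rule (one pending job per user and context type) is an assumption rather than part of the original design.

```python
import heapq
import itertools


class PrecomputeQueue:
    """Priority queue of (user_id, context_type) jobs; higher priority first."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps insertion order
        self._pending = set()              # avoids duplicate jobs

    def schedule(self, user_id, context_type, priority):
        job = (user_id, context_type)
        if job in self._pending:
            return False
        # heapq is a min-heap, so the priority is negated.
        heapq.heappush(self._heap, (-priority, next(self._counter), job))
        self._pending.add(job)
        return True

    def pop_next(self):
        if not self._heap:
            return None
        _, _, job = heapq.heappop(self._heap)
        self._pending.discard(job)
        return job
```

A worker loop would call `pop_next()` and run the precomputation for each job it returns until the queue is empty.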

Monitoring and operations: keeping the system stable

1. Context quality monitoring

Metric system:

class ContextQualityMetrics:
    def __init__(self):
        # GaugeMetric and HistogramMetric are placeholders for the metric
        # types of whatever monitoring client is in use.
        self.metrics = {
            'relevance_score': GaugeMetric('context_relevance'),
            'completeness_score': GaugeMetric('context_completeness'),
            'consistency_score': GaugeMetric('context_consistency'),
            'latency': HistogramMetric('context_latency'),
            'cache_hit_rate': GaugeMetric('context_cache_hit_rate'),
            'context_size': HistogramMetric('context_size_tokens')
        }

    async def record_context_metrics(self, context_operation):
        # Record the relevance score
        relevance = await self._calculate_relevance_score(context_operation)
        self.metrics['relevance_score'].set(relevance)

        # Record the completeness score
        completeness = await self._calculate_completeness_score(context_operation)
        self.metrics['completeness_score'].set(completeness)

        # Record the latency
        self.metrics['latency'].observe(context_operation.duration)

        # Record the context size in tokens
        self.metrics['context_size'].observe(context_operation.token_count)
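
The scoring helpers are left undefined above. As a rough stand-in, the sketch below scores relevance as the mean Jaccard token overlap between the query and each context chunk, and tracks a running cache hit rate. Both are assumptions for illustration only: `jaccard_relevance` and `CacheHitRate` are hypothetical names, and a production system would more likely use embedding similarity than whitespace tokens.

```python
def jaccard_relevance(query, context_chunks):
    """Mean Jaccard overlap between query tokens and each context chunk."""
    query_tokens = set(query.lower().split())
    if not query_tokens or not context_chunks:
        return 0.0
    scores = []
    for chunk in context_chunks:
        chunk_tokens = set(chunk.lower().split())
        union = query_tokens | chunk_tokens
        scores.append(len(query_tokens & chunk_tokens) / len(union))
    return sum(scores) / len(scores)


class CacheHitRate:
    """Running hit-rate counter that could feed the cache_hit_rate gauge."""

    def __init__(self):
        self.hits = 0
        self.total = 0

    def record(self, hit):
        self.total += 1
        if hit:
            self.hits += 1

    @property
    def value(self):
        return self.hits / self.total if self.total else 0.0
```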

2. Anomaly detection and automatic recovery

Exception handling mechanism:

import logging

logger = logging.getLogger(__name__)


class ContextExceptionHandler:
    def __init__(self):
        # Each handler below must be defined on the class; only
        # _handle_corruption is shown here.
        self.fallback_strategies = {
            'context_corruption': self._handle_corruption,
            'context_timeout': self._handle_timeout,
            'context_inconsistency': self._handle_inconsistency,
            'memory_overflow': self._handle_memory_overflow
        }

    async def handle_context_exception(self, exception, context_state):
        exception_type = self._classify_exception(exception)

        if exception_type in self.fallback_strategies:
            recovery_strategy = self.fallback_strategies[exception_type]
            return await recovery_strategy(exception, context_state)
        else:
            # Unknown exception: switch to safe mode
            return await self._enable_safe_mode(context_state)

    async def _handle_corruption(self, exception, context_state):
        """Handle a corrupted context."""
        # 1. Restore from backup
        backup_context = await self._restore_from_backup(context_state.user_id)
        if backup_context:
            logger.info(
                "Restored context from backup for user %s", context_state.user_id
            )
            return backup_context

        # 2. Rebuild a minimal context
        minimal_context = await self._rebuild_minimal_context(context_state.user_id)

        # 3. Record the incident for later analysis
        await self._log_corruption_incident(exception, context_state)

        return minimal_context
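
The dispatch pattern above (classify the failure, run a matching recovery strategy, fall back to safe mode) can be tried out with a small self-contained sketch. Everything here is hypothetical and simplified: `ContextCorruptionError`, the three recovery coroutines and the dict-based `state` and `backups` arguments are assumptions made for the example, not the original implementation.

```python
import asyncio


class ContextCorruptionError(Exception):
    """Hypothetical error raised when stored context fails validation."""


async def restore_or_rebuild(state, backups):
    # Prefer the backup; otherwise rebuild a minimal context.
    backup = backups.get(state["user_id"])
    if backup is not None:
        return {"source": "backup", "context": backup}
    return {"source": "minimal", "context": {"user_id": state["user_id"]}}


async def retry_with_smaller_window(state, backups):
    # On timeout, keep only the two most recent messages.
    return {"source": "truncated", "context": state["messages"][-2:]}


async def safe_mode(state, backups):
    # Unknown failure: continue without stored context.
    return {"source": "safe_mode", "context": {}}


# Exception class -> recovery coroutine.
STRATEGIES = {
    ContextCorruptionError: restore_or_rebuild,
    asyncio.TimeoutError: retry_with_smaller_window,
}


async def handle_context_exception(exc, state, backups):
    for exc_type, strategy in STRATEGIES.items():
        if isinstance(exc, exc_type):
            return await strategy(state, backups)
    return await safe_mode(state, backups)
```

Matching on exception classes with `isinstance` replaces the string-based classifier in this sketch; either approach works as long as unknown failures always reach the safe-mode branch.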

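VI. Future trends: where context engineering is heading

Technical evolution: three major trends

1. Adaptive Context Management

Future context engineering systems will be able to learn and optimize themselves, with no need for manual parameter tuning.

Core characteristics: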

class AdaptiveContextManager:
    def __init__(self):
        self.learning_engine = ContextLearningEngine()
        self.adaptation_controller = AdaptationController()
        # Base weights are not specified in the original; an empty dict is
        # a placeholder so that the attribute exists.
        self.default_weights = {}

    async def adaptive_context_selection(self, user_id, query):
        """Select context adaptively."""
        # 1. Adjust the selection strategy based on historical success rates
        historical_performance = await self.learning_engine.get_performance_stats(
            user_id=user_id,
            context_type='selection_strategy'
        )

        # 2. Adjust the weights dynamically
        adaptive_weights = self.adaptation_controller.calculate_adaptive_weights(
            base_weights=self.default_weights,
            performance_feedback=historical_performance,
            current_context=query
        )

        # 3. Run the selection with the tuned weights
        selected_context = await self._select_with_adaptive_weights(
            query, adaptive_weights
        )

        return selected_context

    async def learn_from_interaction(self, interaction_result):
        """Learn from the outcome of an interaction."""
        # Extract the learning signals
        learning_signals = {
            'context_relevance': interaction_result.relevance_score,
            'task_success': interaction_result.task_completed,
            'user_satisfaction': interaction_result.user_rating,
            'response_quality': interaction_result.response_quality
        }

        # Update the learning model
        await self.learning_engine.update_model(
            context_features=interaction_result.context_features,
            outcomes=learning_signals
        )

        # Adjust the system parameters
        parameter_updates = await self.learning_engine.suggest_parameter_updates()
        await self.adaptation_controller.apply_updates(parameter_updates)
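
How the weights get adjusted is left open above. One simple possibility, shown here purely as a sketch, is to move the weight of each context source that was used toward the observed reward with an exponential moving average, then renormalize. The function name `update_weights`, the source names and the `learning_rate` default are all assumptions for this example.

```python
def update_weights(weights, used_sources, reward, learning_rate=0.2):
    """Nudge the weights of the sources that were used toward the reward.

    weights: dict source -> weight (non-negative, sums to 1)
    used_sources: sources included in the context for this interaction
    reward: feedback in [0, 1], e.g. task success or a normalized rating
    """
    updated = dict(weights)
    for source in used_sources:
        old = updated[source]
        # Exponential moving average toward the reward.
        updated[source] = (1 - learning_rate) * old + learning_rate * reward
    total = sum(updated.values())
    if total == 0:
        # Degenerate case: fall back to uniform weights.
        return {source: 1 / len(updated) for source in updated}
    return {source: value / total for source, value in updated.items()}
```

For example, starting from `{"recent": 0.5, "memory": 0.3, "docs": 0.2}`, a successful interaction that used `"docs"` raises its share and lowers the other two after renormalization.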

2. Cross-modal Context Understanding

Technical directions:

- Joint vision-language understanding

- Audio-text contextual fusion

- Multi-sensory information integration

Application example:

class CrossModalContextProcessor:
    # The encoders, cross_modal_transformer and context_fusion_layer are
    # assumed to be set up elsewhere (for example in __init__).

    async def process_multimodal_input(self, text, image, audio):
        """Build a context representation from multimodal input."""
        # 1. Extract features for each modality
        text_features = await self.text_encoder.encode(text)
        visual_features = await self.vision_encoder.encode(image)
        audio_features = await self.audio_encoder.encode(audio)

        # 2. Analyze relations across modalities
        cross_modal_attention = await self.cross_modal_transformer.forward(
            text_features, visual_features, audio_features
        )

        # 3. Produce a unified context representation
        unified_context = await self.context_fusion_layer.fuse(
            cross_modal_attention
        )

        return unified_context
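
The transformer-based fusion above depends on trained models. A much simpler baseline, sketched below, is late fusion: L2-normalize one embedding per modality and take a weighted average, with cosine similarity as a basic cross-modal relation score. This is a swapped-in technique for illustration, not the fusion layer described above, and it assumes all encoders already project into one shared vector space of equal dimension. The function names are hypothetical.

```python
import math


def l2_normalize(vector):
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else list(vector)


def cosine_similarity(a, b):
    a_n, b_n = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a_n, b_n))


def late_fusion(embeddings, weights=None):
    """Weighted average of L2-normalized embeddings, one per modality.

    embeddings: dict modality -> vector; all vectors share one dimension
    weights: optional dict modality -> weight; defaults to equal weights
    """
    if not embeddings:
        raise ValueError("at least one modality is required")
    weights = weights or {name: 1.0 for name in embeddings}
    total = sum(weights[name] for name in embeddings)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    dim = len(next(iter(embeddings.values())))
    fused = [0.0] * dim
    for name, vector in embeddings.items():
        if len(vector) != dim:
            raise ValueError(f"dimension mismatch for modality {name!r}")
        for i, x in enumerate(l2_normalize(vector)):
            fused[i] += weights[name] / total * x
    return fused
```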

3. Privacy-Preserving Context Engineering

As privacy requirements tighten, context engineering must deliver personalization while protecting user privacy.

Technical implementation:

import hashlib


class PrivacyPreservingContextManager:
    def __init__(self):
        self.differential_privacy = DifferentialPrivacyEngine()
        self.federated_learning = FederatedContextLearning()

    async def process_sensitive_context(self, user_context, privacy_level):
        """Process sensitive context information."""
        if privacy_level == 'high':
            # Process locally; the raw data is never uploaded
            processed_context = await self._local_context_processing(user_context)

            # Add differential-privacy noise
            noisy_context = await self.differential_privacy.add_noise(
                processed_context, epsilon=0.1
            )
            return noisy_context

        elif privacy_level == 'medium':
            # Federated learning path. A SHA-256 digest is used because the
            # built-in hash() is salted per process for strings, so its
            # output is not stable across runs.
            user_id_hash = hashlib.sha256(
                str(user_context.user_id).encode('utf-8')
            ).hexdigest()
            federated_context = await self.federated_learning.collaborative_learning(
                user_context, user_id_hash=user_id_hash
            )
            return federated_context

        else:
            # Standard processing flow
            return await self._standard_context_processing(user_context)
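
The `add_noise` step is not spelled out above. A common choice for numeric statistics is the Laplace mechanism, which adds noise with scale `sensitivity / epsilon`. The sketch below applies it to a dict of counts. It is illustrative only: `laplace_noise` and `privatize_counts` are hypothetical names, `sensitivity` is assumed to be the L1 sensitivity of the whole set of counts, and a production system should rely on a vetted differential privacy library rather than hand-rolled sampling.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def privatize_counts(counts, epsilon, sensitivity=1.0, rng=None):
    """Add Laplace noise with scale sensitivity / epsilon to each count."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    scale = sensitivity / epsilon
    return {key: value + laplace_noise(scale, rng) for key, value in counts.items()}
```

With `epsilon=0.1` as in the code above, the noise scale is 10 for a sensitivity of 1, so small counts are heavily perturbed. That is the intended privacy and utility trade-off at this setting: a smaller epsilon means stronger privacy and noisier context.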

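As AI applications become more widespread, "model capability + context engineering" will become the twin engines of an AI system's competitiveness. While pursuing more powerful models, we should pay even more attention to making AI work in the right context. Only then can we unlock the potential of AI and build intelligent applications that deliver real value.

The next article will introduce an open-source context management project and walk through how to implement context management in real project development.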

七、AI大模型从0到精通全套学习大礼包

我在一线互联网企业工作十余年里,指导过不少同行后辈。帮助很多人得到了学习和成长。

只要你是真心想学AI大模型,我这份资料就可以无偿共享给你学习。大模型行业确实也需要更多的有志之士加入进来,我也真心希望帮助大家学好这门技术,如果日后有什么学习上的问题,欢迎找我交流,有技术上面的问题,我是很愿意去帮助大家的!

如果你也想通过学大模型技术去帮助就业和转行,可以扫描下方链接👇👇

大模型重磅福利:入门进阶全套104G学习资源包免费分享!

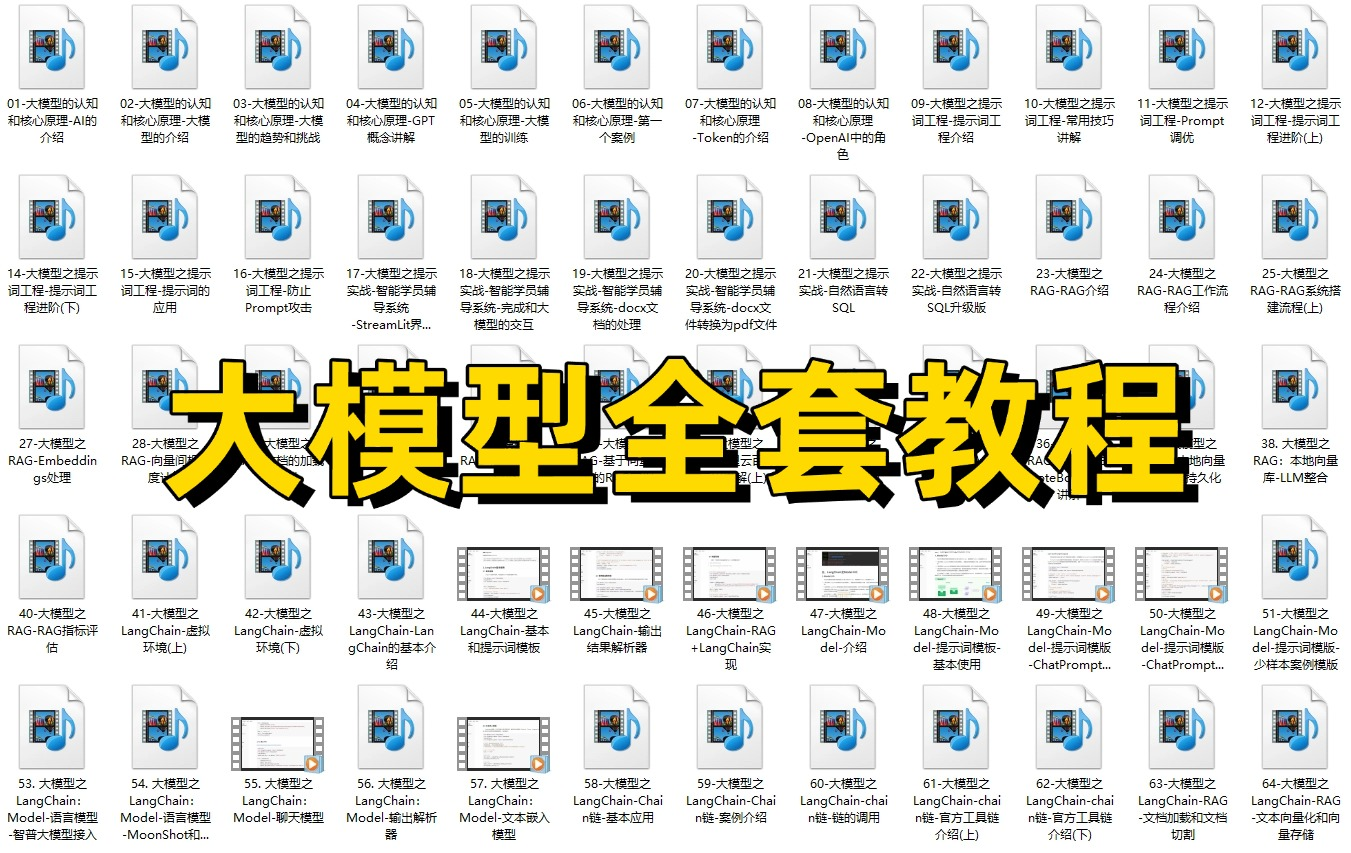

01.从入门到精通的全套视频教程

包含提示词工程、RAG、Agent等技术点

02.AI大模型学习路线图(还有视频解说)

全过程AI大模型学习路线

03.学习电子书籍和技术文档

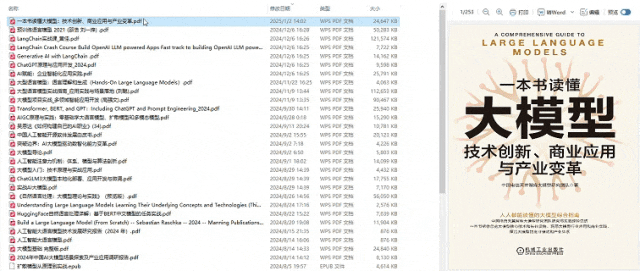

市面上的大模型书籍确实太多了,这些是我精选出来的

04.大模型面试题目详解

05.这些资料真的有用吗?

这份资料由我和鲁为民博士共同整理,鲁为民博士先后获得了北京清华大学学士和美国加州理工学院博士学位,在包括IEEE Transactions等学术期刊和诸多国际会议上发表了超过50篇学术论文、取得了多项美国和中国发明专利,同时还斩获了吴文俊人工智能科学技术奖。目前我正在和鲁博士共同进行人工智能的研究。

所有的视频由智泊AI老师录制,且资料与智泊AI共享,相互补充。这份学习大礼包应该算是现在最全面的大模型学习资料了。

资料内容涵盖了从入门到进阶的各类视频教程和实战项目,无论你是小白还是有些技术基础的,这份资料都绝对能帮助你提升薪资待遇,转行大模型岗位。

智泊AI始终秉持着“让每个人平等享受到优质教育资源”的育人理念,通过动态追踪大模型开发、数据标注伦理等前沿技术趋势,构建起"前沿课程+智能实训+精准就业"的高效培养体系。

课堂上不光教理论,还带着学员做了十多个真实项目。学员要亲自上手搞数据清洗、模型调优这些硬核操作,把课本知识变成真本事!

如果说你是以下人群中的其中一类,都可以来智泊AI学习人工智能,找到高薪工作,一次小小的“投资”换来的是终身受益!

应届毕业生:无工作经验但想要系统学习AI大模型技术,期待通过实战项目掌握核心技术。

零基础转型:非技术背景但关注AI应用场景,计划通过低代码工具实现“AI+行业”跨界。

业务赋能 突破瓶颈:传统开发者(Java/前端等)学习Transformer架构与LangChain框架,向AI全栈工程师转型。

👉获取方式:

😝有需要的小伙伴,可以保存图片到wx扫描二v码免费领取【保证100%免费】🆓
