从零开始掌握17种大模型代理(Agentic AI)架构,小白到专家的进阶指南!

本文系统介绍了17种构建大规模AI系统的代理架构模式,包括Reflection、ReAct、Planning、Multi-Agent等,每种架构均提供理论解析、代码实现和性能评估。文章不仅展示了如何单独应用这些架构,还详细讲解了如何将它们组合成复杂的多层工作流程,实现从基础代理到高级AI系统的构建。这些架构模式为开发者在构建AI系统时提供了多样化的解决方案,帮助解决从简单推理到复杂决策的各类问题。

简介

本文系统介绍了17种构建大规模AI系统的代理架构模式,包括Reflection、ReAct、Planning、Multi-Agent等,每种架构均提供理论解析、代码实现和性能评估。文章不仅展示了如何单独应用这些架构,还详细讲解了如何将它们组合成复杂的多层工作流程,实现从基础代理到高级AI系统的构建。这些架构模式为开发者在构建AI系统时提供了多样化的解决方案,帮助解决从简单推理到复杂决策的各类问题。

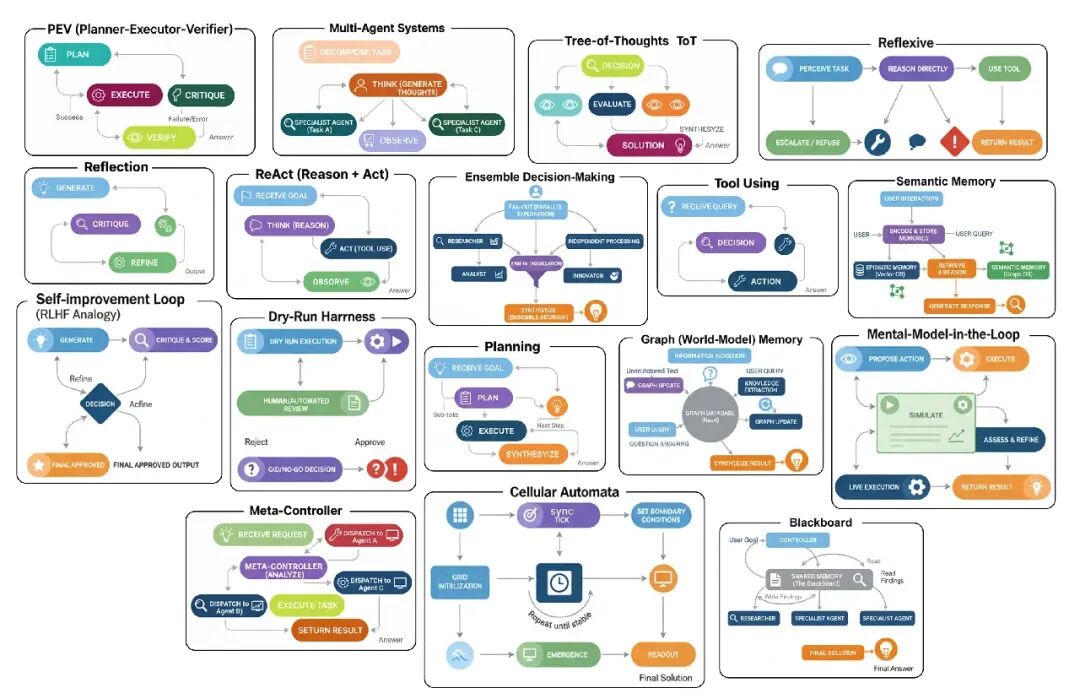

当你构建一个大规模AI系统时,你其实是在把不同的代理设计模式组合起来。每个模式都有自己的阶段、构建方法、输出和评估。如果我们退一步,把这些模式归类,它们可以分成17种高层架构,这些架构捕捉了代理系统可能采用的主要形式……

- Multi-Agent System,在这个系统中,几个工具和代理一起合作来解决问题。

- Ensemble Decision System,在这个系统中,多个代理各自提出一个答案,然后投票选出最好的那个。

- Tree-of-Thoughts,在这个系统中,代理会探索很多不同的推理路径,然后选择最有前景的方向。

- Reflexive approach,在这个方法中,代理能够认识到并承认自己不知道的东西。

- ReAct loop,在这个循环中,代理会在思考、采取行动,然后再思考来完善它的过程之间交替。 还有很多其他……

在这篇博客里,我们要拆解这些不同的代理架构,并展示每个架构在完整AI系统中扮演的独特角色。

我们会视觉化地理解每个架构的重要性,编码它的工作流程,并评估它,看看它是否真的比基准性能有所提升。

所有代码都在我的GitHub仓库里可用:

GitHub - https://github.com/FareedKhan-dev/all-agentic-architectures

目录

- 设置环境

- Reflection

- Tool Using

- ReAct (Reason + Act)

- Planning

- PEV (Planner-Executor-Verifier)

- Tree-of-Thoughts (ToT)

- Multi-Agent Systems

- Meta-Controller

- Blackboard

- Ensemble Decision-Making

- Episodic + Semantic Memory

- Graph (World-Model) Memory

- Self-Improvement Loop (RLHF Analogy)

- Dry-Run Harness

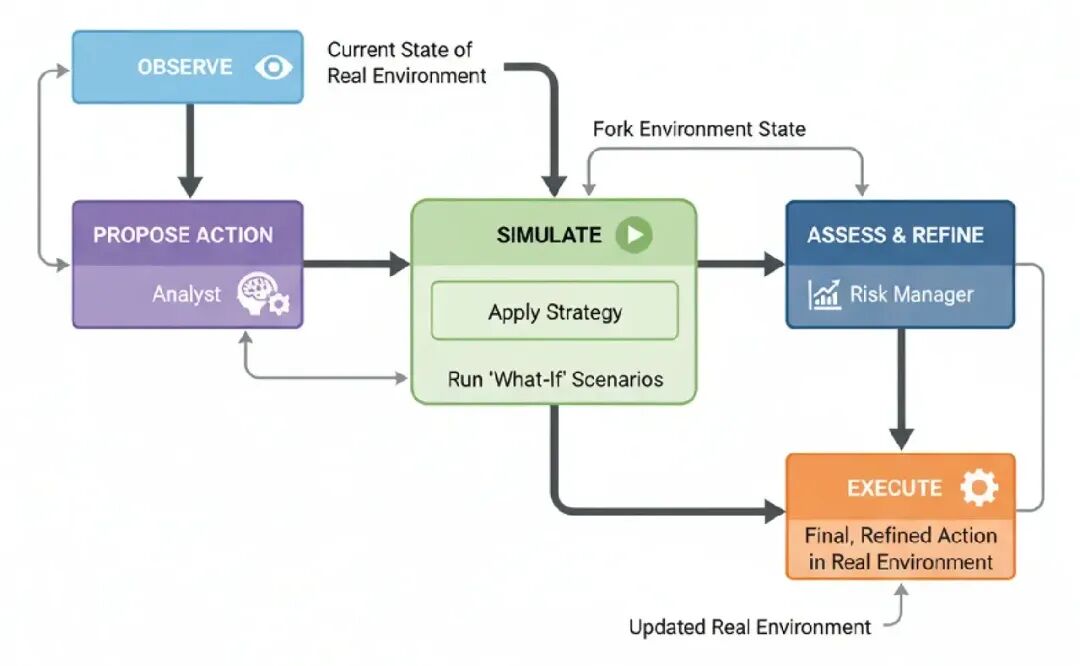

- Simulator (Mental-Model-in-the-Loop)

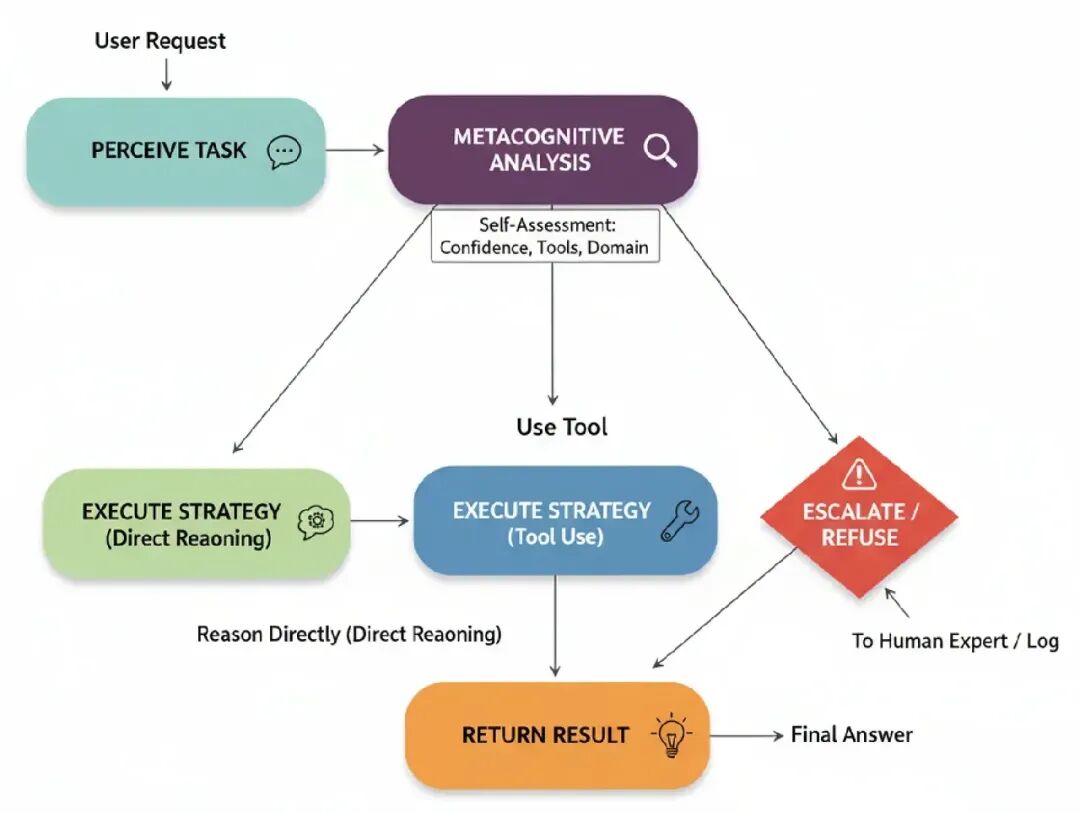

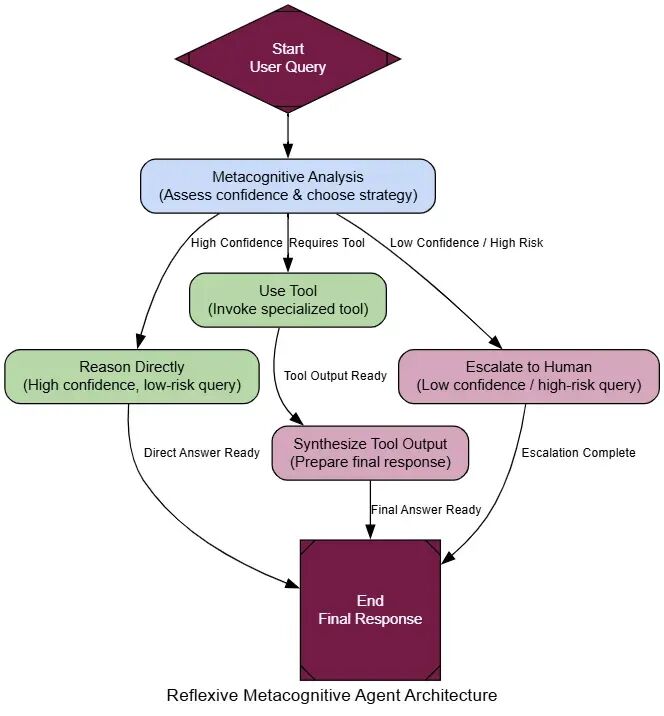

- Reflexive Metacognitive

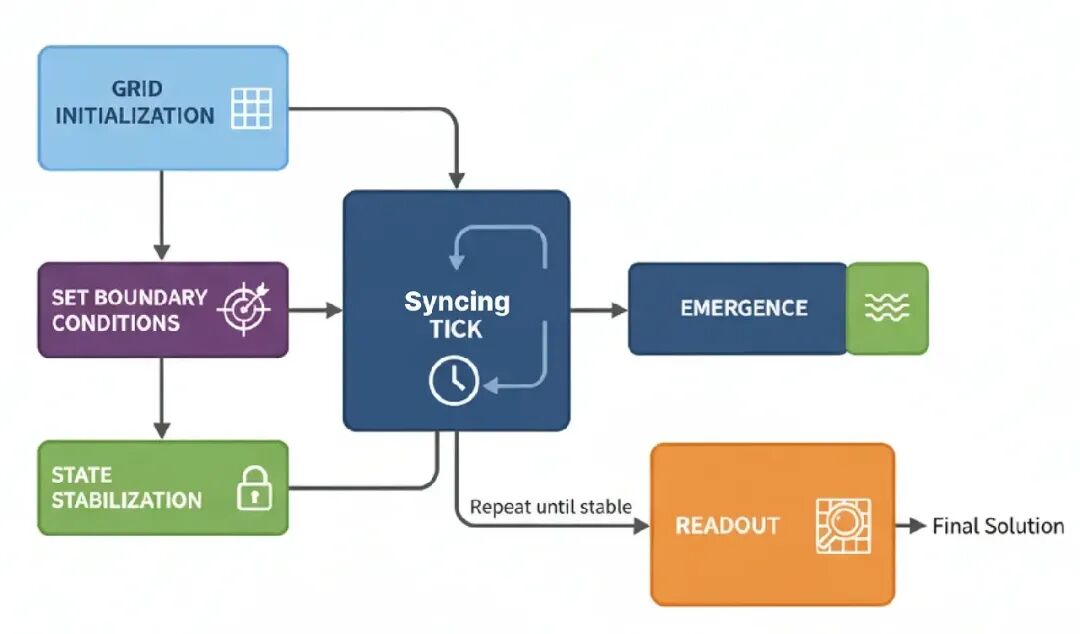

- Cellular Automata

- 将架构组合在一起

这份完整版的大模型 AI 学习和面试资料已经上传CSDN,朋友们如果需要可以微信扫描下方CSDN官方认证二维码免费领取【保证100%免费】

设置环境

在我们开始构建每个架构之前,我们需要设置基础,并清楚我们用的是什么,为什么用这些模块、模型,以及它们如何组合在一起。

我们知道LangChain、LangGraph和LangSmith基本上是行业标准模块,用来构建任何严肃的RAG或代理系统。它们给我们提供了构建、编排所需的一切,最重要的是,当事情变得复杂时,能搞清楚代理内部发生了什么,所以我们就坚持用这三个。

第一步是导入我们的核心库。这样,我们以后就不用重复了,设置保持干净整洁。来做吧。

import os

from typing importList, Dict, Any, Optional, Annotated, TypedDict

from dotenv import load_dotenv # Load environment variables from .env file

# Pydantic for data modeling / validation

from pydantic import BaseModel, Field

# LangChain & LangGraph components

from langchain_nebius import ChatNebius # Nebius LLM wrapper

from langchain_tavily import TavilySearch # Tavily search tool integration

from langchain_core.prompts import ChatPromptTemplate # For structuring prompts

from langgraph.graph import StateGraph, END # Build a state machine graph

from langgraph.prebuilt import ToolNode, tools_condition # Prebuilt nodes & conditions

# For pretty printing output

from rich.console import Console # Console styling

from rich.markdown import Markdown # Render markdown in terminal

所以,来快速拆解一下为什么我们用这三个。

LangChain是我们的工具箱,给我们核心构建块,比如prompts、工具定义和LLM wrappers。 LangGraph是我们的编排引擎,把一切连成复杂的流程,有循环和分支。 LangSmith是我们的调试器,展示代理每一步的可视化追踪,这样我们能快速发现和修复问题。 我们会用像Nebius AI或Together AI这样的提供商来处理开源LLMs。好处是它们就像标准OpenAI模块一样工作,所以我们不用改太多就能上手。如果我们想本地运行,我们可以换成像Ollama这样的东西。

为了确保我们的代理不局限于静态数据,我们给它们访问Tavily API来做实时网页搜索(每月1000积分够测试了)。这样,它们能主动出去找信息,保持焦点在真正的推理和工具使用上。

接下来,我们需要设置环境变量。这里是我们放敏感信息的地方,比如API键。要做这个,在同一个目录创建一个叫.env的文件,把你的键放进去,比如:

# API key for Nebius LLM (used with ChatNebius)

NEBIUS_API_KEY="your_nebius_api_key_here"

# API key for LangSmith (LangChain’s observability/telemetry platform)

LANGCHAIN_API_KEY="your_langsmith_api_key_here"

# API key for Tavily search tool (used with TavilySearch integration)

TAVILY_API_KEY="your_tavily_api_key_here"

一旦.env文件设置好,我们可以用之前导入的dotenv模块轻松把那些键拉进我们的代码。

load_dotenv() # Load environment variables from .env file

# Enable LangSmith tracing for monitoring / debugging

os.environ["LANGCHAIN_TRACING_V2"] = "true"

os.environ["LANGCHAIN_PROJECT"] = "Implementing 17 Agentic Architectures"# Project name for grouping traces

# Verify that all required API keys are available

for key in ["NEBIUS_API_KEY", "LANGCHAIN_API_KEY", "TAVILY_API_KEY"]:

ifnot os.environ.get(key): # If key not found in env vars

print(f"{key} not found. Please create a .env file and set it.")

现在,我们的基本设置准备好了,我们可以开始一个一个构建每个架构,看看它们的表现,以及在大规模AI系统中它们最适合哪里。

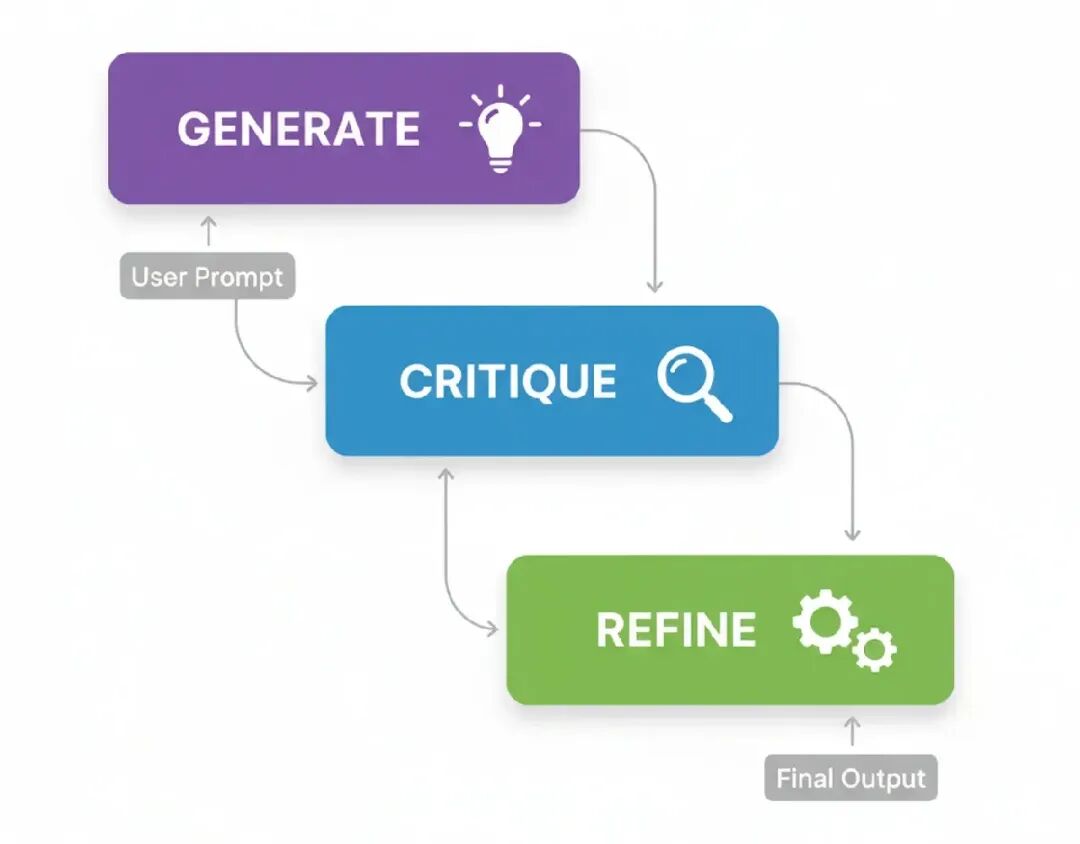

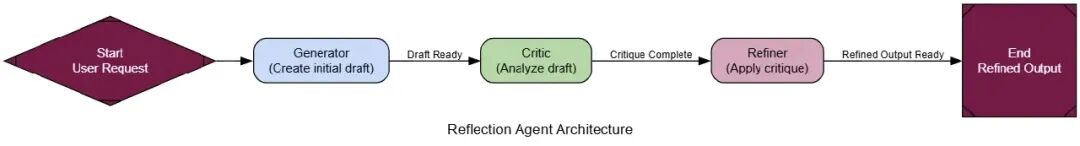

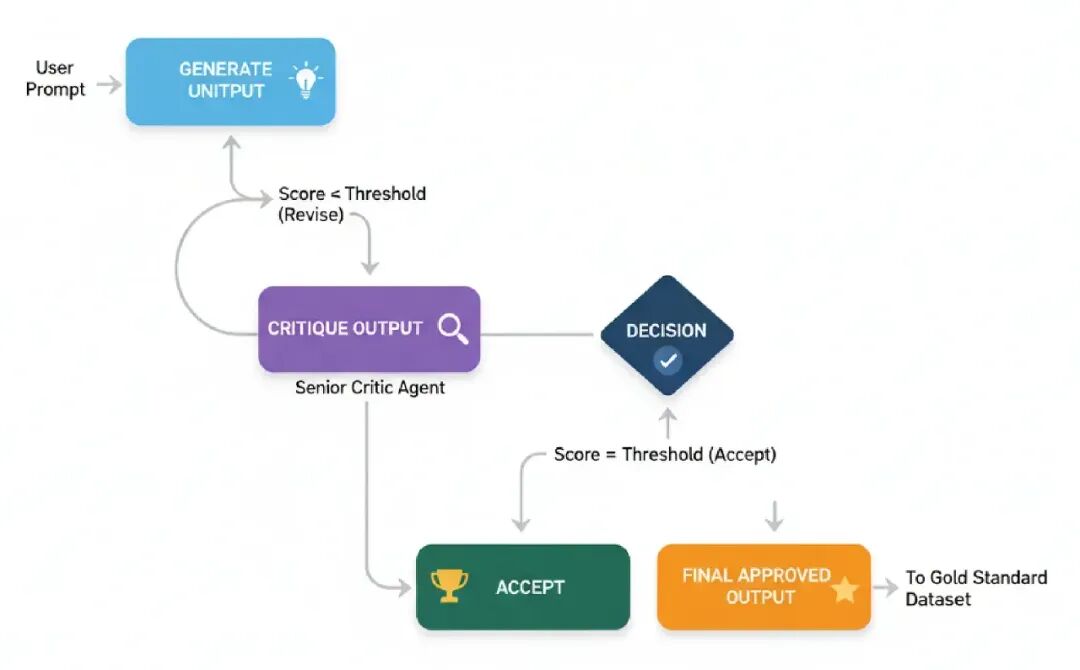

Reflection

所以,我们要看的第一个架构是Reflection。这个可能是你在代理工作流程中最常见和基础的模式了。

它就是让代理能够退一步,看看自己的工作,然后把它做得更好。

在大规模AI系统中,这个模式完美适合任何阶段,那里生成输出的质量很关键。想想像生成复杂代码、写详细技术报告这样的任务,任何地方一个简单的初稿答案都不够好,可能导致现实问题。

来理解一下过程怎么流动的。

- Generate: 代理拿用户prompt,产生一个初始草案或解决方案。这是它第一次、未过滤的尝试。

- Critique: 然后代理切换角色,成为自己的批评者。它分析草案找缺陷,问像“是这个正确吗?”、“这个高效吗?”或“我漏了什么?”这样的问题。

- Refine: 最后,用自己的批评,代理生成一个最终的、改进版本的输出,直接解决它发现的缺陷。

在我们创建代理逻辑之前,我们需要定义它要用的数据结构。用Pydantic模型是强迫LLM给我们干净、结构化JSON输出的好办法,这对多步过程很重要,一个步骤的输出成为下一个的输入。

classDraftCode(BaseModel):

"""Schema for the initial code draft generated by the agent."""

code: str = Field(description="The Python code generated to solve the user's request.") # raw draft

explanation: str = Field(description="A brief explanation of how the code works.") # reasoning

classCritique(BaseModel):

"""Schema for the self-critique of the generated code."""

has_errors: bool = Field(description="Does the code have any potential bugs or logical errors?") # error check

is_efficient: bool = Field(description="Is the code written in an efficient and optimal way?") # performance check

suggested_improvements: List[str] = Field(description="Specific, actionable suggestions for improving the code.") # concrete fixes

critique_summary: str = Field(description="A summary of the critique.") # overview of review

classRefinedCode(BaseModel):

"""Schema for the final, refined code after incorporating the critique."""

refined_code: str = Field(description="The final, improved Python code.") # polished version

refinement_summary: str = Field(description="A summary of the changes made based on the critique.") # explanation of changes

用这些schema,我们有了一个清晰的‘合同’给我们的LLM。Critique模型特别有用,因为它强迫一个结构化审查,问特定检查错误和效率,而不是模糊的“审查代码”命令。

首先是我们的generator_node。它唯一的工作是拿用户请求,产生那个初稿。

defgenerator_node(state):

"""Generates the initial draft of the code."""

console.print("--- 1. Generating Initial Draft ---")

# Initialize the LLM to return a structured DraftCode object

generator_llm = llm.with_structured_output(DraftCode)

prompt = f"""You are an expert Python programmer. Write a Python function to solve the following request.

Provide a simple, clear implementation and an explanation.

Request: {state['user_request']}

"""

draft = generator_llm.invoke(prompt)

return {"draft": draft.model_dump()}

这个节点会给我们初稿和它的解释,然后我们传给批评者审查。

现在是Reflection过程的核心,critic_node。这里代理进入资深开发者角色,给自己的工作一个严格的代码审查。

defcritic_node(state):

"""Critiques the generated code for errors and inefficiencies."""

console.print("--- 2. Critiquing Draft ---")

# Initialize the LLM to return a structured Critique object

critic_llm = llm.with_structured_output(Critique)

code_to_critique = state['draft']['code']

prompt = f"""You are an expert code reviewer and senior Python developer. Your task is to perform a thorough critique of the following code.

Analyze the code for:

1. **Bugs and Errors:** Are there any potential runtime errors, logical flaws, or edge cases that are not handled?

2. **Efficiency and Best Practices:** Is this the most efficient way to solve the problem? Does it follow standard Python conventions (PEP 8)?

Provide a structured critique with specific, actionable suggestions.

Code to Review:

```python

{code_to_critique}

```{data-source-line="87"}

"""

critique = critic_llm.invoke(prompt)

return {"critique": critique.model_dump()}

这里的输出是一个结构化的Critique对象。这比模糊的“这个可以改进”消息好多了,因为它给我们的下一步提供了具体、可行动的反馈。

我们逻辑的最后一块是refiner_node。它拿原稿和编辑的反馈,创建最终的、改进版本。

defrefiner_node(state):

"""Refines the code based on the critique."""

console.print("--- 3. Refining Code ---")

# Initialize the LLM to return a structured RefinedCode object

refiner_llm = llm.with_structured_output(RefinedCode)

draft_code = state['draft']['code']

critique_suggestions = json.dumps(state['critique'], indent=2)

prompt = f"""You are an expert Python programmer tasked with refining a piece of code based on a critique.

Your goal is to rewrite the original code, implementing all the suggested improvements from the critique.

**Original Code:**

```python

{draft_code}

```{data-source-line="115"}

**Critique and Suggestions:**

{critique_suggestions}

Please provide the final, refined code and a summary of the changes you made.

"""

refined_code = refiner_llm.invoke(prompt)

return {"refined_code": refined_code.model_dump()}

好了,我们有三个逻辑部分了。现在,我们需要把它们连成一个工作流程。这就是LangGraph的用武之地。我们会定义在节点间传递的状态,然后构建图本身。

classReflectionState(TypedDict):

"""Represents the state of our reflection graph."""

user_request: str

draft: Optional[dict]

critique: Optional[dict]

refined_code: Optional[dict]

# Initialize a new state graph

graph_builder = StateGraph(ReflectionState)

# Add the nodes to the graph

graph_builder.add_node("generator", generator_node)

graph_builder.add_node("critic", critic_node)

graph_builder.add_node("refiner", refiner_node)

# Define the workflow edges as a simple linear sequence

graph_builder.set_entry_point("generator")

graph_builder.add_edge("generator", "critic")

graph_builder.add_edge("critic", "refiner")

graph_builder.add_edge("refiner", END)

# Compile the graph into a runnable application

reflection_app = graph_builder.compile()

流程是一个简单的直线:generator -> critic -> refiner。这是经典的Reflection模式,现在我们准备测试它了。

为了测试这个工作流程,我们给它一个经典的编码问题,那里一个天真的初次尝试往往低效,找第n个Fibonacci数。这是一个完美的测试案例,因为一个简单的递归解决方案容易写,但计算上很贵,留下了很多改进空间。

user_request = "Write a Python function to find the nth Fibonacci number."

initial_input = {"user_request": user_request}

console.print(f"[bold cyan]🚀 Kicking off Reflection workflow for request:[/bold cyan] '{user_request}'\n")

# Stream the results and capture the final state

final_state = None

for state_update in reflection_app.stream(initial_input, stream_mode="values"):

final_state = state_update

console.print("\n[bold green]✅ Reflection workflow complete![/bold green]")

来看看前后对比,看看我们的代理做了什么。

--- ### Initial Draft ---

Explanation: This function uses a recursive approach to calculate the nth Fibonacci number... This approach is not efficient for large values of n due to the repeated calculations...

1 def fibonacci(n):

2 if n <= 0:

3 return 0

4 elif n == 1:

5 return 1

6 else:

7 return fibonacci(n-1) + fibonacci(n-2)

--- ### Critique ---

Summary: The function has potential bugs and inefficiencies. It should be revised to handle negative inputs and improve its time complexity.

Improvements Suggested:

- The function does not handle negative numbers correctly.

- The function has a high time complexity due to the repeated calculations. Consider using dynamic programming or memoization.

- The function does not follow PEP 8 conventions...

--- ### Final Refined Code ---

Refinement Summary: The original code has been revised to handle negative inputs, improve its time complexity, and follow PEP 8 conventions.

1 def fibonacci(n):

2 """Calculates the nth Fibonacci number."""

3 if n < 0:

4 raise ValueError("n must be a non-negative integer")

5 elif n == 0:

6 return 0

7 elif n == 1:

8 return 1

9 else:

10 fib = [0, 1]

11 for i in range(2, n + 1):

12 fib.append(fib[i-1] + fib[i-2])

13 return fib[n]

初稿是一个简单的递归函数,没错,但效率极差。批评者指出了指数复杂度和其他问题。精炼代码是一个聪明得多、更健壮的迭代解决方案。这就是模式按预期工作的完美例子。

为了让这个更具体,我们引入另一个LLM作为公正的‘法官’,给初稿和最终代码打分。这会给我们一个定量的改进度量。

classCodeEvaluation(BaseModel):

"""Schema for evaluating a piece of code."""

correctness_score: int = Field(description="Score from 1-10 on whether the code is logically correct.")

efficiency_score: int = Field(description="Score from 1-10 on the code's algorithmic efficiency.")

style_score: int = Field(description="Score from 1-10 on code style and readability (PEP 8). ")

justification: str = Field(description="A brief justification for the scores.")

defevaluate_code(code_to_evaluate: str):

prompt = f"""You are an expert judge of Python code. Evaluate the following function on a scale of 1-10 for correctness, efficiency, and style. Provide a brief justification.

Code:

```python

{code_to_evaluate}

```

"""

return judge_llm.invoke(prompt)

if final_state and'draft'in final_state and'refined_code'in final_state:

console.print("--- Evaluating Initial Draft ---")

initial_draft_evaluation = evaluate_code(final_state['draft']['code'])

console.print(initial_draft_evaluation.model_dump()) # Corrected: use .model_dump()

console.print("\n--- Evaluating Refined Code ---")

refined_code_evaluation = evaluate_code(final_state['refined_code']['refined_code'])

console.print(refined_code_evaluation.model_dump()) # Corrected: use .model_dump()

else:

console.print("[bold red]Error: Cannot perform evaluation because the `final_state` is incomplete.[/bold red]")

当我们运行法官对两个版本时,分数告诉了整个故事。

--- Evaluating Initial Draft ---

{

'correctness_score': 2,

'efficiency_score': 4,

'style_score': 2,

'justification': 'The function has a time complexity of O(2^n)...'

}

--- Evaluating Refined Code ---

{

'correctness_score': 8,

'efficiency_score': 6,

'style_score': 9,

'justification': 'The code is correct... it has a time complexity of O(n)...'

}

初稿的分数很差,特别是效率。精炼代码在各方面都有巨大提升。

这给了我们硬证据,reflection过程不只是改变了代码,它让它变得更好一些。

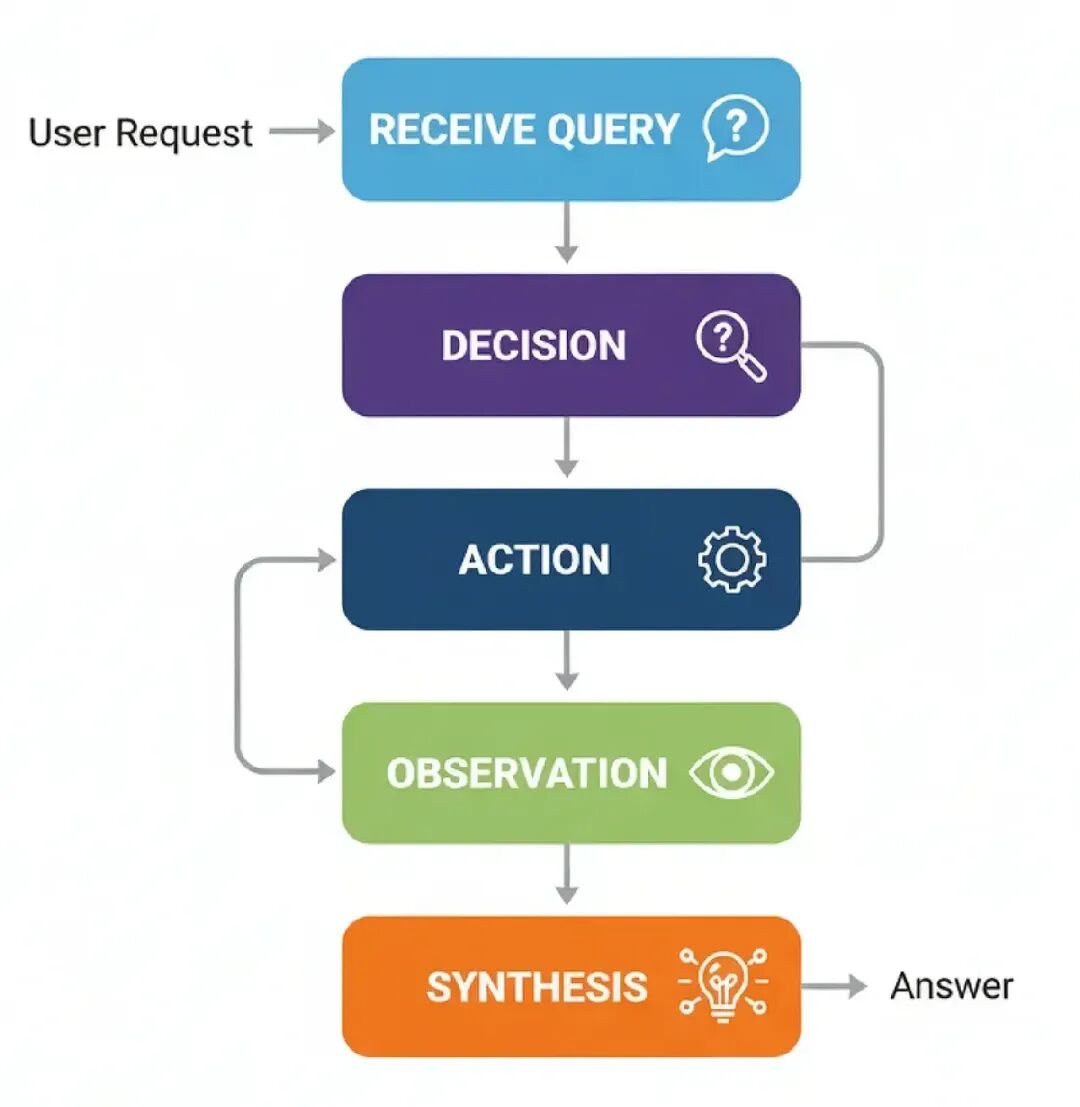

Tool Using

我们刚建的Reflection模式很棒,用于 sharpening 一个代理的内部推理。

但如果代理需要它不知道的信息,会发生什么?

没有外部工具访问,一个LLM局限于它的预训练参数,它可以生成和推理,但不能查询新数据或与外部世界互动。这就是我们的第二个架构,Tool Use,进来的地方。

在任何大规模AI系统中,tool use不是可选的,而是重要的和必需的组件。它是代理推理和真实世界数据之间的桥梁。不管是一个支持机器人检查订单状态,还是一个金融代理拉取实时股票价格。

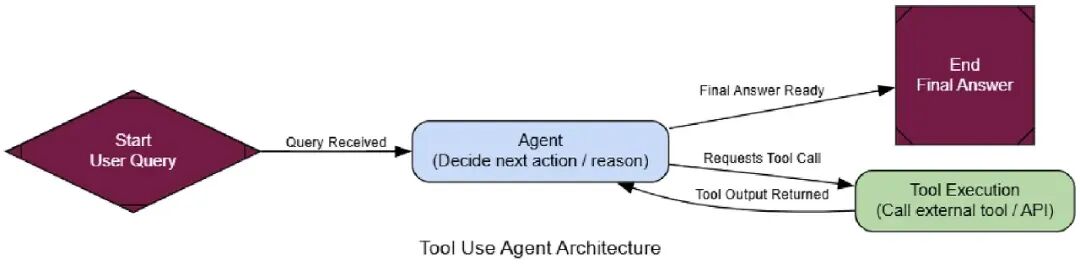

来理解一下过程怎么流动的。

- Receive Query: 代理从用户那里得到一个请求。

- Decision: 代理分析查询,看看它可用的工具。然后决定是否需要一个工具来准确回答问题。

- Action: 如果需要一个工具,代理格式化一个调用那个工具的请求,比如,一个特定函数带正确的参数。

- Observation: 系统执行工具调用,结果(“观察”)被发回给代理。 Synthesis: 代理拿工具的输出,结合它自己的推理,生成一个最终的、基于事实的答案给用户。

为了构建这个,我们需要给我们的代理一个工具。为此,我们会用TavilySearchResults工具,它给我们的代理访问网页搜索的能力。这里最重要的部分是描述。LLM读这个自然语言描述来搞清楚工具做什么,以及什么时候该用它,所以让它清晰和精确是关键。

# Initialize the tool. We can set the max number of results to keep the context concise.

search_tool = TavilySearchResults(max_results=2)

# It's crucial to give the tool a clear name and description for the agent

search_tool.name = "web_search"

search_tool.description = "A tool that can be used to search the internet for up-to-date information on any topic, including news, events, and current affairs."

tools = [search_tool]

现在我们有一个功能的工具,我们可以构建会学着用它的代理。一个tool-using代理的状态挺简单的,就是一个消息列表,跟踪整个对话历史。

classAgentState(TypedDict):

messages: Annotated[list[AnyMessage], add_messages]

接下来,我们必须让LLM“知道”我们给了它工具。这是关键一步。我们用.bind_tools()方法,它本质上把工具的名字和描述注入到LLM的系统prompt中,让它决定什么时候调用工具。

llm = ChatNebius(model="meta-llama/Meta-Llama-3.1-8B-Instruct", temperature=0)

# Bind the tools to the LLM, making it tool-aware

llm_with_tools = llm.bind_tools(tools)

现在我们可以定义我们的代理工作流程,用LangGraph。我们需要两个主要节点:agent_node(“大脑”)调用LLM决定做什么,和tool_node(“手”)实际执行工具。

defagent_node(state: AgentState):

"""The primary node that calls the LLM to decide the next action."""

console.print("--- AGENT: Thinking... ---")

response = llm_with_tools.invoke(state["messages"])

return {"messages": [response]}

# The ToolNode is a pre-built node from LangGraph that executes tools

tool_node = ToolNode(tools)

在agent_node运行后,我们需要一个路由器决定下一步去哪。如果代理的最后消息包含tool_calls属性,意味着它想用工具,所以我们路由到tool_node。如果没有,意味着代理有一个最终答案,我们可以结束工作流程。

defrouter_function(state: AgentState) -> str:

"""Inspects the agent's last message to decide the next step."""

last_message = state["messages"][-1]

if last_message.tool_calls:

# The agent has requested a tool call

console.print("--- ROUTER: Decision is to call a tool. ---")

return"call_tool"

else:

# The agent has provided a final answer

console.print("--- ROUTER: Decision is to finish. ---")

return"__end__"

好了,我们有所有部分了。来把它们连成一个图。这里关键是条件边,用我们的router_function创建代理的主要推理循环:agent -> router -> tool -> agent。

graph_builder = StateGraph(AgentState)

# Add the nodes

graph_builder.add_node("agent", agent_node)

graph_builder.add_node("call_tool", tool_node)

# Set the entry point

graph_builder.set_entry_point("agent")

# Add the conditional router

graph_builder.add_conditional_edges(

"agent",

router_function,

)

# Add the edge from the tool node back to the agent to complete the loop

graph_builder.add_edge("call_tool", "agent")

# Compile the graph

tool_agent_app = graph_builder.compile()

现在来测试它。我们给它一个它从训练数据不可能知道的问题,强迫它用网页搜索工具找实时答案。

user_query = "What were the main announcements from Apple's latest WWDC event?"

initial_input = {"messages": [("user", user_query)]}

console.print(f"[bold cyan]🚀 Kicking off Tool Use workflow for request:[/bold cyan] '{user_query}'\n")

for chunk in tool_agent_app.stream(initial_input, stream_mode="values"):

chunk["messages"][-1].pretty_print()

console.print("\n---\n")

console.print("\n[bold green]✅ Tool Use workflow complete![/bold green]")

来看输出,看看代理的思考过程。

================================= Human Message =================================

What were the main announcements from Apple's latest WWDC event?

---

--- AGENT: Thinking... ---

--- ROUTER: Decision is to call a tool. ---

================================== Ai Message ==================================

Tool Calls:

web_search (call_abc123)

Args:

query: Apple WWDC latest announcements

---

================================= Tool Message =================================

Name: web_search

[{"title": "WWDC 2025: Everything We Know...", "url": "...", "content": "Apple's event lasted for an hour... we recapped all of the announcements... iOS 26, iPadOS 26, macOS Tahoe..."}]

---

--- AGENT: Thinking... ---

--- ROUTER: Decision is to finish. ---

================================== Ai Message ==================================

The main announcements from Apple's latest WWDC event include a new design that will inform the next decade of iOS, iPadOS, and macOS development, new features for the iPhone... and updates across every platform, including iOS 26, iPadOS 26, CarPlay, macOS Tahoe...

追踪清楚展示了代理的逻辑:

首先,agent_node思考,决定需要搜索网页,输出一个tool_calls请求。 接下来,tool_node执行那个搜索,返回一个ToolMessage带原始网页结果。 最后,agent_node再运行,这次拿搜索结果作为上下文来合成一个最终的、有帮助的答案给用户。 为了形式化这个,我们再引入我们的LLM-as-a-Judge,但用特定于评估tool use的标准。

classToolUseEvaluation(BaseModel):

"""Schema for evaluating the agent's tool use and final answer."""

tool_selection_score: int = Field(description="Score 1-5 on whether the agent chose the correct tool for the task.")

tool_input_score: int = Field(description="Score 1-5 on how well-formed and relevant the input to the tool was.")

synthesis_quality_score: int = Field(description="Score 1-5 on how well the agent integrated the tool's output into its final answer.")

justification: str = Field(description="A brief justification for the scores.")

当我们运行法官对整个对话追踪时,我们得到一个结构化的评估。

--- Evaluating Tool Use Performance ---

{

'tool_selection_score': 5,

'tool_input_score': 5,

'synthesis_quality_score': 4,

'justification': "The AI agent correctly used the web search tool to find relevant information... The tool output was well-formed and relevant... the AI agent could have done a better job of synthesizing the information..."

}

高分给我们证据,我们的代理不只是调用工具,而是有效使用它。

它正确识别了什么时候搜索、搜什么,以及怎么用结果。这个架构几乎是任何实用AI助手的基礎构建块。

ReAct (Reason + Act)

我们的上一个代理是个大进步。它可以用工具取实时数据,这很棒。但问题是,它有点一锤子买卖,它决定需要一个工具,调用一次,然后试着回答。

但如果问题更复杂,需要多个、依赖的步骤来解决,会发生什么?

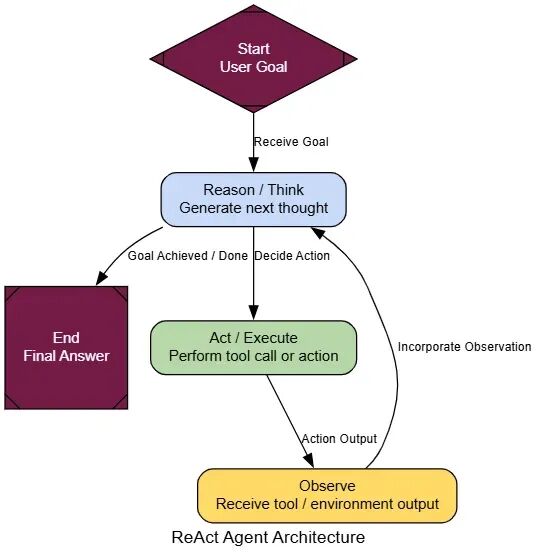

ReAct (Reason + Act)就是创建一个循环。它让代理动态推理下一步做什么,采取行动(像调用工具),观察结果,然后用那个新信息再推理。它是从静态工具调用者到适应性问题解决者的转变。

在任何AI系统中,ReAct是你处理任何需要multi-hop推理的任务的首选模式。

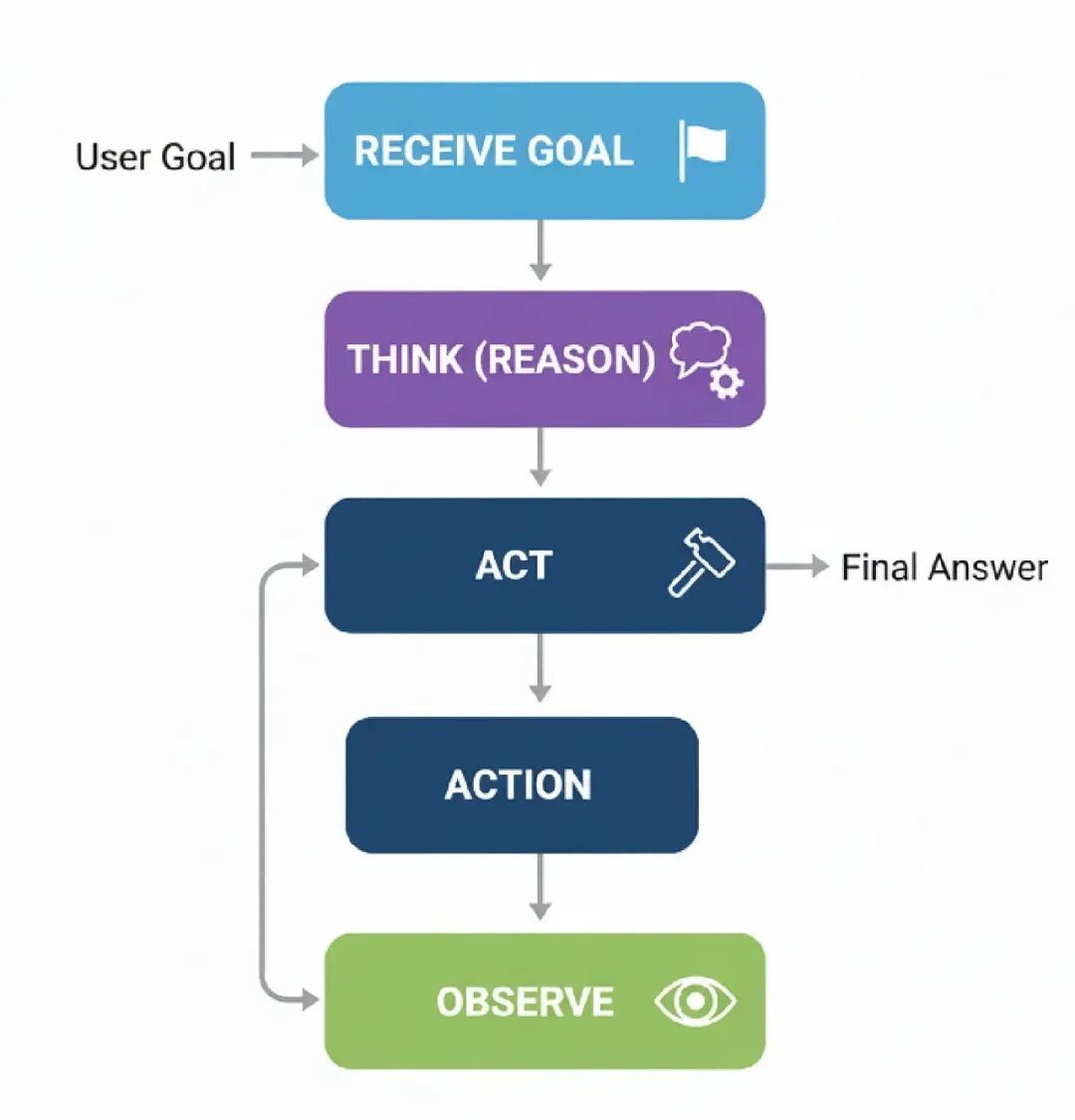

来理解一下过程怎么流动的。

- Receive Goal: 代理被给一个不能一步解决的复杂任务。

- T- hink (Reason): 代理生成一个想法,像:“要回答这个,我先需要找信息X”。

- Act: 基于那个想法,它执行一个行动,像调用搜索工具找‘X’。

- Observe: 代理从工具得到‘X’的结果回来。

- Repeat: 它拿那个新信息,回第2步,想:“好了,现在我有X,我需要用它找Y”。这个循环继续,直到最终目标达成。

好消息是,我们已经建了大部分部分。我们会重用AgentState、web_search和tool_node。我们图逻辑的唯一变化:在tool_node运行后,我们把输出发回给agent_node而不是结束。这创建了推理循环,让代理审查结果并选择下一步。

defreact_agent_node(state: AgentState):

"""The agent node that thinks and decides the next step."""

console.print("--- REACT AGENT: Thinking... ---")

response = llm_with_tools.invoke(state["messages"])

return {"messages": [response]}

# The ReAct graph with its characteristic loop

react_graph_builder = StateGraph(AgentState)

react_graph_builder.add_node("agent", react_agent_node)

react_graph_builder.add_node("tools", tool_node) # We reuse the tool_node from before

react_graph_builder.set_entry_point("agent")

react_graph_builder.add_conditional_edges(

"agent",

# We can reuse the same router function

router_function,

# The map now defines the routing logic

{"call_tool": "tools", "__end__": "__end__"}

)

# This is the key difference: the edge goes from the tool node BACK to the agent

react_graph_builder.add_edge("tools", "agent")

react_agent_app = react_graph_builder.compile()

就这了。唯一真正的变化是react_graph_builder.add_edge(“tools”, “agent”)。那一行创建了循环,把我们的简单tool-user变成一个动态ReAct代理。

为了看到为什么这个循环这么强大,来给它一个不可能一击解决的任务,一个经典的multi-hop问题。一个简单的tool-using代理会失败这个,因为它不能把步骤链起来。

multi_step_query = "Who is the current CEO of the company that created the sci-fi movie 'Dune', and what was the budget for that company's most recent film?"

console.print(f"[bold yellow]Testing ReAct agent on a multi-step query:[/bold yellow] '{multi_step_query}'\n")

final_react_output = None

# Stream the output to see the step-by-step reasoning

for chunk in react_agent_app.stream({"messages": [("user", multi_step_query)]}, stream_mode="values"):

final_react_output = chunk

console.print(f"--- [bold purple]Current State Update[/bold purple] ---")

chunk['messages'][-1].pretty_print()

console.print("\n")

console.print("\n--- [bold green]Final Output from ReAct Agent[/bold green] ---")

console.print(Markdown(final_react_output['messages'][-1].content))

--- Human Message ---

Who is CEO of company that made 'Dune'...

--- REACT Agent: Thinking... ---

--- ROUTER: Call a tool...

--- Ai Message ---

Tool Calls: web_search...

Args: query: current CEO of company that made Dune...

--- Tool Message ---

[{"title": "Dune: Part Three - Wikipedia", "content": "Legendary CEO Joshua Grode..."}]

--- REACT Agent: Thinking... ---

--- ROUTER: Finish...

--- Final Output ---

CEO of company that made 'Dune' is Joshua Grode...

Budget for most recent film not found...

当我们运行这个时,代理的执行追踪显示了一个远更智能的过程。它不只是做一个搜索。相反,它推理它的方式通过问题:

Thought 1: “First, I need to find out which company made the movie ‘Dune’.”

Action 1: It calls web_search(‘production company for Dune movie’).

Observation 1: It gets back “Legendary Entertainment”.

Thought 2: “Okay, now I need the CEO of Legendary Entertainment.”

Action 2: It calls web_search(‘CEO of Legendary Entertainment’) and so on, until it has all the pieces.

为了形式化改进,我们可以用我们的LLM-as-a-Judge,这次焦点在任务完成上。

classTaskEvaluation(BaseModel):

"""Schema for evaluating an agent's ability to complete a task."""

task_completion_score: int = Field(description="Score 1-10 on whether the agent successfully completed all parts of the user's request.")

reasoning_quality_score: int = Field(description="Score 1-10 on the logical flow and reasoning process demonstrated by the agent.")

justification: str = Field(description="A brief justification for the scores.")

defevaluate_agent_output(query: str, agent_output: dict):

"""Runs an LLM-as-a-Judge to evaluate the agent's final performance."""

trace = "\n".join([f"{m.type}: {m.content}"for m in agent_output['messages']])

prompt = f"""You are an expert judge of AI agents. Evaluate the following agent's performance on the given task on a scale of 1-10. A score of 10 means the task was completed perfectly. A score of 1 means complete failure.

**User's Task:**

{query}

**Full Agent Conversation Trace:**

```

{trace}

```{data-source-line="108"}

"""

judge_llm = llm.with_structured_output(TaskEvaluation)

return judge_llm.invoke(prompt)

来看分数。一个基本代理试这个任务会得很低分,因为它会失败收集所有所需信息。但我们的ReAct代理表现好多了。

— Evaluating ReAct Agent’s Output — { ‘task_completion_score’: 8, ‘reasoning_quality_score’: 9, ‘justification’: “The agent correctly broke down the problem into multiple steps… It successfully identified the company, then the CEO. While it struggled to find the budget for the most recent film, its reasoning process was sound and it completed most of the task.” }

我们可以看到reasoning_quality_score确认了它的步步过程是逻辑的,并被我们的法官(LLM)验证。

ReAct模式给代理能力处理这些需要动态思考的复杂、multi-hop问题。

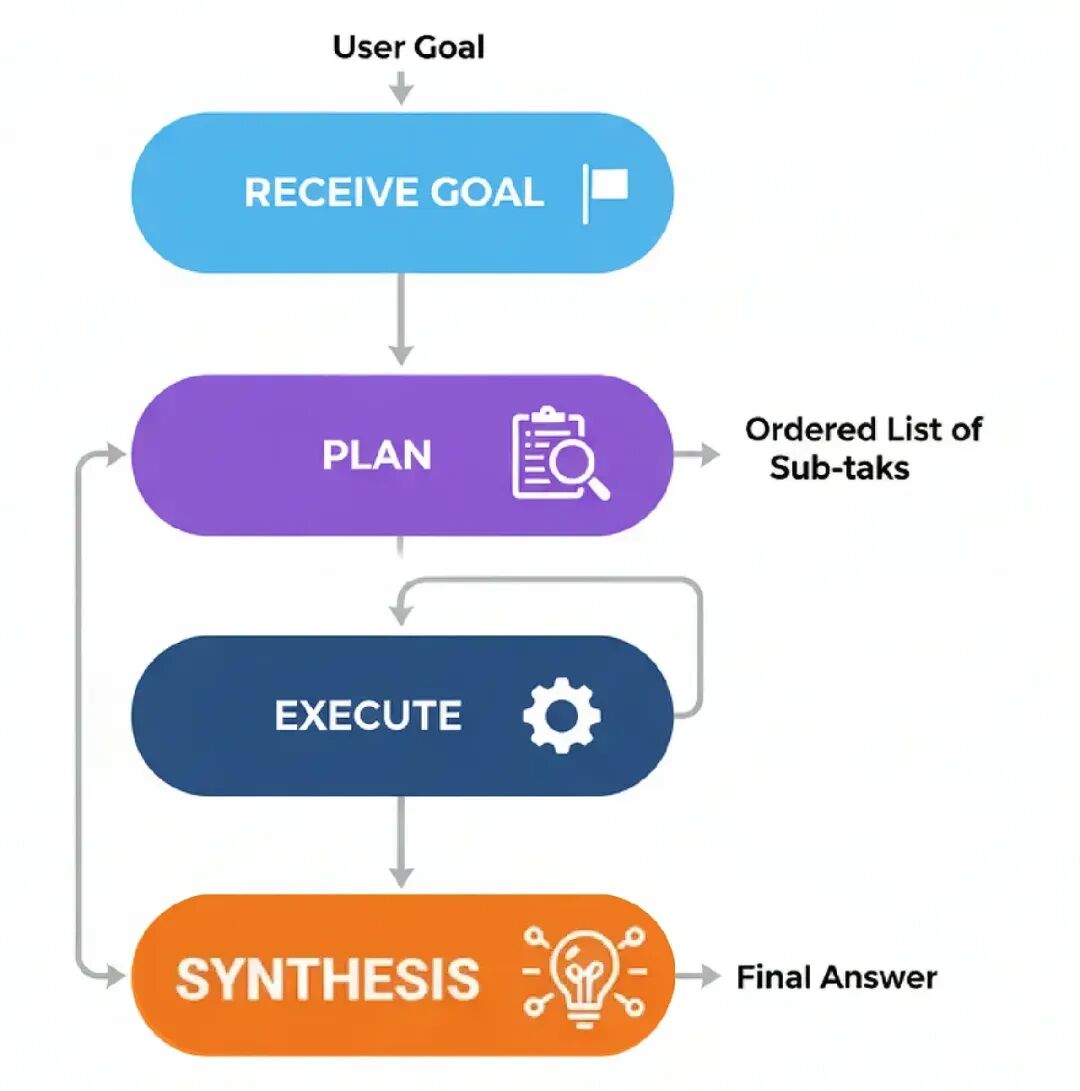

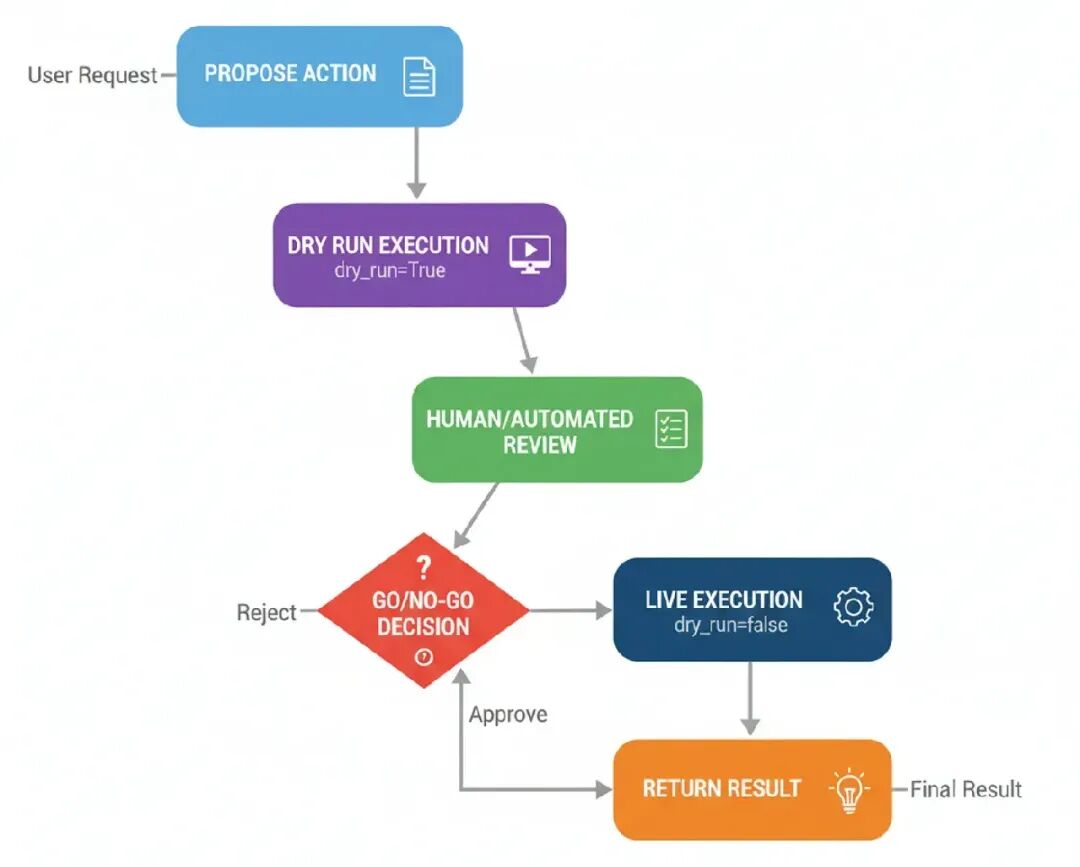

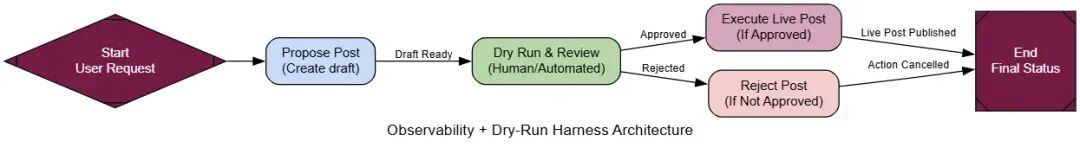

Planning

ReAct模式对探索问题和即时搞清楚东西很棒。但对步骤可预测的任务,它可能有点低效。它像一个人每次问一个转弯的方向,而不是先看全地图。这就是Planning架构进来的地方。

这个模式引入了一个关键的预见层。

而不是步步反应,一个planning代理先创建一个完整的‘作战计划’,然后才采取任何行动。

在AI系统中,Planning是你处理任何结构化、多步过程的工作马。想想数据处理管道、报告生成,或任何你提前知道操作序列的工作流程。它带来可预测性和效率,让代理的行为更容易追踪和调试。

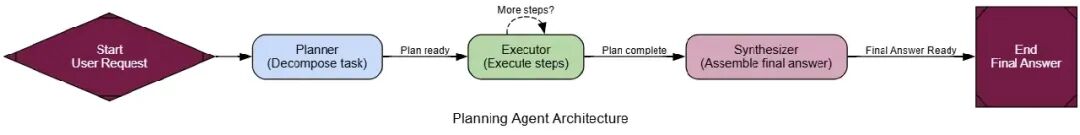

来理解一下过程怎么流动的。

- Receive Goal: 代理被给一个复杂任务。

- Plan: 一个专用的‘Planner’组件分析目标,生成一个有序的子任务列表来实现它。比如:[“Find fact A”, “Find fact B”, “Calculate C using A and B”]。

- Execute: 一个‘Executor’组件拿计划,按顺序执行每个子任务,需要时用工具。

- Synthesize: 一旦计划所有步骤完成,一个最终组件把执行步骤的结果组装成一个连贯的最终答案。

来开始构建它。

我们会创建三个核心组件:一个planner_node来创建策略,一个executor_node来执行它,和一个synthesizer_node来组装最终报告。

首先,我们需要一个专用的planner_node。这里关键是一个非常明确的prompt,告诉LLM它的工作是创建一个简单、可执行步骤的列表。

classPlan(BaseModel):

"""A plan of tool calls to execute to answer the user's query."""

steps: List[str] = Field(description="A list of tool calls that, when executed, will answer the query.")

classPlanningState(TypedDict):

user_request: str

plan: Optional[List[str]]

intermediate_steps: List[str] # Will store tool outputs

final_answer: Optional[str]

defplanner_node(state: PlanningState):

"""Generates a plan of action to answer the user's request."""

console.print("--- PLANNER: Decomposing task... ---")

planner_llm = llm.with_structured_output(Plan)

prompt = f"""You are an expert planner. Your job is to create a step-by-step plan to answer the user's request.

Each step in the plan must be a single call to the `web_search` tool.

**User's Request:**

{state['user_request']}

"""

plan_result = planner_llm.invoke(prompt)

console.print(f"--- PLANNER: Generated Plan: {plan_result.steps} ---")

return {"plan": plan_result.steps}

接下来,executor_node。这是一个简单的工人,只拿计划的下一步,运行工具,把结果加到我们的状态。

defexecutor_node(state: PlanningState):

"""Executes the next step in the plan."""

console.print("--- EXECUTOR: Running next step... ---")

next_step = state["plan"][0]

# In a real app, you'd parse the tool name and args. Here we assume 'web_search'.

query = next_step.replace("web_search('", "").replace("')", "")

result = search_tool.invoke({"query": query})

return {

"plan": state["plan"][1:], # Pop the executed step

"intermediate_steps": state["intermediate_steps"] + [result]

}

现在我们只需把它们连在图里。一个路由器会检查计划里是否还有步骤。如果有,它循环回executor。如果没有,它移到最终synthesizer_node(我们可以从之前模式重用)来生成答案。

defplanning_router(state: PlanningState):

"""Routes to the executor or synthesizer based on the plan."""

ifnot state["plan"]:

console.print("--- ROUTER: Plan complete. Moving to synthesizer. ---")

return"synthesize"

else:

console.print("--- ROUTER: Plan has more steps. Continuing execution. ---")

return"execute"

planning_graph_builder = StateGraph(PlanningState)

planning_graph_builder.add_node("plan", planner_node)

planning_graph_builder.add_node("execute", executor_node)

planning_graph_builder.add_node("synthesize", synthesizer_node)

planning_graph_builder.set_entry_point("plan")

planning_graph_builder.add_conditional_edges("plan", planning_router)

planning_graph_builder.add_conditional_edges("execute", planning_router)

planning_graph_builder.add_edge("synthesize", END)

planning_agent_app = planning_graph_builder.compile()

为了真正看到区别,来给我们的代理一个从预见受益的任务。一个ReAct代理能解决这个,但它的步步过程不太直接。

plan_centric_query = """

Find the population of the capital cities of France, Germany, and Italy.

Then calculate their combined total.

"""

console.print(f"[bold green]Testing PLANNING agent on a plan-centric query:[/bold green] '{plan_centric_query}'\n")

# Initialize the state correctly, especially the list for intermediate steps

initial_planning_input = {"user_request": plan_centric_query, "intermediate_steps": []}

final_planning_output = planning_agent_app.invoke(initial_planning_input)

console.print("\n--- [bold green]Final Output from Planning Agent[/bold green] ---")

console.print(Markdown(final_planning_output['final_answer']))

过程的区别立即明显。我们的代理做的第一件事就是铺开它的整个策略。

--- PLANNER: Decomposing task... ---

--- PLANNER: Generated Plan: ["web_search('population of Paris')", "web_search('population of Berlin')", "web_search('population of Rome')"] ---

--- ROUTER: Plan has more steps. Continuing execution. ---

--- EXECUTOR: Running next step... ---

...

代理在采取一个行动前创建了一个完整的、明确的计划。然后它有条不紊地执行这个计划。这个过程更透明和健壮,因为它在遵循一组清晰的指令。

为了形式化这个,我们会用我们的LLM-as-a-Judge,但这次我们评分过程的效率。

classProcessEvaluation(BaseModel):

"""Schema for evaluating an agent's problem-solving process."""

task_completion_score: int = Field(description="Score 1-10 on task completion.")

process_efficiency_score: int = Field(description="Score 1-10 on the efficiency and directness of the agent's process.")

justification: str = Field(description="A brief justification for the scores.")

当被评估时,Planning代理在它的直接性上闪耀。

--- Evaluating Planning Agent's Process ---

{

'task_completion_score': 8,

'process_efficiency_score': 9,

'justification': "The agent created a clear, optimal plan upfront and executed it without any unnecessary steps. Its process was highly direct and efficient for this predictable task."

}

我们得到好分数,意味着我们的方法确实创建了一个正确的planning系统,所以……

当解决方案路径可预测时,Planning提供了一个比纯反应式更结构化和高效的方法。

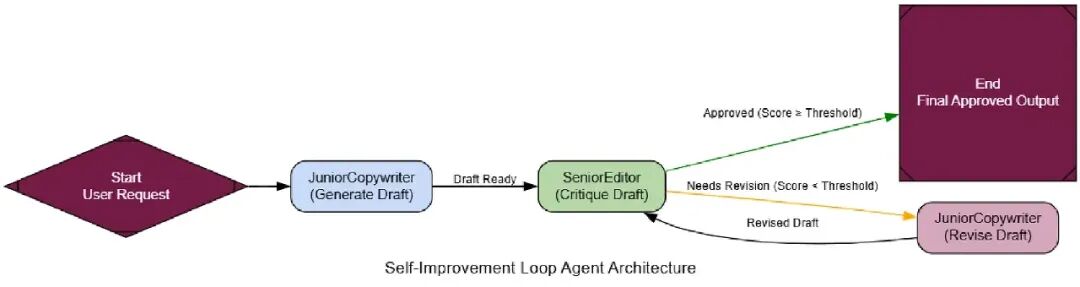

PEV (Planner-Executor-Verifier)

我们的Planning代理当路径清晰时工作得很好,它做一个计划并跟随它。但有个隐藏假设……

如果事情出错,会发生什么?如果一个工具失败,一个API挂了,或搜索返回垃圾,一个标准planner就只是把错误传下去,以失败或胡说结束。

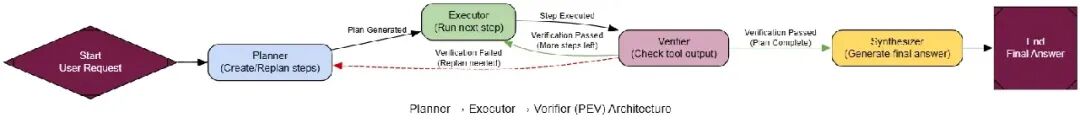

PEV (Planner-Executor-Verifier)架构是对Planning模式的一个简单但强大的升级,它加了一个关键的质量控制和自我修正层。

PEV对构建健壮和可靠的工作流程很重要。你在任何代理与可能不可靠的外部工具互动的地方用它。

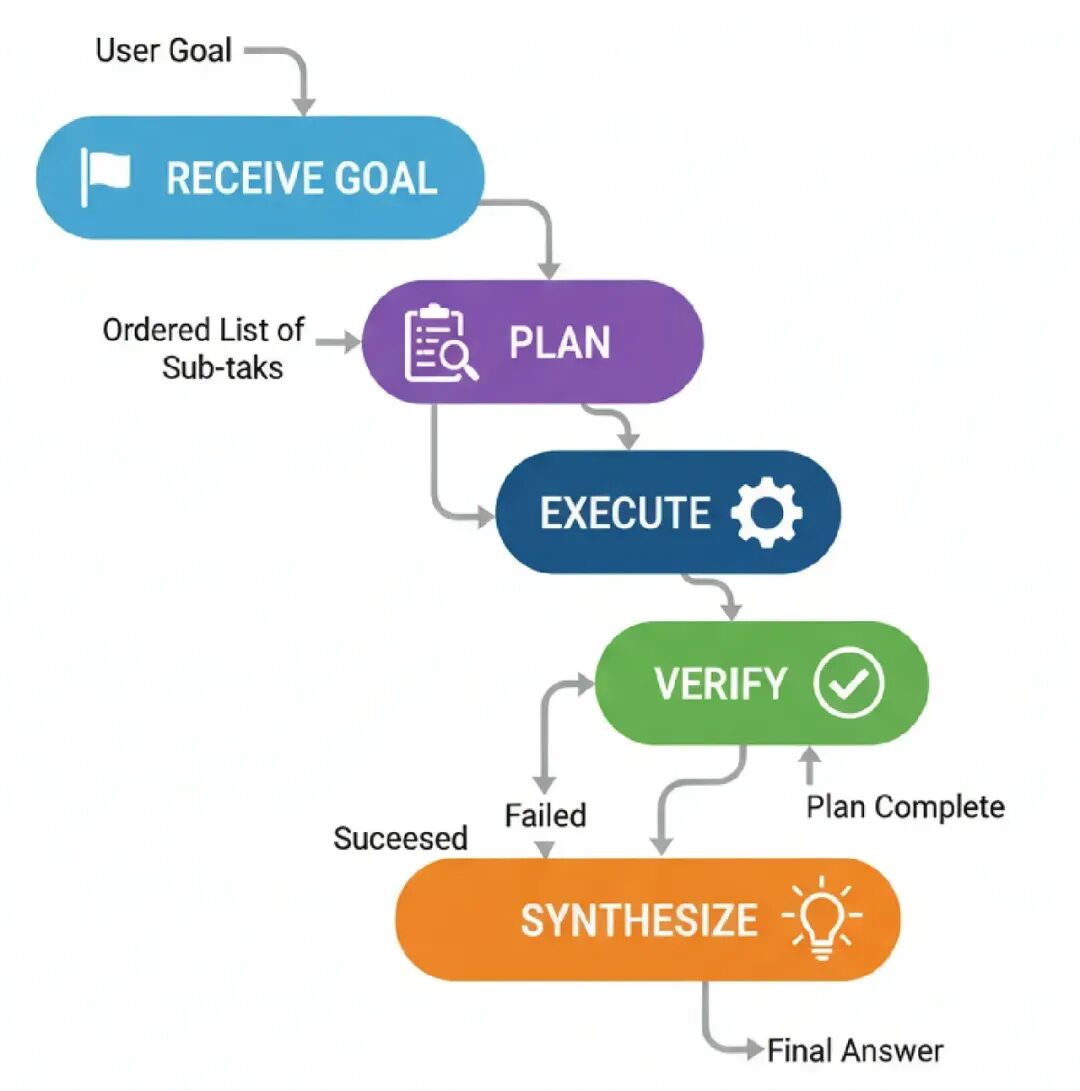

这是它怎么工作的……

- Plan: 一个‘Planner’代理创建一个步骤序列,就像之前一样。

- Execute: 一个‘Executor’代理拿计划的下一步,调用工具。

- Verify: 这是新步骤。一个‘Verifier’代理检查工具的输出。它检查正确性、相关性和错误。

- Route & Iterate: 基于Verifier的判断:

如果步骤成功,代理移到计划的下一步。

如果步骤失败,代理循环回Planner创建一个新计划,现在知道失败了。

如果计划完成,它继续到结束。

为了真正展示这个,我们需要一个实际上能失败的工具。所以,我们会创建一个特殊的flaky_web_search工具,我们设计它故意为特定查询返回错误消息。

defflaky_web_search(query: str) -> str:

"""A web search tool that intentionally fails for a specific query."""

console.print(f"--- TOOL: Searching for '{query}'... ---")

if"employee count"in query.lower():

console.print("--- TOOL: [bold red]Simulating API failure![/bold red] ---")

return"Error: Could not retrieve data. The API endpoint is currently unavailable."

else:

return search_tool.invoke({"query": query})

现在是PEV模式的核心:verifier_node。这个节点唯一的工作是看最后工具的输出,决定它是成功还是失败。

classVerificationResult(BaseModel):

"""Schema for the Verifier's output."""

is_successful: bool = Field(description="True if the tool execution was successful and the data is valid.")

reasoning: str = Field(description="Reasoning for the verification decision.")

defverifier_node(state: PEVState):

"""Checks the last tool result for errors."""

console.print("--- VERIFIER: Checking last tool result... ---")

verifier_llm = llm.with_structured_output(VerificationResult)

prompt = f"Verify if the following tool output is a successful, valid result or an error message. The task was '{state['user_request']}'.\n\nTool Output: '{state['last_tool_result']}'"

verification = verifier_llm.invoke(prompt)

console.print(f"--- VERIFIER: Judgment is '{'Success'if verification.is_successful else'Failure'}' ---")

if verification.is_successful:

return {"intermediate_steps": state["intermediate_steps"] + [state['last_tool_result']]}

else:

# If it failed, we add the failure reason and clear the plan to trigger re-planning

return {"plan": [], "intermediate_steps": state["intermediate_steps"] + [f"Verification Failed: {state['last_tool_result']}"]}

用我们的verifier准备好,我们可以连起整个图。这里关键是路由器逻辑。在verifier_node运行后,如果计划突然空了(因为verifier清了它),我们的路由器知道发代理回planner_node再试。

classPEVState(TypedDict):

user_request: str

plan: Optional[List[str]]

last_tool_result: Optional[str]

intermediate_steps: List[str]

final_answer: Optional[str]

retries: int

# ... (planner_node, executor_node, and synthesizer_node definitions are similar to before) ...

defpev_router(state: PEVState):

"""Routes execution based on verification and plan status."""

ifnot state["plan"]:

# Check if the plan is empty because verification failed

if state["intermediate_steps"] and"Verification Failed"in state["intermediate_steps"][-1]:

console.print("--- ROUTER: Verification failed. Re-planning... ---")

return"plan"

else:

console.print("--- ROUTER: Plan complete. Moving to synthesizer. ---")

return"synthesize"

else:

console.print("--- ROUTER: Plan has more steps. Continuing execution. ---")

return"execute"

pev_graph_builder = StateGraph(PEVState)

pev_graph_builder.add_node("plan", pev_planner_node)

pev_graph_builder.add_node("execute", pev_executor_node)

pev_graph_builder.add_node("verify", verifier_node)

pev_graph_builder.add_node("synthesize", synthesizer_node)

pev_graph_builder.set_entry_point("plan")

pev_graph_builder.add_edge("plan", "execute")

pev_graph_builder.add_edge("execute", "verify")

pev_graph_builder.add_conditional_edges("verify", pev_router)

pev_graph_builder.add_edge("synthesize", END)

pev_agent_app = pev_graph_builder.compile()

现在是关键测试。我们会给我们的PEV代理一个需要它调用我们的flaky_web_search工具的任务,用我们知道会失败的查询。一个简单的Planner-Executor代理会在这里崩溃。

flaky_query = "What was Apple's R&D spend in their last fiscal year, and what was their total employee count? Calculate the R&D spend per employee."

console.print(f"[bold green]Testing PEV agent on a flaky query:[/bold green]\n'{flaky_query}'\n")

initial_pev_input = {"user_request": flaky_query, "intermediate_steps": [], "retries": 0}

final_pev_output = pev_agent_app.invoke(initial_pev_input)

console.print("\n--- [bold green]Final Output from PEV Agent[/bold green] ---")

console.print(Markdown(final_pev_output['final_answer']))

Testing PEV agent on the same flaky query:

'What was Apple's R&D spend in their last fiscal year, and what was their total employee count? Calculate the R&D

spend per employee.'

--- (PEV) PLANNER: Creating/revising plan (retry 0)... ---

--- (PEV) EXECUTOR: Running next step... ---

--- TOOL: Searching for 'Apple R&D spend last fiscal year'... ---

--- VERIFIER: Checking last tool result... ---

--- VERIFIER: Judgment is 'Success' ---

...

Plan 1: The agent creates a plan: ["Apple R&D spend...", "Apple total employee count"].

Execute & Fail: It gets the R&D spend, but the employee count search hits our flaky tool and returns an error.

Verify & Catch: The verifier_node gets the error message, correctly judges it as a failure, and clears the plan.

Router & Re-plan: The router sees the empty plan and the failure message, and sends the agent back to the planner_node.

Plan 2: The planner, now aware that “Apple total employee count” failed, creates a new, smarter plan, perhaps trying web_search('Apple number of employees worldwide').

Execute & Succeed: This new plan works, and the agent gets all the data it needs.

最终输出是正确的计算。代理不只是放弃;它检测了问题,想出了新方法,并成功了。

为了形式化这个,我们的LLM-as-a-Judge需要评分鲁棒性。

classRobustnessEvaluation(BaseModel):

"""Schema for evaluating an agent's robustness and error handling."""

task_completion_score: int = Field(description="Score 1-10 on task completion.")

error_handling_score: int = Field(description="Score 1-10 on the agent's ability to detect and recover from errors.")

justification: str = Field(description="A brief justification for the scores.")

一个标准Planner-Executor代理会得可怕的error_handling_score。但我们的PEV代理表现出色。

--- Evaluating PEV Agent's Robustness ---

{

'task_completion_score': 8,

'error_handling_score': 10,

'justification': "The agent demonstrated perfect robustness. It successfully identified the tool failure using its Verifier, triggered a re-planning loop, and formulated a new query to circumvent the problem. This is an exemplary case of error recovery."

}

你可以看到PEV架构不只是当事情顺利时得到正确答案……

它是当事情出错时不得到错答案。

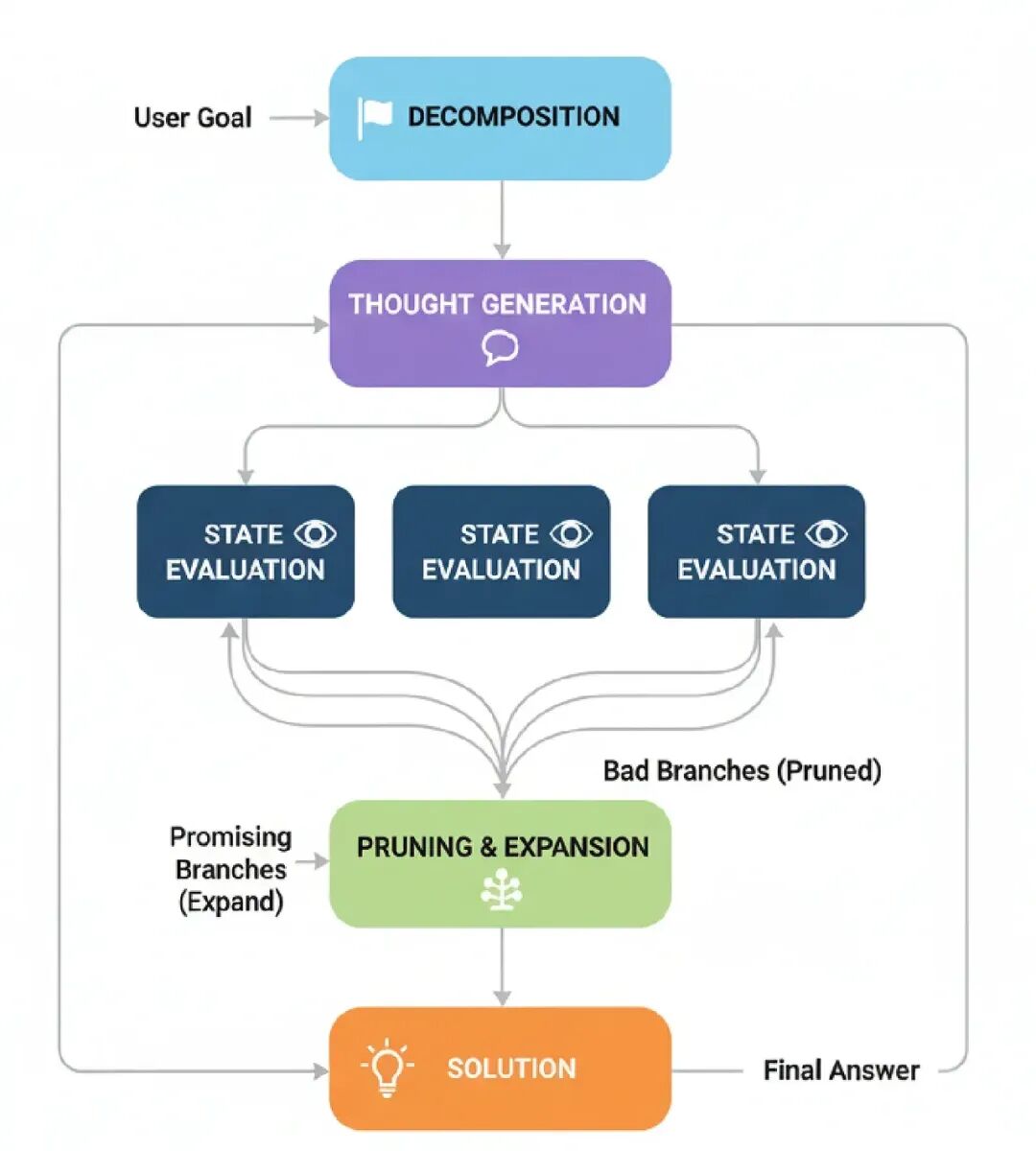

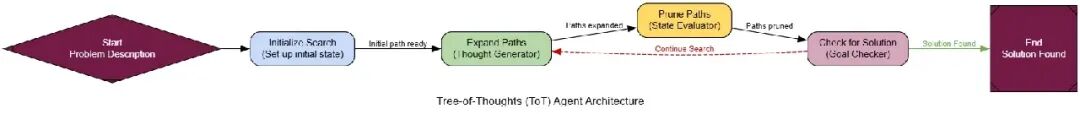

Tree-of-Thoughts (ToT)

PEV模式能处理工具失败,并用新计划再试。但规划本身还是线性的。它创建一个单一的、步步计划并跟随它。

如果问题不是直路,而是像迷宫有死胡同和多可能路径,会发生什么?

这就是Tree-of-Thoughts (ToT)架构进来的地方。不是生成单一推理线,一个ToT代理同时探索多个路径,往往用不同的“个性”,然后继续探索最有前景的分支。

在大AI系统中,ToT帮助解决硬问题像规划路线、调度,或棘手的谜题。

这是正常ToT过程怎么工作的……

- Decomposition: 问题被拆成一系列步骤或“想法”。

- Thought Generation: 对于当前状态,代理生成多个潜在下一步。这创建了我们的“想法树”的分支。

- State Evaluation: 每个新潜在步骤被一个批评者或验证函数评估。这个检查决定一个移动是否有效,是否在进步,还是只是原地打转。

- Pruning & Expansion: 代理然后“修剪”坏分支(无效或没前景的),并从剩下的好分支继续过程。

- Solution: 这继续直到一个分支到达最终目标。

为了展示ToT,我们需要一个不能直线解决的问题,狼、山羊和卷心菜过河谜题。它完美因为它需要非明显移动(像带东西回来),有能困住简单推理者的无效状态。

首先,我们会用Pydantic模型定义谜题规则,一个检查有效性的函数(所以没什么被吃)。

classPuzzleState(BaseModel):

"Represents the state of the Wolf, Goat, and Cabbage puzzle."

left_bank: set[str] = Field(default_factory=lambda: {"wolf", "goat", "cabbage"})

right_bank: set[str] = Field(default_factory=set)

boat_location: str = "left"

move_description: str = "Initial state."

defis_valid(self) -> bool:

"""Checks if the current state is valid."""

# Check left bank for invalid pairs if boat is on the right

ifself.boat_location == "right":

if"wolf"inself.left_bank and"goat"inself.left_bank: returnFalse

if"goat"inself.left_bank and"cabbage"inself.left_bank: returnFalse

# Check right bank for invalid pairs if boat is on the left

ifself.boat_location == "left":

if"wolf"inself.right_bank and"goat"inself.right_bank: returnFalse

if"goat"inself.right_bank and"cabbage"inself.right_bank: returnFalse

returnTrue

defis_goal(self) -> bool:

"""Checks if we've won."""

returnself.right_bank == {"wolf", "goat", "cabbage"}

# Make the state hashable so we can detect cycles

def__hash__(self):

returnhash((frozenset(self.left_bank), frozenset(self.right_bank), self.boat_location))

现在是ToT代理的核心。我们图的状态会持有我们想法树的所有活跃路径。expand_paths节点会生成新分支,prune_paths节点会通过移除任何撞死胡同或原地打转的路径来修剪树。

classToTState(TypedDict):

problem_description: str

active_paths: List[List[PuzzleState]] # This is our "tree"

solution: Optional[List[PuzzleState]]

defexpand_paths(state: ToTState) -> Dict[str, Any]:

"""The 'Thought Generator'. Expands each active path with all valid next moves."""

console.print("--- Expanding Paths ---")

new_paths = []

for path in state['active_paths']:

last_state = path[-1]

# Get all valid next states from the current state

possible_next_states = get_possible_moves(last_state) # Assuming get_possible_moves is defined

for next_state in possible_next_states:

new_paths.append(path + [next_state])

console.print(f"[cyan]Expanded to {len(new_paths)} potential paths.[/cyan]")

return {"active_paths": new_paths}

defprune_paths(state: ToTState) -> Dict[str, Any]:

"""The 'State Evaluator'. Prunes paths that are invalid or contain cycles."""

console.print("--- Pruning Paths ---")

pruned_paths = []

for path in state['active_paths']:

# Check for cycles: if the last state has appeared before in the path

if path[-1] in path[:-1]:

continue# Found a cycle, prune this path

pruned_paths.append(path)

console.print(f"[green]Pruned down to {len(pruned_paths)} valid, non-cyclical paths.[/green]")

return {"active_paths": pruned_paths}

# ... (graph wiring with a conditional edge that checks for a solution) ...

workflow = StateGraph(ToTState)

workflow.add_node("expand", expand_paths)

workflow.add_node("prune", prune_paths)

来运行我们的ToT代理在谜题上。一个简单的Chain-of-Thought代理如果见过它可能解决,但它只是回忆解决方案。我们的ToT代理会通过系统搜索发现解决方案。

problem = "A farmer wants to cross a river with a wolf, a goat, and a cabbage..."

console.print("--- 🌳 Running Tree-of-Thoughts Agent ---")

final_state = tot_agent.invoke({"problem_description": problem}, {"recursion_limit": 15})

console.print("\n--- ✅ ToT Agent Solution ---")

输出追踪显示代理在工作,有条不紊地探索谜题。

--- Expanding Paths ---

[cyan]Expanded to 1 potential paths.[/cyan]

--- Pruning Paths ---

[green]Pruned down to 1 valid, non-cyclical paths.[/green]

--- Expanding Paths ---

[cyan]Expanded to 2 potential paths.[/cyan]

--- Pruning Paths ---

[green]Pruned down to 2 valid, non-cyclical paths.[/green]

...

[bold green]Solution Found![/bold green]

--- ✅ ToT Agent Solution ---

1. Initial state.

2. Move goat to the right bank.

3. Move the boat empty to the left bank.

4. Move wolf to the right bank.

5. Move goat to the left bank.

6. Move cabbage to the right bank.

7. Move the boat empty to the left bank.

8. Move goat to the right bank.

代理找到了正确的8步解决方案!它不只是猜;它系统探索了可能性,扔掉坏的,找到了保证有效的路径。这就是ToT的力量。

为了形式化这个,我们会用一个LLM-as-a-Judge焦点在推理过程质量上。

classReasoningEvaluation(BaseModel):

"""Schema for evaluating an agent's reasoning process."""

solution_correctness_score: int = Field(description="Score 1-10 on whether the final solution is correct and valid.")

reasoning_robustness_score: int = Field(description="Score 1-10 on how robust the agent's process was. A high score means it systematically explored the problem, a low score means it just guessed.")

justification: str = Field(description="A brief justification for the scores.")

一个简单的Chain-of-Thought代理如果幸运可能得高正确分,但它的鲁棒分会低。但我们的ToT代理得顶分。

--- Evaluating ToT Agent's Process ---

{

'solution_correctness_score': 8,

'reasoning_robustness_score': 9,

'justification': "The agent's process was perfectly robust. It didn't just provide an answer; it systematically explored a tree of possibilities, pruned invalid paths, and guaranteed a correct solution. This is a far more reliable method than a single-pass guess."

}

我们可以看到ToT代理工作好不是偶然,而是因为它的搜索扎实。

这让它成为需要高可靠性的任务的更好选择。

这份完整版的大模型 AI 学习和面试资料已经上传CSDN,朋友们如果需要可以微信扫描下方CSDN官方认证二维码免费领取【保证100%免费】

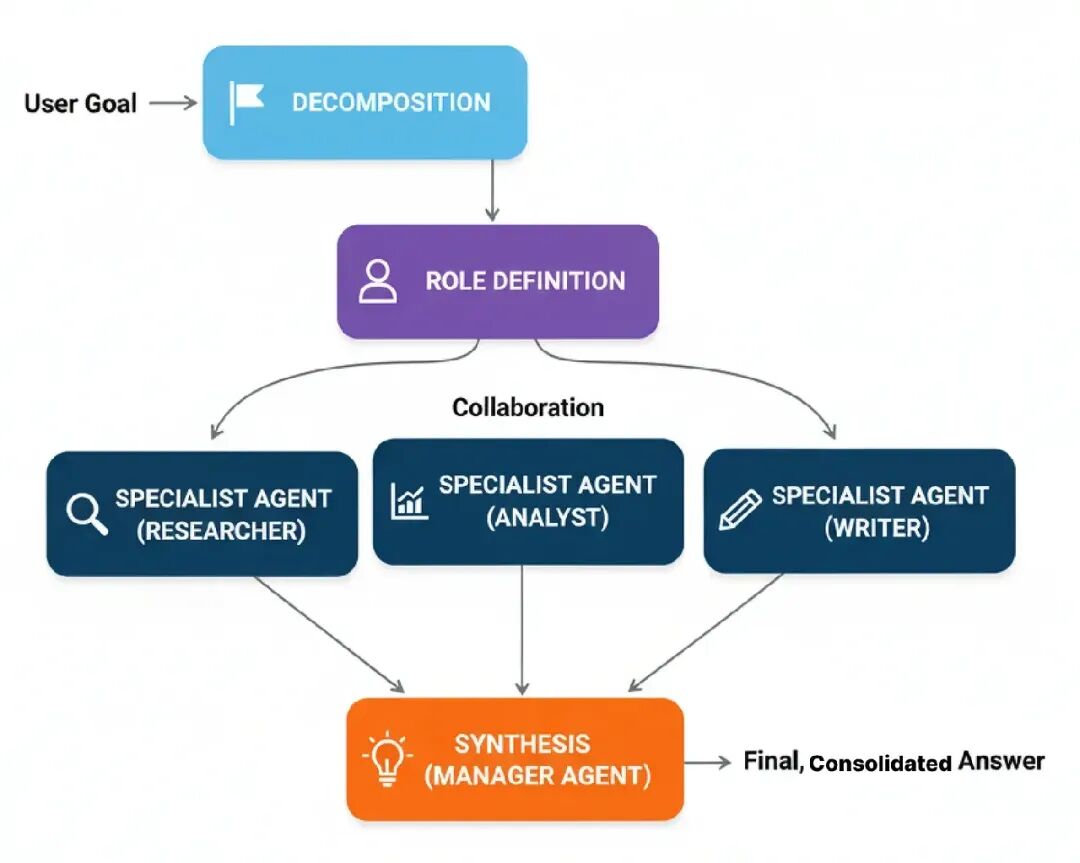

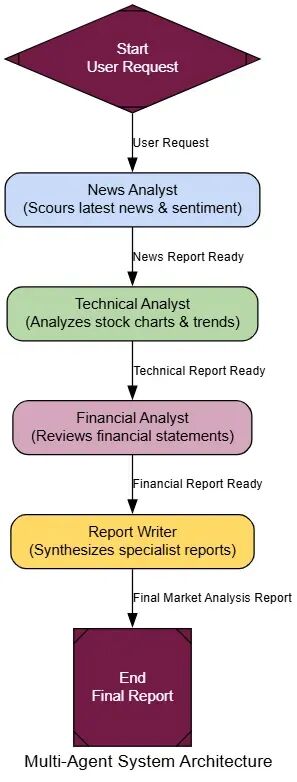

Multi-Agent Systems

我们到现在实现的所有的方法,它们都独自工作。

如果问题太大或太复杂,一个代理有效处理不了,会发生什么?

而不是建一个做一切的超级代理,multi-agent systems用一个专家团队。每个代理专注自己的领域,就像人类专家,你不会问一个数据科学家写营销文案。

对于复杂任务,像生成市场分析,你可以有一个新闻专家、一个金融专家和一个股票专家一起工作,得更好结果。

Multi-Agent系统实现起来可以很复杂,但一个更简单的版本是这样工作的……

- Decomposition: 一个复杂任务被拆成子任务。

- Role Definition: 每个子任务分配给一个专家代理,基于它的定义角色(比如,‘Researcher’, ‘Coder’, ‘Writer’)。

- Collaboration: 代理执行它们的任务,把发现传给彼此或一个中央经理。

- Synthesis: 一个最终‘manager’代理收集专家的输出,组装最终的、整合的响应。

为了真正看到为什么团队更好,我们先需要一个基准。我们会建一个单一的、整体的‘generalist’代理,给它一个复杂、多面任务。

然后,我们会建我们的专家团队:一个News Analyst,一个Technical Analyst,和一个Financial Analyst。每个会是自己的代理节点,有非常特定的个性。一个最终Report Writer会作为经理,编译它们的工作。

classAgentState(TypedDict):

user_request: str

news_report: Optional[str]

technical_report: Optional[str]

financial_report: Optional[str]

final_report: Optional[str]

# A helper factory to create our specialist nodes cleanly

defcreate_specialist_node(persona: str, output_key: str):

"""Factory function to create a specialist agent node."""

system_prompt = persona + "\n\nYou have access to a web search tool. Your output MUST be a concise report section, focusing only on your area of expertise."

prompt = ChatPromptTemplate.from_messages([("system", system_prompt), ("human", "{user_request}")])

agent = prompt | llm.bind_tools([search_tool])

defspecialist_node(state: AgentState):

console.print(f"--- CALLING {output_key.replace('_report','').upper()} ANALYST ---")

result = agent.invoke({"user_request": state["user_request"]})

return {output_key: result.content}

return specialist_node

# Create the specialist nodes

news_analyst_node = create_specialist_node("You are an expert News Analyst...", "news_report")

technical_analyst_node = create_specialist_node("You are an expert Technical Analyst...", "technical_report")

financial_analyst_node = create_specialist_node("You are an expert Financial Analyst...", "financial_report")

defreport_writer_node(state: AgentState):

"""The manager agent that synthesizes the specialist reports."""

console.print("--- CALLING REPORT WRITER ---")

prompt = f"""You are an expert financial editor. Your task is to combine the following specialist reports into a single, professional, and cohesive market analysis report.

News Report: {state['news_report']}

Technical Report: {state['technical_report']}

Financial Report: {state['financial_report']}

"""

final_report = llm.invoke(prompt).content

return {"final_report": final_report}

现在来把它们连在LangGraph里。对于这个例子,我们会用一个简单的顺序工作流程:News Analyst先,然后Technical Analyst,等等。

multi_agent_graph_builder = StateGraph(AgentState)

# Add all the nodes

multi_agent_graph_builder.add_node("news_analyst", news_analyst_node)

multi_agent_graph_builder.add_node("technical_analyst", technical_analyst_node)

multi_agent_graph_builder.add_node("financial_analyst", financial_analyst_node)

multi_agent_graph_builder.add_node("report_writer", report_writer_node)

# Define the workflow sequence

multi_agent_graph_builder.set_entry_point("news_analyst")

multi_agent_graph_builder.add_edge("news_analyst", "technical_analyst")

multi_agent_graph_builder.add_edge("technical_analyst", "financial_analyst")

multi_agent_graph_builder.add_edge("financial_analyst", "report_writer")

multi_agent_graph_builder.add_edge("report_writer", END)

multi_agent_app = multi_agent_graph_builder.compile()

来给我们的专家团队一个复杂任务:为NVIDIA创建一个完整的市场分析报告。一个单一的、generalist代理很可能产生一个浅的、无结构的文本块。来看我们的团队怎么做。

multi_agent_query = "Create a brief but comprehensive market analysis report for NVIDIA (NVDA)."

console.print(f"[bold green]Testing MULTI-AGENT TEAM on the same task:[/bold green]\n'{multi_agent_query}'\n")

final_multi_agent_output = multi_agent_app.invoke({"user_request": multi_agent_query})

console.print("\n--- [bold green]Final Report from Multi-Agent Team[/bold green] ---")

console.print(Markdown(final_multi_agent_output['final_report']))

最终报告的区别很明显。从multi-agent团队的输出高度结构化,有清晰、不同的部分给每个分析领域。每个部分包含更多详细的、领域特定的语言和洞见。

### Market Analysis Report: NVIDIA

**Introduction**

NVIDIA has been a subject of interest for investors... This report combines findings from three specialists...

**News & Sentiment Report**

Recent news surrounding NVIDIA has been mixed... The company has been facing increased competition... but has also been making significant strides in AI...

**Technical Analysis Report**

NVIDIA's stock has been trading in a bullish trend over the past year... The report also highlights the company's strong earnings growth...

**Financial Performance Report**

The report highlights the company's strong revenue growth... Gross margin has been increasing...

**Conclusion**

In conclusion, NVIDIA's market analysis suggests that the company has been facing increased competition... but remains a strong player...

这个输出远优于单一代理能产生的。通过分工,我们得到的结果是结构化的、深的和专业的。

为了形式化这个,我们会用一个LLM-as-a-Judge焦点在最终报告质量上。

classReportEvaluation(BaseModel):

"""Schema for evaluating a financial report."""

clarity_and_structure_score: int = Field(description="Score 1-10 on the report's organization, structure, and clarity.")

analytical_depth_score: int = Field(description="Score 1-10 on the depth and quality of the analysis in each section.")

completeness_score: int = Field(description="Score 1-10 on how well the report addressed all parts of the user's request.")

justification: str = Field(description="A brief justification for the scores.")

当被评估时,区别明显。一个整体代理可能得6或7分。但我们的multi-agent团队得高得多分。

--- Evaluating Multi-Agent Team's Report ---

{

'clarity_and_structure_score': 9,

'analytical_depth_score': 8,

'completeness_score': 9,

'justification': "The report is exceptionally well-structured with clear, distinct sections for each analysis type. Each section provides a good level of expert detail. The synthesis in the conclusion is logical and well-supported by the preceding specialist reports."

}

从分数我们可以看到,对于可以拆分的复杂任务……

专家团队几乎总是优于单一generalist。

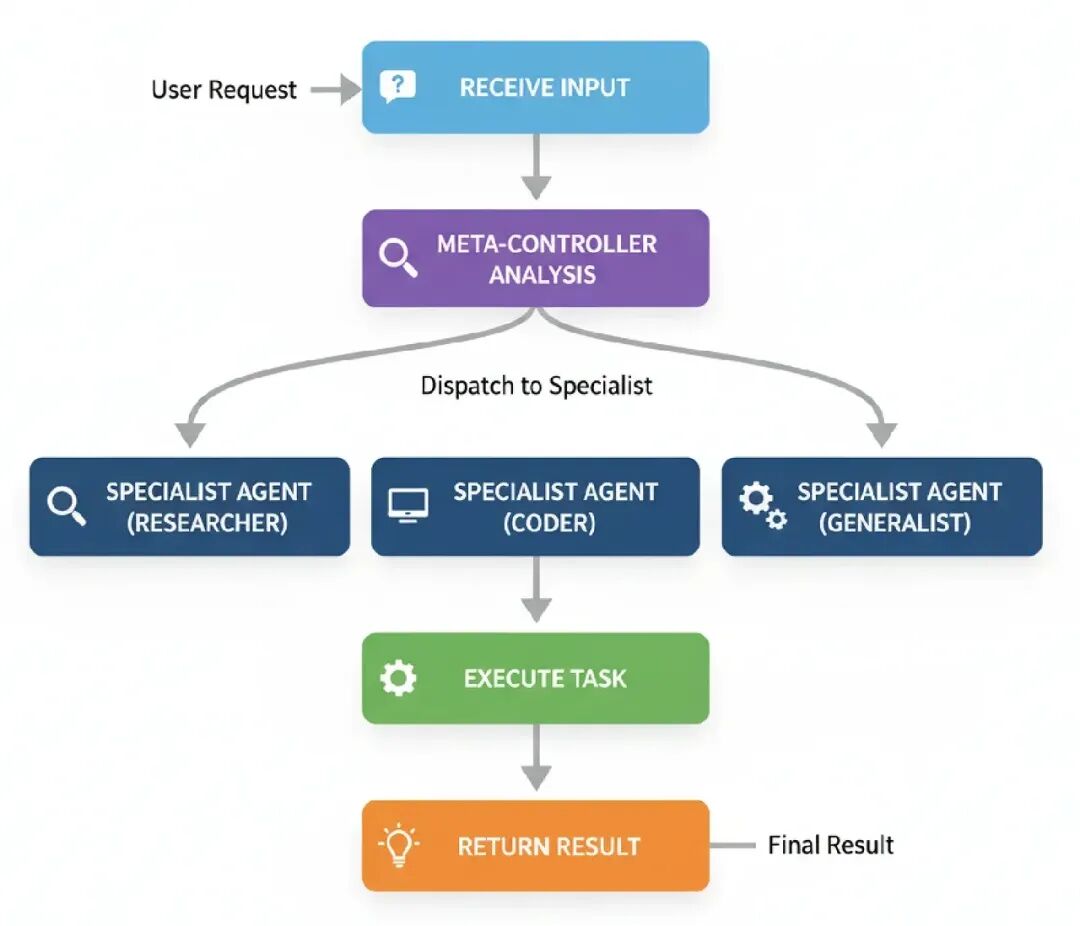

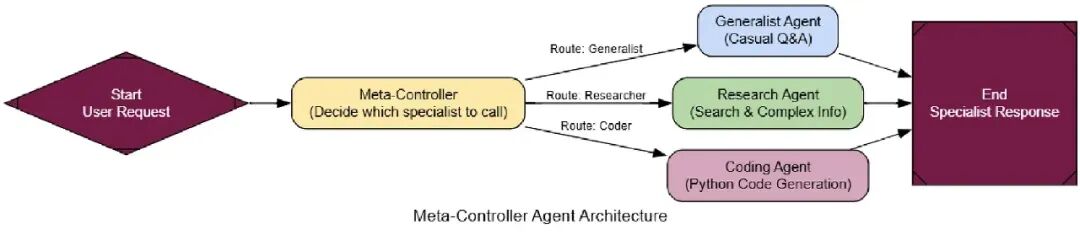

Meta-Controller

在Multi-agent团队你可能注意到它有点僵硬。我们硬编码了序列:News -> Technical -> Financial -> Writer。

如果用户只想要技术分析呢?我们的系统还是会浪费时间和钱运行其他分析师。

Meta-Controller架构引入了一个智能调度器。这个controller代理唯一的工作是看用户请求,决定哪个专家是合适的人选。

在RAG或代理系统中,Meta-Controller是中央神经系统。它是前门,把进来的请求路由到正确部门。

一个简单Meta controller工作像这样:

- Receive Input: 系统得到用户请求。

- Meta-Controller Analysis: Meta-Controller代理检查请求来理解它的意图。

- Dispatch to Specialist: 基于它的分析,它从专家池选择最好的专家代理(比如,‘Researcher’, ‘Coder’, ‘Generalist’)。

- Execute Task: 选择的专家代理运行,生成结果。

- Return Result: 专家的结果直接返回给用户。

首先,我们需要编码操作的大脑:meta_controller_node。它的工作是看用户请求,用可用专家列表,选择正确的那个。这里prompt很关键,因为它需要清楚解释每个专家的角色给controller。

classControllerDecision(BaseModel):

"""The routing decision made by the Meta-Controller."""

next_agent: str = Field(description="The name of the specialist agent to call next. Must be one of ['Generalist', 'Researcher', 'Coder'].")

reasoning: str = Field(description="A brief reason for choosing the next agent.")

defmeta_controller_node(state: MetaAgentState):

"""The central controller that decides which specialist to call."""

console.print("--- 🧠 Meta-Controller Analyzing Request ---")

specialists = {

"Generalist": "Handles casual conversation, greetings, and simple questions.",

"Researcher": "Answers questions requiring up-to-date information from the web.",

"Coder": "Writes Python code based on a user's specification."

}

specialist_descriptions = "\n".join([f"- {name}: {desc}"for name, desc in specialists.items()])

prompt = ChatPromptTemplate.from_template(

f"""You are the meta-controller for a multi-agent AI system. Your job is to route the user's request to the most appropriate specialist agent.

Here are the available specialists:

{specialist_descriptions}

Analyze the following user request and choose the best specialist.

User Request: "{{user_request}}""""

)

controller_llm = llm.with_structured_output(ControllerDecision)

chain = prompt | controller_llm

decision = chain.invoke({"user_request": state['user_request']})

console.print(f"[yellow]Routing decision:[/yellow] Send to [bold]{decision.next_agent}[/bold]. [italic]Reason: {decision.reasoning}[/italic]")

return {"next_agent_to_call": decision.next_agent}

用我们的controller定义,我们只需在LangGraph连它。图会从meta_controller开始,一个条件边然后基于它的决定路由请求到正确专家节点。

classMetaAgentState(TypedDict):

user_request: str

next_agent_to_call: Optional[str]

generation: str

# We can reuse the specialist nodes (generalist_node, research_agent_node, etc.)

workflow = StateGraph(MetaAgentState)

workflow.add_node("meta_controller", meta_controller_node)

workflow.add_node("Generalist", generalist_node)

workflow.add_node("Researcher", research_agent_node)

workflow.add_node("Coder", coder_node)

workflow.set_entry_point("meta_controller")

defroute_to_specialist(state: MetaAgentState) -> str:

"""Reads the controller's decision and returns the name of the node to route to."""

return state["next_agent_to_call"]

workflow.add_conditional_edges("meta_controller", route_to_specialist)

# After any specialist runs, the process ends

workflow.add_edge("Generalist", END)

workflow.add_edge("Researcher", END)

workflow.add_edge("Coder", END)

meta_agent = workflow.compile()

现在来用各种prompt测试我们的调度器。每个设计来看到controller是否发到正确专家。

# Test 1: Should be routed to the Generalist

run_agent("Hello, how are you today?")

# Test 2: Should be routed to the Researcher

run_agent("What were NVIDIA's latest financial results?")

# Test 3: Should be routed to the Coder

run_agent("Can you write me a python function to calculate the nth fibonacci number?")

输出追踪显示controller每次做聪明决定。

--- 🧠 Meta-Controller Analyzing Request ---

[yellow]Routing decision:[/yellow] Send to [bold]Generalist[/bold]. [italic]Reason: The user's request is a simple greeting...[/italic]

Final Response: Hello there! How can I help you today?

--- 🧠 Meta-Controller Analyzing Request ---

[yellow]Routing decision:[/yellow] Send to [bold]Researcher[/bold]. [italic]Reason: The user is asking about a recent event...[/italic]

Final Response: NVIDIA's latest financial results... were exceptionally strong. They reported revenue of $26.04 billion...

--- 🧠 Meta-Controller Analyzing Request ---

[yellow]Routing decision:[/yellow] Send to [bold]Coder[/bold]. [italic]Reason: The user is explicitly asking for a Python function...[/italic]

Final Response:

```python

def fibonacci(n):

# ... (code) ...

```{data-source-line="130"}

系统完美工作。问候去Generalist,新闻查询去Researcher,代码请求去Coder。controller基于内容正确调度任务。

为了形式化这个,我们会用一个LLM-as-a-Judge评分一件事:路由正确性。

classRoutingEvaluation(BaseModel):

"""Schema for evaluating the Meta-Controller's routing decision."""

routing_correctness_score: int = Field(description="Score 1-10 on whether the controller chose the most appropriate specialist for the request.")

justification: str = Field(description="A brief justification for the score.")

对于像“What is the capital of France?”的查询,法官的评估会是:

--- Evaluating Meta-Controller's Routing ---

{

'routing_correctness_score': 8.5,

'justification': "The controller correctly identified the user's request as a factual query requiring up-to-date information and routed it to the 'Researcher' agent. This was the optimal choice, as the Generalist might have outdated knowledge."

}

这个分数显示我们的controller不只是路由,它是聪明调度。

这让AI系统可扩展和易维护,因为新技能可以通过插入专家和更新controller来添加。

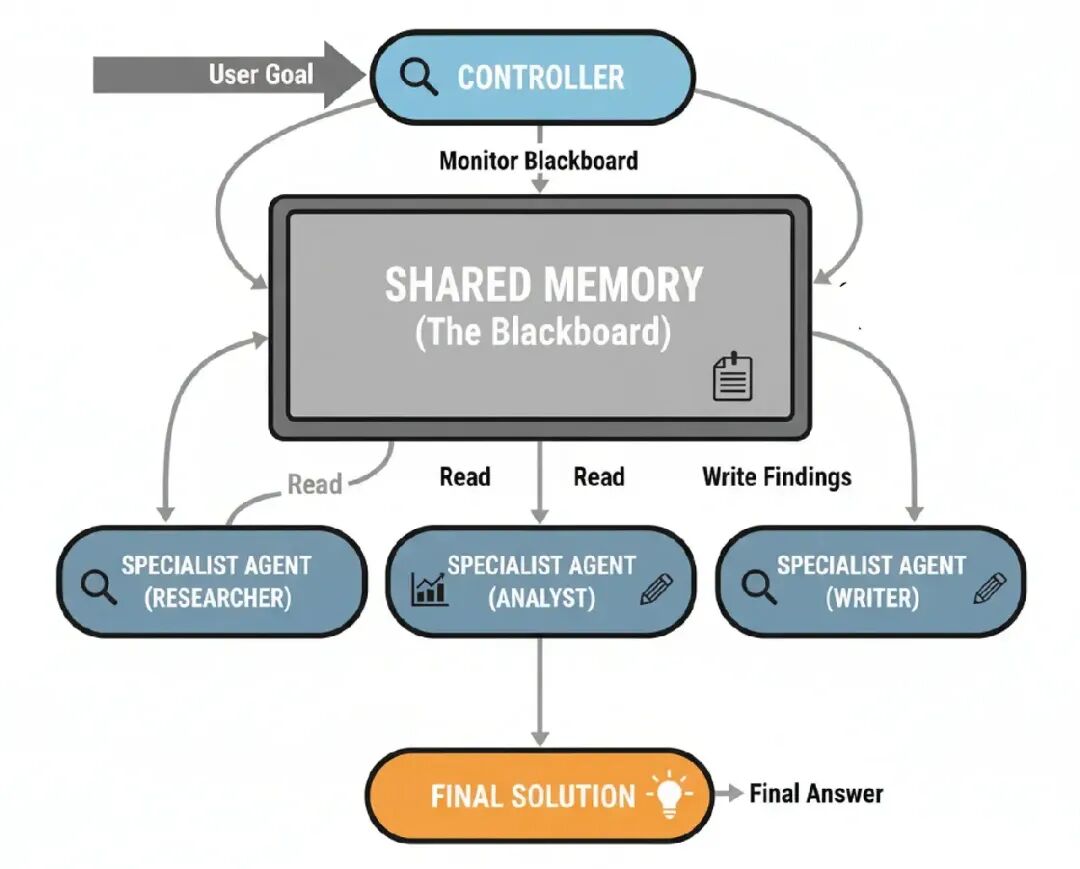

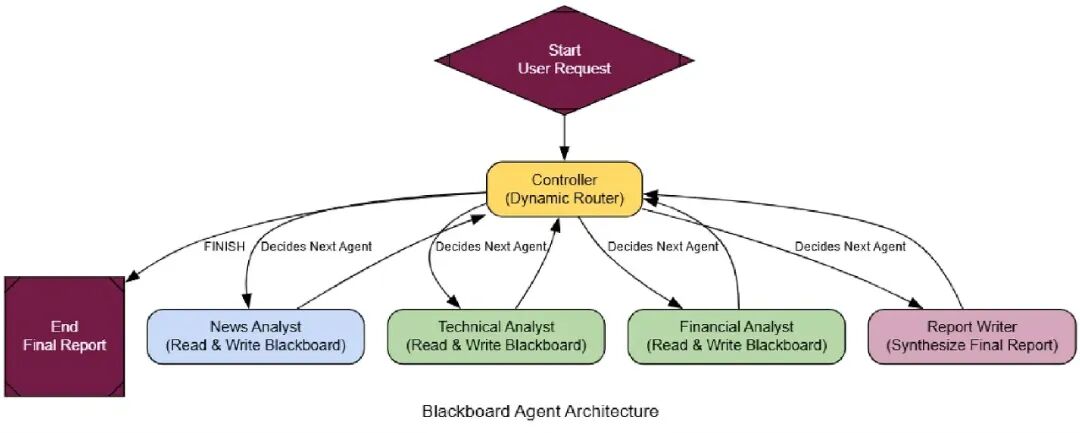

Blackboard

如果问题总是相同的,以前架构可能工作了……

但如果最佳下一步取决于前一步的结果呢?一个僵硬序列可能极其低效,强迫系统运行不必要的步骤。

这就是Blackboard架构进来的地方。它是协调专家团队的一个更高级和灵活的方式。这个想法来自人类专家怎么解决问题,

他们围着一个黑板,一个共享工作空间,任何人可以写下发现。 一个领导者然后看黑板,决定谁该下一步贡献。 当你编码你的架构时,Blackboard是你处理复杂、非结构化问题、解决方案路径提前未知的模式。它允许一个新兴的、机会主义策略,让它完美用于动态感测或复杂诊断,那里下一步总是对最新发现的反应。

来理解它的流动。。

- Shared Memory (The Blackboard): 一个中央数据存储持有问题当前状态和所有发现到现在。

- Specialist Agents: 一个独立代理池,每个有特定技能,监视黑板。

- Controller: 一个中央‘controller’代理也监视黑板。它的工作是分析当前状态,决定哪个专家最适合做下一步移动。

- Opportunistic Activation: Controller激活选择的代理。代理从黑板读,做它的工作,把发现写回去。

- Iteration: 这个过程重复,Controller动态选择下一个代理,直到它决定问题解决了。

来开始构建它。

这个系统最重要的部分是智能Controller。不像我们之前的Meta-Controller只做一次调度,这个运行在循环中。每个专家代理运行后,Controller重新评估黑板,决定下一步。

classBlackboardState(TypedDict):

user_request: str

blackboard: List[str] # The shared workspace

available_agents: List[str]

next_agent: Optional[str] # The controller's decision

classControllerDecision(BaseModel):

next_agent: str = Field(description="The name of the next agent to call. Must be one of ['News Analyst', 'Technical Analyst', 'Financial Analyst', 'Report Writer'] or 'FINISH'.")

reasoning: str = Field(description="A brief reason for choosing the next agent.")

defcontroller_node(state: BlackboardState):

"""The intelligent controller that analyzes the blackboard and decides the next step."""

console.print("--- CONTROLLER: Analyzing blackboard... ---")

controller_llm = llm.with_structured_output(ControllerDecision)

blackboard_content = "\n\n".join(state['blackboard'])

prompt = f"""You are the central controller of a multi-agent system. Your job is to analyze the shared blackboard and the original user request to decide which specialist agent should run next.

**Original User Request:**

{state['user_request']}

**Current Blackboard Content:**

---

{blackboard_content if blackboard_content else"The blackboard is currently empty."}

---

**Available Specialist Agents:**

{', '.join(state['available_agents'])}

**Your Task:**

1. Read the user request and the current blackboard content carefully.

2. Determine what the *next logical step* is to move closer to a complete answer.

3. Choose the single best agent to perform that step.

4. If the request has been fully addressed, choose 'FINISH'.

Provide your decision in the required format.

"""

decision = controller_llm.invoke(prompt)

console.print(f"--- CONTROLLER: Decision is to call '{decision.next_agent}'. Reason: {decision.reasoning} ---")

return {"next_agent": decision.next_agent}

现在我们只需在LangGraph连它。这里关键是中央循环:任何专家代理,运行后,把控制发回Controller给下一个决定。

# ... (specialist nodes are defined similarly to the multi-agent system) ...

bb_graph_builder = StateGraph(BlackboardState)

bb_graph_builder.add_node("Controller", controller_node)

bb_graph_builder.add_node("News Analyst", news_analyst_bb)

# ... add other specialist nodes ...

bb_graph_builder.set_entry_point("Controller")

defroute_to_agent(state: BlackboardState):

return state["next_agent"]

bb_graph_builder.add_conditional_edges("Controller", route_to_agent, {

"News Analyst": "News Analyst",

# ... other routes ...

"FINISH": END

})

# After any specialist runs, control always returns to the Controller

bb_graph_builder.add_edge("News Analyst", "Controller")

# ... other edges back to controller ...

blackboard_app = bb_graph_builder.compile()

为了看到为什么这比僵硬序列好多了,来给它一个有条件逻辑的任务,顺序代理会失败。

dynamic_query = "Find the latest major news about Nvidia. Based on the sentiment of that news, conduct either a technical analysis (if the news is neutral or positive) or a financial analysis (if the news is negative)."

initial_bb_input = {"user_request": dynamic_query, "blackboard": [], "available_agents": ["News Analyst", "Technical Analyst", "Financial Analyst", "Report Writer"]}

final_bb_output = blackboard_app.invoke(initial_bb_input, {"recursion_limit": 10})

console.print("\n--- [bold green]Final Report from Blackboard System[/bold green] ---")

console.print(Markdown(final_bb_output['blackboard'][-1]))

--- CONTROLLER: Analyzing blackboard... ---

Decision: call 'News Analyst'...

--- Blackboard State ---

AGENT 'News Analyst' is working...

--- CONTROLLER: Analyzing blackboard... ---

Decision: call 'Technical Analyst' (news positive)...

--- Blackboard State ---

Report 1: Nvidia news positive, new AI chip "Rubin", bullish market sentiment...

--- (Blackboard) AGENT 'Technical Analyst' is working... ---

--- CONTROLLER: Analyzing blackboard... ---

Decision: call 'Report Writer' (analysis complete, synthesize report)...

...

执行追踪显示了一个远更智能的过程。顺序代理会运行两个Technical和Financial分析师,浪费资源。我们的Blackboard系统更聪明:

- Controller Start: 它看到空板,调用News Analyst。

- News Analyst Runs: 它找到Nvidia的正面新闻,发到板上。

- Controller Re-evaluates: 它读正面新闻,正确决定下一步是调用Technical Analyst,完全跳过financial。

- Specialist Runs: technical analyst做它的工作,发它的报告。

- Controller Finishes: 它看到所有必要分析完成了,调用Report Writer合成最终答案然后结束。

这个动态、机会主义工作流程就是定义Blackboard系统的。为了让它形式化,我们的LLM-as-a-Judge评估每个贡献,评分逻辑一致性和效率,确保新兴解决方案既合理又可行动。

classProcessLogicEvaluation(BaseModel):

instruction_following_score: int = Field(description="Score 1-10 on how well the agent followed conditional instructions.")

process_efficiency_score: int = Field(description="Score 1-10 on whether the agent avoided unnecessary work.")

justification: str = Field(description="A brief justification for the scores.")

一个顺序代理这里会得可怕分数。但我们的Blackboard系统过关。

--- Evaluating Blackboard System's Process ---

{

'instruction_following_score': 7,

'process_efficiency_score': 8,

'justification': "The agent perfectly followed the user's conditional instructions. After the News Analyst reported positive sentiment, the system correctly chose to run the Technical Analyst and completely skipped the Financial Analyst. This demonstrates both flawless instruction following and optimal process efficiency."

}

对于下一步取决于中间结果的复杂问题,Blackboard架构的灵活性可以优于multi agent system。

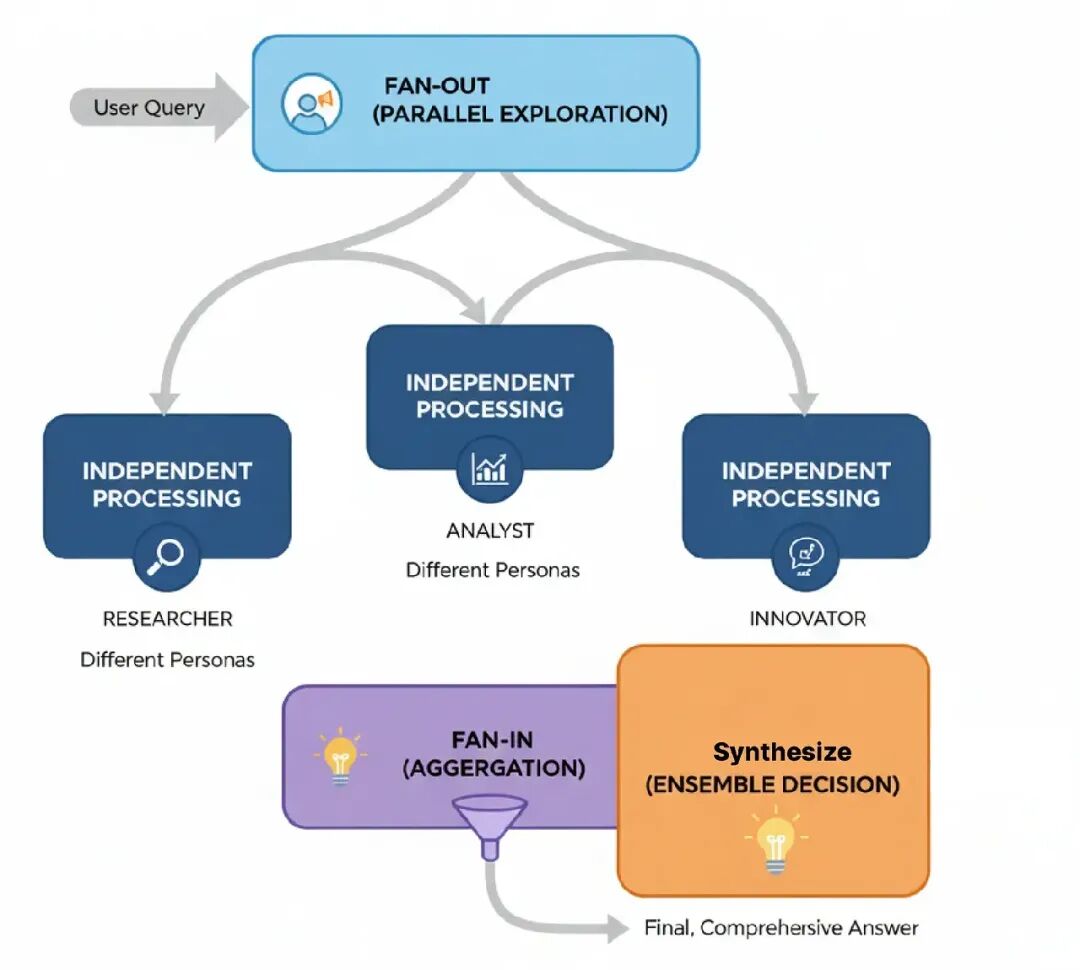

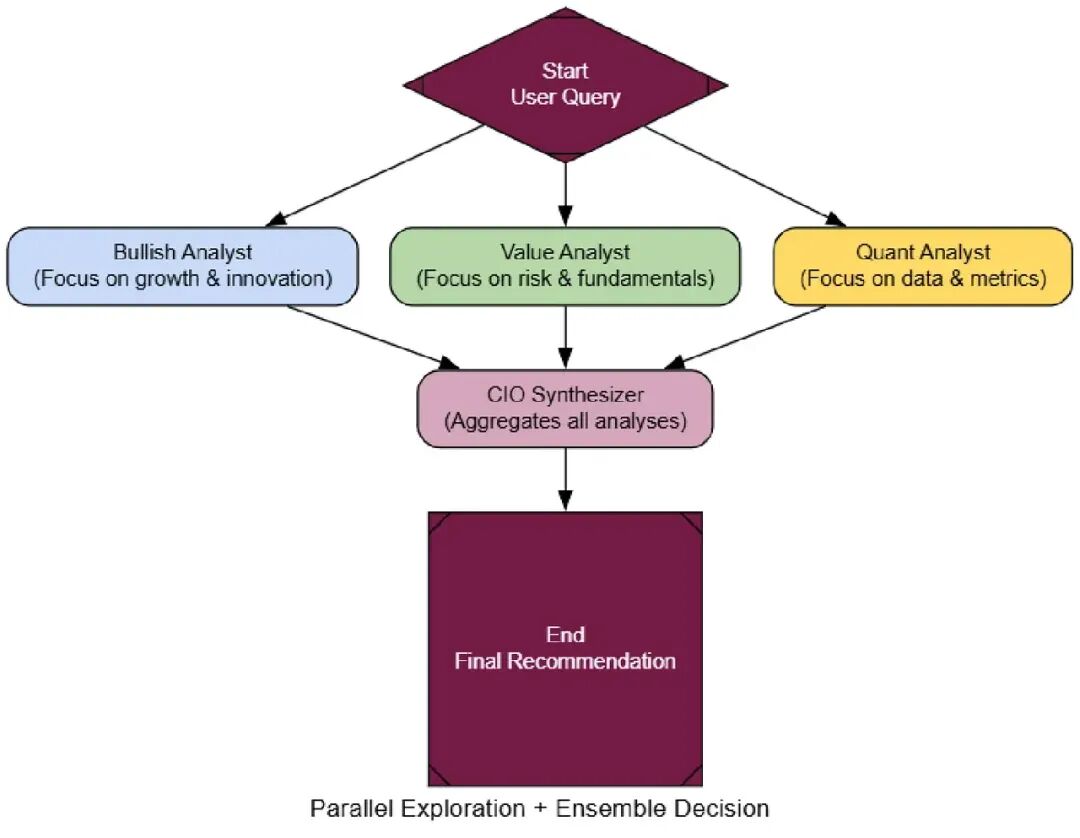

Ensemble Decision-Making

到现在,我们的所有代理,甚至团队,有一个共同点,它们产生单一推理线。

但LLMs是非确定性的,运行同一个prompt两次你可能得略不同答案。

这在高风险情况可能是问题,那里你需要可靠、全面的答案。

Ensemble Decision-Making架构直接解决这个。它基于“群众智慧”原则。

而不是靠一个代理,我们并行运行多个独立代理,往往有不同“个性”,然后用一个最终聚合代理合成它们的输出成单一的、更健壮的结论。

在大规模AI系统中,这是你处理任何关键决策支持任务的首选模式。想想一个AI投资委员会或医疗诊断系统。从不同AI个性得“第二意见”(或第三、第四)极大减少单一代理偏见或幻觉导致坏结果的机会。

来理解一下过程怎么流动的。

- Fan-Out (Parallel Exploration): 用户的查询同时发到多个专家代理,往往有不同个性来鼓励多样思考。

- Independent Processing: 每个代理孤立工作,生成它自己的完整分析。

- Fan-In (Aggregation): 所有代理的输出被收集。

- Synthesize (Ensemble Decision): 一个最终“aggregator”代理接收所有个别报告,权衡不同观点,合成一个全面的最终答案。

好ensemble的关键是认知多样性。我们会创建三个分析师代理,每个有非常不同个性:一个Bullish Growth Analyst(乐观者),一个Cautious Value Analyst(怀疑者),和一个Quantitative Analyst(数据纯粹主义者)。

classEnsembleState(TypedDict):

query: str

analyses: Dict[str, str] # Store the output from each parallel agent

final_recommendation: Optional[Any]

# We'll use our specialist factory again, but with very different personas

bullish_persona = "The Bullish Growth Analyst: You are extremely optimistic about technology and innovation. Focus on future growth potential and downplay short-term risks."

bullish_analyst_node = create_specialist_node(bullish_persona, "BullishAnalyst")

value_persona = "The Cautious Value Analyst: You are a skeptical investor focused on fundamentals and risk. Scrutinize financials, competition, and potential downside scenarios."

value_analyst_node = create_specialist_node(value_persona, "ValueAnalyst")

quant_persona = "The Quantitative Analyst (Quant): You are purely data-driven. Ignore narratives and focus only on hard numbers like financial metrics and technical indicators."

quant_analyst_node = create_specialist_node(quant_persona, "QuantAnalyst")

最终和最重要的部分是我们的aggregator,cio_synthesizer_node。它的工作是拿这些冲突报告,产生单一的、平衡的投资论点。

classFinalRecommendation(BaseModel):

"""The final, synthesized investment thesis from the CIO."""

final_recommendation: str

confidence_score: float

synthesis_summary: str

identified_opportunities: List[str]

identified_risks: List[str]

defcio_synthesizer_node(state: EnsembleState) -> Dict[str, Any]:

"""The final node that synthesizes all analyses into a single recommendation."""

console.print("--- 🏛️ Calling Chief Investment Officer for Final Decision ---")

all_analyses = "\n\n---\n\n".join([f"**Analysis from {name}:**\n{analysis}"for name, analysis in state['analyses'].items()])

cio_prompt = ChatPromptTemplate.from_messages([

("system", "You are the Chief Investment Officer. Your task is to synthesize these diverse and often conflicting viewpoints into a single, final, and actionable investment thesis. Weigh the growth potential against the risks to arrive at a balanced conclusion."),

("human", "Here are the reports from your team regarding the query: '{query}'\n\n{analyses}\n\nProvide your final, synthesized investment thesis.")

])

cio_llm = llm.with_structured_output(FinalRecommendation)

chain = cio_prompt | cio_llm

final_decision = chain.invoke({"query": state['query'], "analyses": all_analyses})

return {"final_recommendation": final_decision}

现在来连它。LangGraph对这个独特,它有一个“fan-out”一个节点分支到三个并行节点,然后一个“fan-in”那三个汇聚到最终synthesizer。

workflow = StateGraph(EnsembleState)

# The entry node just prepares the state

workflow.add_node("start_analysis", lambda state: {"analyses": {}})

# Add the parallel analyst nodes and the final synthesizer

workflow.add_node("bullish_analyst", bullish_analyst_node)

workflow.add_node("value_analyst", value_analyst_node)

workflow.add_node("quant_analyst", quant_analyst_node)

workflow.add_node("cio_synthesizer", cio_synthesizer_node)

workflow.set_entry_point("start_analysis")

# FAN-OUT: Run all three analysts in parallel

workflow.add_edge("start_analysis", ["bullish_analyst", "value_analyst", "quant_analyst"])

# FAN-IN: After all analysts are done, call the synthesizer

workflow.add_edge(["bullish_analyst", "value_analyst", "quant_analyst"], "cio_synthesizer")

workflow.add_edge("cio_synthesizer", END)

ensemble_agent = workflow.compile()

来给我们的投资委员会一个艰难、模糊的问题,那里不同视角有价值。

query = "Based on recent news, financial performance, and future outlook, is NVIDIA (NVDA) a good long-term investment in mid-2024?"

console.print(f"--- 📈 Running Investment Committee for: {query} ---")

result = ensemble_agent.invoke({"query": query})

# ... (code to print individual reports and the final CIO recommendation) ...

这个架构的力量当你看到结果时立即明显。三个分析师产生 wildly不同的报告:Bull给一个 glowing “Buy”,Value分析师一个 cautious “Hold”,Quant提供中性数据。

CIO的最终报告不只是平均它们。它执行一个真正合成,承认bull case但用value关切 temper它。

**Final Recommendation:** Buy

**Confidence Score:** 7.5/10

**Synthesis Summary:**

The committee presents a compelling but contested case for NVIDIA. There is unanimous agreement on the company's current technological dominance... However, the Value and Quant analysts raise critical, concurring points about the stock's extremely high valuation... The final recommendation is a 'Buy', but with a strong emphasis on it being a long-term position and advising a cautious entry...

**Identified Opportunities:**

* Unquestioned leadership in the AI accelerator market.

* ...

**Identified Risks:**

* Extremely high valuation (P/E and P/S ratios).

* ...

这是比任何单一代理能提供的更健壮和可信答案。为了形式化这个,我们的LLM-as-a-Judge需要评分分析深度和平衡。

classEnsembleEvaluation(BaseModel):

analytical_depth_score: int = Field(description="Score 1-10 on the depth of the analysis.")

nuance_and_balance_score: int = Field(description="Score 1-10 on how well the final answer balanced conflicting viewpoints and provided a nuanced conclusion.")

justification: str = Field(description="A brief justification for the scores.")

当被评估时,ensemble的输出得顶分。

--- Evaluating Ensemble Agent's Output ---

{

'analytical_depth_score': 9,

'nuance_and_balance_score': 8,

'justification': "The final answer is exceptionally well-balanced. It doesn't just pick a side but masterfully synthesizes the optimistic growth case with the skeptical valuation concerns, providing a nuanced recommendation that reflects the real-world complexity of the investment decision. This is a high-quality, reliable analysis."

}

你可以看到当我们用多样视角的ensemble时增加代理推理的可靠性和深度,类似我们用deep thinking模型看到的。

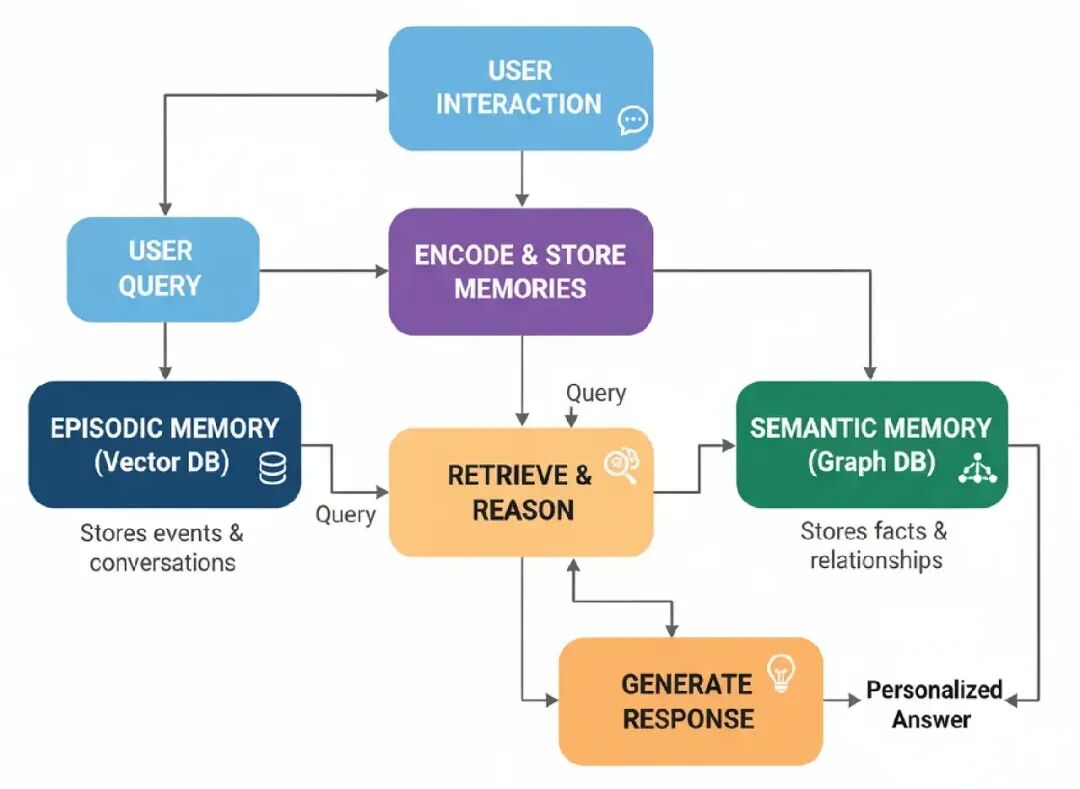

Episodic + Semantic Memory

对于我们所有代理都有金鱼的记忆,但一旦对话结束,一切被忘。

为了建一个真正学习和随用户成长的个人助理,我们需要给它一个长期记忆组件。

Episodic + Semantic Memory Stack架构模仿人类认知,给代理两种记忆:

- Episodic Memory: 这是特定事件的记忆,像过去对话。它回答,“发生了什么?”我们会用向量数据库这个。

- Semantic Memory: 这是从那些事件提取的结构化事实和关系的记忆。它回答,“我知道什么?”我们会用图数据库(Neo4j)这个。

你可能知道这是任何AI系统个性化的核心。它是怎么一个电商机器人记住你的风格,一个导师记住你的弱点,一个个人助理记住你的项目和偏好过周月。

这是它怎么工作的……

Interaction: 代理和用户对话。

- Memory Retrieval: 对于新查询,代理搜索它的episodic(向量)和semantic(图)记忆找相关上下文。

- Augmented Generation: 检索的记忆用来生成个性化的、上下文意识的响应。

- Memory Creation: 互动后,一个“memory maker”代理分析对话,创建一个总结(episodic memory),提取事实(semantic memory)。

- Memory Storage: 新记忆保存到各自数据库。

这个系统核心是“Memory Maker”,一个负责处理对话和创建新记忆的代理。它有两个工作:为向量存储创建一个简洁总结,为图提取结构化事实。

# Pydantic models for knowledge extraction

classNode(BaseModel):

id: str; type: str

classRelationship(BaseModel):

source: Node; target: Node; type: str

classKnowledgeGraph(BaseModel):

relationships: List[Relationship]

defcreate_memories(user_input: str, assistant_output: str):

conversation = f"User: {user_input}\nAssistant: {assistant_output}"

# Create Episodic Memory (Summarization)

console.print("--- Creating Episodic Memory (Summary) ---")

summary_prompt = ChatPromptTemplate.from_messages([

("system", "You are a summarization expert. Create a concise, one-sentence summary of the following user-assistant interaction. This summary will be used as a memory for future recall."),

("human", "Interaction:\n{interaction}")

])

summarizer = summary_prompt | llm

episodic_summary = summarizer.invoke({"interaction": conversation}).content

new_doc = Document(page_content=episodic_summary, metadata={"created_at": uuid.uuid4().hex})

episodic_vector_store.add_documents([new_doc])

console.print(f"[green]Episodic memory created:[/green] '{episodic_summary}'")

# Create Semantic Memory (Fact Extraction)

console.print("--- Creating Semantic Memory (Graph) ---")

extraction_llm = llm.with_structured_output(KnowledgeGraph)

extraction_prompt = ChatPromptTemplate.from_messages([

("system", "You are a knowledge extraction expert. Your task is to identify key entities and their relationships from a conversation and model them as a graph. Focus on user preferences, goals, and stated facts."),

("human", "Extract all relationships from this interaction:\n{interaction}")

])

extractor = extraction_prompt | extraction_llm

try:

kg_data = extractor.invoke({"interaction": conversation})

if kg_data.relationships:

for rel in kg_data.relationships:

graph.add_graph_documents([rel], include_source=True)

console.print(f"[green]Semantic memory created:[/green] Added {len(kg_data.relationships)} relationships to the graph.")

else:

console.print("[yellow]No new semantic memories identified in this interaction.[/yellow]")

except Exception as e:

console.print(f"[red]Could not extract or save semantic memory: {e}[/red]")

用我们的memory maker准备好,我们可以建完整代理。图是一个简单序列:检索记忆,用它们生成响应,然后用新对话更新记忆。

classAgentState(TypedDict):

user_input: str

retrieved_memories: Optional[str]

generation: str

defretrieve_memory(state: AgentState) -> Dict[str, Any]:

"""Node that retrieves memories from both episodic and semantic stores."""

console.print("--- Retrieving Memories ---")

user_input = state['user_input']

# Retrieve from episodic memory

retrieved_docs = episodic_vector_store.similarity_search(user_input, k=2)

episodic_memories = "\n".join([doc.page_content for doc in retrieved_docs])

# Retrieve from semantic memory

# This is a simple retrieval; more advanced would involve entity extraction from the query

try:

graph_schema = graph.get_schema

# Using a fulltext index for better retrieval. Neo4j automatically creates one on node properties.

# A more robust solution might involve extracting entities from user_input first.

semantic_memories = str(graph.query("""

UNWIND $keywords AS keyword

CALL db.index.fulltext.queryNodes("entity", keyword) YIELD node, score

MATCH (node)-[r]-(related_node)

RETURN node, r, related_node LIMIT 5

""", {'keywords': user_input.split()}))

except Exception as e:

semantic_memories = f"Could not query graph: {e}"

retrieved_content = f"Relevant Past Conversations (Episodic Memory):\n{episodic_memories}\n\nRelevant Facts (Semantic Memory):\n{semantic_memories}"

console.print(f"[cyan]Retrieved Context:\n{retrieved_content}[/cyan]")

return {"retrieved_memories": retrieved_content}

defgenerate_response(state: AgentState) -> Dict[str, Any]:

"""Node that generates a response using the retrieved memories."""

console.print("--- Generating Response ---")

prompt = ChatPromptTemplate.from_messages([

("system", "You are a helpful and personalized financial assistant. Use the retrieved memories to inform your response and tailor it to the user. If the memories indicate a user's preference (e.g., they are a conservative investor), you MUST respect it."),

("human", "My question is: {user_input}\n\nHere are some memories that might be relevant:\n{retrieved_memories}")

])

generator = prompt | llm

generation = generator.invoke(state).content

console.print(f"[green]Generated Response:\n{generation}[/green]")

return {"generation": generation}

defupdate_memory(state: AgentState) -> Dict[str, Any]:

"""Node that updates the memory with the latest interaction."""

console.print("--- Updating Memory ---")

create_memories(state['user_input'], state['generation'])

return {}

workflow = StateGraph(AgentState)

workflow.add_node("retrieve", retrieve_memory)

workflow.add_node("generate", generate_response)

workflow.add_node("update", update_memory)

# ... (wire nodes sequentially) ...

memory_agent = workflow.compile()

唯一测试这个的方式是用多轮对话。我们会有一个对话来种记忆,第二个看代理是否能用它。

# Interaction 1: Seeding the memory

run_interaction("Hi, my name is Alex. I'm a conservative investor, mainly interested in established tech companies.")

# Interaction 2: THE MEMORY TEST

run_interaction("Based on my goals, what's a good alternative to Apple?")

--- 💬 INTERACTION 1: Seeding Memory ---

--- Retrieving Memories...

Retrieved Context: Past conversations + initial document...

--- Generating Response...

Generated Response: Hello, Alex! Conservative investor strategy noted...

--- Updating Memory...

Episodic memory created: 'User Alex is conservative investor in tech...'

Semantic memory created: Added 2 relationships...

--- 💬 INTERACTION 2: Asking a specific question ---

--- Retrieving Memories...

Retrieved Context: Alex as conservative investor...

--- Generating Response...

Generated Response: Apple (AAPL) fits conservative tech portfolio, strong brand, stable revenue...

--- Updating Memory...

Episodic memory created: 'User asked about Apple; assistant confirmed suitability...'

Semantic memory created: Added 1 relationship...

一个无状态代理会失败第二个查询,因为它不知道Alex的目标。但我们的记忆增强代理成功。

Recall Episodically: 它检索第一个对话的总结:“The user, Alex, introduced himself as a conservative investor…”

Recall Semantically: 它查询图,找到事实:(User: Alex) -[HAS_GOAL]-> (InvestmentPhilosophy: Conservative)。

Synthesize: 它用这个结合上下文给一个完美的、个性化的推荐。 我们可以用一个LLM-as-a-Judge形式化这个,评分个性化。

classPersonalizationEvaluation(BaseModel):

personalization_score: int = Field(description="Score 1-10 on how well the agent used past interactions and user preferences to tailor its response.")

justification: str = Field(description="A brief justification for the score.")

当被评估时,代理得顶分。

--- Evaluating Memory Agent's Personalization ---

{

'personalization_score': 7,

'justification': "The agent's response was perfectly personalized. It explicitly referenced the user's stated goal of being a 'conservative investor' (which it recalled from a previous conversation) to justify its recommendation of Microsoft. This demonstrates a deep, stateful understanding of the user."

}

通过结合episodic和semantic recall……

我们能建代理从简单Q&A移到成为真正的、学习的伙伴。

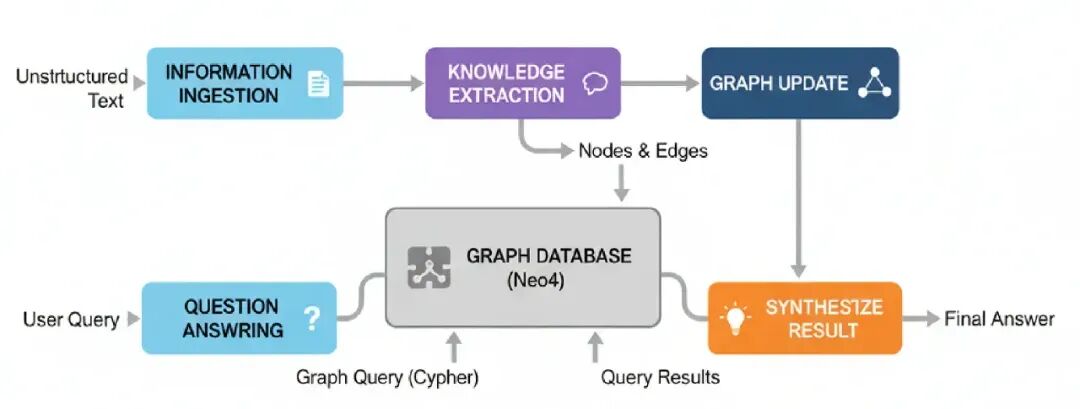

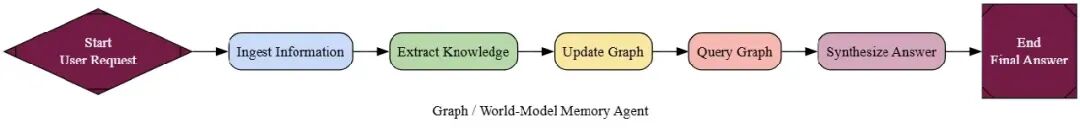

Graph (World-Model) Memory

所以上一个代理能记住东西,这是个好步向个性化。但它的记忆还是有点断开的。它能回忆一个对话发生了(episodic)和一个事实存在(semantic)……

但它挣扎理解所有事实之间复杂的关系网。

Graph (World-Model) Memory架构解决这个问题。

不是只存储事实,这个代理建一个结构化的、互联的“world model”它的知识。它摄入非结构化文本,把它转成实体(节点)和关系(边)的丰富知识图。

在大规模AI系统中,这是你建一个真“脑”的方式。它是任何需要回答复杂、multi-hop问题、需要连接分散信息片段的系统的基礎。想想一个公司情报系统,需要从成千文档理解公司、员工和产品之间的关系。

来理解一下过程怎么流动的。

- Information Ingestion: 代理读非结构化文本(像新闻文章或报告)。

- Knowledge Extraction: 一个LLM驱动的过程解析文本,识别关键实体和连接它们的关大系。

- Graph Update: 提取的节点和边加到持久图数据库像Neo4j。

- Question Answering: 当被问问题时,代理把用户查询转成正式图查询(像Cypher),执行它,合成结果成答案。

这个系统核心是“Graph Maker”代理。它的工作是读一段文本,吐出一个结构化的实体和关系列表。我们用Pydantic确保它的输出干净,准备好给我们的数据库。

# Pydantic models for structured extraction

classNode(BaseModel):

id: str = Field(description="Unique name or identifier for the entity.")

type: str = Field(description="The type of the entity (e.g., Person, Company).")

classRelationship(BaseModel):

source: Node

target: Node

type: str = Field(description="The type of relationship (e.g., WORKS_FOR, ACQUIRED).")

classKnowledgeGraph(BaseModel):

relationships: List[Relationship]

defget_graph_maker_chain():

"""Creates the agent responsible for extracting knowledge."""

extractor_llm = llm.with_structured_output(KnowledgeGraph)