kube-prometheus: Introduction and Installation Walkthrough (Recommended for Kubernetes Environments)


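Advantages of kube-prometheus

- Deploys every component of the Kubernetes Prometheus stack in one step
- Generates the complex Kubernetes scrape configuration automatically
- Ships many built-in alerting and recording rules written in expert-level PromQL, so you do not have to write them yourself
- Lets application teams configure the onboarding of their own custom metrics, without involving the monitoring administrator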
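As a rough illustration of the last point: with the Prometheus Operator, an application team usually describes its scrape target in a ServiceMonitor object. The sketch below only writes such a manifest to a file. The application name `demo-app`, the `default` namespace, the `app: demo-app` label, the port name `metrics`, and the file name are all hypothetical placeholders and are not part of kube-prometheus.

```shell
#!/bin/sh
# Write a minimal ServiceMonitor manifest for a hypothetical application.
# Every "demo-app" value below is a placeholder chosen for illustration.
cat > demo-app-servicemonitor.yaml <<'EOF'
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: demo-app
  namespace: default
spec:
  selector:
    matchLabels:
      app: demo-app        # must match the labels on the application's Service
  endpoints:
  - port: metrics          # name of the Service port that serves the metrics
    path: /metrics
    interval: 30s
EOF
echo "wrote demo-app-servicemonitor.yaml"
```

The file could then be applied with `kubectl apply -f demo-app-servicemonitor.yaml`. Whether Prometheus actually picks it up depends on the selectors and RBAC permissions of the Prometheus object in the release you deploy.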

安装部署 kube-prometheus

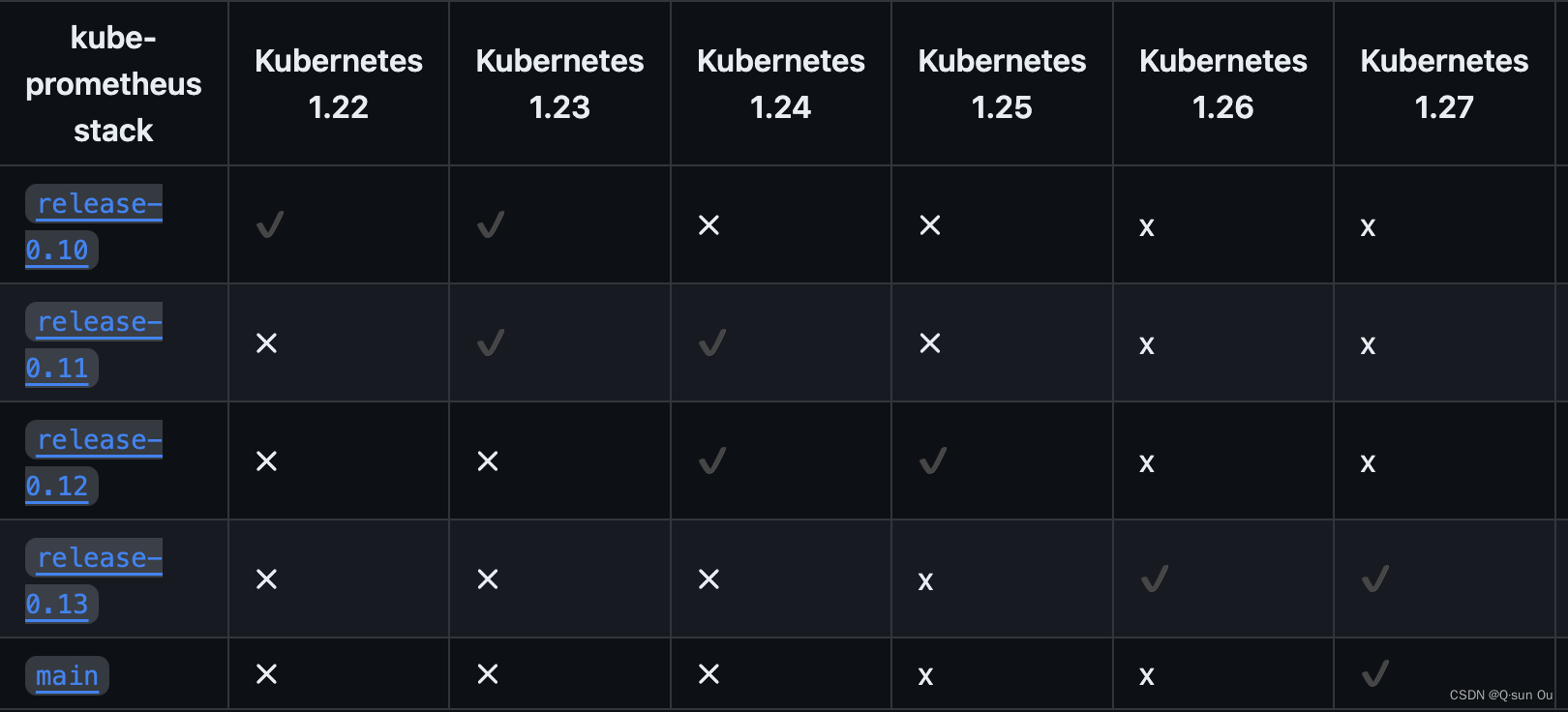

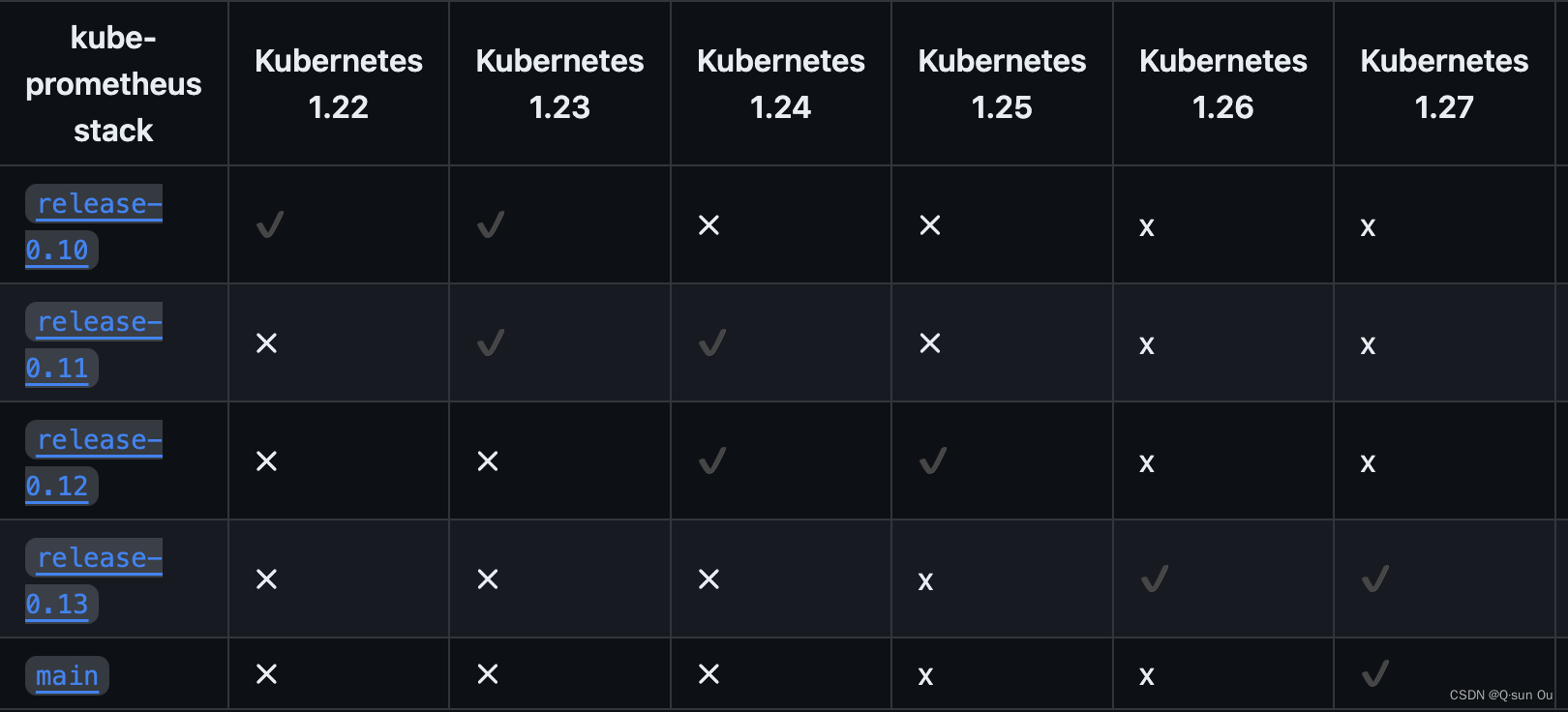

根据k8s集群版本选择kube-prometheus 版本

下载kube-prometheus 源码

git clone https://github.com/prometheus-operator/kube-prometheus.git

-

根据k8s集群版本切换到指定的分支

git checkout -b release-0.8 remotes/origin/release-0.11
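A mistyped branch name is easy to miss here, so the checkout can be wrapped in a small guard. This is only a sketch: the function names `is_release_branch` and `checkout_release` are made up for this example, and it assumes the remote is called `origin` and that it is run inside the cloned repository.

```shell
#!/bin/sh
# Succeed only for names of the form release-<major>.<minor>.
is_release_branch() {
  case "$1" in
    release-[0-9]*.[0-9]*) ;;
    *) return 1 ;;
  esac
  # After the prefix, allow digits and exactly one dot.
  rest=${1#release-}
  case "$rest" in
    *[!0-9.]*|*.*.*) return 1 ;;
  esac
  return 0
}

# Create (or reset) a local branch with the same name as the remote one.
# Usage: checkout_release release-0.11
checkout_release() {
  branch=$1
  if ! is_release_branch "$branch"; then
    echo "not a release branch: $branch" >&2
    return 1
  fi
  git checkout -B "$branch" "origin/$branch"
}
```

Using one variable for both the local and the remote name means the two cannot drift apart.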

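Create the namespace and CRDs

Run the following command:

    kubectl create -f manifests/setup

What manifests/setup does

- 01 Creates the monitoring namespace
- 02 Creates the RBAC objects
- 03 Creates the prometheus-operator Deployment
- 04 Creates the required CRDs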
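The resources created in the next stage depend on the CRDs from this step, so it can help to wait until the API server reports them as established before continuing. A minimal sketch follows. The function name `wait_for_crds`, the 120s default timeout, and the `KUBECTL` override variable are assumptions of this example.

```shell
#!/bin/sh
# Block until every CRD in the cluster reports the Established condition,
# or give up after the timeout (default 120s).
# KUBECTL may be set to another binary or wrapper; it defaults to kubectl.
wait_for_crds() {
  timeout=${1:-120s}
  "${KUBECTL:-kubectl}" wait --for=condition=Established crd --all --timeout="$timeout"
}
```

Calling `wait_for_crds` right after `kubectl create -f manifests/setup` returns a non-zero status if any CRD is still not established when the timeout expires.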

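The RBAC objects are the same ones you would create when authorizing Prometheus by hand

- A ClusterRole and a ClusterRoleBinding are created, and the role is granted to a ServiceAccount
- ClusterRole
- ClusterRoleBinding
- ServiceAccount

Create the ServiceAccount named prometheus-operator

- Location: manifests/setup/prometheus-operator-serviceAccount.yaml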

Create the ClusterRole named prometheus-operator

- Location: manifests/setup/prometheus-operator-clusterRole.yaml

For the monitoring.coreos.com API group the role covers nearly every resource, and verbs: '*' places no restriction on the actions allowed on them:

    - apiGroups:
      - monitoring.coreos.com
      resources:
      - alertmanagers
      - alertmanagers/finalizers
      - alertmanagerconfigs
      - prometheuses
      - prometheuses/finalizers
      - thanosrulers
      - thanosrulers/finalizers
      - servicemonitors
      - podmonitors
      - probes
      - prometheusrules
      verbs:
      - '*'
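Once the binding described in this walkthrough exists, the effect of this rule can be checked by asking the API server what the operator's ServiceAccount is allowed to do. The sketch below uses `kubectl auth can-i` with impersonation. The helper names `sa_user` and `operator_can` and the `KUBECTL` override variable are assumptions of this example, and running it requires permission to impersonate ServiceAccounts.

```shell
#!/bin/sh
# Build the user name Kubernetes assigns to a ServiceAccount.
# Usage: sa_user NAMESPACE NAME
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Ask whether the prometheus-operator ServiceAccount may perform VERB on
# RESOURCE in the monitoring.coreos.com API group, in all namespaces.
# Usage: operator_can VERB RESOURCE
operator_can() {
  verb=$1
  resource=$2
  "${KUBECTL:-kubectl}" auth can-i "$verb" "$resource.monitoring.coreos.com" \
    --as="$(sa_user monitoring prometheus-operator)" --all-namespaces
}
```

For example, `operator_can create servicemonitors` should print `yes` if the ClusterRole and its binding are in place.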

Create the ClusterRoleBinding named prometheus-operator

- It binds the prometheus-operator ClusterRole to the prometheus-operator ServiceAccount
- Location: manifests/setup/prometheus-operator-clusterRoleBinding.yaml

The manifest:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/name: prometheus-operator
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 0.47.0
      name: prometheus-operator
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus-operator
    subjects:
    - kind: ServiceAccount
      name: prometheus-operator
      namespace: monitoring

Creating the prometheus-operator Deployment

Create the prometheus-operator Service

- Location: manifests/setup/prometheus-operator-service.yaml
- The selector picks the prometheus-operator pods by their labels
- The Service port is 8443
- targetPort refers to the container port named https, which is port 8443 on the kube-rbac-proxy container
- clusterIP: None makes this a headless Service

The manifest:

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/name: prometheus-operator
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 0.47.0
      name: prometheus-operator
      namespace: monitoring
    spec:
      clusterIP: None
      ports:
      - name: https
        port: 8443
        targetPort: https
      selector:
        app.kubernetes.io/component: controller
        app.kubernetes.io/name: prometheus-operator
        app.kubernetes.io/part-of: kube-prometheus

The prometheus-operator Deployment runs two containers

Location: manifests/setup/prometheus-operator-deployment.yaml

Container 01: prometheus-operator, which serves plain HTTP on port 8080

    - args:
      - --kubelet-service=kube-system/kubelet
      - --prometheus-config-reloader=quay.io/prometheus-operator/prometheus-config-reloader:v0.47.0
      image: quay.io/prometheus-operator/prometheus-operator:v0.47.0
      name: prometheus-operator
      ports:
      - containerPort: 8080
        name: http
      resources:
        limits:
          cpu: 200m
          memory: 200Mi
        requests:
          cpu: 100m
          memory: 100Mi
      securityContext:
        allowPrivilegeEscalation: false

Container 02: kube-rbac-proxy, which listens on port 8443 over TLS and forwards requests to the operator at http://127.0.0.1:8080/

    - args:
      - --logtostderr
      - --secure-listen-address=:8443
      - --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305
      - --upstream=http://127.0.0.1:8080/
      image: quay.io/brancz/kube-rbac-proxy:v0.8.0
      name: kube-rbac-proxy
      ports:
      - containerPort: 8443
        name: https
      resources:
        limits:
          cpu: 20m
          memory: 40Mi
        requests:
          cpu: 10m
          memory: 20Mi
      securityContext:
        runAsGroup: 65532
        runAsNonRoot: true
        runAsUser: 65532

Create the required CRDs

- Location: manifests/setup/prometheus-operator-xxxxCustomResourceDefinition.yaml
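To see which of these CRDs actually exist in a cluster, the output of `kubectl get crd -o name` can be filtered by API group. The sketch below is a plain text filter. The function name `operator_crds` is an assumption of this example.

```shell
#!/bin/sh
# Read resource names on stdin, one per line, as printed by
# `kubectl get crd -o name`, and print the plural names of the CRDs that
# belong to the monitoring.coreos.com API group.
operator_crds() {
  sed -n 's|^customresourcedefinition\.apiextensions\.k8s\.io/\(.*\)\.monitoring\.coreos\.com$|\1|p'
}
```

A typical call would be `kubectl get crd -o name | operator_crds`, which should list names such as `prometheuses` and `servicemonitors` once the setup step has completed.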

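Create the resources

Run the following command:

    kubectl create -f manifests/

However, the kube-state-metrics and prometheus-adapter images are hosted on the k8s.io registry, which cannot be reached from mainland China, so these image pulls fail.

Workarounds:

1. Pull the images yourself through a proxy beforehand
2. Change the image in the YAML manifests to a mirror you can reach

For the second option, edit the two Deployment manifests and set the image lines as follows. Keep the version that is already present in your own YAML file.

    vi manifests/kubeStateMetrics-deployment.yaml
    image: bitnami/kube-state-metrics:2.7.0

    vi manifests/prometheusAdapter-deployment.yaml
    image: cloveropen/prometheus-adapter:v0.10.0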
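The manual edit above can also be scripted. The sketch below rewrites only the `image:` line whose current image name matches, and leaves other containers in the same file alone. The function name `swap_image` is an assumption of this example, and it writes through a temporary file rather than relying on `sed -i`, whose syntax differs between platforms.

```shell
#!/bin/sh
# Replace the image of the container whose current image is NAME:<tag>
# (with any registry prefix) by NEW_IMAGE, leaving other image lines alone.
# Usage: swap_image FILE NAME NEW_IMAGE
swap_image() {
  file=$1 name=$2 new_image=$3
  sed "s|^\([[:space:]-]*image:[[:space:]]*\).*${name}:.*$|\1${new_image}|" "$file" > "$file.tmp" &&
    mv "$file.tmp" "$file"
}
```

With the mirrors named in this walkthrough, the calls would be `swap_image manifests/kubeStateMetrics-deployment.yaml kube-state-metrics bitnami/kube-state-metrics:2.7.0` and `swap_image manifests/prometheusAdapter-deployment.yaml prometheus-adapter cloveropen/prometheus-adapter:v0.10.0`. Check that the tag you pass exists on the mirror.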

Check the final deployment

- 3 alertmanager pods
- 1 blackbox-exporter pod
- 1 grafana pod
- 1 kube-state-metrics pod
- 2 node-exporter pods (one per node)
- 2 prometheus-adapter pods
- 2 prometheus-k8s pods
- 1 prometheus-operator pod, created earlier by the setup step

The pod list:

    [root@k8s-master01 kube-prometheus]# kubectl -n monitoring get pod
    NAME                                   READY   STATUS    RESTARTS   AGE
    alertmanager-main-0                    2/2     Running   0          83s
    alertmanager-main-1                    2/2     Running   0          83s
    alertmanager-main-2                    2/2     Running   0          83s
    blackbox-exporter-55c457d5fb-rzn7l     3/3     Running   0          82s
    grafana-9df57cdc4-tf6qj                1/1     Running   0          82s
    kube-state-metrics-76f6cb7996-27dc2    3/3     Running   0          81s
    node-exporter-7rqfg                    2/2     Running   0          81s
    node-exporter-b5pnx                    2/2     Running   0          81s
    prometheus-adapter-59df95d9f5-28n4c    1/1     Running   0          81s
    prometheus-adapter-59df95d9f5-glwk7    1/1     Running   0          81s
    prometheus-k8s-0                       2/2     Running   1          81s
    prometheus-k8s-1                       2/2     Running   1          81s
    prometheus-operator-7775c66ccf-hkmpr   2/2     Running   0          44m
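Reading the READY column by eye gets tedious on larger clusters. A small filter over the same output can list only the pods that still need attention. The function name `not_ready_pods` is an assumption of this example, and it expects the default column layout shown above, including the header line.

```shell
#!/bin/sh
# Read `kubectl get pod` output on stdin and print every pod that is not in
# status Running with all of its containers ready.
# Exit status is 1 if at least one such pod was found, 0 otherwise.
not_ready_pods() {
  awk 'NR > 1 {
         split($2, r, "/")                 # READY column, e.g. "2/2"
         if ($3 != "Running" || r[1] != r[2]) { print $1; bad = 1 }
       }
       END { exit bad }'
}
```

A typical call would be `kubectl -n monitoring get pod | not_ready_pods`. Run against the listing above it prints nothing and exits with status 0.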

Command to remove everything

    kubectl delete --ignore-not-found=true -f manifests/ -f manifests/setup

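Accessing the deployment

Change the prometheus-k8s Service to type NodePort (for a LoadBalancer, adapt this to your cloud provider or product)

- Run kubectl edit svc -n monitoring prometheus-k8s
- Set type: NodePort
- Set nodePort: 6090. This value lies outside the default NodePort range of 30000-32767, so the API server accepts it only if its --service-node-port-range has been widened to include it; otherwise pick a port inside the default range

The edited spec:

    spec:
      clusterIP: 10.96.200.87
      clusterIPs:
      - 10.96.200.87
      externalTrafficPolicy: Cluster
      ports:
      - name: web
        nodePort: 6090
        port: 9090
        protocol: TCP
        targetPort: web
      selector:
        app: prometheus
        app.kubernetes.io/component: prometheus
        app.kubernetes.io/name: prometheus
        app.kubernetes.io/part-of: kube-prometheus
        prometheus: k8s
      sessionAffinity: ClientIP
      sessionAffinityConfig:
        clientIP:
          timeoutSeconds: 10800
      type: NodePort

Open port 6090 of any node in a browser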

grafana 的svc改为nodePort型

-

kubectl edit svc -n monitoring grafana

-

type: NodePort

-

nodePort: 3003

-

spec:

clusterIP: 10.96.171.57

clusterIPs:

- 10.96.171.57

externalTrafficPolicy: Cluster

ports:

- name: http

nodePort: 3003

port: 3000

protocol: TCP

targetPort: http

selector:

app.kubernetes.io/component: grafana

app.kubernetes.io/name: grafana

app.kubernetes.io/part-of: kube-prometheus

sessionAffinity: None

type: NodePort

浏览器访问节点 的3003端口

-

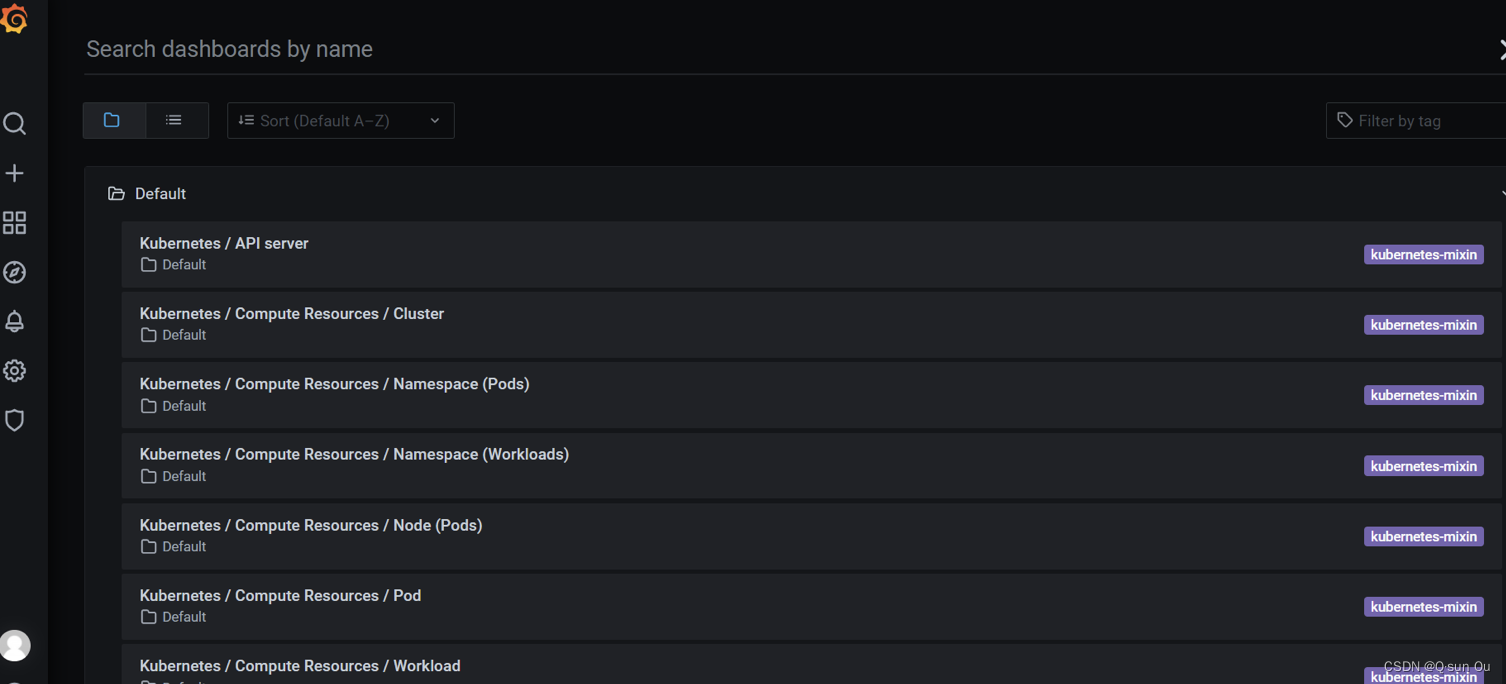

内置的dashboard查看,人家都帮你弄好了超爽
