Deploying the RuoYi Microservices (ruoyi-cloud) with k8s v1.33.4 on Ubuntu 22.04.5, Using containerd 1.7.13 as the Container Runtime

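Abstract: This article walks through the complete process of deploying the RuoYi microservices system on a Kubernetes cluster. It covers: cluster planning (3 nodes, 1 master and 2 workers, plus 1 Harbor registry node); environment preparation (Ubuntu configuration, network settings, time-zone and time synchronization); Kubernetes cluster deployment (containerd runtime installation, kubeadm cluster initialization, Calico network plugin configuration); setting up and configuring the Harbor private registry; NF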

I. Host Planning

| Hostname | IP address | Version | OS | Role | Container runtime |
| --- | --- | --- | --- | --- | --- |
| K8s-master01 | 192.168.8.10 | v1.33.4 | Ubuntu 22.04.5 LTS | Control-plane node | containerd://1.7.13 |
| worker02 | 192.168.8.20 | v1.33.4 | Ubuntu 22.04.5 LTS | Worker node | containerd://1.7.13 |
| worker03 | 192.168.8.30 | v1.33.4 | Ubuntu 22.04.5 LTS | Worker node | containerd://1.7.13 |
| k8snfs, Harbor | 192.168.8.40 | Harbor registry v2.13.1 | Ubuntu 22.04.5 LTS | NFS-storage | Docker-26 |

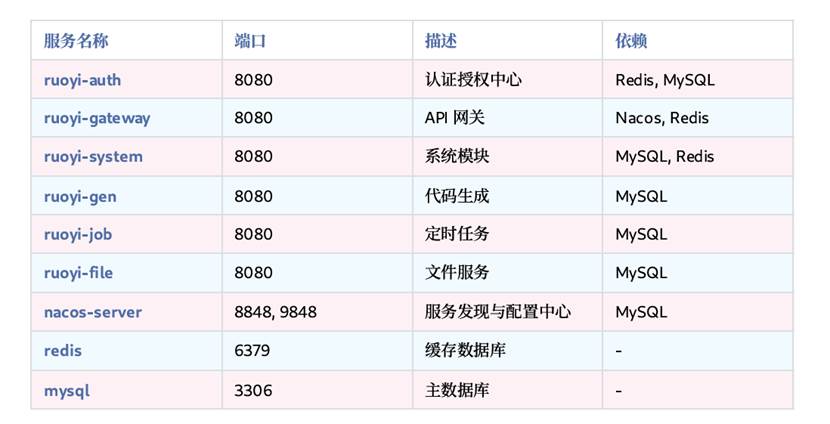

RuoYi-Cloud consists mainly of the following components; each one is deployed as its own K8s Deployment and Service.

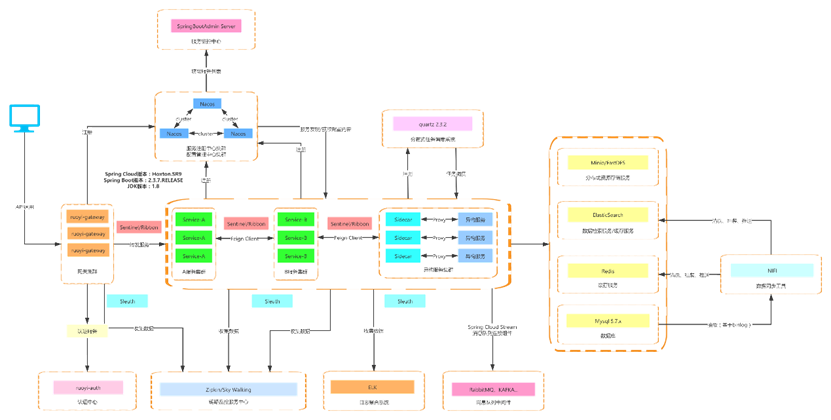

Architecture diagram

Backend structure

com.ruoyi
├── ruoyi-ui                         // front-end framework [80]
├── ruoyi-gateway                    // gateway module [8080]
├── ruoyi-auth                       // authentication center [9200]
├── ruoyi-api                        // API module
│   └── ruoyi-api-system             // system API
├── ruoyi-common                     // common modules
│   └── ruoyi-common-core            // core module
│   └── ruoyi-common-datascope       // data permission scope
│   └── ruoyi-common-datasource      // multiple data sources
│   └── ruoyi-common-log             // logging
│   └── ruoyi-common-redis           // cache service
│   └── ruoyi-common-seata           // distributed transactions
│   └── ruoyi-common-security        // security module
│   └── ruoyi-common-sensitive       // data masking
│   └── ruoyi-common-swagger         // system API
├── ruoyi-modules                    // business modules
│   └── ruoyi-system                 // system module [9201]
│   └── ruoyi-gen                    // code generation [9202]
│   └── ruoyi-job                    // scheduled jobs [9203]
│   └── ruoyi-file                   // file service [9300]
├── ruoyi-visual                     // visual management modules
│   └── ruoyi-visual-monitor         // monitoring center [9100]
├──pom.xml                           // shared dependencies

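Frontend structure

├── build                      // build scripts
├── bin                        // executable scripts
├── public                     // public files
│   ├── favicon.ico            // favicon
│   └── index.html             // HTML template
├── src                        // source code
│   ├── api                    // all API requests
│   ├── assets                 // static assets such as themes and fonts
│   ├── components             // global shared components
│   ├── directive              // global directives
│   ├── layout                 // layout
│   ├── plugins                // common methods
│   ├── router                 // routing
│   ├── store                  // global store management
│   ├── utils                  // global utility methods
│   ├── views                  // views
│   ├── App.vue                // entry page
│   ├── main.js                // entry point: loads components, initialization, etc.
│   ├── permission.js          // permission management
│   └── settings.js            // system settings
├── .editorconfig              // editor encoding format
├── .env.development           // development environment configuration
├── .env.production            // production environment configuration
├── .env.staging               // test environment configuration
├── .eslintignore              // paths ignored by lint checks
├── .eslintrc.js               // eslint configuration
├── .gitignore                 // git ignore rules
├── babel.config.js            // babel.config.js
├── package.json               // package.json
└── vue.config.js              // vue.config.js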

II. Basic Environment Preparation

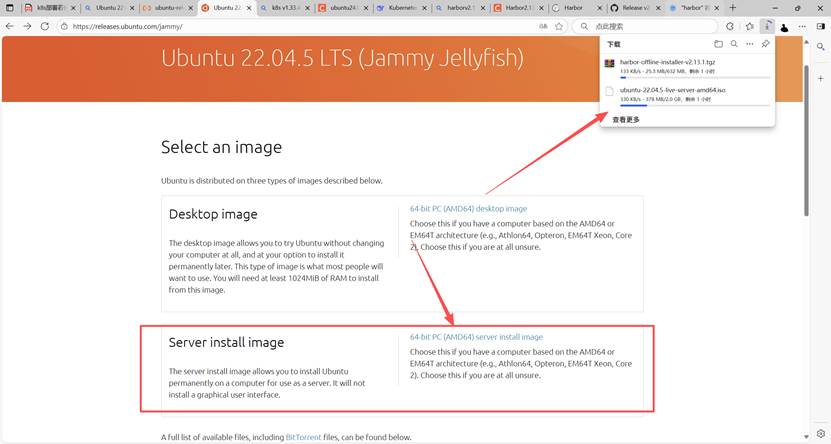

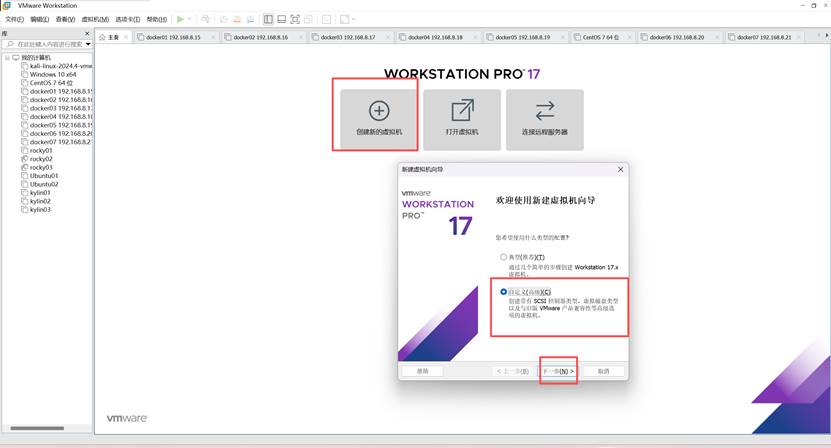

1. Prepare the Ubuntu 22.04.5 image

https://releases.ubuntu.com/jammy/

or

https://mirrors.aliyun.com/ubuntu-releases/22.04.5/

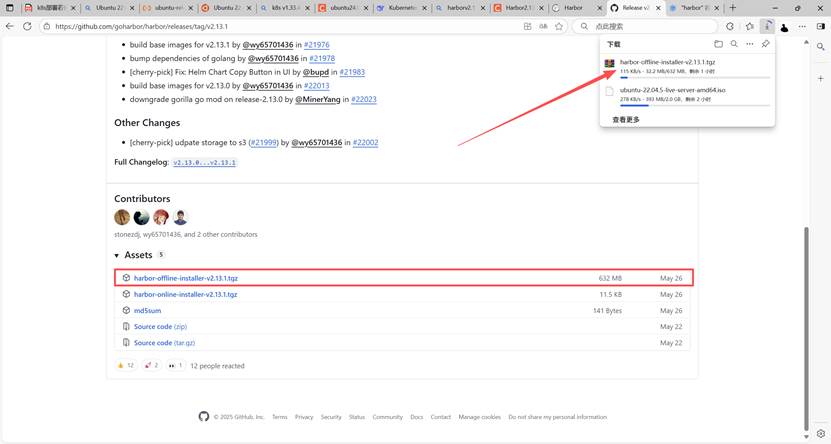

2. Prepare the Harbor registry package

https://github.com/goharbor/harbor/releases/tag/v2.13.1

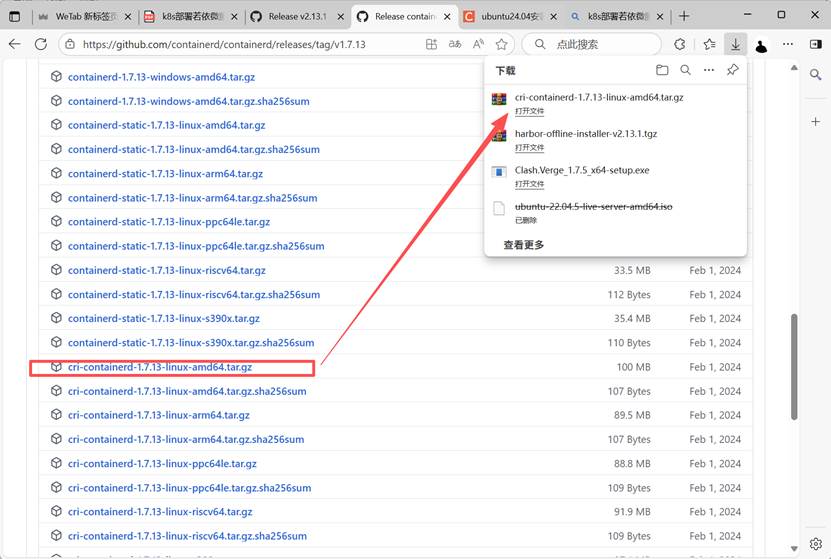

3. Prepare the containerd files

https://github.com/containerd/containerd/releases/tag/v1.7.13

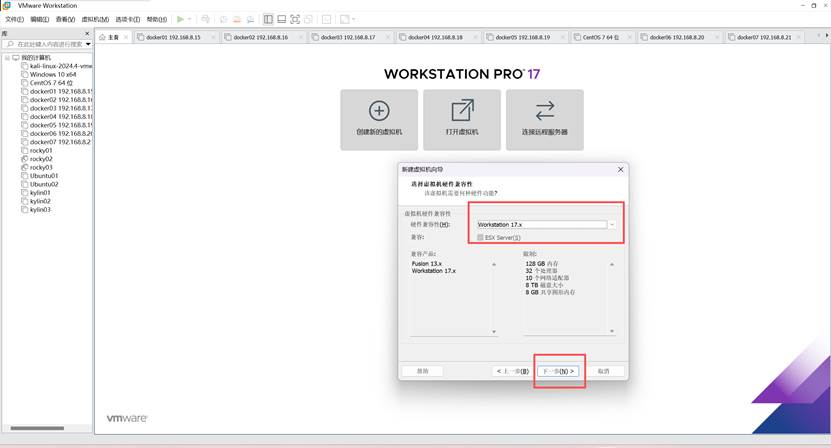

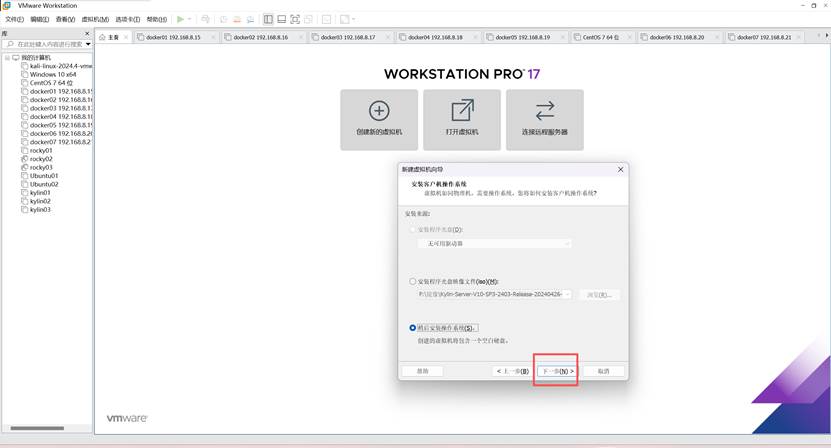

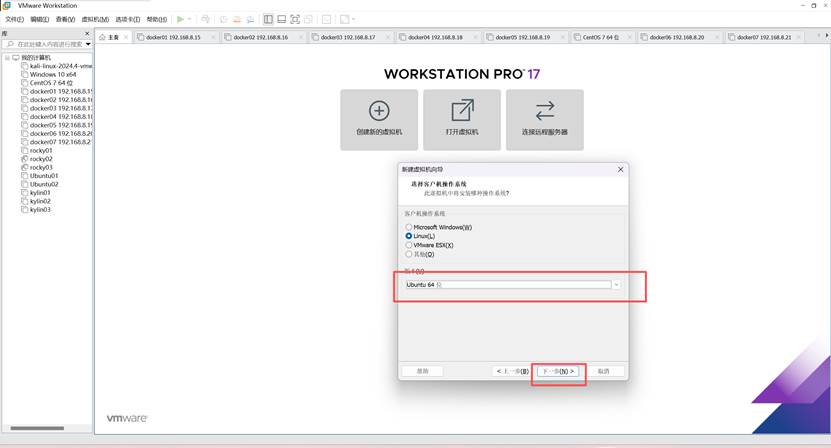

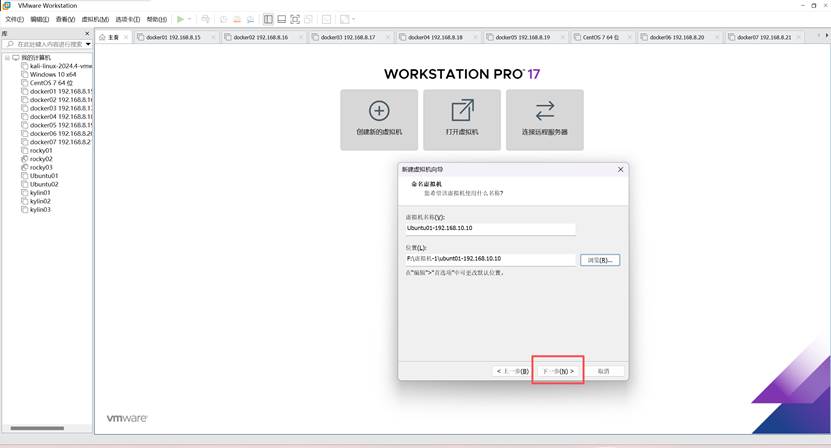

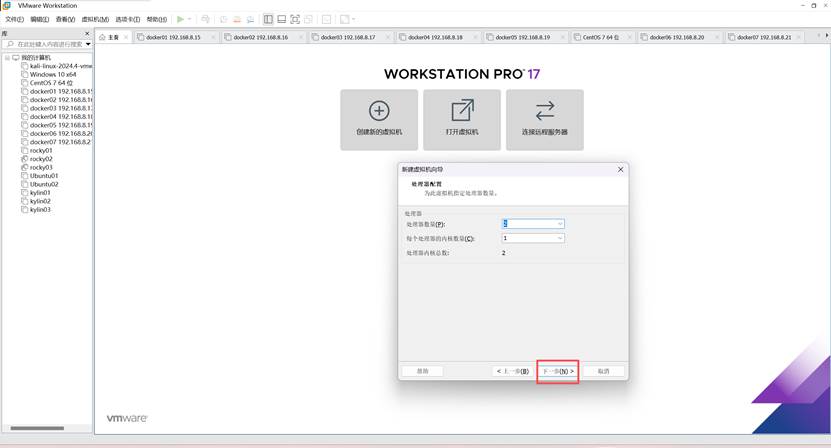

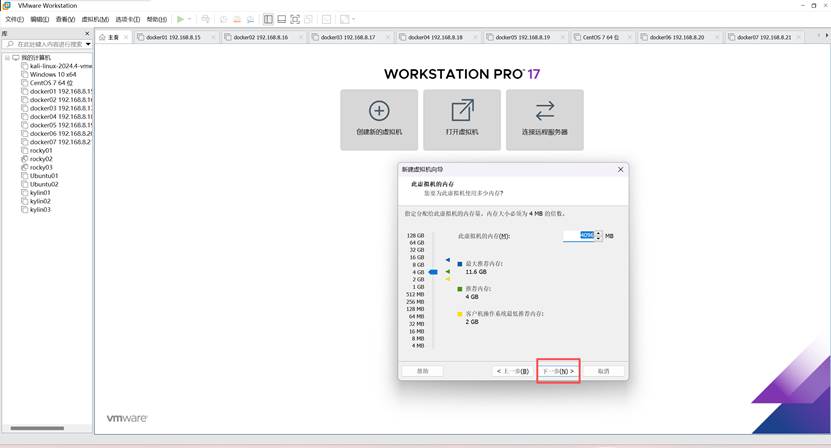

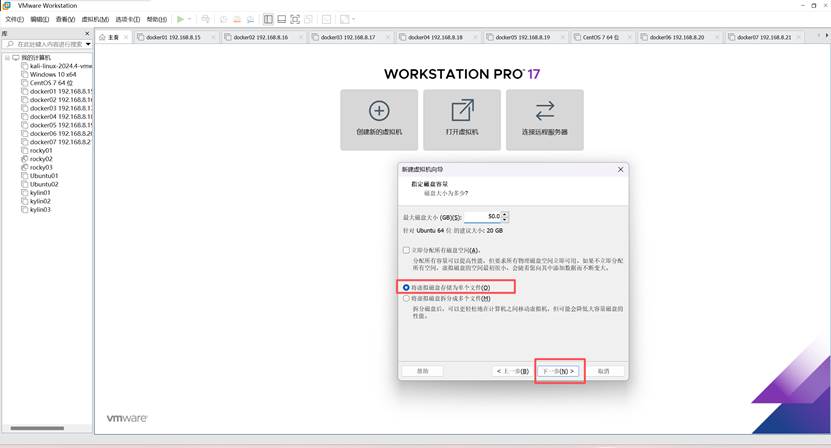

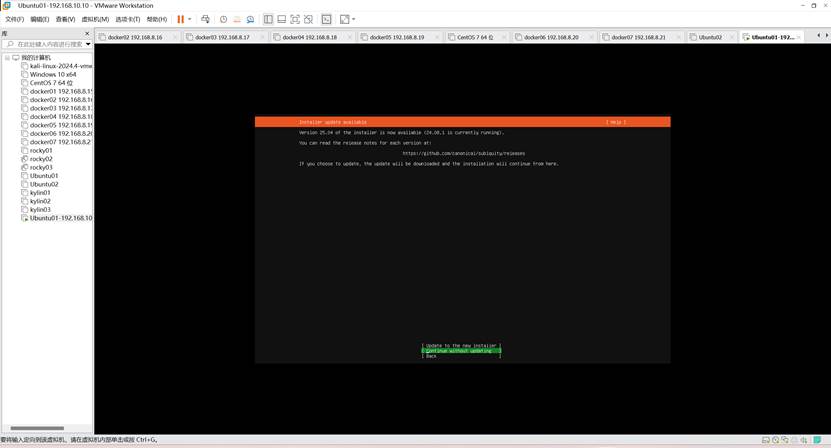

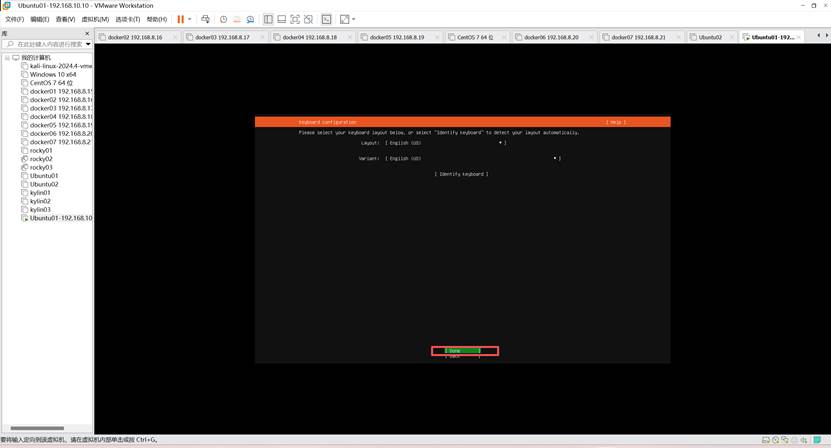

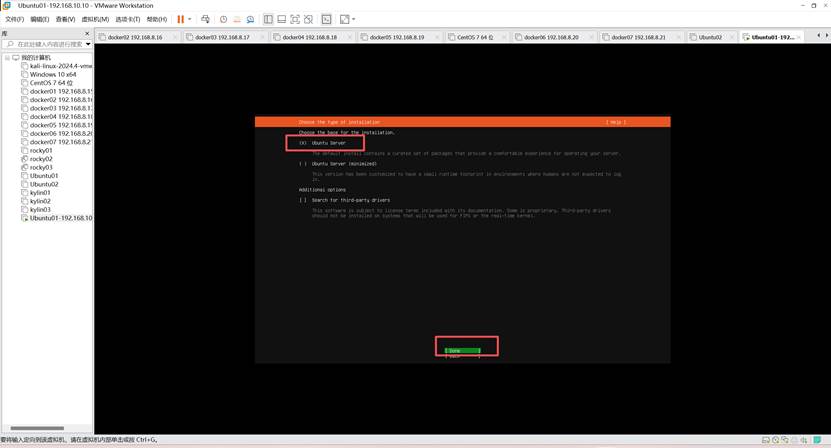

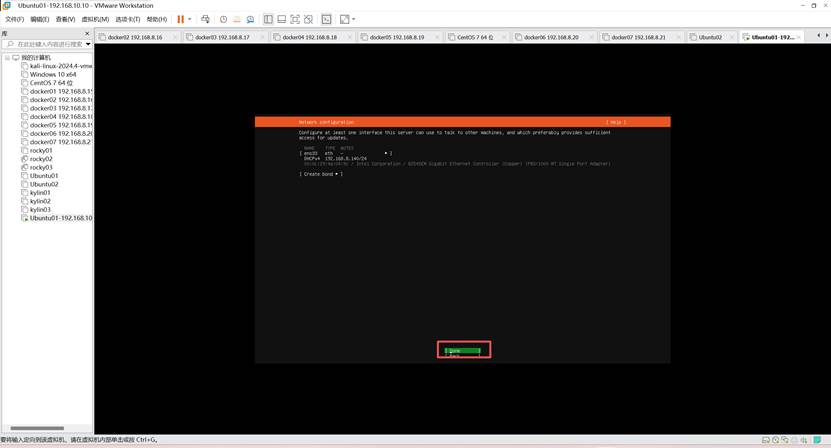

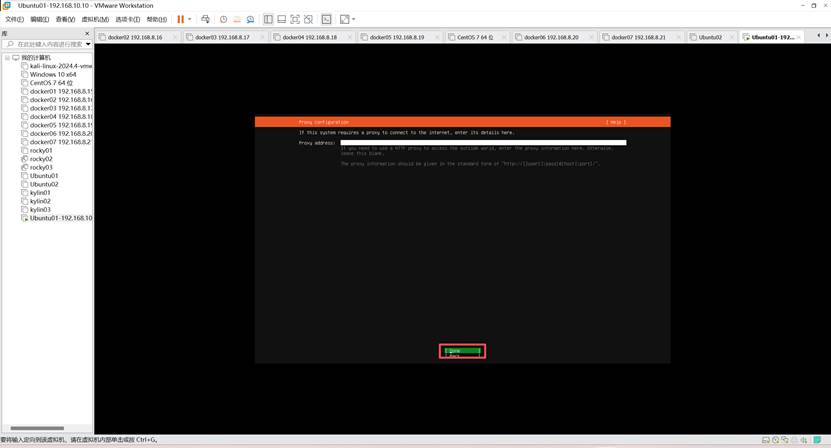

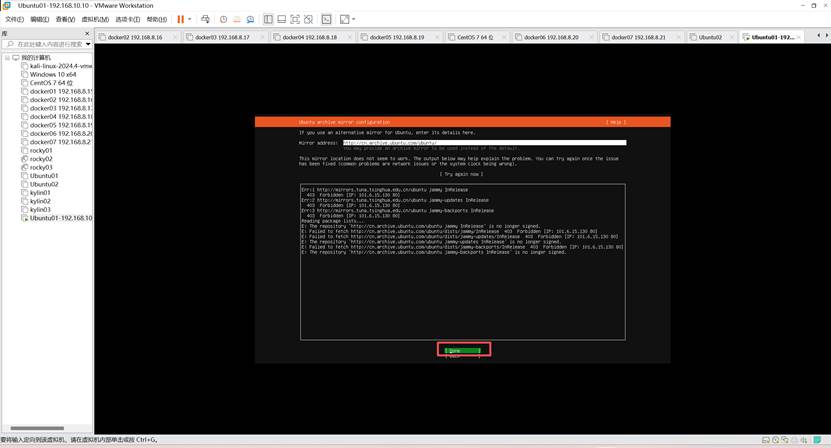

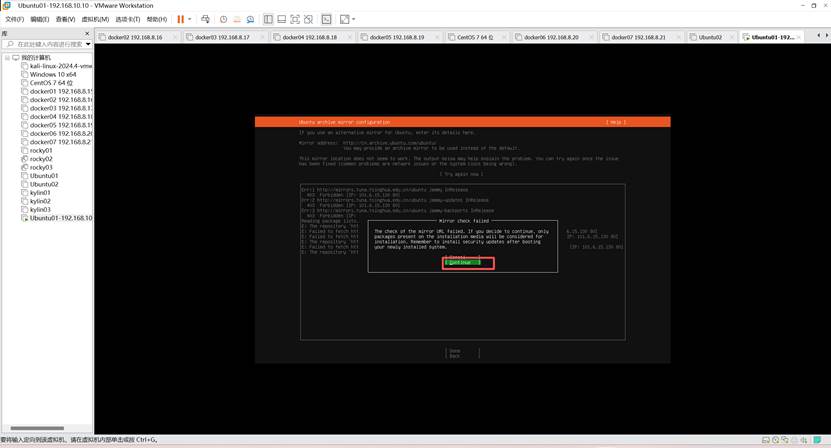

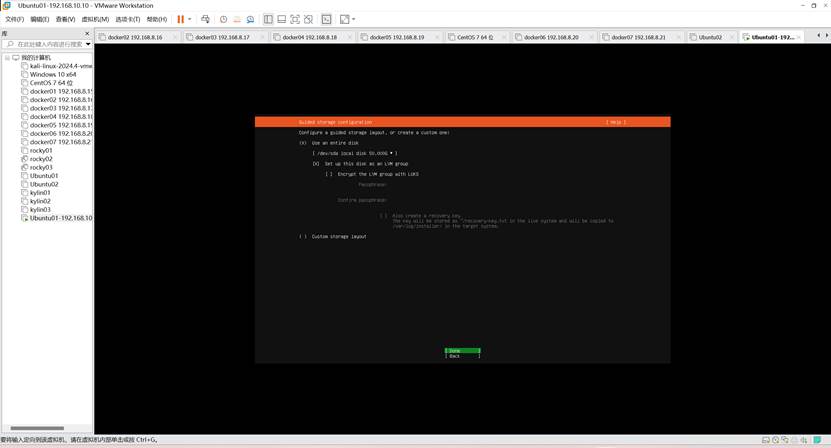

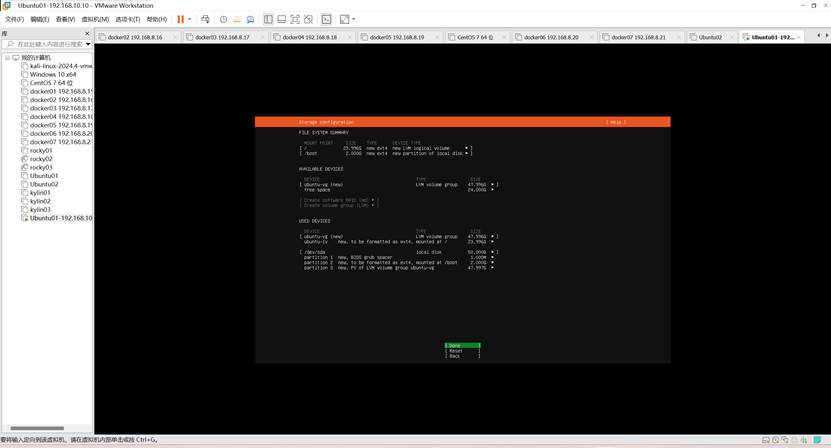

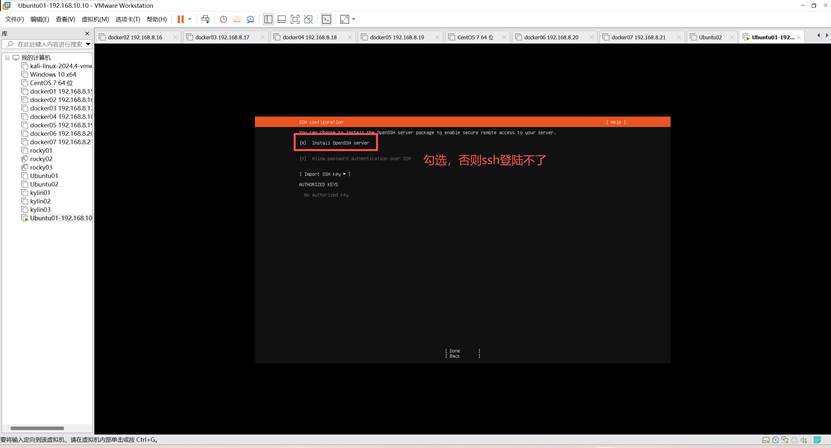

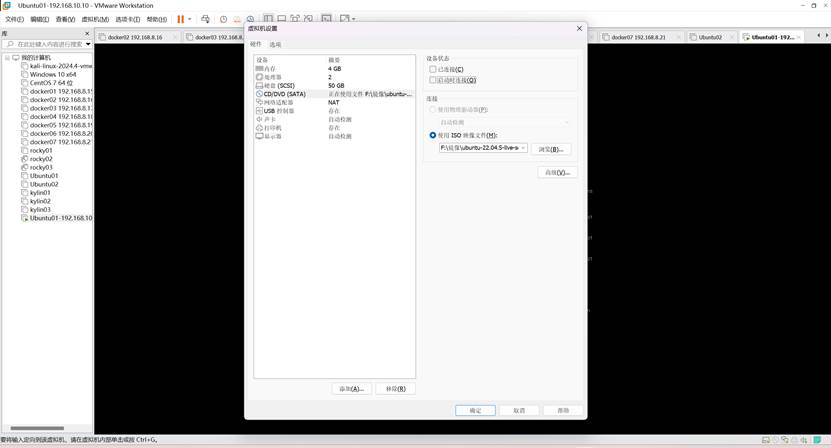

4、安装ubuntu22.04.5

下一步-下一步-下一步-下一步

下一步-完成

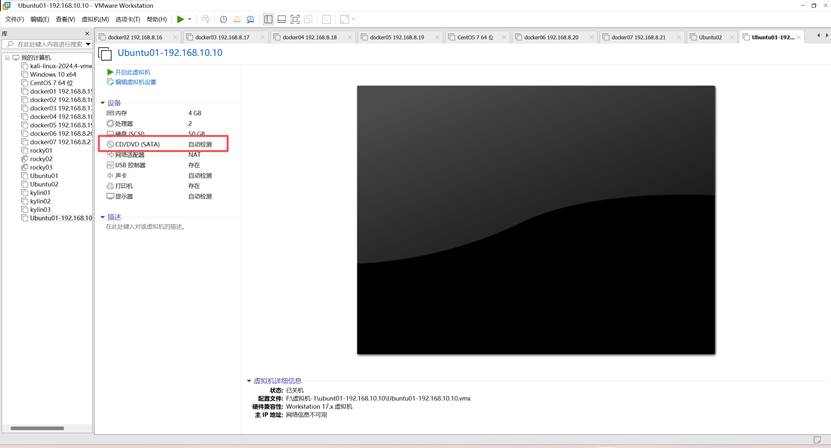

修改镜像

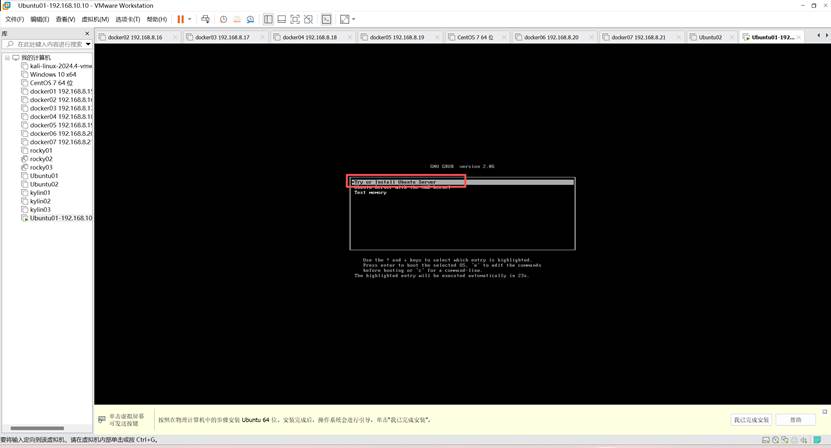

开机

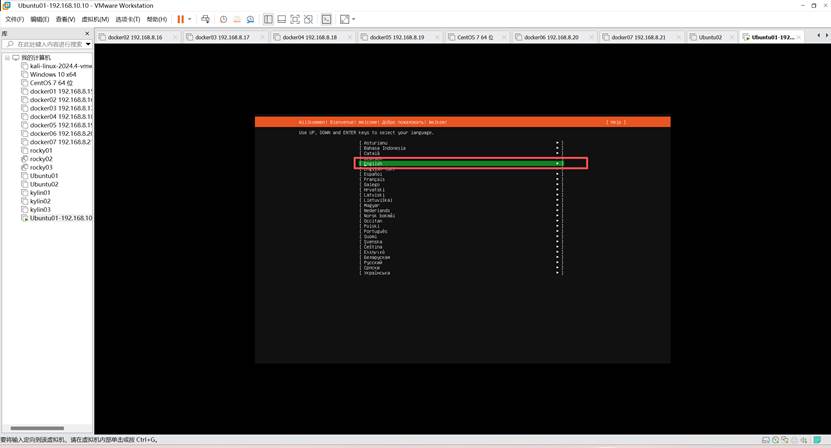

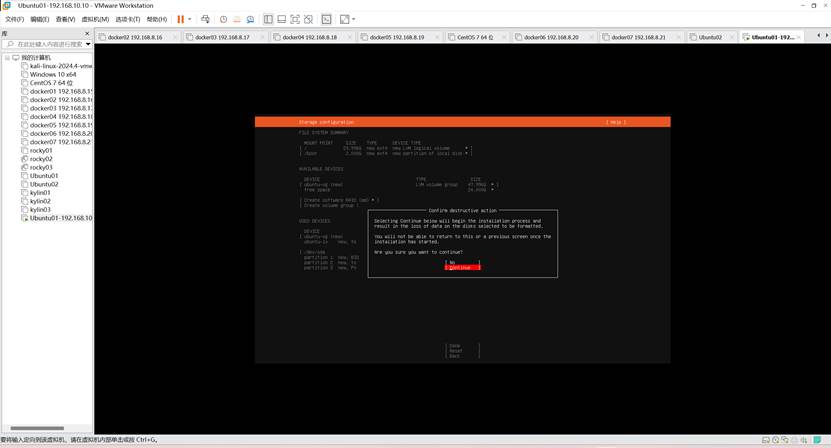

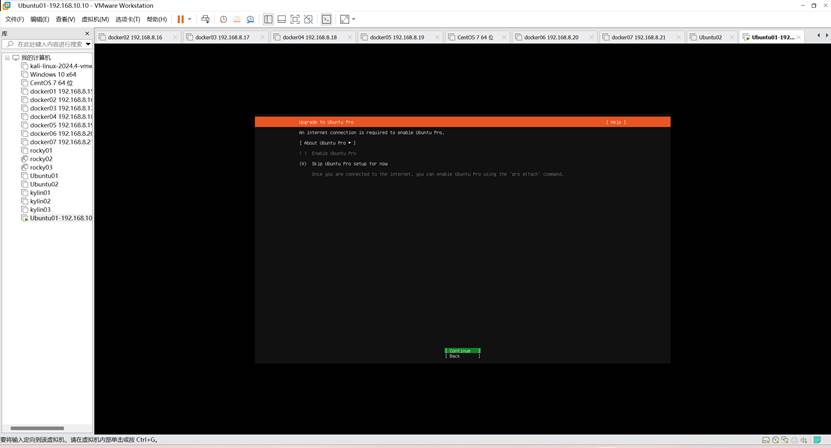

- 安装过程

安装过程除了选定“安装openssh server”外,其他过程基本按默认方式处理即可

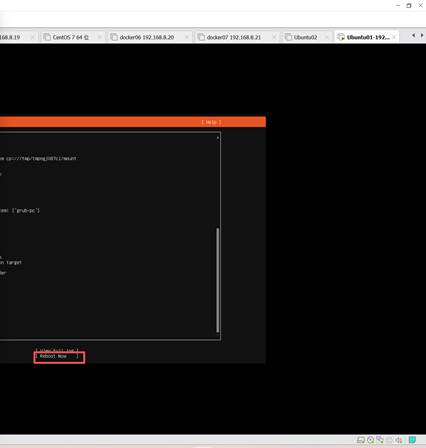

安装过程很快,要求重启电脑,重启之前要断开光盘连接

安装过程很快,要求重启电脑,重启之前要断开光盘连接

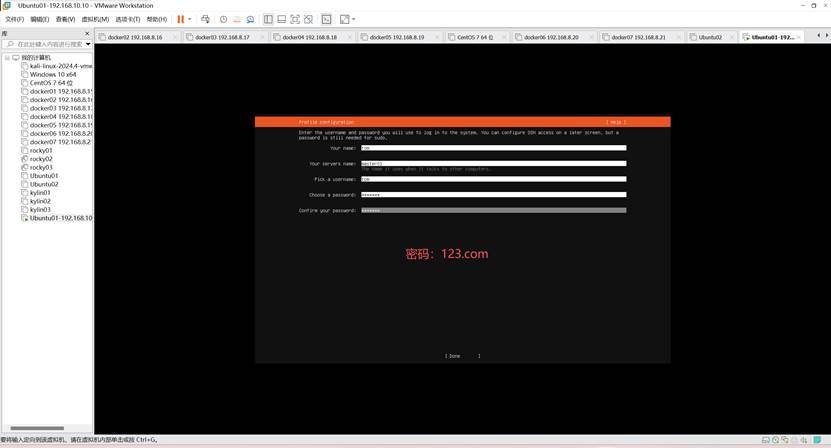

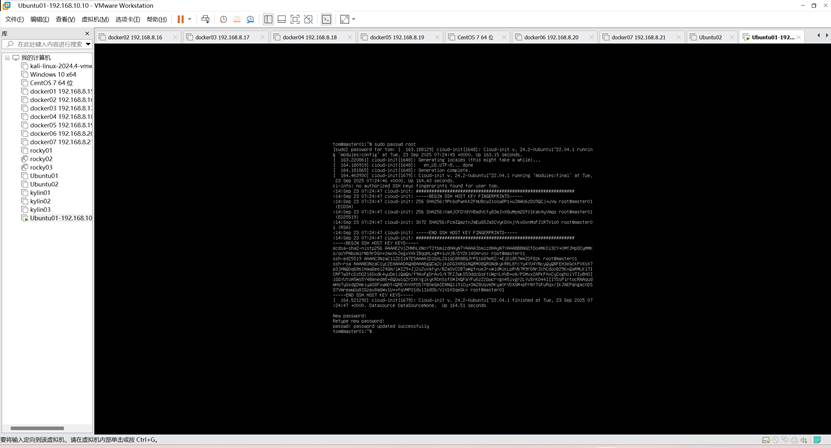

- 用户登录

以普通用户身份登录,可以给root用户配置密码后激活root用户账号

登录我创建的tom

sudo passwd root

su – root

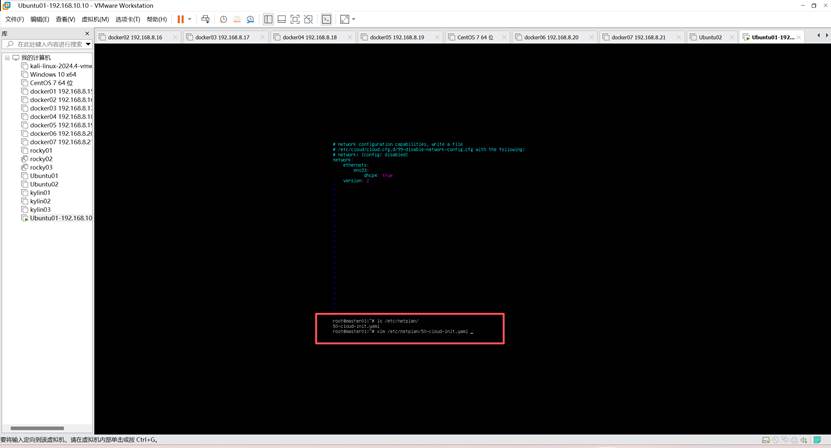

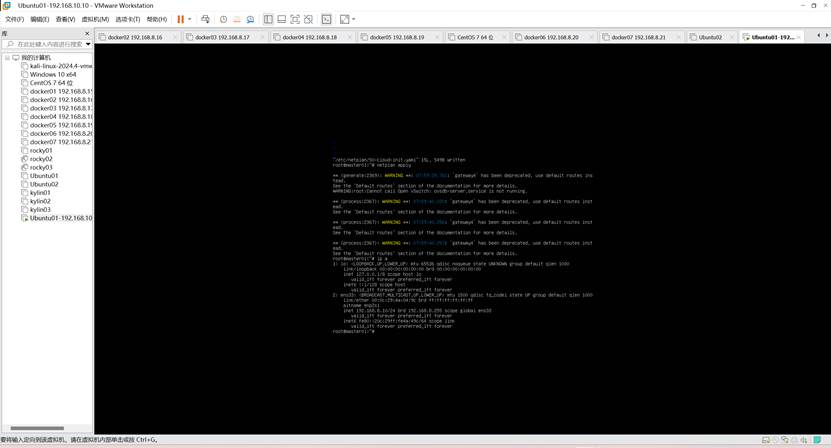

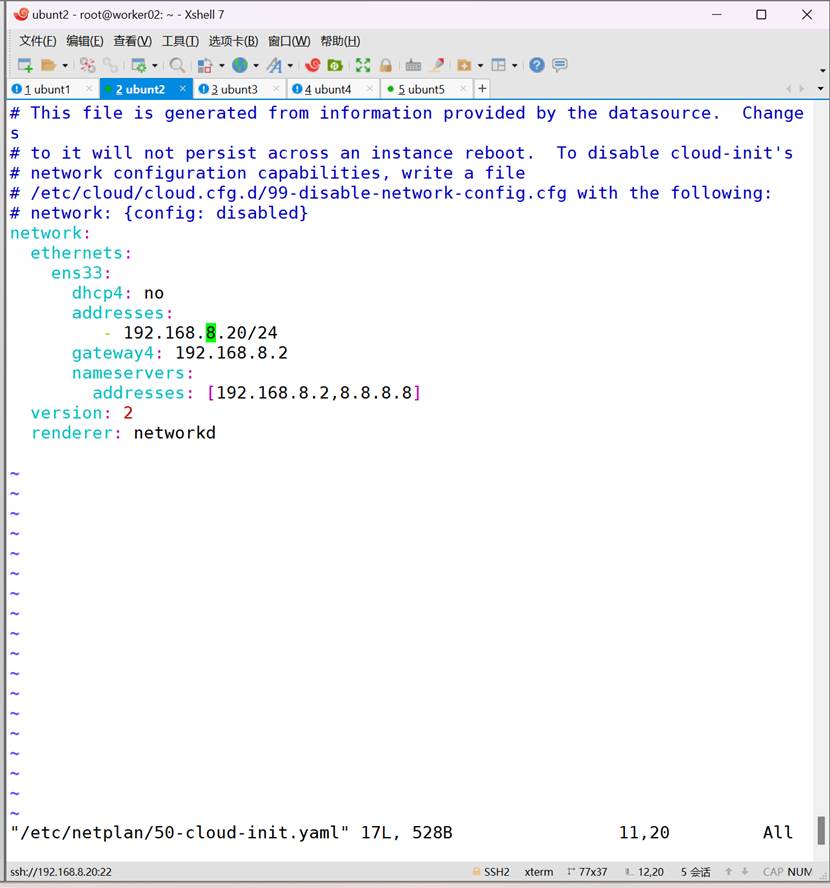

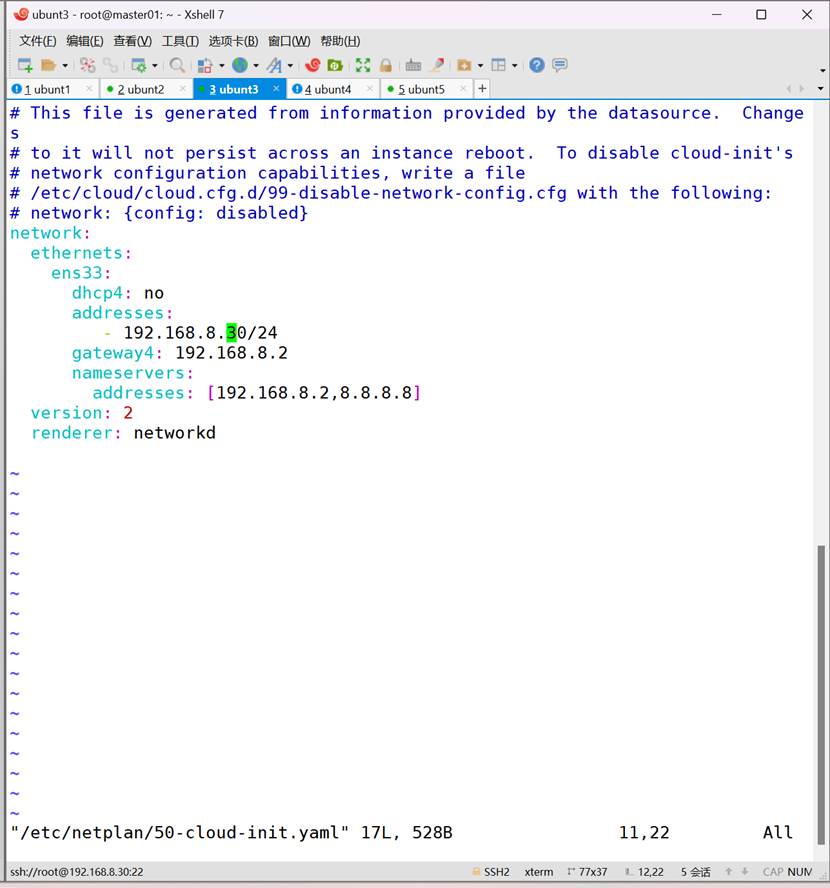

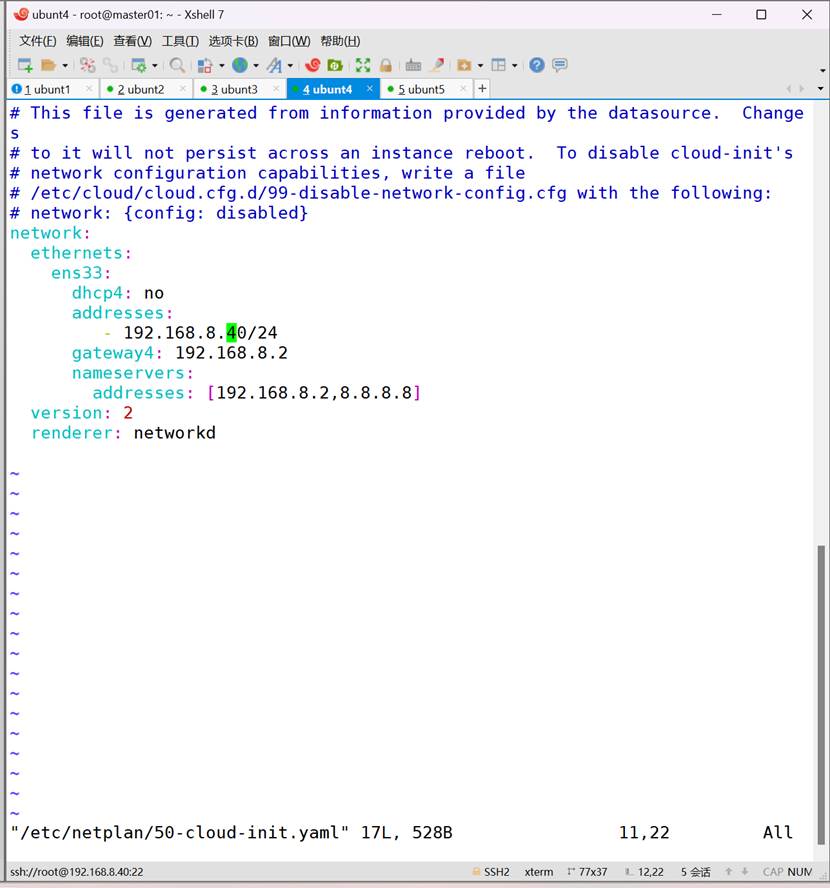

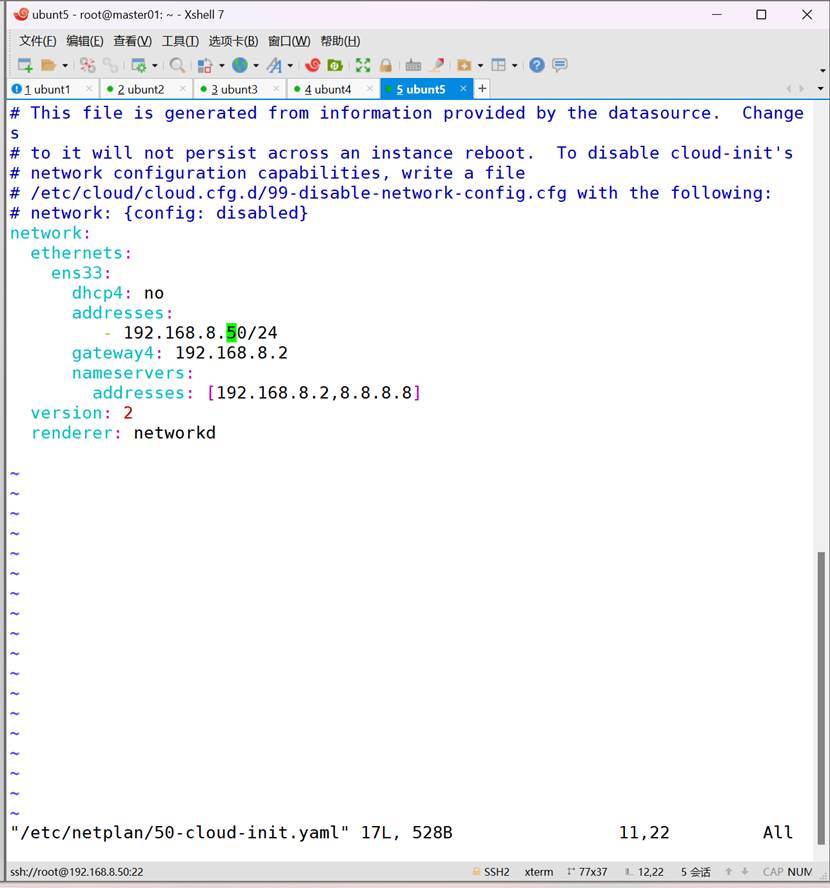

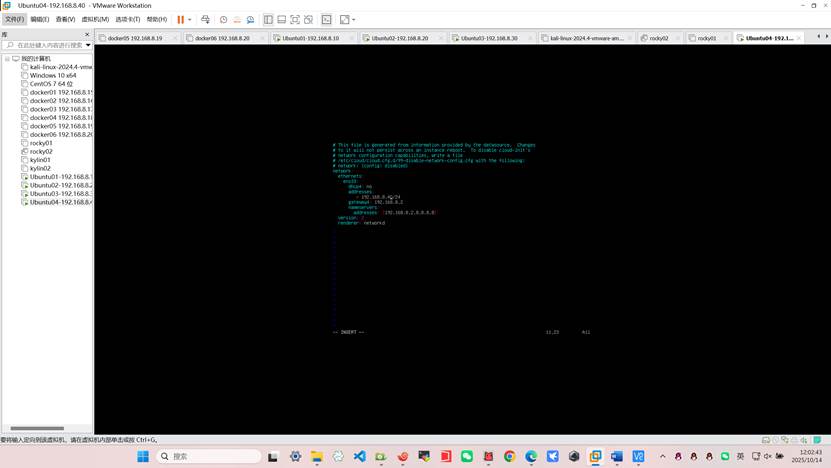

ls /etc/netplan

vim /etc/netplan/50-cloud-init.yaml

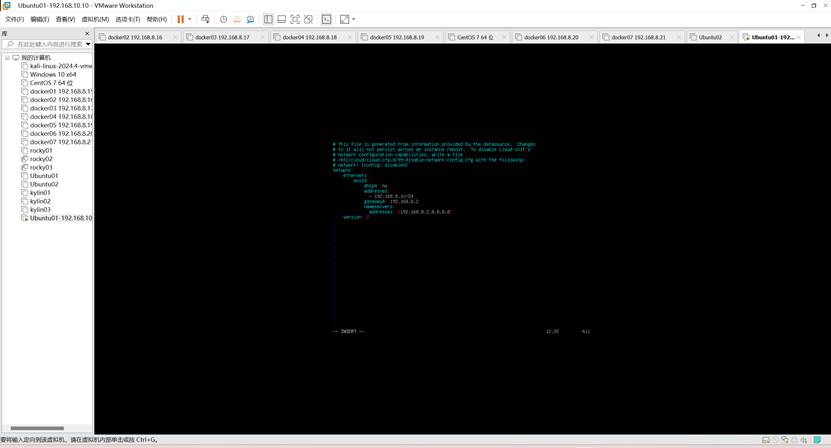

network:
  ethernets:
    ens33:
      dhcp4: no
      addresses:
        - 192.168.8.10/24
      gateway4: 192.168.8.2
      nameservers:
        addresses: [192.168.8.2,8.8.8.8]
  version: 2
  renderer: networkd

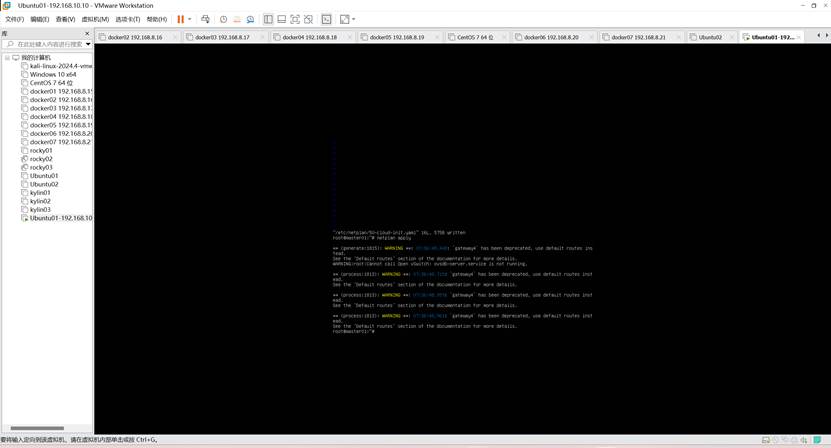

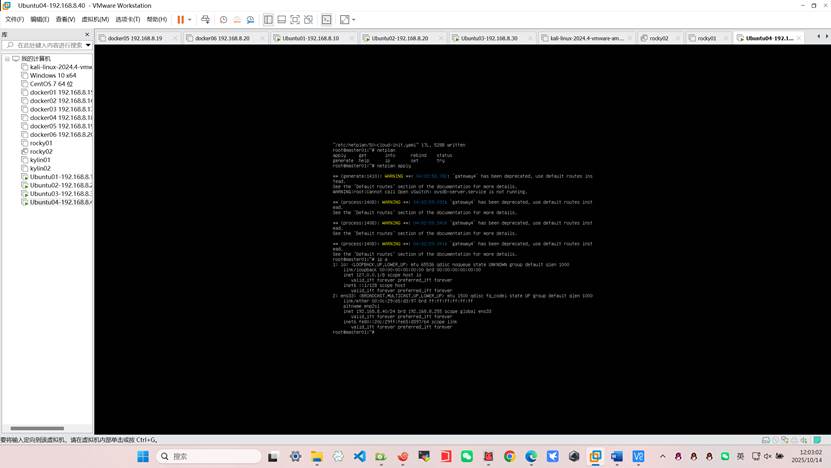

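Apply the network configuration.

First disable cloud-init's network management; without this, the static IP is lost on every reboot:

echo "network: {config: disabled}" > /etc/cloud/cloud.cfg.d/99-disable-network-config.cfg

netplan apply

Check the network configuration: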

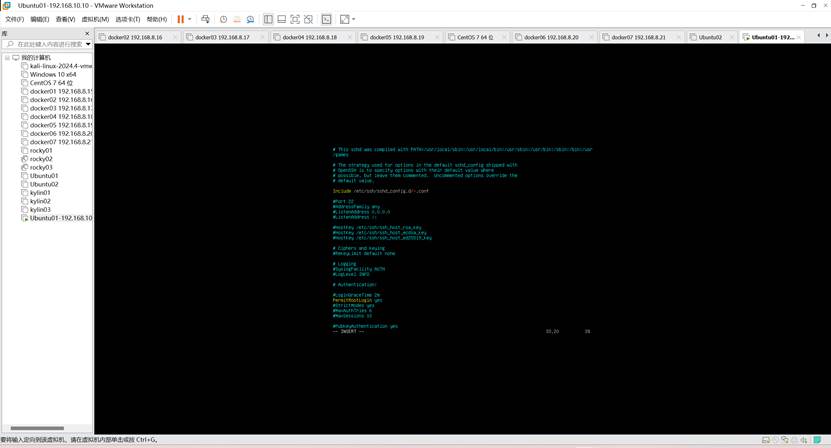

- Enable remote root login (optional)

vim /etc/ssh/sshd_config

PermitRootLogin yes

Restart the sshd service:

systemctl restart sshd

Connect with Xshell

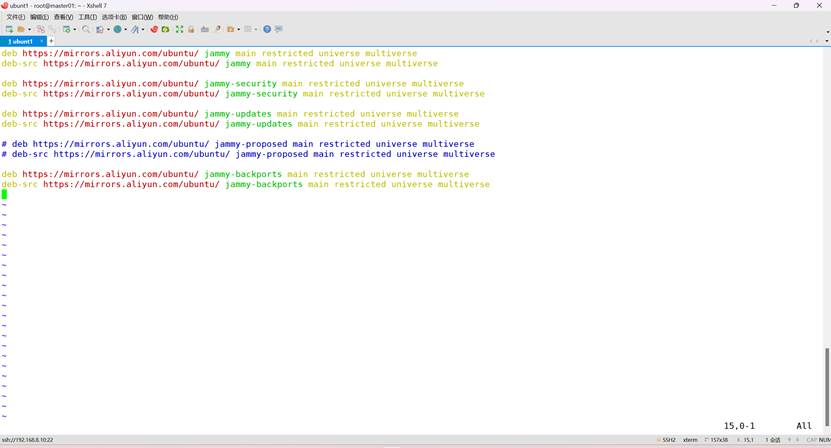

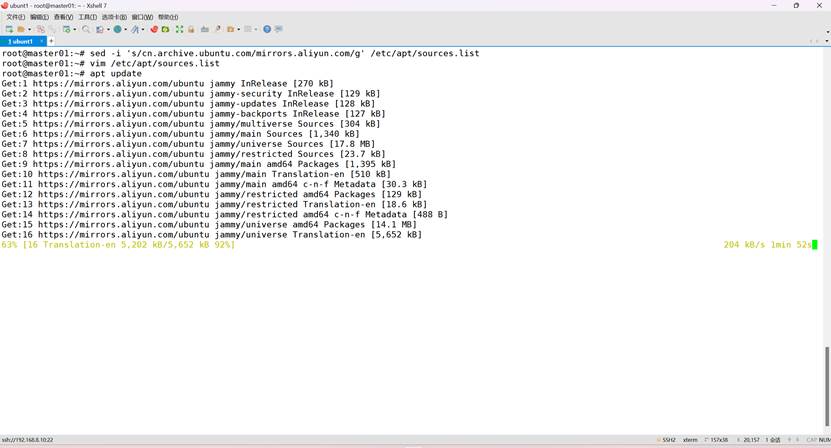

Configure the apt sources

To change them manually, open /etc/apt/sources.list in your preferred editor and replace the default

http://archive.ubuntu.com/

with

https://mirrors.aliyun.com/

root@k8s-master01:~# vim /etc/apt/sources.list

Change the contents to:

deb https://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse

deb-src https://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse

deb https://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

deb-src https://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse

deb https://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse

deb-src https://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse

# deb https://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse

# deb-src https://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse

deb https://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse

deb-src https://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse

更新apt

root@k8s-master01:~# apt update

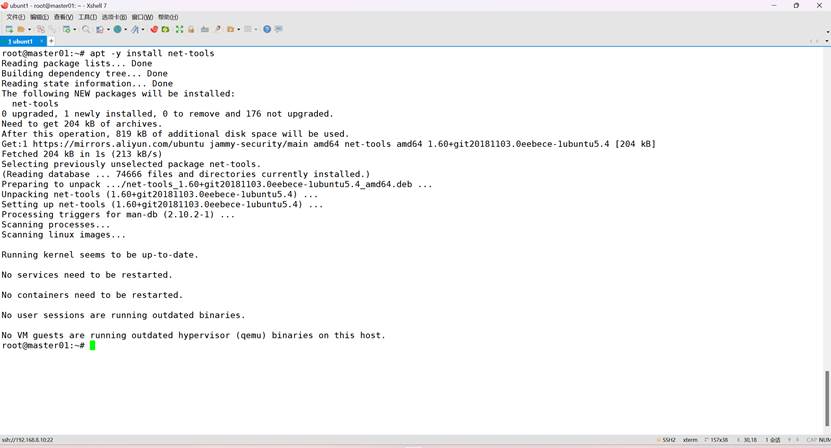

安装net-tools工具

root@k8s-master01:~# apt -y install net-tools

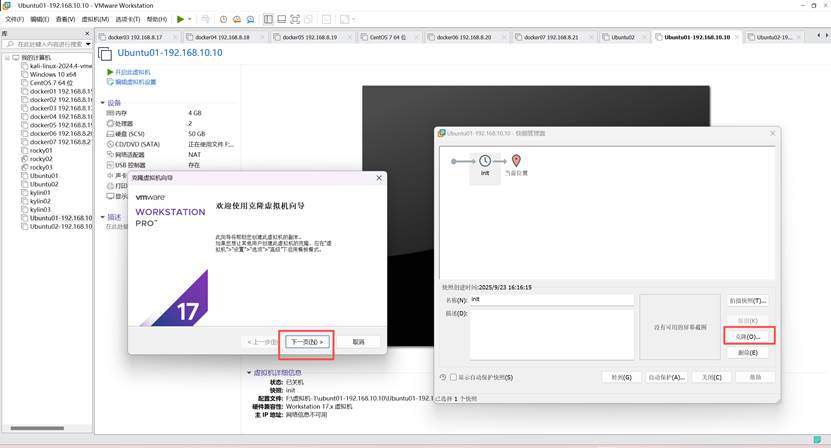

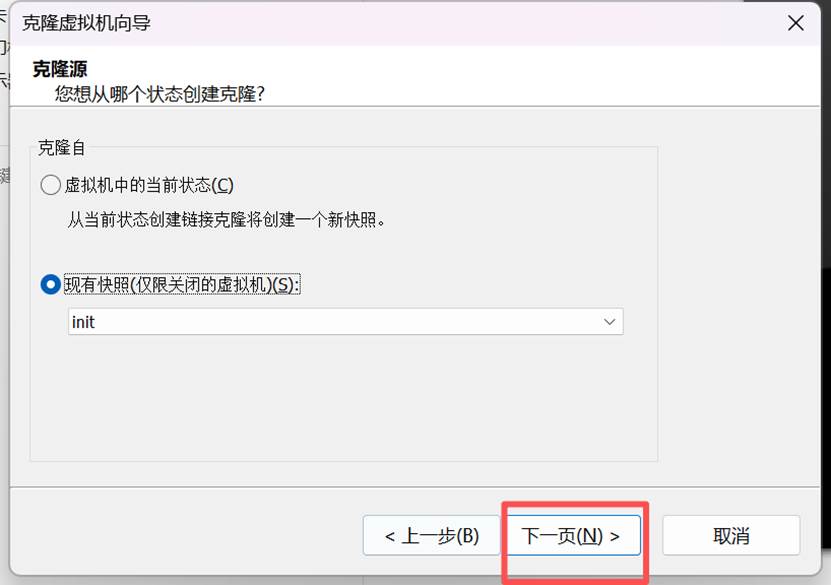

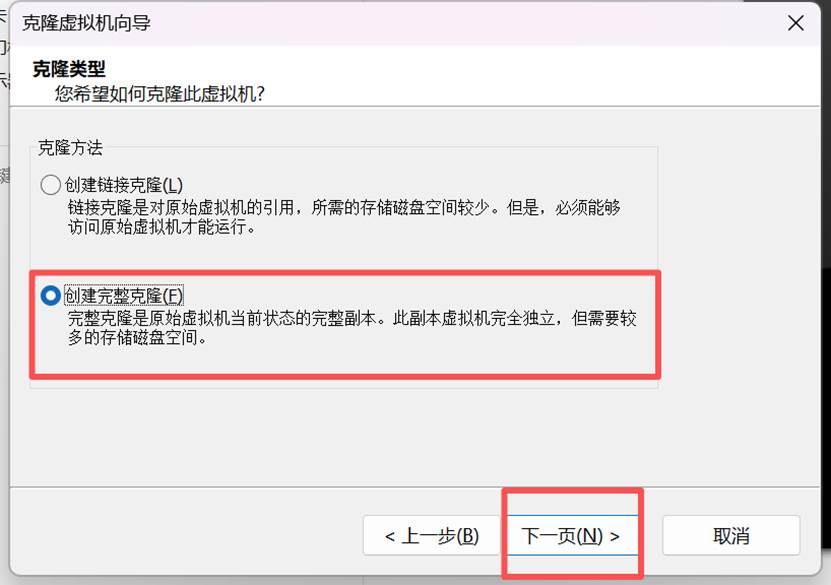

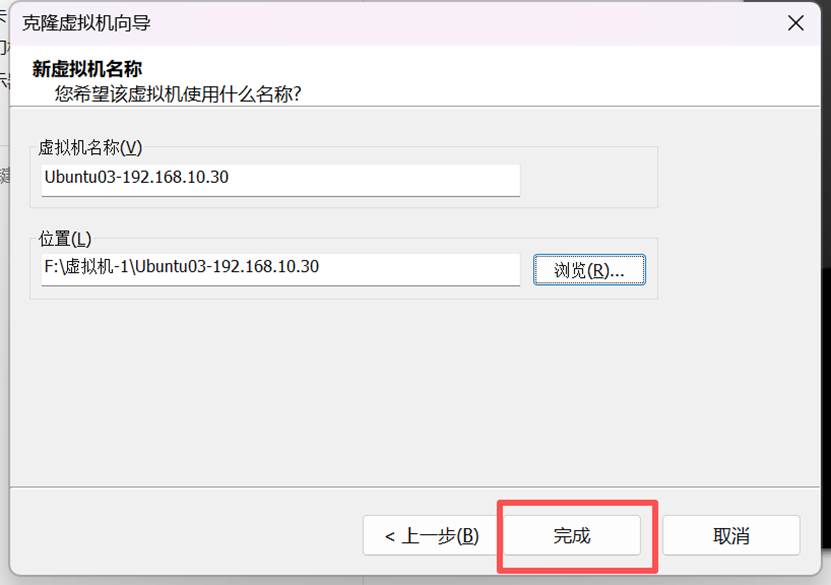

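Take a snapshot and clone four machines from it.

Perform the same steps on all four machines.

Configure the IP address on each of the four.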

III. Configure the K8s Cluster Environment

1. Set the hostnames and edit the hosts file

root@k8s-master01:~# hostnamectl set-hostname k8s-k8s-master01

root@k8s-master01:~# bash

root@k8s-worker01:~# hostnamectl set-hostname k8s-worker01

root@worker02:~# bash

root@k8s-worker02:~# hostnamectl set-hostname k8s-worker02

root@k8s-worker02:~# bash

root@harbor:~# hostnamectl set-hostname harbor

root@harbor:~# bash

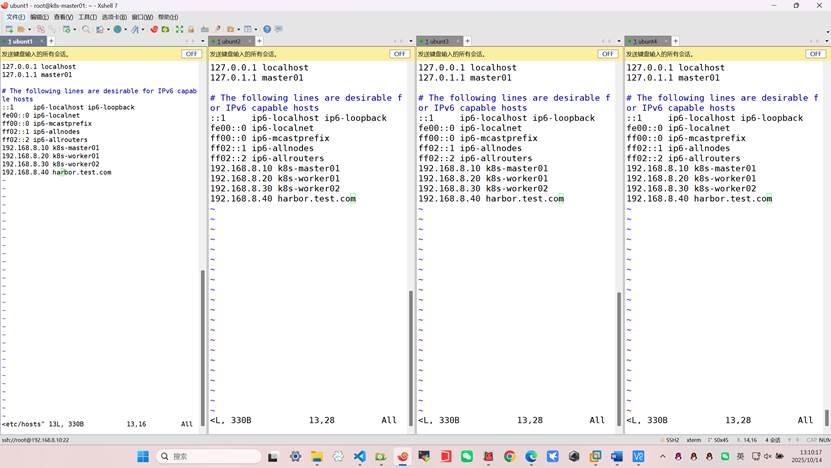

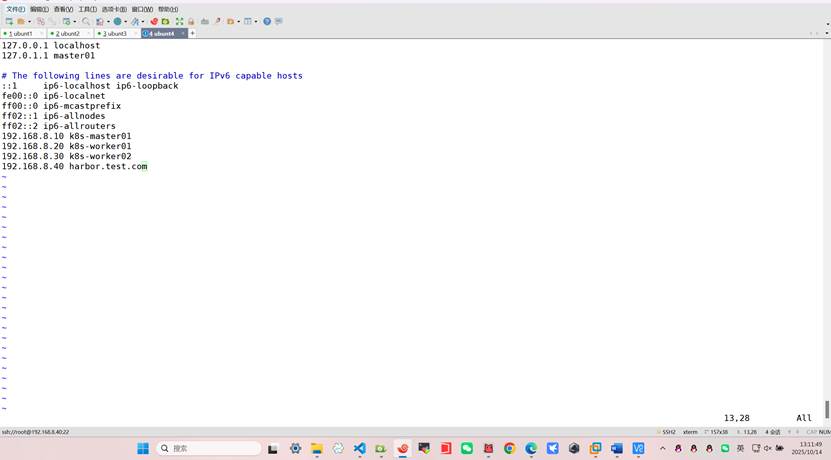

Add the following entries to /etc/hosts on all four machines:

root@all-hosts:~# vim /etc/hosts

192.168.8.10 k8s-master01

192.168.8.20 k8s-worker01

192.168.8.30 k8s-worker02

192.168.8.40 harbor.test.com
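Because the same four entries go onto every machine, editing each file by hand invites duplicates and typos. The following is a minimal sketch, assuming a hypothetical helper named `add_host_entry` (it is not part of the original steps), that appends an entry only when the host name is not already present. The demo writes to a scratch file rather than to /etc/hosts.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME (hypothetical helper): append "IP NAME" to FILE
# unless NAME already appears as a host name on a non-comment line.
add_host_entry() {
    file=$1; ip=$2; name=$3
    if ! awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Demo on a scratch file; pass /etc/hosts instead on a real node (as root).
hosts_file=$(mktemp)
for pass in 1 2; do   # the second pass adds nothing
    add_host_entry "$hosts_file" 192.168.8.10 k8s-master01
    add_host_entry "$hosts_file" 192.168.8.20 k8s-worker01
    add_host_entry "$hosts_file" 192.168.8.30 k8s-worker02
    add_host_entry "$hosts_file" 192.168.8.40 harbor.test.com
done
cat "$hosts_file"
```

Running the loop twice is only there to show that the helper is idempotent; on a real host a single pass against /etc/hosts is enough.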

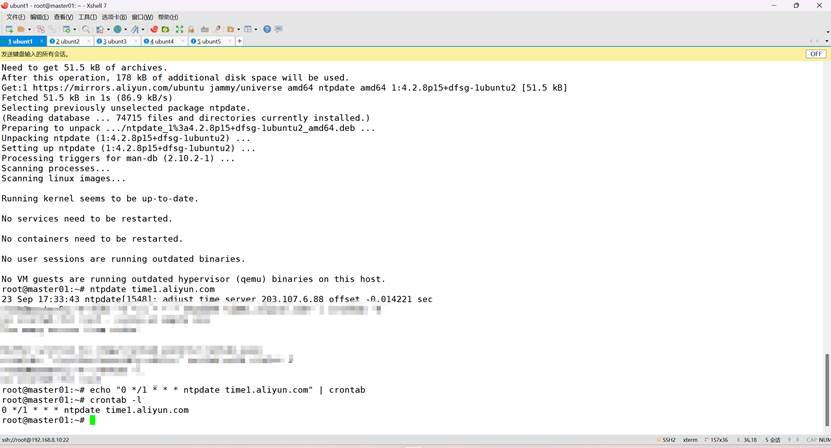

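2. Set the time zone (all hosts)

root@k8s-master01:~# timedatectl set-timezone Asia/Shanghai

Install ntpdate:

apt -y install ntpdate

Synchronize the time with ntpdate:

ntpdate time1.aliyun.com

Keep the time synchronized with an hourly scheduled job. Note that this command replaces root's existing crontab, and that the full path is used because cron runs jobs with a minimal PATH:

root@k8s-master01:~# echo "0 */1 * * * /usr/sbin/ntpdate time1.aliyun.com" | crontab -

root@k8s-master01:~# crontab -l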
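Piping a single line into crontab replaces whatever jobs were already installed. As a hedged alternative, the sketch below merges the job into the existing entries instead; `merge_cron_job` is a hypothetical helper name, the `/usr/sbin/ntpdate` path is an assumption about where the Ubuntu package installs the binary, and `/usr/local/bin/backup.sh` is an invented pre-existing job used only for the demo.

```shell
#!/bin/sh
# merge_cron_job JOB (hypothetical helper): read an existing crontab on stdin
# and print it back with JOB appended, unless an identical line already exists.
merge_cron_job() {
    job=$1
    existing=$(cat)
    if [ -n "$existing" ]; then
        printf '%s\n' "$existing"
    fi
    if ! printf '%s\n' "$existing" | grep -Fxq -- "$job"; then
        printf '%s\n' "$job"
    fi
}

JOB='0 */1 * * * /usr/sbin/ntpdate time1.aliyun.com'

# Demo with a made-up existing entry; nothing is installed here.
printf '15 3 * * * /usr/local/bin/backup.sh\n' | merge_cron_job "$JOB"

# On a real host the merged result would be installed with:
#   (crontab -l 2>/dev/null || true) | merge_cron_job "$JOB" | crontab -
```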

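Disable the firewall. On a minimal Ubuntu install ufw is normally inactive, so this is usually a no-op:

root@worker02:~# ufw disable

SELinux does not need to be disabled, because Ubuntu does not enable it by default.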

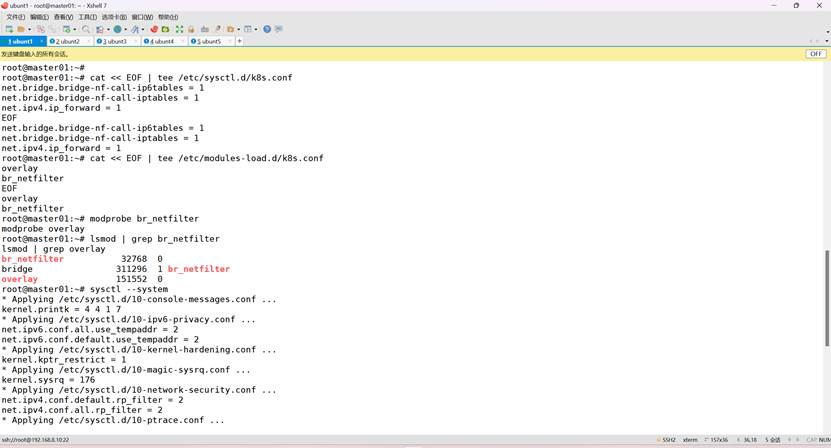

3、配置内核转发及网桥过滤(前三台k8s集群)

配置

cat << EOF | tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

配置加载文件(ubuntu版本)

cat << EOF | tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

加载br_netfilter 和 overlay

modprobe br_netfilter

modprobe overlay

查看模块是否加载完成

lsmod | grep br_netfilter

lsmod | grep overlay

应用配置

sysctl --system

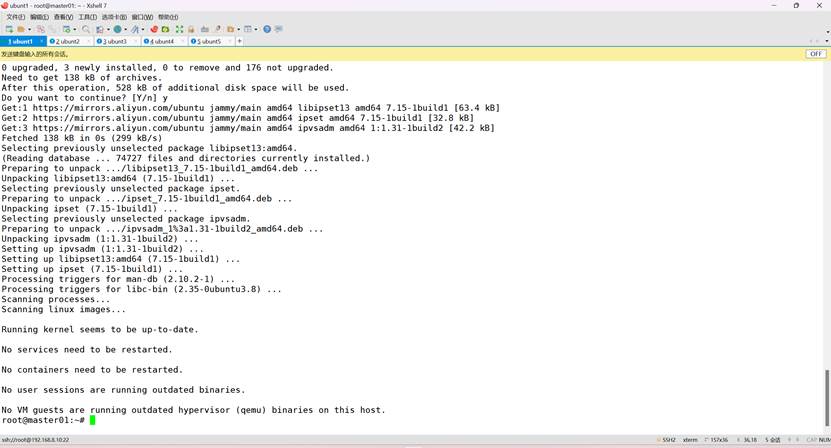

安装ipset及ipvsadm

apt install ipset ipvsadm

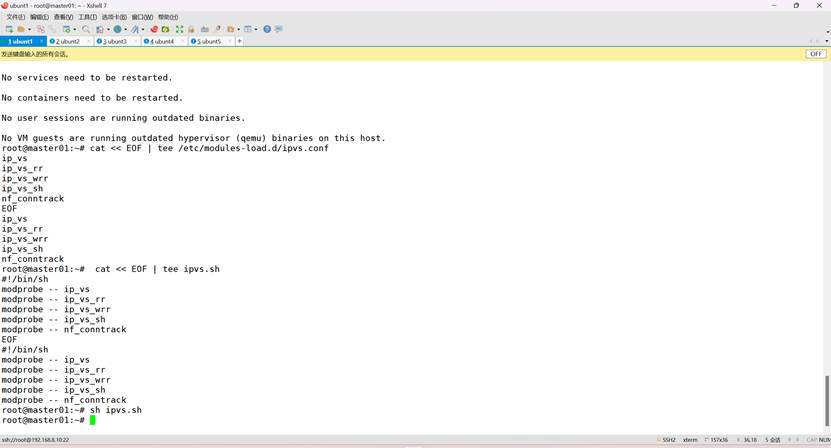

配置ipvsadm模块加载

添加需要加载的模块

cat << EOF | tee /etc/modules-load.d/ipvs.conf

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

EOF

cat << EOF | tee ipvs.sh

#!/bin/sh

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

sh ipvs.sh

4. Disable swap

root@k8s-master01:~# swapoff -a

Make the change permanent by commenting out the swap entry in /etc/fstab:

root@k8s-master01:~# vim /etc/fstab

#/swap.img none swap sw 0 0
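The fstab edit can also be scripted, which helps when the same change has to be made on three nodes. This is a sketch only, assuming a hypothetical helper named `comment_out_swap` and a made-up sample fstab; it comments out every active line whose filesystem type is swap and keeps a `.bak` copy.

```shell
#!/bin/sh
# comment_out_swap FILE (hypothetical helper): prefix every active fstab line
# whose third field (the filesystem type) is "swap" with '#'.
# sed -i.bak leaves the original file as FILE.bak.
comment_out_swap() {
    sed -E -i.bak \
        '/^[[:space:]]*#/!s/^([^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' \
        "$1"
}

# Demo on sample content; the real target would be /etc/fstab (as root).
fstab=$(mktemp)
cat > "$fstab" << 'EOF'
# sample content for the demo only
/dev/sda2 / ext4 defaults 0 1
/swap.img none swap sw 0 0
EOF
comment_out_swap "$fstab"
cat "$fstab"
```

Lines that are already commented are skipped, so running the helper a second time changes nothing.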

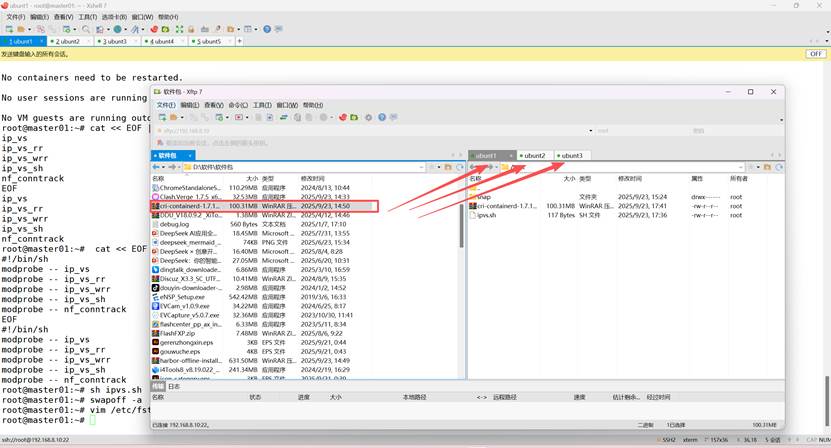

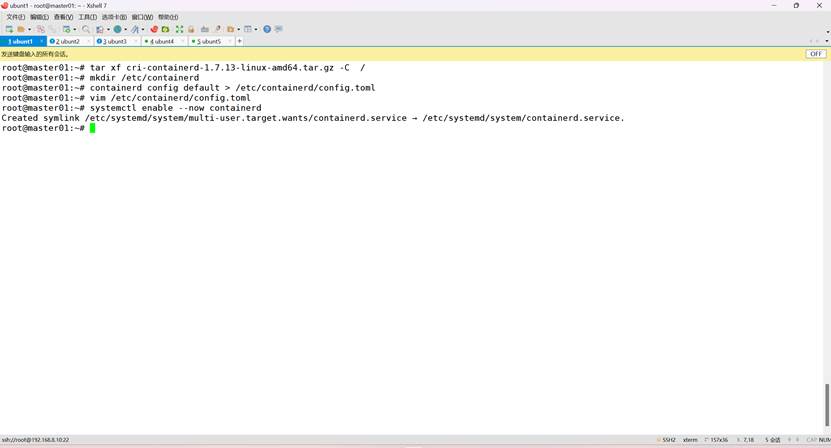

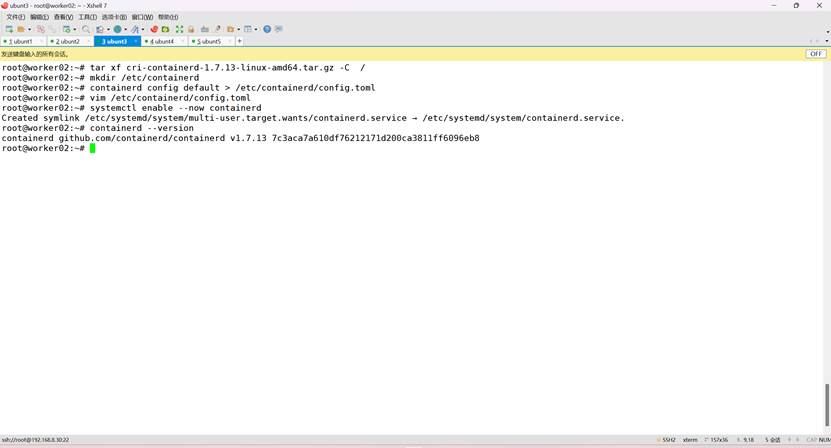

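IV. Prepare containerd as the K8s Container Runtime (in Place of Docker; the Three K8s Cluster Nodes)

1. Obtain and unpack the containerd bundle (the file was downloaded in advance)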

root@k8s-master01:~# tar xf cri-containerd-1.7.13-linux-amd64.tar.gz -C /

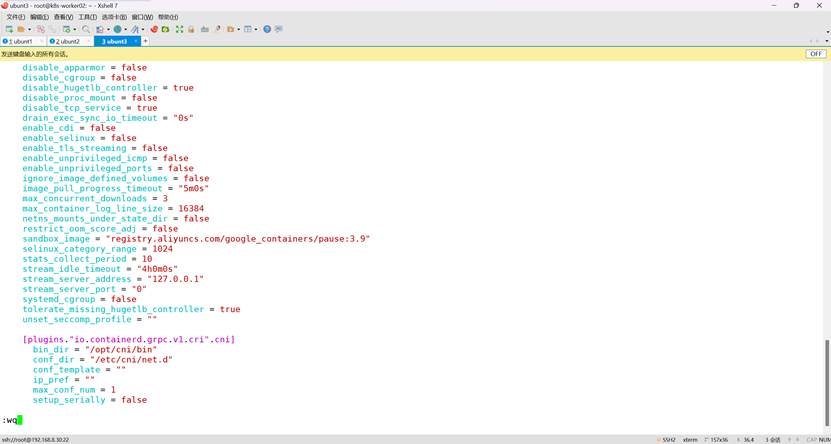

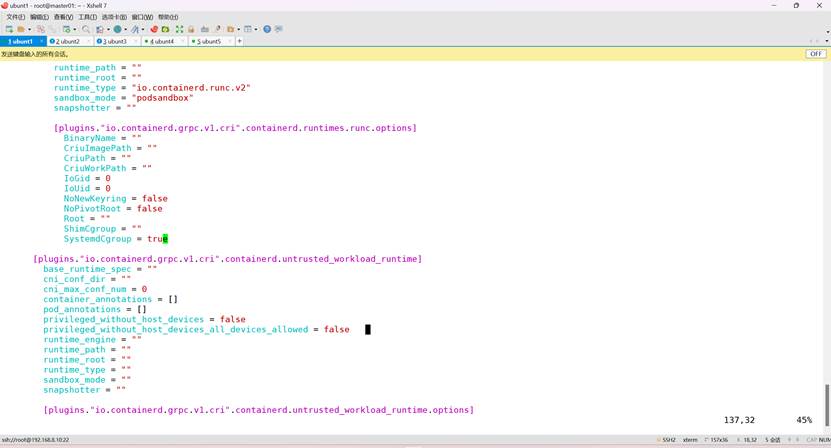

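2. Generate and edit the containerd configuration file

root@k8s-master01:~# mkdir -p /etc/containerd

root@k8s-master01:~# containerd config default > /etc/containerd/config.toml

vim /etc/containerd/config.toml

Edit line 65 (kubeadm v1.33.4 lists pause:3.10 as its own sandbox image, so using that tag here instead avoids a version-mismatch warning):

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"

Edit line 137, changing false to true:

SystemdCgroup = true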
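Line numbers in config.toml can shift between containerd builds, so matching on the key names is a more robust way to make the same two changes. A minimal sketch, assuming a hypothetical helper named `patch_containerd_config`; the sample file below is a trimmed stand-in for the real /etc/containerd/config.toml.

```shell
#!/bin/sh
# patch_containerd_config FILE IMAGE (hypothetical helper): switch the runc
# cgroup driver to systemd and set the sandbox (pause) image, matching on key
# names rather than line numbers. A FILE.bak backup is kept.
patch_containerd_config() {
    cfg=$1; pause_image=$2
    sed -E -i.bak \
        -e 's|^([[:space:]]*)SystemdCgroup = false|\1SystemdCgroup = true|' \
        -e "s|^([[:space:]]*)sandbox_image = .*|\1sandbox_image = \"$pause_image\"|" \
        "$cfg"
}

# Demo on a trimmed sample; the real target is /etc/containerd/config.toml.
cfg=$(mktemp)
cat > "$cfg" << 'EOF'
[plugins."io.containerd.grpc.v1.cri"]
    sandbox_image = "registry.k8s.io/pause:3.8"
            SystemdCgroup = false
EOF
patch_containerd_config "$cfg" registry.aliyuncs.com/google_containers/pause:3.9
cat "$cfg"
```

After patching the real file, containerd still has to be restarted for the change to take effect.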

root@k8s-k8s-master01:~# systemctl restart containerd

3. Start containerd and enable it at boot

root@k8s-master01:~# systemctl enable --now containerd

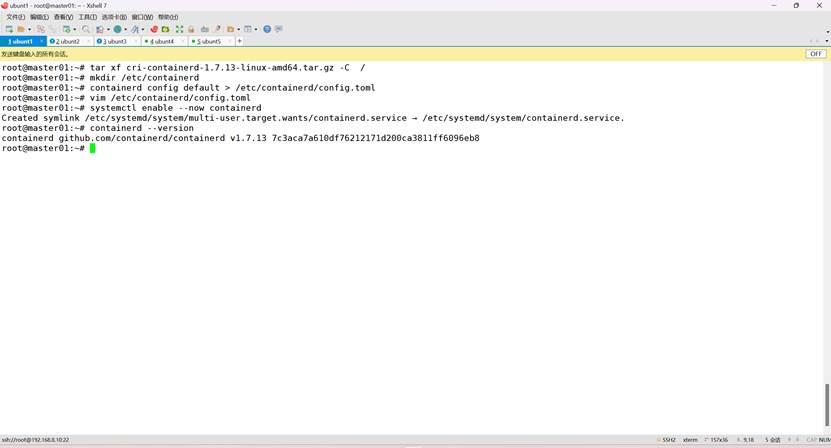

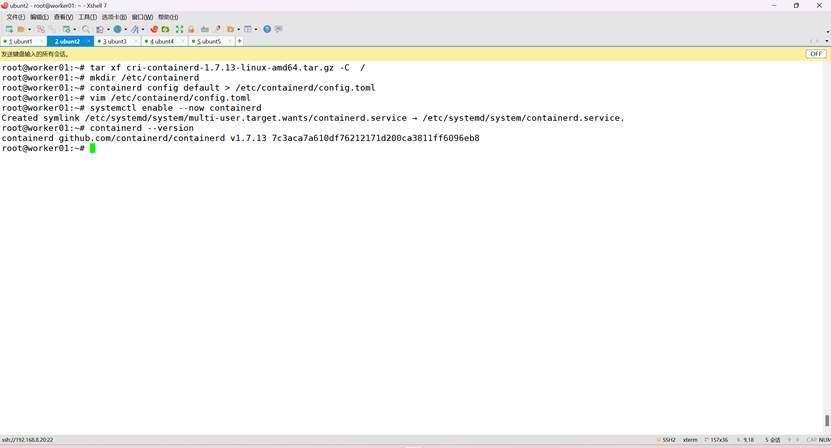

Verify the version:

containerd --version

五、K8S集群部署

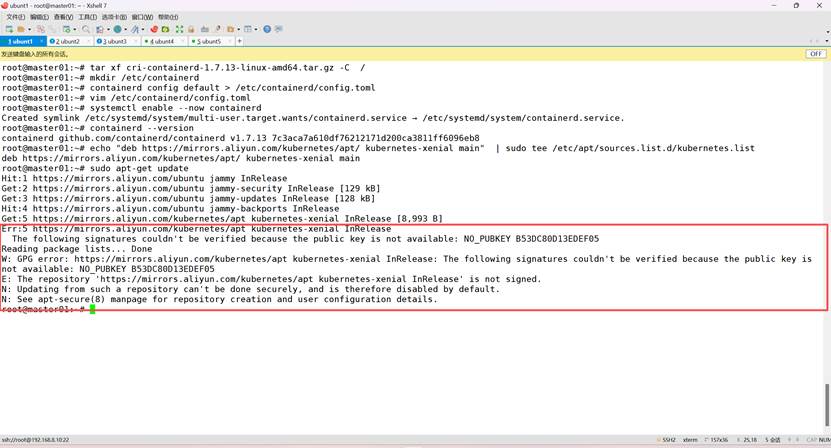

1、 K8S集群软件apt源准备

阿里云提供apt源

echo "deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update

报错如下,主要因为没有公钥:

Err:5 https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial InRelease

The following signatures couldn't be verified because the public key is not available: NO_PUBKEY B53DC80D13EDEF05

Reading package lists... Done

W: GPG error: https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial InRelease: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY B53DC80D13EDEF05

E: The repository 'https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial InRelease' is not signed.

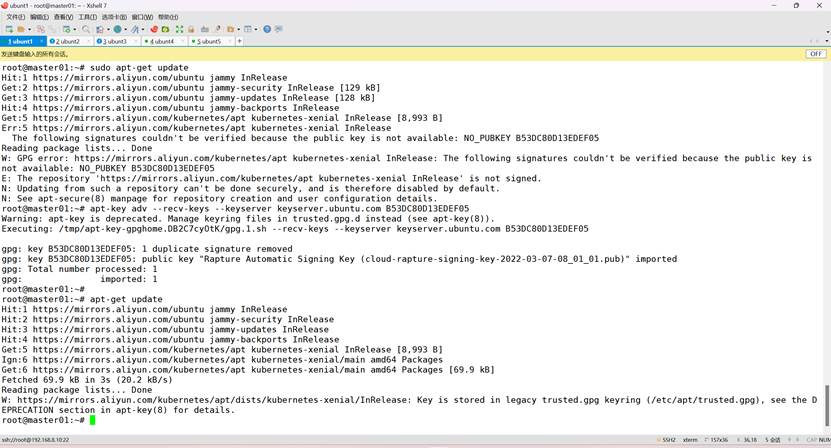

Add the public key to the server (use the key ID reported on your own host):

apt-key adv --recv-keys --keyserver keyserver.ubuntu.com B53DC80D13EDEF05

Update again:

root@k8s-master01:~# apt-get update

2. Install the K8s packages (1.33.4)

Install the K8s components

# Install the prerequisite tools

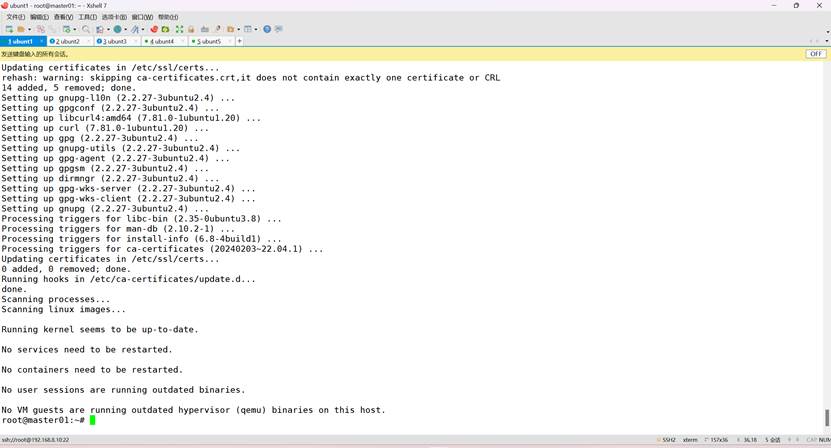

apt install -y apt-transport-https ca-certificates curl gpg

3. Download the public signing key for the Kubernetes package repositories. All repositories use the same signing key, so the version in the URL can be ignored:

root@k8s-master01:~# curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.33/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

4. Add the Kubernetes apt repository. Note that this repository only contains packages for Kubernetes 1.33; for other minor versions, change the minor version in the URL to the one you want (and check that the installation documentation you are reading matches the Kubernetes version you plan to install).

root@k8s-master01:~# echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.33/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

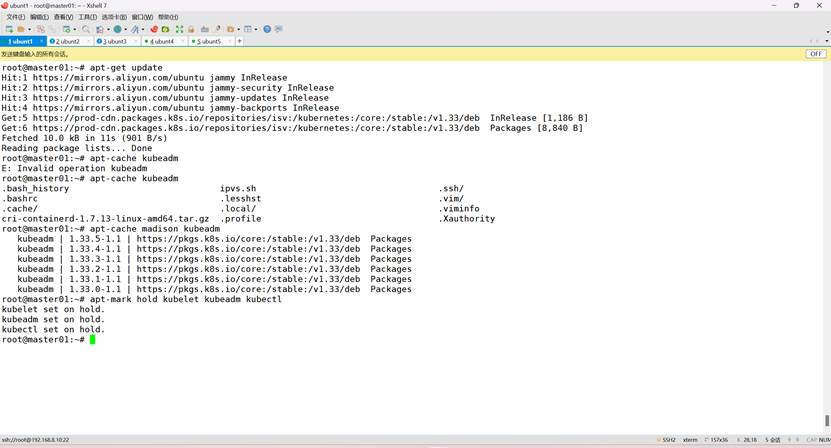

5. Update the apt package index and install kubelet, kubeadm, and kubectl:

root@k8s-master01:~# apt-get update

root@k8s-master01:~# apt-cache madison kubeadm

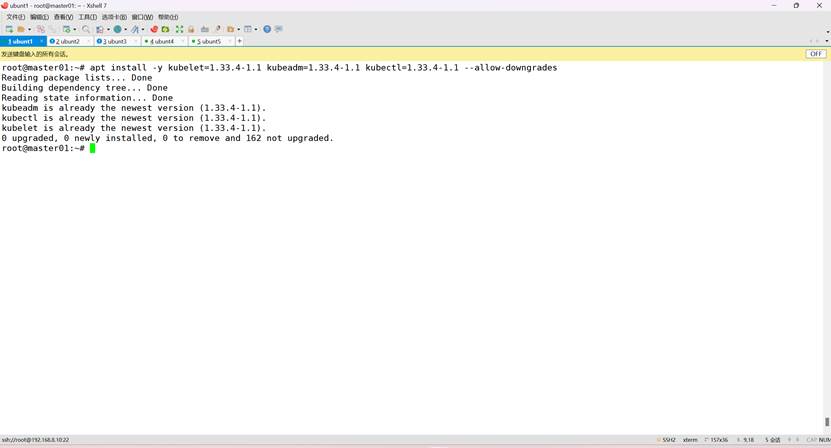

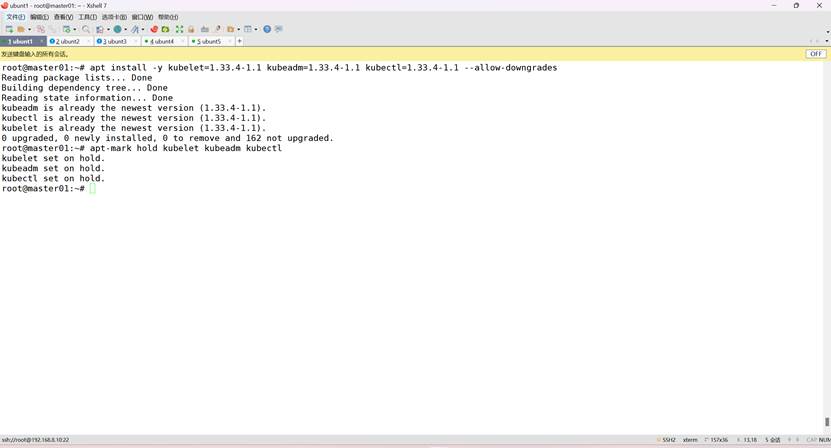

Install the pinned version (1.33.4):

root@k8s-master01:~# apt install -y kubelet=1.33.4-1.1 kubeadm=1.33.4-1.1 kubectl=1.33.4-1.1

Then hold the packages at the current version (1.33.4) so that routine upgrades do not change it:

root@k8s-master01:~# apt-mark hold kubelet kubeadm kubectl

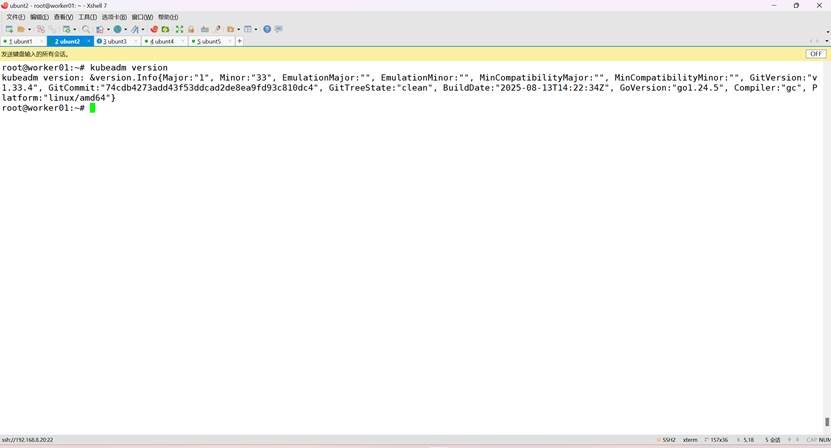

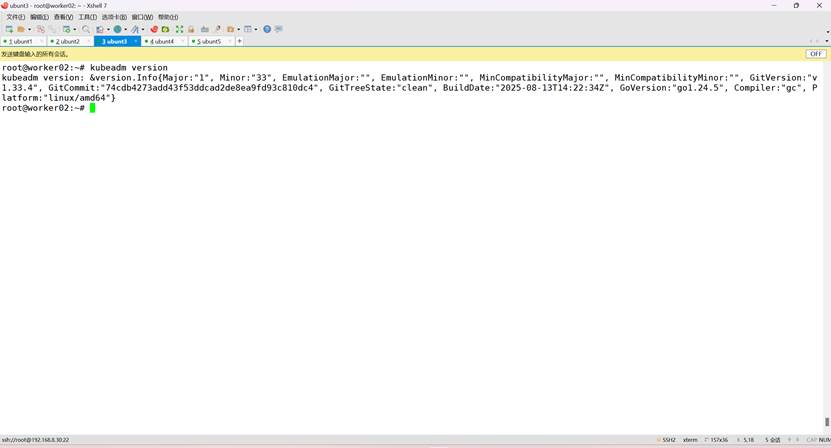

6、k8s集群初始化(前面操作所有主机都做)

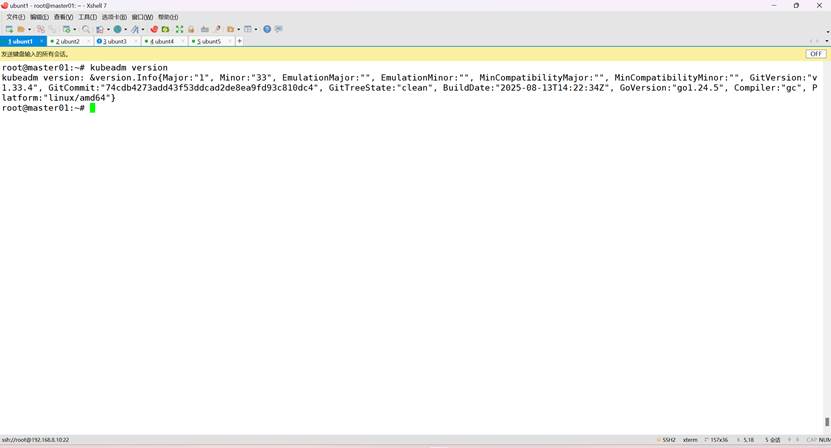

查看k8s版本

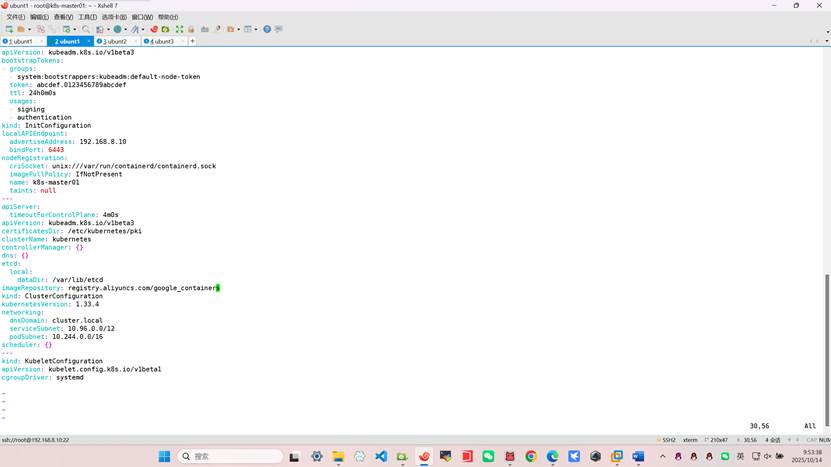

root@k8s-k8s-master01:~# kubeadm config print init-defaults > kubeadm-config.yaml

root@k8s-k8s-master01:~# vim kubeadm-config.yaml

root@k8s-k8s-master01:~# cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.8.10
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: k8s-k8s-master01
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.33.4
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
scheduler: {}
---
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
cgroupDriver: systemd

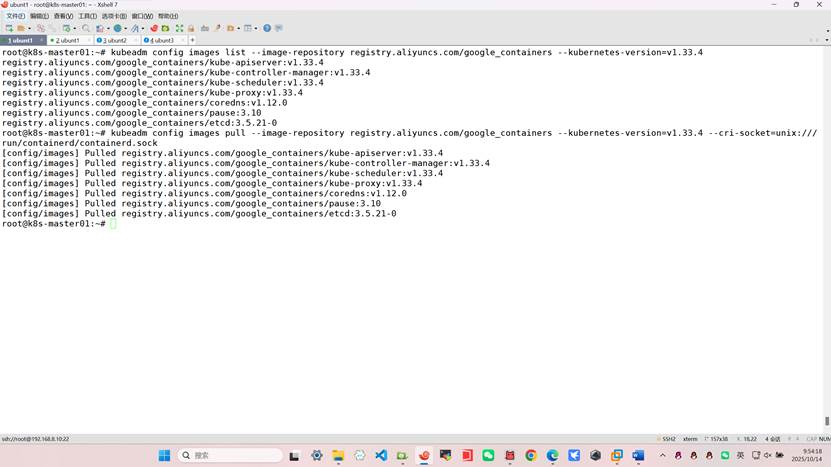

root@k8s-k8s-master01:~# kubeadm config images list --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.33.4

registry.aliyuncs.com/google_containers/kube-apiserver:v1.33.4

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.33.4

registry.aliyuncs.com/google_containers/kube-scheduler:v1.33.4

registry.aliyuncs.com/google_containers/kube-proxy:v1.33.4

registry.aliyuncs.com/google_containers/coredns:v1.12.0

registry.aliyuncs.com/google_containers/pause:3.10

registry.aliyuncs.com/google_containers/etcd:3.5.21-0

Pull the images (from the Alibaba Cloud mirror):

root@k8s-master01:~# kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.33.4 --cri-socket=unix:///run/containerd/containerd.sock

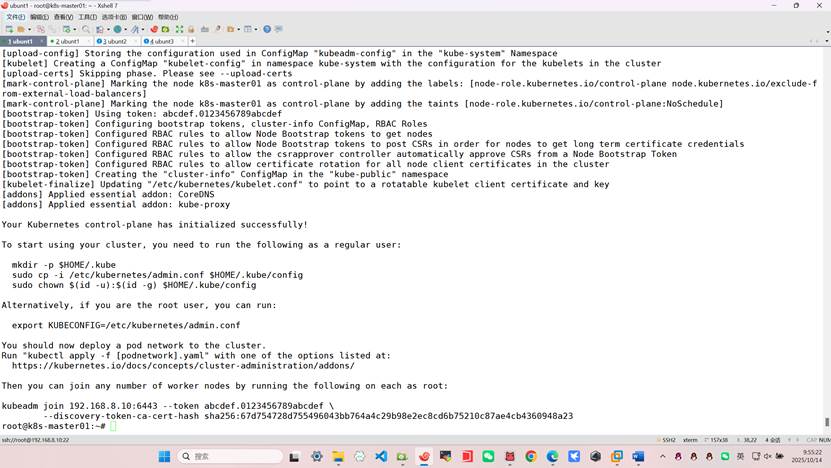

Initialize K8s (note: run this on the master node k8s-k8s-master01 only):

root@k8s-master01:~# kubeadm init --config kubeadm-config.yaml

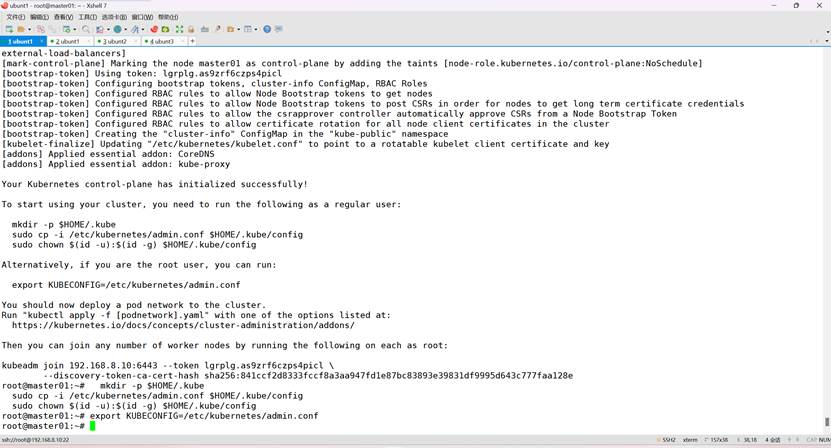

Copy the commands printed by kubeadm init and run them on this server:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

export KUBECONFIG=/etc/kubernetes/admin.conf

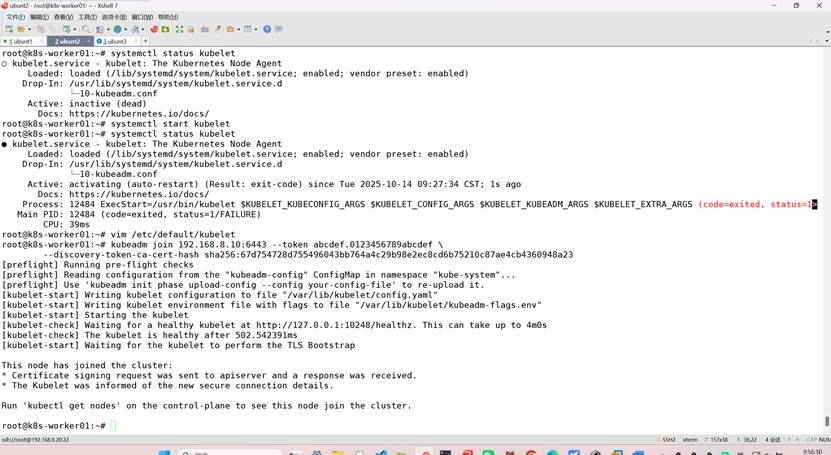

Join the other nodes to the cluster:

kubeadm join 192.168.8.10:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:67d754728d755496043bb764a4c29b98e2ec8cd6b75210c87ae4cb4360948a23

If initialization fails, reset and then retry the steps above:

kubeadm reset -f

List the nodes:

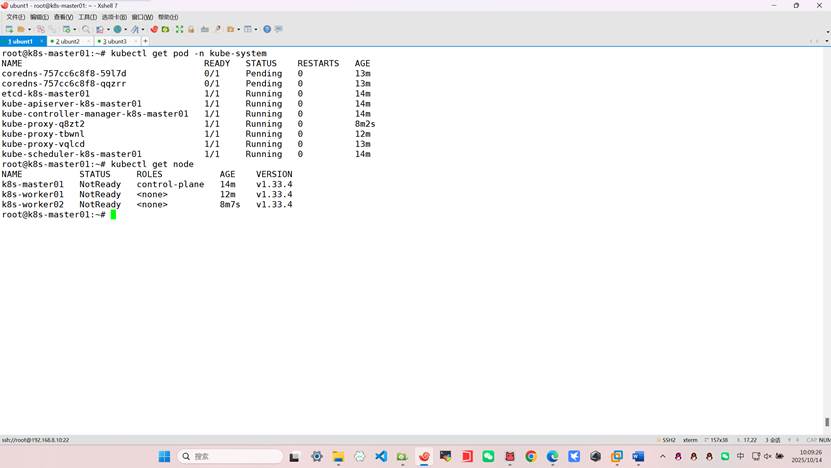

root@k8s-master01:~# kubectl get nodes

root@k8s-master01:~# kubectl get pod -n kube-system

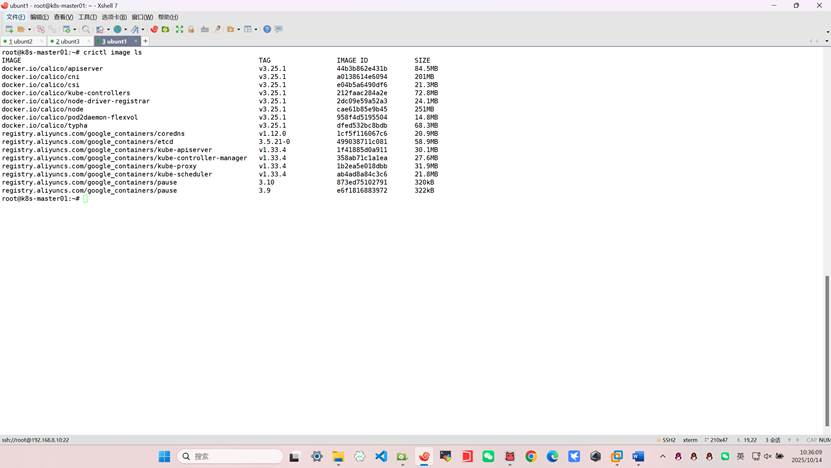

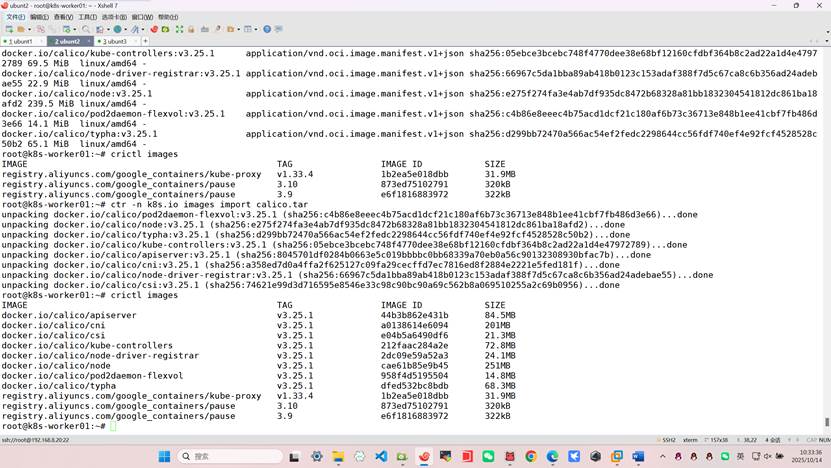

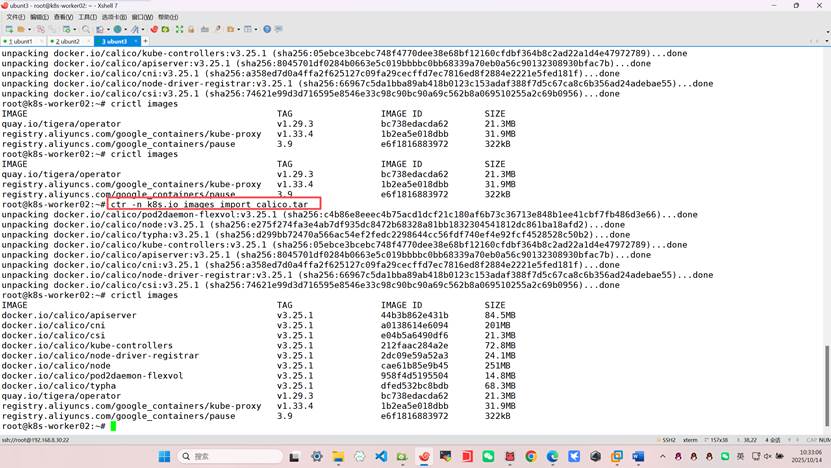

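7. Deploy the Calico network plugin

The Calico images and manifests can either be copied onto the hosts manually or downloaded over a VPN.

Import the images.

Note that containerd separates images by namespace, and the two CLI tools look in different ones:

- ctr uses the default namespace unless told otherwise

- crictl uses the k8s.io namespace

Images imported with plain ctr are therefore not visible to Kubernetes; import them with -n k8s.io: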

root@k8s-master01:~# ctr -n k8s.io images import calico.tar

root@k8s-worker01:~# ctr -n k8s.io images import calico.tar

root@k8s-worker02:~# ctr -n k8s.io images import calico.tar

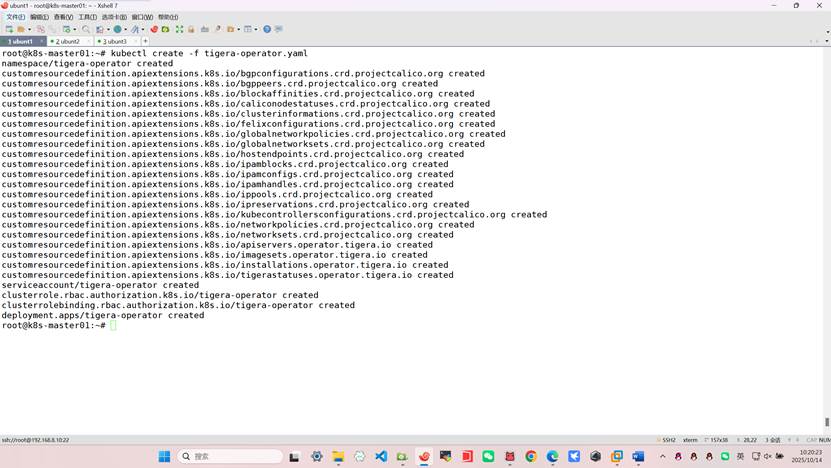

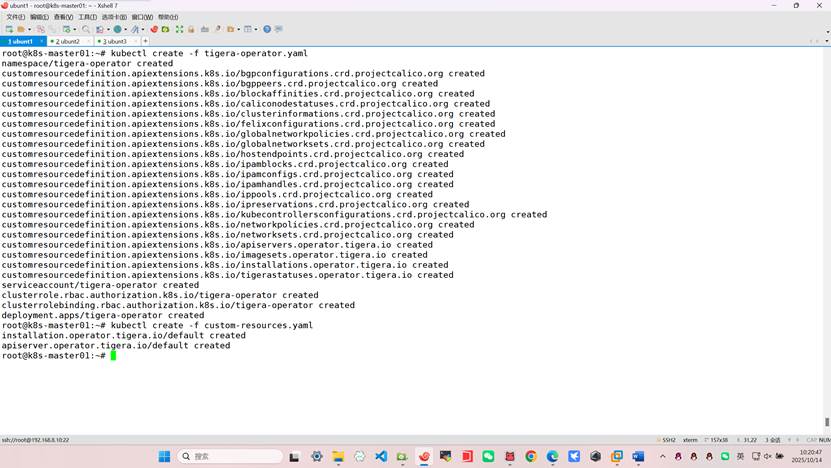

root@k8s-k8s-master01:~# kubectl create -f tigera-operator.yaml

root@k8s-master01:~# kubectl create -f custom-resources.yaml
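The Calico pool in custom-resources.yaml should cover the same range as the podSubnet given to kubeadm (10.244.0.0/16 in this guide), whereas the manifest published by Calico ships with 192.168.0.0/16. The sketch below is a rough check, assuming hypothetical helper names `yaml_scalar` and `pod_cidr_matches` and a deliberately naive line-based parse rather than a real YAML parser; it only compares the two files when both are present in the current directory.

```shell
#!/bin/sh
# yaml_scalar FILE KEY (hypothetical helper): print the value that follows the
# first "KEY:" token in FILE. Naive line-based matching, not a YAML parser.
yaml_scalar() {
    awk -v k="$2:" '{
        for (i = 1; i < NF; i++)
            if ($i == k) { v = $(i + 1); gsub(/"/, "", v); print v; exit }
    }' "$1"
}

# pod_cidr_matches KUBEADM_CONFIG CALICO_RESOURCES: succeed only when the
# kubeadm podSubnet and the Calico pool cidr are identical and non-empty.
pod_cidr_matches() {
    kubeadm_cidr=$(yaml_scalar "$1" podSubnet)
    calico_cidr=$(yaml_scalar "$2" cidr)
    echo "kubeadm podSubnet: $kubeadm_cidr, Calico cidr: $calico_cidr"
    [ -n "$kubeadm_cidr" ] && [ "$kubeadm_cidr" = "$calico_cidr" ]
}

# Only runs when both files from this guide are in the current directory.
if [ -f kubeadm-config.yaml ] && [ -f custom-resources.yaml ]; then
    pod_cidr_matches kubeadm-config.yaml custom-resources.yaml ||
        echo "Mismatch: edit cidr in custom-resources.yaml before applying it"
fi
```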


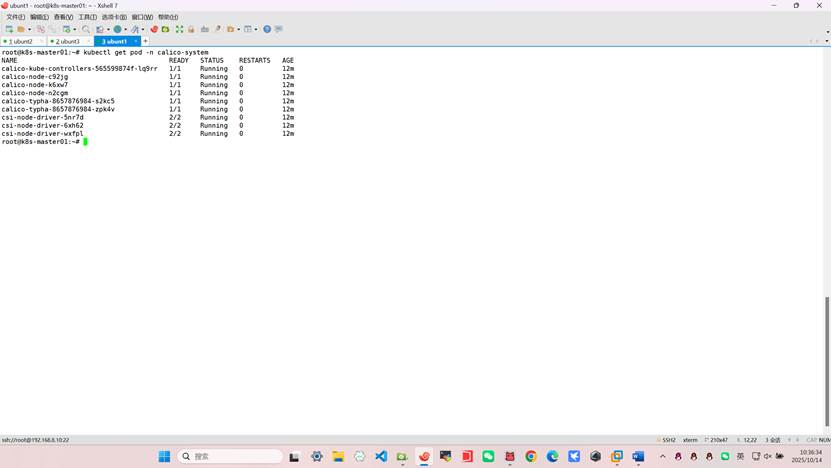

root@k8s-k8s-master01:~# kubectl get pod -n calico-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-565599874f-lq9rr 1/1 Running 0 12m

calico-node-c92jg 1/1 Running 0 12m

calico-node-k6xw7 1/1 Running 0 12m

calico-node-n2cgm 1/1 Running 0 12m

calico-typha-8657876984-s2kc5 1/1 Running 0 12m

calico-typha-8657876984-zpk4v 1/1 Running 0 12m

csi-node-driver-5nr7d 2/2 Running 0 12m

csi-node-driver-6xh62 2/2 Running 0 12m

csi-node-driver-wxfpl 2/2 Running 0 12m

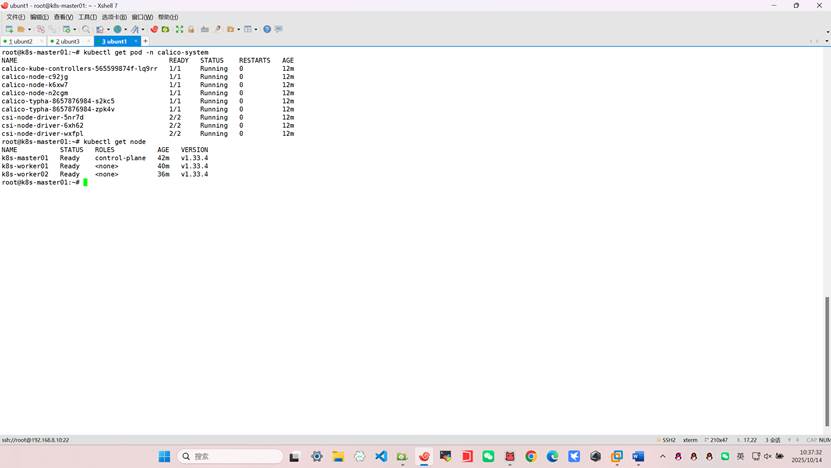

root@k8s-k8s-master01:~# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-k8s-master01 Ready control-plane 42m v1.33.4

k8s-worker01 Ready <none> 40m v1.33.4

k8s-worker02 Ready <none> 36m v1.33.4

六、配置harbor仓库

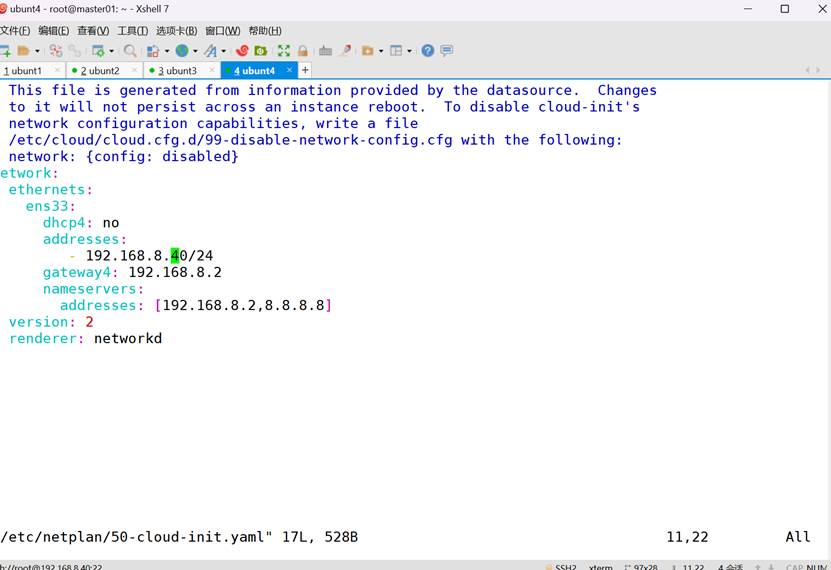

root@k8s-master01:~# vim /etc/netplan/50-cloud-init.yaml

添加

echo "network: {config: disabled}" > /etc/cloud/cloud.cfg.d/99-disable-network-config.cfg

不添加这个每次开机ip都会消失

修改主机名和hosts文件

root@k8s-master01:~# hostnamectl set-hostname harbor

root@k8s-master01:~# bash

root@harbor:~# vim /etc/hosts

192.168.8.10 k8s-k8s-master01

192.168.8.20 k8s-worker01

192.168.8.30 k8s-worker02

192.168.8.40 harbor.test.com

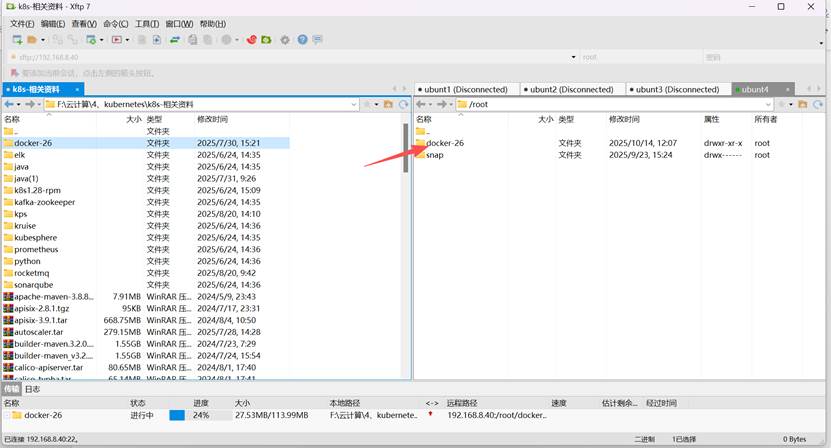

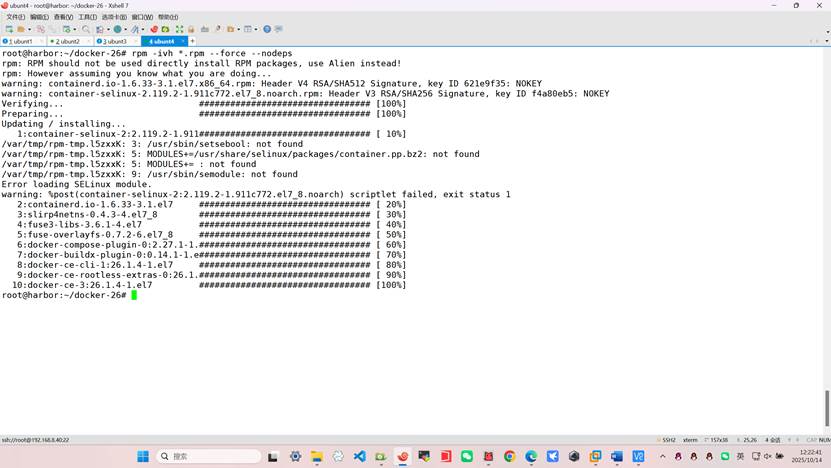

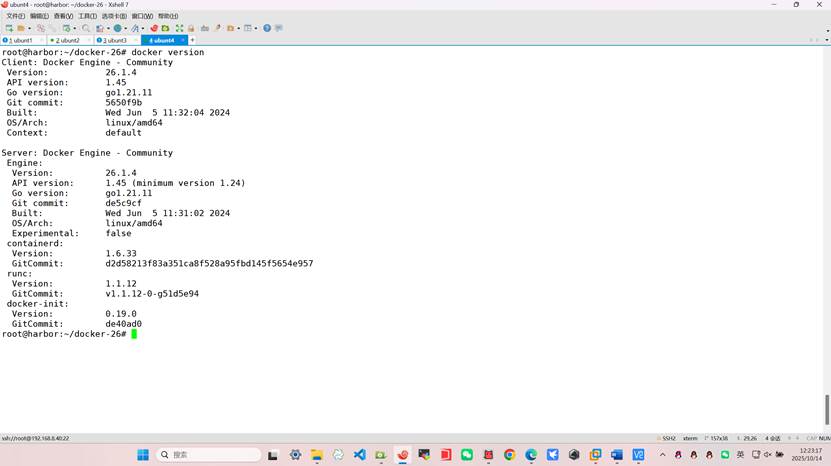

Copy the docker-ce 26 packages to this host:

root@harbor:~/docker-26# apt -y install rpm

root@harbor:~/docker-26# rpm -ivh *.rpm --force --nodeps

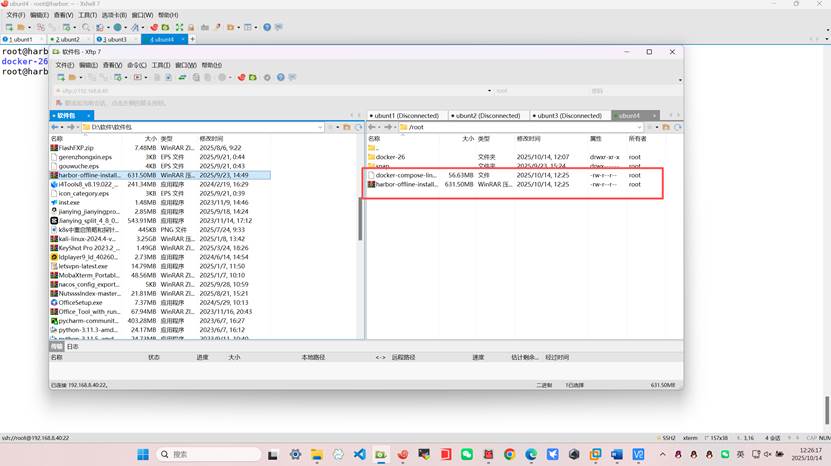

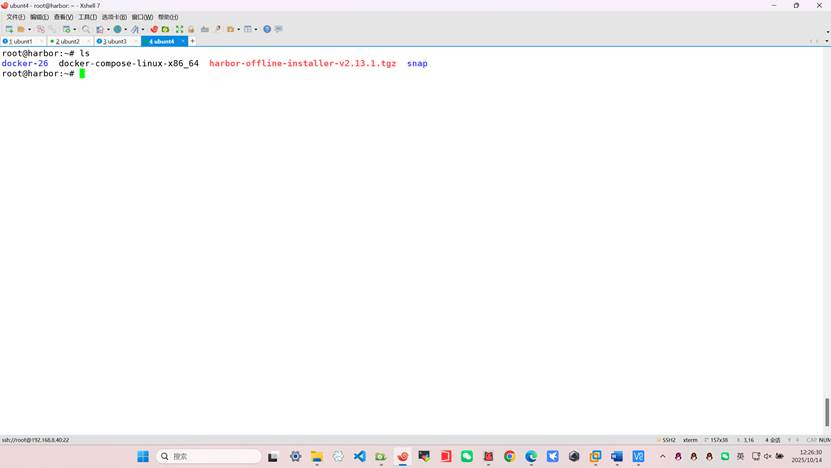

将harbor和docker-compose的软件包复制到本机

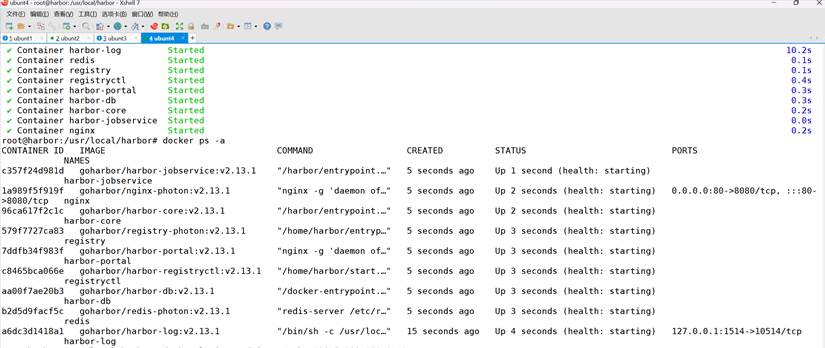

Harbor仓库要求版本v2.13.1

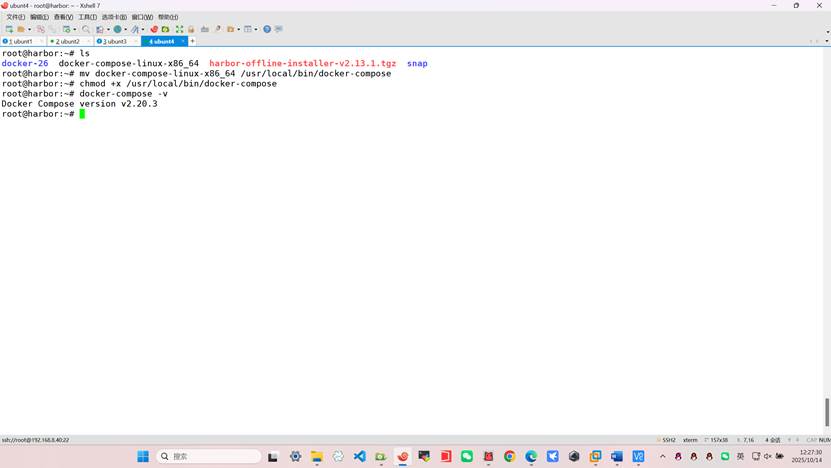

将docker-compose绿色软件放到/usr/local/bin/下

root@harbor:~# mv docker-compose-linux-x86_64 /usr/local/bin/docker-compose

root@harbor:~# chmod +x /usr/local/bin/docker-compose

root@harbor:~# docker-compose -v

Docker Compose version v2.20.3

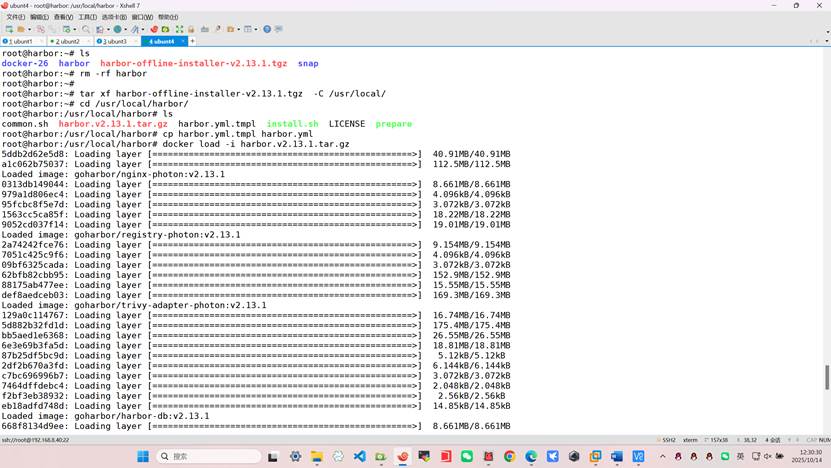

Unpack Harbor into /usr/local:

root@harbor:~# tar xf harbor-offline-installer-v2.13.1.tgz -C /usr/local/

root@harbor:/usr/local/harbor# ls

common.sh harbor.v2.13.1.tar.gz harbor.yml.tmpl install.sh LICENSE prepare

root@harbor:/usr/local/harbor# cp harbor.yml.tmpl harbor.yml

root@harbor:/usr/local/harbor# docker load -i harbor.v2.13.1.tar.gz

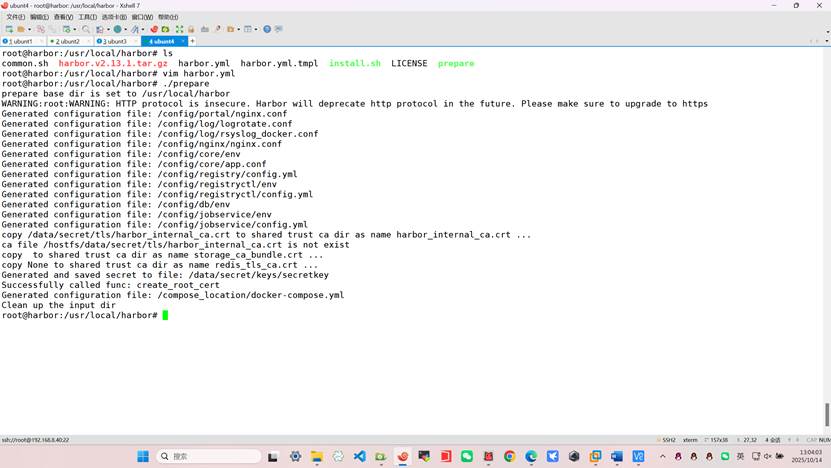

root@harbor:/usr/local/harbor# vim harbor.yml

hostname: harbor.test.com

# http related config
http:
  # port for http, default is 80. If https enabled, this port will redirect to https port
  port: 80

# https related config
#https:
  # https port for harbor, default is 443
  #port: 443
  # The path of cert and key files for nginx
  #certificate: /your/certificate/path
  #private_key: /your/private/key/path
  # enable strong ssl ciphers (default: false)
  # strong_ssl_ciphers: false

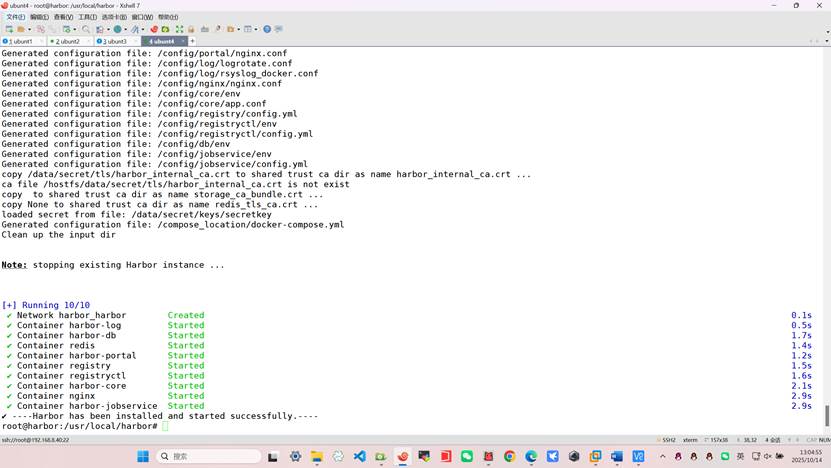

root@harbor:/usr/local/harbor# ./prepare

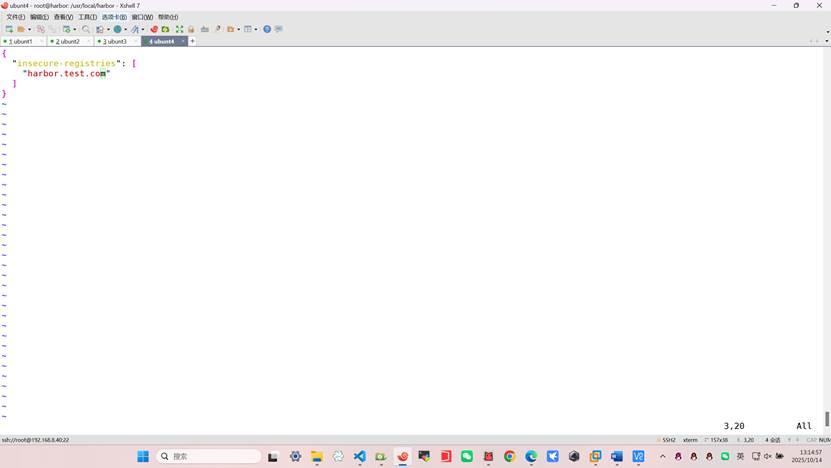

root@harbor:~# vim /etc/docker/daemon.json

{
  "insecure-registries": [
    "harbor.test.com"
  ]
}

root@harbor:/usr/local/harbor# systemctl restart docker

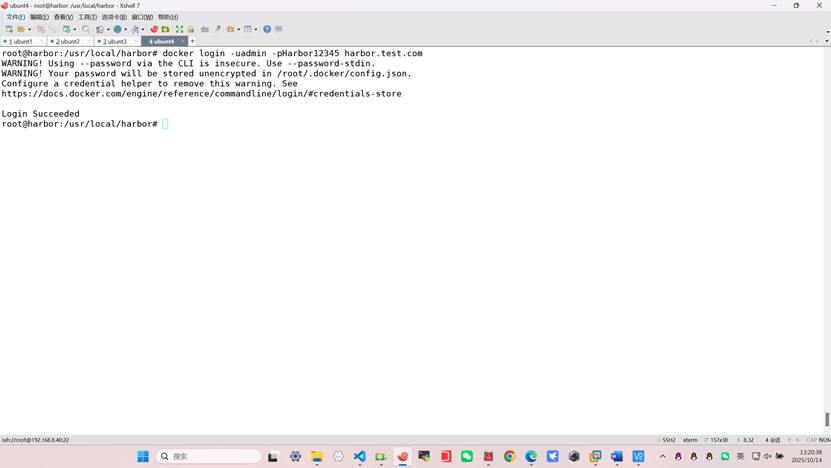

root@harbor:/usr/local/harbor# docker login -uadmin -pHarbor12345 harbor.test.com

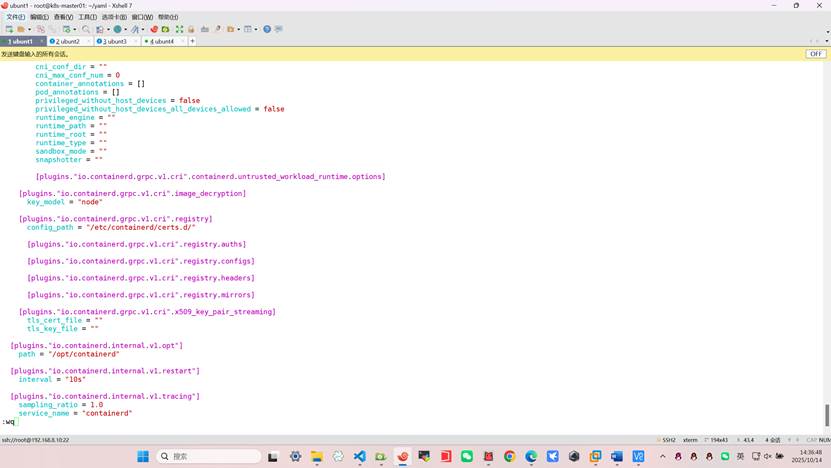

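Point containerd on each K8s host at the private Harbor registry

# Create the Harbor configuration directory

mkdir -p /etc/containerd/certs.d/harbor.test.com

root@k8s-master01:~/yaml# vim /etc/containerd/config.toml

In the [plugins."io.containerd.grpc.v1.cri".registry] section, set:

config_path = "/etc/containerd/certs.d/"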

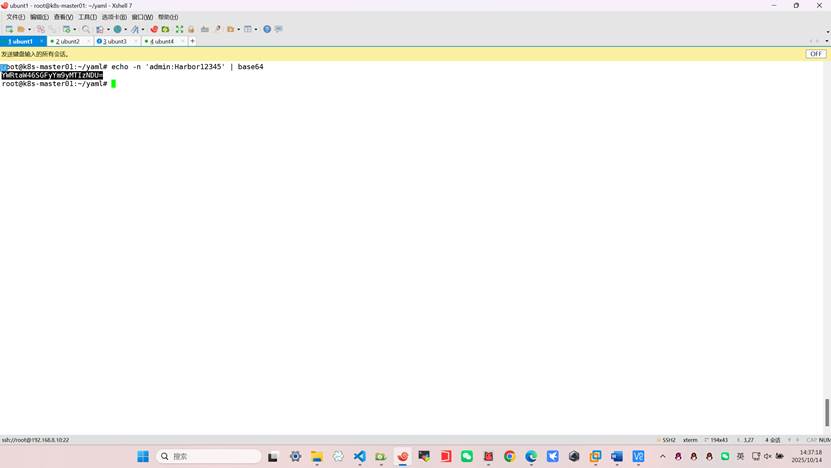

echo -n 'admin:Harbor12345' | base64

YWRtaW46SGFyYm9yMTIzNDU=
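The value above is simply the user:password pair in base64, prefixed with the word Basic when it is used as an HTTP Authorization header. A small sketch, assuming a hypothetical helper named `basic_auth_header`, that builds the header from the Harbor credentials used in this guide:

```shell
#!/bin/sh
# basic_auth_header USER PASSWORD (hypothetical helper): print the value of an
# HTTP Basic Authorization header. printf '%s' is used instead of echo so that
# no trailing newline ends up inside the encoded value.
basic_auth_header() {
    printf 'Basic %s\n' "$(printf '%s' "$1:$2" | base64)"
}

basic_auth_header admin Harbor12345
# prints: Basic YWRtaW46SGFyYm9yMTIzNDU=
```

Base64 is an encoding, not encryption, so a file that contains this header should be readable by root only.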

cat > /etc/containerd/certs.d/harbor.test.com/hosts.toml << 'EOF'
server = "http://harbor.test.com"

[host."http://harbor.test.com"]
  capabilities = ["pull", "resolve", "push"]
  skip_verify = true

[host."http://harbor.test.com".header]
  Authorization = ["Basic YWRtaW46SGFyYm9yMTIzNDU="]
EOF

root@k8s-master01:~/yaml# systemctl restart containerd

root@k8s-master01:~/yaml# systemctl start kubelet

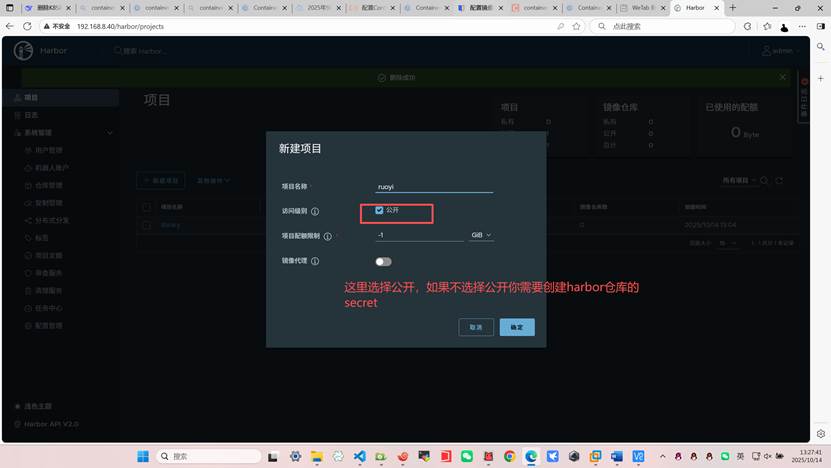

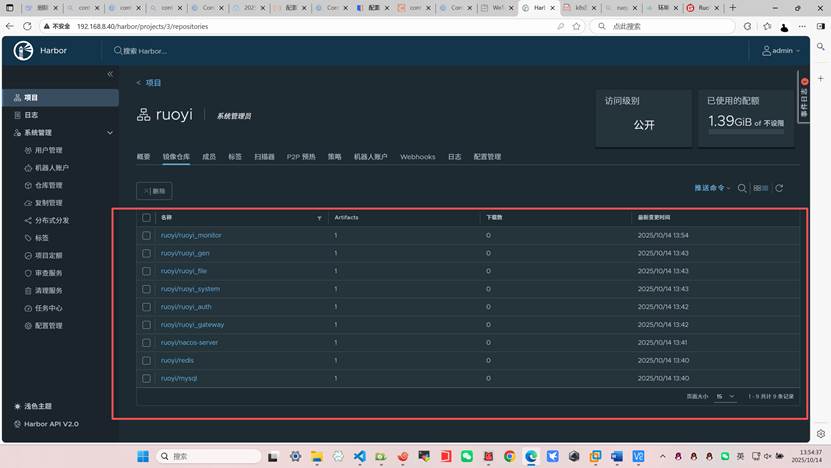

Log in to the Harbor web UI and create a project named ruoyi.

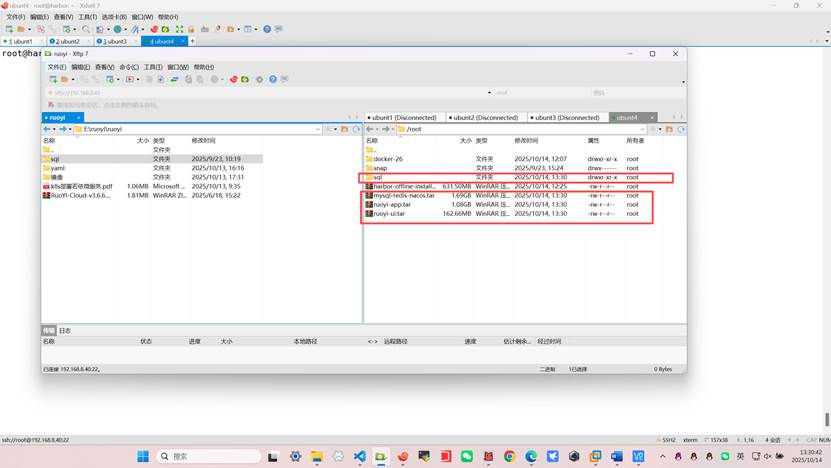

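Push all of the RuoYi images to Harbor. If you do not have the images, you need to build them yourself; the process is lengthy, so refer to the documents below.

Image build steps

First install git and fetch the source with git clone https://gitee.com/y_project/RuoYi-Cloud.git

Then compile and package it with Maven, and build the images from the resulting artifacts with Dockerfiles.

For details, see the following reference documents.

Official documentation:

https://doc.ruoyi.vip/ruoyi-cloud/document/hjbs.html#%E5%87%86%E5%A4%87%E5%B7%A5%E4%BD%9C

https://blog.csdn.net/dreamsarchitects/article/details/121121109

https://blog.csdn.net/wkywky123/article/details/147052237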

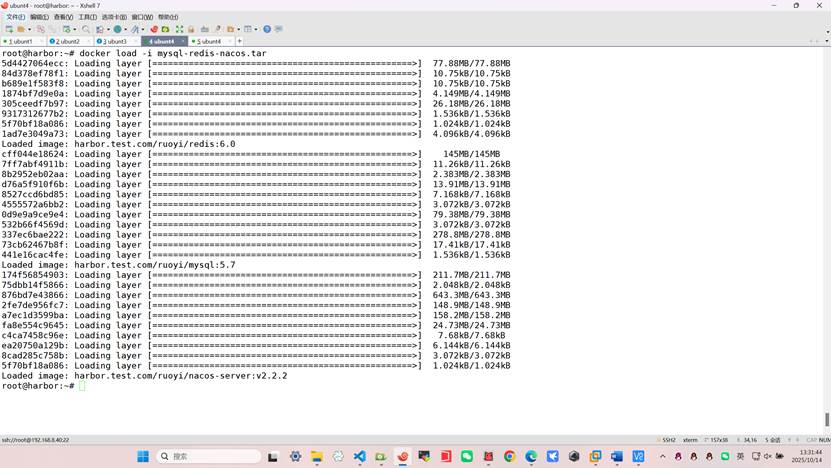

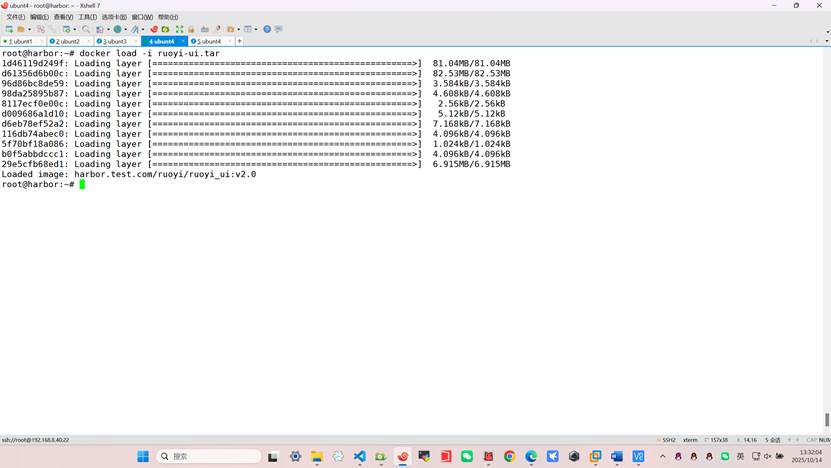

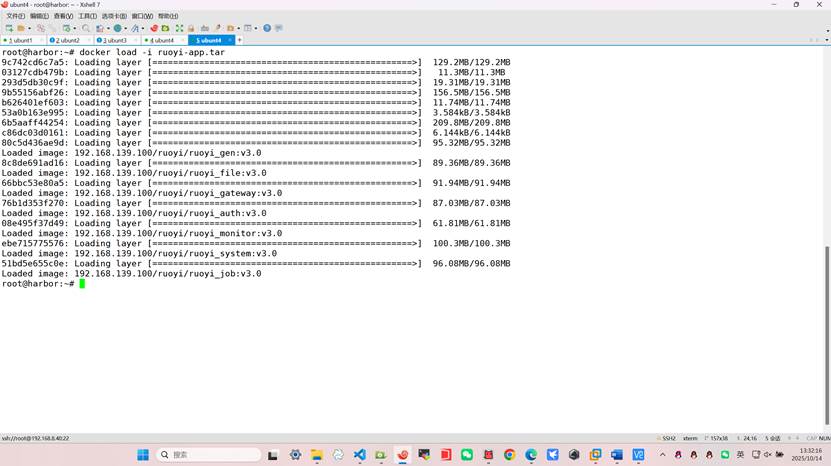

root@harbor:~# docker load -i mysql-redis-nacos.tar

root@harbor:~# docker load -i ruoyi-ui.tar

root@harbor:~# docker load -i ruoyi-app.tar

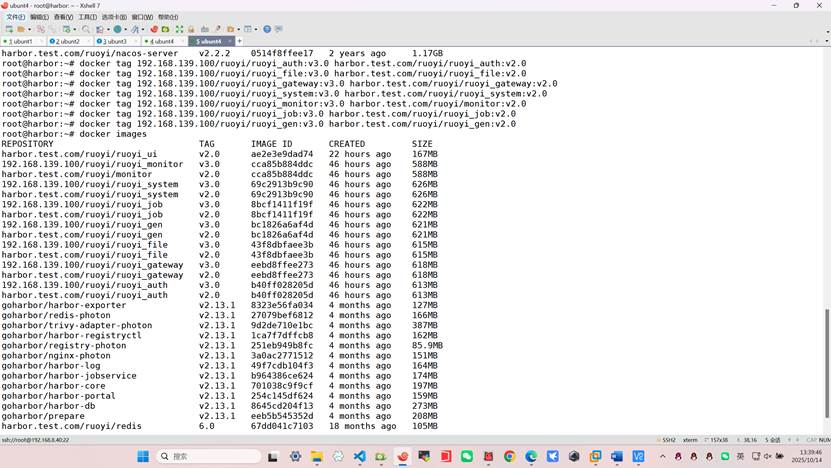

Retag the images with the Harbor registry name and the new tag so that they can be pushed to Harbor:

root@harbor:~# docker tag 192.168.139.100/ruoyi/ruoyi_auth:v3.0 harbor.test.com/ruoyi/ruoyi_auth:v2.0

root@harbor:~# docker tag 192.168.139.100/ruoyi/ruoyi_file:v3.0 harbor.test.com/ruoyi/ruoyi_file:v2.0

root@harbor:~# docker tag 192.168.139.100/ruoyi/ruoyi_gateway:v3.0 harbor.test.com/ruoyi/ruoyi_gateway:v2.0

root@harbor:~# docker tag 192.168.139.100/ruoyi/ruoyi_system:v3.0 harbor.test.com/ruoyi/ruoyi_system:v2.0

root@harbor:~# docker tag 192.168.139.100/ruoyi/ruoyi_monitor:v3.0 harbor.test.com/ruoyi/ruoyi_monitor:v2.0

root@harbor:~# docker tag 192.168.139.100/ruoyi/ruoyi_job:v3.0 harbor.test.com/ruoyi/ruoyi_job:v2.0

root@harbor:~# docker tag 192.168.139.100/ruoyi/ruoyi_gen:v3.0 harbor.test.com/ruoyi/ruoyi_gen:v2.0
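The seven tag commands differ only in the service name, so they can be generated in a loop. The sketch below is a dry run: it prints the docker tag and docker push commands instead of executing them. `retag_cmds` is a hypothetical helper name; the registry addresses and tags are the ones used above.

```shell
#!/bin/sh
SRC_REGISTRY=192.168.139.100   # registry name baked into the loaded images
DST_REGISTRY=harbor.test.com   # the Harbor registry from this guide

# retag_cmds SERVICE... (hypothetical helper): print one "docker tag" and one
# "docker push" command per service, mapping v3.0 in the source registry to
# v2.0 in Harbor. Nothing is executed.
retag_cmds() {
    for svc in "$@"; do
        printf 'docker tag %s/ruoyi/ruoyi_%s:v3.0 %s/ruoyi/ruoyi_%s:v2.0\n' \
            "$SRC_REGISTRY" "$svc" "$DST_REGISTRY" "$svc"
        printf 'docker push %s/ruoyi/ruoyi_%s:v2.0\n' "$DST_REGISTRY" "$svc"
    done
}

retag_cmds auth file gateway system monitor job gen
```

Once the printed commands look right, piping the output to `sh` on the Harbor host runs them.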

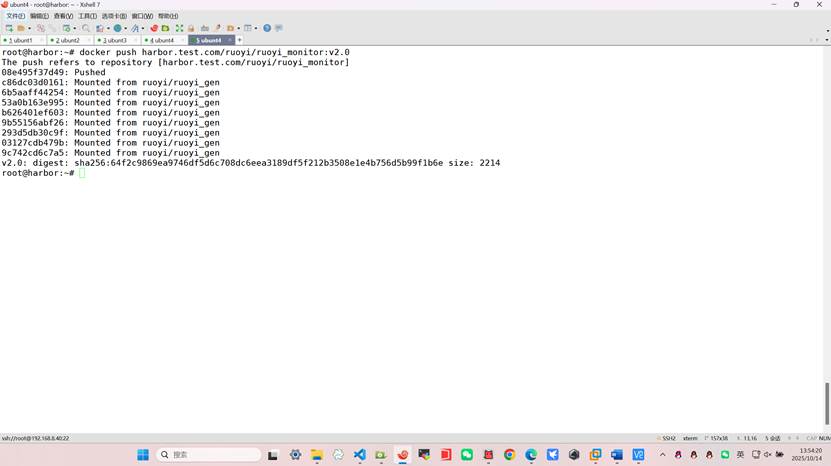

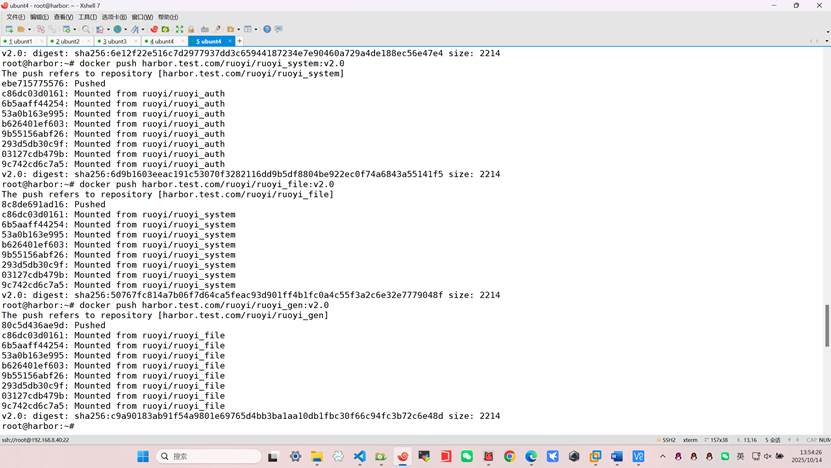

Push the images to Harbor:

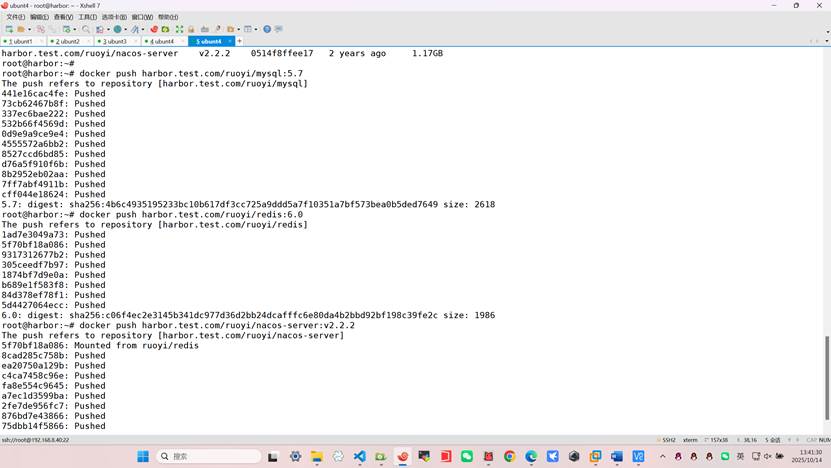

root@harbor:~# docker push harbor.test.com/ruoyi/mysql:5.7

root@harbor:~# docker push harbor.test.com/ruoyi/redis:6.0

root@harbor:~# docker push harbor.test.com/ruoyi/nacos-server:v2.2.2

root@harbor:~# docker push harbor.test.com/ruoyi/ruoyi_gateway:v2.0

root@harbor:~# docker push harbor.test.com/ruoyi/ruoyi_auth:v2.0

root@harbor:~# docker push harbor.test.com/ruoyi/ruoyi_system:v2.0

root@harbor:~# docker push harbor.test.com/ruoyi/ruoyi_file:v2.0

root@harbor:~# docker push harbor.test.com/ruoyi/ruoyi_gen:v2.0

root@harbor:~# docker push harbor.test.com/ruoyi/ruoyi_monitor:v2.0

root@harbor:~# docker push harbor.test.com/ruoyi/ruoyi_ui:v2.0

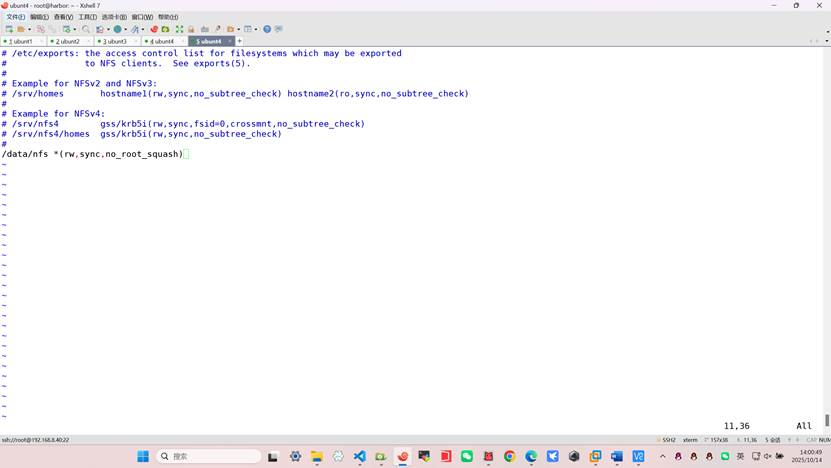

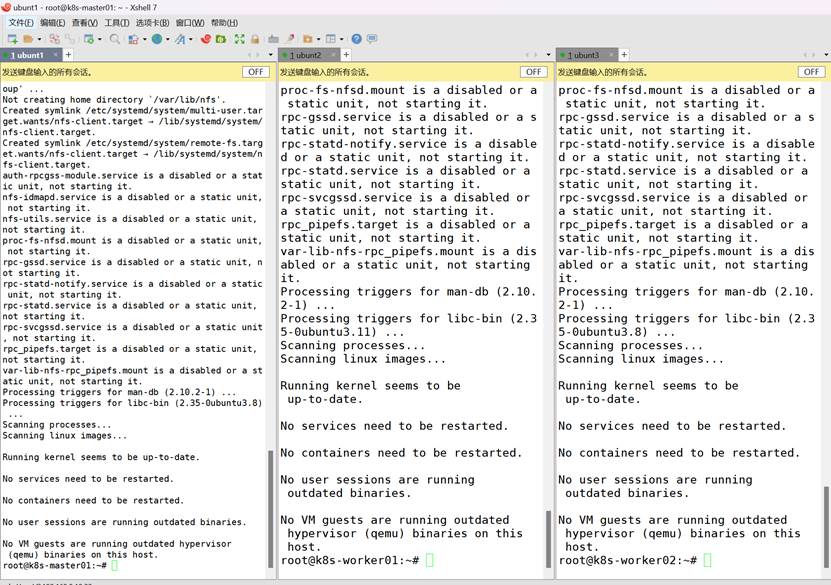

VII. Configure NFS Dynamic Provisioning

1. Install the NFS service on the NFS server (the 192.168.8.40 host)

root@harbor:~# apt -y install nfs-kernel-server

root@harbor:~# mkdir -p /data/nfs

root@harbor:~# chmod 777 /data/nfs/

root@harbor:~# vim /etc/exports

/data/nfs *(rw,sync,no_root_squash)

Restart the service:

root@harbor:~# systemctl restart nfs-kernel-server

root@harbor:~# systemctl enable nfs-kernel-server

客户端安装

apt install nfs-common

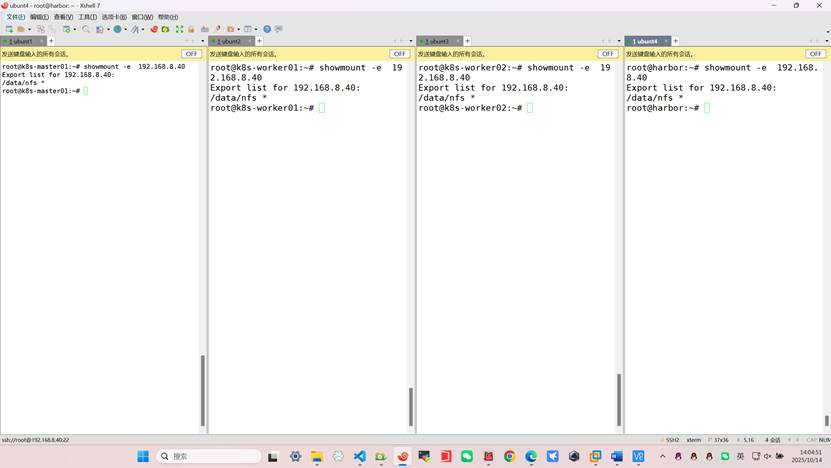

测试nfs可用性

showmount -e 192.168.8.40

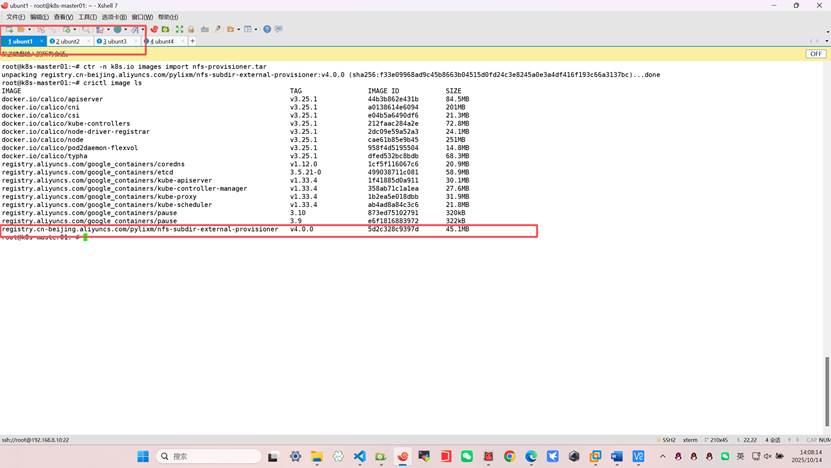

2. Copy the nfs-provisioner image to the three K8s nodes and import it

root@k8s-master01:~# ctr -n k8s.io images import nfs-provisioner.tar

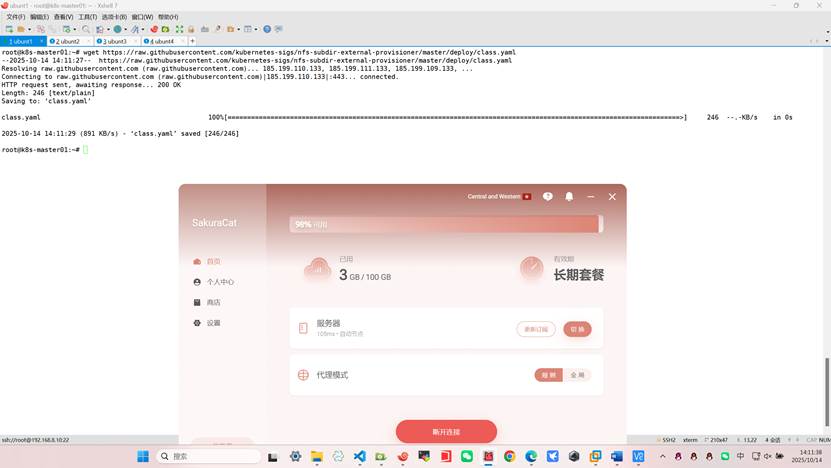

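- Download the manifest and create the StorageClass

A VPN is optional; without one, the file can be copied onto the host manually.

[root@master01 ~]# wget https://raw.githubusercontent.com/kubernetes-sigs/nfs-subdir-external-provisioner/master/deploy/class.yaml

[root@master01 ~]# mv class.yaml storageclass-nfs.yml

Alternatively, without a VPN, create the file directly with the following content:

root@k8s-master01:~# vim storageclass-nfs.yml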

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME'
parameters:
  archiveOnDelete: "false"

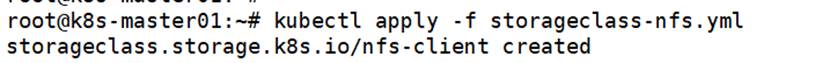

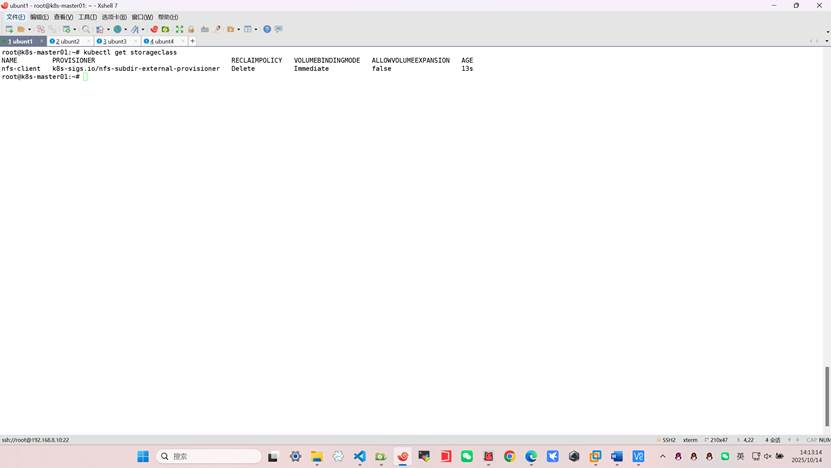

[root@master01 ~]# kubectl apply -f storageclass-nfs.yml

[root@master01 ~]# kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 39s

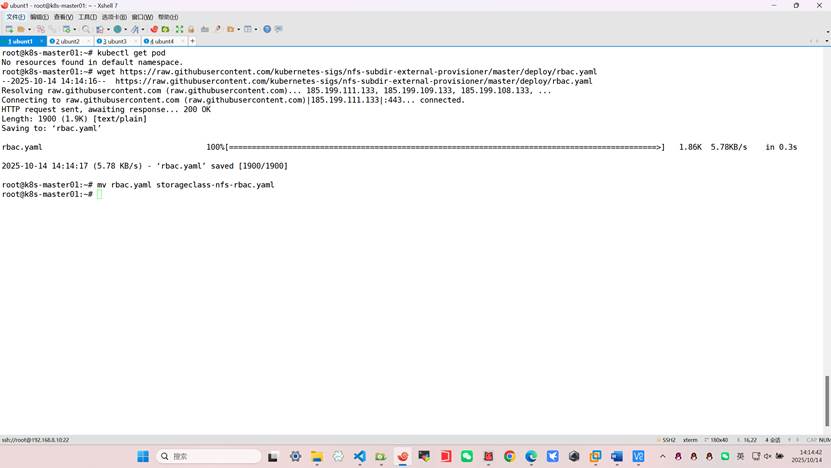

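- Download the manifest and create the RBAC objects (create the service account and grant it permissions)

[root@master01 ~]# wget https://raw.githubusercontent.com/kubernetes-sigs/nfs-subdir-external-provisioner/master/deploy/rbac.yaml

[root@master01 ~]# mv rbac.yaml storageclass-nfs-rbac.yaml

Alternatively, without a VPN, create the file directly with the following content:

root@k8s-master01:~# vim storageclass-nfs-rbac.yaml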

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

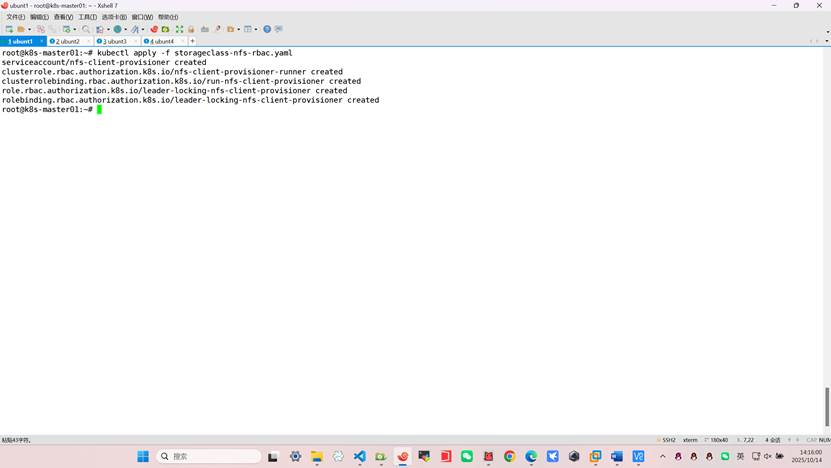

[root@master01 ~]# kubectl apply -f storageclass-nfs-rbac.yaml

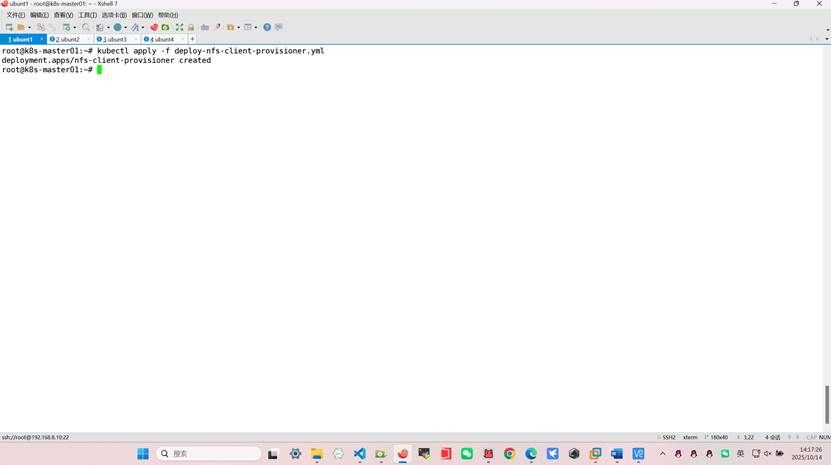

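- Create the Deployment for dynamic provisioning

The provisioner is the middleman that ties the service account to the shared storage.

A dedicated Deployment is needed to create PVs automatically for incoming PVCs.

[root@master01 ~]# vim deploy-nfs-client-provisioner.yml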

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccount: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-beijing.aliyuncs.com/pylixm/nfs-subdir-external-provisioner:v4.0.0
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.8.40
            - name: NFS_PATH
              value: /data/nfs
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.8.40
            path: /data/nfs

[root@master01 ~]# kubectl apply -f deploy-nfs-client-provisioner.yml

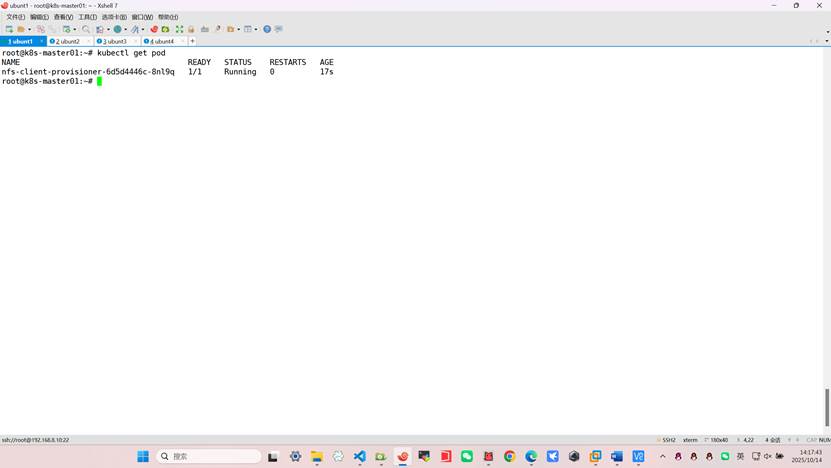

[root@master01 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-6d5d4446c-8nl9q 1/1 Running 0 17s

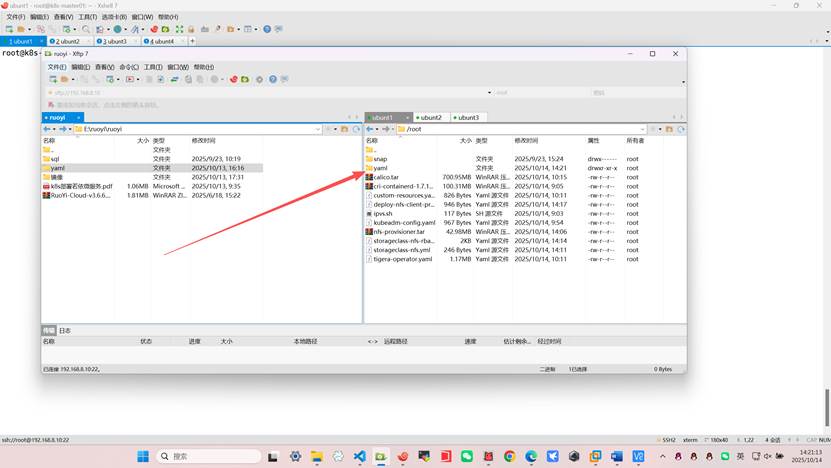

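VIII. Deploy the RuoYi Microservices

Copy all of the prepared YAML manifests to the master. If you do not have them, the YAML shown below can be copied as-is, but remember to change the image addresses to your own Harbor registry.

Step 1: Deploy the middleware (MySQL, Redis, Nacos)

Strongly recommended: a production environment should use highly available external middleware clusters. What follows is a quick single-instance deployment inside K8s, as an example.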

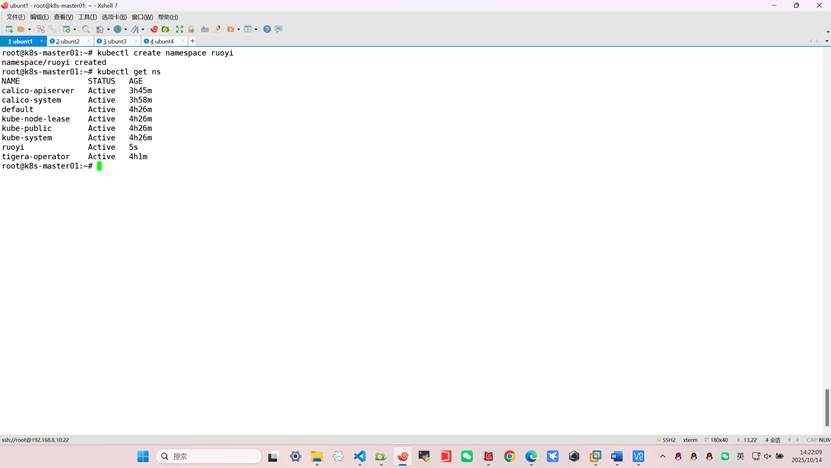

1. Create the namespace:

root@k8s-master01:~# kubectl create namespace ruoyi

namespace/ruoyi created

root@k8s-master01:~# kubectl get ns

NAME STATUS AGE

calico-apiserver Active 3h45m

calico-system Active 3h58m

default Active 4h26m

kube-node-lease Active 4h26m

kube-public Active 4h26m

kube-system Active 4h26m

ruoyi Active 5s

tigera-operator Active 4h1m

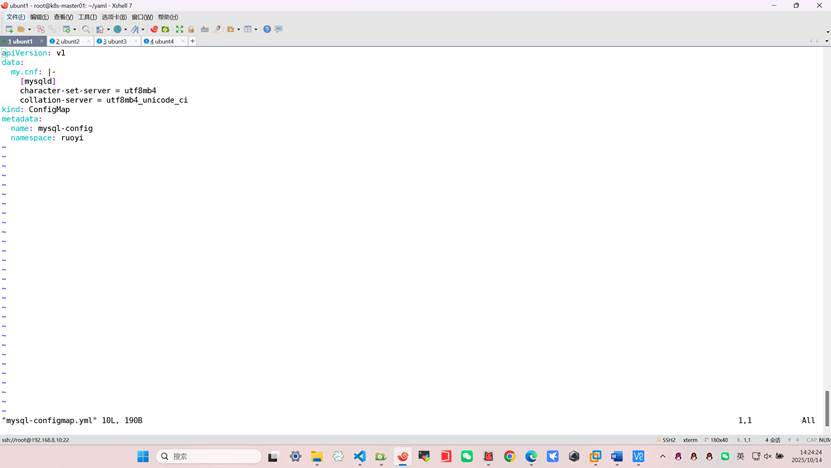

2. Deploy MySQL:

Create the ConfigMap file.

root@k8s-master01:~/yaml# vim mysql-configmap.yml

apiVersion: v1
data:
  my.cnf: |-
    [mysqld]
    character-set-server = utf8mb4
    collation-server = utf8mb4_unicode_ci
kind: ConfigMap
metadata:
  name: mysql-config
  namespace: ruoyi

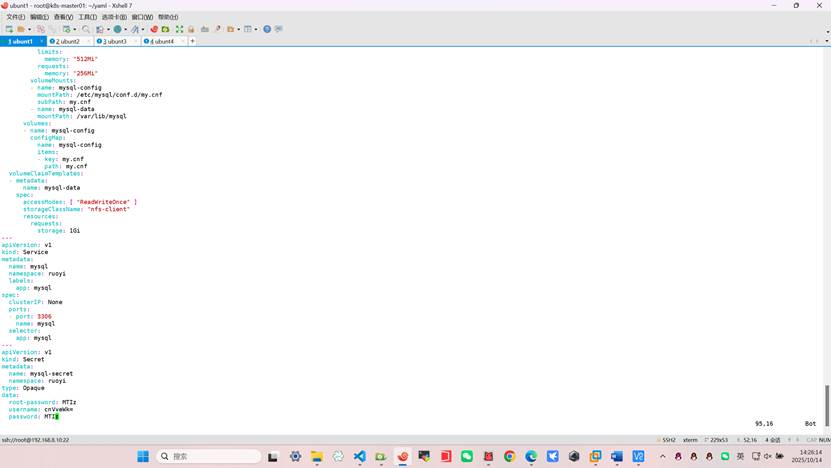

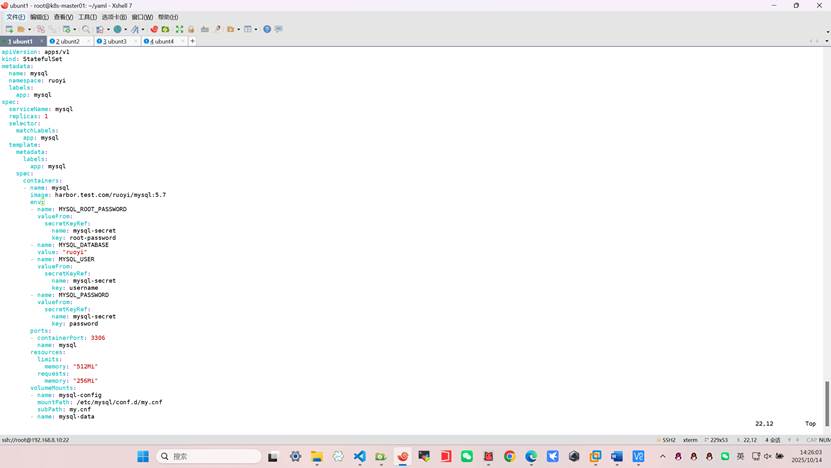

3. Create mysql_statfulset.yml and mysql_nodeport.yml, then apply them

root@k8s-master01:~/yaml# vim mysql_statfulset.yml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
  namespace: ruoyi
  labels:
    app: mysql
spec:
  serviceName: mysql
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: harbor.test.com/ruoyi/mysql:5.7
        env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: root-password
        - name: MYSQL_DATABASE
          value: "ruoyi"
        - name: MYSQL_USER
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: username
        - name: MYSQL_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: password
        ports:
        - containerPort: 3306
          name: mysql
        resources:
          limits:
            memory: "512Mi"
          requests:
            memory: "256Mi"
        volumeMounts:
        - name: mysql-config
          mountPath: /etc/mysql/conf.d/my.cnf
          subPath: my.cnf
        - name: mysql-data
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-config
        configMap:
          name: mysql-config
          items:
          - key: my.cnf
            path: my.cnf
  volumeClaimTemplates:
  - metadata:
      name: mysql-data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "nfs-client"
      resources:
        requests:
          storage: 1Gi
---
apiVersion: v1
kind: Service
metadata:
  name: mysql
  namespace: ruoyi
  labels:
    app: mysql
spec:
  clusterIP: None
  ports:
  - port: 3306
    name: mysql
  selector:
    app: mysql
---
apiVersion: v1
kind: Secret
metadata:
  name: mysql-secret
  namespace: ruoyi
type: Opaque
data:
  root-password: MTIz
  username: cnVveWk=
  password: MTIz
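The values under data in the Secret are base64-encoded, not encrypted. As a quick sketch, assuming hypothetical helpers named `b64enc` and `b64dec` and the GNU coreutils `base64` shipped with Ubuntu, the values above can be decoded, and replacement values encoded, like this:

```shell
#!/bin/sh
# Hypothetical helpers around base64 (GNU coreutils, as shipped with Ubuntu).
b64enc() { printf '%s' "$1" | base64; }            # plain text -> Secret value
b64dec() { printf '%s' "$1" | base64 -d; echo; }   # Secret value -> plain text

b64dec MTIz        # root-password and password decode to: 123
b64dec cnVveWk=    # username decodes to: ruoyi
b64enc 123         # prints: MTIz
```

Anyone who can read the Secret can recover the password this way, so a stronger password than the demo value is advisable outside a lab.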

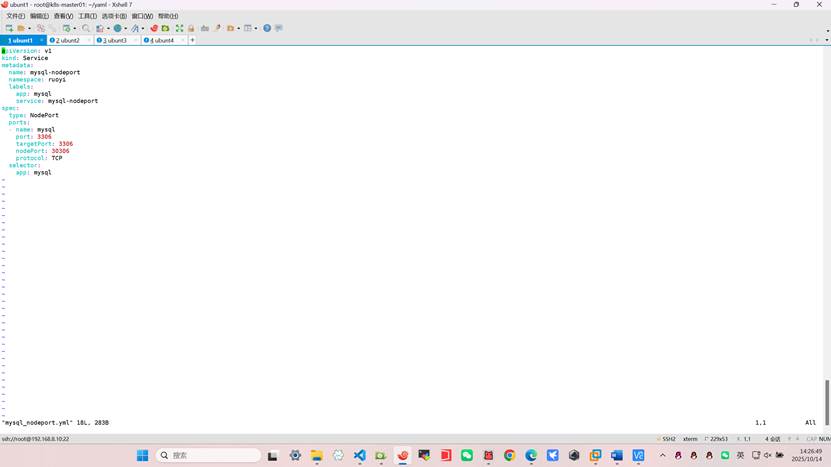

root@k8s-master01:~/yaml# vim mysql_nodeport.yml

apiVersion: v1
kind: Service
metadata:
  name: mysql-nodeport
  namespace: ruoyi
  labels:
    app: mysql
    service: mysql-nodeport
spec:
  type: NodePort
  ports:
  - name: mysql
    port: 3306
    targetPort: 3306
    nodePort: 30306
    protocol: TCP
  selector:
    app: mysql

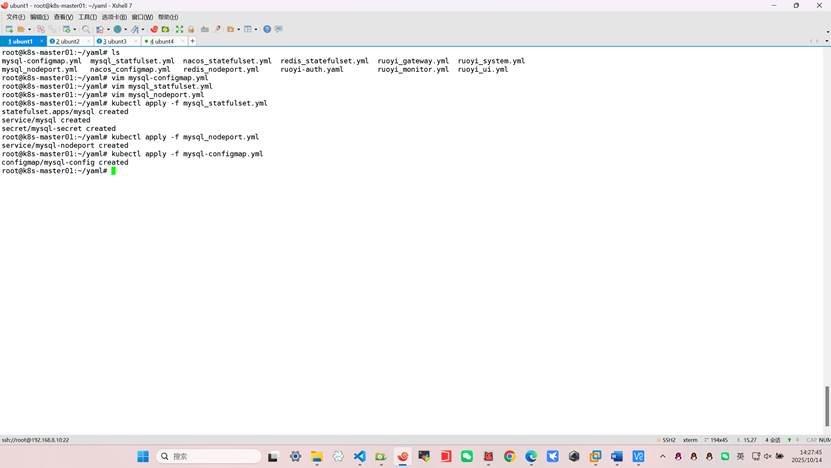

root@k8s-master01:~/yaml# kubectl apply -f mysql_statfulset.yml

statefulset.apps/mysql created

service/mysql created

secret/mysql-secret created

root@k8s-master01:~/yaml# kubectl apply -f mysql_nodeport.yml

service/mysql-nodeport created

root@k8s-master01:~/yaml# kubectl apply -f mysql-configmap.yml

configmap/mysql-config created

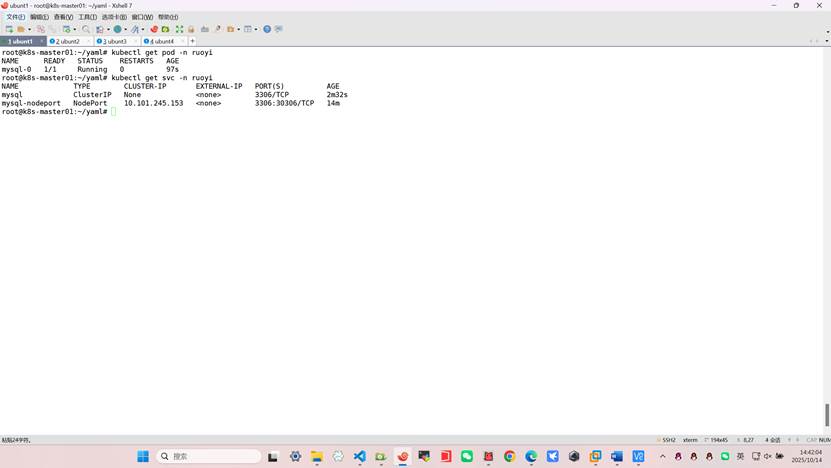

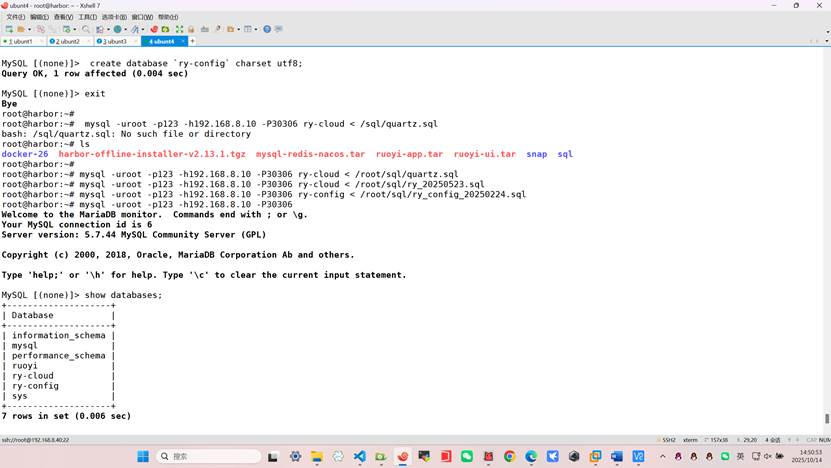

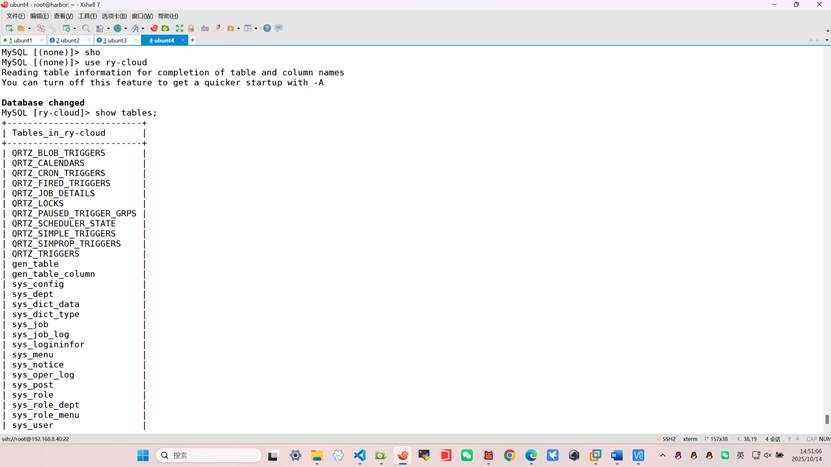

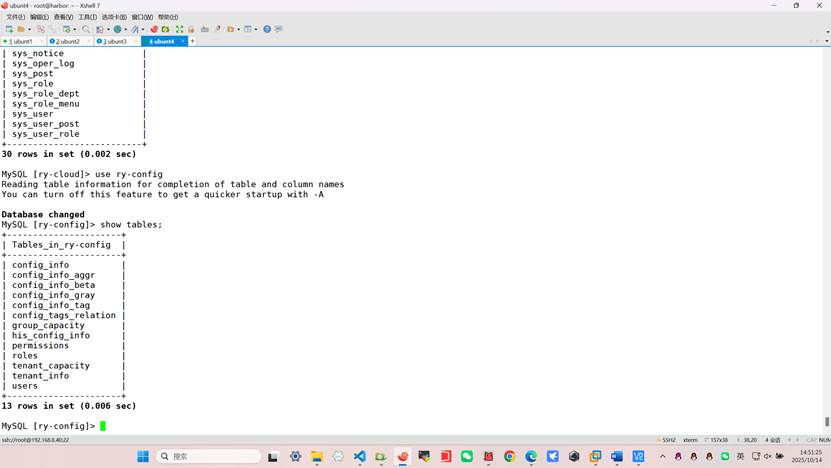

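4. Connect to MySQL remotely, create the ry-config and ry-cloud databases, and import the tables

Do this on the harbor host, which doubles as the shared-services machine.

Install a MySQL client:

root@harbor:~# apt -y install mariadb-client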

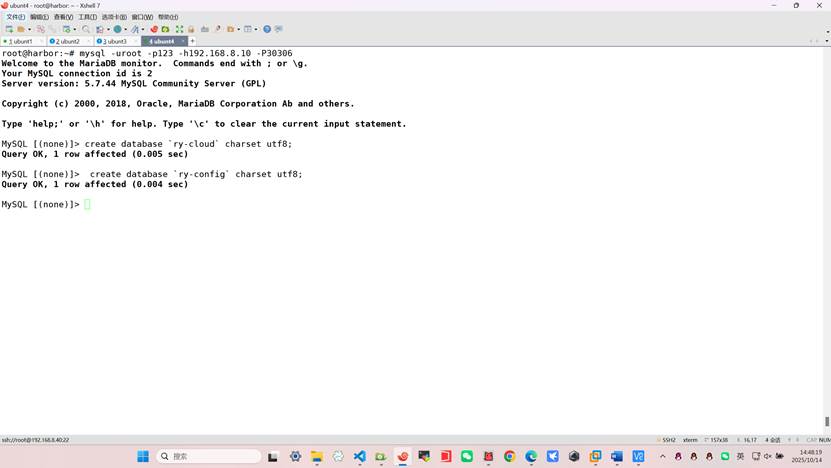

root@harbor:~# mysql -uroot -p123 -h192.168.8.10 -P30306

MySQL [(none)]> create database `ry-cloud` charset utf8;

MySQL [(none)]> create database `ry-config` charset utf8;

root@harbor:~# mysql -uroot -p123 -h192.168.8.10 -P30306 ry-cloud < /root/sql/quartz.sql

root@harbor:~# mysql -uroot -p123 -h192.168.8.10 -P30306 ry-cloud < /root/sql/ry_20250523.sql

root@harbor:~# mysql -uroot -p123 -h192.168.8.10 -P30306 ry-config < /root/sql/ry_config_20250224.sql

Check that the tables were imported:

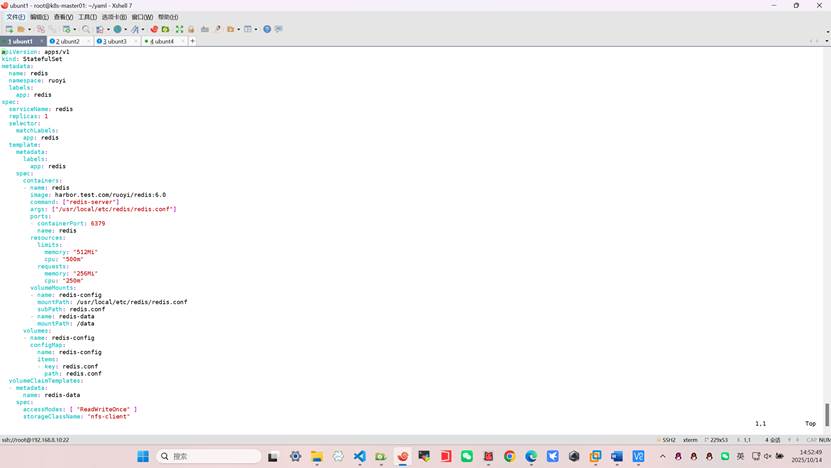

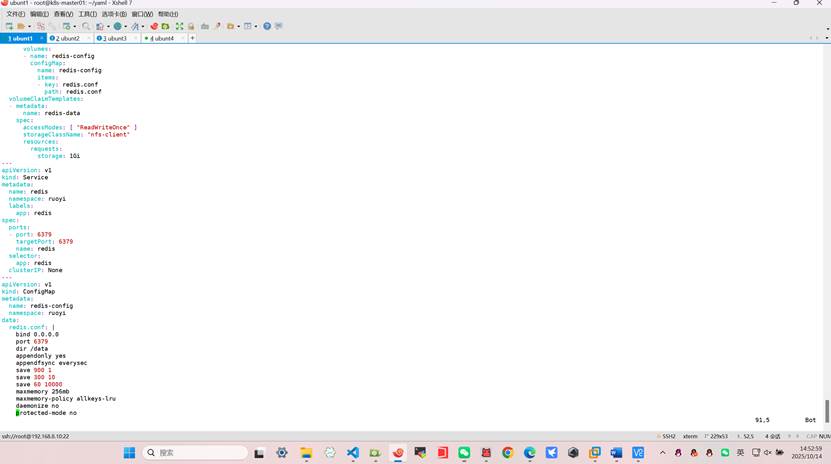

5. Deploy Redis:

Create redis_statefulset.yml and redis_nodeport.yml, then apply them

root@k8s-master01:~/yaml# vim redis_statefulset.yml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis
  namespace: ruoyi
  labels:
    app: redis
spec:
  serviceName: redis
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
      - name: redis
        image: harbor.test.com/ruoyi/redis:6.0
        command: ["redis-server"]
        args: ["/usr/local/etc/redis/redis.conf"]
        ports:
        - containerPort: 6379
          name: redis
        resources:
          limits:
            memory: "512Mi"
            cpu: "500m"
          requests:
            memory: "256Mi"
            cpu: "250m"
        volumeMounts:
        - name: redis-config
          mountPath: /usr/local/etc/redis/redis.conf
          subPath: redis.conf
        - name: redis-data
          mountPath: /data
      volumes:
      - name: redis-config
        configMap:
          name: redis-config
          items:
          - key: redis.conf
            path: redis.conf
  volumeClaimTemplates:
  - metadata:
      name: redis-data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "nfs-client"
      resources:
        requests:
          storage: 1Gi
---
apiVersion: v1
kind: Service
metadata:
  name: redis
  namespace: ruoyi
  labels:
    app: redis
spec:
  ports:
  - port: 6379
    targetPort: 6379
    name: redis
  selector:
    app: redis
  clusterIP: None
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: redis-config
  namespace: ruoyi
data:
  redis.conf: |
    bind 0.0.0.0
    port 6379
    dir /data
    appendonly yes
    appendfsync everysec
    save 900 1
    save 300 10
    save 60 10000
    maxmemory 256mb
    maxmemory-policy allkeys-lru
    daemonize no
    protected-mode no

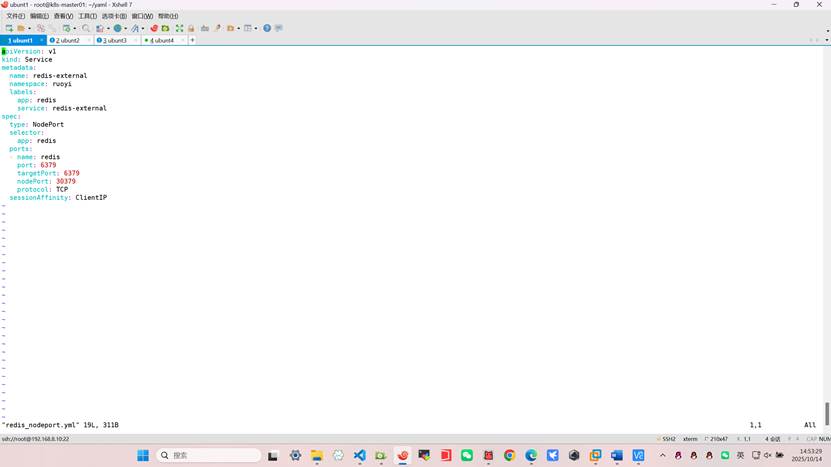

root@k8s-master01:~/yaml# vim redis_nodeport.yml

apiVersion: v1
kind: Service
metadata:
  name: redis-external
  namespace: ruoyi
  labels:
    app: redis
    service: redis-external
spec:
  type: NodePort
  selector:
    app: redis
  ports:
  - name: redis
    port: 6379
    targetPort: 6379
    nodePort: 30379
    protocol: TCP
  sessionAffinity: ClientIP

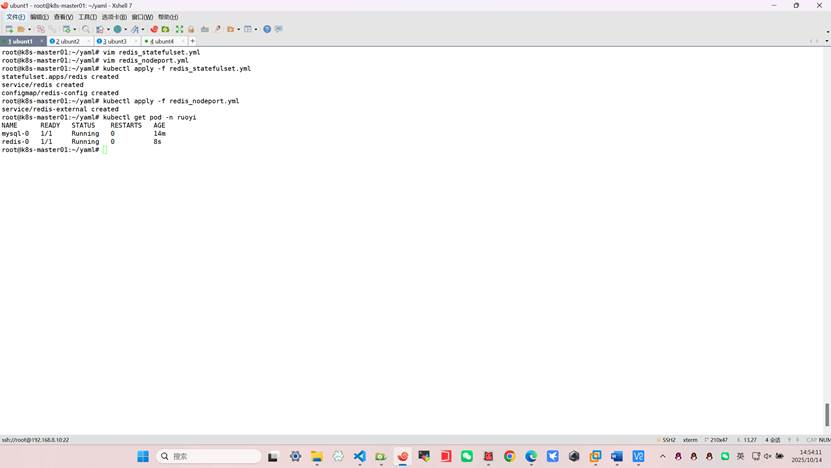

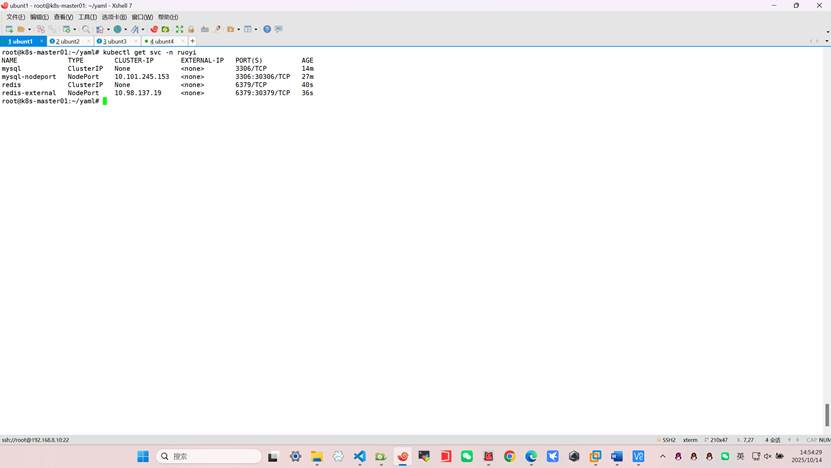

root@k8s-master01:~/yaml# kubectl apply -f redis_statefulset.yml

root@k8s-master01:~/yaml# kubectl apply -f redis_nodeport.yml

root@k8s-master01:~/yaml# kubectl get pod -n ruoyi

NAME READY STATUS RESTARTS AGE

mysql-0 1/1 Running 0 14m

redis-0 1/1 Running 0 8s

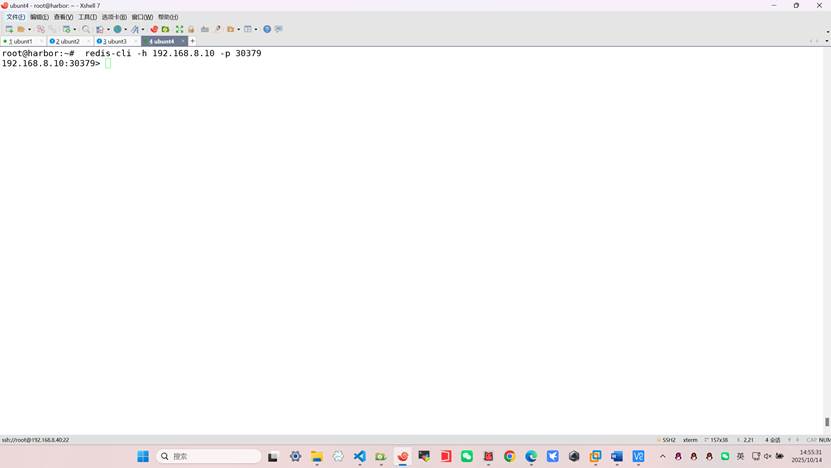

Verify remote access to Redis (from the harbor host):

root@harbor:~# apt -y install redis

root@harbor:~# redis-cli -h 192.168.8.10 -p 30379

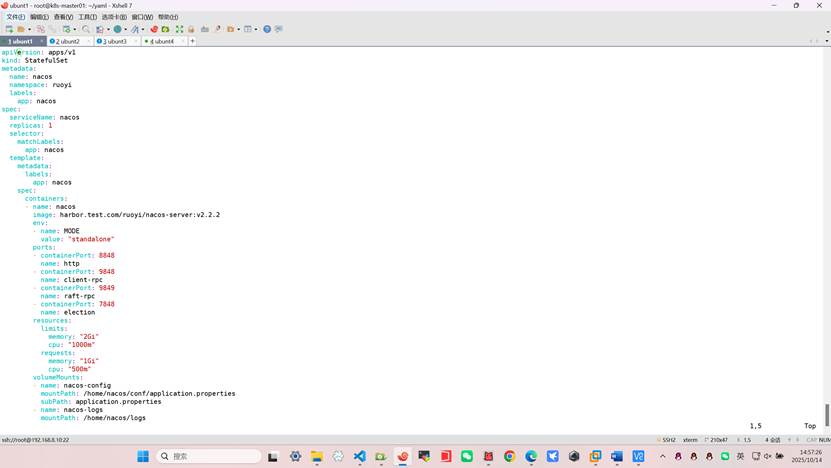

6. Deploy Nacos:

Create the nacos_statefulset.yml and nacos_configmap.yml files

root@k8s-master01:~/yaml# vim nacos_statefulset.yml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nacos
  namespace: ruoyi
  labels:
    app: nacos
spec:
  serviceName: nacos
  replicas: 1
  selector:
    matchLabels:
      app: nacos
  template:
    metadata:
      labels:
        app: nacos
    spec:
      containers:
      - name: nacos
        image: harbor.test.com/ruoyi/nacos-server:v2.2.2
        env:
        - name: MODE
          value: "standalone"
        ports:
        - containerPort: 8848
          name: http
        - containerPort: 9848
          name: client-rpc
        - containerPort: 9849
          name: raft-rpc
        - containerPort: 7848
          name: election
        resources:
          limits:
            memory: "2Gi"
            cpu: "1000m"
          requests:
            memory: "1Gi"
            cpu: "500m"
        volumeMounts:
        - name: nacos-config
          mountPath: /home/nacos/conf/application.properties
          subPath: application.properties
        - name: nacos-logs
          mountPath: /home/nacos/logs
        - name: nacos-data
          mountPath: /home/nacos/data
      volumes:
      - name: nacos-config
        configMap:
          name: ry-nacos
          items:
          - key: application.properties
            path: application.properties

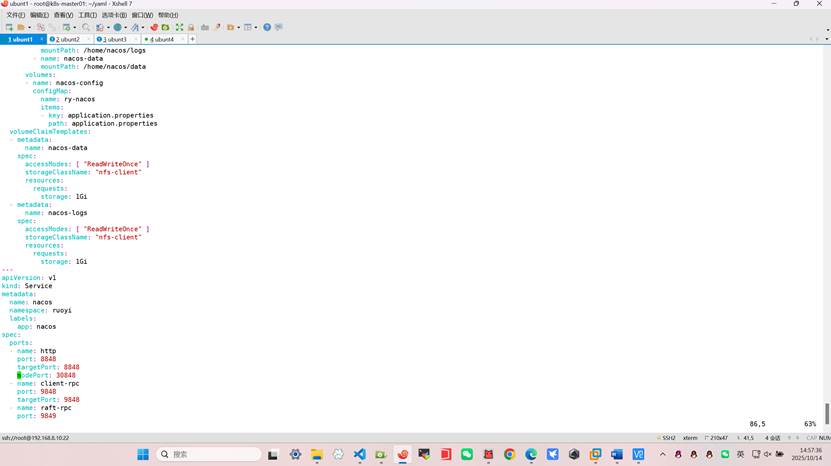

  volumeClaimTemplates:
  - metadata:
      name: nacos-data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "nfs-client"
      resources:
        requests:
          storage: 1Gi
  - metadata:
      name: nacos-logs
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "nfs-client"
      resources:
        requests:
          storage: 1Gi

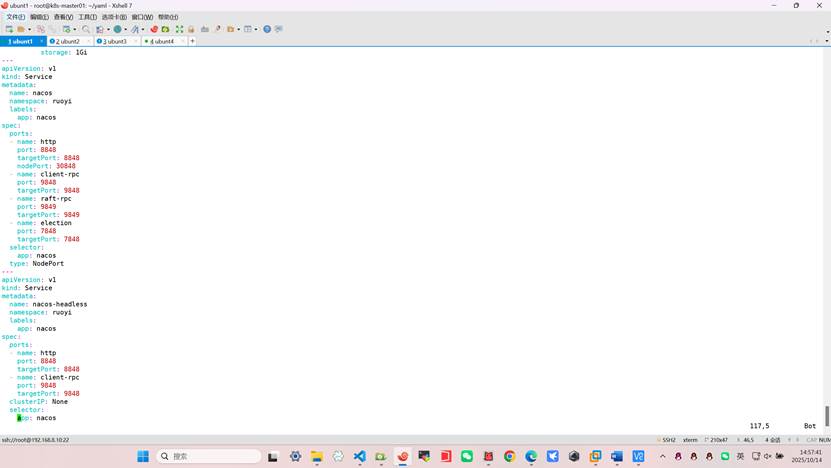

---

apiVersion: v1
kind: Service
metadata:
  name: nacos
  namespace: ruoyi
  labels:
    app: nacos
spec:
  ports:
  - name: http
    port: 8848
    targetPort: 8848
    nodePort: 30848
  - name: client-rpc
    port: 9848
    targetPort: 9848
  - name: raft-rpc
    port: 9849
    targetPort: 9849
  - name: election
    port: 7848
    targetPort: 7848
  selector:
    app: nacos
  type: NodePort
---
apiVersion: v1
kind: Service
metadata:
  name: nacos-headless
  namespace: ruoyi
  labels:
    app: nacos
spec:
  ports:
  - name: http
    port: 8848
    targetPort: 8848
  - name: client-rpc
    port: 9848
    targetPort: 9848
  clusterIP: None
  selector:
    app: nacos

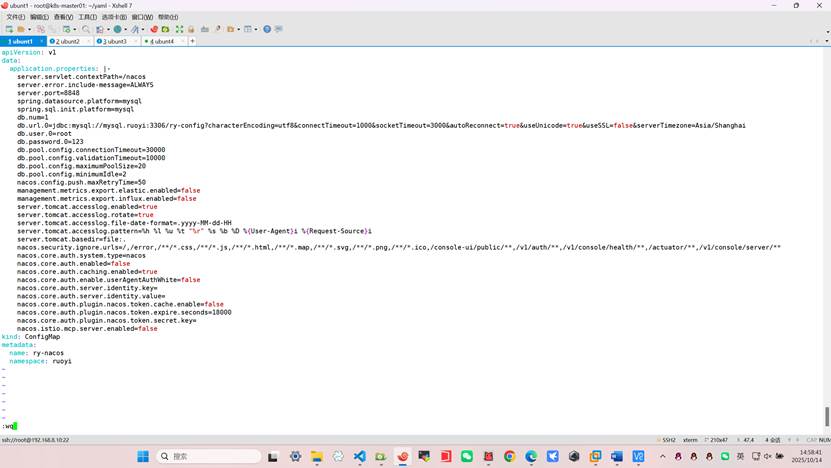

root@k8s-master01:~/yaml# vim nacos_configmap.yml

apiVersion: v1
data:
  application.properties: |-
    server.servlet.contextPath=/nacos
    server.error.include-message=ALWAYS
    server.port=8848
    spring.datasource.platform=mysql
    spring.sql.init.platform=mysql
    db.num=1
    db.url.0=jdbc:mysql://mysql.ruoyi:3306/ry-config?characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useUnicode=true&useSSL=false&serverTimezone=Asia/Shanghai
    db.user.0=root
    db.password.0=123
    db.pool.config.connectionTimeout=30000
    db.pool.config.validationTimeout=10000
    db.pool.config.maximumPoolSize=20
    db.pool.config.minimumIdle=2
    nacos.config.push.maxRetryTime=50
    management.metrics.export.elastic.enabled=false
    management.metrics.export.influx.enabled=false
    server.tomcat.accesslog.enabled=true
    server.tomcat.accesslog.rotate=true
    server.tomcat.accesslog.file-date-format=.yyyy-MM-dd-HH
    server.tomcat.accesslog.pattern=%h %l %u %t "%r" %s %b %D %{User-Agent}i %{Request-Source}i
    server.tomcat.basedir=file:.
    nacos.security.ignore.urls=/,/error,/**/*.css,/**/*.js,/**/*.html,/**/*.map,/**/*.svg,/**/*.png,/**/*.ico,/console-ui/public/**,/v1/auth/**,/v1/console/health/**,/actuator/**,/v1/console/server/**
    nacos.core.auth.system.type=nacos
    nacos.core.auth.enabled=false
    nacos.core.auth.caching.enabled=true
    nacos.core.auth.enable.userAgentAuthWhite=false
    nacos.core.auth.server.identity.key=
    nacos.core.auth.server.identity.value=
    nacos.core.auth.plugin.nacos.token.cache.enable=false
    nacos.core.auth.plugin.nacos.token.expire.seconds=18000
    nacos.core.auth.plugin.nacos.token.secret.key=
    nacos.istio.mcp.server.enabled=false
kind: ConfigMap
metadata:
  name: ry-nacos
  namespace: ruoyi

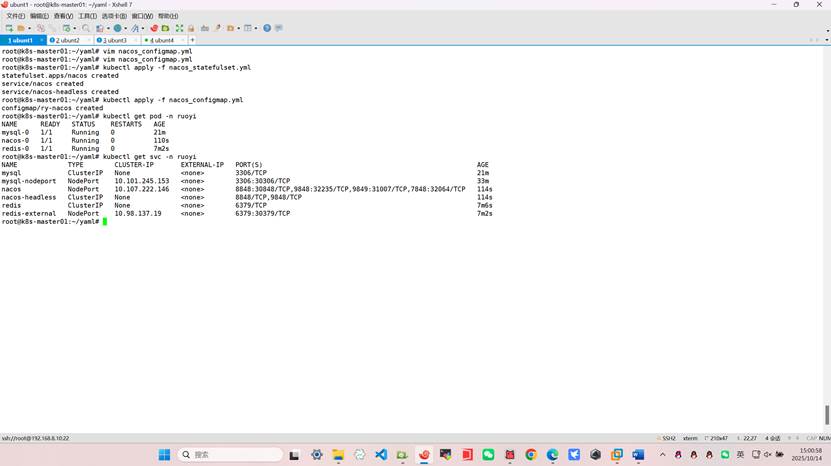

root@k8s-master01:~/yaml# kubectl apply -f nacos_statefulset.yml

root@k8s-master01:~/yaml# kubectl apply -f nacos_configmap.yml

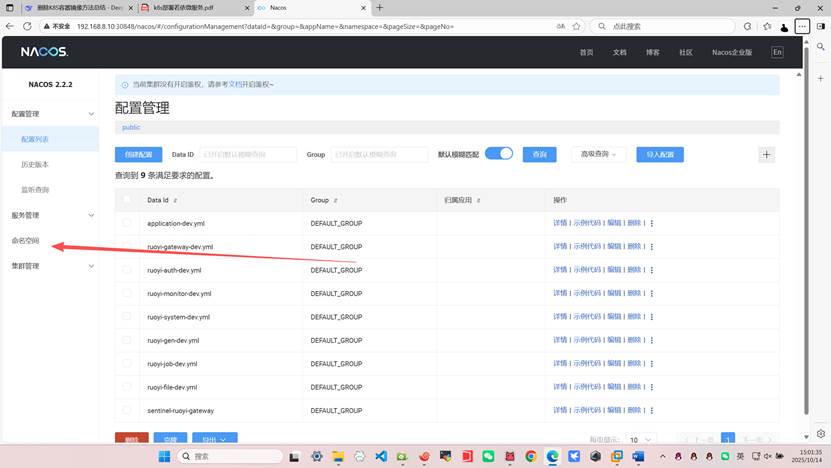

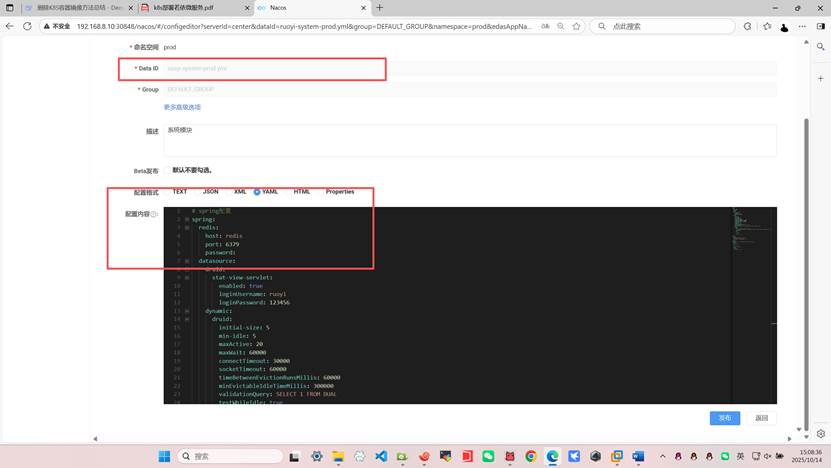

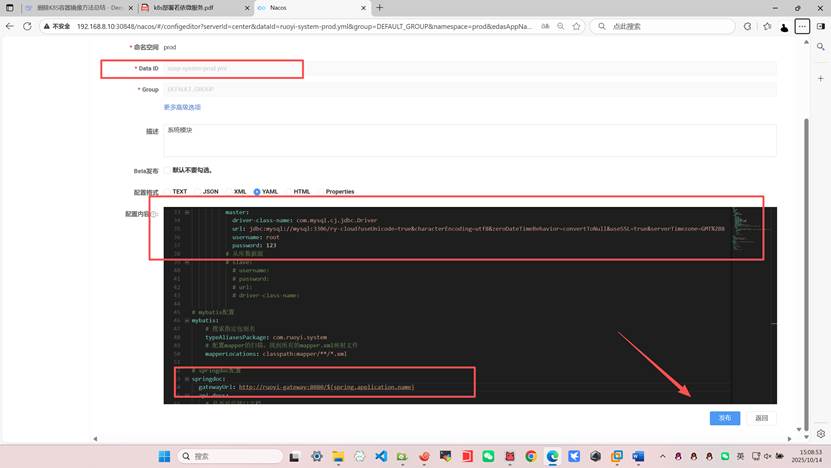

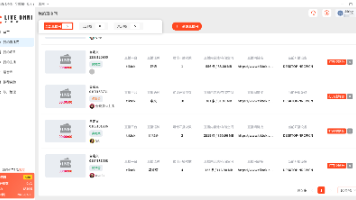

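7. Configure Nacos service discovery and service registration

Once Nacos has started, open its management console through the Service's NodePort or through a port-forward (http://<node-ip>:30848/nacos)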

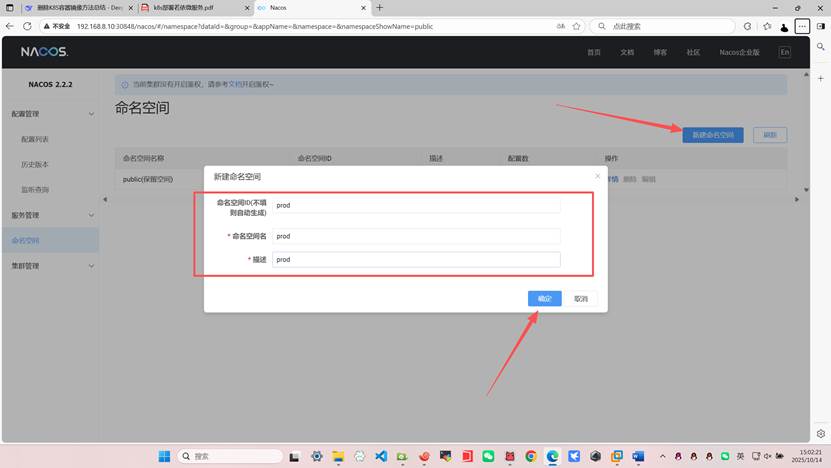
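Before opening the console it can help to wait until Nacos actually answers. The sketch below polls a readiness endpoint over HTTP; it assumes the path /v1/console/health/readiness under the /nacos context path (the application.properties above whitelists /v1/console/health/**) and node IP 192.168.8.10 from the host plan. The function name `wait_nacos` and the `CURL` override are hypothetical helpers, not part of the original setup.

```shell
# Hypothetical helper: poll the Nacos readiness endpoint until it returns 200.
# CURL may be overridden (for example for a dry run); default is curl.
CURL="${CURL:-curl}"

# wait_nacos BASE_URL [TRIES] [DELAY_SECONDS]
wait_nacos() {
  local base="$1" tries="${2:-30}" delay="${3:-5}"
  local i=1 code
  while [ "$i" -le "$tries" ]; do
    # -w '%{http_code}' prints only the HTTP status; the body is discarded.
    code="$("$CURL" -s -o /dev/null -w '%{http_code}' \
      "$base/v1/console/health/readiness")" || true
    if [ "$code" = "200" ]; then
      echo "nacos ready after $i attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "nacos not ready after $tries attempt(s)" >&2
  return 1
}
```

Through the NodePort this would be `wait_nacos http://192.168.8.10:30848/nacos`. For the port-forward route, run `kubectl -n ruoyi port-forward svc/nacos 8848:8848` in another terminal and point the function at `http://127.0.0.1:8848/nacos` instead.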

Create a new namespace under the Namespaces menu

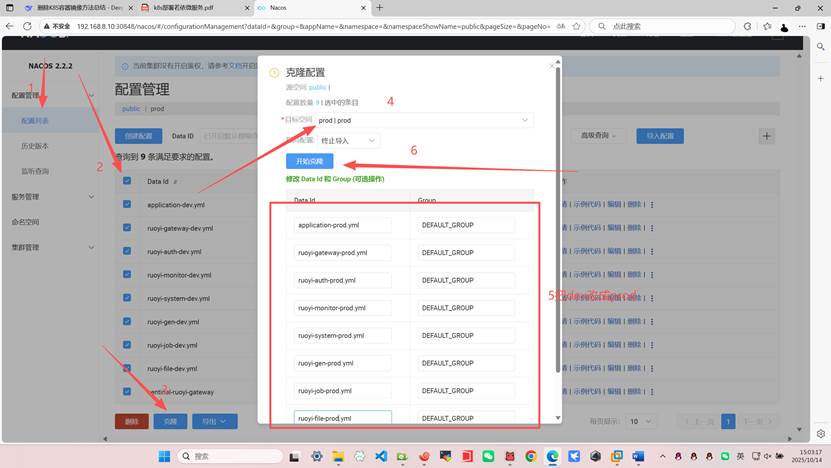

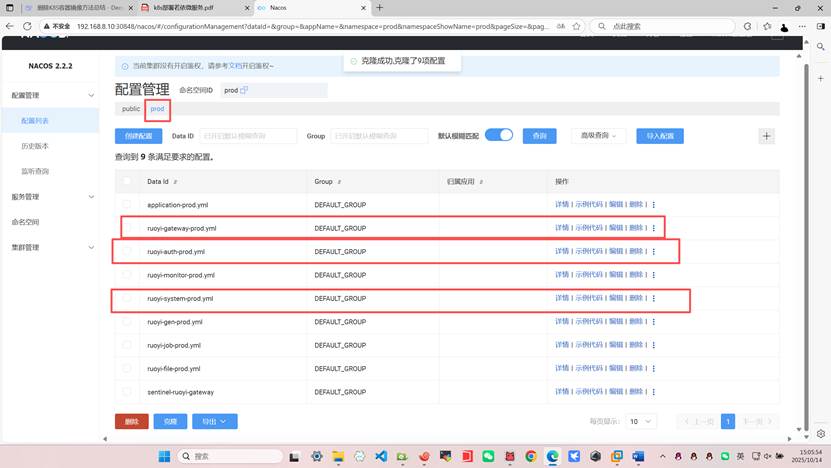

Configuration Management => Configuration List => select all configurations in public => Clone

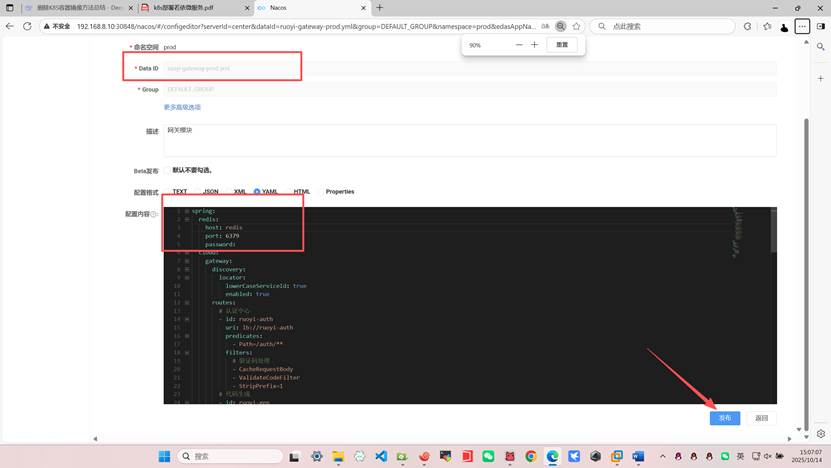

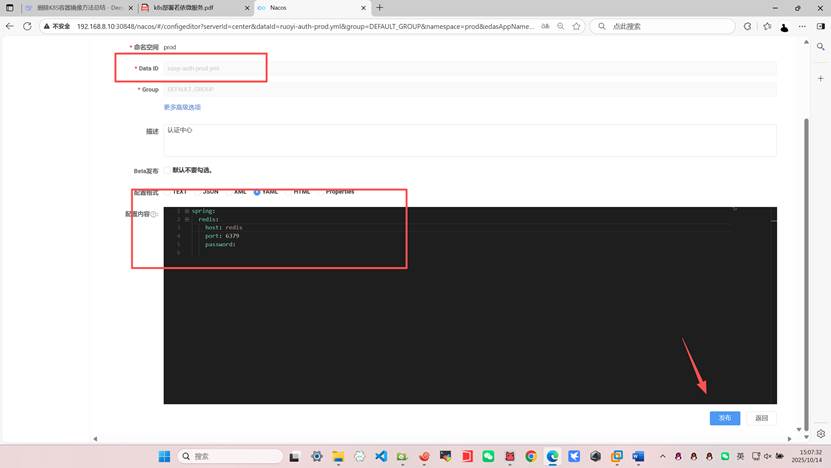

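Select prod|prod as the target namespace, and change dev to prod in all of the cloned configurations

Edit the following three

The gen, file, and similar microservices below may be left unmodified and undeployed, but the system module must be deployed

├── ruoyi-modules // business modules
│ └── ruoyi-system // system module [9201] required
│ └── ruoyi-gen // code generation [9202]
│ └── ruoyi-job // scheduled jobs [9203]
│ └── ruoyi-file // file service [9300]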
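After cloning, it is easy to miss a configuration or leave a dev name behind. The sketch below asks the Nacos open API (GET /v1/cs/configs with dataId, group and tenant) whether each expected configuration exists in the prod namespace. The Data IDs in the usage line, the DEFAULT_GROUP group and the namespace ID prod are assumptions based on the steps above; `check_nacos_configs` and the `CURL` override are hypothetical helper names.

```shell
# Hypothetical helper: check that each Data ID exists in a Nacos namespace.
# CURL may be overridden (for example for a dry run); default is curl.
CURL="${CURL:-curl}"

# check_nacos_configs BASE_URL NAMESPACE_ID DATA_ID...
check_nacos_configs() {
  local base="$1" tenant="$2"; shift 2
  local id code rc=0
  for id in "$@"; do
    # Any status other than 200 is reported as missing.
    code="$("$CURL" -s -o /dev/null -w '%{http_code}' \
      "$base/v1/cs/configs?dataId=$id&group=DEFAULT_GROUP&tenant=$tenant")" || true
    if [ "$code" = "200" ]; then
      echo "found   $id"
    else
      echo "missing $id (HTTP $code)"
      rc=1
    fi
  done
  return "$rc"
}
```

A possible call, with the Data IDs adjusted to whatever the console actually shows: `check_nacos_configs http://192.168.8.10:30848/nacos prod ruoyi-gateway-prod.yml ruoyi-auth-prod.yml ruoyi-system-prod.yml`.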

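8. Deploy the microservices

Step 2: deploy the RuoYi microservice applications. Create a StatefulSet for each business microservice, and turn each service's application.yml configuration file into a ConfigMap so that configuration is managed in one place. Example (system-configmap.yaml):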

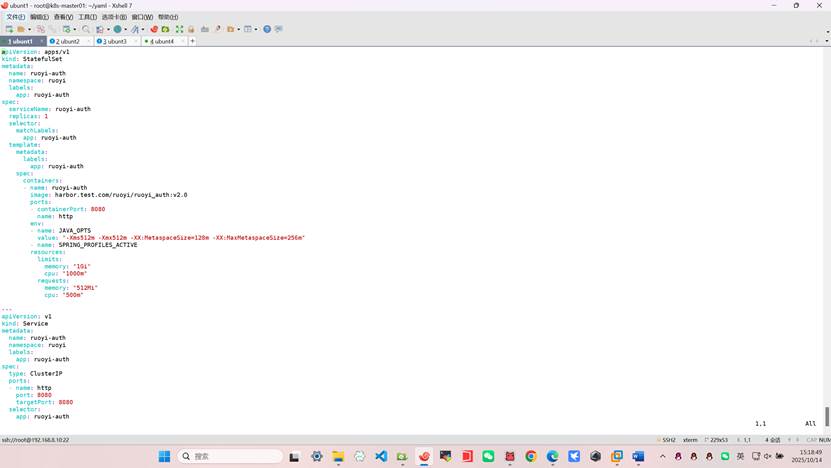
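One way to produce such a ConfigMap manifest without writing it by hand is to let kubectl render it from the file. This is a minimal sketch, assuming a local application.yml and the ruoyi namespace; the ConfigMap name system-config in the usage line, the function name `render_configmap` and the `KUBECTL` override are hypothetical and not taken from the original.

```shell
# Hypothetical helper: render a ConfigMap manifest from an application.yml.
# KUBECTL may be overridden (for example for a dry run); default is kubectl.
KUBECTL="${KUBECTL:-kubectl}"

# render_configmap NAME FILE [NAMESPACE]
render_configmap() {
  local name="$1" file="$2" ns="${3:-ruoyi}"
  # --dry-run=client prints the object instead of creating it in the cluster.
  "$KUBECTL" create configmap "$name" \
    --from-file=application.yml="$file" \
    --namespace "$ns" --dry-run=client -o yaml
}
```

For example, `render_configmap system-config ./application.yml > system-configmap.yaml` writes a manifest that can then be reviewed and applied with `kubectl apply -f system-configmap.yaml`.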

root@k8s-master01:~/yaml# vim ruoyi-auth.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: ruoyi-auth
  namespace: ruoyi
  labels:
    app: ruoyi-auth
spec:
  serviceName: ruoyi-auth
  replicas: 1
  selector:
    matchLabels:
      app: ruoyi-auth
  template:
    metadata:
      labels:
        app: ruoyi-auth
    spec:
      containers:
      - name: ruoyi-auth
        image: harbor.test.com/ruoyi/ruoyi_auth:v2.0
        ports:
        - containerPort: 8080
          name: http
        env:
        - name: JAVA_OPTS
          value: "-Xms512m -Xmx512m -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=256m"
        - name: SPRING_PROFILES_ACTIVE
        resources:
          limits:
            memory: "1Gi"
            cpu: "1000m"
          requests:
            memory: "512Mi"
            cpu: "500m"
---
apiVersion: v1
kind: Service
metadata:
  name: ruoyi-auth
  namespace: ruoyi
  labels:
    app: ruoyi-auth
spec:
  type: ClusterIP
  ports:
  - name: http
    port: 8080
    targetPort: 8080
  selector:
    app: ruoyi-auth

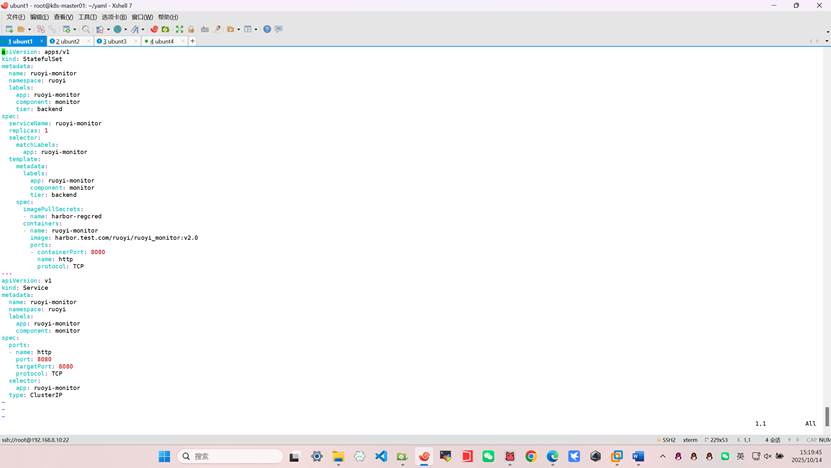

root@k8s-master01:~/yaml# vim ruoyi_monitor.yml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: ruoyi-monitor
  namespace: ruoyi
  labels:
    app: ruoyi-monitor
    component: monitor
    tier: backend
spec:
  serviceName: ruoyi-monitor
  replicas: 1
  selector:
    matchLabels:
      app: ruoyi-monitor
  template:
    metadata:
      labels:
        app: ruoyi-monitor
        component: monitor
        tier: backend
    spec:
      imagePullSecrets:
      - name: harbor-regcred
      containers:
      - name: ruoyi-monitor
        image: harbor.test.com/ruoyi/ruoyi_monitor:v2.0
        ports:
        - containerPort: 8080
          name: http
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: ruoyi-monitor
  namespace: ruoyi
  labels:
    app: ruoyi-monitor
    component: monitor
spec:
  ports:
  - name: http
    port: 8080
    targetPort: 8080
    protocol: TCP
  selector:
    app: ruoyi-monitor
  type: ClusterIP

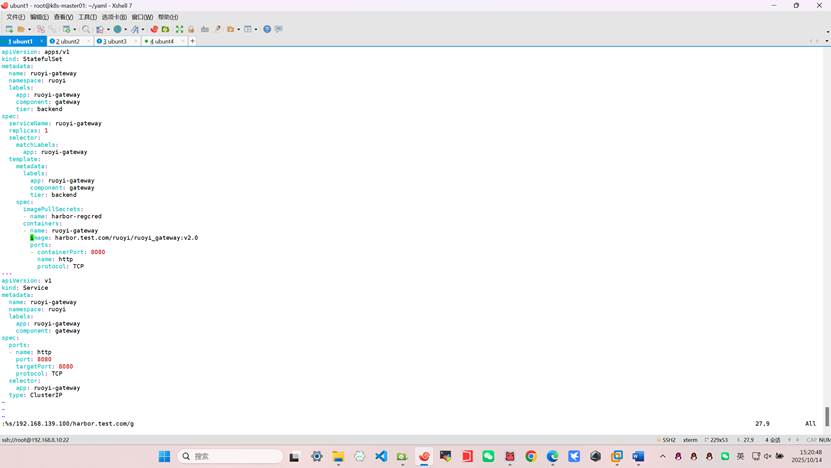

root@k8s-master01:~/yaml# vim ruoyi_gateway.yml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: ruoyi-gateway
  namespace: ruoyi
  labels:
    app: ruoyi-gateway
    component: gateway
    tier: backend
spec:
  serviceName: ruoyi-gateway
  replicas: 1
  selector:
    matchLabels:
      app: ruoyi-gateway
  template:
    metadata:
      labels:
        app: ruoyi-gateway
        component: gateway
        tier: backend
    spec:
      imagePullSecrets:
      - name: harbor-regcred
      containers:
      - name: ruoyi-gateway
        image: harbor.test.com/ruoyi/ruoyi_gateway:v2.0
        ports:
        - containerPort: 8080
          name: http
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: ruoyi-gateway
  namespace: ruoyi
  labels:
    app: ruoyi-gateway
    component: gateway
spec:
  ports:
  - name: http
    port: 8080
    targetPort: 8080
    protocol: TCP
  selector:
    app: ruoyi-gateway
  type: ClusterIP

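If a manifest still points at the old registry address, replace it with the Harbor domain name inside vim: :%s/192.168.139.100/harbor.test.com/g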

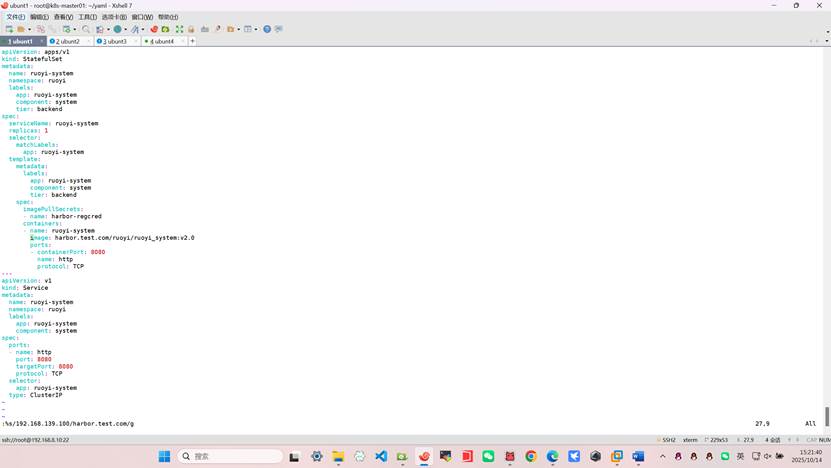

root@k8s-master01:~/yaml# vim ruoyi_system.yml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: ruoyi-system
  namespace: ruoyi
  labels:
    app: ruoyi-system
    component: system
    tier: backend
spec:
  serviceName: ruoyi-system
  replicas: 1
  selector:
    matchLabels:
      app: ruoyi-system
  template:
    metadata:
      labels:
        app: ruoyi-system
        component: system
        tier: backend
    spec:
      imagePullSecrets:
      - name: harbor-regcred
      containers:
      - name: ruoyi-system
        image: harbor.test.com/ruoyi/ruoyi_system:v2.0
        ports:
        - containerPort: 8080
          name: http
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: ruoyi-system
  namespace: ruoyi
  labels:
    app: ruoyi-system
    component: system
spec:
  ports:
  - name: http
    port: 8080
    targetPort: 8080
    protocol: TCP
  selector:
    app: ruoyi-system
  type: ClusterIP

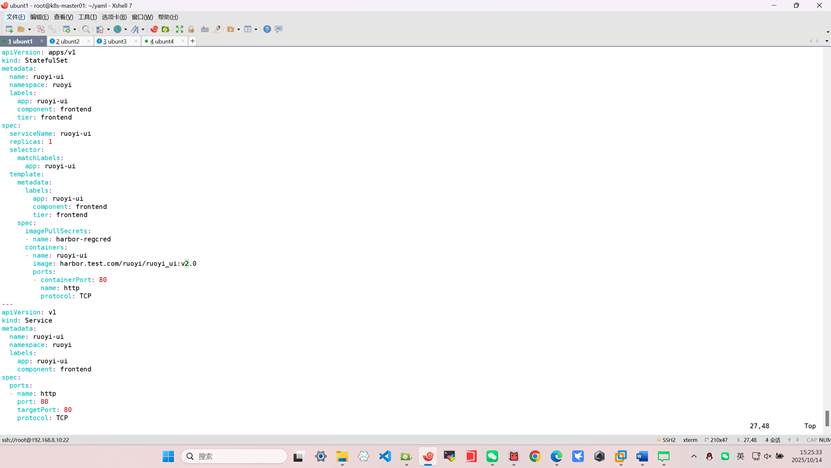

root@k8s-master01:~/yaml# vim ruoyi_ui.yml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: ruoyi-ui
  namespace: ruoyi
  labels:
    app: ruoyi-ui
    component: frontend
    tier: frontend
spec:
  serviceName: ruoyi-ui
  replicas: 1
  selector:
    matchLabels:
      app: ruoyi-ui
  template:
    metadata:
      labels:
        app: ruoyi-ui
        component: frontend
        tier: frontend
    spec:
      imagePullSecrets:
      - name: harbor-regcred
      containers:
      - name: ruoyi-ui
        image: harbor.test.com/ruoyi/ruoyi_ui:v2.0
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: ruoyi-ui
  namespace: ruoyi
  labels:
    app: ruoyi-ui
    component: frontend
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
  selector:
    app: ruoyi-ui
  type: ClusterIP

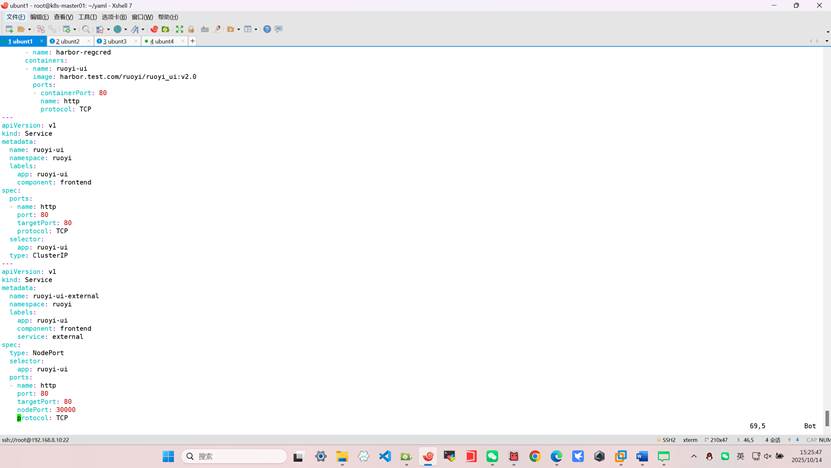

---

apiVersion: v1
kind: Service
metadata:
  name: ruoyi-ui-external
  namespace: ruoyi
  labels:
    app: ruoyi-ui
    component: frontend
    service: external
spec:
  type: NodePort
  selector:
    app: ruoyi-ui
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30000
    protocol: TCP

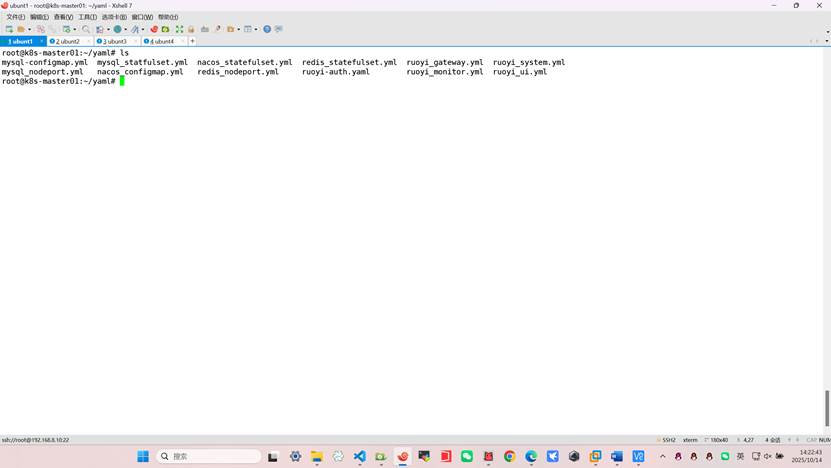

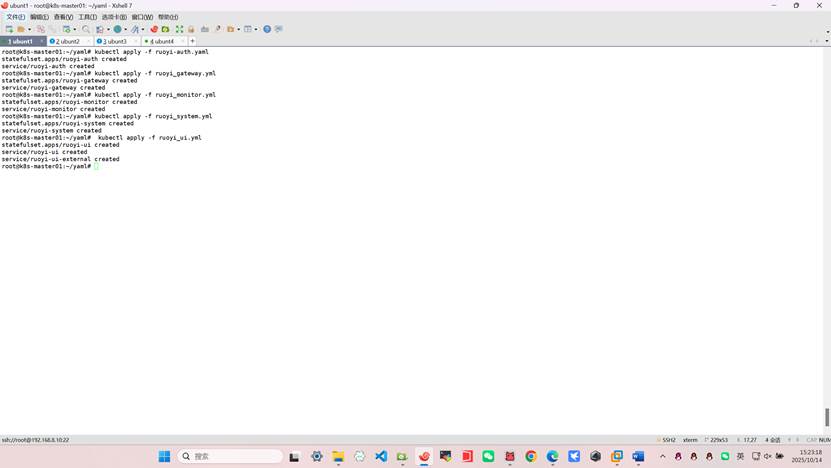

9. Apply the microservice manifests

root@k8s-master01:~/yaml# kubectl apply -f ruoyi-auth.yaml

root@k8s-master01:~/yaml# kubectl apply -f ruoyi_gateway.yml

root@k8s-master01:~/yaml# kubectl apply -f ruoyi_monitor.yml

root@k8s-master01:~/yaml# kubectl apply -f ruoyi_system.yml

root@k8s-master01:~/yaml# kubectl apply -f ruoyi_ui.yml

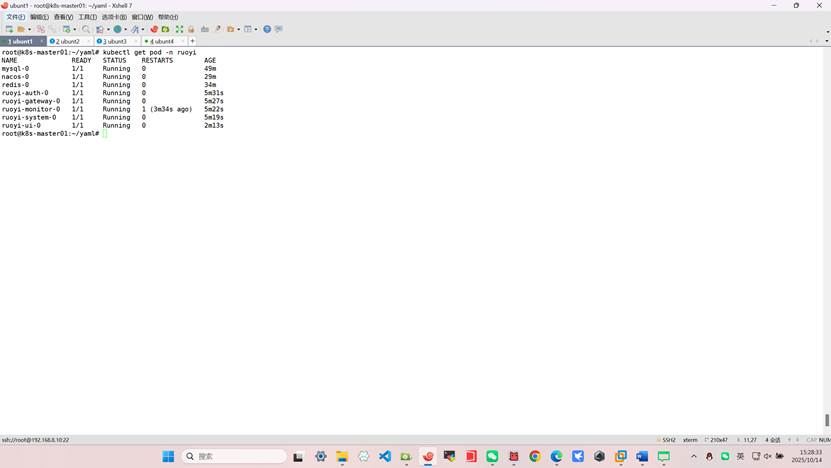

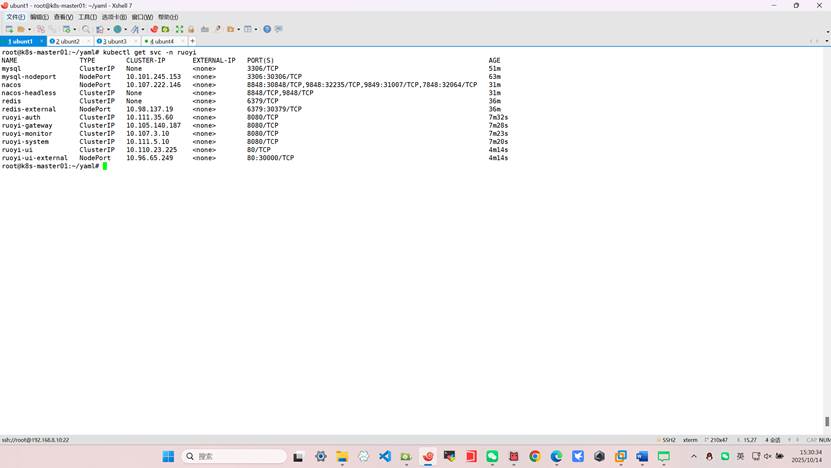
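`kubectl apply` returns as soon as the objects are accepted, not when the Pods are ready. The sketch below waits for each StatefulSet in turn with `kubectl rollout status`; the 300s timeout, the function name `wait_statefulsets` and the `KUBECTL` override are assumptions made for illustration.

```shell
# Hypothetical helper: wait until each StatefulSet has finished rolling out.
# KUBECTL may be overridden (for example for a dry run); default is kubectl.
KUBECTL="${KUBECTL:-kubectl}"
ROLLOUT_TIMEOUT="${ROLLOUT_TIMEOUT:-300s}"

# wait_statefulsets NAMESPACE NAME...
wait_statefulsets() {
  local ns="$1"; shift
  local name
  for name in "$@"; do
    # Stop at the first StatefulSet that does not become ready in time.
    "$KUBECTL" rollout status "statefulset/$name" \
      --namespace "$ns" --timeout="$ROLLOUT_TIMEOUT" || return 1
  done
}
```

For the five manifests applied above this would be `wait_statefulsets ruoyi ruoyi-auth ruoyi-gateway ruoyi-monitor ruoyi-system ruoyi-ui`.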

Check the startup status of the internal microservices

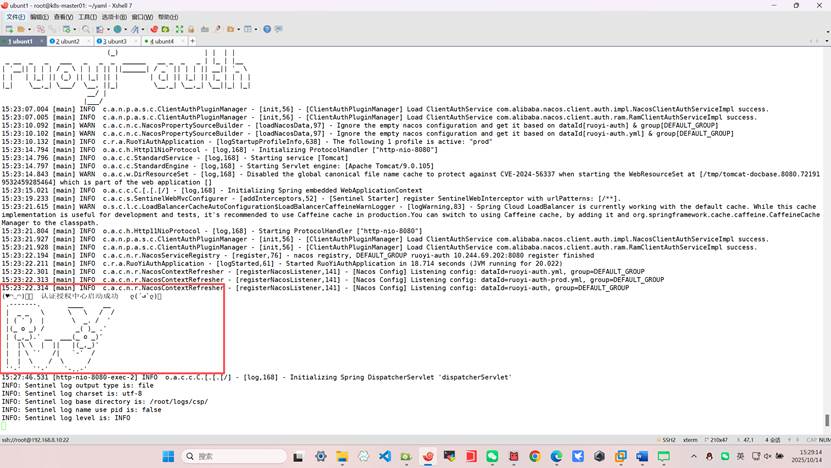

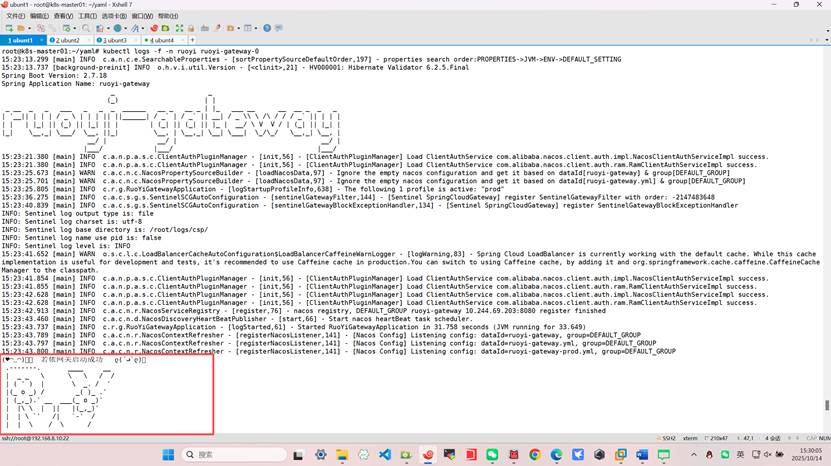

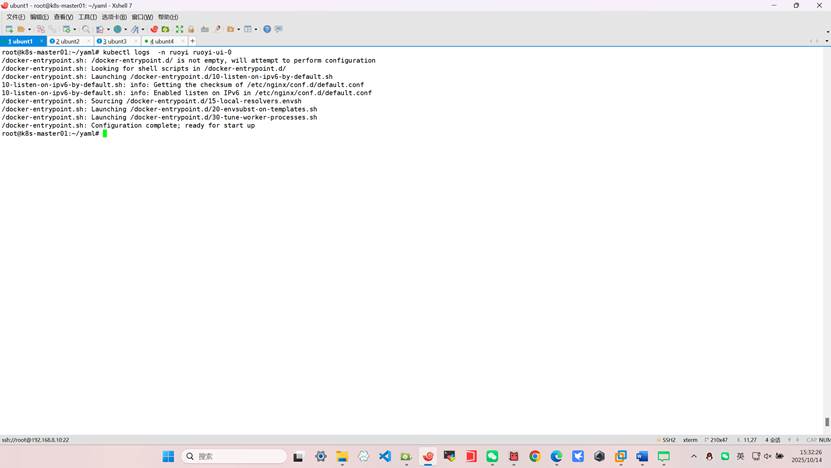

root@k8s-master01:~/yaml# kubectl logs -f -n ruoyi ruoyi-auth-0

root@k8s-master01:~/yaml# kubectl logs -f -n ruoyi ruoyi-gateway-0

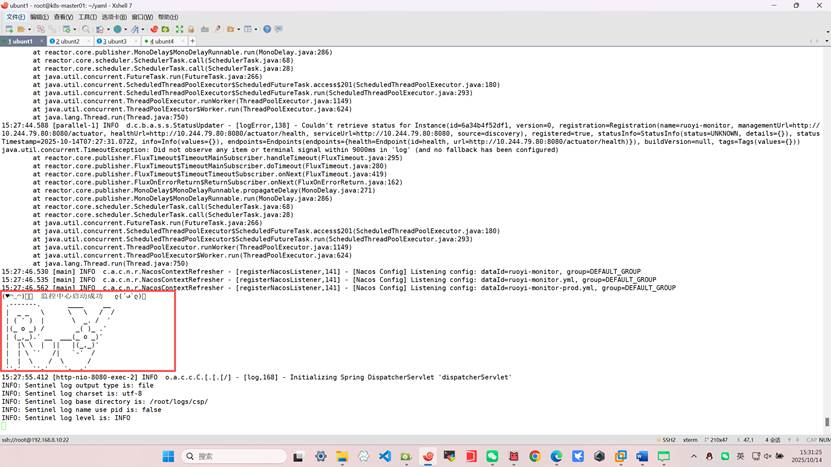

root@k8s-master01:~/yaml# kubectl logs -f -n ruoyi ruoyi-monitor-0

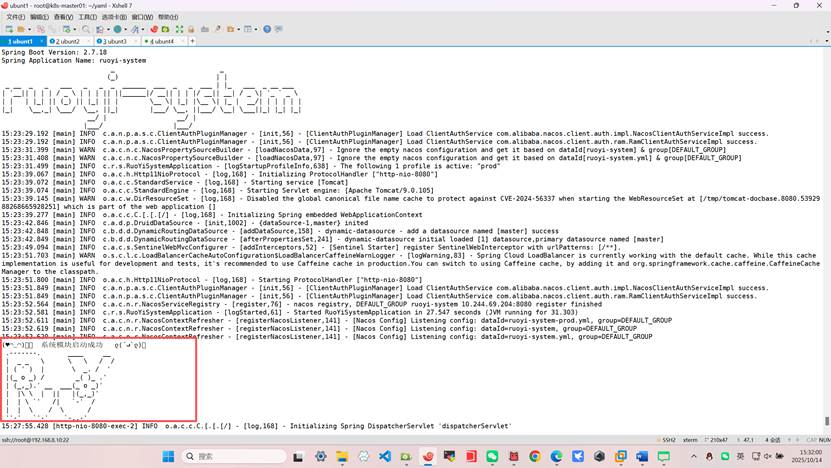

root@k8s-master01:~/yaml# kubectl logs -f -n ruoyi ruoyi-system-0

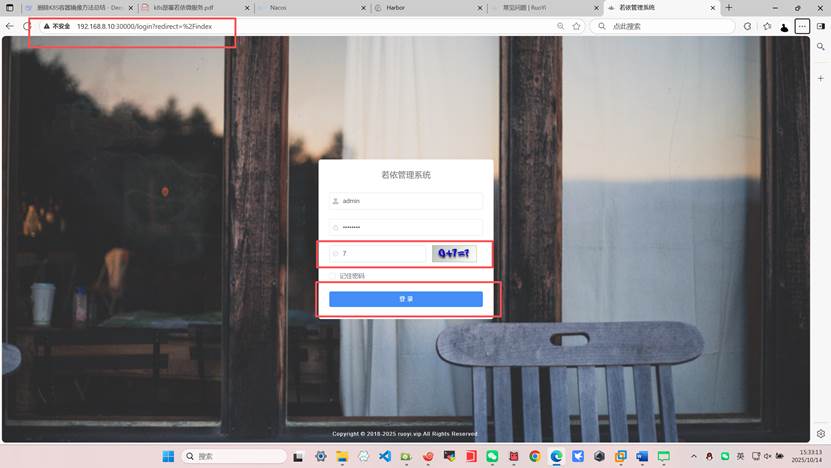

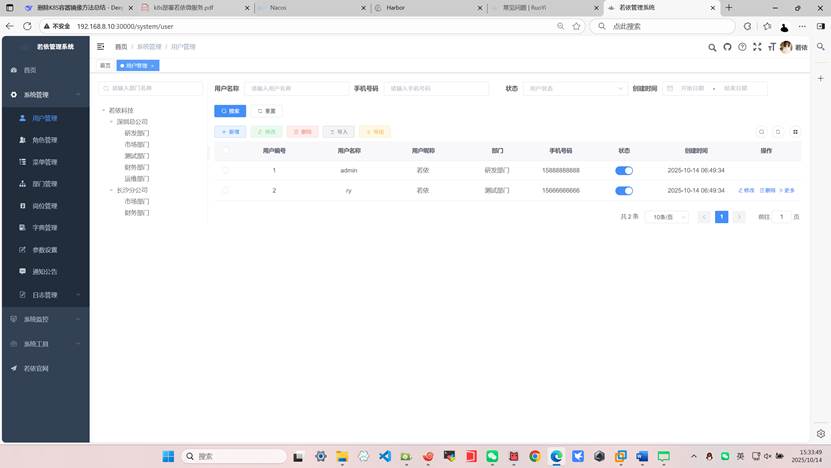

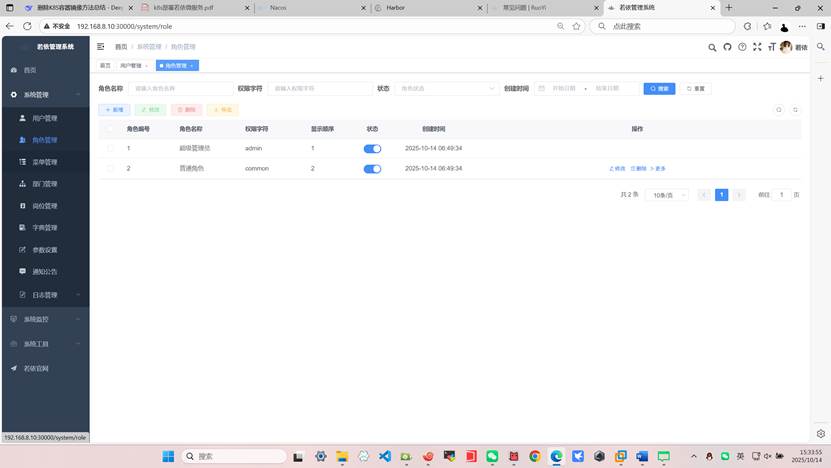

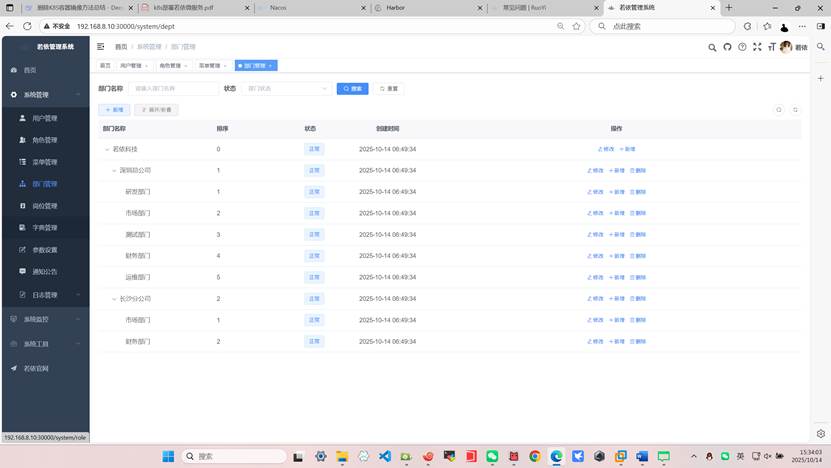

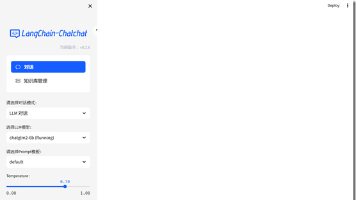
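Instead of following each log by hand, the logs can be scanned for the startup line. The sketch below assumes the services are Spring Boot applications that log a line of the form "Started <Application> in <n> seconds" once they are up; that pattern, the function name `report_started` and the `KUBECTL` override are assumptions made for illustration.

```shell
# Hypothetical helper: report which Pods have logged a Spring Boot startup line.
# KUBECTL may be overridden (for example for a dry run); default is kubectl.
KUBECTL="${KUBECTL:-kubectl}"

# report_started NAMESPACE POD...
report_started() {
  local ns="$1"; shift
  local pod logs rc=0
  for pod in "$@"; do
    # A Pod whose logs cannot be read is treated as not started.
    logs="$("$KUBECTL" logs --namespace "$ns" "$pod" 2>/dev/null)" || logs=""
    case "$logs" in
      *"Started "*" in "*" seconds"*) echo "$pod started" ;;
      *) echo "$pod not started yet"; rc=1 ;;
    esac
  done
  return "$rc"
}
```

With the Pod names used above: `report_started ruoyi ruoyi-auth-0 ruoyi-gateway-0 ruoyi-monitor-0 ruoyi-system-0`.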

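10. Microservice testing

Open the front end in a browser through the ruoyi-ui-external NodePort, for example http://192.168.8.10:30000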
