How to Run LightRAG with a Small Ollama Model

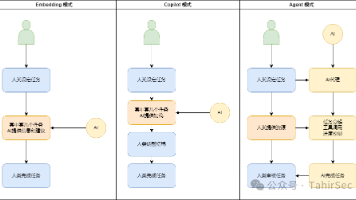

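LightRAG is an improved RAG approach that builds on GraphRAG. It uses dual-level retrieval to make the retrieved information more comprehensive while keeping computational overhead low. Its retrieval is more efficient, and compared with GraphRAG it strikes a better balance between quality and speed.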


Even with LightRAG, token consumption is still substantial, so this post tries running LightRAG locally on top of Ollama.
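To make the dual-level retrieval idea mentioned above more concrete, here is a toy sketch. It is not LightRAG's implementation: the tiny graph, the function names (low_level, high_level, dual_level) and the keyword-overlap matching are all assumptions made for illustration, standing in for the keyword extraction and vector search a real system would use. The low level looks up specific entities, the high level looks up relations by theme, and the two result sets are merged.

```python
# Toy illustration only: keyword overlap stands in for the vector search
# and LLM-based keyword extraction that a real system would use.
ENTITIES = {
    "Transformer": "neural network architecture based on self-attention",
    "BERT": "bidirectional encoder model for NLP",
    "ViT": "vision transformer that treats images as patch sequences",
}

RELATIONS = [
    # (source entity, target entity, theme keywords describing the relation)
    ("BERT", "Transformer", {"nlp", "applications"}),
    ("ViT", "Transformer", {"vision", "applications"}),
]


def low_level(local_keywords):
    """Entity-centric lookup: match specific entity names from the query."""
    wanted = {k.lower() for k in local_keywords}
    return sorted(name for name in ENTITIES if name.lower() in wanted)


def high_level(global_keywords):
    """Theme-centric lookup: match relations whose themes overlap the query."""
    wanted = {k.lower() for k in global_keywords}
    return [(s, t) for s, t, themes in RELATIONS if themes & wanted]


def dual_level(local_keywords, global_keywords):
    """Merge both views: matched entities plus entities touched by matched relations."""
    entities = set(low_level(local_keywords))
    relations = high_level(global_keywords)
    for source, target in relations:
        entities.update((source, target))
    return sorted(entities), relations


result = dual_level(["BERT"], ["vision"])
print(result)
```

A query that names BERT and asks about a vision theme pulls in the BERT entity directly and the ViT/Transformer pair through the matching relation, which is the kind of broader coverage the dual-level design aims for.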

1 Installing LightRAG

1.1 Python environment

Use conda to create an environment with Python 3.10.

conda create -n lightrag python=3.10

conda activate lightrag

1.2 LightRAG environment

Next, install LightRAG with the commands below.

If git clone fails, download the zip archive first, then upload it to the server and extract it.

git clone https://github.com/HKUDS/LightRAG.git

cd LightRAG

# Install LightRAG Core

pip install -e . -i https://pypi.tuna.tsinghua.edu.cn/simple

# create a Python virtual environment if necessary

# Install in editable mode with API support

pip install -e ".[api]" -i https://pypi.tuna.tsinghua.edu.cn/simple

1.3 Ollama environment

This assumes Ollama is already installed; for the installation steps, see

https://blog.csdn.net/liliang199/article/details/149267372

Given the limited compute of a CPU, download the LLM qwen3:4b and the embedding model bge-m3:latest.

ollama pull qwen3:4b

ollama pull bge-m3:latest

ollama list

# Upgrade the ollama package in the lightrag environment

pip install --upgrade ollama -i https://pypi.tuna.tsinghua.edu.cn/simple
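Before running the demo, it can help to confirm that both models were actually pulled. The sketch below is one possible way to do that from Python, using Ollama's /api/tags endpoint, which lists the locally available models. The helper names (missing_models, fetch_tags) and the sample payload are made up for illustration, and the host is assumed to be the default local address.

```python
import json
import urllib.request

OLLAMA_HOST = "http://localhost:11434"  # assumption: default local Ollama address
REQUIRED_MODELS = ["qwen3:4b", "bge-m3:latest"]


def missing_models(tags_payload, required):
    """Return the required model names that are absent from an /api/tags payload."""
    available = {m.get("name") for m in tags_payload.get("models", [])}
    return [name for name in required if name not in available]


def fetch_tags(host=OLLAMA_HOST, timeout=10):
    """Ask the local Ollama server which models it has pulled."""
    with urllib.request.urlopen(f"{host}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


# Offline illustration with a hand-written payload (not real server output).
sample = {"models": [{"name": "qwen3:4b"}]}
print(missing_models(sample, REQUIRED_MODELS))
```

With a running server, missing_models(fetch_tags(), REQUIRED_MODELS) should return an empty list once both ollama pull commands have completed.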

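2 Testing LightRAG

2.1 Configuration changes

LightRAG ships with several example programs, including one based on Ollama.

This post uses examples/lightrag_ollama_demo.py, whose LLM-related configuration needs to be modified.

The LLM-related configuration lives in initialize_rag(). The modified version is shown below and involves three changes:

1) llm_model_name=os.getenv("LLM_MODEL", "qwen3:4b"): the model is set to qwen3:4b

2) "timeout": int(os.getenv("TIMEOUT", "30000")): the demo runs on a local CPU, so a very large timeout is set

3) embed_model=os.getenv("EMBEDDING_MODEL", "bge-m3:latest"): the embedding model is set to bge-m3:latest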

async def initialize_rag():
    rag = LightRAG(
        working_dir=WORKING_DIR,
        llm_model_func=ollama_model_complete,
        llm_model_name=os.getenv("LLM_MODEL", "qwen3:4b"),  # qwen2.5-coder:7b
        summary_max_tokens=8192,
        llm_model_kwargs={
            "host": os.getenv("LLM_BINDING_HOST", "http://localhost:11434"),
            "options": {"num_ctx": 8192},
            "timeout": int(os.getenv("TIMEOUT", "30000")),
        },
        embedding_func=EmbeddingFunc(
            embedding_dim=int(os.getenv("EMBEDDING_DIM", "1024")),
            max_token_size=int(os.getenv("MAX_EMBED_TOKENS", "8192")),
            func=lambda texts: ollama_embed(
                texts,
                embed_model=os.getenv("EMBEDDING_MODEL", "bge-m3:latest"),
                host=os.getenv("EMBEDDING_BINDING_HOST", "http://localhost:11434"),
            ),
        ),
    )

    await rag.initialize_storages()
    await initialize_pipeline_status()

    return rag
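Every setting in initialize_rag() follows the same pattern: an environment variable wins if it is set, otherwise the hard-coded default is used. The sketch below mirrors those lookups in a standalone helper so the effect of an override is easy to see. The function name resolve_demo_settings is hypothetical, and the override values (qwen3:8b, 600) are example values only.

```python
import os


def resolve_demo_settings(env=None):
    """Mirror the os.getenv(...) lookups used in initialize_rag()."""
    env = os.environ if env is None else env
    return {
        "llm_model_name": env.get("LLM_MODEL", "qwen3:4b"),
        "host": env.get("LLM_BINDING_HOST", "http://localhost:11434"),
        "timeout": int(env.get("TIMEOUT", "30000")),
        "embed_model": env.get("EMBEDDING_MODEL", "bge-m3:latest"),
        "embedding_dim": int(env.get("EMBEDDING_DIM", "1024")),
    }


# With no variables set, the defaults edited into the demo apply.
defaults = resolve_demo_settings(env={})
# Example override values; any model already pulled into Ollama would do.
overridden = resolve_demo_settings(env={"LLM_MODEL": "qwen3:8b", "TIMEOUT": "600"})

print(defaults["llm_model_name"], defaults["timeout"])
print(overridden["llm_model_name"], overridden["timeout"])
```

This means the model or timeout can be changed per run by exporting LLM_MODEL or TIMEOUT in the shell, without editing the demo file again.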

2.2 Preparing the input

The default input is examples/book.txt, with the following content.

Introduction to Transformer Neural Networks

Transformer neural networks represent a revolutionary architecture in the field of deep learning, particularly for natural language processing (NLP) tasks. Introduced in the seminal paper "Attention is All You Need" by Vaswani et al. in 2017, transformers have since become the backbone of numerous state-of-the-art models due to their ability to handle long-range dependencies and parallelize training processes. Unlike traditional recurrent neural networks (RNNs) and convolutional neural networks (CNNs), transformers rely entirely on a mechanism called self-attention to process input data. This mechanism allows transformers to weigh the importance of different words in a sentence or elements in a sequence simultaneously, thus capturing context more effectively and efficiently.

Architecture of Transformers

The core component of the transformer architecture is the self-attention mechanism, which enables the model to focus on different parts of the input sequence when producing an output. The transformer consists of an encoder and a decoder, each made up of a stack of identical layers. The encoder processes the input sequence and generates a set of attention-weighted vectors, while the decoder uses these vectors, along with the previously generated outputs, to produce the final sequence. Each layer in the encoder and decoder contains sub-layers, including multi-head self-attention mechanisms and position-wise fully connected feed-forward networks, followed by layer normalization and residual connections. This design allows the transformer to process entire sequences at once rather than step-by-step, making it highly parallelizable and efficient for training on large datasets.

Applications of Transformer Neural Networks

Transformers have revolutionized various applications across different domains. In NLP, they power models like BERT (Bidirectional Encoder Representations from Transformers), GPT (Generative Pre-trained Transformer), and T5 (Text-to-Text Transfer Transformer), which excel in tasks such as text classification, machine translation, question answering, and text generation. Beyond NLP, transformers have also shown remarkable performance in computer vision with models like Vision Transformer (ViT), which treats images as sequences of patches, similar to words in a sentence. Additionally, transformers are being explored in areas such as speech recognition, protein folding, and reinforcement learning, demonstrating their versatility and robustness in handling diverse types of data. The ability to process long-range dependencies and capture intricate patterns has made transformers indispensable in advancing the state of the art in many machine learning tasks.

Challenges and Limitations

Despite their success, transformer neural networks come with several challenges and limitations. One of the primary concerns is their computational and memory requirements, which are significantly higher compared to traditional models. The quadratic complexity of the self-attention mechanism with respect to the input sequence length can lead to inefficiencies, especially when dealing with very long sequences. To mitigate this, various approaches like sparse attention and efficient transformers have been proposed. Another challenge is the interpretability of transformers, as the attention mechanisms, though providing some insights, do not fully explain the model's decisions. Furthermore, transformers require large amounts of data and computational resources for training, which can be a barrier for smaller organizations or those with limited resources. Addressing these challenges is crucial for making transformers more accessible and scalable for a broader range of applications.

Future Directions

The future of transformer neural networks is bright, with ongoing research focused on enhancing their efficiency, scalability, and applicability. One promising direction is the development of more efficient transformer architectures that reduce computational complexity and memory usage, such as the Reformer, Linformer, and Longformer. These models aim to make transformers feasible for longer sequences and real-time applications. Another important area is improving the interpretability of transformers, with efforts to develop methods that provide clearer explanations of their decision-making processes. Additionally, integrating transformers with other neural network architectures, such as combining them with convolutional networks for multimodal tasks, holds significant potential. The application of transformers beyond traditional domains, like in time-series forecasting, healthcare, and finance, is also expected to grow. As advancements continue, transformers are set to remain at the forefront of AI and machine learning, driving innovation and breakthroughs across various fields.

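2.3 Test run

Running on a CPU is slow, so both the LLM and the embedding calls need a very long timeout to avoid aborting on timeout. The default timeout constants are defined in the file linked below. Note that the sample output further down still reports "Func: 30s" for the embedding workers, so it is worth confirming in the LightRAG source which environment variable names your version actually reads.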

https://github.com/HKUDS/LightRAG/blob/main/lightrag/constants.py

The run commands are as follows.

cd examples

export DEFAULT_EMBEDDING_TIMEOUT=300000

export DEFAULT_LLM_TIMEOUT=180000

export DEFAULT_TIMEOUT=300000

python lightrag_ollama_demo.py

Sample output

LightRAG compatible demo log file: /path/to/LightRAG/examples/lightrag_ollama_demo.log

Deleting old file:: ./dickens/kv_store_doc_status.json

Deleting old file:: ./dickens/kv_store_full_docs.json

INFO: [_] Created new empty graph fiel: ./dickens/graph_chunk_entity_relation.graphml

INFO:nano-vectordb:Init {'embedding_dim': 1024, 'metric': 'cosine', 'storage_file': './dickens/vdb_entities.json'} 0 data

INFO:nano-vectordb:Init {'embedding_dim': 1024, 'metric': 'cosine', 'storage_file': './dickens/vdb_relationships.json'} 0 data

INFO:nano-vectordb:Init {'embedding_dim': 1024, 'metric': 'cosine', 'storage_file': './dickens/vdb_chunks.json'} 0 data

INFO: [_] Process 17595 KV load full_docs with 0 records

INFO: [_] Process 17595 KV load text_chunks with 0 records

INFO: [_] Process 17595 KV load full_entities with 0 records

INFO: [_] Process 17595 KV load full_relations with 0 records

INFO: [_] Process 17595 KV load llm_response_cache with 0 records

INFO: [_] Process 17595 doc status load doc_status with 0 records

INFO: Embedding func: 8 new workers initialized (Timeouts: Func: 30s, Worker: 60s, Health Check: 75s)

=======================

Test embedding function

========================

Test dict: ['This is a test string for embedding.']

Detected embedding dimension: 1024

input: xx

INFO: Processing 1 document(s)

INFO: Extracting stage 1/1: unknown_source

INFO: Processing d-id: doc-78319af24e8b60a5165ca45a7c32c1e6

...
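The "Detected embedding dimension: 1024" line in the log has to agree with the EMBEDDING_DIM value configured in initialize_rag(). The sketch below shows one possible way to check this independently of LightRAG, by reading the vector length from a response of Ollama's /api/embed endpoint. The helper names (embedding_dim, fetch_embedding, check_dim) are hypothetical, the host is assumed to be the default local address, and the payload used at the bottom is a hand-made stand-in rather than real model output.

```python
import json
import urllib.request


def embedding_dim(embed_payload):
    """Return the length of the first vector in an /api/embed response payload."""
    vectors = embed_payload.get("embeddings", [])
    if not vectors:
        raise ValueError("no embeddings in response")
    return len(vectors[0])


def fetch_embedding(text, model="bge-m3:latest",
                    host="http://localhost:11434", timeout=300):
    """Request an embedding for one text from a local Ollama server."""
    body = json.dumps({"model": model, "input": text}).encode("utf-8")
    req = urllib.request.Request(
        f"{host}/api/embed",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


def check_dim(embed_payload, expected_dim):
    """Raise if the returned vector length differs from the configured dimension."""
    actual = embedding_dim(embed_payload)
    if actual != expected_dim:
        raise ValueError(f"embedding dimension {actual} != configured {expected_dim}")
    return actual


# Offline illustration with a fake payload shaped like an /api/embed response.
fake = {"embeddings": [[0.0] * 1024]}
print(check_dim(fake, 1024))
```

Against a running server, check_dim(fetch_embedding("This is a test string for embedding."), 1024) performs the same comparison with a real vector, which is useful when swapping in a different embedding model.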

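Because the local CPU is very slow, the run still takes a long time, even if it is not as extreme as with GraphRAG.

If cost is not a concern, you can call an external LLM service through OneAPI instead; for usage, see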

https://blog.csdn.net/liliang199/article/details/151393128

References

---

LightRAG

https://github.com/HKUDS/LightRAG

LightRAG: Simple and Fast Retrieval-Augmented Generation

https://arxiv.org/abs/2410.05779

Installing LightRAG locally and starting it with a local Ollama

https://blog.csdn.net/weixin_43664254/article/details/148788828

Deploying LightRAG locally with Ollama (verified working)

https://blog.csdn.net/weixin_63866037/article/details/143818073

Graph-structure-enhanced GraphRAG: a walkthrough of the NodeRAG approach

https://zhuanlan.zhihu.com/p/1897406866306348098

OneAPI: accessing all large models through the OpenAI API
