A Highly Available, High-Performance k8s Cluster with Three Masters and Three Nodes

Simulating an enterprise development environment: deploy a k8s cluster with three masters and three nodes.

Contents

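1. Install k8s on six machines, three as masters and three as nodes, with load balancing

II. Deploy an NFS service that all web pods access, implemented with PV, PVC and volume mounts

III. Install an Ansible machine and write a host inventory to ease future automated operations

1. Set up passwordless SSH: generate a key pair on the Ansible host and copy the public key to the root account on every server

IV. Run nginx and MySQL pods, use HPA to scale horizontally when CPU usage is high, and load-test with ab

3. Start an nginx Deployment with HPA enabled and launch the nginx pods

V. Use probes to monitor the web pods and restart them as soon as something goes wrong, improving their reliability

VI. Use the Dashboard to oversee all cluster resources

VII. Use Ingress to load-balance the web services by domain name and URL

VIII. Build a CI/CD environment: install Jenkins on k8s master2 and a Harbor registry on a separate machine

3. On the master or the monitored machines (install the matching exporter, usually node_exporter)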

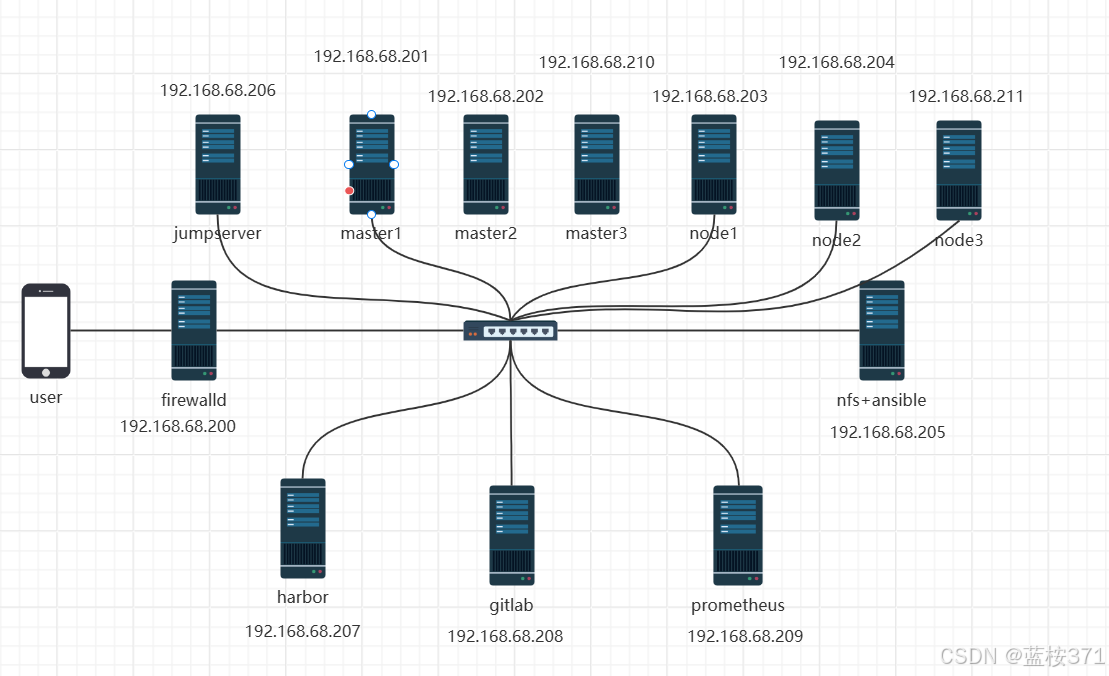

Project architecture diagram

Project goals

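Simulate an enterprise k8s development environment: deploy web, MySQL, NFS, Harbor, GitLab, Jenkins, JumpServer and other applications to build a highly available, high-performance k8s cluster, monitor the whole cluster with Prometheus, and also set up a CI/CD system.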

Project steps

I. Building the k8s cluster

1. Install k8s on six machines, three as masters and three as nodes, with load balancing

### Run the following on every machine (in Xshell, you can enable sending input to all sessions)

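Configure a static IP address, set the hostname, and disable SELinux and firewalld (run the matching hostnamectl line on each machine):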

hostnamectl set-hostname master1 && bash

hostnamectl set-hostname master2 && bash

hostnamectl set-hostname master3 && bash

hostnamectl set-hostname node1 && bash

hostnamectl set-hostname node2 && bash

hostnamectl set-hostname node3 && bash

# Stop firewalld and keep it from starting at boot

systemctl stop firewalld

systemctl disable firewalld

# Disable SELinux

setenforce 0 # takes effect now, until the next reboot

sed -i '/^SELINUX=/ s/enforcing/disabled/' /etc/selinux/config # persists across reboots

# Add hostname resolution

[root@master ~]# vim /etc/hosts

[root@master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.68.201 master1

192.168.68.202 master2

192.168.68.210 master3

192.168.68.203 node1

192.168.68.204 node2

192.168.68.211 node3

##### Note!!! Everything below must be done on all six machines:
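The hosts entries above are needed on every machine, so appending them by script avoids typos. A minimal sketch, assuming the six name/IP pairs listed above; `add_cluster_hosts` is a hypothetical helper that skips names already present, so running it twice does not duplicate lines:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the cluster's IP/name pairs to a hosts file,
# skipping names that are already present so the script can be re-run safely.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry ip name
  for entry in \
    "192.168.68.201 master1" "192.168.68.202 master2" "192.168.68.210 master3" \
    "192.168.68.203 node1" "192.168.68.204 node2" "192.168.68.211 node3"; do
    ip="${entry% *}"    # text before the space
    name="${entry#* }"  # text after the space
    # -w matches whole words only, so "node1" does not match "node11"
    if ! grep -qw -- "$name" "$hosts_file"; then
      echo "$ip $name" >> "$hosts_file"
    fi
  done
}
```

Usage: `add_cluster_hosts /etc/hosts` on each machine.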

Disable swap. By design, and for performance, k8s does not allow swap by default: the kubelet refuses to start while swap is enabled.

[root@master ~]# swapoff -a # temporary, until the next reboot

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
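The sed line above comments out every line containing " swap ", but running it a second time prepends another `#`. A sketch of an idempotent variant; `comment_out_swap` is a hypothetical helper that takes the fstab path as an argument, so it can be tried on a copy first:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out swap entries in an fstab-style file.
# Lines that are already comments are skipped, so running it twice is harmless.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # for every non-comment line, prefix "#" if "swap" appears as a whitespace-separated field
  sed -i -E '/^[[:space:]]*#/! s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}
```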

[root@master ~]# vim /etc/fstab

Alternatively, comment out the swap line by hand:

#/dev/mapper/centos-swap swap swap defaults 0 0

# Tune kernel parameters

# Adjust the Linux kernel parameters: enable bridge filtering and IP forwarding so IPv4 is forwarded and iptables sees bridged traffic

cat <<EOF | tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

# Load the overlay and bridge-filter modules

modprobe overlay

modprobe br_netfilter

# Write the following settings to /etc/sysctl.d/k8s.conf:

cat << EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply the sysctl settings

sysctl --system
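To confirm the three settings are active (the two bridge keys only exist once br_netfilter is loaded), their values can be read back from /proc/sys. A sketch with a hypothetical helper `check_k8s_sysctls`; the optional root-directory argument is an assumption added only so the check can be tried against a fake tree:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every k8s-related sysctl that is not set to 1.
# Returns 0 when all are 1. The optional argument is a root directory prefix,
# used only for testing against a fake /proc tree; leave it empty on a real host.
check_k8s_sysctls() {
  local root="${1:-}" key path val rc=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    path="$root/proc/sys/${key//.//}"   # dots become slashes
    val=$(cat "$path" 2>/dev/null || echo missing)
    if [ "$val" != "1" ]; then
      echo "$key=$val"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_k8s_sysctls && echo "sysctl settings OK"`.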

# Check that br_netfilter and overlay are loaded

lsmod | grep -e br_netfilter -e overlay

br_netfilter 22256 0

bridge 151336 1 br_netfilter

overlay 91659 0

# Update and configure the package repositories

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo # replace the base repo file with the Aliyun mirror

yum clean all && yum makecache

yum install -y yum-utils

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Configure IPVS

# Install ipset and ipvsadm

yum install ipset ipvsadm -y

# Write the modules to load into a script

cat <<EOF > /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

# Make the script executable

chmod +x /etc/sysconfig/modules/ipvs.modules

# Run the script

/bin/bash /etc/sysconfig/modules/ipvs.modules
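The nf_conntrack_ipv4 module exists on CentOS 7's 3.10 kernel, but upstream Linux 4.19 merged it into nf_conntrack, so the modprobe line above fails on newer kernels (and on distribution kernels that backported the change). A sketch that picks whichever name `modinfo` can find; `conntrack_module` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the conntrack module name this kernel provides.
# nf_conntrack_ipv4 exists on CentOS 7 (3.10); upstream 4.19+ only has nf_conntrack.
conntrack_module() {
  if modinfo nf_conntrack_ipv4 >/dev/null 2>&1; then
    echo nf_conntrack_ipv4
  else
    echo nf_conntrack
  fi
}
```

Usage inside the modules script: `modprobe -- "$(conntrack_module)"`.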

# Reboot

reboot

# Check that the modules are loaded

lsmod | grep -e ip_vs -e nf_conntrack_ipv4

nf_conntrack_ipv4 15053 24

nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 105

ip_vs 145497 111 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 139264 10 ip_vs,nf_nat,nf_nat_ipv4,nf_nat_ipv6,xt_conntrack,nf_nat_masquerade_ipv4,nf_nat_masquerade_ipv6,nf_conntrack_netlink,nf_conntrack_ipv4,nf_conntrack_ipv6

libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack

# Configure time synchronization

systemctl start chronyd && systemctl enable chronyd

timedatectl set-timezone Asia/Shanghai

2. Set up the Docker and k8s environment

# Install Docker

yum install -y docker-ce-20.10.24-3.el7 docker-ce-cli-20.10.24-3.el7

# Configure Docker: a registry mirror and the systemd cgroup driver

mkdir /etc/docker -p

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://hub.docker-alhk.dkdun.com/"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

systemctl daemon-reload && systemctl restart docker

# Start Docker and enable it at boot

systemctl enable --now docker

systemctl status docker

# Configure the Kubernetes yum repository

cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Refresh the package cache

yum makecache

# Install kubeadm, kubelet and kubectl 1.23.17

yum install -y kubeadm-1.23.17-0 kubelet-1.23.17-0 kubectl-1.23.17-0 --disableexcludes=kubernetes

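# Note: kubeadm's kubelet systemd drop-in only passes KUBELET_EXTRA_ARGS from this file, so the two variables below have no direct effect; kubeadm 1.22+ already defaults the kubelet to the systemd cgroup driver, and the kube-proxy mode is switched in its ConfigMap later

cat <<EOF > /etc/sysconfig/kubelet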

KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

EOF

# Start kubelet and enable it at boot

systemctl enable --now kubelet

# Initialize the control plane (run on master1 only)

kubeadm init \

--kubernetes-version=v1.23.17 \

--pod-network-cidr=10.224.0.0/16 \

--service-cidr=10.96.0.0/12 \

--apiserver-advertise-address=192.168.68.201 \

--image-repository=registry.aliyuncs.com/google_containers

(Nothing, not even a space, may follow the trailing backslashes.) On success, kubeadm prints:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.18.212.47:6443 --token nyjc81.tvhtt2h67snpkf48 \

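--discovery-token-ca-cert-hash sha256:cf8458e93e3510cf77dd96a73d39acd3f6284034177f8bad4d8452bb7f5f6e62 # save this command, then continue (this sample output shows a different address and token than the join command used below)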

# Run

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Join the worker nodes to the cluster (run on node1, node2 and node3)

kubeadm join 192.168.68.201:6443 --token 6ob60e.360e3fnjgbl8rawi \

--discovery-token-ca-cert-hash sha256:e3eeb69a2482e138ca6893948bdfecc0e118e7e3ae284755796fe2000ba6b1ae

# A node that joined successfully prints:

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

# Label the worker nodes

kubectl label node node1 node-role.kubernetes.io/worker=worker

kubectl label node node2 node-role.kubernetes.io/worker=worker

kubectl label node node3 node-role.kubernetes.io/worker=worker

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 28m v1.23.17

node1 NotReady worker 6m3s v1.23.17

node2 NotReady worker 5m57s v1.23.17

node3 NotReady worker 5m59s v1.23.17

# Join the other masters to the cluster

# A multi-master cluster needs a stable controlPlaneEndpoint: one address shared by all control-plane nodes, usually provided by a load balancer as a common IP or DNS name. Here master1's IP is used as the controlPlaneEndpoint for now. Use kubectl to edit the kubeadm-config ConfigMap:

kubectl -n kube-system edit cm kubeadm-config -o yaml # edit opens the ConfigMap in an interactive editor

# Add the field controlPlaneEndpoint: "192.168.68.201:6443" (master1's IP)

apiVersion: v1
data:
  ClusterConfiguration: |
    apiServer:
      extraArgs:
        authorization-mode: Node,RBAC
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta3
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controlPlaneEndpoint: "192.168.68.201:6443" # add this line
    controllerManager: {}
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: v1.23.17
    networking:
      dnsDomain: cluster.local
      podSubnet: 10.224.0.0/16
      serviceSubnet: 10.96.0.0/12
    scheduler: {}
  kubeadm-config.yaml: |+
    apiVersion: v1
    data:
      ClusterConfiguration: |
        apiServer:
          extraArgs:
            authorization-mode: Node,RBAC
          timeoutForControlPlane: 4m0s
        apiVersion: kubeadm.k8s.io/v1beta3
        certificatesDir: /etc/kubernetes/pki
        clusterName: kubernetes
        controllerManager: {}
        dns: {}
        etcd:
          local:
            dataDir: /var/lib/etcd
        imageRepository: registry.aliyuncs.com/google_containers
        kind: ClusterConfiguration
        kubernetesVersion: v1.23.17
        networking:
          dnsDomain: cluster.local
          podSubnet: 10.224.0.0/16
          serviceSubnet: 10.96.0.0/12
        scheduler: {}
    kind: ConfigMap
    metadata:
      creationTimestamp: "2024-08-18T08:21:33Z"
      name: kubeadm-config
      namespace: kube-system
      resourceVersion: "211"
      uid: 19cf8515-01df-4adb-aadf-bf94a9c3cfe3

kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"ClusterConfiguration":"apiServer:\n extraArgs:\n authorization-mode: Node,RBAC\n timeoutForControlPlane: 4m0s\napiVersion: kubeadm.k8s.io/v1beta3\ncertificatesDir: /etc/kubernetes/pki\nclusterName: kubernetes\ncontrollerManager: {}\ndns: {}\netcd:\n local:\n dataDir: /var/lib/etcd\nimageRepository: registry.aliyuncs.com/google_containers\nkind: ClusterConfiguration\nkubernetesVersion: v1.23.17\nnetworking:\n dnsDomain: cluster.local\n podSubnet: 10.224.0.0/16\n serviceSubnet: 10.96.0.0/12\nscheduler: {}\n","kubeadm-config.yaml":"apiVersion: v1\ndata:\n ClusterConfiguration: |\n apiServer:\n extraArgs:\n authorization-mode: Node,RBAC\n timeoutForControlPlane: 4m0s\n apiVersion: kubeadm.k8s.io/v1beta3\n certificatesDir: /etc/kubernetes/pki\n clusterName: kubernetes\n controllerManager: {}\n dns: {}\n etcd:\n local:\n dataDir: /var/lib/etcd\n imageRepository: registry.aliyuncs.com/google_containers\n kind: ClusterConfiguration\n kubernetesVersion: v1.23.17\n networking:\n dnsDomain: cluster.local\n podSubnet: 10.224.0.0/16\n serviceSubnet: 10.96.0.0/12\n scheduler: {}\nkind: ConfigMap\nmetadata:\n creationTimestamp: \"2024-08-18T08:21:33Z\"\n name: kubeadm-config\n namespace: kube-system\n resourceVersion: \"211\"\n uid: 19cf8515-01df-4adb-aadf-bf94a9c3cfe3\n\n"},"kind":"ConfigMap","metadata":{"annotations":{},"creationTimestamp":"2024-08-18T08:21:33Z","name":"kubeadm-config","namespace":"kube-system","resourceVersion":"3278","uid":"19cf8515-01df-4adb-aadf-bf94a9c3cfe3"}}
  creationTimestamp: "2024-08-20T12:42:36Z"
  name: kubeadm-config
  namespace: kube-system
  resourceVersion: "84228"
  uid: fb611090-ff5a-46b2-88f8-965354e3a78e
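Editing the ConfigMap works, but the same endpoint can also be set when the first master is initialized, so no edit is needed afterwards. A sketch, assuming the addresses and versions used above; the helper name `write_kubeadm_config` and the output file name are hypothetical, and in production controlPlaneEndpoint would normally be a load balancer's VIP or DNS name rather than master1's IP:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a kubeadm config that sets controlPlaneEndpoint
# at init time. Values mirror the kubeadm init flags used above.
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.68.201
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.23.17
controlPlaneEndpoint: "192.168.68.201:6443"
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.224.0.0/16
  serviceSubnet: 10.96.0.0/12
EOF
}
```

Usage: `write_kubeadm_config && kubeadm init --config kubeadm-config.yaml --upload-certs`. With `--upload-certs`, kubeadm init also prints a control-plane join command that includes the certificate key.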

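# All masters must share the same certificates!!! (Copying them by hand is optional when joining with --certificate-key, since kubeadm then downloads them from the kubeadm-certs Secret created by upload-certs.)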

# On master2, create the certificate directories:

cd /root && mkdir -p /etc/kubernetes/pki/etcd && mkdir -p ~/.kube/

# Run on master1

scp /etc/kubernetes/pki/ca.crt root@192.168.68.202:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/ca.key root@192.168.68.202:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.key root@192.168.68.202:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.pub root@192.168.68.202:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.crt root@192.168.68.202:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.key root@192.168.68.202:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/etcd/ca.crt root@192.168.68.202:/etc/kubernetes/pki/etcd/

scp /etc/kubernetes/pki/etcd/ca.key root@192.168.68.202:/etc/kubernetes/pki/etcd/
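The eight scp commands above follow one pattern, so they can be looped, which also makes it easy to repeat them for master3 (192.168.68.210) once its directories exist. A sketch assuming the same file list and root SSH access; `copy_pki` is a hypothetical helper, and setting DRY_RUN only prints the commands:

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the shared CA and key files from this master to
# another one. With DRY_RUN set, only print the scp commands.
copy_pki() {
  local target=$1 f
  for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key \
           etcd/ca.crt etcd/ca.key; do
    if [ -n "${DRY_RUN:-}" ]; then
      echo "scp /etc/kubernetes/pki/$f root@$target:/etc/kubernetes/pki/$f"
    else
      scp "/etc/kubernetes/pki/$f" "root@$target:/etc/kubernetes/pki/$f"
    fi
  done
}
```

Usage: `copy_pki 192.168.68.202`, then `copy_pki 192.168.68.210`.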

# Create a bootstrap token

kubeadm token create

# Get the ca-cert-hash

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
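The openssl pipeline above hashes the CA certificate's DER-encoded public key. Wrapped in a function, it can be pointed at any CA file; `ca_cert_hash` is a hypothetical name, and `-noout` is added so only the public key is passed on. For worker joins, `kubeadm token create --print-join-command` prints a complete command including this hash.

```shell
#!/usr/bin/env bash
# Hypothetical helper wrapping the pipeline above: print the SHA-256 hash of
# the CA's DER-encoded public key, as expected by --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Usage: `echo "sha256:$(ca_cert_hash)"`.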

# Upload the certificates and get the certificate-key

kubeadm init phase upload-certs --upload-certs

# The resulting join command has this form:

kubeadm join <master-ip>:6443 --token <token> \

--discovery-token-ca-cert-hash sha256:<ca-cert-hash> \

--control-plane --certificate-key <certificate-key>

kubeadm join 192.168.68.201:6443 --token wgin1j.virtqdr06tjxxonz \

--discovery-token-ca-cert-hash sha256:e3eeb69a2482e138ca6893948bdfecc0e118e7e3ae284755796fe2000ba6b1ae \

--control-plane --certificate-key f02cb1df6c73f41ed412e44af6030595a4a5600580fd8753d827c0fa456974cc

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

[root@master2 ~]# mkdir -p $HOME/.kube

[root@master2 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master2 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@master2 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master1 Ready control-plane,master 2d5h v1.23.17

master2 Ready control-plane,master 108s v1.23.17

node1 Ready worker 2d5h v1.23.17

node2 Ready worker 2d5h v1.23.17

node3 Ready worker 2d5h v1.23.17

# Join master3 to the cluster in the same way

# kubeadm reset undoes what kubeadm init or kubeadm join set up on a node

3. Install the Calico network plugin and general configuration

# Run on master1

kubectl apply -f https://docs.projectcalico.org/archive/v3.25/manifests/calico.yaml # this Calico version supports k8s 1.23

# Verify: the node status changes from NotReady to Ready

kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 Ready control-plane,master 2d5h v1.23.17

master2 Ready control-plane,master 108s v1.23.17

master3 Ready control-plane,master 209s v1.23.17

node1 Ready worker 2d5h v1.23.17

node2 Ready worker 2d5h v1.23.17

node3 Ready worker 2d5h v1.23.17

# Switch kube-proxy to IPVS and set up kubectl command completion

kubectl edit configmap kube-proxy -n kube-system

# Change the setting

mode: "ipvs" # set ipvs on this line (it is empty by default)

# Delete all kube-proxy pods so they restart with the new mode

kubectl delete pods -n kube-system -l k8s-app=kube-proxy
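To confirm the switch, the mode can be read back from the `config.conf` key of the kube-proxy ConfigMap, and `ipvsadm -Ln` on a node should then list virtual servers for the Services. A small sketch; `proxy_mode` is a hypothetical helper that only parses text from stdin, so it can be checked without a cluster:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read kube-proxy's config.conf text on stdin and print
# the value of the top-level "mode" key ("" means the default, iptables).
proxy_mode() {
  awk '/^mode:/ { gsub(/"/, "", $2); print $2 }'
}
```

Usage: `kubectl -n kube-system get cm kube-proxy -o jsonpath='{.data.config\.conf}' | proxy_mode` should print `ipvs`.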

# Install kubectl command completion

yum install -y bash-completion

# Enable completion in the current shell

source <(kubectl completion bash)

# Enable completion permanently

echo "source <(kubectl completion bash)" >> ~/.bashrc && bash

# Common commands

# List k8s containers (pods):

kubectl get pod -n kube-system

kubectl get pod # lists the running pods, like docker ps

-n kube-system # lists the pods in the kube-system namespace

kube-system # the namespace where the k8s control-plane pods run

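# A pod is the unit that runs containers

# Pods manage pods: the control plane itself runs as pods

# The pods living in the kube-system namespace are control-plane pods

kubectl get ns # short for kubectl get namespace; lists the namespaces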

NAME STATUS AGE

default Active 78m # where ordinary pods run

kube-node-lease Active 78m

kube-public Active 78m

kube-system Active 78m # the namespace for cluster management

II. Deploy an NFS service that all web pods access, implemented with PV, PVC and volume mounts

# Note: on the NFS server:

# Stop firewalld and keep it from starting at boot

service firewalld stop

systemctl disable firewalld

# Disable SELinux temporarily

setenforce 0

# Disable SELinux permanently

sed -i '/^SELINUX=/ s/enforcing/disabled/' /etc/selinux/config

1. Set up the NFS server

# Install nfs-utils on the NFS server and on every k8s node (the kubelet needs the NFS client to mount the volume)

[root@nfs ~]# yum install nfs-utils -y

[root@master1 ~]# yum install nfs-utils -y

[root@master2 ~]# yum install nfs-utils -y

[root@master3 ~]# yum install nfs-utils -y

[root@node1 ~]# yum install nfs-utils -y

[root@node2 ~]# yum install nfs-utils -y

[root@node3 ~]# yum install nfs-utils -y

2. Configure the shared directory

[root@ansible nfs ~]# vim /etc/exports

[root@ansible nfs ~]# cat /etc/exports

/web 192.168.68.0/24(rw,no_root_squash,sync)

[root@ansible nfs ~]# mkdir /web

[root@ansible nfs ~]# cd /web

[root@ansible nfs web]# echo "lanan371" >index.html

[root@ansible nfs web]# ls

index.html

[root@ansible nfs web]# exportfs -rv # re-export the shares

exporting 192.168.68.0/24:/web

# Restart the service and enable it at boot

[root@ansible nfs web]# systemctl restart nfs && systemctl enable nfs

Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.

3. Create a PV backed by the NFS share

[root@master1 ~]# mkdir /pv

[root@master1 ~]# cd /pv/

[root@master1 pv]# vim nfs-pv.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-web
  labels:
    type: pv-web
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs # storage class name; the PVC requests the same class
  nfs:
    path: "/web" # directory exported by the NFS server
    server: 192.168.68.205 # IP of the NFS server
    readOnly: false # mount read-write

[root@master1 pv]# kubectl apply -f nfs-pv.yml

persistentvolume/pv-web created

[root@master1 pv]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv-web 10Gi RWX Retain Available nfs 12s

# Create a PVC that uses the PV

[root@master1 pv]# vim nfs-pvc.yml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-web
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs # bind to a PV of the nfs class

[root@master1 pv]# kubectl apply -f nfs-pvc.yml

persistentvolumeclaim/pvc-web created

[root@master1 pv]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-web Bound pv-web 10Gi RWX nfs 13s

# Create pods that use the PVC

[root@master1 pv]# vim nginx-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      volumes:
        - name: sc-pv-storage-nfs
          persistentVolumeClaim:
            claimName: pvc-web
      containers:
        - name: sc-pv-container-nfs
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
              name: "http-server"
          volumeMounts:
            - mountPath: "/usr/share/nginx/html"
              name: sc-pv-storage-nfs

[root@master1 pv]# kubectl apply -f nginx-deployment.yaml

deployment.apps/nginx-deployment created

[root@master1 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-d4c8d4d89-l5b9m 1/1 Running 1 (20h ago) 21h 10.224.166.132 node1 <none> <none>

nginx-deployment-d4c8d4d89-l9wvj 1/1 Running 1 (20h ago) 21h 10.224.166.131 node2 <none> <none>

nginx-deployment-d4c8d4d89-r9t8j 1/1 Running 1 (20h ago) 21h 10.224.104.11 node3 <none> <none>

4. Verify

[root@master1 ~]# curl 10.224.104.11

nfs lanan371

# Edit index.html on the NFS server

[root@ansible nfs web]# vim index.html

[root@ansible nfs web]# cat index.html

nfs lanan371

welcome to hunannongda!!

[root@master1 ~]# curl 10.224.104.11

nfs lanan371

welcome to hunannongda!!

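# The response changed too, so the pods serve the NFS share

III. Install an Ansible machine and write a host inventory to ease future automated operations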

1. Set up passwordless SSH: generate a key pair on the Ansible host and copy the public key to the root account on every server

# Every server must run sshd, have port 22 open and allow root login

[root@ansible nfs ~]# yum install -y epel-release

[root@ansible nfs ~]# yum install ansible

[root@ansible nfs ~]# ssh-keygen

[root@ansible nfs ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub 192.168.68.200

[root@ansible nfs ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub 192.168.68.201

[root@ansible nfs ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub 192.168.68.202

[root@ansible nfs ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub 192.168.68.203

[root@ansible nfs ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub 192.168.68.204

(......)

2. Write the host inventory

# The file is /etc/ansible/hosts

[root@ansible ansible]# vim hosts

[firewalld]

192.168.68.200

[master]

192.168.68.201

192.168.68.202

192.168.68.210

[node]

192.168.68.203

192.168.68.204

192.168.68.211

[nfs]

192.168.68.205

[jumpserver]

192.168.68.206

[harbor]

192.168.68.207

[gitlab]

192.168.68.208

[prometheus]

192.168.68.209
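Step 1 copies the key host by host. Once the inventory exists, the same ssh-copy-id call can be looped over every address in it; afterwards `ansible all -m ping` is a quick connectivity check. A sketch with hypothetical helper names, where setting DRY_RUN only prints the commands:

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the unique host addresses in an INI-style inventory,
# ignoring comments, blank lines and [group] headers.
inventory_hosts() {
  local inv="${1:-/etc/ansible/hosts}"
  grep -vE '^[[:space:]]*(#|\[|$)' "$inv" | awk '{print $1}' | sort -u
}

# Hypothetical helper: push the root public key to every inventory host.
copy_keys() {
  local inv="${1:-/etc/ansible/hosts}" host
  for host in $(inventory_hosts "$inv"); do
    if [ -n "${DRY_RUN:-}" ]; then
      echo "ssh-copy-id -i /root/.ssh/id_rsa.pub root@$host"
    else
      ssh-copy-id -i /root/.ssh/id_rsa.pub "root@$host"
    fi
  done
}
```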

3. Test

[root@ansible nfs ansible]# ansible all -m shell -a "ip add"

IV. Run nginx and MySQL pods, use HPA to scale horizontally when CPU usage is high, and load-test with ab

1. Deploy a MySQL pod on k8s

(1) Write the YAML file, containing a Deployment and a Service

[root@master1 ~]# mkdir /mysql

[root@master1 ~]# cd /mysql/

[root@master1 mysql]# vim mysql.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: mysql
  name: mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - image: mysql:latest
          name: mysql
          imagePullPolicy: IfNotPresent
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "123456" # MySQL root password
          ports:
            - containerPort: 3306
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: svc-mysql
  name: svc-mysql
spec:
  selector:
    app: mysql
  type: NodePort
  ports:
    - port: 3306
      protocol: TCP
      targetPort: 3306
      nodePort: 30007

(2) Deploy

[root@master1 ~]# mkdir /mysql

[root@master1 ~]# cd /mysql/

[root@master1 mysql]# vim mysql.yaml

[root@master1 mysql]# kubectl apply -f mysql.yaml

deployment.apps/mysql created

service/svc-mysql created

[root@master1 mysql]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 24h

svc-mysql NodePort 10.99.72.37 <none> 3306:30007/TCP 30s

[root@master1 mysql]# kubectl get pod

NAME READY STATUS RESTARTS AGE

mysql-597ff9595d-tf59x 0/1 ContainerCreating 0 78s

nginx-deployment-d4c8d4d89-l5b9m 1/1 Running 1 (20h ago) 21h

nginx-deployment-d4c8d4d89-l9wvj 1/1 Running 1 (20h ago) 21h

nginx-deployment-d4c8d4d89-r9t8j 1/1 Running 1 (20h ago) 21h

[root@master1 mysql]# kubectl describe pod mysql-597ff9595d-tf59x # describe shows the pod's details

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m2s default-scheduler Successfully assigned default/mysql-597ff9595d-tf59x to node1

Normal Pulling 2m1s kubelet Pulling image "mysql:latest"

Normal Pulled 44s kubelet Successfully pulled image "mysql:latest" in 1m17.709941074s (1m17.709944318s including waiting)

Normal Created 43s kubelet Created container mysql

Normal Started 43s kubelet Started container mysql

[root@master1 mysql]# kubectl get pod

NAME READY STATUS RESTARTS AGE

mysql-597ff9595d-tf59x 1/1 Running 0 2m49s

nginx-deployment-d4c8d4d89-l5b9m 1/1 Running 1 (20h ago) 21h

nginx-deployment-d4c8d4d89-l9wvj 1/1 Running 1 (20h ago) 21h

nginx-deployment-d4c8d4d89-r9t8j 1/1 Running 1 (20h ago) 21h

[root@master1 mysql]# kubectl exec -it mysql-597ff9595d-tf59x -- bash

bash-5.1# mysql -uroot -p123456 # log into MySQL inside the container

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 8

Server version: 9.0.1 MySQL Community Server - GPL

Copyright (c) 2000, 2024, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

2. Install metrics-server

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.2/components.yaml

# Edit it

In vim, go to around line 140.

# Before
      containers:
      - args:
        ...
        image: k8s.gcr.io/metrics-server/metrics-server:v0.6.2

# After
      containers:
      - args:
        ...
        - --kubelet-insecure-tls # add this line: do not verify the kubelets' serving certificates
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.2 # switch to a reachable image registry

# Apply

kubectl apply -f components.yaml

[root@master1 metrics]# kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

[root@master1 metrics]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-64cc74d646-86bfg 1/1 Running 2 (21h ago) 24h

calico-node-2rqq6 1/1 Running 2 (21h ago) 24h

calico-node-dl4d8 1/1 Running 2 (21h ago) 24h

calico-node-tptqc 1/1 Running 2 (21h ago) 24h

coredns-6d8c4cb4d-p29dw 1/1 Running 2 (21h ago) 25h

coredns-6d8c4cb4d-sxcd6 1/1 Running 2 (21h ago) 25h

etcd-master1 1/1 Running 2 (21h ago) 25h

kube-apiserver-master1 1/1 Running 2 (21h ago) 25h

kube-controller-manager-master1 1/1 Running 2 (21h ago) 25h

kube-proxy-g8ts5 1/1 Running 2 (21h ago) 24h

kube-proxy-lr4rh 1/1 Running 2 (21h ago) 24h

kube-proxy-spswm 1/1 Running 2 (21h ago) 24h

kube-scheduler-master1 1/1 Running 2 (21h ago) 25h

metrics-server-784768bd4b-v2b5q 0/1 Running 0 9s

[root@master1 metrics]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master1 231m 11% 1124Mi 30%

master2 229m 12% 1123Mi 34%

master3 237m 10% 1118Mi 27%

node1 148m 7% 1309Mi 48%

node2 135m 6% 812Mi 29%

node3 137m 6% 812Mi 36%
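Later, during the ab load test, a new pod cannot start because a node runs out of memory. Nodes close to that limit can be spotted early by filtering the MEMORY% column of `kubectl top node`; `nodes_over_mem` is a hypothetical helper that reads that output from stdin, and the threshold is an arbitrary example:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "NAME MEMORY%" for every node whose memory
# usage exceeds the given percentage. Expects `kubectl top node` output on stdin.
nodes_over_mem() {
  local limit=$1
  # skip the header line; column 5 is MEMORY%, e.g. "48%"
  awk -v limit="$limit" 'NR > 1 { m = $5; sub(/%/, "", m); if (m + 0 > limit) print $1, $5 }'
}
```

Usage: `kubectl top node | nodes_over_mem 40`.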

[root@master1 metrics]# kubectl top pod

NAME CPU(cores) MEMORY(bytes)

mysql-597ff9595d-tf59x 13m 465Mi

nginx-deployment-d4c8d4d89-l5b9m 0m 2Mi

nginx-deployment-d4c8d4d89-l9wvj 0m 5Mi

nginx-deployment-d4c8d4d89-r9t8j 0m 3Mi

3. Start an nginx Deployment with HPA enabled and launch the nginx pods

[root@master1 metrics]# cd /

[root@master1 /]# mkdir hpa

[root@master1 /]# cd hpa/

[root@master1 hpa]# vim nginx-hpa.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ab-nginx
spec:
  selector:
    matchLabels:
      run: ab-nginx
  template:
    metadata:
      labels:
        run: ab-nginx
    spec:
      containers:
        - name: ab-nginx
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
          resources:
            limits:
              cpu: 100m
            requests:
              cpu: 50m
---
apiVersion: v1
kind: Service
metadata:
  name: ab-nginx-svc
  labels:
    run: ab-nginx-svc
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      nodePort: 31000
  selector:
    run: ab-nginx
---
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: ab-nginx
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ab-nginx
  minReplicas: 1
  maxReplicas: 10
  targetCPUUtilizationPercentage: 70
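targetCPUUtilizationPercentage is measured against the pod's CPU request, so 70% of the 50m request is about 35m per pod on average. The Kubernetes documentation gives the HPA's core rule as desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization), with no change while the ratio stays within a 10% tolerance. A simplified sketch of that arithmetic only (it ignores the scale-down stabilization window and unready pods); `hpa_desired_replicas` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: simplified HPA replica calculation.
# Args: current replicas, current utilization %, target %, min, max.
hpa_desired_replicas() {
  local current=$1 util=$2 target=$3 min=$4 max=$5 desired
  # within the default 10% tolerance (ratio 0.9..1.1) the count stays the same
  if [ $((util * 10)) -ge $((target * 9)) ] && [ $((util * 10)) -le $((target * 11)) ]; then
    desired=$current
  else
    # integer ceiling of current * util / target
    desired=$(( (current * util + target - 1) / target ))
  fi
  # clamp to [minReplicas, maxReplicas]
  [ "$desired" -lt "$min" ] && desired=$min
  [ "$desired" -gt "$max" ] && desired=$max
  echo "$desired"
}
```

For example, one pod running at 350% of its request gives `hpa_desired_replicas 1 350 70 1 10`, which prints 5.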

# Create the HPA-enabled nginx Deployment, its Service and the HPA

[root@master1 hpa]# kubectl apply -f nginx-hpa.yaml

deployment.apps/ab-nginx created

service/ab-nginx-svc created

horizontalpodautoscaler.autoscaling/ab-nginx created

[root@master1 hpa]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

ab-nginx 1/1 1 1 34s

[root@master1 hpa]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

ab-nginx Deployment/ab-nginx 0%/70% 1 10 1 103s

[root@master1 hpa]# kubectl get pod

NAME READY STATUS RESTARTS AGE

ab-nginx-794dd6d597-7cwzl 1/1 Running 0 2m14s

[root@master1 hpa]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ab-nginx-svc NodePort 10.96.87.118 <none> 80:31000/TCP 2m38s

# Open port 31000 on a host

http://192.168.68.201:31000/

# Check that the nginx pod is up

[root@master1 hpa]# curl http://192.168.68.201:31000/

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

# Success

4. Load-test from the NFS machine with ab

# Install httpd-tools, which provides ab

[root@ansible nfs ~]# yum install httpd-tools -y

# Simulate traffic

[root@ansible nfs ~]# ab -n 10000 -c50 http://192.168.68.201:31000/index.html

[root@master1 hpa]# kubectl get hpa --watch # meanwhile, watch the HPA on master1

Increase the concurrency and the total number of requests:

[root@ansible nfs ~]# ab -n 5000 -c100 http://192.168.68.201:31000/index.html

[root@ansible nfs ~]# ab -n 10000 -c200 http://192.168.68.201:31000/index.html

[root@ansible nfs ~]# ab -n 20000 -c400 http://192.168.68.201:31000/index.html
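The three escalating ab runs above can be scripted as stages, which makes the test repeatable. A sketch assuming the same URL and request/concurrency pairs; `run_ab_stages` is a hypothetical helper, setting DRY_RUN only prints the commands, and the 60-second pause is an assumption chosen because the HPA controller re-evaluates every 15 s by default and metrics-server needs time to report new usage:

```shell
#!/usr/bin/env bash
# Hypothetical helper: run ab in escalating stages of "requests:concurrency".
run_ab_stages() {
  local url="${1:-http://192.168.68.201:31000/index.html}" stage n c
  for stage in 5000:100 10000:200 20000:400; do
    n=${stage%%:*}   # total requests
    c=${stage##*:}   # concurrency
    if [ -n "${DRY_RUN:-}" ]; then
      echo "ab -n $n -c $c $url"
    else
      ab -n "$n" -c "$c" "$url"
      sleep 60       # assumed pause so the HPA can react between stages
    fi
  done
}
```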

[root@master1 hpa]# kubectl get pod

NAME READY STATUS RESTARTS AGE

ab-nginx-794dd6d597-44q7w 1/1 Running 0 22s

ab-nginx-794dd6d597-7cwzl 1/1 Running 0 13m

ab-nginx-794dd6d597-7njld 1/1 Running 0 7s

ab-nginx-794dd6d597-9pzj7 1/1 Running 0 4m23s

ab-nginx-794dd6d597-qqpq6 1/1 Running 0 22s

ab-nginx-794dd6d597-slh5p 1/1 Running 0 2m8s

ab-nginx-794dd6d597-vswfp 1/1 Running 0 4m38s

[root@master1 hpa]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

ab-nginx 7/7 7 7 13m

[root@master1 hpa]# kubectl describe pod ab-nginx-794dd6d597-44q7w

Warning OutOfmemory 98s kubelet Node didn't have enough resource: memory, requested: 268435456, used: 3584032768, capacity: 3848888320

[root@master hpa]#

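# Cause: node2 did not have enough memory left to start a new pod

V. Use probes to monitor the web pods and restart them as soon as something goes wrong, improving their reliability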

[root@master1 probe]# vim probe.yaml

[root@master1 probe]# cat probe.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: myweb
  name: myweb
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
        - name: myweb
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8000
          resources:
            limits:
              cpu: 300m
            requests:
              cpu: 100m
          livenessProbe:
            exec:
              command:
                - ls
                - /tmp
            initialDelaySeconds: 5
            periodSeconds: 5
          readinessProbe:
            exec:
              command:
                - ls
                - /tmp
            initialDelaySeconds: 5
            periodSeconds: 5
          startupProbe:
            httpGet:
              path: /
              port: 8000
            failureThreshold: 30
            periodSeconds: 10
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: myweb-svc
  name: myweb-svc
spec:
  selector:
    app: myweb
  type: NodePort
  ports:
    - port: 8000
      protocol: TCP
      targetPort: 8000
      nodePort: 30001

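# The probe section
livenessProbe:     # on failure, the kubelet restarts the container
  exec:
    command:
      - ls
      - /tmp
  initialDelaySeconds: 5
  periodSeconds: 5
readinessProbe:    # on failure, the pod is removed from the Service endpoints
  exec:
    command:
      - ls
      - /tmp
  initialDelaySeconds: 5
  periodSeconds: 5
startupProbe:      # the other two probes only start after this one succeeds
  httpGet:
    path: /
    port: 8000
  failureThreshold: 30
  periodSeconds: 10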
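The kubelet acts on consecutive failures: a probe only counts as failed after failureThreshold failures in a row, so this startupProbe gives the container up to 30 × 10 s = 300 s to start. A sketch of that counting logic in bash, not the kubelet's actual code; `probe_until` is a hypothetical helper that runs any check command:

```shell
#!/usr/bin/env bash
# Hypothetical helper: retry a check command every <period> seconds and
# give up after <threshold> consecutive failures, like a startup probe.
# Args: threshold, period, then the command to run.
probe_until() {
  local threshold=$1 period=$2 failures=0
  shift 2
  while :; do
    if "$@" >/dev/null 2>&1; then
      return 0        # one success is enough (successThreshold is 1 for startup probes)
    fi
    failures=$((failures + 1))
    if [ "$failures" -ge "$threshold" ]; then
      return 1        # at this point the kubelet would restart the container
    fi
    sleep "$period"
  done
}
```

Usage: `probe_until 30 10 curl -fsS http://localhost:80/` mirrors the startupProbe settings above against nginx's default port.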

[root@master1 probe]# kubectl apply -f probe.yaml

deployment.apps/myweb created

service/myweb-svc created

[root@master1 probe]# kubectl get pod|grep myweb

myweb-7df8f89d75-jn68t 0/1 Running 0 41s

myweb-7df8f89d75-nw2db 0/1 Running 0 41s

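myweb-7df8f89d75-s82kw 0/1 Running 0 41s

# The pods stay 0/1 (not Ready): the official nginx image listens on port 80, not 8000, so the startupProbe on port 8000 keeps failing, and the liveness and readiness probes do not run until it succeeds. After 30 failures at 10 s intervals the kubelet restarts the container. Setting containerPort, the startupProbe port and the Service targetPort to 80 lets the pods become Ready.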

[root@master1 probe]# kubectl describe pod myweb-7df8f89d75-jn68t

......

Liveness: exec [ls /tmp] delay=5s timeout=1s period=5s #success=1 #failure=3

Readiness: exec [ls /tmp] delay=5s timeout=1s period=5s #success=1 #failure=3

Startup: http-get http://:8000/ delay=0s timeout=1s period=10s #success=1 #failure=30

......

VI. Use the Dashboard to oversee all cluster resources

1. Install the Dashboard

[root@master1 /]# mkdir dashboard

[root@master1 /]# cd dashboard/

[root@master1 dashboard]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

--2024-08-19 18:51:18-- https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.109.133, 185.199.110.133, 185.199.111.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.109.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 7621 (7.4K) [text/plain]

Saving to: 'recommended.yaml'

100%[=================================================================================================================================>] 7,621 --.-K/s in 0.02s

2024-08-19 18:51:21 (442 KB/s) - 'recommended.yaml' saved [7621/7621]

# In recommended.yaml, change the kubernetes-dashboard Service to NodePort
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort # added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30088 # added
  selector:
    k8s-app: kubernetes-dashboard

[root@master1 dashboard]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@master1 dashboard]# kubectl get pods,svc -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

pod/dashboard-metrics-scraper-6f669b9c9b-2rjmf 1/1 Running 0 20s

pod/kubernetes-dashboard-758765f476-nm4bx 1/1 Running 0 20s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dashboard-metrics-scraper ClusterIP 10.104.194.175 <none> 8000/TCP 20s

service/kubernetes-dashboard NodePort 10.108.210.103 <none> 443:30088/TCP 20s

2. Create an admin account for logging in

[root@master1 dashboard]# vim dashboard-access-token.yaml

[root@master1 dashboard]# cat dashboard-access-token.yaml

# Creating a Service Account
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
# Creating a ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
---
# Getting a long-lived Bearer Token for ServiceAccount
apiVersion: v1
kind: Secret
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: "admin-user"
type: kubernetes.io/service-account-token

[root@master1 dashboard]# kubectl apply -f dashboard-access-token.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

secret/admin-user created

# Get the token (data.token in the Secret is base64-encoded, so decode it)

kubectl get secret admin-user -n kubernetes-dashboard -o jsonpath='{.data.token}' | base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6Inlmb3BjVUhjaTJtLUR1b09QdDZxMjVhRVY2R0wzUVZpckROTkVOcFdhVncifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2MWYzNDZhNC0xZjMzLTRmMGMtYmEyNy1jYWZiMTEzNDNhZWQiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.wqe17Kldf1ohhSL5fgvwu0AIuy8qtlNsx_IHPKmN54NEbhmqHZjpiftFMeb3KS1KQXeO6Nwie0IUXSdz6LZwGb1q-pu4r5BCicQmkQjPAcSdZmh6HDFoTS-PqQWssUuuAJFGLGgQDFbpA9sPnqcKIslU5daIgRuMX2fEmOZCf5l33C5P7wJxxiVcscbRY6QfMAErDSdTuWU4r_nE_gen0ER-haGQqxO7tBVJht2A1Bn7hpeaq3uRAXIFSM7gyeMizUTCSKUjv6WUaU4noAvjRXMF2cUVfH7kCxMkc1m57RvBalNmsdUnLKxVelkcLOjUUtet4XAWh6ibQ1HbBn60DA
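The token is a JWT. Before pasting it into the login page, you can check which identity it carries by decoding its middle (payload) segment locally. This is a minimal sketch that assumes a standard three-part `header.payload.signature` token. `jwt_payload` is a hypothetical helper name, and the signature is not verified.

```bash
# Print the decoded payload of a JWT such as the admin-user token above.
# Assumption: standard header.payload.signature layout; the signature is NOT checked.
jwt_payload() {
  local seg
  # Take the middle segment and map the base64url alphabet back to plain base64
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the '=' padding; restore it so base64 -d accepts the input
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}
```

For example, `jwt_payload "$TOKEN"` (with `TOKEN` set to the output of the `kubectl get secret` command above) should print JSON whose `sub` field reads `system:serviceaccount:kubernetes-dashboard:admin-user`.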

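# Access the Dashboard over HTTPS at https://192.168.68.201:30088/ (any node IP works for a NodePort Service)

# A login page appears

# Choose "Token"

# Paste the token obtained above

# You can now see the cluster's resources

VII. Use Ingress to implement domain-based and URL-based load balancing for the web services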

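1. Install the ingress controller

# This uses the older ingress controller v1.1.0 (Helm chart ingress-nginx-4.0.10)

# Preparation: upload the image tarballs and the YAML file listed below to a Linux host in the cluster in advance, preferably a master node, then scp the images to the worker nodes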

[root@master1 /]# mkdir ingress

[root@master1 /]# cd ingress/

[root@master1 ingress]# ls

ingress-controller-deploy.yaml            # YAML file used to deploy the ingress controller
ingress-nginx-controllerv1.1.0.tar.gz     # ingress-nginx-controller image
kube-webhook-certgen-v1.1.0.tar.gz        # kube-webhook-certgen image

# The kube-webhook-certgen image generates the certificates used by the admission webhook in the cluster. These certificates secure communication with the webhook service and authenticate it.

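1. scp the images to every worker node (the transcript shows node1, 192.168.68.203; repeat for 192.168.68.204 and 192.168.68.211)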

[root@master1 ingress]# scp ingress-nginx-controllerv1.1.0.tar.gz kube-webhook-certgen-v1.1.0.tar.gz root@192.168.68.203:/root

root@192.168.68.203's password:

ingress-nginx-controllerv1.1.0.tar.gz 100% 276MB 91.9MB/s 00:03

kube-webhook-certgen-v1.1.0.tar.gz 100% 47MB 88.8MB/s 00:00

2. Load the images on every worker node (node1, node2, node3)

[root@node1 ~]# docker load -i ingress-nginx-controllerv1.1.0.tar.gz

e2eb06d8af82: Loading layer [==================================================>] 5.865MB/5.865MB

ab1476f3fdd9: Loading layer [==================================================>] 120.9MB/120.9MB

ad20729656ef: Loading layer [==================================================>] 4.096kB/4.096kB

0d5022138006: Loading layer [==================================================>] 38.09MB/38.09MB

8f757e3fe5e4: Loading layer [==================================================>] 21.42MB/21.42MB

a933df9f49bb: Loading layer [==================================================>] 3.411MB/3.411MB

7ce1915c5c10: Loading layer [==================================================>] 309.8kB/309.8kB

986ee27cd832: Loading layer [==================================================>] 6.141MB/6.141MB

b94180ef4d62: Loading layer [==================================================>] 38.37MB/38.37MB

d36a04670af2: Loading layer [==================================================>] 2.754MB/2.754MB

2fc9eef73951: Loading layer [==================================================>] 4.096kB/4.096kB

1442cff66b8e: Loading layer [==================================================>] 51.67MB/51.67MB

1da3c77c05ac: Loading layer [==================================================>] 3.584kB/3.584kB

Loaded image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.1.0

[root@node1 ~]# docker load -i kube-webhook-certgen-v1.1.0.tar.gz

c0d270ab7e0d: Loading layer [==================================================>] 3.697MB/3.697MB

ce7a3c1169b6: Loading layer [==================================================>] 45.38MB/45.38MB

Loaded image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1
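The transcript shows the copy and import for node1 only. node2 (192.168.68.204) and node3 (192.168.68.211) need the same two steps, and a small loop can handle all three nodes in one go. This sketch makes several assumptions:

- It runs from master1, in the directory that holds the tarballs.
- It runs as root with SSH access to each node (key-based login avoids the password prompt).
- The nodes use Docker as their image store, as in the transcript.

`distribute_images` is a hypothetical helper name.

```bash
# Worker node IPs and the image tarballs used in this section
NODES="192.168.68.203 192.168.68.204 192.168.68.211"
TARBALLS="ingress-nginx-controllerv1.1.0.tar.gz kube-webhook-certgen-v1.1.0.tar.gz"

# Copy every tarball to /root on each node, then load it into Docker there.
# Assumption: root SSH access to each node and Docker as the image store.
distribute_images() {
  local node tar
  for node in $NODES; do
    # $TARBALLS is deliberately unquoted so it splits into separate file names
    scp $TARBALLS "root@${node}:/root/" || return 1
    for tar in $TARBALLS; do
      ssh "root@${node}" docker load -i "/root/${tar}" || return 1
    done
  done
}
```

If a node's kubelet uses containerd directly instead of Docker, `ctr -n k8s.io images import <file>` would take the place of `docker load`.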

3. Start the ingress controller with ingress-controller-deploy.yaml

[root@master1 ingress]# cat ingress-controller-deploy.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
---
# Source: ingress-nginx/templates/controller-serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
automountServiceAccountToken: true
---
# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
  allow-snippet-annotations: 'true'
---

# Source: ingress-nginx/templates/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
      - namespaces
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---
# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---

# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
---
# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---

# Source: ingress-nginx/templates/controller-service-webhook.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  type: ClusterIP
  ports:
    - name: https-webhook
      port: 443
      targetPort: webhook
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  ipFamilyPolicy: SingleStack
  ipFamilies:
    - IPv4
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
      appProtocol: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---

# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      hostNetwork: true
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchLabels:
                    app.kubernetes.io/name: ingress-nginx
                topologyKey: kubernetes.io/hostname
      dnsPolicy: ClusterFirstWithHostNet
      containers:
        - name: controller
          image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.1.0
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --controller-class=k8s.io/ingress-nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission
---

# Source: ingress-nginx/templates/controller-ingressclass.yaml
# We don't support namespaced ingressClass yet
# So a ClusterRole and a ClusterRoleBinding is required
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: nginx
  namespace: ingress-nginx
spec:
  controller: k8s.io/ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
# before changing this value, check the required kubernetes version
# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    matchPolicy: Equivalent
    rules:
      - apiGroups:
          - networking.k8s.io
        apiVersions:
          - v1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions:
      - v1
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /networking/v1/ingresses
---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - ''
    resources:
      - secrets
    verbs:
      - get
      - create
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-create
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-create
      labels:
        helm.sh/chart: ingress-nginx-4.0.10
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 1.1.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: create
          image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1
          imagePullPolicy: IfNotPresent
          args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
            - --namespace=$(POD_NAMESPACE)
            - --secret-name=ingress-nginx-admission
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          securityContext:
            allowPrivilegeEscalation: false
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-patch
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.10
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-patch
      labels:
        helm.sh/chart: ingress-nginx-4.0.10
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 1.1.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: patch
          image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1
          imagePullPolicy: IfNotPresent
          args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=$(POD_NAMESPACE)
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          securityContext:
            allowPrivilegeEscalation: false
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000

[root@master1 ingress]# kubectl apply -f ingress-controller-deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

configmap/ingress-nginx-controller created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

service/ingress-nginx-controller-admission created

service/ingress-nginx-controller created

deployment.apps/ingress-nginx-controller created

ingressclass.networking.k8s.io/nginx created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

serviceaccount/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

# Check the namespace created for the ingress controller

[root@master1 ingress]# kubectl get ns

NAME STATUS AGE

default Active 28h

ingress-nginx Active 41s

kube-node-lease Active 28h

kube-public Active 28h

kube-system Active 28h

kubernetes-dashboard Active 102m

# Check the ingress controller's Services

[root@master1 ingress]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.107.152.90 <none> 80:32725/TCP,443:32262/TCP 110s

ingress-nginx-controller-admission ClusterIP 10.98.220.252 <none> 443/TCP 110s

# Check the ingress controller's pods (the two admission Jobs should show Completed, the two controller pods Running)

[root@master1 ingress]# kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-fdqcr 0/1 Completed 0 2m31s

ingress-nginx-admission-patch-cprpv 0/1 Completed 2 2m31s

ingress-nginx-controller-7cd558c647-csmdr 1/1 Running 0 2m31s

ingress-nginx-controller-7cd558c647-wvgk9 1/1 Running 0 2m31s
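The controller Deployment uses `hostNetwork: true`, so ports 80/443 are served directly on whichever nodes host the two controller pods. Those node IPs are most likely also what the Ingresses later report in their ADDRESS column. The podAntiAffinity on `kubernetes.io/hostname` is only *preferred*, so it is worth checking that the replicas really landed on different nodes.

This is a minimal sketch. `controller_nodes` and `check_spread` are hypothetical helper names, and the label selector is the one used in the Deployment above.

```bash
# List the node of every ingress-nginx controller pod (label taken from the Deployment)
controller_nodes() {
  kubectl get pods -n ingress-nginx \
    -l app.kubernetes.io/component=controller \
    -o jsonpath='{range .items[*]}{.spec.nodeName}{"\n"}{end}'
}

# Report whether the replicas ended up on distinct nodes. The anti-affinity is only
# "preferred", so the scheduler may still co-locate them when nodes are scarce.
check_spread() {
  local nodes total distinct
  nodes=$(controller_nodes)
  total=$(printf '%s\n' "$nodes" | grep -c .)
  distinct=$(printf '%s\n' "$nodes" | sort -u | grep -c .)
  if [ "$total" -ne "$distinct" ]; then
    echo "WARN: $total controller pods share $distinct node(s)"
    return 1
  fi
  echo "OK: $total controller pods on $distinct distinct node(s)"
}
```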

2. Create pods and expose them (domain-based load balancing)

# Deployment and Service for the www.zhang.com domain

[root@master1 ingress]# cat nginx-svc-1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy-1
  labels:
    app: nginx-www-1
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-www-1
  template:
    metadata:
      labels:
        app: nginx-www-1
    spec:
      containers:
        - name: nginx-www-1
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc-1
  labels:
    app: nginx-svc-1
spec:
  selector:
    app: nginx-www-1
  ports:
    - name: name-of-service-port
      protocol: TCP
      port: 80
      targetPort: 80

# Deployment and Service for the www.jun.com domain

[root@master1 ingress]# cat nginx-svc-2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy-2
  labels:
    app: nginx-www-2
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-www-2
  template:
    metadata:
      labels:
        app: nginx-www-2
    spec:
      containers:
        - name: nginx-www-2
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc-2
  labels:
    app: nginx-svc-2
spec:
  selector:
    app: nginx-www-2
  ports:
    - name: name-of-service-port
      protocol: TCP
      port: 80
      targetPort: 80

# Domain-based Ingress configuration

[root@master1 ingress]# cat ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: www-ingress
spec:
  # Selects the "nginx" IngressClass defined in ingress-controller-deploy.yaml;
  # the deprecated kubernetes.io/ingress.class annotation is not needed alongside it
  ingressClassName: nginx
  rules:
    - host: www.zhang.com
      http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: nginx-svc-1
                port:
                  number: 80
    - host: www.jun.com
      http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: nginx-svc-2
                port:
                  number: 80

# Apply all three YAML files (kubectl apply -f nginx-svc-1.yaml -f nginx-svc-2.yaml -f ingress.yaml), then check the Ingress

[root@master1 ingress]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

www-ingress nginx www.zhang.com,www.jun.com 192.168.68.203,192.168.68.204 80 3m5s

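# Verify from another machine (the ansible+nfs host is used here). The controller pods run with hostNetwork: true, so they listen on port 80 of the nodes in the ADDRESS column above (192.168.68.203 and 192.168.68.204). Map the test domains to those IPs in /etc/hosts.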

[root@ansiblenfs ~]# vim /etc/hosts
[root@ansiblenfs ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.68.203 www.zhang.com
192.168.68.204 www.jun.com

# Then curl both domains

[root@ansiblenfs ~]# curl www.zhang.com

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@ansiblenfs ~]# curl www.jun.com

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

# Both domains respond, so domain-based Ingress load balancing works
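Editing /etc/hosts pins each domain to a single node. To exercise both controller replicas for both domains, you can set the `Host` header explicitly and send the request to each node IP instead. This is a sketch: `check_hosts` is a hypothetical helper, and the IP and host lists are taken from the `kubectl get ingress` output and ingress.yaml above.

```bash
# Controller node IPs (ADDRESS column) and the virtual hosts defined in ingress.yaml
INGRESS_IPS="192.168.68.203 192.168.68.204"
HOSTS="www.zhang.com www.jun.com"

# Request "/" from every controller node for every host and print the HTTP status.
# curl reports 000 when the connection itself fails.
check_hosts() {
  local ip host code
  for ip in $INGRESS_IPS; do
    for host in $HOSTS; do
      # -o /dev/null discards the body; -w prints only the status code
      code=$(curl -s -o /dev/null -w '%{http_code}' -H "Host: ${host}" "http://${ip}/") || true
      echo "${ip} ${host} ${code}"
    done
  done
}
```

With both replicas healthy, every line should end in 200.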

3. Create pods and expose them (URL-based load balancing)

# Deployments and Services for URL-based routing

[root@master1 ingress]# cat nginx-svc-3.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy-3
  labels:
    app: nginx-url-3
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-url-3
  template:
    metadata:
      labels:
        app: nginx-url-3
    spec:
      containers:
        - name: nginx-url-3
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc-3
  labels:
    app: nginx-svc-3
spec:
  selector:
    app: nginx-url-3
  ports:
    - name: name-of-service-port
      protocol: TCP
      port: 80
      targetPort: 80

[root@master1 ingress]# cat nginx-svc-4.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy-4
  labels:
    app: nginx-url-4
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-url-4
  template:
    metadata:
      labels:
        app: nginx-url-4
    spec:
      containers:
        - name: nginx-url-4
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc-4
  labels:
    app: nginx-svc-4
spec:
  selector:
    app: nginx-url-4
  ports:
    - name: name-of-service-port
      protocol: TCP
      port: 80
      targetPort: 80

# URL-based Ingress configuration

[root@master1 ingress]# cat ingress-url.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: url-ingress
spec:
  # Selects the "nginx" IngressClass defined in ingress-controller-deploy.yaml;
  # the deprecated kubernetes.io/ingress.class annotation is not needed alongside it
  ingressClassName: nginx
  rules:
    - host: www.zhangjun.com
      http:
        paths:
          - path: /zz
            pathType: Prefix
            backend:
              service:
                name: nginx-svc-3
                port:
                  number: 80
          - path: /jj
            pathType: Prefix
            backend:
              service:
                name: nginx-svc-4
                port:
                  number: 80

[root@master1 ingress]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

sc-ingress nginx www.zhang.com,www.jun.com 192.168.68.203,192.168.68.204 80 23m

url-ingress nginx www.zhangjun.com 192.168.68.203,192.168.68.204 80 35s
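With `pathType: Prefix`, Kubernetes compares the rule path with the request path element by element, splitting on `/`. Some consequences:

- `/zz` matches `/zz`, `/zz/` and `/zz/index.html`, but not `/zzz`.
- When several rules match, the longest matching path takes precedence.

The following is a simplified model of that selection logic, not ingress-nginx's actual code. The rule table mirrors ingress.yaml and ingress-url.yaml, and `prefix_match` and `route` are hypothetical names.

```bash
# Simplified Kubernetes "Prefix" matching: compare path elements split on "/"
prefix_match() {  # $1 = rule path, $2 = request path
  local rule
  rule=${1%/}                     # a trailing "/" on the rule path is ignored
  [ -z "$rule" ] && return 0      # the rule "/" matches every request
  [ "$2" = "$rule" ] && return 0
  case $2 in
    "$rule"/*) return 0 ;;        # e.g. /zz matches /zz/index.html
  esac
  return 1                        # e.g. /zz does not match /zzz
}

# Rules from ingress.yaml and ingress-url.yaml: host, path, backend Service
RULES='www.zhang.com / nginx-svc-1
www.jun.com / nginx-svc-2
www.zhangjun.com /zz nginx-svc-3
www.zhangjun.com /jj nginx-svc-4'

# Print the Service a request would reach; the longest matching path wins
route() {  # $1 = Host header, $2 = request path
  local best="" best_len=-1 h p svc
  while read -r h p svc; do
    if [ "$h" = "$1" ] && prefix_match "$p" "$2" && [ "${#p}" -gt "$best_len" ]; then
      best=$svc
      best_len=${#p}
    fi
  done <<EOF
$RULES
EOF
  echo "${best:-no rule matched (404 from the controller default backend)}"
}
```

For example, `route www.zhangjun.com /zz/index.html` prints `nginx-svc-3`, while `route www.zhangjun.com /zzz` matches no rule.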

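# This is URL-based load balancing: ingress-url.yaml sends www.zhangjun.com/zz to nginx-svc-3 and www.zhangjun.com/jj to nginx-svc-4, so users requesting different paths are split across different backends. The request path is passed to nginx unchanged, which means nginx looks for /usr/share/nginx/html/zz and /usr/share/nginx/html/jj. The containers do not have these directories, so we create them by hand inside the pods and put an index page in each for access and verification.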

[root@master1 ingress]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deploy-3-6c8dd95b89-5qkpk 1/1 Running 0 63s

nginx-deploy-3-6c8dd95b89-tn5vp 1/1 Running 0 63s

nginx-deploy-4-58984c95c5-69q4x 1/1 Running 0 2s

nginx-deploy-4-58984c95c5-rglx4 1/1 Running 0 2s

[root@master1 ingress]# kubectl exec -it nginx-deploy-3-6c8dd95b89-5qkpk -- bash
root@nginx-deploy-3-6c8dd95b89-5qkpk:/# cd /usr/share/nginx/html
root@nginx-deploy-3-6c8dd95b89-5qkpk:/usr/share/nginx/html# ls
50x.html index.html
root@nginx-deploy-3-6c8dd95b89-5qkpk:/usr/share/nginx/html# mkdir zz
root@nginx-deploy-3-6c8dd95b89-5qkpk:/usr/share/nginx/html# cd zz
root@nginx-deploy-3-6c8dd95b89-5qkpk:/usr/share/nginx/html/zz# echo "i love zz" >index.html
root@nginx-deploy-3-6c8dd95b89-5qkpk:/usr/share/nginx/html/zz# cat index.html
i love zz

[root@master1 ingress]# kubectl exec -it nginx-deploy-4-58984c95c5-69q4x -- bash

root@nginx-deploy-4-58984c95c5-69q4x:/# cd /usr/share/nginx/html/

root@nginx-deploy-4-58984c95c5-69q4x:/usr/share/nginx/html# ls

50x.html index.html

root@nginx-deploy-4-58984c95c5-69q4x:/usr/share/nginx/html# mkdir jj

root@nginx-deploy-4-58984c95c5-69q4x:/usr/share/nginx/html# cd jj

root@nginx-deploy-4-58984c95c5-69q4x:/usr/share/nginx/html/jj# echo "i hate jj" >index.html

root@nginx-deploy-4-58984c95c5-69q4x:/usr/share/nginx/html/jj# cat index.html

i hate jj
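The commands above touch only one of the two replicas of each Deployment (`replicas: 2`). A loop over every pod selected by the Deployment's label can seed all replicas at once.

This is a sketch. `seed_pages` is a hypothetical helper, and the label selectors come from nginx-svc-3.yaml and nginx-svc-4.yaml. Pages written this way live only in the container filesystem and disappear when a pod is recreated. A shared volume, such as the NFS-backed PV/PVC from section II, would make them durable.

```bash
# Write an index page under a sub-path in EVERY pod matching a label selector
seed_pages() {  # $1 = label selector, $2 = directory name, $3 = page text
  local pod
  for pod in $(kubectl get pods -l "$1" -o name); do
    # The directory and text are passed as positional args to sh to avoid quoting problems
    kubectl exec "$pod" -- sh -c \
      'mkdir -p "/usr/share/nginx/html/$1" && printf "%s\n" "$2" > "/usr/share/nginx/html/$1/index.html"' \
      sh "$2" "$3" || return 1
  done
}
```

Usage: `seed_pages app=nginx-url-3 zz "i love zz"` and `seed_pages app=nginx-url-4 jj "i hate jj"`.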

# Verify again from the ansible+nfs host, after adding www.zhangjun.com to its /etc/hosts

[root@ansiblenfs ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.68.203 www.zhang.com

192.168.68.204 www.jun.com

192.168.68.203 www.zhangjun.com

192.168.68.204 www.zhangjun.com

[root@ansiblenfs ~]# curl www.zhangjun.com/zz/index.html

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx/1.27.1</center>

</body>

</html>

[root@ansiblenfs ~]# curl www.zhangjun.com/zz/index.html

i love zz

[root@ansiblenfs ~]# curl www.zhangjun.com/jj/index.html

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx/1.27.1</center>

</body>

</html>

[root@ansiblenfs ~]# curl www.zhangjun.com/jj/index.html

i hate jj

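# The 404 errors above mean the requested URL did not exist on the pod that served the request. For both nginx-svc-3 and nginx-svc-4, only one of the two pods got the new directory and index page. ingress-nginx spreads requests across the Service's endpoints (round-robin by default), so every other request lands on the pod without the page. The fix is to repeat the same steps in the other pod; in general, create the directory and index page in every pod.

VIII. Build the CI/CD environment: install Jenkins on k8s master2 and deploy a Harbor registry on a separate machine.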

1. Install Jenkins

# Deploy Jenkins into k8s

# 1. Install git

[root@master1 jenkins]# yum install git -y

# 2. Download the YAML files

[root@master1 jenkins]# git clone https://github.com/scriptcamp/kubernetes-jenkins

[root@master1 jenkins]# ls

kubernetes-jenkins

[root@master1 jenkins]# cd kubernetes-jenkins/

[root@master1 kubernetes-jenkins]# ls

deployment.yaml namespace.yaml README.md serviceAccount.yaml service.yaml volume.yaml

# 3. Create the namespace

[root@master1 kubernetes-jenkins]# cat namespace.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: devops-tools

[root@master1 kubernetes-jenkins]# kubectl apply -f namespace.yaml

namespace/devops-tools created

[root@master1 kubernetes-jenkins]# kubectl get ns

NAME              STATUS   AGE
default           Active   22h
devops-tools      Active   19s
kube-node-lease   Active   22h
kube-public       Active   22h
kube-system       Active   22h

# 4. Create the service account, the cluster role, and the binding between them

[root@master1 kubernetes-jenkins]# cat serviceAccount.yaml

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: jenkins-admin
rules:
  - apiGroups: [""]
    resources: ["*"]
    verbs: ["*"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: jenkins-admin
  namespace: devops-tools
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: jenkins-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: jenkins-admin
subjects:
- kind: ServiceAccount
  name: jenkins-admin
  namespace: devops-tools   # required for ServiceAccount subjects

[root@master1 kubernetes-jenkins]# kubectl apply -f serviceAccount.yaml

clusterrole.rbac.authorization.k8s.io/jenkins-admin created

serviceaccount/jenkins-admin created

clusterrolebinding.rbac.authorization.k8s.io/jenkins-admin created
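To confirm what the new account can actually do, `kubectl auth can-i` with `--as` impersonates the ServiceAccount (run it as a cluster admin, e.g. on master1). Note that `apiGroups: [""]` matches only the core API group (pods, services, secrets and so on), so resources in other groups, such as `deployments` in the `apps` group, are not covered by this ClusterRole. The wrapper name `check_jenkins_rbac` is hypothetical; in a cluster with no other bindings for this account, the first two checks should answer `yes` and the last one `no`.

```bash
#!/usr/bin/env bash
# Sketch: ask the API server what the jenkins-admin ServiceAccount may do.

check_jenkins_rbac() {
  local sa="system:serviceaccount:devops-tools:jenkins-admin"
  local verb resource
  # Each line is "<verb> <resource>"; deployments belong to the "apps" group,
  # which the ClusterRole above (apiGroups: [""]) does not include.
  while read -r verb resource; do
    printf '%-8s %-12s %s\n' "$verb" "$resource" \
      "$(kubectl auth can-i "$verb" "$resource" --as="$sa" -n devops-tools)"
  done <<'EOF'
list pods
get secrets
create deployments
EOF
}
```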

# 5. Create the volume that stores the Jenkins data

[root@master1 kubernetes-jenkins]# cat volume.yaml

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins-pv-volume
  labels:
    type: local
spec:
  storageClassName: local-storage
  claimRef:
    name: jenkins-pv-claim
    namespace: devops-tools
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  local:
    path: /mnt
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - node1   # change this to the name of a node in your k8s cluster
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-pv-claim
  namespace: devops-tools
spec:
  storageClassName: local-storage
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi
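The `values` entry under `nodeAffinity` must match a node's `kubernetes.io/hostname` label exactly; otherwise no node satisfies the volume's affinity and the Jenkins pod stays Pending. The `describe` output further down reports `k8snode1` rather than `node1`, so check the name in your own cluster. `kubectl get nodes -L kubernetes.io/hostname` lists the label values; the hypothetical helper below turns that into a yes/no check before you apply the file.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if some node carries kubernetes.io/hostname=<name>.

node_hostname_exists() {
  local want="$1"   # e.g. node1
  # Print one hostname label per node; the dot inside the label key is escaped
  # for kubectl's JSONPath. grep -x matches whole lines, -F treats the name literally.
  kubectl get nodes \
    -o jsonpath='{range .items[*]}{.metadata.labels.kubernetes\.io/hostname}{"\n"}{end}' \
    | grep -qxF "$want"
}
```

For example: `node_hostname_exists node1 && kubectl apply -f volume.yaml`.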

[root@master1 kubernetes-jenkins]# kubectl apply -f volume.yaml

storageclass.storage.k8s.io/local-storage created

persistentvolume/jenkins-pv-volume created

persistentvolumeclaim/jenkins-pv-claim created

[root@master1 kubernetes-jenkins]# kubectl get pv

NAME                CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                           STORAGECLASS    REASON   AGE
jenkins-pv-volume   10Gi       RWO            Retain           Bound    devops-tools/jenkins-pv-claim   local-storage            33s

[root@master1 kubernetes-jenkins]# kubectl describe pv jenkins-pv-volume

Name:            jenkins-pv-volume
Labels:          type=local
Annotations:     <none>
Finalizers:      [kubernetes.io/pv-protection]
StorageClass:    local-storage
Status:          Bound
Claim:           devops-tools/jenkins-pv-claim
Reclaim Policy:  Retain
Access Modes:    RWO
VolumeMode:      Filesystem
Capacity:        10Gi
Node Affinity:
  Required Terms:
    Term 0:        kubernetes.io/hostname in [k8snode1]
Message:
Source:
    Type:  LocalVolume (a persistent volume backed by local storage on a node)
    Path:  /mnt
Events:            <none>
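Before moving on to the Deployment, it is worth confirming that the claim itself is `Bound`. In the transcript the PV already shows `Bound`, most likely because the `claimRef` in `volume.yaml` pre-binds it to `jenkins-pv-claim`; with `WaitForFirstConsumer` an unbound claim would otherwise wait for a pod to use it. A minimal polling sketch, assuming the claim and namespace names from the manifests above (the function name `wait_for_bound` is hypothetical):

```bash
#!/usr/bin/env bash
# Sketch: poll a PVC until its phase is "Bound", or give up after a number of checks.

wait_for_bound() {
  local pvc="${1:-jenkins-pv-claim}"   # claim name from volume.yaml
  local ns="${2:-devops-tools}"        # namespace from namespace.yaml
  local tries="${3:-30}"               # number of checks, 2 seconds apart
  local phase="" i
  for ((i = 0; i < tries; i++)); do
    # Empty phase if the claim does not exist yet (kubectl's error is discarded).
    phase=$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.status.phase}' 2>/dev/null) || phase=""
    if [ "$phase" = "Bound" ]; then
      echo "$ns/$pvc is Bound"
      return 0
    fi
    sleep 2
  done
  echo "$ns/$pvc is still ${phase:-not found} after $tries checks" >&2
  return 1
}
```

Running `wait_for_bound` with no arguments checks `devops-tools/jenkins-pv-claim`.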

# 6. Deploy Jenkins

[root@master1 kubernetes-jenkins]# cat deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins
  namespace: devops-tools
spec:
  replicas: 1
  selector:
    matchLabels:
      app: jenkins-server
  template:
    metadata:
      labels:
        app: jenkins-server
    spec:
      securityContext:
        fsGroup: 1000
        runAsUser: 1000
      serviceAccountName: jenkins-admin
      containers:
        - name: jenkins