DevOps Notes (Part 4): Building an Enterprise-Grade DevOps Container Cloud Platform with Jenkins and K8S

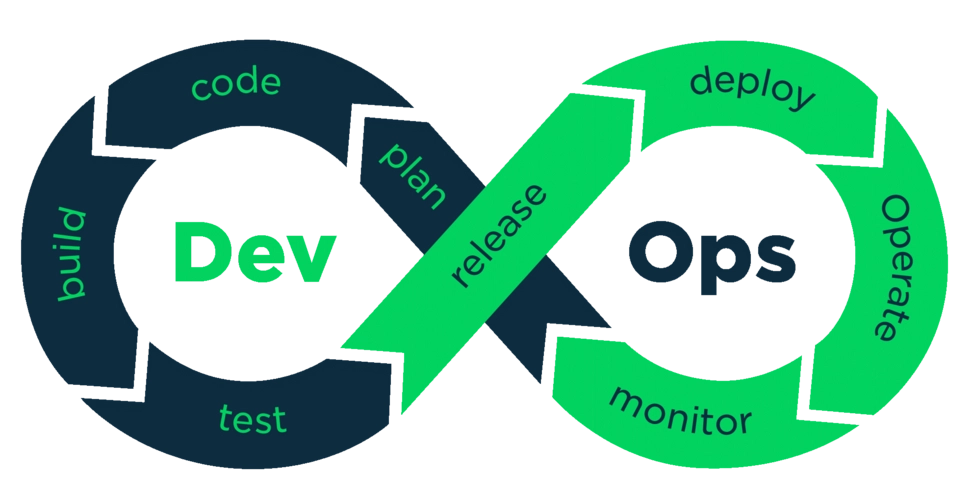


I. What Is DevOps

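In DevOps, Dev stands for Development and Ops stands for Operations. In one sentence, DevOps breaks down the wall between development and operations so that the two work as one. The overall DevOps flow is agile development -> continuous integration -> continuous delivery -> continuous deployment.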

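1. Agile development

Agile development raises development efficiency, keeps pace with user requirements and shortens the development cycle. It has two stages: writing code and building code. Code can be managed with git or svn. Java code, for example, is built with maven.

2. Continuous Integration (CI)

Continuous integration is the process of automatically detecting, pulling, building and (in most cases) unit-testing source code after it changes. CI is the stage that starts the pipeline, although some pre-validation (usually called pre-flight checks) is sometimes placed before it. The goal of CI is to confirm quickly that a developer's newly committed change is good and fit for further use in the code base.

Common CI tools:

1. Jenkins: written in Java, and currently the most widely used and most popular CI tool. Jenkins can automatically detect updates in a git or svn repository, build the latest code, and publish the built artifacts or images to production. It can also run distributed builds and load tests across multiple machines.
2. TeamCity
3. Travis CI
4. Go CD
5. Bamboo
6. GitLab CI
7. Codeship

Its main benefits are:

1) Errors are found early: every integration is verified by an automated build (compiling, releasing, automated tests), so a problem in any of these steps surfaces early.
2) Errors are found quickly: each finished piece of code is integrated into the mainline right away, so errors show up quickly and are easier to locate.
3) Team performance improves: code is updated quickly, and small problems are found and fixed in time, so the team can build a better product.
4) Branches do not drift far from the mainline: frequent integration keeps branch code regularly merged into the mainline. Suppose a unit test fails or a bug appears, and a developer has to roll the repository back to a bug-free state without debugging. In that case only a small amount of code is lost.

The purpose of CI is to improve code quality and let the product iterate quickly. Its core rule is that code must pass the automated tests before it is integrated into the mainline. If even one test case fails, the change cannot be integrated.

3. Continuous Delivery

Continuous delivery builds on CI. The integrated code is deployed to production-like environments that resemble the real runtime environment, and then handed to the QA team or to users for review. If the review passes, the code moves on to production. If all the code were delivered at once after everything was finished, many problems would surface together and be hard to fix. Delivering downstream after every code update finds and fixes problems early, before they pile up.

4. Continuous Deployment (CD)

Continuous deployment means that once the delivered code passes review, it is automatically deployed to production. It is the most advanced stage of continuous delivery. Puppet, SaltStack and Ansible are popular tools for this stage. Containerization tools also play an important role in deployment: Docker and k8s help keep development, test and production environments consistent. In addition, k8s can scale workloads out and in automatically.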
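The core CI rule above says a change is integrated only if every automated check passes. A small shell gate can illustrate it. This is a minimal sketch and not part of Jenkins. `ci_gate` is a hypothetical helper, and each check is assumed to be a command or function that exits non-zero on failure.

```shell
#!/usr/bin/env bash
# Minimal CI gate sketch: integrate only if every check passes.
# ci_gate is a hypothetical helper; each argument is a command or
# function name that exits non-zero when its check fails.
ci_gate() {
  local check
  for check in "$@"; do
    # Stop at the first failing check: one failure blocks the integration.
    if ! "$check"; then
      echo "gate: '$check' failed, change is not integrated" >&2
      return 1
    fi
  done
  echo "gate: all checks passed, change can be integrated"
}
```

Example: `ci_gate run_unit_tests run_lint`. Both names are placeholders for your own test commands.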

II. What k8s Can Do in DevOps

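1. Automation: continuous delivery -> continuous deployment.

2. Multi-cluster management: depending on customer needs, you can deploy separate kubernetes clusters for development, test and production. Each cluster runs on its own physical resources, so the environments cannot affect one another. Alternatively, you can build a single k8s cluster and divide it into namespaces, one per environment.

3. Consistency across environments: Kubernetes is a container orchestration tool (the cluster in this article uses containerd as its runtime). Container images are immutable and bundle the OS, business code, runtime environment, libraries and directory structure. The images are kept in our private registry. As long as every environment pulls its images from that registry, the environments stay consistent.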

III. Workflow of the DevOps Container Cloud Platform

Jenkins master node:

maintains the pipeline.

Jenkins agent (slave) node:

runs the steps of the master's pipeline.

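When a pipeline build starts on the Jenkins master, the master calls the k8s apiserver to create a pod in the k8s cluster. That pod is the Jenkins agent, and every step in the pipeline runs on it.

Jenkins -> k8s: k8s creates a pod for Jenkins, and the pod runs a Jenkins (agent) service.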

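Master Jenkins pipeline:

Step 1: pull the code from gitlab.

Step 2: for Java projects, compile and package the code and send it to the code-scanning platform sonarqube. The build depends on the language: maven produces a jar or war, go build produces an executable, and Python code is run directly from its .py files.

Step 3: build an image from the jar or war package with a Dockerfile.

Step 4: push the image to the harbor registry.

Step 5: write the YAML files for the resources of the development, test and production environments.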

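The master Jenkins starts the pipeline and calls the k8s API to create a pod in k8s. This pod also runs a Jenkins service, but as a Jenkins agent, and all five steps above are carried out inside it. When they finish, the agent pod is deleted. The master Jenkins acts like a foreman that only hands out the work.

IV. Setting Up Harbor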

Set the hostname

hostnamectl set-hostname harbor.cn && bash

Edit the hosts file

vim /etc/hosts

192.168.1.100 lx100

192.168.1.110 lx110

192.168.1.120 lx120

192.168.1.130 harbor.cn
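The Harbor host and every K8S node need the same hosts entries. This minimal sketch makes the edit repeatable. `add_host_entry` is a hypothetical helper that appends `IP NAME` only when NAME is not already mapped. It takes the file as a parameter, so you can try it on a copy before touching /etc/hosts.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless NAME is already mapped there.
# Hypothetical helper; try it on a copy before using /etc/hosts.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # Ignore comment lines; look for NAME in any hostname column (field 2 onwards).
  if [ -f "$file" ] && awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    echo "$name is already mapped in $file"
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >>"$file"
}
```

Example: `add_host_entry /etc/hosts 192.168.1.130 harbor.cn`. An existing entry with a different IP is left alone, so a changed address still has to be fixed by hand.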

Disable SELinux permanently (takes effect after a reboot)

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

Disable SELinux for the current session (switches it to permissive mode)

setenforce 0

Configure the yum repository

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Configure time synchronization

yum -y install chrony

vim /etc/chrony.conf

server ntp1.aliyun.com iburst

server ntp2.aliyun.com iburst

server ntp1.tencent.com iburst

server ntp2.tencent.com iburst

Enable chronyd at boot and start it now

systemctl enable chronyd --now

Add a cron job that restarts chronyd every minute to force regular resynchronization

crontab -e

* * * * * /usr/bin/systemctl restart chronyd

Adjust kernel parameters

modprobe br_netfilter

cat <<EOF >/etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf
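To confirm that the three kernel parameters took effect, read the live values back from /proc/sys. This is a minimal sketch. `sysctl_path` and `check_sysctl` are hypothetical helpers, and the bridge keys only exist once br_netfilter is loaded.

```shell
#!/usr/bin/env bash
# sysctl_path KEY: print the /proc/sys file behind a sysctl key
# (dots in the key become directory separators).
sysctl_path() {
  printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr . /)"
}

# check_sysctl KEY EXPECTED: succeed if the live value equals EXPECTED.
check_sysctl() {
  local key=$1 expected=$2 actual
  if ! actual=$(cat "$(sysctl_path "$key")" 2>/dev/null); then
    echo "$key: not available (is br_netfilter loaded?)" >&2
    return 1
  fi
  if [ "$actual" != "$expected" ]; then
    echo "$key = $actual (expected $expected)" >&2
    return 1
  fi
  echo "$key = $actual"
}
```

Example: `for k in net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward; do check_sysctl "$k" 1; done`. Note that modprobe does not persist across reboots. The usual way to load br_netfilter at boot is to list it in a file under /etc/modules-load.d/.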

Add the same entries to the hosts file on the K8S nodes

vim /etc/hosts

192.168.1.100 lx100

192.168.1.110 lx110

192.168.1.120 lx120

192.168.1.130 harbor.cn

Set up password-less SSH from the K8S control node to the harbor.cn machine

Generate a key pair

ssh-keygen -t rsa

Copy the public key to the target machine (if you already have a key pair, you can copy it directly)

ssh-copy-id -i .ssh/id_rsa.pub root@harbor.cn

Issue a self-signed certificate for Harbor

mkdir /data/ssl -p

cd /data/ssl

Generate the CA certificate

Generate a 3072-bit key, i.e. the private key

openssl genrsa -out ca.key 3072

Generate the certificate ca.pem. -days 3650 makes it valid for 3650 days (10 years). Answer the prompts as shown below.

openssl req -new -x509 -days 3650 -key ca.key -out ca.pem

Country Name (2 letter code) [XX]:CN

State or Province Name (full name) []:beijing

Locality Name (eg, city) [Default City]:beijing

Organization Name (eg, company) [Default Company Ltd]:lx

Organizational Unit Name (eg, section) []:CA

Common Name (eg, your name or your server's hostname) []:harbor.cn

Email Address []:lx@163.com

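Generate the certificate for the domain

Generate a 3072-bit key, i.e. the private key

openssl genrsa -out harbor.key 3072

Generate a certificate signing request (CSR) from that key and answer the prompts as shown below (leave the extra attributes empty)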

openssl req -new -key harbor.key -out harbor.csr

Country Name (2 letter code) [XX]:CN

State or Province Name (full name) []:beijing

Locality Name (eg, city) [Default City]:beijing

Organization Name (eg, company) [Default Company Ltd]:lx

Organizational Unit Name (eg, section) []:CA

Common Name (eg, your name or your server's hostname) []:harbor.cn

Email Address []:lx@163.com

Please enter the following 'extra' attributes

to be sent with your certificate request

A challenge password []:

An optional company name []:

Sign the certificate with the CA

openssl x509 -req -in harbor.csr -CA ca.pem -CAkey ca.key -CAcreateserial -out harbor.pem -days 3650

Signature ok

subject=C = CN, ST = beijing, L = beijing, O = lx, OU = CA, CN = harbor.cn, emailAddress = lx@163.com

Getting CA Private Key

Check that the certificate is valid

openssl x509 -noout -text -in harbor.pem
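The certificate above puts harbor.cn only in the CN field. Current Go-based clients such as docker and containerd verify host names against the subjectAltName extension and no longer fall back to the CN. So if you ever trust the CA instead of skipping verification, the server certificate needs a SAN.

This is a minimal sketch that assumes the same files in /data/ssl as above. `san_ext` and `sign_with_san` are hypothetical helpers, and the SAN values are this article's host name and IP.

```shell
#!/usr/bin/env bash
# san_ext DNS_NAME [IP]: print an OpenSSL extension line with subjectAltName.
# Hypothetical helper; the values used below are this article's Harbor host.
san_ext() {
  local dns=$1 ip=${2:-}
  if [ -n "$ip" ]; then
    printf 'subjectAltName=DNS:%s,IP:%s\n' "$dns" "$ip"
  else
    printf 'subjectAltName=DNS:%s\n' "$dns"
  fi
}

# sign_with_san: re-sign harbor.csr with the SAN extension, then verify the chain.
# Run it in /data/ssl, where ca.pem, ca.key and harbor.csr already exist.
sign_with_san() {
  san_ext harbor.cn 192.168.1.130 >harbor.ext
  openssl x509 -req -in harbor.csr -CA ca.pem -CAkey ca.key -CAcreateserial \
    -out harbor.pem -days 3650 -extfile harbor.ext
  openssl verify -CAfile ca.pem harbor.pem   # prints "harbor.pem: OK" on success
}
```

After running `sign_with_san`, `openssl x509 -noout -text -in harbor.pem` should list both names under "X509v3 Subject Alternative Name".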

Install docker

yum -y install docker-ce

Start docker and enable it at boot

systemctl start docker && systemctl enable docker

Configure a registry mirror (accelerator) and mark Harbor as an insecure registry

vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://vljhz7wt.mirror.aliyuncs.com"],

"insecure-registries":["192.168.1.130","harbor.cn"]

}

Restart docker

systemctl restart docker
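You can generate the daemon.json above instead of editing it by hand. This helps because every machine that talks to Harbor needs the same insecure-registries entry. This is a minimal sketch. `write_daemon_json` is a hypothetical helper that overwrites the whole file, so it only suits hosts where daemon.json holds nothing else.

```shell
#!/usr/bin/env bash
# write_daemon_json OUT MIRROR REGISTRY...
# Writes a docker daemon.json with one registry mirror and the given
# insecure registries. Hypothetical helper; it replaces the whole file.
write_daemon_json() {
  local out=$1 mirror=$2
  shift 2
  local regs="" r
  for r in "$@"; do
    regs+="${regs:+,}\"$r\""   # builds "a","b" without a trailing comma
  done
  cat >"$out" <<EOF
{
  "registry-mirrors": ["$mirror"],
  "insecure-registries": [$regs]
}
EOF
}
```

Example: `write_daemon_json /etc/docker/daemon.json https://vljhz7wt.mirror.aliyuncs.com 192.168.1.130 harbor.cn`. Before `systemctl restart docker`, check the file with `python3 -m json.tool /etc/docker/daemon.json`, because a malformed daemon.json keeps docker from starting.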

Install harbor

Create the installation directory

mkdir /data/install -p

Upload the Harbor offline package harbor-offline-installer-v2.3.0-rc3.tgz to this directory

Download the offline package from:

https://github.com/goharbor/harbor/releases/tag/

Extract the package (in /data/install)

tar zxf harbor-offline-installer-v2.3.0-rc3.tgz

cd harbor

cp harbor.yml.tmpl harbor.yml

vim harbor.yml

hostname: harbor.cn
https:
  port: 443
  certificate: /data/ssl/harbor.pem
  private_key: /data/ssl/harbor.key
harbor_admin_password: Harbor12345

Install docker-compose

Download the binary from:

https://github.com/docker/compose/

Upload it to the server and move it into place

mv docker-compose-Linux-x86_64.64 /usr/local/bin/docker-compose

Make it executable

chmod +x /usr/local/bin/docker-compose

Load the Harbor images with docker load -i

docker load -i docker-harbor-2-3-0.tar.gz

Install

cd /data/install/harbor

./install.sh

Stop:

cd /data/install/harbor

docker-compose stop

Start:

cd /data/install/harbor

docker-compose up -d

Edit the hosts file on your own computer (C:\Windows\System32\drivers\etc\hosts)

and add:

192.168.1.130 harbor.cn

Then open harbor.cn in a browser:

Create a project named test

Test harbor from another machine

vim /etc/docker/daemon.json

Add:

"insecure-registries":["192.168.1.130","harbor.cn"]

Log in to harbor

docker login 192.168.1.130

Username: admin

Password: Harbor12345

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

Tag the image

docker tag busybox:1.28 192.168.1.130/test/busybox:1.28

Push the image

docker push 192.168.1.130/test/busybox:1.28

Pull the image

docker pull 192.168.1.130/test/busybox:1.28

1.28: Pulling from test/busybox

Digest: sha256:74f634b1bc1bd74535d5209589734efbd44a25f4e2dc96d78784576a3eb5b335

Status: Image is up to date for 192.168.1.130/test/busybox:1.28

192.168.1.130/test/busybox:1.28

docker images

REPOSITORY                   TAG        IMAGE ID       CREATED       SIZE
php                          5-apache   24c791995c1e   4 years ago   355MB
192.168.1.130/test/busybox   1.28       8c811b4aec35   5 years ago   1.15MB
busybox                      1.28       8c811b4aec35   5 years ago   1.15MB
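Harbor image names follow the pattern registry/project/image:tag, and the project (test here) has to exist in Harbor before the push. This minimal sketch builds such a reference. `harbor_ref` is a hypothetical helper that defaults the tag to latest, as docker does.

```shell
#!/usr/bin/env bash
# harbor_ref REGISTRY PROJECT IMAGE[:TAG]
# Prints REGISTRY/PROJECT/IMAGE:TAG, using "latest" when no tag is given.
# Hypothetical helper for illustration.
harbor_ref() {
  local registry=$1 project=$2 image=$3
  case "${image##*/}" in
    *:*) ;;                       # the last path part already carries a tag
    *) image="$image:latest" ;;
  esac
  printf '%s/%s/%s\n' "$registry" "$project" "$image"
}
```

Example: `src=busybox:1.28; dst=$(harbor_ref 192.168.1.130 test "$src"); docker tag "$src" "$dst" && docker push "$dst"`.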

V. Installing Jenkins

1. There are two ways to install Jenkins:

- Pull the jenkins image with docker and start the service from that image

- Deploy the Jenkins service in k8s (the method used here)

Install the NFS service

Install the NFS server on the master node

yum install nfs-utils -y && systemctl start nfs-server && systemctl enable nfs-server

Install the NFS client on the worker nodes (kubelet needs nfs-utils to mount NFS volumes; the NFS server service does not need to run there)

yum install nfs-utils -y

Create an NFS shared directory on the master node

mkdir /data/v1 -p

vim /etc/exports

/data/v1 *(rw,no_root_squash)

exportfs -arv

Deploy Jenkins in Kubernetes

Create the namespace

kubectl create ns jenkins-k8s

Create the PV

cat pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins-k8s-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  nfs:
    server: 192.168.1.100
    path: /data/v1

Apply the manifest

kubectl apply -f pv.yaml

Create the PVC

cat pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-k8s-pvc
  namespace: jenkins-k8s
spec:
  resources:
    requests:
      storage: 10Gi
  accessModes:
  - ReadWriteMany

Apply the manifest

kubectl apply -f pvc.yaml

Check that the PVC is bound to the PV

kubectl get pvc -n jenkins-k8s
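The PVC should show STATUS Bound. This minimal sketch reads that column from the table printed by `kubectl get pvc`. `pvc_status` is a hypothetical helper; `kubectl get pvc jenkins-k8s-pvc -n jenkins-k8s -o jsonpath='{.status.phase}'` returns the same value directly.

If the PVC stays Pending and the cluster has a default StorageClass, the claim has probably been assigned that class and will not bind to this class-less PV. The usual fix is to set `storageClassName: ""` on the PVC.

```shell
#!/usr/bin/env bash
# pvc_status NAME < output of `kubectl get pvc`
# Prints the STATUS column for NAME; fails if NAME is not in the table.
# Hypothetical helper for illustration.
pvc_status() {
  # Skip the header line; STATUS is the second whitespace-separated column.
  awk -v n="$1" 'NR > 1 && $1 == n { print $2; found = 1 } END { exit !found }'
}
```

Example: `kubectl get pvc -n jenkins-k8s | pvc_status jenkins-k8s-pvc`.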

Create the ServiceAccount and bind it to cluster-admin

kubectl create sa jenkins-k8s-sa -n jenkins-k8s

kubectl create clusterrolebinding jenkins-k8s-sa-cluster --clusterrole=cluster-admin --serviceaccount=jenkins-k8s:jenkins-k8s-sa

Import the images into containerd (on each node that may run the pods)

ctr -n=k8s.io images import jenkins-slave-latest.tar.gz

ctr -n=k8s.io images import jenkins2.421.tar.gz

Deploy Jenkins with a Deployment

cat jenkins-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins
  namespace: jenkins-k8s
  labels:
    app: jenkins
spec:
  replicas: 1               # a single controller: two Jenkins replicas must not share one jenkins_home
  selector:
    matchLabels:
      app: jenkins
  template:
    metadata:
      labels:
        app: jenkins
    spec:
      serviceAccountName: jenkins-k8s-sa
      containers:
      - name: jenkins
        image: docker.io/jenkins/jenkins:2.421
        imagePullPolicy: IfNotPresent
        ports:
        - name: web
          containerPort: 8080
          protocol: TCP
        - name: agent
          containerPort: 50000
          protocol: TCP
        livenessProbe:            # liveness probe
          httpGet:                # request /login on port 8080
            path: /login
            port: 8080
          initialDelaySeconds: 60 # wait 60s after the container starts before the first probe
          timeoutSeconds: 5       # a probe with no response within 5s counts as a failure
          failureThreshold: 12    # after 12 consecutive failures the container is restarted
        readinessProbe:           # readiness probe
          httpGet:
            path: /login
            port: 8080
          initialDelaySeconds: 60
          failureThreshold: 12
          timeoutSeconds: 5
        volumeMounts:
        - name: jenkins-volumes
          subPath: jenkins_home
          mountPath: /var/jenkins_home
      volumes:
      - name: jenkins-volumes
        persistentVolumeClaim:
          claimName: jenkins-k8s-pvc

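On the NFS server, give the jenkins user (UID/GID 1000 in the official image) ownership of the shared directory before applying the Deployment

chown -R 1000:1000 /data/v1/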

Apply the manifest

kubectl apply -f jenkins-deployment.yaml

kubectl get deployment -n=jenkins-k8s

Create a Service for the Jenkins frontend

cat jenkins-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: jenkins-svc
  namespace: jenkins-k8s
  labels:
    app: jenkins
spec:
  selector:
    app: jenkins
  type: NodePort
  ports:
  - name: web
    port: 8080
    targetPort: web
    nodePort: 30002   # must be within the NodePort range (30000-32767 by default)
  - name: target
    port: 50000
    targetPort: agent

Apply the manifest

kubectl apply -f jenkins-service.yaml
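The nodePort must fall inside the cluster's NodePort range. That range is 30000-32767 unless kube-apiserver was started with a different --service-node-port-range. This is a minimal check; `valid_nodeport` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# valid_nodeport PORT [MIN] [MAX]
# Succeeds if PORT lies inside the NodePort range (default 30000-32767).
# Hypothetical helper for illustration.
valid_nodeport() {
  local port=$1 min=${2:-30000} max=${3:-32767}
  case "$port" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}
```

Example: `valid_nodeport 30002 && echo "30002 is usable"`.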

Get the initial admin password

cat /data/v1/jenkins_home/secrets/initialAdminPassword

8a817d95a7804f34bf2d80e7ec25291f

Then open Jenkins in a browser through NodePort 30002 on any node:

2. Installing plugins offline

Check the version

Download the offline plugin from the Tsinghua mirror

https://mirrors.tuna.tsinghua.edu.cn/jenkins/plugins/

The version shown above is 1.8.0, so download version 1.8.0 of the gitlab plugin.
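You can fetch offline plugins by URL. This minimal sketch assumes two things: the Tsinghua mirror uses the Jenkins update-center layout <mirror>/<plugin>/<version>/<plugin>.hpi, and the GitLab plugin's ID is gitlab-plugin. `plugin_url` is a hypothetical helper. When Jenkins is offline, an uploaded .hpi does not bring its dependencies with it, so you may need to download missing dependencies the same way.

```shell
#!/usr/bin/env bash
# plugin_url NAME VERSION [MIRROR]
# Prints the .hpi download URL, assuming the mirror follows the layout
# <mirror>/<name>/<version>/<name>.hpi. Hypothetical helper.
plugin_url() {
  local name=$1 version=$2
  local mirror=${3:-https://mirrors.tuna.tsinghua.edu.cn/jenkins/plugins}
  printf '%s/%s/%s/%s.hpi\n' "${mirror%/}" "$name" "$version" "$name"
}
```

Example: `wget "$(plugin_url gitlab-plugin 1.8.0)"`.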

Install it offline by uploading the downloaded .hpi file in the Jenkins plugin manager

3. Configuring k8s (containerd) to pull images from the harbor registry to run pods

Edit /etc/containerd/config.toml on the control node and on every worker node

[plugins."io.containerd.grpc.v1.cri".registry]
  config_path = ""
  [plugins."io.containerd.grpc.v1.cri".registry.auths]
  [plugins."io.containerd.grpc.v1.cri".registry.configs]
    [plugins."io.containerd.grpc.v1.cri".registry.configs."192.168.1.130".tls]
      insecure_skip_verify = true
    [plugins."io.containerd.grpc.v1.cri".registry.configs."192.168.1.130".auth]
      username = "admin"
      password = "Harbor12345"
  [plugins."io.containerd.grpc.v1.cri".registry.headers]
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."192.168.1.130"]
      endpoint = ["https://192.168.1.130:443"]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
      endpoint = ["https://vh3bm52y.mirror.aliyuncs.com","https://registry.docker-cn.com"]
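Each additional private registry needs the same three tables in config.toml. This minimal sketch prints them for one host, in the same containerd 1.x CRI registry style as above. `harbor_containerd_toml` is a hypothetical helper. Paste its output under the existing sections by hand, because TOML does not allow the same table header twice. The password is written in plain text, just as in the original config.

```shell
#!/usr/bin/env bash
# harbor_containerd_toml HOST USER PASSWORD
# Prints the TLS, auth and mirror tables for one private registry in the
# containerd 1.x CRI "registry.configs"/"registry.mirrors" style.
# Hypothetical helper; the heredoc keeps the exact TOML layout.
harbor_containerd_toml() {
  local host=$1 user=$2 pass=$3
  local base='plugins."io.containerd.grpc.v1.cri".registry'
  cat <<EOF
[$base.configs."$host".tls]
  insecure_skip_verify = true
[$base.configs."$host".auth]
  username = "$user"
  password = "$pass"
[$base.mirrors."$host"]
  endpoint = ["https://$host:443"]
EOF
}
```

After restarting containerd, `crictl pull 192.168.1.130/test/tomcat:8.5` is a quick way to check that a node can pull from Harbor.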

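Restart containerd on every node so the new configuration takes effect, then create a test pod

systemctl restart containerd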

# Load the tomcat image

docker load -i tomcat.tar.gz

# Tag the tomcat image for harbor

docker tag tomcat:8.5-jre8-alpine 192.168.1.130/test/tomcat:8.5

Push the image to the registry

docker push 192.168.1.130/test/tomcat:8.5

Write a YAML file to create the pod

cat ceshi.yaml

apiVersion: v1
kind: Pod
metadata:
  name: test
spec:
  containers:
  - name: test
    ports:
    - containerPort: 8080
    image: 192.168.1.130/test/tomcat:8.5
    imagePullPolicy: IfNotPresent

Apply the manifest

kubectl apply -f ceshi.yaml

Check the pod

kubectl get pods

NAME READY STATUS RESTARTS AGE

test 1/1 Running 0 2m16s

4. Testing Jenkins CI/CD

Create a jenkins-demo project in harbor

Install the kubernetes plugin in Jenkins

Configure Jenkins to access the K8S cluster

If the result shows Connection test successful or Connected to Kubernetes 1.26, the test succeeded

Set the Jenkins URL to a domain name

Configure the pod template

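Add volumes -> choose Host Path Volume. Mount the host's /var/run/docker.sock at /var/run/docker.sock, and the host's /root/.kube at /home/jenkins/agent/.kube. These match the agent pod spec in the build log below.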

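The control node has a taint, so the agent pods are scheduled onto the worker nodes. Copy the .kube directory to every worker node, and import jenkins-slave-latest.tar.gz on every worker node:

ctr -n=k8s.io images import jenkins-slave-latest.tar.gz

On each worker node:

mkdir -p /root/.kube

On the control node:

scp /root/.kube/config <node-name>:/root/.kube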
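These steps repeat for every worker node. This minimal sketch runs them over SSH for one node and rests on three assumptions:

- You run it as root on the control node.
- The control node has password-less SSH to the workers, set up the same way as for harbor.cn earlier.
- jenkins-slave-latest.tar.gz is in the current directory.

`prep_worker` is a hypothetical helper. With DRY_RUN=1 it prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# prep_worker NODE
# Copies the kubeconfig and the agent image to one worker node, then imports
# the image into containerd's k8s.io namespace. Hypothetical helper.
# Set DRY_RUN=1 to print the commands instead of executing them.
prep_worker() {
  local node=$1
  local run=${DRY_RUN:+echo}   # "echo" in dry-run mode, empty otherwise
  $run ssh "root@$node" mkdir -p /root/.kube &&
  $run scp /root/.kube/config "root@$node:/root/.kube/" &&
  $run scp jenkins-slave-latest.tar.gz "root@$node:/root/" &&
  $run ssh "root@$node" ctr -n=k8s.io images import /root/jenkins-slave-latest.tar.gz
}
```

Example: `for n in lx110 lx120; do prep_worker "$n"; done`. This assumes lx110 and lx120 from the hosts file are the worker nodes.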

5. Adding the credentials needed to log in to harbor

username:admin

password:Harbor12345

ID:dockerharbor

Description: anything

After filling in the fields above, click Create.

6. Testing code releases from Jenkins to the k8s development, test and production environments

Create the namespaces on the k8s control node

kubectl create ns devlopment

namespace/devlopment created

kubectl create ns production

namespace/production created

kubectl create ns qatest

namespace/qatest created

Create a new pipeline job

Write the pipeline script

node('testhan') {  // testhan is the label of the pod template configured above
    stage('Clone') {
        echo "1.Clone Stage"  // step 1: clone the code
        git url: "https://gitee.com/howehonkei/jenkins-sample"  // gitee repository
        script {
            // the short commit hash becomes the image tag
            build_tag = sh(returnStdout: true, script: 'git rev-parse --short HEAD').trim()
        }
    }
    stage('Test') {
        echo "2.Test Stage"  // only prints "2.Test Stage"
    }
    stage('Build') {  // build the image
        echo "3.Build Docker Image Stage"
        // image name: registry address/project/image:tag
        sh "docker build -t 192.168.1.130/jenkins-demo/jenkins-demo:${build_tag} ."
    }
    stage('Push') {  // push the image to the registry
        echo "4.Push Docker Image Stage"
        withCredentials([usernamePassword(credentialsId: 'dockerharbor', passwordVariable: 'dockerHubPassword', usernameVariable: 'dockerHubUser')]) {
            // Interpolating the password into a Groovy string causes the "insecure" warning in the log;
            // sh 'echo "$dockerHubPassword" | docker login 192.168.1.130 -u "$dockerHubUser" --password-stdin' avoids it.
            sh "docker login 192.168.1.130 -u ${dockerHubUser} -p ${dockerHubPassword}"
            sh "docker push 192.168.1.130/jenkins-demo/jenkins-demo:${build_tag}"
        }
    }
    stage('Deploy to dev') {
        echo "5. Deploy DEV"
        sh "sed -i 's/<BUILD_TAG>/${build_tag}/' k8s-dev-harbor.yaml "
        sh "kubectl apply -f k8s-dev-harbor.yaml --validate=false"
    }
    stage('Promote to qa') {
        def userInput = input(
            id: 'userInput',
            message: 'Promote to qa?',  // deploy to the qatest environment?
            parameters: [
                [
                    $class: 'ChoiceParameterDefinition',
                    choices: "YES\nNO",
                    name: 'Env'
                ]
            ]
        )
        echo "This is a deploy step to ${userInput}"
        if (userInput == "YES") {  // YES deploys; NO skips this deployment (the exit is commented out)
            sh "sed -i 's/<BUILD_TAG>/${build_tag}/' k8s-qa-harbor.yaml "
            sh "kubectl apply -f k8s-qa-harbor.yaml --validate=false"
            sh "sleep 6"
            sh "kubectl get pods -n qatest"
        } else {
            // exit
        }
    }
    stage('Promote to pro') {
        def userInput = input(
            id: 'userInput',
            message: 'Promote to pro?',  // deploy to production? YES deploys, NO skips
            parameters: [
                [
                    $class: 'ChoiceParameterDefinition',
                    choices: "YES\nNO",
                    name: 'Env'
                ]
            ]
        )
        echo "This is a deploy step to ${userInput}"
        if (userInput == "YES") {
            sh "sed -i 's/<BUILD_TAG>/${build_tag}/' k8s-prod-harbor.yaml "
            // --record is deprecated in current kubectl (see the warning in the log)
            sh "kubectl apply -f k8s-prod-harbor.yaml --record --validate=false"
        }
    }
}
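The Deploy stages substitute the image tag with `sed -i` on the checked-out manifests. That works because every build runs in a fresh agent pod with a fresh clone. This minimal sketch shows an alternative: it renders into a separate file and leaves the template untouched, so the same template can be rendered more than once. `render_manifest` is a hypothetical helper. The tag is expected to look like the short commit hash from `git rev-parse --short HEAD` (c84e9c9 in the log below).

```shell
#!/usr/bin/env bash
# render_manifest TEMPLATE TAG OUT
# Writes a copy of TEMPLATE with every <BUILD_TAG> placeholder replaced by TAG.
# Unlike `sed -i`, the template itself stays unchanged. Hypothetical helper.
render_manifest() {
  local template=$1 tag=$2 out=$3
  # Accept only characters that are valid in an image tag and safe inside sed.
  case "$tag" in
    ''|*[!A-Za-z0-9_.-]*) echo "invalid tag: '$tag'" >&2; return 1 ;;
  esac
  sed "s/<BUILD_TAG>/$tag/g" "$template" >"$out"
}
```

Example inside a shell step: `render_manifest k8s-dev-harbor.yaml c84e9c9 k8s-dev.yaml && kubectl apply -f k8s-dev.yaml`.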

Result (during the run you have to answer YES or NO at each Promote prompt):

Started by user admin

[Pipeline] Start of Pipeline

[Pipeline] node

Agent test2-q4bzl is provisioned from template test2

---

apiVersion: "v1"
kind: "Pod"
metadata:
  labels:
    jenkins: "slave"
    jenkins/label-digest: "109f4b3c50d7b0df729d299bc6f8e9ef9066971f"
    jenkins/label: "test2"
  name: "test2-q4bzl"
  namespace: "jenkins-k8s"
spec:
  containers:
  - env:
    - name: "JENKINS_SECRET"
      value: "********"
    - name: "JENKINS_AGENT_NAME"
      value: "test2-q4bzl"
    - name: "JENKINS_NAME"
      value: "test2-q4bzl"
    - name: "JENKINS_AGENT_WORKDIR"
      value: "/home/jenkins/agent"
    - name: "JENKINS_URL"
      value: "http://jenkins-service.jenkins-k8s.svc.cluster.local:8080/"
    image: "docker.io/library/jenkins-slave-latest:v1"
    imagePullPolicy: "IfNotPresent"
    name: "jnlp"
    resources: {}
    tty: true
    volumeMounts:
    - mountPath: "/var/run/docker.sock"
      name: "volume-0"
      readOnly: false
    - mountPath: "/home/jenkins/agent/.kube"
      name: "volume-1"
      readOnly: false
    - mountPath: "/home/jenkins/agent"
      name: "workspace-volume"
      readOnly: false
    workingDir: "/home/jenkins/agent"
  hostNetwork: false
  nodeSelector:
    kubernetes.io/os: "linux"
  restartPolicy: "Never"
  serviceAccountName: "jenkins-k8s-sa"
  volumes:
  - hostPath:
      path: "/var/run/docker.sock"
    name: "volume-0"
  - hostPath:
      path: "/root/.kube/"
    name: "volume-1"
  - emptyDir:
      medium: ""
    name: "workspace-volume"

Running on test2-q4bzl in /home/jenkins/agent/workspace/jenkins-variable-test-deploy

[Pipeline] {

[Pipeline] stage

[Pipeline] { (Clone)

[Pipeline] echo

1.Clone Stage

[Pipeline] git

The recommended git tool is: NONE

No credentials specified

Cloning the remote Git repository

Cloning repository https://gitee.com/howehonkei/jenkins-sample

> git init /home/jenkins/agent/workspace/jenkins-variable-test-deploy # timeout=10

Fetching upstream changes from https://gitee.com/howehonkei/jenkins-sample

> git --version # timeout=10

> git --version # 'git version 2.20.1'

> git fetch --tags --force --progress -- https://gitee.com/howehonkei/jenkins-sample +refs/heads/*:refs/remotes/origin/* # timeout=10

> git config remote.origin.url https://gitee.com/howehonkei/jenkins-sample # timeout=10

> git config --add remote.origin.fetch +refs/heads/*:refs/remotes/origin/* # timeout=10

Avoid second fetch

Checking out Revision c84e9c996d94a1a70acef249f02defa1dd8eba2d (refs/remotes/origin/master)

Commit message: "update k8s-qa-harbor.yaml."

> git rev-parse refs/remotes/origin/master^{commit} # timeout=10

> git config core.sparsecheckout # timeout=10

> git checkout -f c84e9c996d94a1a70acef249f02defa1dd8eba2d # timeout=10

> git branch -a -v --no-abbrev # timeout=10

> git checkout -b master c84e9c996d94a1a70acef249f02defa1dd8eba2d # timeout=10

> git rev-list --no-walk 695badebb4fcec5ec7121a7ee0ad7c01470c1f64 # timeout=10

[Pipeline] script

[Pipeline] {

[Pipeline] sh

+ git rev-parse --short HEAD

[Pipeline] }

[Pipeline] // script

[Pipeline] }

[Pipeline] // stage

[Pipeline] stage

[Pipeline] { (Test)

[Pipeline] echo

2.Test Stage

[Pipeline] }

[Pipeline] // stage

[Pipeline] stage

[Pipeline] { (Build)

[Pipeline] echo

3.Build Docker Image Stage

[Pipeline] sh

+ docker build -t 192.168.1.130/jenkins-demo/jenkins-demo:c84e9c9 .

#1 [internal] load build definition from Dockerfile

#1 digest: sha256:bbdc400cb3325a1a54ecadf376a32be85651b9bbeab05d06b9b4b0a9f344485a

#1 name: "[internal] load build definition from Dockerfile"

#1 started: 2024-01-18 05:39:21.487644177 +0000 UTC

#1 completed: 2024-01-18 05:39:21.487777495 +0000 UTC

#1 duration: 133.318µs

#1 started: 2024-01-18 05:39:21.488051257 +0000 UTC

#1 completed: 2024-01-18 05:39:21.517441795 +0000 UTC

#1 duration: 29.390538ms

#1 transferring dockerfile: 173B done

#2 [internal] load .dockerignore

#2 digest: sha256:a25b10a47fbc69d60cbf739aef03488d480632cae80dcc6c485f67467bfbefdf

#2 name: "[internal] load .dockerignore"

#2 started: 2024-01-18 05:39:21.4905305 +0000 UTC

#2 completed: 2024-01-18 05:39:21.490626377 +0000 UTC

#2 duration: 95.877µs

#2 started: 2024-01-18 05:39:21.490805911 +0000 UTC

#2 completed: 2024-01-18 05:39:21.521249966 +0000 UTC

#2 duration: 30.444055ms

#2 transferring context: 2B done

#3 [internal] load metadata for docker.io/library/golang:1.10.4-alpine

#3 digest: sha256:2975609dc4ce6156e263e0aef9cc4d1986d8d39308587e04d395debc0a55fa8f

#3 name: "[internal] load metadata for docker.io/library/golang:1.10.4-alpine"

#3 started: 2024-01-18 05:39:21.574908704 +0000 UTC

#3 completed: 2024-01-18 05:39:37.023026759 +0000 UTC

#3 duration: 15.448118055s

#7 [internal] load build context

#7 digest: sha256:fe0a52a2d68662efe5c62b5b5d4ee7c0517de72c69e6d5137d9381488396a5ca

#7 name: "[internal] load build context"

#7 started: 2024-01-18 05:39:37.024698497 +0000 UTC

#7 completed: 2024-01-18 05:39:37.024729067 +0000 UTC

#7 duration: 30.57µs

#7 started: 2024-01-18 05:39:37.025855149 +0000 UTC

#7 completed: 2024-01-18 05:39:37.061667526 +0000 UTC

#7 duration: 35.812377ms

#7 transferring context: 154.11kB 0.0s done

#8 [1/4] FROM docker.io/library/golang:1.10.4-alpine@sha256:55ff778715a4f37...

#8 digest: sha256:2e76b53f7d06a662855ef278018be0816404be0c654422b28f3c5748b69d9a3a

#8 name: "[1/4] FROM docker.io/library/golang:1.10.4-alpine@sha256:55ff778715a4f37ef0f2f7568752c696906f55602be0e052cbe147becb65dca3"

#8 started: 2024-01-18 05:39:37.025007713 +0000 UTC

#8 completed: 2024-01-18 05:39:37.025587905 +0000 UTC

#8 duration: 580.192µs

#8 started: 2024-01-18 05:39:37.063816307 +0000 UTC

#8 completed: 2024-01-18 05:39:37.063891457 +0000 UTC

#8 duration: 75.15µs

#8 cached: true

#6 [2/4] ADD . /go/src/app

#6 digest: sha256:b1214ffb8f26db98d8e364fb36a7b0c224468782e60f842bfde3b2a8fadf22e6

#6 name: "[2/4] ADD . /go/src/app"

#6 started: 2024-01-18 05:39:37.065077866 +0000 UTC

#6 completed: 2024-01-18 05:39:37.148605762 +0000 UTC

#6 duration: 83.527896ms

#5 [3/4] WORKDIR /go/src/app

#5 digest: sha256:488c9bdf567ea00c52990bc15ca82f860bf423238f30ac537905dd32f415bd44

#5 name: "[3/4] WORKDIR /go/src/app"

#5 started: 2024-01-18 05:39:37.150149484 +0000 UTC

#5 completed: 2024-01-18 05:39:37.185096765 +0000 UTC

#5 duration: 34.947281ms

#4 [4/4] RUN go build -v -o /go/src/app/jenkins-app

#4 digest: sha256:6d351affa2573a9722610567c90ae6d63b273e369ca4a584ee5cf359e9c4fad5

#4 name: "[4/4] RUN go build -v -o /go/src/app/jenkins-app"

#4 started: 2024-01-18 05:39:37.187433605 +0000 UTC

#4 0.992 app

#4 completed: 2024-01-18 05:39:40.084060901 +0000 UTC

#4 duration: 2.896627296s

#9 exporting to image

#9 digest: sha256:f018acb84f7e531332e57af780b511f745fbb13eb6a7aeed98d98f227d4802ce

#9 name: "exporting to image"

#9 started: 2024-01-18 05:39:40.086667144 +0000 UTC

#9 exporting layers 0.1s done

#9 writing image sha256:43a1bfd469004719b88f9bcbf984176df4fca3f7ab2bedc54a89bc0a554e10c1

#9 completed: 2024-01-18 05:39:40.170335485 +0000 UTC

#9 duration: 83.668341ms

#9 writing image sha256:43a1bfd469004719b88f9bcbf984176df4fca3f7ab2bedc54a89bc0a554e10c1 done

#9 naming to 192.168.1.130/jenkins-demo/jenkins-demo:c84e9c9 done

[Pipeline] }

[Pipeline] // stage

[Pipeline] stage

[Pipeline] { (Push)

[Pipeline] echo

4.Push Docker Image Stage

[Pipeline] withCredentials

Masking supported pattern matches of $dockerHubPassword

[Pipeline] {

[Pipeline] sh

Warning: A secret was passed to "sh" using Groovy String interpolation, which is insecure.

Affected argument(s) used the following variable(s): [dockerHubPassword]

See https://jenkins.io/redirect/groovy-string-interpolation for details.

+ docker login 192.168.1.130 -u admin -p ****

WARNING! Using --password via the CLI is insecure. Use --password-stdin.

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[Pipeline] sh

+ docker push 192.168.1.130/jenkins-demo/jenkins-demo:c84e9c9

The push refers to repository [192.168.1.130/jenkins-demo/jenkins-demo]

5cb61b0deea9: Preparing

5f70bf18a086: Preparing

087cad268eff: Preparing

ac1c7fa88ed0: Preparing

cc5fec2c1edc: Preparing

93448d8c2605: Preparing

c54f8a17910a: Preparing

df64d3292fd6: Preparing

93448d8c2605: Waiting

c54f8a17910a: Waiting

df64d3292fd6: Waiting

cc5fec2c1edc: Layer already exists

ac1c7fa88ed0: Layer already exists

5f70bf18a086: Layer already exists

93448d8c2605: Layer already exists

c54f8a17910a: Layer already exists

df64d3292fd6: Layer already exists

087cad268eff: Pushed

5cb61b0deea9: Pushed

c84e9c9: digest: sha256:ca0508a398d69f452e42d4f2bd9704bcee0e8f18f58e5f0bfb90b0c08de6494f size: 1991

[Pipeline] }

[Pipeline] // withCredentials

[Pipeline] }

[Pipeline] // stage

[Pipeline] stage

[Pipeline] { (Deploy to dev)

[Pipeline] echo

5. Deploy DEV

[Pipeline] sh

+ sed -i s/<BUILD_TAG>/c84e9c9/ k8s-dev-harbor.yaml

[Pipeline] sh

+ kubectl apply -f k8s-dev-harbor.yaml --validate=false

deployment.apps/jenkins-demo configured

service/demo unchanged

[Pipeline] }

[Pipeline] // stage

[Pipeline] stage

[Pipeline] { (Promote to qa)

[Pipeline] input

Input requested

Approved by admin

[Pipeline] echo

This is a deploy step to YES

[Pipeline] sh

+ sed -i s/<BUILD_TAG>/c84e9c9/ k8s-qa-harbor.yaml

[Pipeline] sh

+ kubectl apply -f k8s-qa-harbor.yaml --validate=false

deployment.apps/jenkins-demo created

service/demo created

[Pipeline] sh

+ sleep 6

[Pipeline] sh

+ kubectl get pods -n qatest

NAME READY STATUS RESTARTS AGE

jenkins-demo-7f7fb56856-zzj9r 1/1 Running 0 8s

[Pipeline] }

[Pipeline] // stage

[Pipeline] stage

[Pipeline] { (Promote to pro)

[Pipeline] input

Input requested

Approved by admin

[Pipeline] echo

This is a deploy step to YES

[Pipeline] sh

+ sed -i s/<BUILD_TAG>/c84e9c9/ k8s-prod-harbor.yaml

[Pipeline] sh

+ kubectl apply -f k8s-prod-harbor.yaml --record --validate=false

Flag --record has been deprecated, --record will be removed in the future

deployment.apps/jenkins-demo created

service/jenkins-demo created

[Pipeline] }

[Pipeline] // stage

[Pipeline] }

[Pipeline] // node

[Pipeline] End of Pipeline

Finished: SUCCESS
