NFS and Ingress in k8s

These notes simply record my own process of learning k8s. If you spot any mistakes, corrections are welcome. I hope everyone finds something useful here.


Installing and using NFS

Installing and configuring the NFS server

First, we need an NFS server to provide storage. You can use an existing NFS server or set one up yourself.

# Install the NFS server on Ubuntu

apt update

apt install nfs-kernel-server

Create the shared directory

mkdir /root/nfs

Edit /etc/exports to configure the shared directory

echo "/root/nfs *(insecure,rw,sync,no_subtree_check,no_root_squash)" >> /etc/exports

# Reload the NFS export configuration

exportfs -a

# Start the NFS service and enable it at boot

systemctl start nfs-kernel-server

systemctl enable nfs-kernel-server
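A minimal sketch of the export step above, written so that re-running it does not append a duplicate line to /etc/exports. The function name `add_nfs_export` and the `EXPORTS_FILE` override (defaulting to /etc/exports) are hypothetical conveniences, not part of any NFS tool; the commented commands at the end are the standard nfs-kernel-server utilities for checking what is actually exported.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append an export line only if it is not already present.
# EXPORTS_FILE is an assumed override so the function can be tried on a scratch file.
add_nfs_export() {
  local line="$1"
  local file="${EXPORTS_FILE:-/etc/exports}"
  # -q quiet, -x whole-line match, -F fixed string (so "*(" is not read as a regex)
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Usage on the NFS server, as root:
#   add_nfs_export "/root/nfs *(insecure,rw,sync,no_subtree_check,no_root_squash)"
#   exportfs -ra              # re-read /etc/exports and re-export everything
#   exportfs -v               # list current exports with their options
#   showmount -e localhost    # the export list as clients will see it
```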

Using NFS

Using NFS directly in a Pod

Create the Pod manifest (YAML)

apiVersion: v1
kind: Pod
metadata:
  name: pod
  namespace: default
spec:
  containers:
    - image: nginx
      name: nginx
      volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html
  volumes:
    - name: html
      nfs:
        server: 10.206.16.4 # IP of the NFS server
        path: /root/nfs/nginx # shared directory on the NFS server; it must already exist, otherwise the Pod cannot start

Once the Pod is running, create an index.html file in /root/nfs/nginx on the NFS server. The same file then shows up in the mount directory inside the Pod's nginx container.
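A sketch of that check, assuming the Pod is named `pod` in the `default` namespace and uses /root/nfs/nginx as in the manifest; the helper names are hypothetical. Note that the NFS mount is performed by the kubelet on the node, so every node that may run the Pod also needs an NFS client installed (`nfs-common` on Ubuntu). Without it, the Pod typically stays in `ContainerCreating` with a mount error in its events.

```bash
#!/usr/bin/env bash
# Sketch of the check above. Assumes Pod "pod" in namespace "default" and the
# NFS directory /root/nfs/nginx from the manifest; function names are hypothetical.

# On the NFS server: create the directory (it must exist before the Pod starts)
# and put a test page into it.
prepare_nfs_dir() {
  local dir="${1:-/root/nfs/nginx}"
  mkdir -p "$dir"
  echo "hello from nfs" > "$dir/index.html"
}

# On a machine with kubectl: read the same file through the Pod's mount.
show_page_in_pod() {
  kubectl exec -n default pod -c nginx -- cat /usr/share/nginx/html/index.html
}

# Each node that may run the Pod needs an NFS client, e.g. on Ubuntu:
#   apt install nfs-common
```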

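NFS StorageClass

A StorageClass lets Kubernetes create PVs dynamically from PVCs, so the administrator no longer has to create PVs by hand. Setting up NFS dynamic provisioning takes roughly three steps: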

- Create the RBAC (access control) resources

vim rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: dev # change the namespace as needed, but keep it identical to the namespace used below
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: dev
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: dev
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: dev
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: dev
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

# Create the namespace

kubectl create namespace dev

# Apply the RBAC manifest

kubectl apply -f rbac.yaml
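One way to confirm the RBAC objects grant what the provisioner needs is `kubectl auth can-i` with impersonation (`--as`), which prints `yes` or `no`. This is a sketch: the function name is hypothetical, it assumes the `dev` namespace used above, and impersonation only works if your own user is allowed to impersonate (a cluster admin is).

```bash
#!/usr/bin/env bash
# Hypothetical check of the permissions granted by rbac.yaml (namespace "dev" assumed).
check_provisioner_rbac() {
  local sa="system:serviceaccount:dev:nfs-client-provisioner"
  local failed=0 check
  for check in "create persistentvolumes" "update persistentvolumeclaims" \
               "watch storageclasses" "create events"; do
    # $check is deliberately unquoted so it splits into <verb> <resource>
    if [ "$(kubectl auth can-i $check --as="$sa" 2>/dev/null)" != "yes" ]; then
      echo "missing: $check" >&2
      failed=1
    fi
  done
  # Leader election uses endpoints in "dev", granted by the Role (not the ClusterRole)
  if [ "$(kubectl auth can-i update endpoints -n dev --as="$sa" 2>/dev/null)" != "yes" ]; then
    echo "missing: update endpoints in dev" >&2
    failed=1
  fi
  return "$failed"
}

# Usage:
#   check_provisioner_rbac && echo "RBAC looks fine"
```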

- Deploy NFS-Subdir-External-Provisioner

vim deploy.yaml

Remember to change the NFS server IP and path in the manifest below to match your own environment.

kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
  namespace: dev
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate # upgrade strategy: delete the old Pod, then create the new one (the default is RollingUpdate)
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner # the ServiceAccount created in the previous step
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-beijing.aliyuncs.com/mydlq/nfs-subdir-external-provisioner:v4.0.0
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME # name of the provisioner; StorageClasses created later must use this same value
              value: storage-nfs
            - name: NFS_SERVER # NFS server address; must match the one under volumes
              value: 10.206.16.5
            - name: NFS_PATH # data directory on the NFS server; must match the one under volumes
              value: /root/nfs
            - name: ENABLE_LEADER_ELECTION
              value: "true"
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.206.16.5 # NFS server address
            path: /root/nfs # NFS shared directory

kubectl apply -f deploy.yaml
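Before moving on, it helps to make sure the provisioner Pod actually started. A sketch (hypothetical function name, namespace `dev` assumed) that waits for the Deployment rollout and then prints the last log lines. If the Pod is stuck in `ContainerCreating`, `kubectl -n dev describe pod -l app=nfs-client-provisioner` usually shows the mount error; a node without an NFS client is a common cause.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait for the provisioner Deployment, then show its recent log.
wait_for_provisioner() {
  # "rollout status" blocks until the rollout finishes or the timeout is hit
  kubectl -n dev rollout status deployment/nfs-client-provisioner --timeout=120s &&
    kubectl -n dev logs deployment/nfs-client-provisioner --tail=20
}

# Usage:
#   wait_for_provisioner
```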

- Create the NFS StorageClass

vim nfs-storageclass.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage # a StorageClass is cluster-scoped, so it takes no namespace
  annotations:
    storageclass.kubernetes.io/is-default-class: "false" ## whether this is the default StorageClass
provisioner: storage-nfs ## name of the dynamic provisioner; must equal the PROVISIONER_NAME env value in the Deployment above
parameters:
  archiveOnDelete: "true" ## "false": the data is deleted together with the PVC; "true": the data is kept

kubectl apply -f nfs-storageclass.yaml

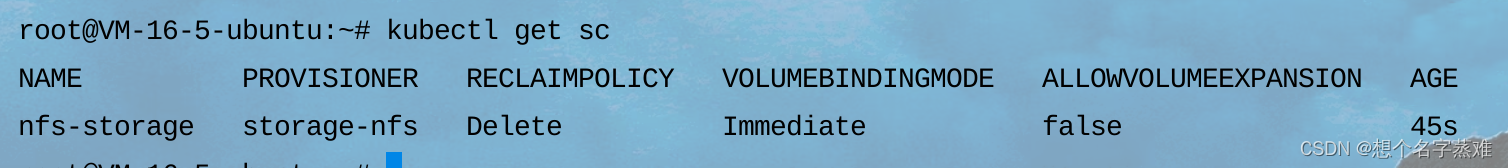

List the StorageClasses to see the new NFS one

kubectl get sc
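The most common mistake at this point is a StorageClass whose `provisioner` does not match the provisioner's `PROVISIONER_NAME`, which leaves every PVC Pending. A sketch that compares the two with kubectl JSONPath; the function name is hypothetical and the resource names are the ones used above.

```bash
#!/usr/bin/env bash
# Hypothetical check: does StorageClass "nfs-storage" point at the name the
# provisioner Deployment in "dev" registers via PROVISIONER_NAME?
check_sc_provisioner() {
  local sc_prov dep_prov
  sc_prov=$(kubectl get sc nfs-storage -o jsonpath='{.provisioner}')
  dep_prov=$(kubectl -n dev get deployment nfs-client-provisioner \
    -o jsonpath='{.spec.template.spec.containers[0].env[?(@.name=="PROVISIONER_NAME")].value}')
  if [ -n "$sc_prov" ] && [ "$sc_prov" = "$dep_prov" ]; then
    echo "ok: $sc_prov"
  else
    echo "mismatch: storageclass=$sc_prov provisioner=$dep_prov" >&2
    return 1
  fi
}
```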

Below is an example of using the NFS StorageClass to create a PV dynamically

vim nginx.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nfs-pvc
spec:
  accessModes: [ "ReadWriteOnce" ]
  storageClassName: "nfs-storage"
  resources:
    requests:
      storage: 10Mi
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
      volumes:
        - name: www
          persistentVolumeClaim:
            claimName: nfs-pvc

Apply the manifest, then check the PVC and PV:

kubectl apply -f nginx.yaml

kubectl get pvc,pv
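Dynamic provisioning takes a moment, so the PVC may show `Pending` briefly. A sketch of a polling helper (hypothetical name, `default` namespace assumed) that waits up to about 60 seconds for the PVC to become `Bound` before listing the PVC and PV. Recent kubectl versions can also wait on a JSONPath condition; the plain loop just avoids depending on the kubectl version.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a PVC until it is Bound (30 tries, 2 s apart).
wait_pvc_bound() {
  local pvc="$1" ns="${2:-default}" phase="" attempt
  for attempt in $(seq 1 30); do
    phase=$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.status.phase}')
    if [ "$phase" = "Bound" ]; then
      kubectl get pvc "$pvc" -n "$ns"
      kubectl get pv
      return 0
    fi
    sleep 2
  done
  echo "PVC $pvc is still in phase '${phase:-unknown}'" >&2
  return 1
}

# Usage:
#   wait_pvc_bound nfs-pvc
```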

Inside the nginx container, create a file in the mounted directory; the file also appears in the corresponding directory on the NFS server.
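A sketch of that round trip. It assumes the Deployment `web` in the `default` namespace and that the provisioner uses its default directory naming `<namespace>-<pvcName>-<pvName>` under /root/nfs (this is how I understand the nfs-subdir-external-provisioner default; a custom path pattern on the StorageClass would change it). The helper names are hypothetical. With `archiveOnDelete: "true"`, deleting the PVC should leave that directory on the server under an `archived-` prefix rather than removing it.

```bash
#!/usr/bin/env bash
# Sketch: write through the Pod, then find the backing directory on the NFS server.
# Assumes Deployment "web" in "default" and the default naming
# <namespace>-<pvcName>-<pvName> (an assumption; function names are hypothetical).

# On the kubectl machine: write a file through the Pod's mount.
write_via_pod() {
  kubectl exec deploy/web -- sh -c 'echo "written from pod" > /usr/share/nginx/html/index.html'
}

# Directory on the NFS server that should back a given PVC/PV pair.
nfs_dir_for_pvc() {
  local ns="$1" pvc="$2" pv="$3" root="${4:-/root/nfs}"
  echo "${root}/${ns}-${pvc}-${pv}"
}

# Usage:
#   write_via_pod
#   pv=$(kubectl get pvc nfs-pvc -o jsonpath='{.spec.volumeName}')
#   nfs_dir_for_pvc default nfs-pvc "$pv"   # then `cat <that dir>/index.html` on the NFS server
```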

Installing and using Ingress

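Ingress is also a Kubernetes resource, but Kubernetes does not ship an Ingress controller by default, so one has to be installed separately before Ingress objects have any effect. There are many Ingress controllers to choose from, such as Nginx, Traefik, HAProxy, and Envoy. They differ in features, performance, and reliability, so pick the one that fits your needs. The example below uses the Nginx Ingress Controller.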

Installing Ingress

Installation is simple: download the manifest below, then change the image references and the type of the ingress-nginx-controller Service. The steps are:

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.8.2/deploy/static/provider/cloud/deploy.yaml

cat deploy.yaml |grep image:

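The images need to be changed in three places: replace the lines found by the grep above (shown under "Before") with the lines shown under "After".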

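For the first image, change both the registry and the image name, keep the version tag, and delete the @sha256 digest. For the second and third images, change only the registry and delete the digest.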

# Before

image: registry.k8s.io/ingress-nginx/controller:v1.8.2@sha256:74834d3d25b336b62cabeb8bf7f1d788706e2cf1cfd64022de4137ade8881ff2

image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20230407@sha256:543c40fd093964bc9ab509d3e791f9989963021f1e9e4c9c7b6700b02bfb227b

image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20230407@sha256:543c40fd093964bc9ab509d3e791f9989963021f1e9e4c9c7b6700b02bfb227b

# After

image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.8.2

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v20230407

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v20230407
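The three edits above can also be done with sed instead of by hand. This is a sketch (hypothetical function name) that rewrites the registry prefix, renames `controller` to `nginx-ingress-controller`, and strips the `@sha256:...` digest from image lines. It uses GNU `sed -i`; on macOS use `sed -i ''`. Running `grep image: deploy.yaml` afterwards shows the result.

```bash
#!/usr/bin/env bash
# Hypothetical helper: switch the ingress-nginx images to the Aliyun mirror, drop digests.
patch_ingress_images() {
  local file="${1:-deploy.yaml}"
  sed -i \
    -e 's#registry.k8s.io/ingress-nginx/controller:#registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:#' \
    -e 's#registry.k8s.io/ingress-nginx/kube-webhook-certgen:#registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:#' \
    -e 's#\(image: [^@]*\)@sha256:[0-9a-f]*#\1#' \
    "$file"
}

# Usage:
#   patch_ingress_images deploy.yaml
```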

Next, change the type of the ingress-nginx-controller Service. It defaults to LoadBalancer; search for LoadBalancer in vim and change it to NodePort.
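The same change as a sed one-liner, sketched under the assumption that `type: LoadBalancer` appears only once in deploy.yaml (the controller Service); the helper prints every match first so you can confirm that. If your copy of the Service also sets `externalTrafficPolicy: Local`, a NodePort only answers on nodes that run a controller Pod, which is worth knowing when testing from another node.

```bash
#!/usr/bin/env bash
# Hypothetical helper: change the controller Service type from LoadBalancer to NodePort.
use_nodeport() {
  local file="${1:-deploy.yaml}"
  # Show every match first; the sed below assumes there is exactly one
  grep -n 'type: LoadBalancer' "$file" || { echo "no LoadBalancer Service in $file" >&2; return 1; }
  sed -i 's/type: LoadBalancer/type: NodePort/' "$file"   # GNU sed; use -i '' on macOS
}
```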

After editing, apply the manifest

kubectl apply -f deploy.yaml

Check that the resources were created

kubectl get all -n ingress-nginx
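Since the Service is now a NodePort, the later tests need to know which node port maps to HTTP port 80. A sketch (hypothetical function name) that waits for the controller Pod, using the `app.kubernetes.io/component=controller` label the ingress-nginx manifests put on it, and then prints that port.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait for the controller Pod, then print the NodePort for port 80.
ingress_http_nodeport() {
  kubectl -n ingress-nginx wait --for=condition=Ready pod \
    -l app.kubernetes.io/component=controller --timeout=120s >/dev/null &&
    kubectl -n ingress-nginx get svc ingress-nginx-controller \
      -o jsonpath='{.spec.ports[?(@.port==80)].nodePort}'
}

# Usage:
#   NODE_PORT=$(ingress_http_nodeport); echo "$NODE_PORT"
```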

Basic Ingress usage

First create test.yaml, which describes the Deployments and Services. Its content:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hello-server
  template:
    metadata:
      labels:
        app: hello-server
    spec:
      containers:
        - name: nginx
          image: nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-demo
  template:
    metadata:
      labels:
        app: nginx-demo
    spec:
      containers:
        - name: nginx
          image: nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  selector:
    app: nginx-demo
  ports:
    - port: 8000
      protocol: TCP
      targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: hello-server
  name: hello-server
spec:
  selector:
    app: hello-server
  ports:
    - port: 8000
      protocol: TCP
      targetPort: 80
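test.yaml has to be applied before the Ingress has anything to route to. A sketch (hypothetical function name, `default` namespace and the file name test.yaml assumed) that applies it, waits for both Deployments, and shows the Service endpoints; an empty endpoint list means a Service selector does not match any running Pod.

```bash
#!/usr/bin/env bash
# Hypothetical helper: apply test.yaml and confirm both Services have endpoints.
apply_test_app() {
  kubectl apply -f test.yaml &&
    kubectl rollout status deployment/hello-server --timeout=60s &&
    kubectl rollout status deployment/nginx-demo --timeout=60s &&
    kubectl get endpoints hello-server nginx-demo
}
```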

Create ingress-test.yaml to route traffic to the Services above

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-test
spec:
  ingressClassName: nginx
  rules:
    - host: "nginx.com"
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: nginx-demo
                port:
                  number: 8000
    - host: "hello.com"
      http:
        paths:
          - pathType: Prefix
            path: "/hello" # the request is forwarded with this path unchanged, so the backend must be able to serve it; otherwise it returns 404
            backend:
              service:
                name: hello-server
                port:
                  number: 8000

Create the Ingress resource

kubectl apply -f ingress-test.yaml

List the Ingress that was created

kubectl get ing

Access the service behind nginx.com

The request succeeds.
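Since nginx.com and hello.com are not real DNS entries for the cluster, the simplest way to reach them is to send the `Host` header explicitly to a node's IP and the HTTP NodePort (alternatively, map both names to a node IP in /etc/hosts and still use the NodePort in the URL). A sketch; the function name and the `NODE_IP`/`NODE_PORT` variables are hypothetical, with defaults that only make sense when run on a node whose controller listens on port 80.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the HTTP status code the Ingress returns for host + path.
ingress_get() {
  local host="$1" path="${2:-/}"
  local ip="${NODE_IP:-127.0.0.1}" port="${NODE_PORT:-80}"
  # -s quiet, -o /dev/null discards the body, -w prints only the status code
  curl -s -o /dev/null -w '%{http_code}\n' -H "Host: ${host}" "http://${ip}:${port}${path}"
}

# Usage (replace with a node IP and the NodePort found earlier):
#   NODE_IP=<node-ip> NODE_PORT=<node-port> ingress_get nginx.com /
#   NODE_IP=<node-ip> NODE_PORT=<node-port> ingress_get hello.com /hello
```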

Access the service behind hello.com

This returns 404, because the Pod behind the hello-server Service has nothing at the /hello path. To fix it, go into the hello-server Pod and create a file named hello under /usr/share/nginx/html.

First, find the Pod name

Enter the Pod and create the file

Access hello.com/hello through the Ingress again
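The three steps above (find the Pod, create the file, retry) can be sketched as follows; the function name is hypothetical and the `app=hello-server` label comes from test.yaml. Keep in mind that the file lives only in the container's writable layer, so it disappears when the Pod is recreated; a volume (for example the NFS StorageClass from earlier) would be needed to keep it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create /usr/share/nginx/html/hello in the hello-server Pod.
add_hello_page() {
  local pod
  pod=$(kubectl get pods -l app=hello-server -o jsonpath='{.items[0].metadata.name}')
  [ -n "$pod" ] || { echo "no hello-server Pod found" >&2; return 1; }
  # nginx serves /usr/share/nginx/html by default, so this file answers GET /hello
  kubectl exec "$pod" -- sh -c 'echo hello > /usr/share/nginx/html/hello' &&
    echo "$pod"
}

# Usage:
#   add_hello_page
#   curl -H "Host: hello.com" http://<node-ip>:<node-port>/hello
```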

