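Deploying a Kubernetes Cluster from Binaries (Hands-On)

Binary deployment of Kubernetes means installing and configuring every cluster component by hand. It suits advanced users and administrators who already understand the Kubernetes architecture and its components.

Preface

Kubeadm lowers the barrier to deployment, but it hides many details, which makes problems hard to troubleshoot. If you want more control, deploy the Kubernetes cluster from binary packages. Manual deployment takes more effort, but you learn how the components work along the way, which also helps with later maintenance.

The master is the control node of the cluster: it schedules and manages the cluster and accepts requests sent to the cluster by users outside it.

The main components on the master node are:

1. kube-apiserver: the entry point for cluster control. It exposes an HTTP REST API, persists state to etcd, and provides authentication, authorization, access control, and API registration and discovery.

2. kube-controller-manager: the automation control center for all resource objects in the cluster. It runs the cluster's routine background tasks, with one controller per resource type.

3. kube-scheduler: schedules Pods by choosing the node on which each one is deployed.

4. etcd database (may also be installed on separate servers): the storage system that holds the cluster's data.

The main components on a node are:

1. kubelet: the master's agent on each node. It manages the containers on its machine, ensures that containers run inside Pods, maintains the container lifecycle, and also manages volumes (CSI) and networking (CNI).

2. kube-proxy: provides network proxying, load balancing, and related functions.

3. Container runtime, such as Docker: the software responsible for running containers.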

I. Environment Preparation

1.1 Prerequisites

| Software | Version |
|---|---|
| Operating system | CentOS Linux release 7.9.2009 (Core) |
| Container engine | Docker version 20.10.21, build baeda1f |
| Kubernetes | Kubernetes v1.20.15 |

This deployment uses a single master server:

| Role | IP | Components |
|---|---|---|
| k8s-master1 | 192.168.176.140 | kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy, docker, etcd |
| k8s-node1 | 192.168.176.141 | kubelet, kube-proxy, docker, etcd |
| k8s-node2 | 192.168.176.142 | kubelet, kube-proxy, docker, etcd |

1.2 Operating system initialization (run on all nodes)

Disable the firewall, SELinux, and swap

# Disable the firewall
# Temporarily (until the next reboot)
systemctl stop firewalld
# Permanently
systemctl disable firewalld

# Disable SELinux
# Permanently
sed -i 's/enforcing/disabled/' /etc/selinux/config
# Temporarily
setenforce 0

# Disable swap
# Temporarily
swapoff -a
# Permanently
sed -ri 's/.*swap.*/#&/' /etc/fstab

Set the hostnames and add them to /etc/hosts

# Set the hostname according to the plan (run each command on its own node)
hostnamectl set-hostname k8s-master1
hostnamectl set-hostname k8s-node1
hostnamectl set-hostname k8s-node2

# Add the entries to /etc/hosts
cat >> /etc/hosts << EOF
192.168.176.140 k8s-master1
192.168.176.141 k8s-node1
192.168.176.142 k8s-node2
EOF

Pass bridged IPv4 traffic to the iptables chains, and synchronize time

# Pass bridged IPv4 traffic to the iptables chains
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

# Apply the settings
sysctl --system
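
To confirm that the sysctl file written above really holds both bridge keys set to 1, a small check along the following lines may help. This is a minimal sketch: `check_bridge_sysctl` is a hypothetical helper name, and the default path is simply the file created in this step.

```shell
# Hypothetical helper: succeed only if the given sysctl file sets both
# bridge keys to 1. The default path is the file created in this step.
check_bridge_sysctl() {
  conf="${1:-/etc/sysctl.d/k8s.conf}"
  for key in net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables; do
    # Allow optional spaces around "=" and require the value 1.
    grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$conf" || {
      echo "missing or not set to 1: ${key}" >&2
      return 1
    }
  done
}
```

Calling `check_bridge_sysctl` without arguments checks /etc/sysctl.d/k8s.conf; a non-zero exit status means one of the keys is absent or has another value.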

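# Time synchronization
# One-off sync against the Alibaba Cloud NTP server
yum install -y ntpdate
ntpdate ntp.aliyun.com

II. Deploying the etcd Cluster

2.1 About etcd

etcd is a distributed key-value store developed by CoreOS. It uses the Raft protocol as its consensus algorithm to store critical data reliably and quickly and to serve access to it. It supports reliable distributed coordination through distributed locks, leader election, and write barriers. etcd is the primary datastore of a Kubernetes cluster, so it must be installed and started before the other Kubernetes services.

2.2 Server details

| Node name | IP |
|---|---|
| etcd-1 | 192.168.176.140 |
| etcd-2 | 192.168.176.141 |
| etcd-3 | 192.168.176.142 |

To save machines, this deployment runs etcd on the k8s nodes. etcd can also run on machines outside the k8s cluster, as long as the apiserver can reach it.

2.3 Preparing the cfssl certificate tool

cfssl is an open-source certificate management tool that generates certificates from JSON files. Run the following on k8s-master1.

# Run on k8s-master1
# Create a directory for the cfssl tools
mkdir /software-cfssl

Download the cfssl tools

# Download the tools
# These are all executables
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -P /software-cfssl/
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -P /software-cfssl/
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -P /software-cfssl/

Make the cfssl tools executable and copy them into place

cd /software-cfssl/
chmod +x *
cp cfssl_linux-amd64 /usr/local/bin/cfssl
cp cfssljson_linux-amd64 /usr/local/bin/cfssljson
cp cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

2.4 Self-signed certificate authority (CA)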

(1) Create the working directory

# Run on k8s-master1
mkdir -p ~/TLS/{etcd,k8s}
cd ~/TLS/etcd/

(2) Generate the configuration for the self-signed CA

# Run on k8s-master1

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json << EOF

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "YuMingYu",

"ST": "YuMingYu"

}

]

}

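EOF

(3) Generate the self-signed CA certificate

# Run on k8s-master1
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

The command produces the following files:

# Run on k8s-master1
[root@k8s-master1 etcd]# ls
ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem

2.5 Issuing the etcd HTTPS certificate with the self-signed CA

(1) Create the certificate signing request file

# Run on k8s-master1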

cat > server-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"192.168.176.140",

"192.168.176.141",

"192.168.176.142",

"192.168.176.143"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "YuMingYu",

"ST": "YuMingYu"

}

]

}

EOF

Note: 192.168.176.143 is an IP reserved for future expansion; ignore it for this single-master deployment.
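
Because etcd members authenticate each other by the IPs listed under "hosts", it can be worth checking that every member IP is present before signing the certificate. The sketch below is one way to do that; `missing_csr_hosts` is a hypothetical helper, and it only looks for each IP as a quoted string anywhere in the file rather than parsing the JSON.

```shell
# Hypothetical helper: print every IP from the argument list that does not
# appear as a quoted string in the given CSR JSON file.
# $1 = CSR file, remaining arguments = IPs to look for.
missing_csr_hosts() {
  csr="$1"
  shift
  for ip in "$@"; do
    # -F treats the pattern literally; the quotes prevent prefix matches.
    grep -Fq "\"${ip}\"" "$csr" || echo "$ip"
  done
}

# Example (run in ~/TLS/etcd): prints nothing when all three members are listed.
# missing_csr_hosts server-csr.json 192.168.176.140 192.168.176.141 192.168.176.142
```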

(2) Generate the certificate

# Run on k8s-master1
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

List the certificates generated so far:

# Run on k8s-master1
[root@k8s-master1 etcd]# ls
ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem server.csr server-csr.json server-key.pem server.pem

2.6 Downloading the etcd binaries

# Download the etcd binaries
wget https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

2.7 Deploying the etcd cluster

Run the following on k8s-master1. To keep things simple, all files generated on k8s-master1 are copied to the other nodes afterwards.

(1) Create the working directory and unpack the binaries

# Run on k8s-master1
mkdir /opt/etcd/{bin,cfg,ssl} -p
# Place the tarball in ~
cd ~
tar -xf etcd-v3.4.9-linux-amd64.tar.gz
# etcd and etcdctl are the executables
mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

2.8 Creating the etcd configuration file

# Run on k8s-master1

cat > /opt/etcd/cfg/etcd.conf << EOF

#[Member]

ETCD_NAME="etcd-1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.176.140:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.176.140:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.176.140:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.176.140:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.176.140:2380,etcd-2=https://192.168.176.141:2380,etcd-3=https://192.168.176.142:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

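EOF

Configuration notes:

ETCD_NAME: node name, unique within the cluster

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: listen address for peer (cluster) traffic

ETCD_LISTEN_CLIENT_URLS: listen address for client traffic

ETCD_INITIAL_CLUSTER: addresses of the cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state when joining the cluster; new means a new cluster, existing means joining an existing cluster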
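
Only ETCD_NAME and the member's own IP differ between the three members, so the file can also be rendered from a small template instead of being edited by hand on each node. The following is a minimal sketch under that assumption; `render_etcd_conf` is a hypothetical helper, and the member list is hard-coded to the three addresses used in this article.

```shell
# Hypothetical helper: print an etcd.conf for one member.
# $1 = member name (for example etcd-2), $2 = that member's own IP.
render_etcd_conf() {
  name="$1"
  ip="$2"
  cluster="etcd-1=https://192.168.176.140:2380,etcd-2=https://192.168.176.141:2380,etcd-3=https://192.168.176.142:2380"
  cat << EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Example: print the configuration for the second member.
render_etcd_conf etcd-2 192.168.176.141
```

Redirecting the output to /opt/etcd/cfg/etcd.conf on the matching node gives the same file as the hand-written version.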

2.9 Managing etcd with systemd

# Run on k8s-master1
cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/etcd/cfg/etcd.conf
ExecStart=/opt/etcd/bin/etcd \
--cert-file=/opt/etcd/ssl/server.pem \
--key-file=/opt/etcd/ssl/server-key.pem \
--peer-cert-file=/opt/etcd/ssl/server.pem \
--peer-key-file=/opt/etcd/ssl/server-key.pem \
--trusted-ca-file=/opt/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
--logger=zap
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

2.10 Setting up passwordless SSH between master1, node1, and node2

ssh-keygen -t rsa
ssh-copy-id -i ~/.ssh/id_rsa.pub k8s-master1
ssh-copy-id -i ~/.ssh/id_rsa.pub k8s-node1
ssh-copy-id -i ~/.ssh/id_rsa.pub k8s-node2

2.11 Copying all files generated on master1 to node1 and node2

# Run on k8s-master1
#!/bin/bash
cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/
for i in {1..2}
do
  scp -r /opt/etcd/ root@192.168.176.14$i:/opt/
  scp /usr/lib/systemd/system/etcd.service root@192.168.176.14$i:/usr/lib/systemd/system/
done

Check the directory tree on master1:

tree /opt/etcd/

Check that the etcd service file is present:

tree /usr/lib/systemd/system/ | grep etcd

Run both commands on node1 and node2 as well to confirm that the files were copied.

2.12 Editing etcd.conf on node1 and node2

Change the node name and the IPs to those of the current server.

vim /opt/etcd/cfg/etcd.conf

# Run on k8s-node1

#[Member]

ETCD_NAME="etcd-2"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.176.141:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.176.141:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.176.141:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.176.141:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.176.140:2380,etcd-2=https://192.168.176.141:2380,etcd-3=https://192.168.176.142:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

vim /opt/etcd/cfg/etcd.conf

# Run on k8s-node2

#[Member]

ETCD_NAME="etcd-3"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.176.142:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.176.142:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.176.142:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.176.142:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.176.140:2380,etcd-2=https://192.168.176.141:2380,etcd-3=https://192.168.176.142:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

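ETCD_INITIAL_CLUSTER_STATE="new"

2.13 Starting etcd and enabling it at boot

The etcd members need to be started together; a member started on its own blocks while it waits for its peers. Run each command below on all three servers at the same time.

# Run on k8s-master1, k8s-node1, and k8s-node2
systemctl daemon-reload
systemctl start etcd
systemctl enable etcd
systemctl status etcd

2.14 Checking the etcd cluster status

Run etcdctl endpoint health to verify that etcd started correctly:

# Run on k8s-master1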

[root@k8s-master1 ~]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.176.140:2379,https://192.168.176.141:2379,https://192.168.176.142:2379" endpoint health --write-out=table

+------------------------------+--------+-------------+-------+
| ENDPOINT | HEALTH | TOOK | ERROR |
+------------------------------+--------+-------------+-------+
| https://192.168.176.140:2379 | true | 9.679688ms | |
| https://192.168.176.142:2379 | true | 9.897207ms | |
| https://192.168.176.141:2379 | true | 11.282224ms | |
+------------------------------+--------+-------------+-------+

Run the same etcdctl endpoint health check on all three nodes. If every endpoint reports the status shown above, the etcd deployment is complete.
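
When the check is scripted, the table can be reduced to a single number. The sketch below counts the endpoint rows whose HEALTH column is not `true`; `count_unhealthy` is a hypothetical helper, and it assumes the table layout printed above.

```shell
# Hypothetical helper: read "endpoint health --write-out=table" output on
# stdin and print how many endpoint rows have a HEALTH value other than true.
count_unhealthy() {
  awk -F'|' '
    # Only rows whose first column is an endpoint URL are counted.
    $2 ~ /https?:\/\// {
      gsub(/ /, "", $3)
      if ($3 != "true") bad++
    }
    END { print bad + 0 }
  '
}

# Example: pipe the etcdctl command from this section into the helper.
# ETCDCTL_API=3 /opt/etcd/bin/etcdctl ... endpoint health --write-out=table | count_unhealthy
```

A result of 0 means every listed endpoint reported healthy.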

If the result differs from the above, inspect the etcd logs with the commands below to track down the error:

less /var/log/messages

journalctl -u etcd

III. Installing Docker (run on all nodes)

3.1 Downloading and unpacking the binaries

cd ~
wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz
tar -xf docker-19.03.9.tgz
mv docker/* /usr/bin/

3.2 Configuring a registry mirror

mkdir -p /etc/docker
tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]

}

EOF

3.3 Configuring docker.service

# \$MAINPID is escaped so that the heredoc writes it literally instead of expanding it
cat > /usr/lib/systemd/system/docker.service << EOF
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd --selinux-enabled=false --insecure-registry=127.0.0.1
ExecReload=/bin/kill -s HUP \$MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
#TasksMax=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF

3.4 Starting Docker and enabling it at boot

systemctl daemon-reload
systemctl start docker
systemctl enable docker
# Check the status
systemctl status docker

IV. Deploying the Master Node

4.1 Generating the kube-apiserver certificate

(1) Self-signed certificate authority (CA)

# Run on k8s-master1
cd ~/TLS/k8s

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

# Run on k8s-master1

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

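EOF

Generate the certificate:

# Run on k8s-master1
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

List the generated certificate files:

# Run on k8s-master1
[root@k8s-master1 k8s]# ls
ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem

(2) Issue the kube-apiserver HTTPS certificate with the self-signed CA

Create the certificate signing request file:

# Run on k8s-master1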

cat > server-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.176.140",

"192.168.176.141",

"192.168.176.142",

"192.168.176.143",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Note: 192.168.176.143 is an IP reserved for future expansion; it can be ignored for this single-master deployment.

Generate the certificate:

# Run on k8s-master1
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

List the generated certificate files:

# Run on k8s-master1
[root@k8s-master1 k8s]# ls
ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem server.csr server-csr.json server-key.pem server.pem

4.2 Downloading and unpacking the binaries

Download the Kubernetes server package to the server, then copy kube-apiserver, kube-controller-manager, and kube-scheduler to /opt/kubernetes/bin.

# Run on k8s-master1
mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}
# Download into ~ because later steps use ~/kubernetes/server/bin
cd ~
wget https://storage.googleapis.com/kubernetes-release/release/v1.20.15/kubernetes-server-linux-amd64.tar.gz
tar zxvf kubernetes-server-linux-amd64.tar.gz
cd kubernetes/server/bin
cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin
cp kubectl /usr/bin/

Check that the files were copied:

# Run on k8s-master1
[root@k8s-master1 ~]# tree /opt/kubernetes/bin
[root@k8s-master1 ~]# tree /usr/bin/ | grep kubectl

4.3 Deploying kube-apiserver

(1) Create the configuration file

# Run on k8s-master1
cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF
KUBE_APISERVER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--etcd-servers=https://192.168.176.140:2379,https://192.168.176.141:2379,https://192.168.176.142:2379 \\
--bind-address=192.168.176.140 \\
--secure-port=6443 \\
--advertise-address=192.168.176.140 \\
--allow-privileged=true \\
--service-cluster-ip-range=10.0.0.0/24 \\
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--enable-bootstrap-token-auth=true \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=30000-32767 \\
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\
--tls-cert-file=/opt/kubernetes/ssl/server.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--service-account-issuer=api \\
--service-account-signing-key-file=/opt/kubernetes/ssl/server-key.pem \\
--etcd-cafile=/opt/etcd/ssl/ca.pem \\
--etcd-certfile=/opt/etcd/ssl/server.pem \\
--etcd-keyfile=/opt/etcd/ssl/server-key.pem \\
--requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--proxy-client-cert-file=/opt/kubernetes/ssl/server.pem \\
--proxy-client-key-file=/opt/kubernetes/ssl/server-key.pem \\
--requestheader-allowed-names=kubernetes \\
--requestheader-extra-headers-prefix=X-Remote-Extra- \\
--requestheader-group-headers=X-Remote-Group \\
--requestheader-username-headers=X-Remote-User \\
--enable-aggregator-routing=true \\
--audit-log-maxage=30 \\
--audit-log-maxbackup=3 \\
--audit-log-maxsize=100 \\
--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

EOF

--logtostderr: log destination; false writes logs to files instead of stderr.

--v: log verbosity level.

--log-dir: log directory.

--etcd-servers: etcd cluster address, given as the URLs of the etcd endpoints.

--bind-address: listen address, the IP on which the API server serves its secure port; 0.0.0.0 binds all addresses.

--secure-port: HTTPS port on which the API server listens; the default is 6443.

--advertise-address: address advertised to the rest of the cluster.

--allow-privileged: allow privileged containers.

--service-cluster-ip-range: virtual IP range for Services, in CIDR notation (for example 10.0.0.0/24). It must not overlap with the IP addresses of the hosts.

--enable-admission-plugins: admission control modules; the listed plugins take effect one after another.

--authorization-mode: authorization modes; enables RBAC and Node authorization.

--enable-bootstrap-token-auth: enable the TLS bootstrap mechanism.

--token-auth-file: bootstrap token file.

--service-node-port-range: port range on the hosts that NodePort Services may use; the default is 30000-32767.

--kubelet-client-xxx: client certificate the apiserver uses to access the kubelet.

--tls-xxx-file: the apiserver's HTTPS certificate.

Flags that must be added in version 1.20:

--service-account-issuer, --service-account-signing-key-file

--etcd-xxxfile: certificates for connecting to the etcd cluster.

--audit-log-xxx: audit log settings.

Flags that enable the aggregation layer:

--requestheader-client-ca-file, --proxy-client-cert-file, --proxy-client-key-file, --requestheader-allowed-names, --requestheader-extra-headers-prefix, --requestheader-group-headers, --requestheader-username-headers, --enable-aggregator-routing

--storage-backend: the etcd version to use; since Kubernetes 1.6 the default is etcd 3.
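
Many of these flags point at certificate or token files, and a missing file is a common reason for kube-apiserver failing to start. The sketch below lists every referenced `.pem` or `.csv` path that does not exist yet; `missing_conf_files` is a hypothetical helper, and it assumes paths are written as `=/absolute/path` as in the configuration above.

```shell
# Hypothetical helper: print each absolute *.pem or *.csv path referenced
# in the given options file that does not exist on this machine.
missing_conf_files() {
  conf="$1"
  # Match "=/path/to/file.pem" or "=/path/to/file.csv", then drop the "=".
  grep -oE '=/[^ "\\]+\.(pem|csv)' "$conf" | cut -c2- | sort -u |
  while IFS= read -r path; do
    [ -e "$path" ] || echo "$path"
  done
}

# Example: no output means every referenced file is in place.
# missing_conf_files /opt/kubernetes/cfg/kube-apiserver.conf
```

Run it after the certificates and token file have been copied and created, and before starting the service.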

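(2) Copy the certificates generated earlier

# Run on k8s-master1
# Copy the certificates to the paths referenced in the configuration file
cp ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem /opt/kubernetes/ssl/

(3) Enable the TLS bootstrapping mechanism

TLS bootstrapping: once the master apiserver enables TLS authentication, the kubelet and kube-proxy on each node must use valid certificates issued by the CA to communicate with kube-apiserver. With many nodes, issuing these client certificates by hand is a lot of work and also makes scaling the cluster more complex. To simplify the process, Kubernetes introduced TLS bootstrapping to issue client certificates automatically: the kubelet requests a certificate from the apiserver as a low-privilege user, and the kubelet certificate is signed dynamically through the apiserver. This approach is strongly recommended on nodes. It is currently used mainly for the kubelet; kube-proxy still receives a single certificate that we issue ourselves.

Create the token file referenced in the configuration above:

# Run on k8s-master1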

cat > /opt/kubernetes/cfg/token.csv << EOF

4136692876ad4b01bb9dd0988480ebba,kubelet-bootstrap,10001,"system:node-bootstrapper"

EOF

Format: token, user name, UID, user group
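
A file in this format can also be produced with a freshly generated token rather than the sample value. The following is a minimal sketch: both function names are hypothetical, and the user name, UID, and group are the ones used in this article.

```shell
# Print a random 32-character hex token (16 bytes read from /dev/urandom).
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Hypothetical helper: write one token.csv line in the format
# token,user,UID,"group". $1 = output file, $2 = token.
write_token_csv() {
  printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$2" > "$1"
}

# Example:
# write_token_csv /opt/kubernetes/cfg/token.csv "$(gen_bootstrap_token)"
```

Whatever token ends up in token.csv must also be used later in bootstrap.kubeconfig.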

You can also generate your own token to replace it:

head -c 16 /dev/urandom | od -An -t x | tr -d ' '

(4) Managing the apiserver with systemd

# Run on k8s-master1

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

(5) Start kube-apiserver and enable it at boot

# Run on k8s-master1
systemctl daemon-reload
systemctl start kube-apiserver
systemctl enable kube-apiserver
# Check the kube-apiserver status
systemctl status kube-apiserver

4.4 Deploying kube-controller-manager

(1) Create the configuration file

# Run on k8s-master1
cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--leader-elect=true \\
--kubeconfig=/opt/kubernetes/cfg/kube-controller-manager.kubeconfig \\
--bind-address=127.0.0.1 \\
--allocate-node-cidrs=true \\
--cluster-cidr=10.244.0.0/16 \\
--service-cluster-ip-range=10.0.0.0/24 \\
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--root-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--cluster-signing-duration=87600h0m0s"
EOF

--kubeconfig: kubeconfig file holding the settings for connecting to the apiserver.

--leader-elect: automatic leader election when several instances of this component run (HA).

--cluster-signing-cert-file: certificate of the CA that automatically issues kubelet certificates; must match the apiserver's CA.

--cluster-signing-key-file: key of the CA that automatically issues kubelet certificates; must match the apiserver's CA.

(2) Generate the kubeconfig file

Once the steps below have been run, the generated file looks like this:

[root@k8s-master1 ~]# cat /opt/kubernetes/cfg/kube-controller-manager.kubeconfig
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0t
    server: https://192.168.176.140:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kube-controller-manager
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kube-controller-manager
  user:
    client-certificate-data: LS0t
    client-key-data: LS0t

Create the certificate signing request file:

# Run on k8s-master1
# Switch to the working directory
cd ~/TLS/k8s
# Create the certificate signing request file

cat > kube-controller-manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

Generate the kube-controller-manager certificate:

# Run on k8s-master1
# Generate the certificate
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

List the generated certificate files:

# Run on k8s-master1
[root@k8s-master1 k8s]# ls kube-controller-manager*
kube-controller-manager.csr kube-controller-manager-key.pem
kube-controller-manager-csr.json kube-controller-manager.pem

Generate the kubeconfig file:

# Run on k8s-master1
KUBE_CONFIG="/opt/kubernetes/cfg/kube-controller-manager.kubeconfig"
KUBE_APISERVER="https://192.168.176.140:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-controller-manager \
  --client-certificate=./kube-controller-manager.pem \
  --client-key=./kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-controller-manager \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

# This creates kube-controller-manager.kubeconfig

(3) Managing controller-manager with systemd

# Run on k8s-master1
cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes
After=kube-apiserver.service
Requires=kube-apiserver.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

Check that kube-controller-manager.service was added:

ls /usr/lib/systemd/system/ | grep kube-controller-manager

(4) Start kube-controller-manager and enable it at boot

# Run on k8s-master1
systemctl daemon-reload
systemctl start kube-controller-manager
systemctl enable kube-controller-manager
# Check the kube-controller-manager status
systemctl status kube-controller-manager

4.5 Deploying kube-scheduler

(1) Create the configuration file

# Run on k8s-master1
cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF
KUBE_SCHEDULER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--leader-elect \\
--kubeconfig=/opt/kubernetes/cfg/kube-scheduler.kubeconfig \\
--bind-address=127.0.0.1"
EOF

(2) Generate the kubeconfig file

Once the steps below have been run, the generated file looks like this:

[root@k8s-master1 ~]# cat /opt/kubernetes/cfg/kube-scheduler.kubeconfig
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0t
    server: https://192.168.176.140:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kube-scheduler
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kube-scheduler
  user:
    client-certificate-data: LS0t
    client-key-data: LS0t

Create the certificate signing request file:

# Run on k8s-master1
# Switch to the working directory
cd ~/TLS/k8s
# Create the certificate signing request file

cat > kube-scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

Generate the kube-scheduler certificate:

# Run on k8s-master1
# Generate the certificate
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

List the generated certificate files:

# Run on k8s-master1
[root@k8s-master1 k8s]# ls kube-scheduler*
kube-scheduler.csr kube-scheduler-csr.json kube-scheduler-key.pem kube-scheduler.pem

Generate the kubeconfig file:

# Run on k8s-master1
KUBE_CONFIG="/opt/kubernetes/cfg/kube-scheduler.kubeconfig"
KUBE_APISERVER="https://192.168.176.140:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-scheduler \
  --client-certificate=./kube-scheduler.pem \
  --client-key=./kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-scheduler \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

# This creates kube-scheduler.kubeconfig
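
The same four `kubectl config` calls are repeated for every component that needs a client-certificate kubeconfig, with only the file path, user name, and certificate changing. They could be wrapped in one function, as in the sketch below. `make_kubeconfig` and the `CA_FILE` variable are hypothetical names introduced for illustration; the kubectl subcommands and flags are the ones used in this article.

```shell
# Hypothetical helper wrapping the four kubectl config calls.
# $1 = kubeconfig path, $2 = user name, $3 = client cert, $4 = client key.
# KUBE_APISERVER and CA_FILE can be overridden from the environment.
make_kubeconfig() {
  kube_config="$1"
  user="$2"
  cert="$3"
  key="$4"
  apiserver="${KUBE_APISERVER:-https://192.168.176.140:6443}"
  ca_file="${CA_FILE:-/opt/kubernetes/ssl/ca.pem}"

  # Each step runs only if the previous one succeeded.
  kubectl config set-cluster kubernetes \
    --certificate-authority="$ca_file" \
    --embed-certs=true \
    --server="$apiserver" \
    --kubeconfig="$kube_config" &&
  kubectl config set-credentials "$user" \
    --client-certificate="$cert" \
    --client-key="$key" \
    --embed-certs=true \
    --kubeconfig="$kube_config" &&
  kubectl config set-context default \
    --cluster=kubernetes \
    --user="$user" \
    --kubeconfig="$kube_config" &&
  kubectl config use-context default --kubeconfig="$kube_config"
}

# Example (run in ~/TLS/k8s):
# make_kubeconfig /opt/kubernetes/cfg/kube-scheduler.kubeconfig kube-scheduler ./kube-scheduler.pem ./kube-scheduler-key.pem
```

The bootstrap kubeconfig for the kubelet uses a token instead of a client certificate, so it would need a separate variant.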

(3) Managing the scheduler with systemd

# Run on k8s-master1
cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
After=kube-apiserver.service
Requires=kube-apiserver.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf
ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

(4) Start kube-scheduler and enable it at boot

# Run on k8s-master1
systemctl daemon-reload
systemctl start kube-scheduler
systemctl enable kube-scheduler
# Check the kube-scheduler status
systemctl status kube-scheduler

At this point, all the services required on the master are running.

(5) Generate the certificate kubectl uses to connect to the cluster

# Run on k8s-master1
# Switch to the working directory
cd ~/TLS/k8s

cat > admin-csr.json << EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

# Run on k8s-master1
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

List the generated certificate files:

# Run on k8s-master1
[root@k8s-master1 k8s]# ls admin*
admin.csr admin-csr.json admin-key.pem admin.pem

Generate the kubeconfig file:

# Run on k8s-master1
mkdir -p /root/.kube
KUBE_CONFIG="/root/.kube/config"
KUBE_APISERVER="https://192.168.176.140:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials cluster-admin \
  --client-certificate=./admin.pem \
  --client-key=./admin-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \
  --cluster=kubernetes \
  --user=cluster-admin \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

# This creates /root/.kube/config

(6) Check the status of the cluster components with kubectl

# Run on k8s-master1
[root@k8s-master1 k8s]# kubectl get cs

If every listed component is reported as Healthy, the components deployed on the master are running normally.

(7) Authorize the kubelet-bootstrap user to request certificates

# Run on k8s-master1
kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap

V. Deploying the Worker Nodes

5.1 Creating the working directory and copying the binaries

Create the working directory on every worker node:

# Run on k8s-master1, k8s-node1, and k8s-node2
mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

Copy the binaries from the Kubernetes server package on the master to every worker node:

# Run on k8s-master1
#!/bin/bash
# Enter the server package directory
cd ~/kubernetes/server/bin
for i in {0..2}
do
  scp kubelet kube-proxy root@192.168.176.14$i:/opt/kubernetes/bin/
done

5.2 Deploying kubelet

(1) Create the configuration file

# Run on k8s-master1
cat > /opt/kubernetes/cfg/kubelet.conf << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--hostname-override=k8s-master1 \\
--network-plugin=cni \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet-config.yml \\
--cert-dir=/opt/kubernetes/ssl \\
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
EOF

--hostname-override: display name of this node; it must be unique within the cluster.

--network-plugin: enable CNI.

--kubeconfig: a path that does not exist yet; kubelet.kubeconfig is generated there automatically and is later used to connect to the apiserver.

--bootstrap-kubeconfig: used to request a certificate from the apiserver on first start.

--config: configuration parameters file.

--cert-dir: directory for the kubelet certificates.

--pod-infra-container-image: image of the infrastructure (pause) container that manages each Pod's network.

After the kubelet has joined the cluster, the generated kubelet.kubeconfig looks like this:

# Run on k8s-master1
[root@k8s-master1 ~]# cat /opt/kubernetes/cfg/kubelet.kubeconfig
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0t
    server: https://192.168.176.140:6443
  name: default-cluster
contexts:
- context:
    cluster: default-cluster
    namespace: default
    user: default-auth
  name: default-context
current-context: default-context
kind: Config
preferences: {}
users:
- name: default-auth
  user:
    client-certificate: /opt/kubernetes/ssl/kubelet-client-current.pem
    client-key: /opt/kubernetes/ssl/kubelet-client-current.pem

(2) Create the parameters file

# Run on k8s-master1
cat > /opt/kubernetes/cfg/kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
EOF

(3) Generate the bootstrap kubeconfig file the kubelet uses when it first joins the cluster

# Run on k8s-master1
KUBE_CONFIG="/opt/kubernetes/cfg/bootstrap.kubeconfig"
KUBE_APISERVER="https://192.168.176.140:6443" # apiserver IP:PORT
TOKEN="4136692876ad4b01bb9dd0988480ebba" # must match /opt/kubernetes/cfg/token.csv

# Generate the kubelet bootstrap kubeconfig file
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials "kubelet-bootstrap" \
  --token=${TOKEN} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \
  --cluster=kubernetes \
  --user="kubelet-bootstrap" \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

# This creates bootstrap.kubeconfig

(4) Managing kubelet with systemd

# Run on k8s-master1
cat > /usr/lib/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
After=docker.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf
ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

Check that the kubelet service file was created:

[root@k8s-master1 ~]# ls /usr/lib/systemd/system/ | grep kubelet

(5) Start kubelet and enable it at boot

# Run on k8s-master1
systemctl daemon-reload
systemctl start kubelet
systemctl enable kubelet
# Check the kubelet status
systemctl status kubelet

(6) Approve the kubelet certificate request so the node joins the cluster

# Run on k8s-master1
# List the kubelet certificate requests
[root@k8s-master1 k8s]# kubectl get csr
NAME AGE SIGNERNAME REQUESTOR CONDITION
node-csr-_ljl7ZmiNPDjavyhJA6aMJ4bnZHp7WAml3XPEn8BzoM 28s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

# Run on k8s-master1
# Approve the kubelet's request
# node-csr-_ljl7ZmiNPDjavyhJA6aMJ4bnZHp7WAml3XPEn8BzoM is the name listed above
[root@k8s-master1 k8s]# kubectl certificate approve node-csr-_ljl7ZmiNPDjavyhJA6aMJ4bnZHp7WAml3XPEn8BzoM
certificatesigningrequest.certificates.k8s.io/node-csr-_ljl7ZmiNPDjavyhJA6aMJ4bnZHp7WAml3XPEn8BzoM approved

# Run on k8s-master1
# List the requests again
[root@k8s-master1 k8s]# kubectl get csr
NAME AGE SIGNERNAME REQUESTOR CONDITION
node-csr-_ljl7ZmiNPDjavyhJA6aMJ4bnZHp7WAml3XPEn8BzoM 2m31s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued
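
When several nodes join at once, copying each request name by hand becomes tedious. The sketch below extracts the names of requests that are still Pending from the `kubectl get csr` listing; `pending_csrs` is a hypothetical helper, and it assumes the column layout shown above, with CONDITION as the last column.

```shell
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# name of every request whose last column is exactly "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Example: review the names first, then approve them one by one.
# kubectl get csr | pending_csrs
```

Approving everything that is pending without looking at it defeats the purpose of the manual review, so printing the list first is the safer default.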

List the nodes:

# Run on k8s-master1
[root@k8s-master1 k8s]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
k8s-master1 NotReady <none> 62s v1.20.15

The node stays NotReady because the network plugin has not been deployed yet.

5.3 Deploying kube-proxy

(1) Create the configuration file

# Run on k8s-master1
cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF
KUBE_PROXY_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--config=/opt/kubernetes/cfg/kube-proxy-config.yml"
EOF

(2) Create the parameters file

# Run on k8s-master1
cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: k8s-master1
clusterCIDR: 10.244.0.0/16
EOF

(3) Create the certificate signing request file

# Run on k8s-master1
# Switch to the working directory
cd ~/TLS/k8s
# Create the certificate signing request file

cat > kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

(4)生成kube-proxy证书文件

# 生成证书

# k8s-master1节点执行

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

(5)查看生成的证书文件

# k8s-master1节点执行

[root@k8s-master1 k8s]# ls kube-proxy*

kube-proxy.csr kube-proxy-csr.json kube-proxy-key.pem kube-proxy.pem

(6)生成kube-proxy.kubeconfig文件

# k8s-master1节点执行

KUBE_CONFIG="/opt/kubernetes/cfg/kube-proxy.kubeconfig"

KUBE_APISERVER="https://192.168.54.101:6443"

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-proxy \

--client-certificate=./kube-proxy.pem \

--client-key=./kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

# 会生成kube-proxy.kubeconfig文件

(7) Manage kube-proxy with systemd

# Run on k8s-master1

cat > /usr/lib/systemd/system/kube-proxy.service << EOF

[Unit]

Description=Kubernetes Proxy

After=network.target

Requires=network.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf

ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

(8) Start kube-proxy and enable it at boot

# Run on k8s-master1

systemctl daemon-reload

systemctl start kube-proxy

systemctl enable kube-proxy

systemctl status kube-proxy

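Check the kube-proxy status in the output of the last command; the service should be active (running).

5.4 Deploy the network component (Calico)

Calico is a pure layer-3 data center networking solution and one of the mainstream network plugins for Kubernetes.

# Run on k8s-master1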

[root@k8s-master1 ~]# kubectl apply -f https://docs.projectcalico.org/archive/v3.14/manifests/calico.yaml

[root@k8s-master1 ~]# kubectl get pods -n kube-system

Once all Calico Pods are Running, the node becomes Ready.

# Run on k8s-master1

[root@k8s-master1 ~]# kubectl get pods -n kube-system

# Run on k8s-master1

[root@k8s-master1 ~]# kubectl get nodes

5.5 Authorize the apiserver to access the kubelet

# Run on k8s-master1

cat > apiserver-to-kubelet-rbac.yaml << EOF

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes

EOF

# Run on k8s-master1

[root@k8s-master1 ~]# kubectl apply -f apiserver-to-kubelet-rbac.yaml

6. Add Worker Nodes

6.1 Copy the deployed files to the new nodes

# Run on k8s-master1

#!/bin/bash

for i in {1..2}; do scp -r /opt/kubernetes root@192.168.176.14$i:/opt/; done

for i in {1..2}; do scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.176.14$i:/usr/lib/systemd/system; done

for i in {1..2}; do scp -r /opt/kubernetes/ssl/ca.pem root@192.168.176.14$i:/opt/kubernetes/ssl/; done
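The copy loops above hard-code the last digit of each node IP. As an illustration only, the same copy can be wrapped in a function that takes the node IPs as arguments and defaults to a dry run, so the commands can be reviewed before anything is transferred. The function name `copy_to_nodes` and the `DRY_RUN` variable are assumptions made for this sketch; the paths are the ones used above. Since `/opt/kubernetes` is copied recursively, `ssl/ca.pem` is already included in the first `scp`.

```shell
# Hypothetical helper: copy the Kubernetes files and unit files to each node IP
# passed as an argument. With DRY_RUN=1 (the default) it only prints the commands.
copy_to_nodes() {
  prefix=""
  [ "${DRY_RUN:-1}" = "1" ] && prefix="echo"
  for ip in "$@"; do
    # Binaries, configuration and certificates (includes ssl/ca.pem)
    $prefix scp -r /opt/kubernetes "root@${ip}:/opt/"
    # systemd unit files for kubelet and kube-proxy
    $prefix scp /usr/lib/systemd/system/kubelet.service \
      /usr/lib/systemd/system/kube-proxy.service \
      "root@${ip}:/usr/lib/systemd/system/"
  done
}
```

Running `copy_to_nodes 192.168.176.141 192.168.176.142` prints the four `scp` commands; prefixing the call with `DRY_RUN=0` executes them.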

6.2 Delete the kubelet certificates and kubeconfig file

# Run on k8s-node1 and k8s-node2

rm -f /opt/kubernetes/cfg/kubelet.kubeconfig

rm -f /opt/kubernetes/ssl/kubelet*

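Note: the deleted files are generated automatically once the certificate request is approved, and they differ for every node, so the copies taken from the master must not be reused.

6.3 Update the hostname in the configuration files

# Run on k8s-node1 and k8s-node2

# Change the hostname override to this node's name (k8s-node1 or k8s-node2)

vi /opt/kubernetes/cfg/kubelet.conf

# Run on k8s-node1 and k8s-node2

# Change hostnameOverride to this node's name (k8s-node1 or k8s-node2)

vi /opt/kubernetes/cfg/kube-proxy-config.yml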
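If editing the two files by hand feels error-prone, the rename can also be done non-interactively. The sketch below is an assumption-laden alternative to `vi`: the helper name `set_node_hostname` is hypothetical, it relies on GNU `sed -i` (as shipped with CentOS 7), and it assumes the only place the old name `k8s-master1` occurs in these files is the hostname override setting.

```shell
# Hypothetical helper: replace the old hostname with the new one in the given files.
# Usage: set_node_hostname <old-name> <new-name> <file>...
set_node_hostname() {
  old="$1"
  new="$2"
  shift 2
  # In-place substitution of every occurrence of the old name (GNU sed)
  sed -i "s/${old}/${new}/g" "$@"
}
```

On k8s-node1 this would be called as `set_node_hostname k8s-master1 k8s-node1 /opt/kubernetes/cfg/kubelet.conf /opt/kubernetes/cfg/kube-proxy-config.yml`, and with `k8s-node2` on the second node.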

6.4 Start the services and enable them at boot

# Run on k8s-node1 and k8s-node2

systemctl daemon-reload

systemctl start kubelet kube-proxy

systemctl enable kubelet kube-proxy

systemctl status kubelet kube-proxy

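Check the status of kubelet and kube-proxy in the output of the last command.

Run the same commands on k8s-node2; the setup only counts as successful when both services report the same running status there.

6.5 Approve the new nodes' kubelet certificate requests on the master

# Run on k8s-master1

# List the certificate signing requests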

[root@k8s-master1 ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-EAPxdWuBmwdb4CJQeWRfDLi2cvzmQZ9VIh3uSgcz1Lk 98s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-_ljl7ZmiNPDjavyhJA6aMJ4bnZHp7WAml3XPEn8BzoM 79m kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

node-csr-e2BoSziWYL-gcJ-0BIcXXY1-wmR8YBlojENV6-FpIJU 102s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

# Run on k8s-master1

# Approve the requests so the nodes can join

[root@k8s-master1 ~]# kubectl certificate approve node-csr-EAPxdWuBmwdb4CJQeWRfDLi2cvzmQZ9VIh3uSgcz1Lk

certificatesigningrequest.certificates.k8s.io/node-csr-EAPxdWuBmwdb4CJQeWRfDLi2cvzmQZ9VIh3uSgcz1Lk approved

[root@k8s-master1 ~]# kubectl certificate approve node-csr-e2BoSziWYL-gcJ-0BIcXXY1-wmR8YBlojENV6-FpIJU

certificatesigningrequest.certificates.k8s.io/node-csr-e2BoSziWYL-gcJ-0BIcXXY1-wmR8YBlojENV6-FpIJU approved
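Copying the long CSR names by hand becomes tedious as more nodes join. As a sketch, the names of the pending requests can be filtered out of the `kubectl get csr` table shown above; the helper name `pending_csrs` is hypothetical, and it assumes CONDITION is the last column, as in that output.

```shell
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# names of requests whose CONDITION (last column) is exactly "Pending".
pending_csrs() {
  awk '$NF == "Pending" { print $1 }'
}
```

A possible usage is `kubectl get csr | pending_csrs | xargs -r -n 1 kubectl certificate approve`, which approves each pending request in turn. Review the list first, since this approves every pending request, not only those from the expected nodes.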

6.6 Check the node status (nodes take a little while to become Ready because some initial images have to be pulled)

# Run on k8s-master1

[root@k8s-master1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready <none> 81m v1.20.15

k8s-node1 Ready <none> 98s v1.20.15

k8s-node2 Ready <none> 118s v1.20.15
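Because the nodes need some time to become Ready, it can help to poll instead of re-running the command manually. The following sketch filters the `kubectl get nodes` table for nodes whose STATUS does not start with `Ready`; the helper name `not_ready_nodes` is hypothetical, and the column layout is assumed to match the output above.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# names of nodes whose STATUS column does not start with "Ready".
not_ready_nodes() {
  awk '$1 != "NAME" && $2 !~ /^Ready/ { print $1 }'
}
```

For instance, `until [ -z "$(kubectl get nodes | not_ready_nodes)" ]; do sleep 5; done` waits until no node is reported as NotReady.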

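At this point, the three-node k8s cluster is up and running.

7. Deploy the Dashboard

7.1 Create the kubernetes-dashboard.yaml file

# Run on k8s-master1

# Create the kubernetes-dashboard.yaml file with the content below

[root@k8s-master1 ~]# vim kubernetes-dashboard.yaml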

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.2.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
            # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.6
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

# Deploy

[root@k8s-master1 ~]# kubectl apply -f kubernetes-dashboard.yaml

Check the deployment:

# Run on k8s-master1

[root@k8s-master1 ~]# kubectl get pods,svc -n kubernetes-dashboard

Create a service account and bind it to the built-in cluster-admin cluster role.

# Run on k8s-master1

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

# Run on k8s-master1; example session with output

[root@k8s-master1 ~]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

[root@k8s-master1 ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

[root@k8s-master1 ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Name: dashboard-admin-token-cd77q

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: c713b50a-c11d-4708-9c7b-be7835bf53b9

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1359 bytes

namespace: 11 bytes

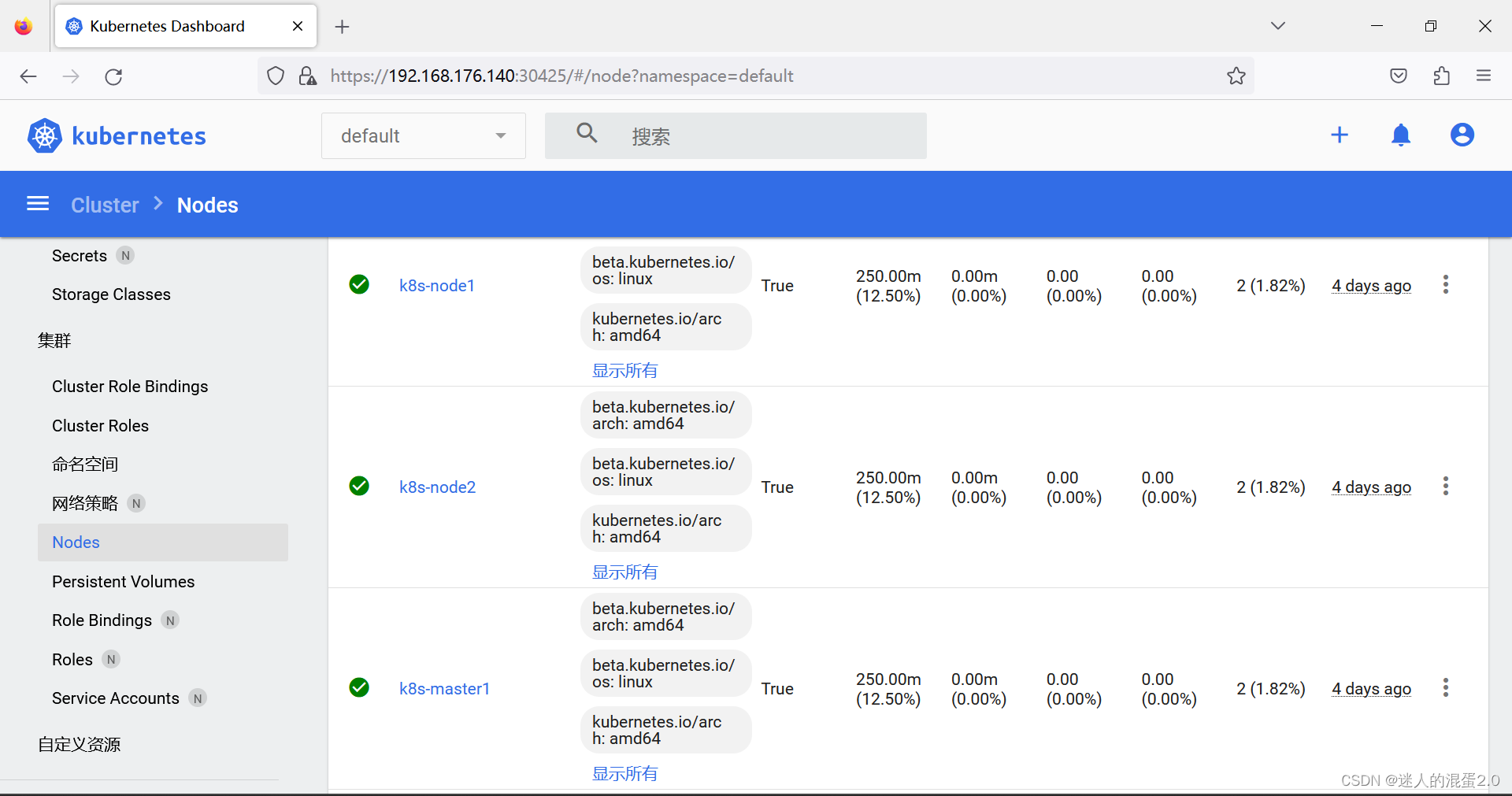

token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImJoMTlpTE1idkRiNHpqcHoxanctaGoxVFRrOU80S1pQaURqbTJnMy1xUE0ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tY2Q3N3EiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiYzcxM2I1MGEtYzExZC00NzA4LTljN2ItYmU3ODM1YmY1M2I5Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.BV0LA43Wqi3Chxpz9GCyl1P0C924CZ5FAzFSfN6dNmC0kKEdK-7mPzWhoLNdRxXHTCV-INKU5ZRCnl5xekmIKHfONtC-DbDerFyhxdpeFbsDO93mfH6Xr1d0nVaapMh9FwQM0cOtyIWbBWkXzVKPu8EDVkS-coJZyJoDoaTlHFZxaTx3VkrxK_eLz-a9HfWfEjPVKgCf6XJgOt9y0tcCgAA9DEhpbNbOCTM7dvLD_mIwPm8HIId8-x0jkr4cNQq6wLcULhSPZg4gghYZ8tlLrjixzhYahz4Q1RuhYDCbHKLj4PLltXZTkJ3GHo4upG3ehto7A6zuhKGL21KzDW80NQ

Access URL: https://NodeIP:30425

Log in to the Dashboard with the token printed above (if the browser reports an HTTPS error, try Firefox).
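The port in the access URL is the NodePort that Kubernetes assigned to the kubernetes-dashboard Service. The Service manifest does not pin a `nodePort`, so the value will likely differ from 30425 on another cluster. As a sketch, the port can be read from the Service listing; the helper name `dashboard_node_port` is hypothetical, and it assumes the default table layout of `kubectl get svc`, where the fifth column looks like `443:30425/TCP`.

```shell
# Hypothetical helper: read `kubectl get svc -n kubernetes-dashboard` output on
# stdin and print the NodePort of the kubernetes-dashboard Service.
dashboard_node_port() {
  # PORT(S) has the form <port>:<nodePort>/<protocol>, e.g. 443:30425/TCP
  awk '$1 == "kubernetes-dashboard" { split($5, parts, /[:\/]/); print parts[2] }'
}
```

For example, `kubectl get svc -n kubernetes-dashboard | dashboard_node_port` prints the port to use after `https://NodeIP:`.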

At this point, both the Dashboard and the single-master k8s cluster are fully deployed.
