[Q&A] Debugging Python: fixing "Segmentation fault (core dumped)"


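Problem description

Running a program with Python 3 fails with "Segmentation fault (core dumped)" and no other output, which leaves nothing to start troubleshooting from.

A segmentation fault is usually caused by an invalid memory operation: reading or writing through a null or dangling pointer, accessing an array out of bounds, writing to read-only (constant) data, and so on. In C code, initializing every pointer to NULL when it is declared is a good way to avoid many of these bugs. Python code itself rarely triggers them; when a Python program segfaults, the fault is typically inside a native extension module or a shared library. The most effective way to track the problem down is to debug it.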
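To make the failure mode concrete, here is a minimal sketch that provokes a segmentation fault on purpose and inspects how the process died. It assumes a POSIX system, where subprocess reports a process killed by a signal as a negative return code. The names CRASHING_SNIPPET, run_crashing_child and describe_exit are made up for this illustration; the crash runs in a child interpreter so the calling script survives.

```python
import signal
import subprocess
import sys

# Child program: reading a C string from address 0 dereferences a NULL pointer
# inside native code, which is the kind of invalid access described above.
CRASHING_SNIPPET = "import ctypes; ctypes.string_at(0)"


def run_crashing_child() -> int:
    """Run the snippet in a separate interpreter and return its exit status."""
    result = subprocess.run(
        [sys.executable, "-c", CRASHING_SNIPPET],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode


def describe_exit(returncode: int) -> str:
    """Translate a subprocess return code into a readable description."""
    if returncode < 0:
        # On POSIX, a negative value -N means "terminated by signal N".
        return f"killed by {signal.Signals(-returncode).name}"
    return f"exited with status {returncode}"


if __name__ == "__main__":
    print(describe_exit(run_crashing_child()))
```

Note that the child prints no Python traceback at all, which matches the symptom in the problem description: the interpreter is killed before it can report anything.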

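Troubleshooting process

The troubleshooting steps were as follows:

1. Locate the error

The first approach is to use Python 3's faulthandler module, which prints the Python traceback at the moment of the crash and so points to the failing line. It can be enabled in two ways:

(1) Enable faulthandler in the code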

import faulthandler

# Enable the handler right after the import

faulthandler.enable()

# The rest of your code follows as usual

(2) Enable it from the command line by adding the -X faulthandler option when running the script:

python -X faulthandler your_script.py

To keep the code itself untouched, we chose the second way.
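The effect of the option can be checked on a deliberately crashing child process before applying it to a real program. The sketch below is only an illustration: CRASHING_SNIPPET and crash_report are hypothetical names, and the crash is provoked with ctypes.string_at(0) rather than by the program discussed in this post.

```python
import subprocess
import sys

# A one-line program that crashes inside native code (NULL pointer read).
CRASHING_SNIPPET = "import ctypes; ctypes.string_at(0)"


def crash_report(enable_faulthandler: bool) -> str:
    """Run the crashing snippet in a child interpreter and return its stderr."""
    cmd = [sys.executable]
    if enable_faulthandler:
        # Same switch as on the command line: python -X faulthandler ...
        cmd += ["-X", "faulthandler"]
    cmd += ["-c", CRASHING_SNIPPET]
    result = subprocess.run(cmd, capture_output=True, text=True)
    return result.stderr


if __name__ == "__main__":
    print("without faulthandler:", repr(crash_report(False)))
    print("with faulthandler:")
    print(crash_report(True))
```

Setting the environment variable PYTHONFAULTHANDLER to a non-empty value is a third way to switch the handler on, which can be convenient when the Python command line is buried inside a wrapper script.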

Running the main program again now produces much more output:

Fatal Python error: Segmentation fault

Current thread 0x00007f9f89fa8740 (most recent call first):

File "/home/xinzhepang/anaconda3/envs/train/lib/python3.9/ctypes/__init__.py", line 374 in __init__

File "/home/xinzhepang/anaconda3/envs/train/lib/python3.9/site-packages/torch/_ops.py", line 255 in load_library

File "/home/xinzhepang/anaconda3/envs/train/lib/python3.9/site-packages/torch_sparse/__init__.py", line 19 in <module>

File "<frozen importlib._bootstrap>", line 228 in _call_with_frames_removed

File "<frozen importlib._bootstrap_external>", line 850 in exec_module

File "<frozen importlib._bootstrap>", line 680 in _load_unlocked

File "<frozen importlib._bootstrap>", line 986 in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 1007 in _find_and_load

File "/home/xinzhepang/anaconda3/envs/train/lib/python3.9/site-packages/torch_geometric/data/data.py", line 20 in <module>

File "<frozen importlib._bootstrap>", line 228 in _call_with_frames_removed

File "<frozen importlib._bootstrap_external>", line 850 in exec_module

File "<frozen importlib._bootstrap>", line 680 in _load_unlocked

File "<frozen importlib._bootstrap>", line 986 in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 1007 in _find_and_load

File "/home/xinzhepang/anaconda3/envs/train/lib/python3.9/site-packages/torch_geometric/data/__init__.py", line 1 in <module>

File "<frozen importlib._bootstrap>", line 228 in _call_with_frames_removed

File "<frozen importlib._bootstrap_external>", line 850 in exec_module

File "<frozen importlib._bootstrap>", line 680 in _load_unlocked

File "<frozen importlib._bootstrap>", line 986 in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 1007 in _find_and_load

File "/home/xinzhepang/anaconda3/envs/train/lib/python3.9/site-packages/torch_geometric/__init__.py", line 4 in <module>

File "<frozen importlib._bootstrap>", line 228 in _call_with_frames_removed

File "<frozen importlib._bootstrap_external>", line 850 in exec_module

File "<frozen importlib._bootstrap>", line 680 in _load_unlocked

File "<frozen importlib._bootstrap>", line 986 in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 1007 in _find_and_load

File "<frozen importlib._bootstrap>", line 228 in _call_with_frames_removed

File "<frozen importlib._bootstrap>", line 972 in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 1007 in _find_and_load

File "/home/xinzhepang/workspace/iGNC/scripts/bioinfo_training.py", line 6 in <module>

File "<frozen importlib._bootstrap>", line 228 in _call_with_frames_removed

File "<frozen importlib._bootstrap_external>", line 850 in exec_module

File "<frozen importlib._bootstrap>", line 680 in _load_unlocked

File "<frozen importlib._bootstrap>", line 986 in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 1007 in _find_and_load

File "/home/xinzhepang/workspace/iGNC/main.py", line 11 in <module>

./run_main.sh: line 2: 38634 Segmentation fault (core dumped) python -X faulthandler main.py --use_cuda --batch_size 16 --num_workers 2

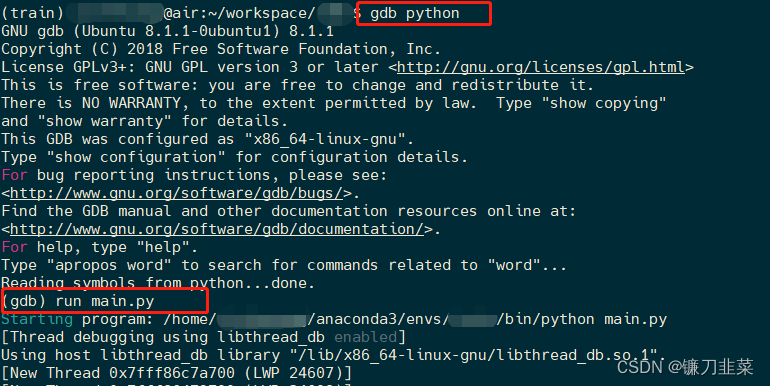

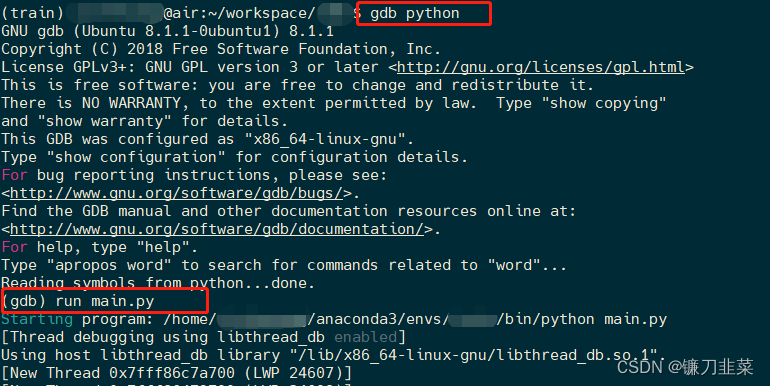
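Most of the dump above consists of <frozen importlib...> frames, which belong to the import machinery and only obscure the chain of real files. As a rough sketch (the helper user_frames and the shortened paths in SAMPLE are hypothetical, not part of faulthandler), the dump can be filtered down to the frames that matter:

```python
import re

# Matches faulthandler frame lines such as:
#   File "/path/to/module.py", line 19 in <module>
FRAME_RE = re.compile(r'File "(?P<path>[^"]+)", line (?P<line>\d+) in (?P<func>.+)')


def user_frames(dump: str) -> list[tuple[str, int, str]]:
    """Return (path, line, function) for frames that are not import machinery."""
    frames = []
    for raw in dump.splitlines():
        match = FRAME_RE.search(raw)
        if match is None:
            continue  # header lines, thread lines, blank lines
        path = match["path"]
        if path.startswith("<frozen importlib"):
            continue  # skip the interpreter's own import bookkeeping
        frames.append((path, int(match["line"]), match["func"].strip()))
    return frames


# Shortened, illustrative excerpt in the same format as the dump above.
SAMPLE = '''
Fatal Python error: Segmentation fault
File "/env/lib/python3.9/ctypes/__init__.py", line 374 in __init__
File "/env/lib/python3.9/site-packages/torch/_ops.py", line 255 in load_library
File "<frozen importlib._bootstrap>", line 228 in _call_with_frames_removed
File "/project/main.py", line 11 in <module>
'''

if __name__ == "__main__":
    for path, line, func in user_frames(SAMPLE):
        print(f"{path}:{line} in {func}")
```

Because faulthandler lists the most recent call first, the first surviving frames are the interesting ones. In the real dump they show that the crash happened while torch_sparse/__init__.py was loading a shared library through torch's load_library, long before any training code ran.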

The second approach is to use gdb:

gdb python

(gdb) run /path/to/your_script.py

## wait for segfault ##

(gdb) backtrace

## C-level stack trace; with CPython's gdb extension loaded, py-bt shows the Python frames

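To trace where the segmentation fault occurred and the chain of function calls leading to it:

(gdb) bt

This usually shows which statement failed, and you can print the values of the relevant variables for further analysis. Also note that if the machine runs many applications and you do not know which one produced a core file, you can check with: file core

In our case the fault was located in: torch/lib/libtorch_cpu.so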

We then ran the following code:

>>> import torch

>>> print(torch.cuda.current_device())

0

>>> print(torch.cuda.is_available())

True

Next, the nvidia-smi command shows that the machine has four GPUs:

Thu Apr 20 11:14:37 2023
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 470.182.03   Driver Version: 470.182.03   CUDA Version: 11.4     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA TITAN X ...  Off  | 00000000:02:00.0 Off |                  N/A |
| 25%   45C    P8    13W / 250W |      0MiB / 12196MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   1  NVIDIA TITAN X ...  Off  | 00000000:03:00.0 Off |                  N/A |
| 28%   50C    P8    12W / 250W |      0MiB / 12196MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   2  NVIDIA TITAN X ...  Off  | 00000000:82:00.0 Off |                  N/A |
| 31%   55C    P8    13W / 250W |      0MiB / 12196MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   3  NVIDIA TITAN X ...  Off  | 00000000:83:00.0 Off |                  N/A |
| 30%   53C    P8    13W / 250W |      0MiB / 12196MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+

Check the nvcc version:

(train) xxxxx@air:~$ nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2021 NVIDIA Corporation

Built on Sun_Mar_21_19:15:46_PDT_2021

Cuda compilation tools, release 11.3, V11.3.58

Build cuda_11.3.r11.3/compiler.29745058_0

We therefore suspected that the versions of CUDA, the GPU driver, PyTorch, torchvision, torch_scatter, torch_sparse, and torch_geometric did not match one another.
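Before changing anything, it helps to list which versions are actually installed. The sketch below reads package metadata only, so it does not import (and therefore cannot crash in) the suspect libraries. The distribution names in PACKAGES are assumed to be the usual PyPI names and may need adjusting for your environment; installed_versions is a hypothetical helper.

```python
from importlib import metadata

# Assumed distribution names (as published on PyPI); adjust if yours differ.
PACKAGES = ["torch", "torchvision", "torch-scatter", "torch-sparse", "torch-geometric"]


def installed_versions(names):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(PACKAGES).items():
        print(f"{name:16} {version or 'not installed'}")
```

The printed versions can then be compared against the CUDA toolkit reported by nvcc and against each other. Some builds carry a local suffix such as +cu113 in the version string, which makes a mismatch easier to spot.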

2. Solution

We chose to reinstall PyTorch 1.10:

conda install pytorch==1.10.1 torchvision==0.11.2 torchaudio==0.10.1 cudatoolkit=11.3 -c pytorch -c conda-forge
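After reinstalling, one way to confirm the fix is to import each suspect module in its own child interpreter, so that a module that still crashes cannot take the checking script down with it. This is a sketch under assumptions: probe_import is a hypothetical helper, and the module list is taken from the traceback and the suspected packages above.

```python
import subprocess
import sys


def probe_import(module: str, timeout: float = 120.0) -> tuple[int, str]:
    """Import `module` in a child interpreter; return (returncode, stderr)."""
    result = subprocess.run(
        [sys.executable, "-X", "faulthandler", "-c", f"import {module}"],
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return result.returncode, result.stderr


if __name__ == "__main__":
    # Module names taken from the traceback and the suspected package list.
    for module in ["torch", "torch_scatter", "torch_sparse", "torch_geometric"]:
        code, stderr = probe_import(module)
        if code == 0:
            status = "ok"
        elif code < 0:
            # Negative return code on POSIX: the child was killed by a signal.
            status = f"crashed (signal {-code})"
        else:
            status = "import failed"
        print(f"{module:16} {status}")
```

If torch imports cleanly but torch_sparse or torch_scatter still crashes, those extension packages probably also need to be reinstalled in builds that match the new PyTorch and CUDA versions.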

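What is a core?

Before semiconductors were used for memory, computers stored data in small magnetic rings called cores (An Wang was one of the early contributors to the technology), and memory built from them was called core memory. The semiconductor industry has since flourished and nobody uses core memory anymore, but in many contexts main memory is still referred to as "core".

What is a core dump?

When developing (or using) a program, the thing we dread most is the program crashing for no apparent reason. The system itself may be fine, but we are likely to hit the same problem again. So when a program crashes, the operating system dumps the contents of its memory at that moment (nowadays usually into a file named core) for us or a debugger to examine. This action is called a core dump.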
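Whether a core file is produced at all depends, among other things, on the process's core size limit (the value shown by ulimit -c in a shell). A small sketch using the standard resource module on Unix-like systems can report the limit from inside Python; core_limit_description is a hypothetical helper name.

```python
import resource


def core_limit_description() -> str:
    """Describe the soft RLIMIT_CORE value for the current process."""
    soft, _hard = resource.getrlimit(resource.RLIMIT_CORE)
    if soft == 0:
        return "core dumps disabled (soft limit is 0)"
    if soft == resource.RLIM_INFINITY:
        return "core dumps enabled (unlimited size)"
    return f"core dumps enabled (up to {soft} bytes)"


if __name__ == "__main__":
    print(core_limit_description())
```

Even with a non-zero limit, where the file ends up (or whether a crash-reporting service intercepts it) is decided by system configuration, so a missing core file does not necessarily mean the limit is wrong.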
