K8s: Pod Affinity and Anti-Affinity (Mutual Exclusion) Scheduling


Preface

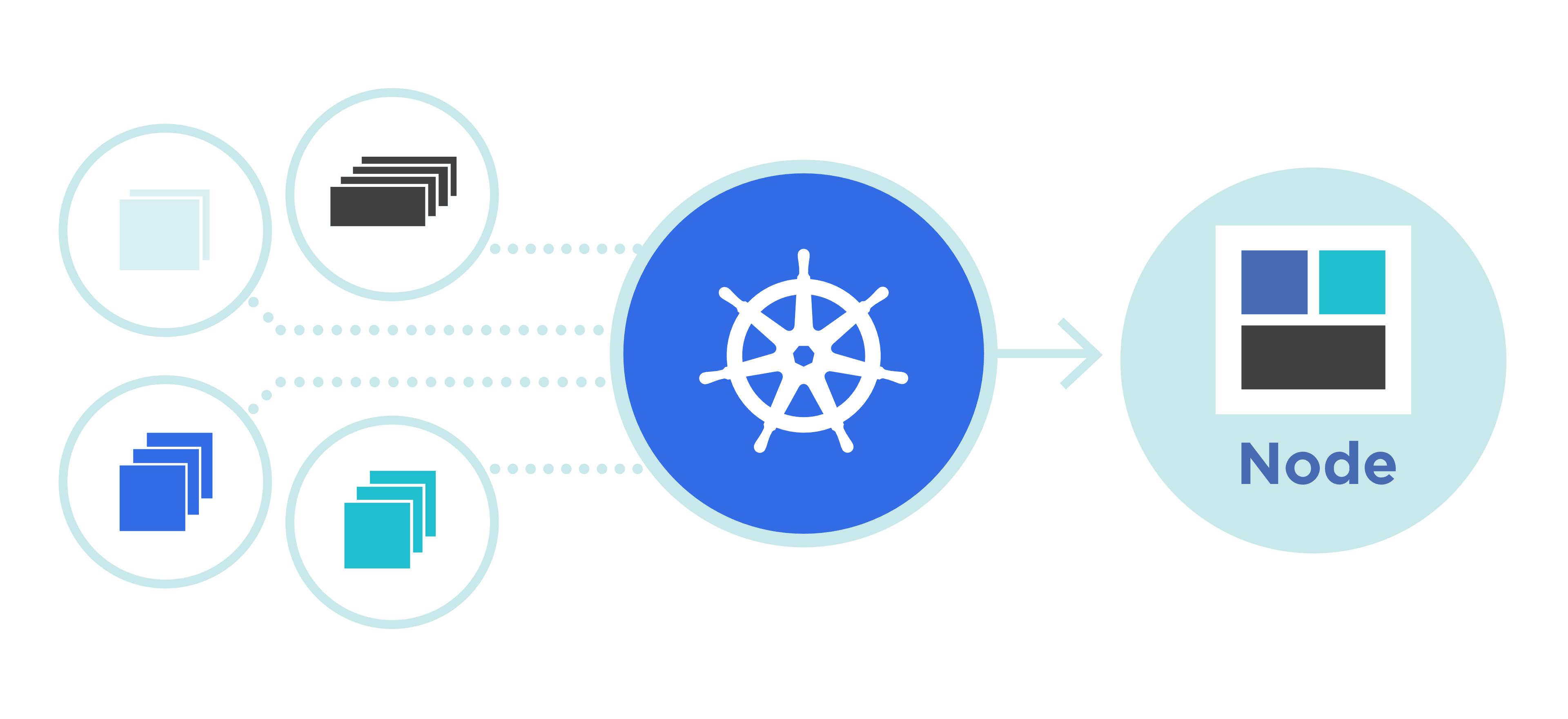

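In the previous article we covered node affinity scheduling. Node affinity makes it easier to express the relationship between Pods and Nodes, but production environments also have scheduling requirements between Pods themselves.

Some Pods depend on each other and call each other frequently. We want such Pods deployed in the same data center, or even on the same node. Other Pods are unrelated and may compete for resources, which can affect the other Pods on a node. We want those Pods kept apart.

To meet these needs, Kubernetes 1.4 introduced inter-Pod affinity and anti-affinity scheduling.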

Affinity

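If two applications interact frequently, it makes sense to place them as close together as possible. This reduces the performance cost of network communication between them.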

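Pod affinity is determined by three sets of conditions.

First, the namespace.

Second, the topology domain (topology). A topology domain is a group of Nodes that usually share the same physical location, such as the same rack, data center, or region. In the extreme case, a single Node can be a topology domain on its own. Kubernetes provides several well-known topology labels: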

- kubernetes.io/hostname

- topology.kubernetes.io/zone

- topology.kubernetes.io/region

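zone usually represents an availability zone, for example a single data center. region spans a larger area: it usually represents a geographic region that contains several zones. kubernetes.io/hostname is set to the Node's hostname. The other two labels are typically populated by the public cloud provider.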

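Third, the labels of the target Pods. The scheduler finds the Pods carrying the given labels and schedules the new Pod according to the topology domains of the nodes those Pods run on. This is the condition we typically rely on.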
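To show how the three conditions fit together in one rule, here is a minimal sketch of a single required affinity term. It makes the following assumptions:

- The label `app: cache` and the namespace `default` are hypothetical.
- The zone-based topologyKey only has an effect if your Nodes actually carry the `topology.kubernetes.io/zone` label, which is usually set on cloud providers.

```yaml
# Hypothetical fragment of a Pod spec (spec.affinity)
affinity:
  podAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
    - namespaces: ["default"]                    # 1. namespace(s) in which to look for the reference Pods
      topologyKey: topology.kubernetes.io/zone   # 2. topology domain: "same zone" instead of "same node"
      labelSelector:                             # 3. labels of the reference Pods
        matchLabels:
          app: cache                             # hypothetical label
```

With this rule, the new Pod may land on any Node in a zone that already runs a Pod labeled `app: cache`. It does not have to land on the same Node as that Pod.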

You can view a Node's built-in labels with describe:

```shell
[root@master ~]# kubectl describe node node01
Name:               node01
Roles:              <none>
Labels:             beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    kubernetes.io/arch=amd64
                    kubernetes.io/hostname=node01
                    kubernetes.io/os=linux
```

As with node affinity, Pod affinity comes in two kinds: hard constraints and soft constraints.

Run kubectl explain pods.spec.affinity.podAffinity to see all of the configuration fields.

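The configuration fields are as follows:

```
requiredDuringSchedulingIgnoredDuringExecution    # hard constraint
- namespaces            # namespaces of the reference Pods (if empty, the new Pod's own namespace)
  topologyKey           # topology domain, required
  labelSelector         # label selector for the reference Pods
    matchExpressions    # list of label selector requirements on Pod labels (recommended)
    - key               # label key
      operator          # operator: In, NotIn, Exists, DoesNotExist
      values            # label values
    matchLabels         # {key: value} map, equivalent to matchExpressions with operator In
  namespaceSelector     # still beta at the time of writing, not covered here

preferredDuringSchedulingIgnoredDuringExecution   # soft constraint
- podAffinityTerm       # the affinity term
    namespaces          # namespaces of the reference Pods
    topologyKey         # topology domain, required
    labelSelector
      matchExpressions
      - key             # label key
        operator        # operator: In, NotIn, Exists, DoesNotExist
        values          # label values
      matchLabels       # {key: value} map, equivalent to matchExpressions with operator In
  weight                # weight, range 1-100
```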
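The two selector forms in the field list above are interchangeable for a simple equality match. The following two fragments should select the same Pods; the label env=pro is taken from this article's examples.

```yaml
# Form 1: matchLabels
labelSelector:
  matchLabels:
    env: pro
---
# Form 2: the equivalent matchExpressions with operator In
labelSelector:
  matchExpressions:
  - key: env
    operator: In
    values: ["pro"]
```

If a single labelSelector contains both matchLabels and matchExpressions, all requirements must be satisfied (they are ANDed).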

Hard constraints

Pod affinity needs an already-running Pod as a reference. The new Pod is then placed in the same topology domain as that reference Pod.

Write the reference Pods in podaffinity-target.yaml. The file contains two Pods:

- one pinned to node01, labeled env=pro;
- one pinned to node02, labeled env=test.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: podaffinity-target1
  labels:
    env: pro
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent  # do not pull if the image already exists locally
  nodeName: node01  # pin target1 to node01
---
apiVersion: v1
kind: Pod
metadata:
  name: podaffinity-target2
  labels:
    env: test
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent  # do not pull if the image already exists locally
  nodeName: node02  # pin target2 to node02
```

Write podaffinity-required.yaml as follows. Its affinity rule selects Pods labeled env=pro:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: podaffinity-required
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent  # do not pull if the image already exists locally
  affinity:
    podAffinity:  # use Pod affinity
      requiredDuringSchedulingIgnoredDuringExecution:  # hard constraint
      - labelSelector:
          matchExpressions:
          - key: env
            operator: In
            values: ["pro"]
        topologyKey: kubernetes.io/hostname
```

First create podaffinity-required without creating podaffinity-target, and observe the Pod:

```shell
# Create the Pod
[root@master pod-affinity]# kubectl create -f podaffinity-required.yaml
pod/podaffinity-required created

# Check the Pod: it fails to start
[root@master pod-affinity]# kubectl get pod -o wide
NAME                   READY   STATUS    RESTARTS   AGE   IP       NODE     NOMINATED NODE   READINESS GATES
podaffinity-required   0/1     Pending   0          7s    <none>   <none>   <none>           <none>

# The familiar error: one node (the master) has a taint the Pod does not tolerate,
# and the other two nodes do not match the Pod affinity rules
[root@master pod-affinity]# kubectl describe pod podaffinity-required | grep -A 100 Event
Events:
  Type     Reason            Age   From               Message
  ----     ------            ----  ----               -------
  Warning  FailedScheduling  20s   default-scheduler  0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 node(s) didn't match pod affinity rules.
```

Now create podaffinity-target first, then podaffinity-required. node01 now runs a Pod labeled env=pro, so podaffinity-required should be scheduled to node01.

```shell
# Create podaffinity-target
[root@master pod-affinity]# kubectl create -f podaffinity-target.yaml
pod/podaffinity-target1 created
pod/podaffinity-target2 created

# Create podaffinity-required
[root@master pod-affinity]# kubectl create -f podaffinity-required.yaml
pod/podaffinity-required created

# Check the Pods: podaffinity-required has been scheduled to node01
[root@master pod-affinity]# kubectl get pod -o wide
NAME                   READY   STATUS    RESTARTS   AGE   IP            NODE     NOMINATED NODE   READINESS GATES
podaffinity-required   1/1     Running   0          5s    10.244.1.82   node01   <none>           <none>
podaffinity-target1    1/1     Running   0          12s   10.244.1.81   node01   <none>           <none>
podaffinity-target2    1/1     Running   0          12s   10.244.2.28   node02   <none>           <none>
```

Edit podaffinity-required.yaml and change values: ["pro"] to values: ["test"]. Then recreate podaffinity-required. node02 runs a Pod labeled env=test, so podaffinity-required should be scheduled to node02.

```shell
# Delete the previous Pod
[root@master pod-affinity]# kubectl delete -f podaffinity-required.yaml
pod "podaffinity-required" deleted

# Recreate it after editing the yaml
[root@master pod-affinity]# kubectl create -f podaffinity-required.yaml
pod/podaffinity-required created

# Check the Pods: podaffinity-required has been scheduled to node02
[root@master pod-affinity]# kubectl get pod -o wide
NAME                   READY   STATUS    RESTARTS   AGE    IP            NODE     NOMINATED NODE   READINESS GATES
podaffinity-required   1/1     Running   0          4s     10.244.2.29   node02   <none>           <none>
podaffinity-target1    1/1     Running   0          2m6s   10.244.1.81   node01   <none>           <none>
podaffinity-target2    1/1     Running   0          2m6s   10.244.2.28   node02   <none>           <none>
```

Edit podaffinity-required.yaml again and change values: ["test"] to values: ["dev"]. No Pod matches this label, so scheduling should fail.

```shell
# Delete the previous Pod
[root@master pod-affinity]# kubectl delete -f podaffinity-required.yaml
pod "podaffinity-required" deleted

# Recreate it after editing the yaml
[root@master pod-affinity]# kubectl create -f podaffinity-required.yaml
pod/podaffinity-required created

# Check the Pods: podaffinity-required fails to start
[root@master pod-affinity]# kubectl get pod -o wide
NAME                   READY   STATUS    RESTARTS   AGE     IP            NODE     NOMINATED NODE   READINESS GATES
podaffinity-required   0/1     Pending   0          17s     <none>        <none>   <none>           <none>
podaffinity-target1    1/1     Running   0          4m26s   10.244.1.81   node01   <none>           <none>
podaffinity-target2    1/1     Running   0          4m26s   10.244.2.28   node02   <none>           <none>

# Same error as before
[root@master pod-affinity]# kubectl describe pod podaffinity-required | grep -A 100 Event
Events:
  Type     Reason            Age   From               Message
  ----     ------            ----  ----               -------
  Warning  FailedScheduling  28s   default-scheduler  0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 node(s) didn't match pod affinity rules.
```

Soft constraints

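The results above show that if a hard constraint cannot be satisfied, the Pod cannot run. A soft constraint behaves the other way. If no match is found, the scheduler falls back to the next best option and places the Pod on any node that satisfies its other requirements, such as resources.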

Write podaffinity-preferred.yaml as follows. Its label match is env=dev, so no matching Pod can be found:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: podaffinity-preferred
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent  # do not pull if the image already exists locally
  affinity:
    podAffinity:  # use Pod affinity
      preferredDuringSchedulingIgnoredDuringExecution:  # soft constraint
      - podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: env
              operator: In
              values: ["dev"]
          topologyKey: kubernetes.io/hostname
        weight: 1
```

Create podaffinity-preferred and check whether the Pod runs normally:

```shell
# Create podaffinity-preferred
[root@master pod-affinity]# kubectl create -f podaffinity-preferred.yaml
pod/podaffinity-preferred created

# Check the Pods: podaffinity-preferred runs normally
[root@master pod-affinity]# kubectl get pod -o wide
NAME                    READY   STATUS    RESTARTS   AGE   IP            NODE     NOMINATED NODE   READINESS GATES
podaffinity-preferred   1/1     Running   0          12s   10.244.2.31   node02   <none>           <none>
podaffinity-target1     1/1     Running   0          19s   10.244.1.84   node01   <none>           <none>
podaffinity-target2     1/1     Running   0          19s   10.244.2.30   node02   <none>           <none>
```

Edit podaffinity-preferred.yaml to define two affinity rules with different weights:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: podaffinity-preferred
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent  # do not pull if the image already exists locally
  affinity:
    podAffinity:  # use Pod affinity
      preferredDuringSchedulingIgnoredDuringExecution:  # soft constraint
      - podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: env
              operator: In
              values: ["pro"]
          topologyKey: kubernetes.io/hostname
        weight: 1
      - podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: env
              operator: In
              values: ["test"]
          topologyKey: kubernetes.io/hostname
        weight: 2
```

After editing the yaml, recreate podaffinity-preferred. The env=test term has the larger weight, so the Pod should be scheduled to node02.
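The calculation below makes the weighting concrete. It is a simplified sketch that considers only the inter-Pod affinity part of scoring; other scoring plugins also contribute to the final node score.

For each candidate node, the weight of every preferred term is added once for each existing Pod that matches the term and runs in that node's topology domain. With topologyKey kubernetes.io/hostname, that domain is the node itself. Each node runs exactly one target Pod:

$$
\begin{aligned}
\text{score}(\text{node01}) &= w_{\text{pro}} \times n_{\text{pro}} = 1 \times 1 = 1 \\
\text{score}(\text{node02}) &= w_{\text{test}} \times n_{\text{test}} = 2 \times 1 = 2
\end{aligned}
$$

node02 gets the higher raw score. The raw scores are then normalized to the 0-100 range and combined with the other plugins' scores. When the weight of the env=pro term is raised to 3, the comparison becomes 3 versus 2 and node01 is preferred instead.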

```shell
# Create podaffinity-preferred
[root@master pod-affinity]# kubectl create -f podaffinity-preferred.yaml
pod/podaffinity-preferred created

# Check the Pods: podaffinity-preferred has been scheduled to node02
[root@master pod-affinity]# kubectl get pod -o wide
NAME                    READY   STATUS    RESTARTS   AGE     IP            NODE     NOMINATED NODE   READINESS GATES
podaffinity-preferred   1/1     Running   0          4s      10.244.2.32   node02   <none>           <none>
podaffinity-target1     1/1     Running   0          2m10s   10.244.1.84   node01   <none>           <none>
podaffinity-target2     1/1     Running   0          2m10s   10.244.2.30   node02   <none>           <none>
```

Edit podaffinity-preferred.yaml again and set the weight of the env=pro term to 3. Recreate podaffinity-preferred; it should now be scheduled to node01.

```shell
[root@master pod-affinity]# kubectl delete -f podaffinity-preferred.yaml
pod "podaffinity-preferred" deleted
[root@master pod-affinity]# kubectl create -f podaffinity-preferred.yaml
pod/podaffinity-preferred created
[root@master pod-affinity]# kubectl get pod -o wide
NAME                    READY   STATUS    RESTARTS   AGE     IP            NODE     NOMINATED NODE   READINESS GATES
podaffinity-preferred   1/1     Running   0          5s      10.244.1.85   node01   <none>           <none>
podaffinity-target1     1/1     Running   0          2m55s   10.244.1.84   node01   <none>           <none>
podaffinity-target2     1/1     Running   0          2m55s   10.244.2.30   node02   <none>           <none>
```

Symmetry

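Affinity is in fact symmetric. When scoring nodes for a new Pod, the scheduler also considers the affinity terms of Pods that are already running. If an existing Pod's term matches the new Pod, the nodes in that existing Pod's topology domain score higher. For soft rules this is a scoring preference, not a guarantee. Let's verify it.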

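Write another file, podaffinity-target345.yaml. Its Pods also carry the label env=pro, and they set neither nodeName nor any affinity of their own: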

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: podaffinity-target3
  labels:
    env: pro
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent  # do not pull if the image already exists locally
---
apiVersion: v1
kind: Pod
metadata:
  name: podaffinity-target4
  labels:
    env: pro
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent  # do not pull if the image already exists locally
---
apiVersion: v1
kind: Pod
metadata:
  name: podaffinity-target5
  labels:
    env: pro
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent  # do not pull if the image already exists locally
```

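Create podaffinity-target345. If affinity is symmetric, these Pods should also be scheduled to node01. That is where podaffinity-preferred runs, and it prefers Pods labeled env=pro.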

```shell
# Create podaffinity-target345
[root@master pod-affinity]# kubectl create -f podaffinity-target345.yaml
pod/podaffinity-target3 created
pod/podaffinity-target4 created
pod/podaffinity-target5 created

# Created successfully; all three were scheduled to node01
[root@master pod-affinity]# kubectl get pod -o wide
NAME                    READY   STATUS    RESTARTS   AGE   IP            NODE     NOMINATED NODE   READINESS GATES
podaffinity-preferred   1/1     Running   0          31m   10.244.1.85   node01   <none>           <none>
podaffinity-target1     1/1     Running   0          34m   10.244.1.84   node01   <none>           <none>
podaffinity-target2     1/1     Running   0          34m   10.244.2.30   node02   <none>           <none>
podaffinity-target3     1/1     Running   0          10s   10.244.1.87   node01   <none>           <none>
podaffinity-target4     1/1     Running   0          10s   10.244.1.88   node01   <none>           <none>
podaffinity-target5     1/1     Running   0          10s   10.244.1.89   node01   <none>           <none>
```

Anti-affinity

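Anti-affinity also has many use cases. For example, when an application runs multiple replicas, we want the replicas spread across different Nodes to improve service availability.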

Anti-affinity uses the same fields as affinity; simply replace podAffinity with podAntiAffinity. It also has hard and soft constraints.
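The replica-spreading use case above can be sketched as a Deployment whose Pods repel each other on the same host. This is a minimal sketch under stated assumptions:

- The name `web` and the label `app: web` are hypothetical.
- `replicas: 2` matches the two worker nodes in this article's cluster.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                     # hypothetical name
spec:
  replicas: 2                   # one replica per worker node in a 2-worker cluster
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web                # the anti-affinity rule below matches this same label
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
      affinity:
        podAntiAffinity:        # use Pod anti-affinity
          requiredDuringSchedulingIgnoredDuringExecution:   # hard constraint
          - labelSelector:
              matchLabels:
                app: web
            topologyKey: kubernetes.io/hostname   # at most one app=web Pod per node
```

If replicas exceeds the number of schedulable nodes, the extra replicas stay Pending. This is the same hard-constraint behavior shown in the experiments.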

Delete the Pods created in the tests above. Keep only the first two targets, one on node01 and one on node02:

```shell
[root@master pod-affinity]# kubectl delete -f podaffinity-target345.yaml
pod "podaffinity-target3" deleted
pod "podaffinity-target4" deleted
pod "podaffinity-target5" deleted
[root@master pod-affinity]# kubectl delete -f podaffinity-preferred.yaml
pod "podaffinity-preferred" deleted

# Keep these two targets
[root@master pod-affinity]# kubectl get pod -o wide
NAME                  READY   STATUS    RESTARTS   AGE   IP            NODE     NOMINATED NODE   READINESS GATES
podaffinity-target1   1/1     Running   0          42m   10.244.1.84   node01   <none>           <none>
podaffinity-target2   1/1     Running   0          42m   10.244.2.30   node02   <none>           <none>
```

Hard constraints

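Write podantiaffinity-required.yaml as follows. Anti-affinity works the other way round: this rule forbids scheduling onto any node that runs a Pod labeled env=pro or env=test. That excludes both node01 and node02, and because this is a hard constraint, the Pod should fail to run.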

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: podantiaffinity-required
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent  # do not pull if the image already exists locally
  affinity:
    podAntiAffinity:  # use Pod anti-affinity
      requiredDuringSchedulingIgnoredDuringExecution:  # hard constraint
      - labelSelector:
          matchExpressions:
          - key: env
            operator: In
            values: ["pro","test"]
        topologyKey: kubernetes.io/hostname
```

Create podantiaffinity-required and check whether the Pod runs normally:

```shell
# Create podantiaffinity-required
[root@master pod-affinity]# kubectl create -f podantiaffinity-required.yaml
pod/podantiaffinity-required created

# The Pod is Pending and fails to start
[root@master pod-affinity]# kubectl get pod -o wide
NAME                       READY   STATUS    RESTARTS   AGE   IP            NODE     NOMINATED NODE   READINESS GATES
podaffinity-target1        1/1     Running   0          53m   10.244.1.84   node01   <none>           <none>
podaffinity-target2        1/1     Running   0          53m   10.244.2.30   node02   <none>           <none>
podantiaffinity-required   0/1     Pending   0          6s    <none>        <none>   <none>           <none>

# The familiar error
[root@master pod-affinity]# kubectl describe pod podantiaffinity-required | grep -A 100 Event
Events:
  Type     Reason            Age   From               Message
  ----     ------            ----  ----               -------
  Warning  FailedScheduling  40s   default-scheduler  0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 node(s) didn't match pod anti-affinity rules.
```

In podantiaffinity-required.yaml, change values: ["pro","test"] to values: ["pro"]. The Pod may then not run on any node that runs a Pod labeled env=pro, which means node01. It can therefore only be scheduled to node02. Recreate podantiaffinity-required:

```shell
# Delete the previous podantiaffinity-required
[root@master pod-affinity]# kubectl delete -f podantiaffinity-required.yaml
pod "podantiaffinity-required" deleted

# Create podantiaffinity-required
[root@master pod-affinity]# kubectl create -f podantiaffinity-required.yaml
pod/podantiaffinity-required created

# The Pod runs normally and has been scheduled to node02
[root@master pod-affinity]# kubectl get pod -o wide
NAME                       READY   STATUS    RESTARTS   AGE   IP            NODE     NOMINATED NODE   READINESS GATES
podaffinity-target1        1/1     Running   0          54m   10.244.1.84   node01   <none>           <none>
podaffinity-target2        1/1     Running   0          54m   10.244.2.30   node02   <none>           <none>
podantiaffinity-required   1/1     Running   0          4s    10.244.2.33   node02   <none>           <none>
```

Soft constraints

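The same applies here. If no Node satisfies the anti-affinity preference, the scheduler simply picks another Node with sufficient resources.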
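In a Deployment, this soft variant spreads replicas when it can, but still places them when it cannot. A minimal sketch of the Pod template's affinity section, assuming the hypothetical label `app: web`:

```yaml
# Hypothetical fragment of a Deployment's spec.template.spec
affinity:
  podAntiAffinity:          # use Pod anti-affinity
    preferredDuringSchedulingIgnoredDuringExecution:   # soft constraint
    - weight: 100           # strongest preference (range 1-100)
      podAffinityTerm:
        labelSelector:
          matchLabels:
            app: web        # hypothetical label shared by all replicas
        topologyKey: kubernetes.io/hostname
```

Consider replicas: 3 on two worker nodes. The first two replicas should land on different nodes. The third cannot avoid a node that already runs a replica, but unlike the hard rule it is still scheduled instead of staying Pending.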

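Write podantiaffinity-preferred.yaml as follows. It asks the scheduler to avoid both node01 and node02, but there are no other schedulable nodes. Because this is a soft constraint, the Pod will still be scheduled onto one of the two.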

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: podantiaffinity-preferred
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent  # do not pull if the image already exists locally
  affinity:
    podAntiAffinity:  # use Pod anti-affinity
      preferredDuringSchedulingIgnoredDuringExecution:  # soft constraint
      - podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: env
              operator: In
              values: ["pro","test"]
          topologyKey: kubernetes.io/hostname
        weight: 1
```

Create podantiaffinity-preferred and check whether the Pod runs normally:

```shell
# Create podantiaffinity-preferred
[root@master pod-affinity]# kubectl create -f podantiaffinity-preferred.yaml
pod/podantiaffinity-preferred created

# The Pod runs normally
[root@master pod-affinity]# kubectl get pod -o wide
NAME                        READY   STATUS    RESTARTS   AGE   IP            NODE     NOMINATED NODE   READINESS GATES
podaffinity-target1         1/1     Running   0          58m   10.244.1.84   node01   <none>           <none>
podaffinity-target2         1/1     Running   0          58m   10.244.2.30   node02   <none>           <none>
podantiaffinity-preferred   1/1     Running   0          7s    10.244.1.90   node01   <none>           <none>
```

Notes

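- Pod affinity does not allow an empty topologyKey.

- Hard (required) Pod anti-affinity does not allow an empty topologyKey.

- For soft (preferred) Pod anti-affinity, older Kubernetes versions treated an empty topologyKey as the combination of kubernetes.io/hostname, failure-domain.beta.kubernetes.io/zone and failure-domain.beta.kubernetes.io/region. Current Kubernetes documentation states that an empty topologyKey is not allowed for either required or preferred rules. The failure-domain.beta.* labels are also deprecated in favor of topology.kubernetes.io/zone and topology.kubernetes.io/region.

- If the LimitPodHardAntiAffinityTopology admission controller is enabled, the topologyKey of hard (required) anti-affinity rules is restricted to kubernetes.io/hostname. To use a custom topologyKey, modify or disable that admission controller.

Outside these cases, any valid label key can be used as the topologyKey.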
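Admission plugins are switched on and off through kube-apiserver's --enable-admission-plugins and --disable-admission-plugins flags. The sketch below assumes a kubeadm-installed cluster, where kube-apiserver runs as a static Pod; other installers keep these flags elsewhere. It shows how the controller mentioned above could be enabled.

```yaml
# Excerpt of /etc/kubernetes/manifests/kube-apiserver.yaml (kubeadm layout assumed; other fields omitted)
spec:
  containers:
  - name: kube-apiserver
    command:
    - kube-apiserver
    # NodeRestriction is kubeadm's default; LimitPodHardAntiAffinityTopology is appended here
    - --enable-admission-plugins=NodeRestriction,LimitPodHardAntiAffinityTopology
```

The kubelet recreates the static Pod after the manifest changes. To turn the controller off again, remove it from the list.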

That wraps up Pod affinity and anti-affinity scheduling. Next time we will cover taints and tolerations.

Follow along so you don't miss the next post!
