Building and Deploying a Highly Available K8S Cluster


1 Environment Preparation

1.1 Cluster Topology Diagram

1.2 System Requirements

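- One or more machines running CentOS 7.x x86_64

- Hardware: 2 GB or more RAM, 2 or more CPUs, 20 GB or more disk (note that the initialization script in 2.1 checks for at least 30 GiB free under /opt and 20 GiB free under /var/lib)

- Full network connectivity between all machines in the cluster

- Internet access, which is needed to pull images

- Swap must be disabled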
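The requirements above can be checked on each machine before you start. This is a minimal sketch, not part of the original guide: check_node_resources and current_node_check are hypothetical helper names, and the 2048 MiB default mirrors the "2 GB" figure above (a nominal 2 GB VM may report slightly less usable memory, in which case lower MIN_MEM_MIB). It reads Linux's /proc/meminfo and uses nproc.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check_node_resources CPUS MEM_MIB SWAP_KIB
# Prints one line per unmet requirement; returns 0 only if all checks pass.
MIN_CPUS=${MIN_CPUS:-2}
MIN_MEM_MIB=${MIN_MEM_MIB:-2048}

check_node_resources() {
  local cpus=$1 mem_mib=$2 swap_kib=$3
  local status=0
  if (( cpus < MIN_CPUS )); then
    echo "need at least ${MIN_CPUS} CPUs, found ${cpus}"
    status=1
  fi
  if (( mem_mib < MIN_MEM_MIB )); then
    echo "need at least ${MIN_MEM_MIB} MiB RAM, found ${mem_mib}"
    status=1
  fi
  if (( swap_kib > 0 )); then
    echo "swap is enabled (${swap_kib} KiB); run swapoff -a"
    status=1
  fi
  return "$status"
}

# Read the real values of the running machine and check them.
current_node_check() {
  local cpus mem_kib swap_kib
  cpus=$(nproc)
  mem_kib=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  swap_kib=$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)
  check_node_resources "$cpus" "$((mem_kib / 1024))" "$swap_kib"
}
```

Run current_node_check on every machine; it stays silent and returns 0 when the node qualifies. Keeping the comparison in a pure function makes the thresholds easy to adjust without touching the /proc parsing.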

1.3 Environment Plan

| Hostname | OS | Spec | IP | Services |
|---|---|---|---|---|
| master1 | CentOS | 2C4G | 172.16.0.6 | kubelet, kubeadm, kubectl, etcd |
| master2 | CentOS | 2C4G | 172.16.0.7 | kubelet, kubeadm, kubectl, etcd |
| master3 | CentOS | 2C4G | 172.16.0.8 | kubelet, kubeadm, kubectl, etcd |
| node1 | CentOS | 2C4G | | kubelet, kubeadm, kubectl |
| node2 | CentOS | 2C4G | | kubelet, kubeadm, kubectl |
| vip | | | 172.16.0.10 | Virtual IP |

1.4 Deployment Paths

Single-master deployment

Steps: 2 ---> 5.2 ---> 5.3 ---> 5.4 ---> 5.5 ---> 6

Highly available masters

Steps: 2 ---> 3 ---> 5.1.1 (or 5.2) ---> 5.3 ---> 5.4 ---> 5.5 ---> 6

Highly available masters (separate etcd cluster)

Steps: 2 ---> 3 ---> 4 ---> 5.1.2 ---> 5.3 ---> 5.4 ---> 5.5 ---> 6

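2 System Initialization

2.1 Initialization by Script

Install Docker, kubeadm, kubectl and kubelet on every server. You can also do the whole system initialization with the script below. Every k8s node must be initialized either way. The script is as follows: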

#!/bin/bash
k8s_version=1.18.0 # Kubernetes version to install
DOCKER_version=docker-ce

set -o errexit
set -o nounset
set -o pipefail

function preflight() {
  echo "Step.1 system check"
  check::root
  check::disk '/opt' 30
  check::disk '/var/lib' 20
}

function check::root() {
  if [ "root" != "$(whoami)" ]; then
    echo "only root can execute this script"
    exit 1
  fi
  echo "root: yes"
}

function check::disk() {
  local -r path=$1
  local -r size=$2
  # Available space (4th column of df, in GiB), digits only
  disk_avail=$(df -BG "$path" | tail -1 | awk '{print $4}' | grep -oP '\d+')
  if ((disk_avail < size)); then
    echo "available disk space for $path needs to be greater than $size GiB"
    exit 1
  fi
  echo "available disk space($path): $disk_avail GiB"
}

function system_init() {
  echo "Step.2 stop firewall and swap"
  stop_firewalld
  stop_swap
}

function stop_firewalld() {
  echo "stop firewalld [doing]"
  # Put SELinux into permissive mode now and after a reboot
  setenforce 0 >/dev/null 2>&1 || :
  sed -i 's/SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config
  systemctl stop firewalld >/dev/null 2>&1 || :
  systemctl disable firewalld >/dev/null 2>&1 || :
  echo "stop firewalld [ok]"
}

function stop_swap() {
  echo "stop swap [doing]"
  # Comment out swap entries so swap stays off after a reboot, then turn it off now
  sed -ri 's/.*swap.*/#&/' /etc/fstab
  swapoff -a
  echo "stop swap [ok]"
}

function iptables_check() {
  echo "Step.3 iptables check [doing]"
  # The net.bridge.* keys only exist once the br_netfilter module is loaded
  modprobe br_netfilter >/dev/null 2>&1 || :
  cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
  sysctl --system >/dev/null 2>&1 || :
  echo "Step.3 iptables check [ok]"
}

function ensure_docker() {
  echo "Step.4 ensure docker is ok"
  if ! [ -x "$(command -v docker)" ]; then
    echo "command docker not found"
    install_docker
  fi
  if ! systemctl is-active --quiet docker; then
    echo "docker status is not running"
    install_docker
  fi
}

function install_docker() {
  echo "install docker [doing]"
  wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo >/dev/null 2>&1 || :
  yum install -y "${DOCKER_version}" >/dev/null 2>&1 || :
  echo "docker installed"
  systemctl restart docker
  echo "docker started"
  systemctl enable docker >/dev/null 2>&1 || :
  # Configure the registry mirror, then restart docker to apply it
  mkdir -p /etc/docker
  cat << EOF > /etc/docker/daemon.json
{
  "registry-mirrors": ["https://x1362v6d.mirror.aliyuncs.com"]
}
EOF
  systemctl daemon-reload
  systemctl restart docker
  echo "install docker [ok]"
}

function install_kubelet() {
  echo "Step.5 install kubelet-${k8s_version} [doing]"
  cat << EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
  # To install the latest versions instead: yum install -y kubelet kubeadm kubectl
  yum install -y kubelet-${k8s_version} kubeadm-${k8s_version} kubectl-${k8s_version} >/dev/null 2>&1 || :
  systemctl enable kubelet >/dev/null 2>&1 || :
  echo "Step.5 install kubelet-${k8s_version} [ok]"
}

preflight
system_init
iptables_check
ensure_docker
install_kubelet

2.2 Manual Initialization

2.2.1 Disabling the Firewall

Set SELinux to permissive mode and disable firewalld:

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config

systemctl stop firewalld

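systemctl disable firewalld

2.2.2 Disabling Swap

Disable swap permanently (this edit only takes effect after a reboot):

sed -ri 's/.*swap.*/#&/' /etc/fstab

Disable swap for the current session only (no longer in effect after a reboot):

swapoff -a

2.2.3 Adjusting iptables Settings

Set the following kernel parameters so that bridged traffic is passed to iptables; otherwise requests routed through iptables may not be handled correctly. Load the br_netfilter module first, because the net.bridge.* keys only exist once it is loaded:

modprobe br_netfilter

cat <<EOF > /etc/sysctl.d/k8s.conf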

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system # apply the settings

2.2.4 Installing Docker

Install docker-ce:

wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce

systemctl start docker

systemctl enable docker

systemctl status docker

docker version

Configure a registry mirror (accelerator):

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://x1362v6d.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

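sudo systemctl restart docker

2.2.5 Installing kubeadm

Run this on all nodes except dedicated haproxy machines. Point the Kubernetes yum repository at the Aliyun mirror, which makes installation easier from networks in mainland China: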

cat << EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Install the latest kubelet, kubeadm and kubectl:

yum install -y kubelet kubeadm kubectl

systemctl enable kubelet

Or install a specific version of kubelet, kubeadm and kubectl:

yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0

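systemctl enable kubelet

3 Installing the High-Availability Components

Note: haproxy and keepalived are only needed for a highly available cluster; skip them otherwise.

- Install HAProxy and keepalived with yum on all master nodes (master1, master2 and master3):

yum -y install keepalived haproxy

3.1 Configuring haproxy

On all master nodes:

Create the directory:

mkdir -pv /etc/haproxy

Write the haproxy configuration: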

cat << EOF > /etc/haproxy/haproxy.cfg

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

listen stats

bind *:8006

mode http

stats enable

stats hide-version

stats uri /stats

stats refresh 30s

stats realm Haproxy\ Statistics

stats auth admin:admin

frontend k8s-master

bind 0.0.0.0:16443

bind 127.0.0.1:16443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

# Adjust the lines below to your environment (server name and IP address)

server 172.16.0.6 172.16.0.6:6443 check

server 172.16.0.7 172.16.0.7:6443 check

server 172.16.0.8 172.16.0.8:6443 check

EOF

3.2 Configuring keepalived
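The three per-master keepalived files that follow differ only in router_id, mcast_src_ip, state and priority, so they can also be produced from one template. This is a minimal sketch under stated assumptions: gen_keepalived_conf is a hypothetical helper, the interface defaults to eth0, and the shared values (VIP 172.16.0.10, virtual_router_id 100, auth_pass) are taken from this guide. VRRP only treats the masters as one group when they share the same virtual_router_id and authentication settings, which is why VRID is a single variable here. For brevity it omits master1's nopreempt line.

```bash
#!/usr/bin/env bash
# Hypothetical helper: gen_keepalived_conf NODE_IP STATE PRIORITY [IFACE]
# Values shared by all masters (must be identical on every node):
VIP=172.16.0.10
VRID=100
AUTH_PASS=K8SHA_KA_AUTH

gen_keepalived_conf() {
  local node_ip=$1 state=$2 priority=$3 iface=${4:-eth0}
  cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id ${node_ip}
    script_user root
    enable_script_security
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 2
    weight -5
    fall 3
    rise 2
}
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    mcast_src_ip ${node_ip}
    virtual_router_id ${VRID}
    priority ${priority}
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass ${AUTH_PASS}
    }
    virtual_ipaddress {
        ${VIP}
    }
    track_script {
        chk_apiserver
    }
}
EOF
}
```

For example, on master2: gen_keepalived_conf 172.16.0.7 BACKUP 99 > /etc/keepalived/keepalived.conf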

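Keepalived configuration on master1:

cat << EOF > /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    ## String identifying this node, usually the hostname
    router_id 172.16.0.6
    script_user root
    enable_script_security
}
## Health-check script
## keepalived runs the script periodically and adjusts the vrrp_instance priority according to the result. If the script exits 0 and weight is greater than 0, the priority is increased by weight. If the script exits non-zero and weight is less than 0, the priority is decreased by the absolute value of weight. In all other cases the priority stays at the configured priority value.
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    # Run the check every 2 seconds
    interval 2
    # When the check fails, lower this node's priority by 5
    weight -5
    fall 3
    rise 2
}
## Define the virtual router; VI_1 is an identifier of your choosing
vrrp_instance VI_1 {
    ## MASTER on the primary node, BACKUP on the backup nodes
    state MASTER
    ## Interface that carries the VIP: the same interface as this host's IP address
    interface eth0
    # This host's IP address
    mcast_src_ip 172.16.0.6
    # Virtual router ID; must be the same on every node of this VRRP group
    virtual_router_id 100
    ## Node priority (0-254); MASTER must be higher than BACKUP
    priority 100
    ## nopreempt on the higher-priority node stops it from taking the VIP back after it recovers
    ## (keepalived only honors nopreempt when the initial state is BACKUP)
    nopreempt
    ## VRRP advertisement interval; must be identical on all nodes (default 1s)
    advert_int 2
    ## Authentication settings; must be identical on all nodes
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    ## Virtual IP pool; must be identical on all nodes, normally just the VIP
    virtual_ipaddress {
        ## Virtual IP; several may be listed, normally just the VIP
        172.16.0.10
    }
    track_script {
        chk_apiserver
    }
}
EOF

Keepalived configuration on master2: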

cat << EOF > /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id 172.16.0.7

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 2

weight -5

fall 3

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

mcast_src_ip 172.16.0.7

virtual_router_id 100

priority 99

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

172.16.0.10

}

track_script {

chk_apiserver

}

}

EOF

Keepalived configuration on master3:

cat << EOF > /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id 172.16.0.8

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 2

weight -5

fall 3

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

mcast_src_ip 172.16.0.8

virtual_router_id 100

priority 98

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

172.16.0.10

}

track_script {

chk_apiserver

}

}

EOF

3.3 Configuring the Monitoring Script

Create the monitoring script check_apiserver.sh and make it executable:

vim /etc/keepalived/check_apiserver.sh

#!/bin/bash

err=0

for k in $(seq 1 5)

do

check_code=$(pgrep kube-apiserver)

if [[ $check_code == "" ]]; then

err=$(expr $err + 1)

sleep 5

continue

else

err=0

break

fi

done

if [[ $err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

chmod +x /etc/keepalived/check_apiserver.sh

3.4 Starting the HA Components

Start haproxy and keepalived on the master nodes (master1, master2 and master3):

systemctl daemon-reload

systemctl enable --now haproxy

systemctl enable --now keepalived

Test whether the VIP (virtual IP) is working:

ping 172.16.0.10 -c 4

4 Installing High-Availability Storage (etcd)

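If you build a highly available etcd cluster, deploy the masters with 5.1.2 (Deploying the Master Nodes with an External etcd Cluster);

if you do not, deploy the k8s masters with 5.1.1 or 5.2.

Related links

cfssl downloads: https://github.com/cloudflare/cfssl/releases

cfssl Git repository: https://github.com/cloudflare/cfssl

4.1 Installing etcd

Install etcd (on master1, master2 and master3):

yum -y install etcd

4.2 Installing and Configuring cfssl

This step only needs to be run on master1.

4.2.1 Installing cfssl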

wget -O /bin/cfssl https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget -O /bin/cfssljson https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget -O /bin/cfssl-certinfo https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x /bin/cfssl*

4.2.2 Configuring the Certificates

Create etcd-csr.json:

cat << EOF > /root/etcd-csr.json

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"172.16.0.6",

"172.16.0.7",

"172.16.0.8"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "GD",

"L": "guangzhou",

"O": "etcd",

"OU": "Etcd Security"

}

]

}

EOF

Create ca-config.json:

cat << EOF > /root/ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"etcd": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

Create ca-csr.json:

cat << EOF > /root/ca-csr.json

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "GD",

"L": "guangzhou",

"O": "etcd",

"OU": "Etcd Security"

}

]

}

EOF

4.2.3 Generating the CA Certificate

Create the CA certificate and private key:

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

Generate the etcd certificate and private key:

cfssl gencert -ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=etcd etcd-csr.json | cfssljson -bare etcd

Check the generated files:

[root@VM-0-6-centos ~]# ls *.pem

ca-key.pem ca.pem etcd-key.pem etcd.pem

Copy the certificates into place:

mkdir -pv /etc/etcd/ssl

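cp -r ./{ca-key,ca,etcd-key,etcd}.pem /etc/etcd/ssl/

Copy the certificates to the other nodes:

ssh root@172.16.0.7 "mkdir -p /etc/etcd/ssl"

scp /etc/etcd/ssl/*.pem root@172.16.0.7:/etc/etcd/ssl/

ssh root@172.16.0.8 "mkdir -p /etc/etcd/ssl"

scp /etc/etcd/ssl/*.pem root@172.16.0.8:/etc/etcd/ssl/

4.3 Configuring etcd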

Edit etcd.conf on each etcd node.

Configuration on master1:

cat << EOF > /etc/etcd/etcd.conf

ETCD_DATA_DIR="/data/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.16.0.6:2380"

ETCD_LISTEN_CLIENT_URLS="https://127.0.0.1:2379,https://172.16.0.6:2379"

ETCD_NAME="VM-0-6-centos"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.0.6:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://127.0.0.1:2379,https://172.16.0.6:2379"

ETCD_INITIAL_CLUSTER="VM-0-6-centos=https://172.16.0.6:2380,VM-0-7-centos=https://172.16.0.7:2380,VM-0-8-centos=https://172.16.0.8:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

EOF

Configuration on master2:

cat << EOF > /etc/etcd/etcd.conf

ETCD_DATA_DIR="/data/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.16.0.7:2380"

ETCD_LISTEN_CLIENT_URLS="https://127.0.0.1:2379,https://172.16.0.7:2379"

ETCD_NAME="VM-0-7-centos"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.0.7:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://127.0.0.1:2379,https://172.16.0.7:2379"

ETCD_INITIAL_CLUSTER="VM-0-6-centos=https://172.16.0.6:2380,VM-0-7-centos=https://172.16.0.7:2380,VM-0-8-centos=https://172.16.0.8:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

EOF

Configuration on master3:

cat << EOF > /etc/etcd/etcd.conf

ETCD_DATA_DIR="/data/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.16.0.8:2380"

ETCD_LISTEN_CLIENT_URLS="https://127.0.0.1:2379,https://172.16.0.8:2379"

ETCD_NAME="VM-0-8-centos"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.0.8:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://127.0.0.1:2379,https://172.16.0.8:2379"

ETCD_INITIAL_CLUSTER="VM-0-6-centos=https://172.16.0.6:2380,VM-0-7-centos=https://172.16.0.7:2380,VM-0-8-centos=https://172.16.0.8:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

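EOF

- ETCD_NAME: node name

- ETCD_DATA_DIR: data directory

- ETCD_LISTEN_PEER_URLS: listen address for communication between cluster members

- ETCD_LISTEN_CLIENT_URLS: listen address for client access

- ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster

- ETCD_ADVERTISE_CLIENT_URLS: address advertised to clients

- ETCD_INITIAL_CLUSTER: addresses of all cluster members

- ETCD_INITIAL_CLUSTER_TOKEN: cluster token

- ETCD_INITIAL_CLUSTER_STATE: state when joining; new for a new cluster, existing to join an existing cluster (not set above, so etcd uses its default, new)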
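The three etcd.conf files differ only in ETCD_NAME and the node IP, so they can be generated from one function. This is a minimal sketch that assumes the node names and IPs used above. gen_etcd_conf, initial_cluster and ETCD_NODES are hypothetical names. It also sets ETCD_INITIAL_CLUSTER_STATE="new" explicitly, which matches etcd's default for a brand-new cluster.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: generate etcd.conf for one member of the 3-node cluster above.
# name=IP pairs taken from the master1/master2/master3 configurations.
ETCD_NODES=("VM-0-6-centos=172.16.0.6" "VM-0-7-centos=172.16.0.7" "VM-0-8-centos=172.16.0.8")

# Build the ETCD_INITIAL_CLUSTER value: name=https://IP:2380, comma-separated.
initial_cluster() {
  local entry out=""
  for entry in "${ETCD_NODES[@]}"; do
    out+="${out:+,}${entry%%=*}=https://${entry#*=}:2380"
  done
  echo "$out"
}

# gen_etcd_conf NAME IP: print etcd.conf for member NAME listening on IP.
gen_etcd_conf() {
  local name=$1 ip=$2
  cat <<EOF
ETCD_DATA_DIR="/data/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://127.0.0.1:2379,https://${ip}:2379"
ETCD_NAME="${name}"
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://127.0.0.1:2379,https://${ip}:2379"
ETCD_INITIAL_CLUSTER="$(initial_cluster)"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
EOF
}
```

For example, on master2: gen_etcd_conf VM-0-7-centos 172.16.0.7 > /etc/etcd/etcd.conf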

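4.4 Starting etcd

4.4.1 Creating Directories and Setting Permissions

Run the following on all three etcd nodes. The etcd service installed by yum runs as the etcd user, so give that user ownership of the data and certificate files instead of making them world-writable with chmod 777:

mkdir -p /data/etcd/default.etcd

chown -R etcd:etcd /etc/etcd/ssl /data/etcd

chmod 600 /etc/etcd/ssl/*-key.pem

4.4.2 Starting etcd

Start etcd on all three nodes at about the same time. The first member waits for its peers before it reports ready, so its systemctl start may appear to hang until the others are up.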

systemctl start etcd

systemctl enable etcd

4.4.3 Verifying the Cluster

Verify the cluster with the following command:

etcdctl --endpoints "https://172.16.0.6:2379,https://172.16.0.7:2379,https://172.16.0.8:2379" --ca-file=/etc/etcd/ssl/ca.pem --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem cluster-health

If every member is reported as healthy, the etcd cluster has been set up successfully.
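The command above uses the etcdctl v2 API flags (--ca-file, --cert-file, --key-file and cluster-health), which is the default API of etcdctl in etcd 3.3 and earlier. If your etcdctl uses the v3 API (the default from etcd 3.4), the equivalent check is sketched below. etcd_endpoints and etcd_health_v3 are hypothetical helper names; the certificate paths are the ones from section 4.2.

```bash
#!/usr/bin/env bash
# Hypothetical helper: etcd_endpoints IP... -> "https://IP:2379,https://IP:2379,..."
etcd_endpoints() {
  local ip out=""
  for ip in "$@"; do
    out+="${out:+,}https://${ip}:2379"
  done
  echo "$out"
}

# v3 API health check against the three members built in section 4.
etcd_health_v3() {
  ETCDCTL_API=3 etcdctl \
    --endpoints "$(etcd_endpoints 172.16.0.6 172.16.0.7 172.16.0.8)" \
    --cacert=/etc/etcd/ssl/ca.pem \
    --cert=/etc/etcd/ssl/etcd.pem \
    --key=/etc/etcd/ssl/etcd-key.pem \
    endpoint health
}
```

Run etcd_health_v3 on any etcd node; each endpoint should be reported as healthy.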

5 Deploying k8s

5.1 Deployment with a YAML File

5.1.1 Deploying the Master Nodes (Local etcd)

On master1, create kubeadm-config.yaml with the following content:

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.16.0.6 # IP of this host
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: 172.16.0.6 # node name of this host (this guide uses its IP)
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "172.16.0.10:16443" # virtual IP (VIP) and haproxy port
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # image registry mirror
kind: ClusterConfiguration
kubernetesVersion: v1.18.0 # k8s version; must match the installed kubeadm/kubelet
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: "10.96.0.0/12"
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs

Initialize k8s:

kubeadm init --config=kubeadm-config.yaml --upload-certs

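If the initialization fails, reset and then initialize again:

kubeadm reset -f; ipvsadm --clear; rm -rf ~/.kube

After a successful initialization, kubeadm prints join commands that include the token. Save them; the other nodes use them to join: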

You can now join any number of the control-plane node running the following command on each as root:

# Command for master nodes: copy it exactly from your own init output; do not change the IP

kubeadm join 172.16.0.10:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:6fd8ca0b4faf647ad068f1580f3b0a0e785335b8d54cf85dfebe2701a56e719d \

--control-plane --certificate-key e0d4acd9b25b94f5b45bcb486e0c2209c8b09c4de3d3e60cecc8f561a52bf9be

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

# Command for worker nodes: copy it exactly from your own init output without modification

kubeadm join 172.16.0.10:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:6fd8ca0b4faf647ad068f1580f3b0a0e785335b8d54cf85dfebe2701a56e719d

Then, as prompted, run the following on master1:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

5.1.2 Deploying the Master Nodes (External etcd Cluster)

Copy the etcd certificates into /etc/kubernetes/pki/ (run this in the directory where they were generated in 4.2):

mkdir -p /etc/kubernetes/pki/

cp -r {ca,etcd,etcd-key}.pem /etc/kubernetes/pki/

On master1, create kubeadm-config.yaml with the following content:

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.16.0.6 # IP of this host
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: 172.16.0.6 # node name of this host (this guide uses its IP)
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "172.16.0.10:16443" # virtual IP (VIP) and haproxy port
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  # Use the external etcd cluster from section 4 (only one etcd key is allowed, so there is no local block)
  external:
    endpoints:
    - https://172.16.0.6:2379
    - https://172.16.0.7:2379
    - https://172.16.0.8:2379
    caFile: /etc/kubernetes/pki/ca.pem
    certFile: /etc/kubernetes/pki/etcd.pem
    keyFile: /etc/kubernetes/pki/etcd-key.pem
imageRepository: registry.aliyuncs.com/google_containers # image registry mirror
kind: ClusterConfiguration
kubernetesVersion: v1.18.0 # k8s version
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: "10.96.0.0/12"
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs

Initialize k8s:

kubeadm init --config=kubeadm-config.yaml --upload-certs

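If the initialization fails, reset and then initialize again:

kubeadm reset -f; ipvsadm --clear; rm -rf ~/.kube

After a successful initialization, kubeadm prints join commands that include the token. Save them; the other nodes use them to join: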

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 172.16.0.10:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:14c22ebab20872f4d8ad8a2c01412ee32c06a8c95e15de698a64ee541afe9601 \

--control-plane --certificate-key ba75ea8428681d4cdb64f8bf3da5cac8b39922cd336e96bd2b5de7d2ba4a82c7

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.16.0.10:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:14c22ebab20872f4d8ad8a2c01412ee32c06a8c95e15de698a64ee541afe9601

Then, as prompted, run the following on master1:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

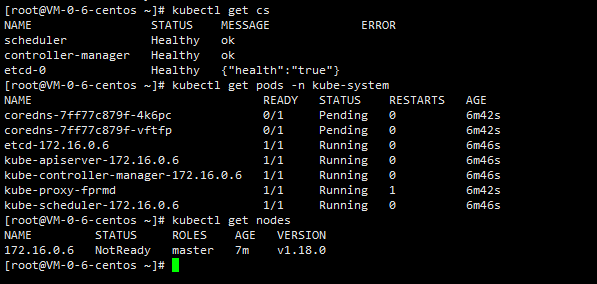

sudo chown $(id -u):$(id -g) $HOME/.kube/config

5.1.3 Checking the Status

Check the cluster health on the master nodes:

# Check the health of the control-plane components

kubectl get cs

# List the pods in kube-system

kubectl get pods -n kube-system

# List the nodes

kubectl get nodes
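Right after initialization, nodes usually report NotReady until the network plugin from 5.5 is installed. The following is a minimal polling sketch, not from the original guide: not_ready_nodes and wait_for_nodes_ready are hypothetical names, and the 30 × 10 s limit is an arbitrary assumption. It parses the default columns of kubectl get nodes --no-headers (NAME STATUS ROLES AGE VERSION) and treats any STATUS other than exactly Ready, including Ready,SchedulingDisabled, as not ready.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: poll until every node is Ready.

# Read `kubectl get nodes --no-headers` output on stdin and print the NAME of
# every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Poll every 10 seconds, up to 30 times (about 5 minutes).
wait_for_nodes_ready() {
  local attempt pending
  for attempt in $(seq 1 30); do
    pending=$(kubectl get nodes --no-headers | not_ready_nodes)
    if [[ -z "$pending" ]]; then
      echo "all nodes Ready"
      return 0
    fi
    echo "attempt ${attempt}: waiting for ${pending//$'\n'/ }"
    sleep 10
  done
  echo "timed out waiting for nodes to become Ready"
  return 1
}
```

Run wait_for_nodes_ready after installing the network plugin and joining the nodes; it returns 0 once every node is Ready.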

5.2 Deployment from the Command Line

- On master1, master2 and master3, pre-pull the images:

kubeadm config images pull --kubernetes-version=v1.18.0 --image-repository=registry.aliyuncs.com/google_containers

- On master1, run:

kubeadm init \

--apiserver-advertise-address=172.16.0.6 \

--image-repository registry.aliyuncs.com/google_containers \

--control-plane-endpoint=172.16.0.10:16443 \

--kubernetes-version v1.18.0 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16 \

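--upload-certs

5.3 Joining the Master Nodes

Join master2 and master3 to the cluster with the control-plane join command printed by kubeadm init on master1: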

kubeadm join 172.16.0.10:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:6fd8ca0b4faf647ad068f1580f3b0a0e785335b8d54cf85dfebe2701a56e719d \

--control-plane --certificate-key e0d4acd9b25b94f5b45bcb486e0c2209c8b09c4de3d3e60cecc8f561a52bf9be

5.4 Joining the Worker Nodes

Join the worker nodes to the cluster:

kubeadm join 172.16.0.10:16443 --token abcdef.0123456789abcdef \

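--discovery-token-ca-cert-hash sha256:6fd8ca0b4faf647ad068f1580f3b0a0e785335b8d54cf85dfebe2701a56e719d

5.5 Installing the Cluster Network

Kubernetes supports several network plugins, such as flannel, calico and canal. Any one of them will do; this guide uses flannel.

Fetch the flannel manifest and apply it from a master node. The download may fail; if it does, download kube-flannel.yml to the local machine first and apply the local copy. You can also install calico instead, which the author recommends.

# If this URL is unreachable, try changing the DNS server to 8.8.8.8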

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
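flannel's manifest carries its own pod network in the net-conf.json section of its ConfigMap (10.244.0.0/16 in the default manifest), and it has to match the podSubnet / --pod-network-cidr used when the cluster was initialized. The following is a minimal sketch for checking a downloaded copy before applying it. flannel_network, check_flannel_subnet and POD_SUBNET are hypothetical names, and the grep assumes the "Network": "..." line format of the stock manifest.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: compare flannel's Network with the cluster's podSubnet.
POD_SUBNET="10.244.0.0/16"   # podSubnet / --pod-network-cidr used in this guide

# flannel_network FILE: print the first "Network" value found in FILE.
flannel_network() {
  grep -o '"Network": *"[^"]*"' "$1" | head -n1 | sed 's/.*"\([^"]*\)"$/\1/'
}

# check_flannel_subnet FILE: return 0 if FILE's Network equals POD_SUBNET.
check_flannel_subnet() {
  local net
  net=$(flannel_network "$1")
  if [[ "$net" != "$POD_SUBNET" ]]; then
    echo "flannel Network ${net:-<none>} does not match podSubnet ${POD_SUBNET}"
    return 1
  fi
  echo "flannel Network matches podSubnet (${net})"
}
```

For example: wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml && check_flannel_subnet kube-flannel.yml && kubectl apply -f kube-flannel.yml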

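6 Deploying ingress-nginx

6.1 Related Links

ingress-nginx deployment guide: ingress-nginx/index.md at main · kubernetes/ingress-nginx · GitHub

ingress-nginx source code: GitHub - kubernetes/ingress-nginx: NGINX Ingress Controller for Kubernetes

6.2 Deploying ingress-nginx

Download the YAML manifest from the official ingress-nginx repository (the v1.x controllers require Kubernetes 1.19 or later, so this guide uses v0.47.0 with Kubernetes 1.18):

# Newest manifest at the time of writing: https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.0.2/deploy/static/provider/cloud/deploy.yaml

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.47.0/deploy/static/provider/cloud/deploy.yaml

Edit the corresponding parts of the file as follows (the leading numbers are line numbers in the downloaded deploy.yaml):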

# Comment out these two lines

276 spec:

277 #type: LoadBalancer

278 #externalTrafficPolicy: Local

# Change the Deployment to a DaemonSet so that every node runs one ingress-nginx controller

295 #kind: Deployment

295 kind: DaemonSet

# Change dnsPolicy: ClusterFirst to dnsPolicy: ClusterFirstWithHostNet

# and add the line hostNetwork: true

321 #dnsPolicy: ClusterFirst

321 hostNetwork: true

322 dnsPolicy: ClusterFirstWithHostNet

# Replace the controller image with the Aliyun mirror of the controller image

325 #image: k8s.gcr.io/ingress-nginx/controller:v0.46.0@sha256:52f0058bed0a17ab0fb35628ba97e8d52b5d32299fbc03cc0f6c7b9ff036b61a

325 image: registry.aliyuncs.com/kubeadm-ha/ingress-nginx_controller:v0.47.0

# Switch the image source to the Aliyun mirror in mainland China

591 #image: docker.io/jettech/kube-webhook-certgen:v1.5.1

591 image: registry.aliyuncs.com/kubeadm-ha/jettech_kube-webhook-certgen:v1.5.1

# Switch the image source to the Aliyun mirror in mainland China

640 #image: docker.io/jettech/kube-webhook-certgen:v1.5.1

640 image: registry.aliyuncs.com/kubeadm-ha/jettech_kube-webhook-certgen:v1.5.1

Deploy ingress-nginx:

kubectl apply -f deploy.yaml

7 Problems Encountered During Setup

7.1 Troubleshooting: Checking Certificate Validity

openssl x509 -noout -text -in /etc/kubernetes/pki/apiserver-kubelet-client.crt | grep Not

Not Before: Mar 29 16:28:16 2021 GMT

Not After : Mar 29 16:32:53 2022 GMT # expiry date

7.2 Expired Tokens

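Adding a node with kubeadm join requires two parameters: --token and --discovery-token-ca-cert-hash. A token is normally valid for 24 hours (the ttl in bootstrapTokens), so to add a node after it has expired you must create a new token.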
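The hash pipeline in the commands that follow can be wrapped in a small function that prints the value in the sha256:<hex> form that kubeadm join expects. This is a minimal sketch: ca_cert_hash is a hypothetical helper name, and the openssl rsa step assumes an RSA CA key, which is what kubeadm generates by default.

```bash
#!/usr/bin/env bash
# Hypothetical helper: ca_cert_hash [CA_CERT]
# Prints the value for --discovery-token-ca-cert-hash: the SHA-256 digest of the
# CA certificate's public key in DER (SubjectPublicKeyInfo) form.
ca_cert_hash() {
  local ca_cert=${1:-/etc/kubernetes/pki/ca.crt}
  local hex
  # Same pipeline as below; `openssl rsa` assumes an RSA CA key.
  hex=$(openssl x509 -pubkey -noout -in "$ca_cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')
  echo "sha256:${hex}"
}
```

For example, on a master: kubeadm join 172.16.0.10:16443 --token <new-token> --discovery-token-ca-cert-hash "$(ca_cert_hash)", where <new-token> is the output of kubeadm token create.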

# Create a new token; afterwards, kubeadm token list shows all tokens

$ kubeadm token create

s058gw.c5x6eeze28****

# Print the sha256 hash of the CA certificate's public key

$ openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

9592464b295699696ce35e5d1dd155580ee29d9bd0884b*****

# Run join on the new node

$ kubeadm join api-serverip:port --token s058gw.c5x6eeze28**** --discovery-token-ca-cert-hash sha256:9592464b295699696ce35e5d1dd155580ee29d9bd0884b*****

7.3 Updating Certificates

1. First, generate a new token on a master:

kubeadm token create --print-join-command

kubeadm join 172.16.0.8:8443 --token ortvag.ra0654faci8y8903 --discovery-token-ca-cert-hash sha256:04755ff1aa88e7db283c85589bee31fabb7d32186612778e53a536a297fc9010

2. On a master, upload the certificates that a new master needs in order to join:

kubeadm init phase upload-certs --upload-certs

[upload-certs] Storing the certificates in ConfigMap "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

f8d1c027c01baef6985ddf24266641b7c64f9fd922b15a32fce40b6b4b21e47d

3. Add a new worker node:

kubeadm join 172.16.0.8:8443 --token ortvag.ra0654faci8y8903 \

--discovery-token-ca-cert-hash sha256:04755ff1aa88e7db283c85589bee31fabb7d32186612778e53a536a297fc9010

4. Add a new master: append the certificate key printed in step 2 after --control-plane --certificate-key.

kubeadm join 172.31.182.156:8443 --token ortvag.ra0654faci8y8903 \

--discovery-token-ca-cert-hash sha256:04755ff1aa88e7db283c85589bee31fabb7d32186612778e53a536a297fc9010 \

--control-plane --certificate-key f8d1c027c01baef6985ddf24266641b7c64f9fd922b15a32fce40b6b4b21e47d

7.4 Removing a Node

kubectl drain vm-0-16-centos --delete-local-data --force --ignore-daemonsets

node/vm-0-16-centos cordoned

WARNING: ignoring DaemonSet-managed Pods: kube-system/kube-flannel-ds-lbh8x, kube-system/kube-proxy-lglxz

node/vm-0-16-centos drained

[root@VM-0-17-centos ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

vm-0-15-centos Ready master 38m v1.18.0

vm-0-16-centos NotReady,SchedulingDisabled <none> 11m v1.18.0

vm-0-17-centos Ready master 30m v1.18.0

[root@VM-0-17-centos ~]# kubectl delete node vm-0-16-centos

node "vm-0-16-centos" deleted

[root@VM-0-17-centos ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

vm-0-15-centos Ready master 39m v1.18.0

vm-0-17-centos Ready master 30m v1.18.0
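The drain-then-delete sequence above can be scripted. This is a minimal sketch: remove_node and run are hypothetical helpers. By default (DRY_RUN=1) they only print the commands; set DRY_RUN=0 to execute them. --delete-local-data matches the kubectl 1.18 used here; newer kubectl versions rename it to --delete-emptydir-data.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: print (default) or execute the node-removal steps above.

# Echo the command when DRY_RUN=1 (the default), otherwise run it.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# remove_node NODE: evict the pods from NODE, then delete it from the cluster.
remove_node() {
  local node=$1
  run kubectl drain "$node" --delete-local-data --force --ignore-daemonsets
  run kubectl delete node "$node"
}
```

For example, preview with remove_node vm-0-16-centos, then execute with DRY_RUN=0 remove_node vm-0-16-centos.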

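7.5 Virtual IP

On cloud hosts, keepalived's virtual IP only works after you additionally apply for a high-availability virtual IP from the provider. Taking Tencent Cloud as an example:

Go to All Networks > IP and ENI > High-Availability Virtual IP and click Apply.

For details, see the Tencent Cloud documentation: Virtual Private Cloud, High-Availability Virtual IP Overview (Operation Guide).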

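7.6 Connecting to the K8S Cluster from a Local Machine

Node IP: 192.168.10.243

Service network: 10.96.0.0/12 (the serviceSubnet configured above)

On the local Windows machine, add a persistent route to that network through the node:

route -p add 10.96.0.0/12 192.168.10.243 metric 1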
