Integrating Kafka with Spring Boot


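Most companies build their services on Spring Boot these days, so knowing how to integrate Kafka with Spring Boot is an important skill. This article walks through that integration.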

I. Project Setup

We start by creating a Maven-based Spring Boot project.

1. pom dependencies

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.jihu</groupId>
    <artifactId>spring-boot-kafka</artifactId>
    <version>1.0-SNAPSHOT</version>

    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.1.2.RELEASE</version>
        <relativePath /> <!-- lookup parent from repository -->
    </parent>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.kafka</groupId>
            <artifactId>spring-kafka</artifactId>
        </dependency>
    </dependencies>
</project>

2. application.yml configuration file

server:
  port: 8080

spring:
  kafka:
    bootstrap-servers: 192.168.131.171:9092,192.168.131.171:9093,192.168.131.171:9094
    producer: # producer settings
      retries: 3 # with a value greater than 0, the client resends records whose send failed
      batch-size: 16384
      buffer-memory: 33554432
      acks: 1
      # serializers for the message key and the message body
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
    consumer:
      group-id: default-group
      enable-auto-commit: false
      auto-offset-reset: earliest
      key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
    listener:
      # RECORD: commit after each record has been processed by the listener (ListenerConsumer)
      # BATCH: commit after each batch returned by poll() has been processed by the listener
      # TIME: commit after a batch returned by poll() has been processed, once the time since the last commit exceeds TIME
      # COUNT: commit after a batch returned by poll() has been processed, once the number of processed records is at least COUNT
      # COUNT_TIME: commit when either the TIME or the COUNT condition is met
      # MANUAL: commit after a batch returned by poll() has been processed, once Acknowledgment.acknowledge() has been called manually
      # MANUAL_IMMEDIATE: commit immediately when Acknowledgment.acknowledge() is called manually; this is the mode usually used
      ack-mode: manual_immediate
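To make the commit timing described in the listener comments above more concrete, here is a small plain-Java sketch that models when a commit would be issued under RECORD, BATCH, and COUNT. It is a toy model of those descriptions, not Spring Kafka's implementation: the class AckModeSketch, the method commitPoints, and the ackCount parameter are names invented for this illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of commit timing; hypothetical names, not Spring Kafka code. */
final class AckModeSketch {

    enum Mode { RECORD, BATCH, COUNT }

    /**
     * Simulates a consumer that receives one batch per poll().
     * Returns the running record count at each point where a commit is issued.
     */
    static List<Integer> commitPoints(Mode mode, int[] batchSizes, int ackCount) {
        List<Integer> commits = new ArrayList<>();
        int processed = 0;        // records processed in total
        int sinceLastCommit = 0;  // records processed since the previous commit
        for (int size : batchSizes) {
            for (int i = 0; i < size; i++) {
                processed++;
                sinceLastCommit++;
                if (mode == Mode.RECORD) {
                    // RECORD: commit after every single record
                    commits.add(processed);
                    sinceLastCommit = 0;
                }
            }
            // The whole batch from one poll() has now been processed.
            boolean commitNow = mode == Mode.BATCH
                    || (mode == Mode.COUNT && sinceLastCommit >= ackCount);
            if (commitNow && sinceLastCommit > 0) {
                commits.add(processed);
                sinceLastCommit = 0;
            }
        }
        return commits;
    }

    public static void main(String[] args) {
        int[] batches = {2, 3, 1}; // three polls returning 2, 3, and 1 records
        System.out.println(commitPoints(Mode.RECORD, batches, 0)); // [1, 2, 3, 4, 5, 6]
        System.out.println(commitPoints(Mode.BATCH, batches, 0));  // [2, 5, 6]
        System.out.println(commitPoints(Mode.COUNT, batches, 4));  // [5]
    }
}
```

In the COUNT run, the last record stays uncommitted until more records arrive, which is why such modes can redeliver a few records after a restart.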

3. Application class

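import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication
public class KafkaApplication {

    public static void main(String[] args) {
        // Pass args through so command-line properties reach the application
        SpringApplication.run(KafkaApplication.class, args);
    }
}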

4. Producer code

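import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class KafkaController {

    private static final String TOPIC_NAME = "my-replicated-topic";

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    @RequestMapping("/send")
    public String send(@RequestParam("msg") String msg) {
        // send() is asynchronous: it returns before the broker has acknowledged the record
        kafkaTemplate.send(TOPIC_NAME, "key", msg);
        return String.format("Message %s sent successfully!", msg);
    }
}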
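Because the controller always sends with the fixed key "key", every message ends up in the same partition of the topic: for a record with a key, Kafka's default partitioner derives the partition from a hash of that key. The plain-Java sketch below illustrates the idea. It is a simplified stand-in: Kafka hashes the serialized key with murmur2, whereas this sketch uses Arrays.hashCode, so the actual partition numbers will differ. KeyPartitionSketch and partitionFor are hypothetical names, and the partition count of 3 is an assumption.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

/** Simplified illustration of key-based partitioning; not Kafka's real hash. */
final class KeyPartitionSketch {

    /** Maps a key to a partition in [0, numPartitions) using a stand-in hash. */
    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        int hash = Arrays.hashCode(keyBytes);       // stand-in for murmur2
        return (hash & 0x7fffffff) % numPartitions; // clear the sign bit, then take the modulus
    }

    public static void main(String[] args) {
        int partitions = 3; // assumed partition count of the topic
        int first = partitionFor("key", partitions);
        int second = partitionFor("key", partitions);
        System.out.println(first == second); // true: the same key always maps to the same partition
    }
}
```

A varying key, such as a business identifier, would spread records across partitions while still keeping records with the same key in order.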

5. Consumer code

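import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.kafka.support.Acknowledgment;
import org.springframework.stereotype.Component;

@Component
public class MyConsumer {

    /**
     * A listener can also be pinned to specific partitions and offsets, for example:
     *
     * @KafkaListener(groupId = "testGroup", topicPartitions = {
     *         @TopicPartition(topic = "topic1", partitions = {"0", "1"}),
     *         @TopicPartition(topic = "topic2", partitions = "0",
     *                 partitionOffsets = @PartitionOffset(partition = "1", initialOffset = "100"))
     * }, concurrency = "6")
     *
     * concurrency is the number of consumers in the same group, i.e. the degree of
     * concurrent consumption. It should not exceed the total number of partitions,
     * because any extra consumers would sit idle.
     *
     * @param record the consumed record
     * @param ack    handle used to commit the offset manually
     */
    @KafkaListener(topics = "my-replicated-topic", groupId = "jihuGroup")
    public void listenJihuGroup(ConsumerRecord<String, String> record, Acknowledgment ack) {
        String value = record.value();
        System.out.println("jihuGroup message: " + value);
        System.out.println("jihuGroup record: " + record);
        // Commit the offset manually. Offsets are usually committed per batch, with
        // idempotent processing guarding against duplicate messages.
        // Acknowledging after every single record performs poorly!
        ack.acknowledge();
    }

    // A second listener on the same topic, in a different consumer group
    @KafkaListener(topics = "my-replicated-topic", groupId = "jihuGroup2")
    public void listenJihuGroup2(ConsumerRecord<String, String> record, Acknowledgment ack) {
        String value = record.value();
        System.out.println("jihuGroup2 message: " + value);
        System.out.println("jihuGroup2 record: " + record);
        // Commit the offset manually
        ack.acknowledge();
    }
}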
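The remark about idempotence in the listener matters because manual commits give at-least-once delivery: if the application stops after processing a record but before its offset is committed, the record is delivered again. One common guard is to remember which records have already been handled and skip repeats. The plain-Java sketch below shows that idea, keyed by topic, partition, and offset. DedupSketch and markIfNew are hypothetical names, and the in-memory set is an assumption made for illustration; a real service would typically need durable storage so that the information survives restarts.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

/** Minimal in-memory duplicate guard; hypothetical helper, not part of Spring Kafka. */
final class DedupSketch {

    // Positions of records that have already been handled
    private final Set<String> seen = ConcurrentHashMap.newKeySet();

    /** Returns true the first time a record position is seen, false on redelivery. */
    boolean markIfNew(String topic, int partition, long offset) {
        return seen.add(topic + "-" + partition + "@" + offset);
    }

    public static void main(String[] args) {
        DedupSketch dedup = new DedupSketch();
        System.out.println(dedup.markIfNew("my-replicated-topic", 0, 42L)); // true
        System.out.println(dedup.markIfNew("my-replicated-topic", 0, 42L)); // false
        System.out.println(dedup.markIfNew("my-replicated-topic", 1, 42L)); // true
    }
}
```

Inside a listener, such a check could wrap the business logic using record.topic(), record.partition(), and record.offset(). A duplicate would skip the business logic but still be acknowledged, so that it is not delivered yet again.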

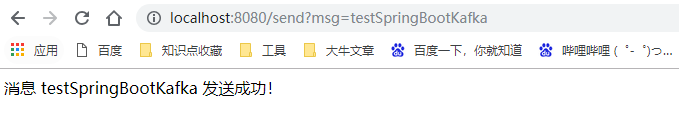

II. Testing

With the code in place, we start the Spring Boot project.

We then send a message from the browser by requesting the /send endpoint with a msg parameter.
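The request URL can also be assembled in code, which helps when the message contains spaces or non-ASCII characters that must be URL-encoded. The sketch below builds it from the port, path, and parameter name used in this article. The host localhost is an assumption, and SendUrlSketch and buildSendUrl are hypothetical names.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

/** Builds the URL for the /send endpoint; hypothetical helper for illustration. */
final class SendUrlSketch {

    static String buildSendUrl(String host, int port, String msg) {
        try {
            // Encode the message so spaces and non-ASCII characters are safe in a query string
            return "http://" + host + ":" + port + "/send?msg=" + URLEncoder.encode(msg, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // Every Java platform is required to support UTF-8
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(buildSendUrl("localhost", 8080, "hello kafka"));
        // prints http://localhost:8080/send?msg=hello+kafka
    }
}
```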

Checking the console shows that the message was received, which completes the Spring Boot and Kafka integration.

There are, of course, many more complex consuming and sending scenarios. Later articles will cover them, so feel free to follow along.
