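Closed-Source KubeSphere Alternative: Installing Rancher v2.12.0 with Docker

Background

After KubeSphere suddenly went closed-source, we had to find a replacement and settled on Rancher. We chose a single-node Docker deployment because Rancher is used here mainly for simple console operations, so occasional unavailability is acceptable. If you cannot tolerate downtime, use Rancher's high-availability installation instead.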

Environment

| Component | Version |
|---|---|
| Rancher | v2.12.0 |
| Docker | 26.1.3 |
| Kernel | 3.10.0-1127.19.1.el7.x86_64 |
| Operating system | CentOS 7.8 |

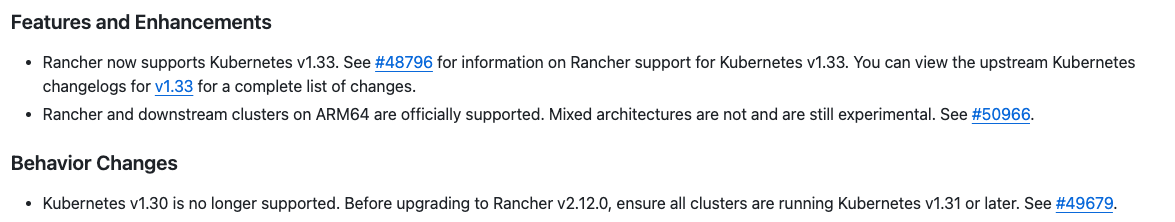

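Kubernetes versions supported by Rancher 2.12.0

The supported range is essentially Kubernetes 1.31 to 1.33. Clusters on 1.30 or earlier may run into compatibility issues.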
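Before importing an existing cluster, you can run a quick pre-check that encodes the range quoted above in a small bash function. This is a sketch. The name k8s_in_rancher_range is hypothetical, and the 1.31 to 1.33 bounds are only the ones stated in this article, so confirm them against the official Rancher support matrix.

```bash
#!/usr/bin/env bash
# Hypothetical helper: returns 0 if a Kubernetes version (e.g. v1.32.5) is in
# the 1.31-1.33 range this article cites for Rancher v2.12.0, 1 if it is
# outside that range, and 2 if the string is not of the form 1.<minor>[.x].
k8s_in_rancher_range() {
  local ver="${1#v}"               # drop a leading "v": v1.32.5 -> 1.32.5
  local re='^1\.([0-9]+)(\.|$)'    # capture the minor component
  [[ "$ver" =~ $re ]] || return 2
  local minor="${BASH_REMATCH[1]}" # 1.32.5 -> 32
  (( 10#$minor >= 31 && 10#$minor <= 33 ))
}
```

For example, k8s_in_rancher_range v1.32.5 && echo supported prints "supported".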

Installing Docker

Configure the Alibaba Cloud (Aliyun) yum repository

    [root@xxxx ~]# cat /etc/yum.repos.d/docker-ce.repo
    [docker-ce-stable]
    name=Docker CE Stable - $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/stable
    enabled=1
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-stable-debuginfo]
    name=Docker CE Stable - Debuginfo $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/stable
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-stable-source]
    name=Docker CE Stable - Sources
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/stable
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-test]
    name=Docker CE Test - $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/test
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-test-debuginfo]
    name=Docker CE Test - Debuginfo $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/test
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-test-source]
    name=Docker CE Test - Sources
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/test
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-nightly]
    name=Docker CE Nightly - $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/nightly
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-nightly-debuginfo]
    name=Docker CE Nightly - Debuginfo $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/nightly
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-nightly-source]
    name=Docker CE Nightly - Sources
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/nightly
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
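You can also generate the repository file from a script instead of writing it by hand. The sketch below writes only the [docker-ce-stable] section, because it is the only one enabled above (the debuginfo, source, test and nightly sections all have enabled=0). The function name is hypothetical. The quoted heredoc keeps $basearch and $releasever literal so that yum expands them itself.

```bash
#!/usr/bin/env bash
# Hypothetical helper: writes the enabled Aliyun docker-ce-stable repository
# section to the given file (default: /etc/yum.repos.d/docker-ce.repo).
write_docker_ce_repo() {
  local target="${1:-/etc/yum.repos.d/docker-ce.repo}"
  # The quoted 'EOF' stops the shell from expanding $basearch / $releasever;
  # yum substitutes those variables when it reads the file.
  cat > "$target" <<'EOF'
[docker-ce-stable]
name=Docker CE Stable - $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/stable
enabled=1
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
EOF
}
```

Run it as root, for example write_docker_ce_repo && yum makecache fast.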

Install

sudo yum install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin
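The command above installs the newest docker-ce available in the repository. The conflict error in the next section, for instance, shows 26.1.4 being pulled in. To match the 26.1.3 listed in the environment table, you can pin the version. Running yum list docker-ce --showduplicates | sort -r lists the versions the mirror offers. The helper name below is hypothetical, and the sketch assumes yum accepts name-version package specs such as docker-ce-26.1.3.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints the Docker package list used in this article,
# pinning docker-ce and docker-ce-cli when a version is given.
docker_pkgs_for_version() {
  local ver="${1:-}"
  if [ -n "$ver" ]; then
    printf '%s\n' "docker-ce-$ver" "docker-ce-cli-$ver"
  else
    printf '%s\n' docker-ce docker-ce-cli
  fi
  # The remaining packages are left unpinned, as in the original command.
  printf '%s\n' containerd.io docker-buildx-plugin docker-compose-plugin
}
```

Usage: sudo yum install -y $(docker_pkgs_for_version 26.1.3)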

Possible issue

    Transaction check error:
      file /usr/bin/docker from install of docker-ce-cli-1:26.1.4-1.el7.x86_64 conflicts with file from package docker-common-2:1.13.1-210.git7d71120.el7.centos.x86_64
      file /usr/bin/dockerd from install of docker-ce-3:26.1.4-1.el7.x86_64 conflicts with file from package docker-common-2:1.13.1-210.git7d71120.el7.centos.x86_64

    Error Summary

Solution: the old docker-client and docker-common packages (version 1.13.1, from the CentOS extras repository) conflict with docker-ce. As the error shows, docker-common already provides /usr/bin/docker and /usr/bin/dockerd. Remove both packages, then rerun the install command:

    [root@xxxxx ~]# yum list installed | grep docker
    docker-client.x86_64    2:1.13.1-210.git7d71120.el7.centos    @extras
    docker-common.x86_64    2:1.13.1-210.git7d71120.el7.centos    @extras
    [root@xxxxx ~]# rpm -e docker-client.x86_64 docker-common.x86_64
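Instead of spotting the conflicting packages by eye, you can pick them out of the yum list installed output automatically. The function name in this sketch is hypothetical. The package names it matches are the legacy packages that Docker's CentOS installation guide asks you to remove before installing docker-ce.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads `yum list installed` output on stdin and prints
# the name.arch of every legacy (pre docker-ce) Docker package it finds.
legacy_docker_pkgs() {
  awk '{
    name = $1
    sub(/\.[^.]+$/, "", name)   # strip the ".x86_64" / ".noarch" suffix
    if (name ~ /^docker(-client|-client-latest|-common|-latest|-latest-logrotate|-logrotate|-engine)?$/)
      print $1
  }'
}
```

Usage: yum list installed | legacy_docker_pkgs | xargs -r rpm -e. Because yum may split long entries over two lines, review the output before piping it into rpm -e.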

Configure the Docker data directory

    [root@xxxx ~]# cat /etc/docker/daemon.json
    {
      "data-root": "/data/docker"
    }
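The same file can be written from a script. This is a sketch with a hypothetical function name. It overwrites any existing daemon.json, so merge by hand if you already have other settings there. Set data-root before Docker stores any images; otherwise, stop Docker and move the existing data first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: writes a daemon.json that only sets data-root.
# WARNING: overwrites the target file if it already exists.
write_daemon_json() {
  local target="${1:-/etc/docker/daemon.json}"
  local data_root="${2:-/data/docker}"
  mkdir -p "$(dirname "$target")"
  printf '{\n  "data-root": "%s"\n}\n' "$data_root" > "$target"
}
```

Usage (as root): write_daemon_json && systemctl restart docker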

Start Docker

systemctl start docker

Check the Docker information with docker info. The sample output below comes from an Alibaba Cloud Linux 3 host (kernel 5.10), not the CentOS 7.8 host in the environment table. Note that Docker Root Dir now shows /data/docker:

    Client: Docker Engine - Community
     Version: 26.1.3
     Context: default
     Debug Mode: false
     Plugins:
      buildx: Docker Buildx (Docker Inc.)
        Version: v0.14.0
        Path: /usr/libexec/docker/cli-plugins/docker-buildx
      compose: Docker Compose (Docker Inc.)
        Version: v2.27.0
        Path: /usr/libexec/docker/cli-plugins/docker-compose

    Server:
     Containers: 0
      Running: 0
      Paused: 0
      Stopped: 0
     Images: 4
     Server Version: 26.1.3
     Storage Driver: overlay2
      Backing Filesystem: xfs
      Supports d_type: true
      Using metacopy: false
      Native Overlay Diff: false
      userxattr: false
     Logging Driver: json-file
     Cgroup Driver: cgroupfs
     Cgroup Version: 1
     Plugins:
      Volume: local
      Network: bridge host ipvlan macvlan null overlay
      Log: awslogs fluentd gcplogs gelf journald json-file local splunk syslog
     Swarm: inactive
     Runtimes: runc io.containerd.runc.v2
     Default Runtime: runc
     Init Binary: docker-init
     containerd version: 8b3b7ca2e5ce38e8f31a34f35b2b68ceb8470d89
     runc version: v1.1.12-0-g51d5e94
     init version: de40ad0
     Security Options:
      seccomp
       Profile: builtin
     Kernel Version: 5.10.134-18.al8.x86_64
     Operating System: Alibaba Cloud Linux 3.2104 U11 (OpenAnolis Edition)
     OSType: linux
     Architecture: x86_64
     CPUs: 2
     Total Memory: 1.827GiB
     Name: ali-id-jump-10-48-10-115
     ID: 929bed63-ea71-4ad0-929b-89763ced16b9
     Docker Root Dir: /data/docker
     Debug Mode: false
     Experimental: false
     Insecure Registries:
      127.0.0.0/8
     Live Restore Enabled: false
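To confirm that the data-root setting took effect without reading the whole listing, you can extract just the Docker Root Dir line from docker info. The function below is a hypothetical helper. Running docker info --format '{{.DockerRootDir}}' should give the same value directly.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads `docker info` text on stdin and prints the value
# of the first "Docker Root Dir" line.
docker_root_dir() {
  awk -F': ' '/^ *Docker Root Dir:/ { print $2; exit }'
}
```

Usage: [ "$(docker info 2>/dev/null | docker_root_dir)" = /data/docker ] && echo "data-root applied"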

Installing Rancher

- Mainland China environment

    docker run --name rancher -itd --restart=unless-stopped \
      -p 80:80 \
      -p 443:443 \
      -v /etc/ssl/certs/rancher/xxx.pem:/etc/rancher/ssl/cert.pem \
      -v /etc/ssl/certs/rancher/xxxxx.pem:/etc/rancher/ssl/key.pem \
      -v /var/log/rancher-auditlog:/var/log/rancher/auditlog \
      -v /data/rancher:/var/lib/rancher \
      -e CATTLE_SYSTEM_DEFAULT_REGISTRY=registry.cn-hangzhou.aliyuncs.com \
      --privileged \
      registry.cn-hangzhou.aliyuncs.com/rancher/rancher:v2.12.0 --no-cacerts

- Overseas environment, or a mainland China environment with Internet access

    docker run --name rancher -itd --restart=unless-stopped \
      -p 80:80 \
      -p 443:443 \
      -v /etc/ssl/certs/xxx.pem:/etc/rancher/ssl/cert.pem \
      -v /etc/ssl/certs/xxx.pem:/etc/rancher/ssl/key.pem \
      -v /var/log/rancher-auditlog:/var/log/rancher/auditlog \
      -v /data/rancher:/var/lib/rancher \
      --privileged \
      rancher/rancher:v2.12.0 --no-cacerts
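The two commands differ only in the image prefix and the CATTLE_SYSTEM_DEFAULT_REGISTRY variable, so you can fold them into one function. This is a sketch. run_rancher and the DRY_RUN switch are hypothetical, and the other paths are the ones used above. The certificate and key paths are passed in as arguments because this article elides them.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the two `docker run` commands above.
# Usage: run_rancher <cert.pem> <key.pem> [registry]
# With a registry (mainland China), the Rancher image is pulled from it and
# CATTLE_SYSTEM_DEFAULT_REGISTRY is set to it as well.
# Set DRY_RUN=1 to print the command instead of running it.
run_rancher() {
  local cert="$1" key="$2" registry="${3:-}"
  local image="rancher/rancher:v2.12.0"
  local -a args
  args=(run --name rancher -itd --restart=unless-stopped
    -p 80:80 -p 443:443
    -v "${cert}:/etc/rancher/ssl/cert.pem"
    -v "${key}:/etc/rancher/ssl/key.pem"
    -v /var/log/rancher-auditlog:/var/log/rancher/auditlog
    -v /data/rancher:/var/lib/rancher)
  if [ -n "$registry" ]; then
    args+=(-e "CATTLE_SYSTEM_DEFAULT_REGISTRY=${registry}")
    image="${registry}/${image}"
  fi
  args+=(--privileged "$image" --no-cacerts)
  if [ -n "${DRY_RUN:-}" ]; then
    echo "docker ${args[*]}"
  else
    docker "${args[@]}"
  fi
}
```

For example, DRY_RUN=1 run_rancher /etc/ssl/certs/certChain.pem /etc/ssl/certs/serverkey.pem registry.cn-hangzhou.aliyuncs.com prints the mainland China variant. Drop DRY_RUN to actually start the container.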

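Parameter explanation:

- -p 80:80 -p 443:443: publish the Rancher management console ports on the host
- -v /etc/ssl/certs/certChain.pem:/etc/rancher/ssl/cert.pem: mount the certificate chain file from the host into the container
- -v /etc/ssl/certs/serverkey.pem:/etc/rancher/ssl/key.pem: mount the private key file from the host into the container
- -v /var/log/rancher-auditlog:/var/log/rancher/auditlog: persist Rancher's audit logs on the host
- -v /data/rancher:/var/lib/rancher: persist Rancher's data directory on the host
- -e CATTLE_SYSTEM_DEFAULT_REGISTRY=registry.cn-hangzhou.aliyuncs.com (mainland China variant only): pull Rancher's system images from this registry instead of the default one
- --privileged: run the container in privileged mode, which the single-node Docker installation of Rancher requires
- --no-cacerts: disable the default CA certificate that Rancher generates; Rancher documents this option for certificates signed by a recognized CA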
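Before starting the container, check that the mounted certificate and private key actually belong together, because a mismatched pair is a common reason for HTTPS failing. This is a minimal sketch that assumes an RSA key. It compares the modulus printed by openssl x509 and openssl rsa. Because openssl x509 reads only the first certificate in a file, the check also works on a chain file whose server certificate comes first. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: returns 0 if the (first) certificate in $1 and the RSA
# private key in $2 share the same modulus, 1 if they differ, 2 on read errors.
cert_matches_key() {
  local cert="$1" key="$2"
  local cert_mod key_mod
  cert_mod=$(openssl x509 -noout -modulus -in "$cert") || return 2
  key_mod=$(openssl rsa -noout -modulus -in "$key" 2>/dev/null) || return 2
  [ "$cert_mod" = "$key_mod" ]
}
```

Usage: cert_matches_key /etc/ssl/certs/certChain.pem /etc/ssl/certs/serverkey.pem && echo "certificate and key match"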

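A certificate chain file holds several certificates in a single file. In my case I put the contents of server.crt and bundle.crt into an empty certChain.pem file. The server certificate should come first, followed by the intermediate certificates from bundle.crt. serverkey.pem is the name I gave the private key after converting it.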
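You can assemble the chain file with a small helper that keeps the server certificate first. It also guards against a common pitfall: if server.crt does not end with a newline, plain concatenation glues the END and BEGIN markers onto one line. The function name is hypothetical, and the file names are the ones mentioned above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: concatenates the server certificate and the CA bundle
# into one chain file (server certificate first) and prints how many
# certificates the result contains.
build_cert_chain() {
  local server_crt="$1" bundle_crt="$2" out="$3"
  {
    cat "$server_crt"
    # Add a newline if server.crt does not end with one.
    [ -n "$(tail -c 1 "$server_crt")" ] && echo
    cat "$bundle_crt"
  } > "$out"
  grep -c -- '-----BEGIN CERTIFICATE-----' "$out"
}
```

Usage: build_cert_chain server.crt bundle.crt /etc/ssl/certs/certChain.pem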

Certificate conversion

Convert .key to .pem

openssl rsa -in temp.key -out temp.pem

Convert .crt to .pem

openssl x509 -in tmp.crt -out tmp.pem
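The openssl x509 command above assumes tmp.crt is already PEM-encoded, meaning text that starts with -----BEGIN CERTIFICATE-----. Some CAs deliver .crt files in binary DER form instead, and those need -inform der. The sketch below picks the input format automatically; its function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: converts a .crt file (PEM or DER) to a PEM file.
crt_to_pem() {
  local in="$1" out="$2"
  if grep -q -- '-----BEGIN CERTIFICATE-----' "$in"; then
    openssl x509 -in "$in" -out "$out"               # already PEM
  else
    openssl x509 -inform der -in "$in" -out "$out"   # binary DER input
  fi
}
```

Usage: crt_to_pem tmp.crt tmp.pem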

可能遇到的问题

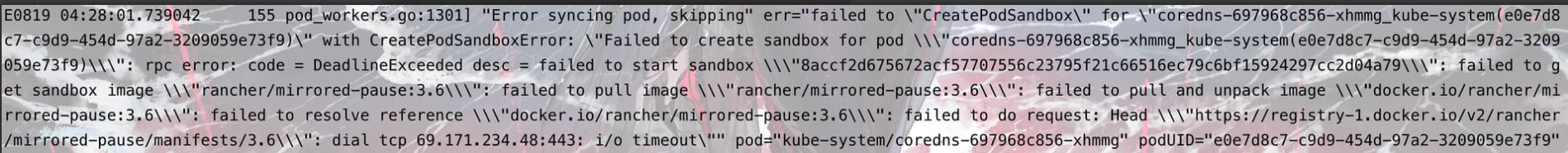

国内安装,容器日志出现拉取mirrored-pause等使用的海外仓库地址

docker logs发现拉取的国外仓库的pause等镜像仓库地址,导致无法正常工作。

解决方案: 使用未映射的镜像把需要的离线镜像包copy到本地的映射目录中,然后重启映射的Rancher服务就可以了。

mkdir -p /data/rancher/k3s/agent/images/

docker run --rm --entrypoint "" -v $(pwd):/output registry.cn-hangzhou.aliyuncs.com/rancher/rancher:v2.12.0 cp /var/lib/rancher/k3s/agent/images/k3s-airgap-images.tar /output/k3s-airgap-images.tar

cp k3s-airgap-images.tar /data/rancher/k3s/agent/images/
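You can wrap the last two steps (creating the directory and copying the tarball) in a function with a basic sanity check, as sketched below. The function name is hypothetical. /data/rancher is the host directory mounted as /var/lib/rancher in the docker run commands above. After copying, restart the container with docker restart rancher so that the embedded k3s picks up the images from the tarball on startup.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copies the k3s air-gap tarball into the host directory
# that the Rancher container sees as /var/lib/rancher/k3s/agent/images.
install_airgap_tarball() {
  local tarball="$1" data_root="${2:-/data/rancher}"
  local dest="$data_root/k3s/agent/images"
  # Refuse to continue if the extraction step produced nothing.
  [ -s "$tarball" ] || { echo "missing or empty: $tarball" >&2; return 1; }
  mkdir -p "$dest"
  cp "$tarball" "$dest/"
}
```

Usage: install_airgap_tarball k3s-airgap-images.tar && docker restart rancher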

Reference: source of the related post

iptables问题

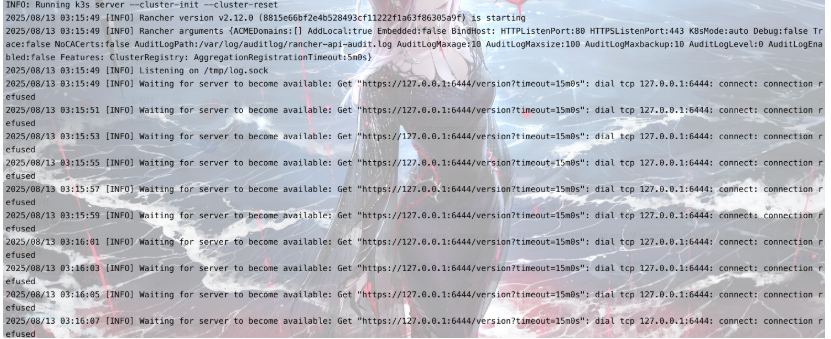

docker logs 容器ID,发现一直在重启,日志输出如下:

进入rancher容器之后,查看/var/lib/rancher/k3s.log内容如下:

F0313 05:40:49.389689 51 network_policy_controller.go:404] failed to run iptables command to create KUBE-ROUTER-INPUT chain due to running [/usr/bin/iptables -t filter -S KUBE-ROUTER-INPUT 1 --wait]: exit status 3: iptables v1.8.8 (legacy): can't initialize iptables table `filter': Table does not exist (do you need to insmod?)

Perhaps iptables or your kernel needs to be upgraded.

panic: F0313 05:40:49.389689 51 network_policy_controller.go:404] failed to run iptables command to create KUBE-ROUTER-INPUT chain due to running [/usr/bin/iptables -t filter -S KUBE-ROUTER-INPUT 1 --wait]: exit status 3: iptables v1.8.8 (legacy): can't initialize iptables table `filter': Table does not exist (do you need to insmod?)

Perhaps iptables or your kernel needs to be upgraded.

解决方法: 在主机上执行以下命令,重启Rancher容器

sudo modprobe iptable_filter

sudo modprobe iptable_nat

sudo modprobe iptable_mangle
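To load only the modules that are missing, first check which of the three are absent from /proc/modules, the file that lsmod reads. This is a sketch with a hypothetical function name. A module compiled directly into the kernel does not appear in /proc/modules, so it would be reported as missing; modprobe normally treats such built-in modules as already present.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints the iptables table modules that are not listed
# in the module list file (default: /proc/modules, the source lsmod uses).
missing_iptable_modules() {
  local modules_file="${1:-/proc/modules}"
  local mod
  for mod in iptable_filter iptable_nat iptable_mangle; do
    # Each /proc/modules line starts with the module name and a space.
    grep -q "^${mod} " "$modules_file" || echo "$mod"
  done
}
```

Usage: for m in $(missing_iptable_modules); do sudo modprobe "$m"; done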

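Command explanations

- sudo modprobe iptable_filter: loads the iptable_filter kernel module. This is the basic iptables filtering module; it provides the filter table with its INPUT, FORWARD and OUTPUT chains for packet-filtering rules.
- sudo modprobe iptable_nat: loads the iptable_nat kernel module, which provides network address translation (NAT) through the PREROUTING and POSTROUTING chains. It is used for port forwarding, IP masquerading (MASQUERADE) and similar tasks.
- sudo modprobe iptable_mangle: loads the iptable_mangle kernel module, which lets rules modify ("mangle") packets, for example the TOS and TTL fields of the IP header. It is commonly used for traffic shaping, QoS and other advanced network configuration.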

Check that the modules are loaded

    [root@xxxx ~]# lsmod | grep iptable
    iptable_mangle         16384  1
    iptable_nat            16384  1
    iptable_filter         16384  1
    ip_tables              36864  6 iptable_filter,iptable_nat,iptable_mangle
    nf_nat                 57344  4 xt_nat,nft_chain_nat,iptable_nat,xt_MASQUERADE
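Modules loaded with modprobe are not loaded again after a reboot, so the Rancher container would hit the same error the next time the host restarts. On systemd-based systems such as CentOS 7, listing the modules in a file under /etc/modules-load.d/ makes systemd-modules-load load them at boot. This is a sketch; the function name and the file name rancher-iptables.conf are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: writes one module name per line to a modules-load.d
# file so the iptables table modules are loaded again at boot.
write_iptables_modules_conf() {
  local target="${1:-/etc/modules-load.d/rancher-iptables.conf}"
  printf '%s\n' iptable_filter iptable_nat iptable_mangle > "$target"
}
```

Usage (as root): write_iptables_modules_conf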

配置问题(未经过实际验证,可以尝试)

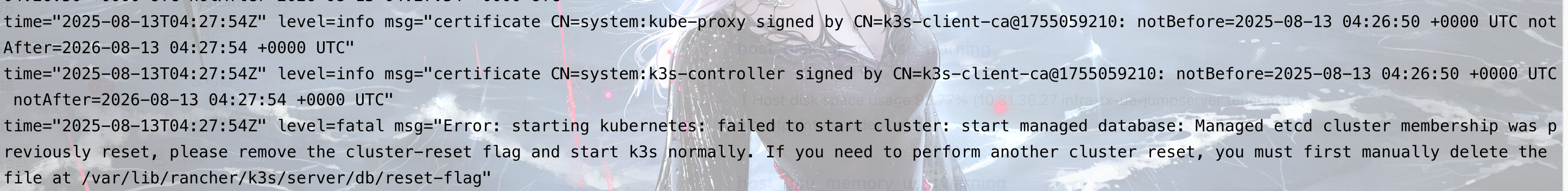

docker logs 容器名称,出现以下内容:

解决方案:登录Rancher容器,删除/var/lib/rancher/k3s/server/db/reset-flag文件后,重启容器。
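Because /var/lib/rancher is mounted from /data/rancher on the host, you can also remove the same file from the host without entering the container. Like the fix itself, this is unverified. The function name is hypothetical, and the default path assumes the -v /data/rancher:/var/lib/rancher mount used above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: deletes k3s's reset-flag from the host-side Rancher
# data directory (mounted into the container as /var/lib/rancher).
remove_reset_flag() {
  local data_root="${1:-/data/rancher}"
  local flag="$data_root/k3s/server/db/reset-flag"
  if [ -e "$flag" ]; then
    rm -f -- "$flag"
    echo "removed $flag"
  else
    echo "not found: $flag"
  fi
}
```

Usage: remove_reset_flag && docker restart rancher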

Rancher登录

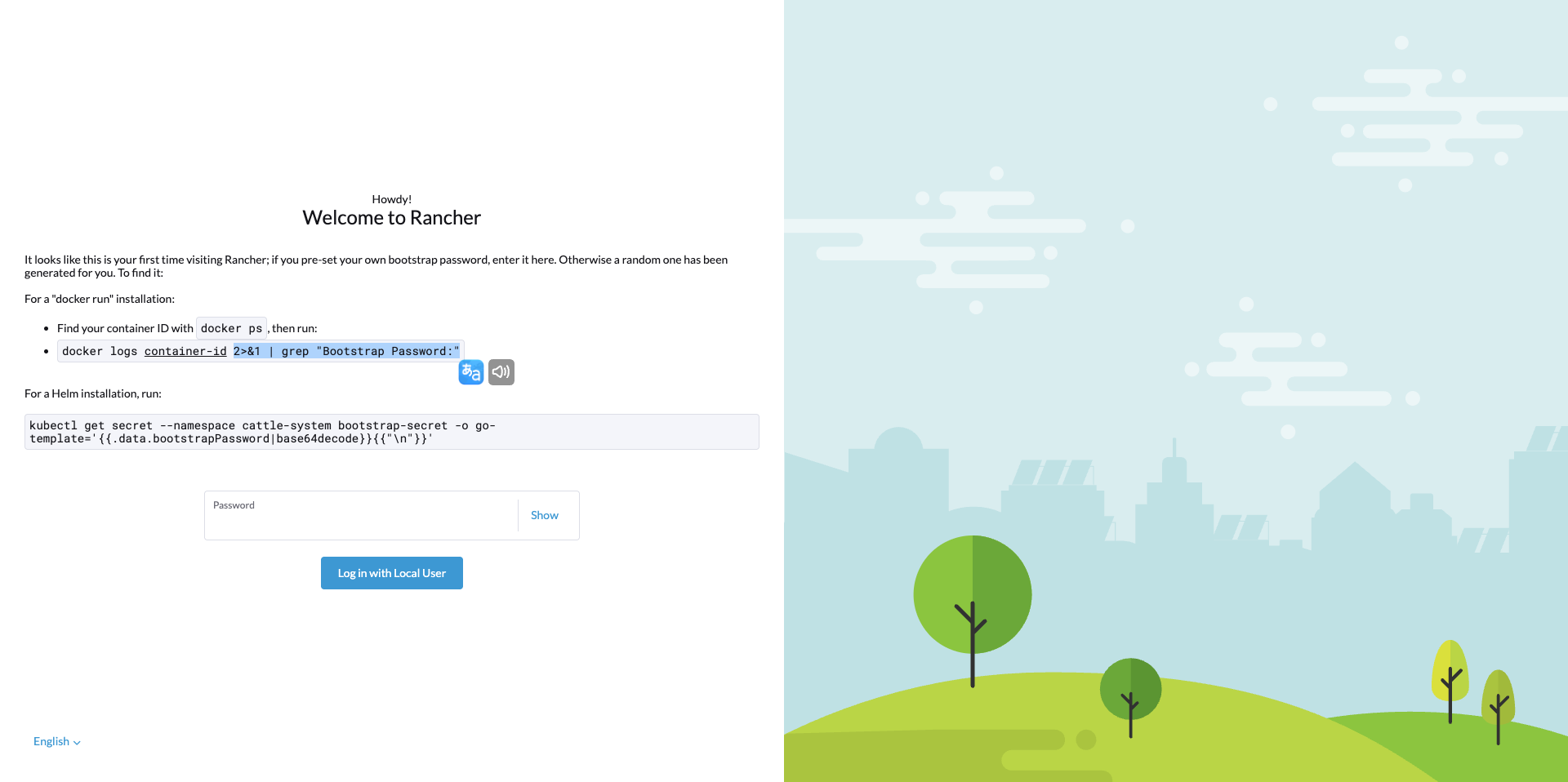

- 直接浏览器打开,可以根据不同的安装方式获取Rancher的初始化密码。

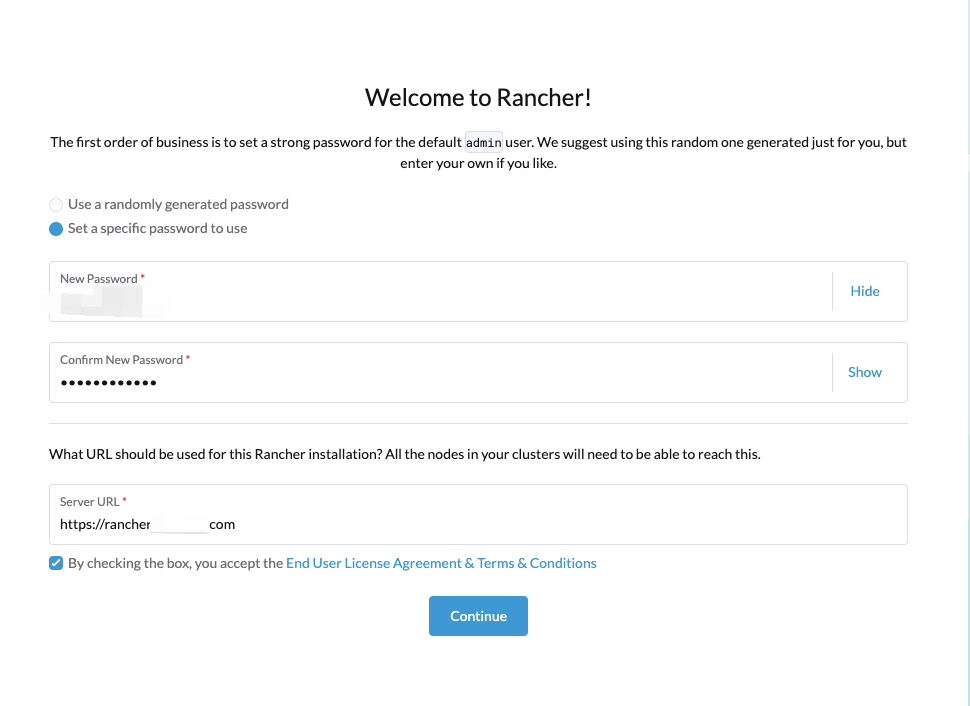

- 这里选择的是自定义密码,密码长度不少于12位,我这边的访问地址是域名访问,使用的就是上面的域名证书文件。

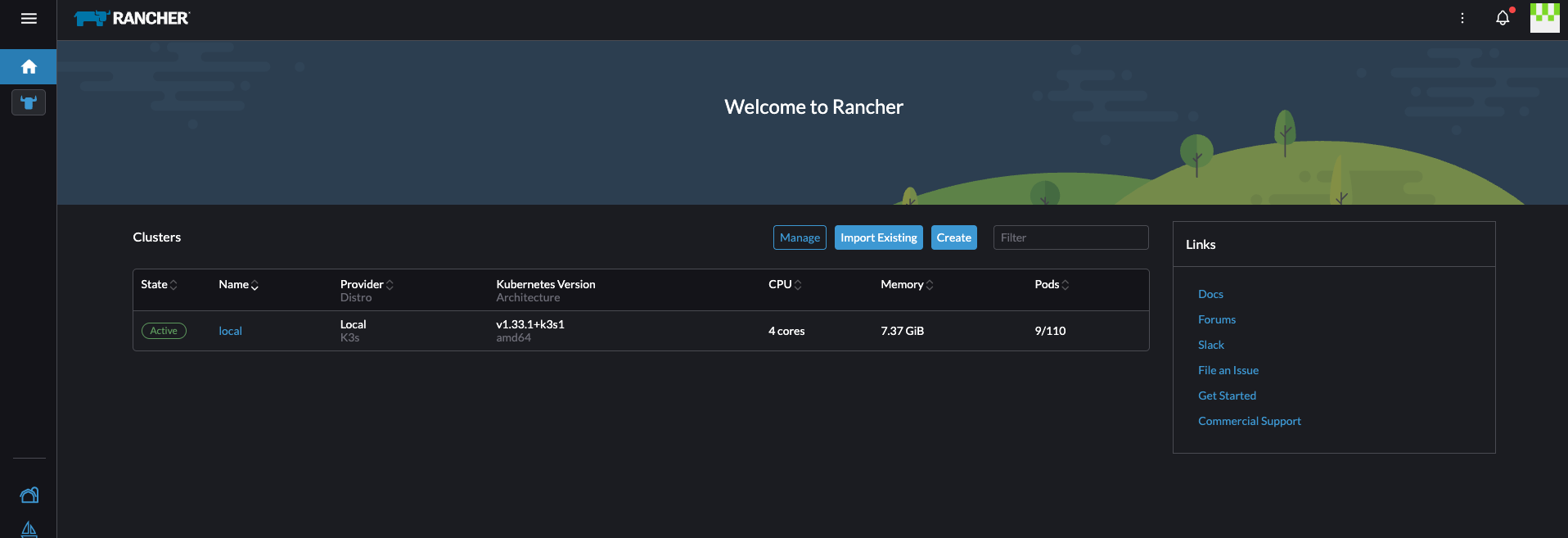

- 登录成功
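For the Docker installation used here, Rancher's documentation retrieves the bootstrap password from the container log with docker logs <container> 2>&1 | grep "Bootstrap Password:". The helper below (hypothetical name) wraps that grep and prints only the password itself. The log line format it expects is an assumption based on that grep.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads Rancher container log text on stdin and prints
# the value after the last "Bootstrap Password:" marker.
bootstrap_password() {
  grep -o 'Bootstrap Password: [^[:space:]]*' | tail -n 1 | sed 's/^Bootstrap Password: //'
}
```

Usage: docker logs rancher 2>&1 | bootstrap_password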

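Conclusion

You have probably seen the online discussion about KubeSphere going closed-source. As operations engineers, we need to keep an eye on developments in the open-source community and evaluate migration plans for our workloads in good time. That's all for now, see you next time!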
