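K8s authentication mechanisms, kubeconfig and its configuration, ServiceAccount; the K8s authorization system, RBAC and configuration examples; how Ingress works, Ingress configuration and a canary release example; Helm and its common usage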

-

Add two or more users that authenticate with static tokens, e.g. tom and jerry, and authenticate them to Kubernetes.

# Generate the tokens

root@k8s-master01:~# echo "$(openssl rand -hex 3).$(openssl rand -hex 8)"

8617d8.e788f04ff704665c

root@k8s-master01:~# echo "$(openssl rand -hex 3).$(openssl rand -hex 8)"

091ddd.42f931a5e4939b95

# Create the static token file (format: token,user,uid,group)

root@k8s-master01:/etc/kubernetes# mkdir authfiles

root@k8s-master01:/etc/kubernetes# cd authfiles/

root@k8s-master01:/etc/kubernetes/authfiles# vim token.csv

8617d8.e788f04ff704665c,tom,1001,kuberuser

091ddd.42f931a5e4939b95,jerry,1002,kuberadmin
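The two `openssl rand` calls and the hand-edited `token.csv` above can be folded into one script. A minimal sketch, assuming the file is written to `./token.csv` (override with `TOKEN_FILE`) and that reading `/dev/urandom` through `tr` is an acceptable stand-in for `openssl rand -hex`; the users, UIDs and groups are copied from the example. Each line follows the static token file format `token,user,uid` with an optional group column.

```bash
#!/usr/bin/env bash
# Sketch: write a static token file for kube-apiserver --token-auth-file.
# Line format: token,user,uid[,"group1,group2"]. Users/uids/groups copy the
# tom/jerry example; the default output path is an assumption.
set -eu

TOKEN_FILE="${TOKEN_FILE:-./token.csv}"

# 6 + 16 random lowercase hex characters joined by a dot, the same shape as
# "openssl rand -hex 3" and "openssl rand -hex 8" (read from /dev/urandom here).
gen_token() {
  local id_part secret_part
  id_part=$(LC_ALL=C tr -dc 'a-f0-9' </dev/urandom | head -c 6)
  secret_part=$(LC_ALL=C tr -dc 'a-f0-9' </dev/urandom | head -c 16)
  printf '%s.%s\n' "$id_part" "$secret_part"
}

{
  printf '%s,tom,1001,kuberuser\n' "$(gen_token)"
  printf '%s,jerry,1002,kuberadmin\n' "$(gen_token)"
} > "$TOKEN_FILE"

chmod 600 "$TOKEN_FILE"   # the file holds credentials; keep it private
cat "$TOKEN_FILE"
```

Copy the result to `/etc/kubernetes/authfiles/token.csv` on the master. kube-apiserver reads the token file only at startup, so later changes to it require restarting the API server.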

# Configure kube-apiserver to load the static token file, which enables static token authentication

root@k8s-master01:/etc/kubernetes/manifests# cp kube-apiserver.yaml /tmp

vim /tmp/kube-apiserver.yaml

apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 172.31.6.100:6443
  creationTimestamp: null
  labels:
    component: kube-apiserver
    tier: control-plane
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-apiserver
    - --advertise-address=172.31.6.100
    - --allow-privileged=true
    - --authorization-mode=Node,RBAC
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --token-auth-file=/etc/kubernetes/authfiles/token.csv  # enable static token authentication
    - --enable-admission-plugins=NodeRestriction
    - --enable-bootstrap-token-auth=true
    - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
    - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
    - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
    - --etcd-servers=https://127.0.0.1:2379
    - --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt
    - --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
    - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
    - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
    - --requestheader-allowed-names=front-proxy-client
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
    - --requestheader-extra-headers-prefix=X-Remote-Extra-
    - --requestheader-group-headers=X-Remote-Group
    - --requestheader-username-headers=X-Remote-User
    - --secure-port=6443
    - --service-account-issuer=https://kubernetes.default.svc.cluster.local
    - --service-account-key-file=/etc/kubernetes/pki/sa.pub
    - --service-account-signing-key-file=/etc/kubernetes/pki/sa.key
    - --service-cluster-ip-range=10.96.0.0/12
    - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
    - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
    image: registry.aliyuncs.com/google_containers/kube-apiserver:v1.26.0
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 172.31.6.100
        path: /livez
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
    name: kube-apiserver
    readinessProbe:
      failureThreshold: 3
      httpGet:
        host: 172.31.6.100
        path: /readyz
        port: 6443
        scheme: HTTPS
      periodSeconds: 1
      timeoutSeconds: 15
    resources:
      requests:
        cpu: 250m
    startupProbe:
      failureThreshold: 24
      httpGet:
        host: 172.31.6.100
        path: /livez
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
    volumeMounts:
    - mountPath: /etc/ssl/certs
      name: ca-certs
      readOnly: true
    - mountPath: /etc/ca-certificates
      name: etc-ca-certificates
      readOnly: true
    - mountPath: /etc/pki
      name: etc-pki
      readOnly: true
    - mountPath: /etc/kubernetes/pki
      name: k8s-certs
      readOnly: true
    - mountPath: /usr/local/share/ca-certificates
      name: usr-local-share-ca-certificates
      readOnly: true
    - mountPath: /usr/share/ca-certificates
      name: usr-share-ca-certificates
      readOnly: true
    - mountPath: /etc/kubernetes/authfiles  # mount the directory that holds token.csv
      name: authfiles
      readOnly: true
  hostNetwork: true
  priorityClassName: system-node-critical
  securityContext:
    seccompProfile:
      type: RuntimeDefault
  volumes:
  - hostPath:
      path: /etc/ssl/certs
      type: DirectoryOrCreate
    name: ca-certs
  - hostPath:
      path: /etc/ca-certificates
      type: DirectoryOrCreate
    name: etc-ca-certificates
  - hostPath:
      path: /etc/pki
      type: DirectoryOrCreate
    name: etc-pki
  - hostPath:
      path: /etc/kubernetes/pki
      type: DirectoryOrCreate
    name: k8s-certs
  - hostPath:
      path: /usr/local/share/ca-certificates
      type: DirectoryOrCreate
    name: usr-local-share-ca-certificates
  - hostPath:
      path: /usr/share/ca-certificates
      type: DirectoryOrCreate
    name: usr-share-ca-certificates
  - hostPath:  # hostPath volume for the token authentication directory
      path: /etc/kubernetes/authfiles
      type: DirectoryOrCreate
    name: authfiles
status: {}
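kubelet restarts kube-apiserver as soon as a manifest lands in `/etc/kubernetes/manifests`, and a manifest with a missing or misspelled entry can keep the API server from coming back. A minimal pre-flight sketch (the function name `check_token_auth_manifest` is hypothetical) that greps the edited copy for the three additions made above: the flag, the volumeMount and the hostPath volume. It is a plain text check, not a YAML validation.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report which token-auth edits are missing from a
# kube-apiserver static Pod manifest. Returns 0 only if all three are present.
check_token_auth_manifest() {
  local manifest="$1" status=0
  # 1. the command-line flag pointing at the token file
  if ! grep -q -- '--token-auth-file=/etc/kubernetes/authfiles/token.csv' "$manifest"; then
    echo "missing: --token-auth-file flag" >&2; status=1
  fi
  # 2. the volumeMount inside the container
  if ! grep -Eq 'mountPath: /etc/kubernetes/authfiles([[:space:]]|$)' "$manifest"; then
    echo "missing: volumeMount for /etc/kubernetes/authfiles" >&2; status=1
  fi
  # 3. the hostPath volume backing that mount
  if ! grep -Eq '^[[:space:]]*path: /etc/kubernetes/authfiles([[:space:]]|$)' "$manifest"; then
    echo "missing: hostPath volume for /etc/kubernetes/authfiles" >&2; status=1
  fi
  return "$status"
}

# Usage on the master (not executed here):
#   check_token_auth_manifest /tmp/kube-apiserver.yaml && cp /tmp/kube-apiserver.yaml /etc/kubernetes/manifests/
```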

## Apply the edited kube-apiserver manifest (kubelet picks up static Pod manifests in /etc/kubernetes/manifests automatically)

root@k8s-master01:/tmp# cp kube-apiserver.yaml /etc/kubernetes/manifests/

## Listing resources fails while kube-apiserver is restarting

root@k8s-master01:/tmp# kubectl get pod

The connection to the server kubeapi.magedu.com:6443 was refused - did you specify the right host or port?

## Once the restart completes, list resources again

root@k8s-master01:/tmp# kubectl get pod

No resources found in default namespace.
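Rather than re-running `kubectl get pod` by hand until the restarted API server answers, the wait can be scripted. A minimal sketch with a hypothetical `wait_until` helper that retries a command a fixed number of times; polling `kubectl get --raw=/readyz` is one reasonable readiness check, since `/readyz` is the endpoint the manifest's readinessProbe uses.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a command up to TRIES times, DELAY seconds apart,
# and succeed as soon as one attempt exits with status 0.
wait_until() {
  local tries="$1" delay="$2" i
  shift 2
  for ((i = 1; i <= tries; i++)); do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    if ((i < tries)); then
      sleep "$delay"
    fi
  done
  echo "gave up after ${tries} attempts: $*" >&2
  return 1
}

# Usage on the master (not executed here):
#   wait_until 60 2 kubectl get --raw=/readyz && kubectl get pod
```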

# Test: log in to node03 and run kubectl with tom's and jerry's tokens

root@k8s-node03:~# kubectl -s https://kubeapi.magedu.com:6443 --token="8617d8.e788f04ff704665c" --certificate-authority=/etc/kubernetes/pki/ca.crt get pods

Error from server (Forbidden): pods is forbidden: User "tom" cannot list resource "pods" in API group "" in the namespace "default"

root@k8s-node03:~# kubectl -s https://kubeapi.magedu.com:6443 --token="091ddd.42f931a5e4939b95" --certificate-authority=/etc/kubernetes/pki/ca.crt get pods

Error from server (Forbidden): pods is forbidden: User "jerry" cannot list resource "pods" in API group "" in the namespace "default"

## Test with curl using tom's and jerry's tokens

root@k8s-node03:~# curl -k -H "Authorization: Bearer 8617d8.e788f04ff704665c" https://kubeapi.magedu.com:6443/api/v1/namespaces/default/pods/

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "pods is forbidden: User \"tom\" cannot list resource \"pods\" in API group \"\" in the namespace \"default\"",

"reason": "Forbidden",

"details": {

"kind": "pods"

},

"code": 403

}

root@k8s-node03:~# curl -k -H "Authorization: Bearer 091ddd.42f931a5e4939b95" https://kubeapi.magedu.com:6443/api/v1/namespaces/default/pods/

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "pods is forbidden: User \"jerry\" cannot list resource \"pods\" in API group \"\" in the namespace \"default\"",

"reason": "Forbidden",

"details": {

"kind": "pods"

},

"code": 403

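}

## Both kubectl and curl identify the users tom and jerry, so authentication succeeds; no permissions have been granted yet, so authorization fails and the requests are rejected with 403 Forbidden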
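The 403 responses above are the expected result at this stage: the API server identified the caller (authentication) and RBAC then denied the request (authorization). A rejected credential would instead produce 401 Unauthorized. A small sketch (the function name is hypothetical) that turns the HTTP status of such a curl probe into that distinction:

```bash
#!/usr/bin/env bash
# Hypothetical helper: interpret an API server HTTP status code.
#   401 -> the token/certificate was not accepted (authentication failed)
#   403 -> the user was identified, but no RBAC rule allows the request
explain_status() {
  case "$1" in
    2??) echo "authenticated and authorized" ;;
    401) echo "authentication failed" ;;
    403) echo "authenticated, but not authorized" ;;
    *)   echo "unexpected status: $1" ;;
  esac
}

# Usage from node03 (not executed here); -w prints only the status code:
#   code=$(curl -s -o /dev/null -w '%{http_code}' \
#     --cacert /etc/kubernetes/pki/ca.crt \
#     -H "Authorization: Bearer 8617d8.e788f04ff704665c" \
#     https://kubeapi.magedu.com:6443/api/v1/namespaces/default/pods/)
#   explain_status "$code"
```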

-

Add two or more users that authenticate to Kubernetes with X509 client certificates, e.g. mason and magedu.

# Generate private keys for mason and magedu

root@k8s-master01:/etc/kubernetes# umask 077; openssl genrsa -out ./pki/mason.key 4096

Generating RSA private key, 4096 bit long modulus (2 primes)

.....................................................++++

......................................................................................................................................................................++++

e is 65537 (0x010001)

root@k8s-master01:/etc/kubernetes# umask 077; openssl genrsa -out ./pki/magedu.key 4096

Generating RSA private key, 4096 bit long modulus (2 primes)

.....................................................................................++++

...............................++++

e is 65537 (0x010001)

# Create certificate signing requests; Kubernetes takes the username from CN and the group from O

root@k8s-master01:/etc/kubernetes# openssl req -new -key ./pki/mason.key -out ./pki/mason.csr -subj "/CN=mason/O=developers"

root@k8s-master01:/etc/kubernetes# openssl req -new -key ./pki/magedu.key -out ./pki/magedu.csr -subj "/CN=magedu/O=operation"

# Sign the certificates with the Kubernetes CA

root@k8s-master01:/etc/kubernetes# openssl x509 -req -days 365 -CA ./pki/ca.crt -CAkey ./pki/ca.key -CAcreateserial -in ./pki/mason.csr -out ./pki/mason.crt

Signature ok

subject=CN = mason, O = developers

Getting CA Private Key

root@k8s-master01:/etc/kubernetes# openssl x509 -req -days 365 -CA ./pki/ca.crt -CAkey ./pki/ca.key -CAcreateserial -in ./pki/magedu.csr -out ./pki/magedu.crt

Signature ok

subject=CN = magedu, O = operation

Getting CA Private Key
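For X509 client certificates, kube-apiserver takes the username from the subject's Common Name (CN) and the group(s) from the Organization (O) fields, so mason's certificate maps to user `mason` in group `developers`. A minimal sketch of that mapping (hypothetical function name), assuming the `subject=CN = ..., O = ...` line format printed by openssl above; it does not handle escaped commas inside values.

```bash
#!/usr/bin/env bash
# Hypothetical helper: show how an X509 subject maps to a Kubernetes identity.
# CN -> username; every O -> one group.
subject_to_k8s_identity() {
  local subject="${1#subject=}" user="" groups="" part key val
  local -a parts
  IFS=',' read -ra parts <<< "$subject"
  for part in "${parts[@]}"; do
    key=$(sed -E 's/^ *([A-Za-z]+) *=.*/\1/' <<< "$part")
    val=$(sed -E 's/^ *[A-Za-z]+ *= *//; s/ *$//' <<< "$part")
    case "$key" in
      CN) user="$val" ;;
      O)  groups="${groups:+$groups,}$val" ;;
    esac
  done
  printf 'user=%s groups=%s\n' "$user" "$groups"
}

subject_to_k8s_identity "subject=CN = mason, O = developers"
# Against a real certificate (not executed here):
#   subject_to_k8s_identity "$(openssl x509 -in ./pki/mason.crt -noout -subject)"
```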

# Copy the signed certificates and private keys to node03 for testing (node03 already has /etc/kubernetes/pki/ca.crt, which the token test above used)

root@k8s-master01:/etc/kubernetes# scp -p ./pki/{mason.crt,mason.key,magedu.crt,magedu.key} root@k8s-node03:/etc/kubernetes/pki/

root@k8s-node03's password:

## Run the access test from k8s-node03

root@k8s-node03:/# kubectl -s https://kubeapi.magedu.com:6443 --client-certificate=/etc/kubernetes/pki/mason.crt --client-key=/etc/kubernetes/pki/mason.key --certificate-authority=/etc/kubernetes/pki/ca.crt get pods

Error from server (Forbidden): pods is forbidden: User "mason" cannot list resource "pods" in API group "" in the namespace "default"

root@k8s-node03:/# kubectl -s https://kubeapi.magedu.com:6443 --client-certificate=/etc/kubernetes/pki/magedu.crt --client-key=/etc/kubernetes/pki/magedu.key --certificate-authority=/etc/kubernetes/pki/ca.crt get pods

Error from server (Forbidden): pods is forbidden: User "magedu" cannot list resource "pods" in API group "" in the namespace "default"

-

Add the credentials to a kubeconfig file and load them from there.

# Create a custom kubeconfig file for the static-token users

# Define the cluster: its name, the API server URL and the trusted CA certificate; entry names in the clusters list must be unique

root@k8s-master01:~# kubectl config set-cluster kube-test --embed-certs=true --certificate-authority=/etc/kubernetes/pki/ca.crt --server="https://kubeapi.magedu.com:6443" --kubeconfig=$HOME/.kube/kubeusers.conf

Cluster "kube-test" set.

# Define the user and its credentials; a client authenticated through the static token file only needs to supply its token

root@k8s-master01:~# kubectl config set-credentials jerry --token="091ddd.42f931a5e4939b95" --kubeconfig=$HOME/.kube/kubeusers.conf

User "jerry" set.

# Define a context that maps jerry's credentials to the kube-test cluster

root@k8s-master01:~# kubectl config set-context jerry@kube-test --cluster=kube-test --user=jerry --kubeconfig=$HOME/.kube/kubeusers.conf

Context "jerry@kube-test" created.

# Set the current context

root@k8s-master01:~# kubectl config use-context jerry@kube-test --kubeconfig=$HOME/.kube/kubeusers.conf

Switched to context "jerry@kube-test".

# Test as jerry

root@k8s-master01:~# kubectl get pod --kubeconfig=$HOME/.kube/kubeusers.conf

Error from server (Forbidden): pods is forbidden: User "jerry" cannot list resource "pods" in API group "" in the namespace "default"

# Add mason, who authenticates with an X509 client certificate, to kubeusers.conf

# Cluster: when different credentials access the same cluster, the cluster entry does not need to be defined again

# Define the user and its credentials; X509 client-certificate authentication requires the client certificate and its private key

root@k8s-master01:~# kubectl config set-credentials mason --embed-certs=true --client-certificate=/etc/kubernetes/pki/mason.crt --client-key=/etc/kubernetes/pki/mason.key --kubeconfig=$HOME/.kube/kubeusers.conf

User "mason" set.

# Define a context that maps mason's credentials to the kube-test cluster

root@k8s-master01:~# kubectl config set-context mason@kube-test --cluster=kube-test --user=mason --kubeconfig=$HOME/.kube/kubeusers.conf

Context "mason@kube-test" created.

# Set the current context

root@k8s-master01:~# kubectl config use-context mason@kube-test --kubeconfig=$HOME/.kube/kubeusers.conf

Switched to context "mason@kube-test".

# Test as mason

root@k8s-master01:~# kubectl get pod --kubeconfig=$HOME/.kube/kubeusers.conf

Error from server (Forbidden): pods is forbidden: User "mason" cannot list resource "pods" in API group "" in the namespace "default"
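After the `set-cluster`, `set-credentials` and `set-context` calls above, kubeusers.conf holds one cluster, one user entry per identity, and one context per user/cluster pair. A sketch of its approximate shape, written to a hypothetical `kubeusers.example.yaml`; every value in angle brackets is a placeholder rather than real data, and `--embed-certs=true` is what turns the file paths into base64 `*-data` fields.

```bash
#!/usr/bin/env bash
# Sketch of kubeusers.conf after the commands above. Values in <> are
# placeholders, not real credentials; the file name is hypothetical.
cat > kubeusers.example.yaml <<'EOF'
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: <base64 of /etc/kubernetes/pki/ca.crt>
    server: https://kubeapi.magedu.com:6443
  name: kube-test
contexts:
- context:
    cluster: kube-test
    user: jerry
  name: jerry@kube-test
- context:
    cluster: kube-test
    user: mason
  name: mason@kube-test
current-context: mason@kube-test
kind: Config
preferences: {}
users:
- name: jerry
  user:
    token: <jerry's static token>
- name: mason
  user:
    client-certificate-data: <base64 of mason.crt>
    client-key-data: <base64 of mason.key>
EOF
```

`kubectl config view --kubeconfig=$HOME/.kube/kubeusers.conf` prints the real file with embedded data shown as `DATA+OMITTED` or `REDACTED`; add `--raw` to see it in full.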

-

Create a ServiceAccount from a manifest file and attach an imagePullSecrets entry to it.

# Create a docker-registry Secret

root@k8s-master01:/# kubectl create secret docker-registry mydockhub --docker-username=USER --docker-password=PASSWORD -n test

secret/mydockhub created
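`kubectl create secret docker-registry` stores a single key, `.dockerconfigjson`, whose `auth` field is the base64 encoding of `username:password`. A sketch that rebuilds that payload (hypothetical function name; `USER`/`PASSWORD` are the same placeholders as above, and `https://index.docker.io/v1/` is kubectl's default `--docker-server`). It does no JSON escaping, so it assumes credentials without quotes or backslashes.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the JSON stored under .dockerconfigjson.
build_dockerconfigjson() {
  local server="$1" user="$2" pass="$3" auth
  # auth = base64("user:password"), kept on one line
  auth=$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')
  printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}\n' \
    "$server" "$user" "$pass" "$auth"
}

build_dockerconfigjson "https://index.docker.io/v1/" USER PASSWORD
# Compare with the real Secret (not executed here):
#   kubectl get secret mydockhub -n test -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d
```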

# ServiceAccount manifest

root@k8s-master01:~/learning-k8s/serviceaccount# cat mysa-serviceaccount.yaml

apiVersion: v1
kind: ServiceAccount
imagePullSecrets:
- name: mydockhub  # reference the mydockhub Secret created above
metadata:
  creationTimestamp: null
  name: mysa

# Apply the manifest to create mysa

root@k8s-master01:~/learning-k8s/serviceaccount# kubectl apply -f mysa-serviceaccount.yaml -n test

serviceaccount/mysa created

root@k8s-master01:~/learning-k8s/serviceaccount# kubectl get serviceaccount -n test

NAME SECRETS AGE

default 0 17d

mysa 0 19s

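# Reference the ServiceAccount in the Deployment through serviceAccountName; automountServiceAccountToken: true additionally mounts the ServiceAccount token into the Pod (the ServiceAccount's imagePullSecrets are added to the Pod either way)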

root@k8s-master01:~/learning-k8s/serviceaccount# cat sa-demoapp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: demoapp10
  name: demoapp10
spec:
  replicas: 1
  selector:
    matchLabels:
      app: demoapp10
  strategy: {}
  template:
    metadata:
      labels:
        app: demoapp10
    spec:
      containers:
      - image: ikubernetes/demoapp:v1.0
        name: demoapp
        resources: {}
      serviceAccountName: mysa
      automountServiceAccountToken: true
status: {}

# Apply sa-demoapp.yaml to create the Deployment

root@k8s-master01:~/learning-k8s/serviceaccount# kubectl apply -f sa-demoapp.yaml -n test

deployment.apps/demoapp10 configured

root@k8s-master01:~/learning-k8s/serviceaccount# kubectl get deployment -n test

NAME READY UP-TO-DATE AVAILABLE AGE

demoapp10 1/1 1 1 31m

# Check the Pod status: it is running normally

root@k8s-master01:~/learning-k8s/serviceaccount# kubectl get pods -n test

NAME READY STATUS RESTARTS AGE

demoapp10-5cd5f49bb-6qtn6 1/1 Running 0 36s

-

Grant tom permission to manage the blog namespace.

# Create the blog namespace

root@k8s-master01:~# kubectl create namespace blog

namespace/blog created

# Create a demoapp Deployment in the blog namespace

root@k8s-master01:~# kubectl create deployment demoapp10 --image=ikubernetes/demoapp:v1.0 -n blog

deployment.apps/demoapp10 created

# Create a Service in the blog namespace that selects the app=demoapp10 label

root@k8s-master01:~# kubectl get pods -n blog --show-labels

NAME READY STATUS RESTARTS AGE LABELS

demoapp10-795f57b569-szktv 1/1 Running 0 116s app=demoapp10,pod-template-hash=795f57b569

root@k8s-master01:~# kubectl create service clusterip demoapp10 --tcp=80:80 -n blog

service/demoapp10 created

root@k8s-master01:~# kubectl get svc -n blog --show-labels

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE LABELS

demoapp10 ClusterIP 10.105.252.99 <none> 80/TCP 34s app=demoapp10

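# Create a Role that allows every operation on the listed resource types in the blog namespace. --verb: the operations (verbs) allowed on the resources; --resource: the target resource types or subresources, written as resource[.group][/subresource]

root@k8s-master01:~# kubectl create role manager-blog-ns --verb='*' --resource=pods,deployments,daemonsets,replicasets,statefulsets,jobs,cronjobs,ingresses,events,configmaps,endpoints,services -n blog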

role.rbac.authorization.k8s.io/manager-blog-ns created

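# Grant tom the Role and set up tom's kubeconfig entries

# Define the cluster: its name, the API server URL and the trusted CA certificate; entry names in the clusters list must be unique (kube-test already exists in kubeusers.conf, so this re-sets it with the same values)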

root@k8s-master01:~# kubectl config set-cluster kube-test --embed-certs=true --certificate-authority=/etc/kubernetes/pki/ca.crt --server="https://kubeapi.magedu.com:6443" --kubeconfig=$HOME/.kube/kubeusers.conf

Cluster "kube-test" set.

# Define user tom; a client authenticated through the static token file only needs to supply its token

root@k8s-master01:~# kubectl config set-credentials tom --token="8617d8.e788f04ff704665c" --kubeconfig=$HOME/.kube/kubeusers.conf

User "tom" set.

# Bind the manager-blog-ns Role to user tom in the blog namespace

root@k8s-master01:~# kubectl create rolebinding tom-as-manage-blog-ns --role=manager-blog-ns --user=tom -n blog

rolebinding.rbac.authorization.k8s.io/tom-as-manage-blog-ns created
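The imperative `kubectl create role` and `kubectl create rolebinding` commands above can also be kept as a manifest. A sketch of a declarative equivalent written to a hypothetical `rbac-blog-tom.yaml`; grouping the resources by API group (core `""`, `apps`, `batch`, `networking.k8s.io`) is an assumption about how kubectl resolves those names, so compare it with `kubectl get role manager-blog-ns -n blog -o yaml` before relying on it.

```bash
#!/usr/bin/env bash
# Sketch: declarative Role + RoleBinding for tom in the blog namespace.
# The apiGroups split is an assumption; the file name is hypothetical.
cat > rbac-blog-tom.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: manager-blog-ns
  namespace: blog
rules:
- apiGroups: [""]
  resources: ["pods", "events", "configmaps", "endpoints", "services"]
  verbs: ["*"]
- apiGroups: ["apps"]
  resources: ["deployments", "daemonsets", "replicasets", "statefulsets"]
  verbs: ["*"]
- apiGroups: ["batch"]
  resources: ["jobs", "cronjobs"]
  verbs: ["*"]
- apiGroups: ["networking.k8s.io"]
  resources: ["ingresses"]
  verbs: ["*"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: tom-as-manage-blog-ns
  namespace: blog
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: manager-blog-ns
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: tom
EOF

# Apply it on the master (not executed here):
#   kubectl apply -f rbac-blog-tom.yaml
```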

# Define a context that maps tom's credentials to the kube-test cluster

root@k8s-master01:~# kubectl config set-context tom@kube-test --cluster=kube-test --user=tom --kubeconfig=$HOME/.kube/kubeusers.conf

Context "tom@kube-test" created.

# Switch the current context to tom and verify

root@k8s-master01:~# kubectl config use-context tom@kube-test --kubeconfig=$HOME/.kube/kubeusers.conf

Switched to context "tom@kube-test".

root@k8s-master01:~# kubectl get pods -n blog --kubeconfig=$HOME/.kube/kubeusers.conf

NAME READY STATUS RESTARTS AGE

demoapp10-795f57b569-szktv 1/1 Running 0 37m

root@k8s-master01:~# kubectl get pods,svc -n blog --kubeconfig=$HOME/.kube/kubeusers.conf

NAME READY STATUS RESTARTS AGE

pod/demoapp10-795f57b569-szktv 1/1 Running 0 40m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/demoapp10 ClusterIP 10.105.252.99 <none> 80/TCP 38m

# Listing resources in the default namespace is forbidden: tom has no permissions there

root@k8s-master01:~# kubectl get pods,svc --kubeconfig=$HOME/.kube/kubeusers.conf

Error from server (Forbidden): pods is forbidden: User "tom" cannot list resource "pods" in API group "" in the namespace "default"

Error from server (Forbidden): services is forbidden: User "tom" cannot list resource "services" in API group "" in the namespace "default"

# Verify that tom can delete resources in the blog namespace

root@k8s-master01:~# kubectl delete deployment demoapp10 -n blog --kubeconfig=$HOME/.kube/kubeusers.conf

deployment.apps "demoapp10" deleted

root@k8s-master01:~# kubectl delete svc demoapp10 -n blog --kubeconfig=$HOME/.kube/kubeusers.conf

service "demoapp10" deleted

-

Grant jerry permission to manage the entire cluster.

# Cluster-wide permissions require a ClusterRoleBinding

# List the ClusterRoles (output abridged)

root@k8s-master01:~# kubectl get clusterrole

NAME CREATED AT

admin 2023-02-02T14:59:01Z

cluster-admin 2023-02-02T14:59:01Z

edit 2023-02-02T14:59:01Z

flannel 2023-02-02T15:07:32Z

kubeadm:get-nodes 2023-02-02T14:59:03Z

mysql-operator 2023-02-25T02:08:14Z

# Create a ClusterRoleBinding that binds jerry to the cluster-admin ClusterRole

root@k8s-master01:~# kubectl create clusterrolebinding jerry--as-cluster-admin --clusterrole=cluster-admin --user=jerry

clusterrolebinding.rbac.authorization.k8s.io/jerry--as-cluster-admin created
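Unlike a RoleBinding, a ClusterRoleBinding has no namespace and grants the referenced ClusterRole's permissions across the whole cluster. A sketch of a hypothetical generator that prints the manifest matching `kubectl create clusterrolebinding NAME --clusterrole=ROLE --user=USER`; the same shape covers mason's `view` binding below. `kubectl create clusterrolebinding ... --dry-run=client -o yaml` produces an equivalent manifest directly.

```bash
#!/usr/bin/env bash
# Hypothetical generator: print a ClusterRoleBinding manifest for one user.
clusterrolebinding_yaml() {
  local name="$1" role="$2" user="$3"
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ${name}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ${role}
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: ${user}
EOF
}

clusterrolebinding_yaml jerry--as-cluster-admin cluster-admin jerry
# Apply on the master (not executed here):
#   clusterrolebinding_yaml mason-as-cluster-view view mason | kubectl apply -f -
```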

# Switch the current context to jerry

root@k8s-master01:~# kubectl config use-context jerry@kube-test --kubeconfig=$HOME/.kube/kubeusers.conf

Switched to context "jerry@kube-test".

# Verify: list Pods in all namespaces

root@k8s-master01:~# kubectl get pod -A --kubeconfig=$HOME/.kube/kubeusers.conf

NAMESPACE NAME READY STATUS RESTARTS AGE

demodb demodb-0 0/1 Error 4 19d

demodb demodb-1 0/1 Error 4 19d

kube-flannel kube-flannel-ds-7bk7c 1/1 Running 30 (178m ago) 40d

kube-flannel kube-flannel-ds-jq7jp 1/1 Running 30 (178m ago) 40d

kube-flannel kube-flannel-ds-jvg2j 1/1 Running 35 (178m ago) 39d

kube-flannel kube-flannel-ds-rpzsj 1/1 Running 33 (11h ago) 40d

kube-system coredns-5bbd96d687-jl7cq 1/1 Running 30 (11h ago) 40d

kube-system coredns-5bbd96d687-r7jhr 1/1 Running 30 (11h ago) 40d

kube-system csi-nfs-controller-67dd4499b5-tz22p 3/3 Running 67 (178m ago) 31d

kube-system csi-nfs-node-5wvvn 3/3 Running 66 (11h ago) 31d

kube-system csi-nfs-node-dbt99 3/3 Running 3 (178m ago) 12h

kube-system csi-nfs-node-jf5bs 3/3 Running 66 (11h ago) 31d

kube-system csi-nfs-node-pltmn 3/3 Running 66 (178m ago) 31d

kube-system etcd-k8s-master01 1/1 Running 30 (11h ago) 40d

kube-system etcd-k8s-node01 1/1 Running 30 (11h ago) 39d

kube-system kube-apiserver-k8s-master01 1/1 Running 1 (178m ago) 21h

kube-system kube-apiserver-k8s-node01 1/1 Running 30 (178m ago) 39d

kube-system kube-controller-manager-k8s-master01 1/1 Running 38 (178m ago) 40d

kube-system kube-controller-manager-k8s-node01 1/1 Running 31 (178m ago) 39d

kube-system kube-proxy-fcqkv 1/1 Running 30 (11h ago) 40d

kube-system kube-proxy-p7jbn 1/1 Running 29 (11h ago) 39d

kube-system kube-proxy-ql72g 1/1 Running 30 (178m ago) 40d

kube-system kube-proxy-v8txv 1/1 Running 30 (11h ago) 40d

kube-system kube-scheduler-k8s-master01 1/1 Running 33 (178m ago) 40d

kube-system kube-scheduler-k8s-node01 1/1 Running 32 (178m ago) 39d

mysql-operator mysql-cluster01-0 0/2 Init:0/3 0 10d

mysql-operator mysql-cluster01-1 0/2 Init:0/3 0 13d

mysql-operator mysql-cluster01-2 0/2 Init:0/3 0 2d12h

nfs nfs-server-857f859f57-z9lkh 1/1 Running 8 (11h ago) 15d

nfs nfs-server-857f859f57-zjqsc 0/1 Completed 14 (16d ago) 31d

ns-job nginx-5b9c7b4c8f-2nzst 1/1 Running 3 (11h ago) 10d

ns-job nginx-5b9c7b4c8f-6zdrw 1/1 Running 3 (178m ago) 10d

ns-job nginx-5b9c7b4c8f-9tggd 0/1 Completed 8 11d

ns-job nginx-5b9c7b4c8f-bsrkg 0/1 Completed 8 (11d ago) 11d

ns-job nginx-5b9c7b4c8f-cvn99 1/1 Running 3 (178m ago) 10d

ns-job nginx-5b9c7b4c8f-dwj5b 0/1 Completed 8 (11d ago) 11d

ns-job nginx-5b9c7b4c8f-tdzfl 1/1 Running 11 (11h ago) 11d

ns-job nginx-5b9c7b4c8f-vhg4w 1/1 Running 11 (11h ago) 11d

ns-job wordpress-664cfb496b-m7w7s 1/1 Running 3 (11h ago) 10d

ns-job wordpress-664cfb496b-q7nbx 0/1 Completed 0 10d

ns-job wordpress-664cfb496b-sbdg2 1/1 Running 3 (11h ago) 10d

ns-job wordpress-664cfb496b-sq299 1/1 Running 3 (11h ago) 10d

prom daemonset-demo-d446v 1/1 Running 13 (11h ago) 20d

prom daemonset-demo-ffnsq 1/1 Running 13 (11h ago) 20d

prom daemonset-demo-zjqzb 1/1 Running 1 (178m ago) 12h

test demoapp10-5cd5f49bb-6qtn6 1/1 Running 1 (178m ago) 12h

# Verify: create a Deployment

root@k8s-master01:~# kubectl create deployment demoapp10 --image=ikubernetes/demoapp:v1.0 --kubeconfig=$HOME/.kube/kubeusers.conf

deployment.apps/demoapp10 created

root@k8s-master01:~# kubectl get deployment --kubeconfig=$HOME/.kube/kubeusers.conf

NAME READY UP-TO-DATE AVAILABLE AGE

demoapp10 1/1 1 1 29s

-

Grant mason read access to cluster resources.

# Create a ClusterRoleBinding that binds mason to the view ClusterRole

root@k8s-master01:~# kubectl create clusterrolebinding mason-as-cluster-view --clusterrole=view --user=mason

clusterrolebinding.rbac.authorization.k8s.io/mason-as-cluster-view created

# Switch the current context to mason

root@k8s-master01:~# kubectl config use-context mason@kube-test --kubeconfig=$HOME/.kube/kubeusers.conf

Switched to context "mason@kube-test".

# Verify: listing Pods in all namespaces works

root@k8s-master01:~# kubectl get pod -A --kubeconfig=$HOME/.kube/kubeusers.conf

NAMESPACE NAME READY STATUS RESTARTS AGE

default demoapp10-795f57b569-m5qz2 1/1 Running 0 4m7s

demodb demodb-0 0/1 Error 4 19d

demodb demodb-1 0/1 Error 4 19d

kube-flannel kube-flannel-ds-7bk7c 1/1 Running 30 (3h6m ago) 40d

kube-flannel kube-flannel-ds-jq7jp 1/1 Running 30 (3h6m ago) 40d

kube-flannel kube-flannel-ds-jvg2j 1/1 Running 35 (3h6m ago) 39d

kube-flannel kube-flannel-ds-rpzsj 1/1 Running 33 (11h ago) 40d

kube-system coredns-5bbd96d687-jl7cq 1/1 Running 30 (11h ago) 40d

kube-system coredns-5bbd96d687-r7jhr 1/1 Running 30 (11h ago) 40d

kube-system csi-nfs-controller-67dd4499b5-tz22p 3/3 Running 67 (3h6m ago) 31d

kube-system csi-nfs-node-5wvvn 3/3 Running 66 (11h ago) 31d

kube-system csi-nfs-node-dbt99 3/3 Running 3 (3h6m ago) 13h

kube-system csi-nfs-node-jf5bs 3/3 Running 66 (11h ago) 31d

kube-system csi-nfs-node-pltmn 3/3 Running 66 (3h6m ago) 31d

kube-system etcd-k8s-master01 1/1 Running 30 (11h ago) 40d

kube-system etcd-k8s-node01 1/1 Running 30 (11h ago) 39d

kube-system kube-apiserver-k8s-master01 1/1 Running 1 (3h6m ago) 21h

kube-system kube-apiserver-k8s-node01 1/1 Running 30 (3h6m ago) 39d

kube-system kube-controller-manager-k8s-master01 1/1 Running 38 (3h6m ago) 40d

kube-system kube-controller-manager-k8s-node01 1/1 Running 31 (3h6m ago) 39d

kube-system kube-proxy-fcqkv 1/1 Running 30 (11h ago) 40d

kube-system kube-proxy-p7jbn 1/1 Running 29 (11h ago) 39d

kube-system kube-proxy-ql72g 1/1 Running 30 (3h6m ago) 40d

kube-system kube-proxy-v8txv 1/1 Running 30 (11h ago) 40d

kube-system kube-scheduler-k8s-master01 1/1 Running 33 (3h6m ago) 40d

kube-system kube-scheduler-k8s-node01 1/1 Running 32 (3h6m ago) 39d

mysql-operator mysql-cluster01-0 0/2 Init:0/3 0 10d

mysql-operator mysql-cluster01-1 0/2 Init:0/3 0 13d

mysql-operator mysql-cluster01-2 0/2 Init:0/3 0 2d12h

nfs nfs-server-857f859f57-z9lkh 1/1 Running 8 (11h ago) 15d

nfs nfs-server-857f859f57-zjqsc 0/1 Completed 14 (16d ago) 31d

ns-job nginx-5b9c7b4c8f-2nzst 1/1 Running 3 (11h ago) 10d

ns-job nginx-5b9c7b4c8f-6zdrw 1/1 Running 3 (3h6m ago) 10d

ns-job nginx-5b9c7b4c8f-9tggd 0/1 Completed 8 11d

ns-job nginx-5b9c7b4c8f-bsrkg 0/1 Completed 8 (11d ago) 11d

ns-job nginx-5b9c7b4c8f-cvn99 1/1 Running 3 (3h6m ago) 10d

ns-job nginx-5b9c7b4c8f-dwj5b 0/1 Completed 8 (11d ago) 11d

ns-job nginx-5b9c7b4c8f-tdzfl 1/1 Running 11 (11h ago) 11d

ns-job nginx-5b9c7b4c8f-vhg4w 1/1 Running 11 (11h ago) 11d

ns-job wordpress-664cfb496b-m7w7s 1/1 Running 3 (11h ago) 10d

ns-job wordpress-664cfb496b-q7nbx 0/1 Completed 0 10d

ns-job wordpress-664cfb496b-sbdg2 1/1 Running 3 (11h ago) 10d

ns-job wordpress-664cfb496b-sq299 1/1 Running 3 (11h ago) 10d

prom daemonset-demo-d446v 1/1 Running 13 (11h ago) 20d

prom daemonset-demo-ffnsq 1/1 Running 13 (11h ago) 20d

prom daemonset-demo-zjqzb 1/1 Running 1 (3h6m ago) 12h

test demoapp10-5cd5f49bb-6qtn6 1/1 Running 1 (3h6m ago) 12h

# Verify: creating a Deployment is denied, because view grants read-only access

root@k8s-master01:~# kubectl create deployment demoapp10 --image=ikubernetes/demoapp:v1.0 --kubeconfig=$HOME/.kube/kubeusers.conf

error: failed to create deployment: deployments.apps is forbidden: User "mason" cannot create resource "deployments" in API group "apps" in the namespace "default"

-

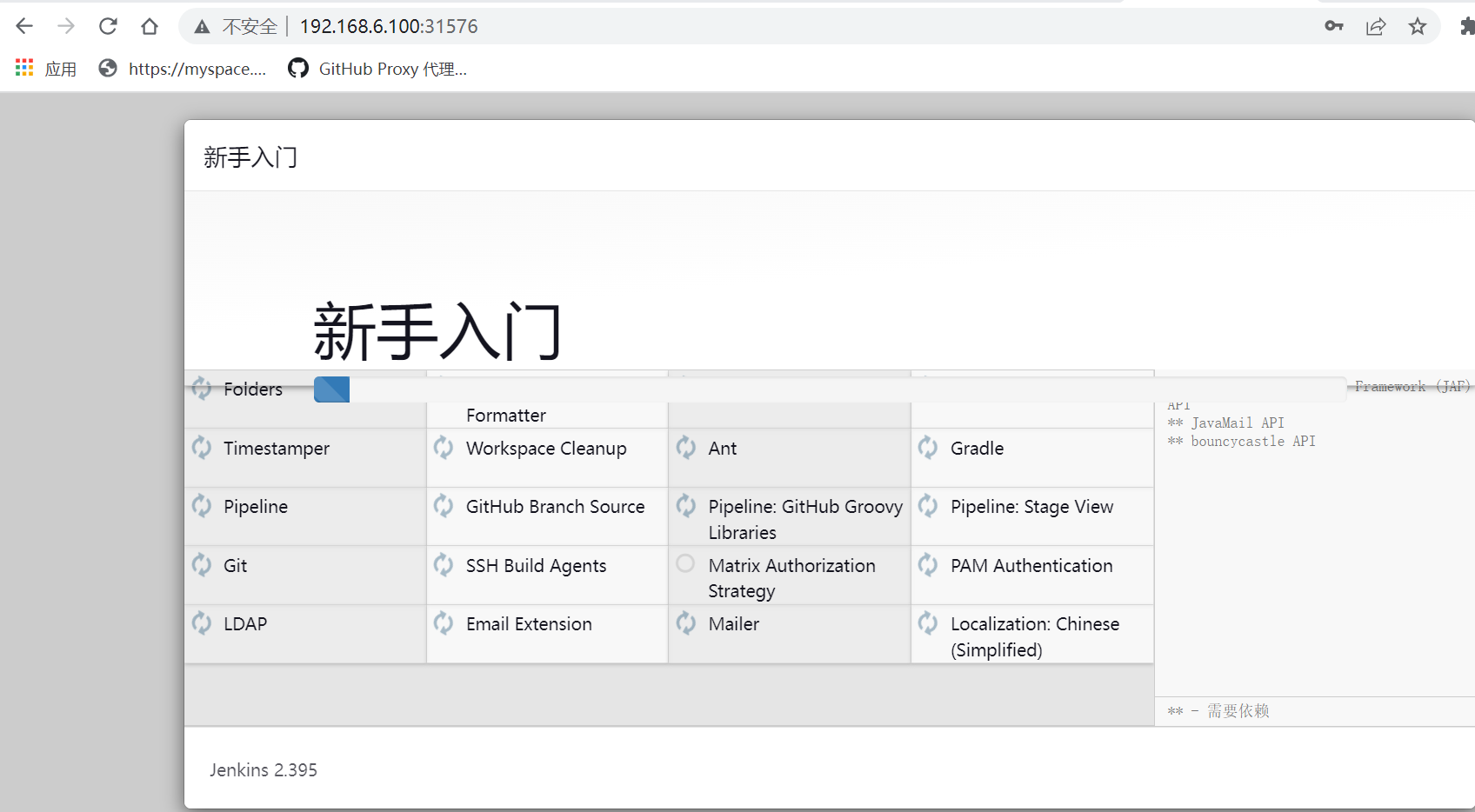

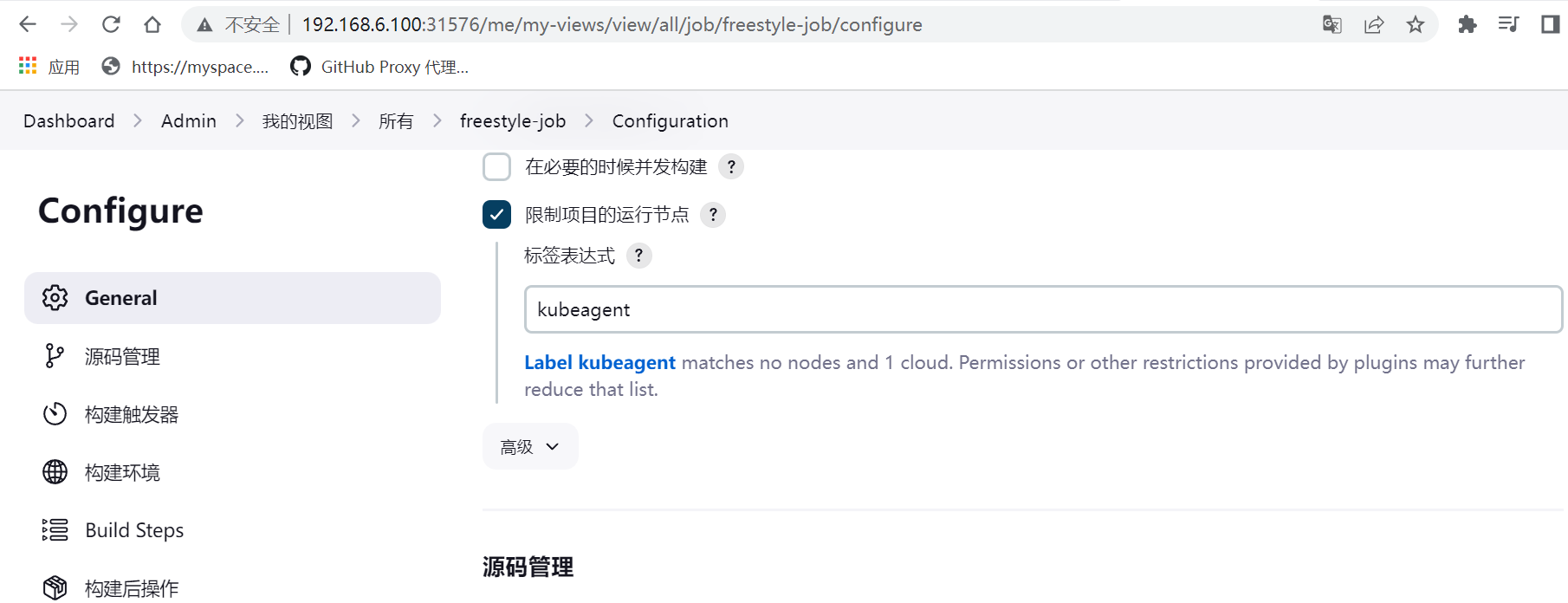

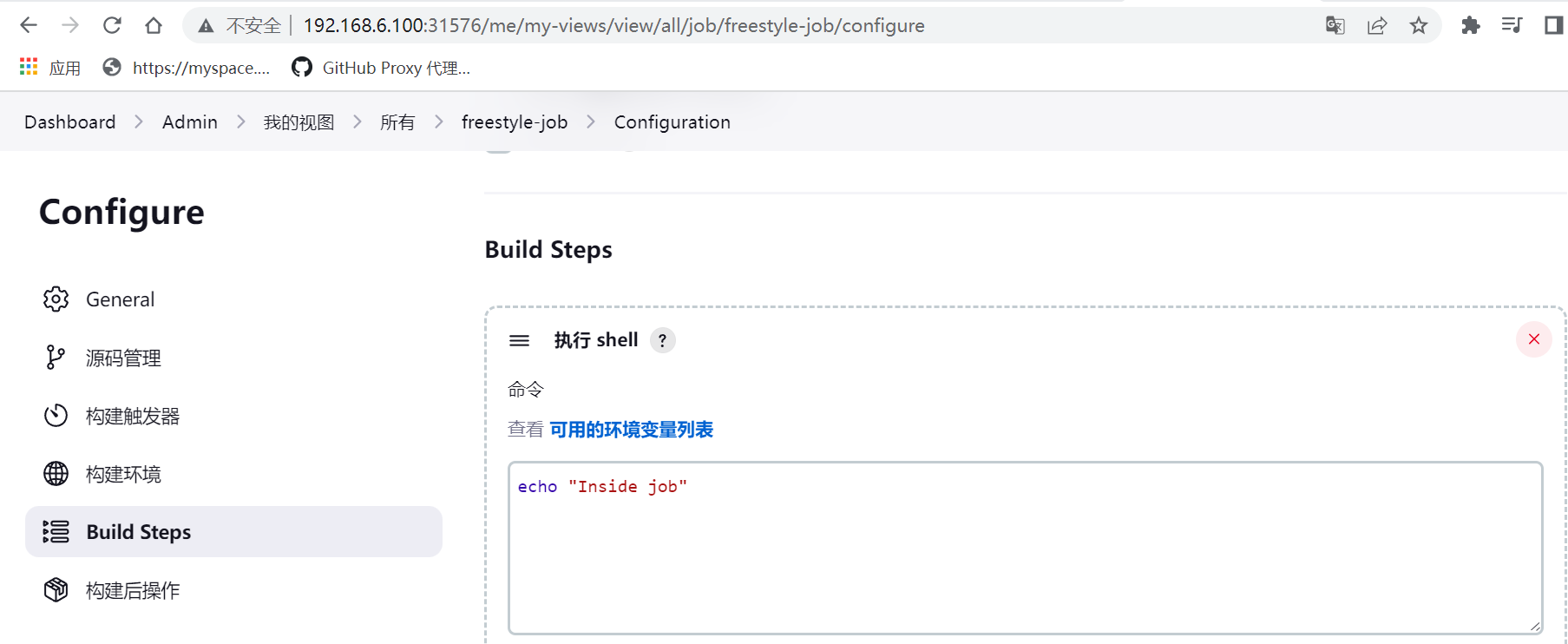

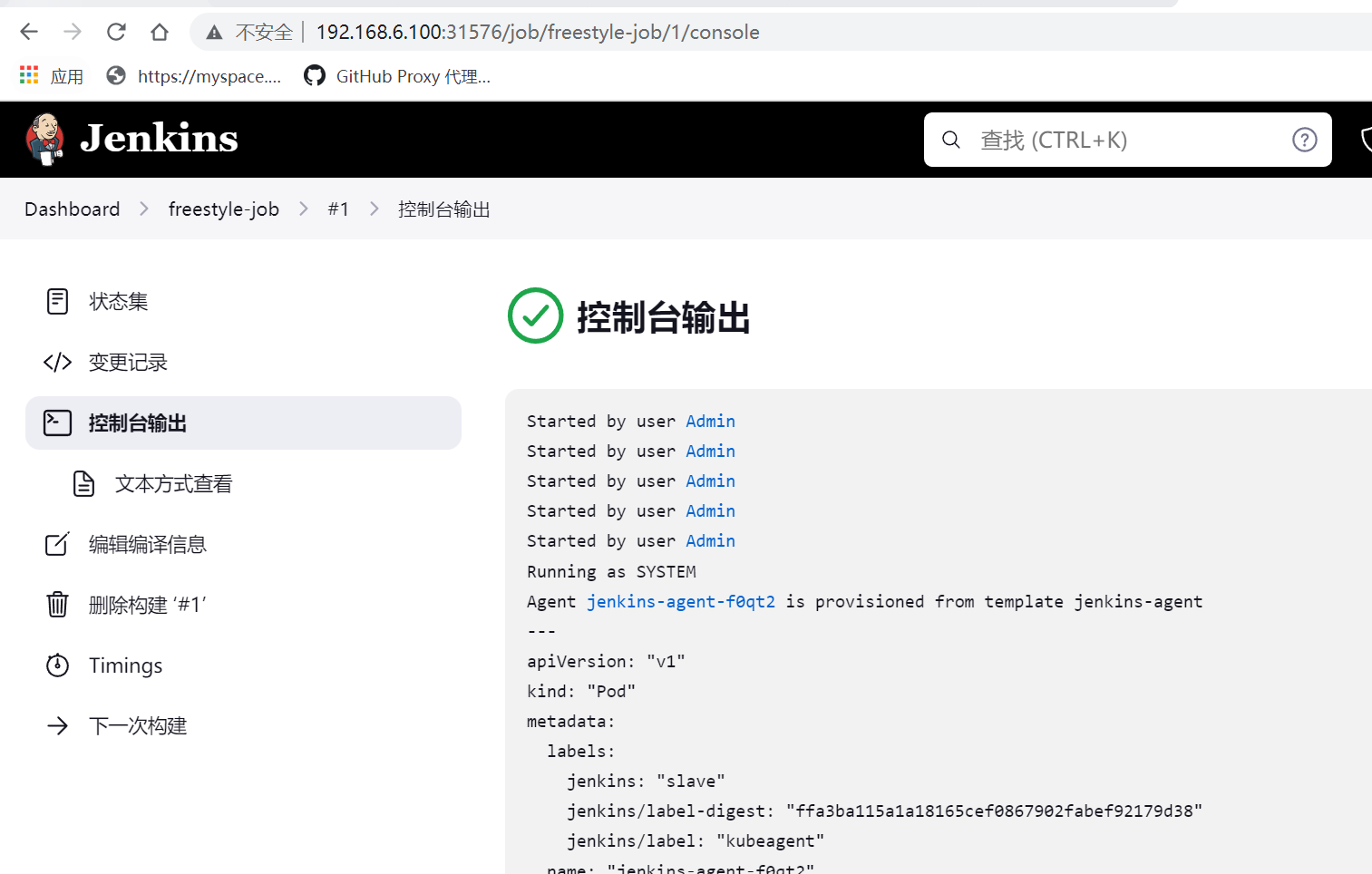

Deploy Jenkins to the Kubernetes cluster.

# In the learning-k8s/jenkins/deploy directory, create the Jenkins resources

root@k8s-master01:~/learning-k8s/jenkins/deploy# kubectl apply -f 01-namespace-jenkins.yaml -f 02-pvc-jenkins.yaml -f 03-rbac-jenkins.yaml -f 04-deploy-jenkins.yaml -f 05-service-jenkins.yaml -f 06-pvc-maven-cache.yaml

namespace/jenkins created

persistentvolumeclaim/jenkins-pvc created

serviceaccount/jenkins-master created

clusterrole.rbac.authorization.k8s.io/jenkins-master created

clusterrolebinding.rbac.authorization.k8s.io/jenkins-master created

deployment.apps/jenkins created

service/jenkins created

service/jenkins-jnlp created

persistentvolumeclaim/pvc-maven-cache created

# Check the created Jenkins resources

root@k8s-master01:~/learning-k8s/jenkins/deploy# kubectl get all -n jenkins

NAME READY STATUS RESTARTS AGE

pod/jenkins-6c5f8665c8-4mxnc 0/1 Error 0 15m

pod/jenkins-6c5f8665c8-wbpf4 0/1 Error 0 16m

pod/jenkins-6c5f8665c8-zx9vr 1/1 Running 0 14m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/jenkins NodePort 10.100.222.203 <none> 8080:31576/TCP 16m

service/jenkins-jnlp ClusterIP 10.111.224.234 <none> 50000/TCP 16m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/jenkins 1/1 1 1 16m

NAME DESIRED CURRENT READY AGE

replicaset.apps/jenkins-6c5f8665c8 1 1 1 16m

# View the Jenkins logs; they contain the initial admin password needed for the first login

root@k8s-master01:~/learning-k8s/jenkins/deploy# kubectl logs jenkins-6c5f8665c8-zx9vr -n jenkins

VM settings:

Max. Heap Size (Estimated): 976.00M

Using VM: OpenJDK 64-Bit Server VM

Running from: /usr/share/jenkins/jenkins.war

webroot: /var/jenkins_home/war

2023-03-15 03:22:54.056+0000 [id=1] INFO winstone.Logger#logInternal: Beginning extraction from war file

2023-03-15 03:22:54.322+0000 [id=1] WARNING o.e.j.s.handler.ContextHandler#setContextPath: Empty contextPath

2023-03-15 03:22:54.444+0000 [id=1] INFO org.eclipse.jetty.server.Server#doStart: jetty-10.0.13; built: 2022-12-07T20:13:20.134Z; git: 1c2636ea05c0ca8de1ffd6ca7f3a98ac084c766d; jvm 11.0.18+10

2023-03-15 03:22:55.452+0000 [id=1] INFO o.e.j.w.StandardDescriptorProcessor#visitServlet: NO JSP Support for /, did not find org.eclipse.jetty.jsp.JettyJspServlet

2023-03-15 03:22:55.640+0000 [id=1] INFO o.e.j.s.s.DefaultSessionIdManager#doStart: Session workerName=node0

2023-03-15 03:22:57.155+0000 [id=1] INFO hudson.WebAppMain#contextInitialized: Jenkins home directory: /var/jenkins_home found at: EnvVars.masterEnvVars.get("JENKINS_HOME")

2023-03-15 03:22:57.598+0000 [id=1] INFO o.e.j.s.handler.ContextHandler#doStart: Started w.@29a23c3d{Jenkins v2.395,/,file:///var/jenkins_home/war/,AVAILABLE}{/var/jenkins_home/war}

2023-03-15 03:22:57.645+0000 [id=1] INFO o.e.j.server.AbstractConnector#doStart: Started ServerConnector@1757cd72{HTTP/1.1, (http/1.1)}{0.0.0.0:8080}

2023-03-15 03:22:57.685+0000 [id=1] INFO org.eclipse.jetty.server.Server#doStart: Started Server@dd0c991{STARTING}[10.0.13,sto=0] @4733ms

2023-03-15 03:22:57.694+0000 [id=23] INFO winstone.Logger#logInternal: Winstone Servlet Engine running: controlPort=disabled

2023-03-15 03:22:58.575+0000 [id=30] INFO jenkins.InitReactorRunner$1#onAttained: Started initialization

2023-03-15 03:22:58.798+0000 [id=31] INFO jenkins.InitReactorRunner$1#onAttained: Listed all plugins

2023-03-15 03:23:01.009+0000 [id=30] INFO jenkins.InitReactorRunner$1#onAttained: Prepared all plugins

2023-03-15 03:23:01.028+0000 [id=31] INFO jenkins.InitReactorRunner$1#onAttained: Started all plugins

2023-03-15 03:23:01.052+0000 [id=28] INFO jenkins.InitReactorRunner$1#onAttained: Augmented all extensions

2023-03-15 03:23:02.024+0000 [id=29] INFO jenkins.InitReactorRunner$1#onAttained: System config loaded

2023-03-15 03:23:02.025+0000 [id=28] INFO jenkins.InitReactorRunner$1#onAttained: System config adapted

2023-03-15 03:23:02.027+0000 [id=28] INFO jenkins.InitReactorRunner$1#onAttained: Loaded all jobs

2023-03-15 03:23:02.030+0000 [id=28] INFO jenkins.InitReactorRunner$1#onAttained: Configuration for all jobs updated

2023-03-15 03:23:02.208+0000 [id=29] INFO jenkins.install.SetupWizard#init:

*************************************************************

*************************************************************

*************************************************************

Jenkins initial setup is required. An admin user has been created and a password generated.

Please use the following password to proceed to installation:

9c50352482004252a3c603fef2e7a688

This may also be found at: /var/jenkins_home/secrets/initialAdminPassword

*************************************************************

*************************************************************

*************************************************************

2023-03-15 03:23:02.275+0000 [id=44] INFO hudson.util.Retrier#start: Attempt #1 to do the action check updates server

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by org.codehaus.groovy.vmplugin.v7.Java7$1 (file:/var/jenkins_home/war/WEB-INF/lib/groovy-all-2.4.21.jar) to constructor java.lang.invoke.MethodHandles$Lookup(java.lang.Class,int)

WARNING: Please consider reporting this to the maintainers of org.codehaus.groovy.vmplugin.v7.Java7$1

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations

WARNING: All illegal access operations will be denied in a future release

2023-03-15 03:23:37.194+0000 [id=28] INFO jenkins.InitReactorRunner$1#onAttained: Completed initialization

2023-03-15 03:23:37.311+0000 [id=22] INFO hudson.lifecycle.Lifecycle#onReady: Jenkins is fully up and running

2023-03-15 03:23:37.411+0000 [id=44] INFO h.m.DownloadService$Downloadable#load: Obtained the updated data file for hudson.tasks.Maven.MavenInstaller

2023-03-15 03:23:37.412+0000 [id=44] INFO hudson.util.Retrier#start: Performed the action check updates server successfully at the attempt #1
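The initial admin password is the line of 32 hex characters in the startup log. A sketch of a hypothetical filter that pulls it out of the log stream; reading `/var/jenkins_home/secrets/initialAdminPassword` inside the Pod, the path named in the log, is the more direct route.

```bash
#!/usr/bin/env bash
# Hypothetical filter: print the first line of stdin that consists solely of
# 32 lowercase hex characters (the Jenkins initial admin password line).
extract_jenkins_password() {
  grep -Eo '^[0-9a-f]{32}$' | head -n 1
}

# Usage on the master (not executed here):
#   kubectl logs deploy/jenkins -n jenkins | extract_jenkins_password
#   kubectl exec -n jenkins deploy/jenkins -- cat /var/jenkins_home/secrets/initialAdminPassword
```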

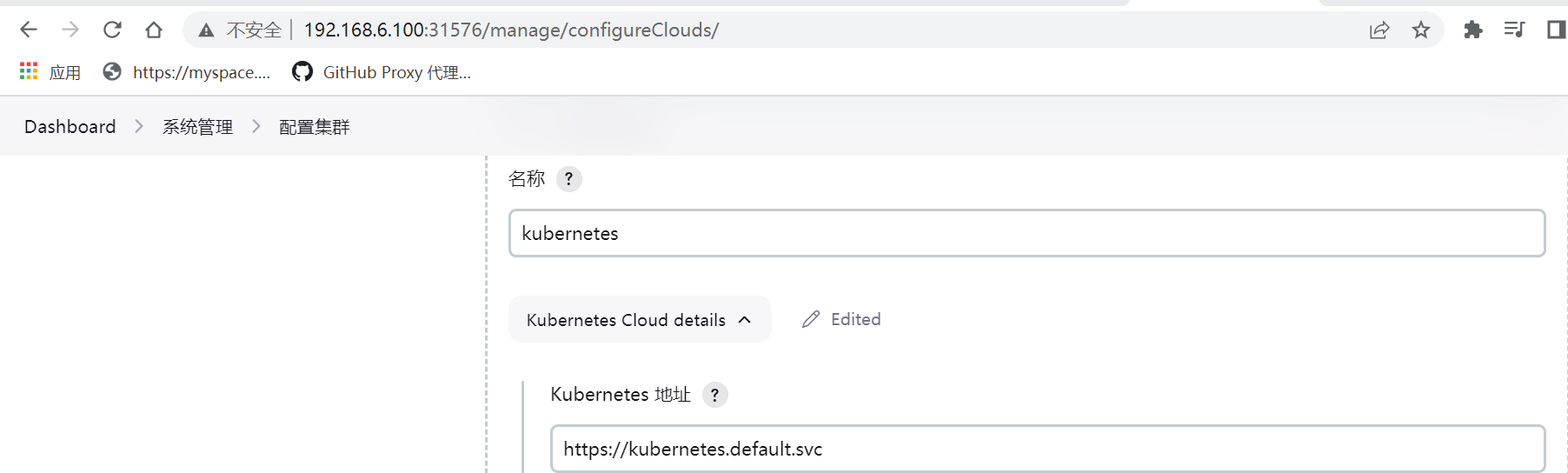

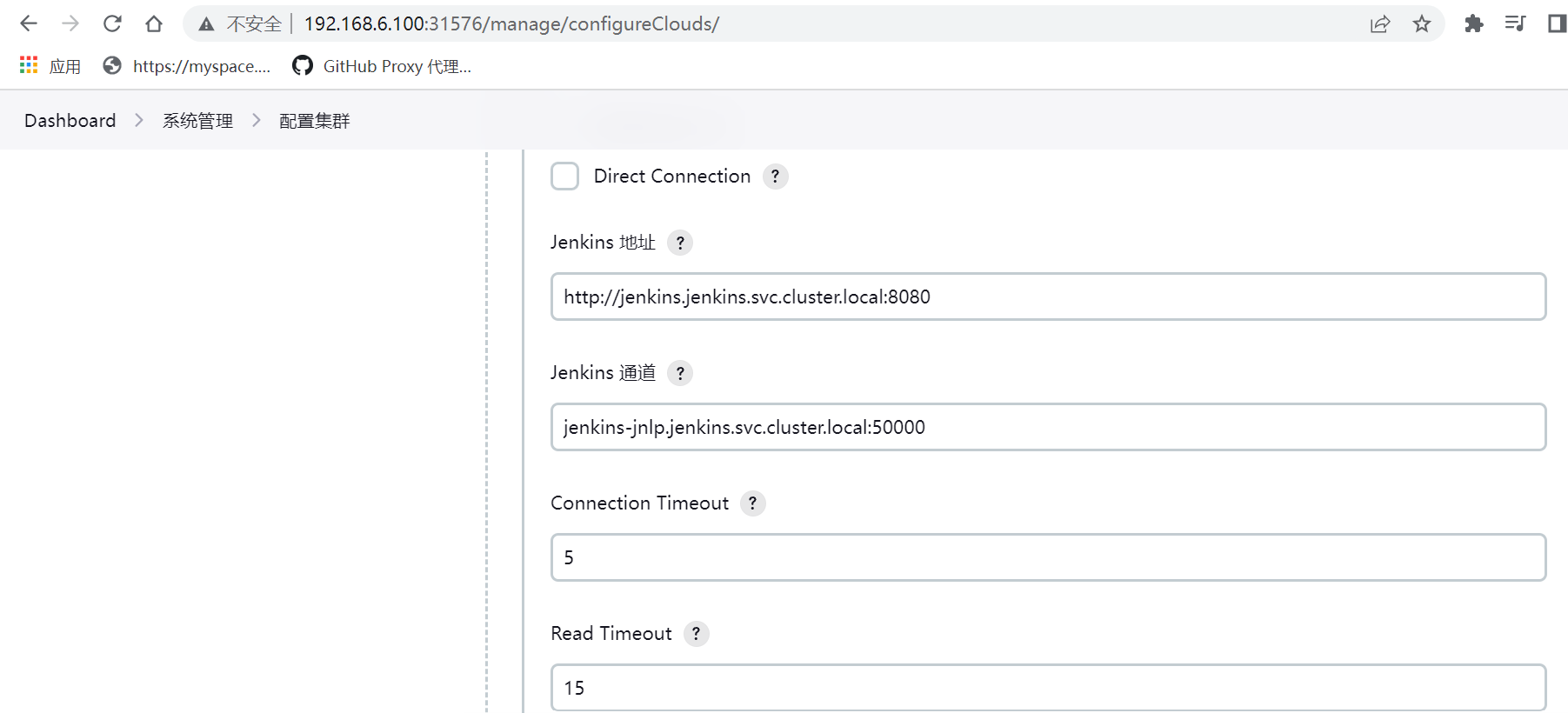

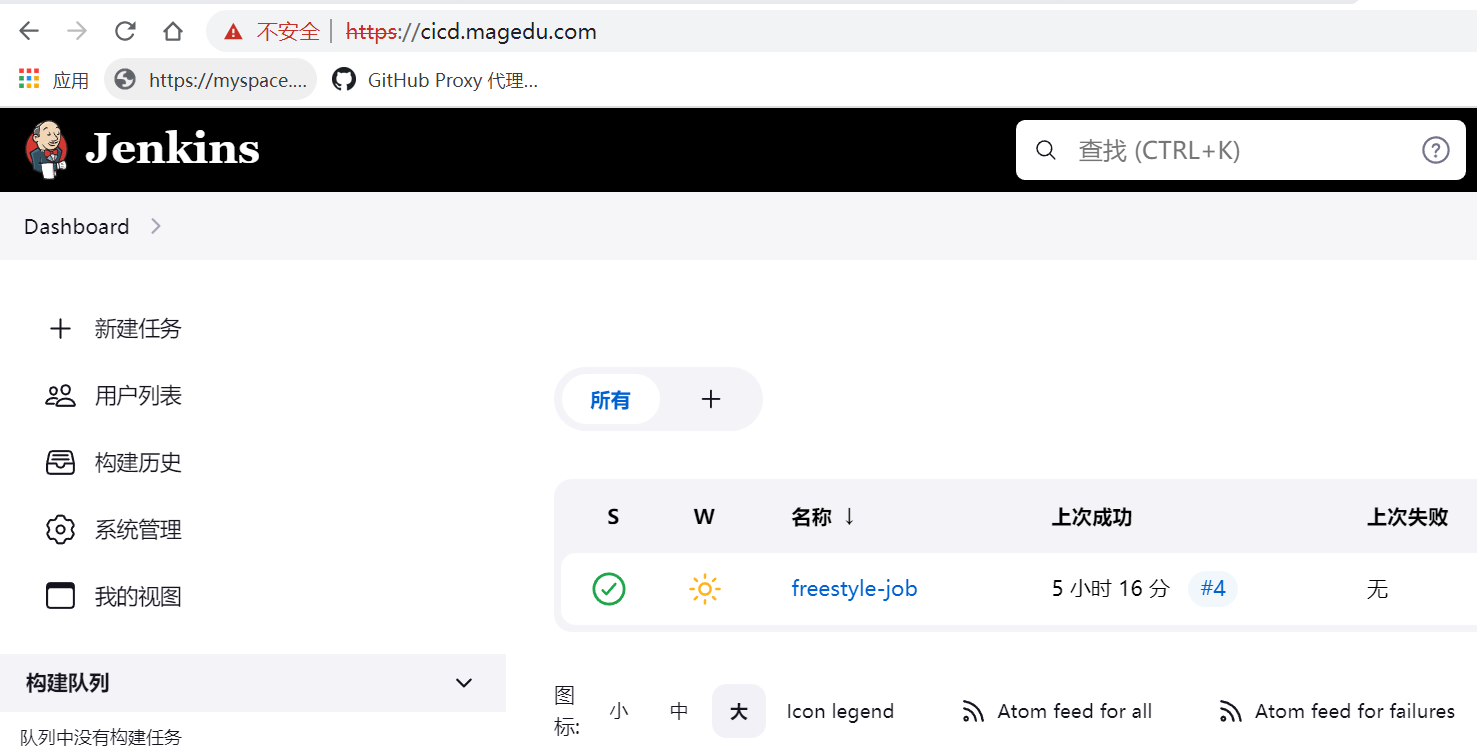

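# Log in to Jenkins, then install and configure the Kubernetes plugin

# Watch the jenkins namespace on the Kubernetes master: an agent Pod is created to run each Jenkins job and is terminated when the job finishes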

root@k8s-master01:~# kubectl get pods -n jenkins -w

NAME READY STATUS RESTARTS AGE

jenkins-6c5f8665c8-npvhv 1/1 Running 0 3h20m

jenkins-agent-d7x2f 0/1 Pending 0 0s

jenkins-agent-d7x2f 0/1 Pending 0 0s

jenkins-agent-d7x2f 0/1 ContainerCreating 0 0s

jenkins-agent-d7x2f 1/1 Running 0 2s

jenkins-agent-d7x2f 1/1 Terminating 0 9s

jenkins-agent-d7x2f 0/1 Terminating 0 10s

jenkins-agent-d7x2f 0/1 Terminating 0 10s

jenkins-agent-d7x2f 0/1 Terminating 0 10s

jenkins-agent-w7gzq 0/1 Pending 0 0s

jenkins-agent-w7gzq 0/1 Pending 0 0s

jenkins-agent-w7gzq 0/1 ContainerCreating 0 0s

jenkins-agent-w7gzq 1/1 Running 0 2s

jenkins-agent-w7gzq 1/1 Terminating 0 8s

-

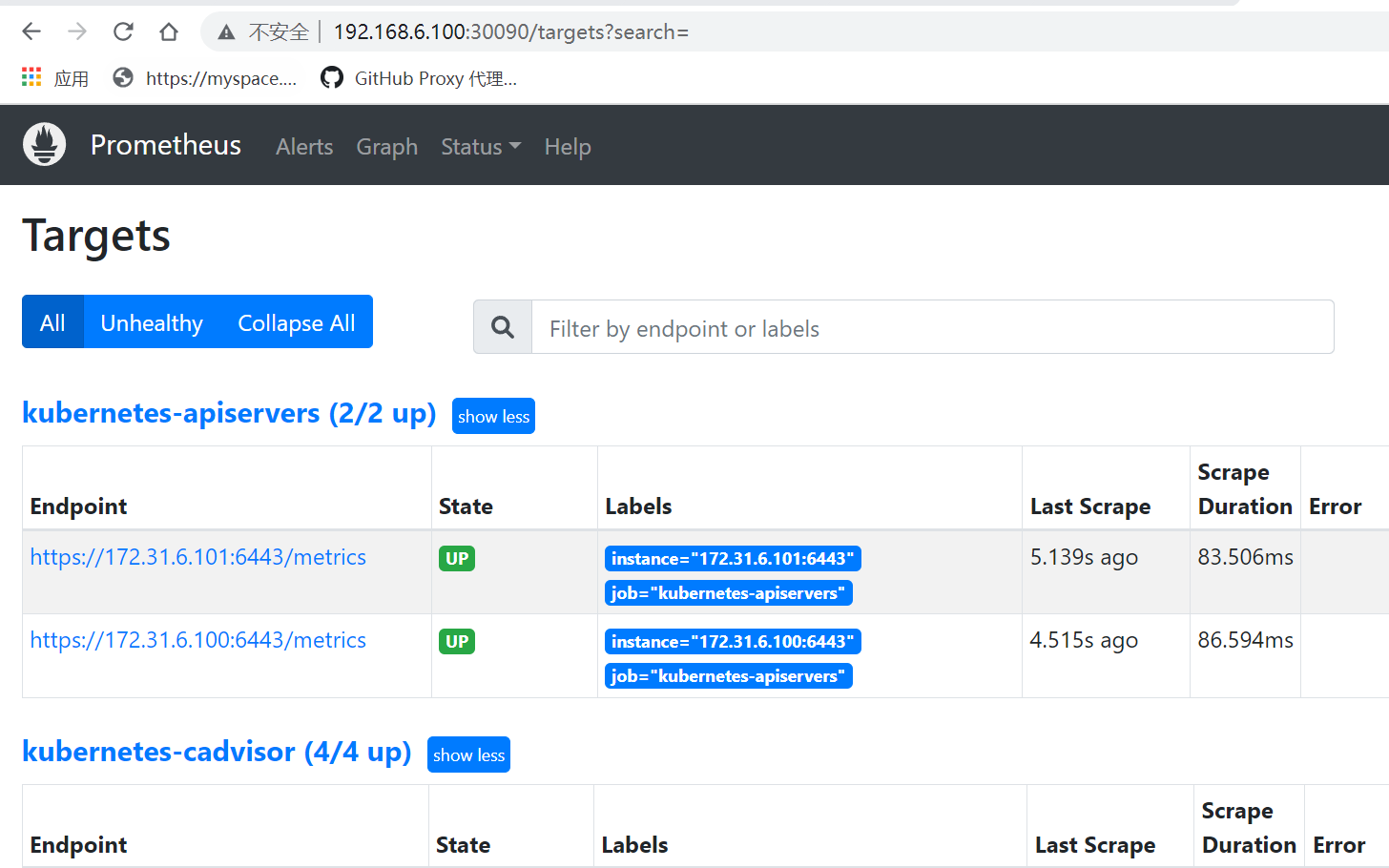

Deploy Prometheus Server to the Kubernetes cluster.

# Clone the Prometheus manifests

root@k8s-master01:~# git clone https://github.com/iKubernetes/k8s-prom.git

Cloning into 'k8s-prom'...

remote: Enumerating objects: 89, done.

remote: Counting objects: 100% (40/40), done.

remote: Compressing objects: 100% (29/29), done.

remote: Total 89 (delta 18), reused 29 (delta 11), pack-reused 49

Unpacking objects: 100% (89/89), 20.76 KiB | 386.00 KiB/s, done.

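# Create the Prometheus resources (the prom namespace already exists in this cluster, see the Pod listings above; otherwise create it first, e.g. from the repository's namespace.yaml)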

root@k8s-master01:~/k8s-prom# ls

k8s-prometheus-adapter kube-state-metrics namespace.yaml node_exporter podinfo prometheus prometheus-ingress README.md

root@k8s-master01:~/k8s-prom# kubectl apply -f prometheus

configmap/prometheus-config created

deployment.apps/prometheus-server created

clusterrole.rbac.authorization.k8s.io/prometheus created

serviceaccount/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created

service/prometheus created

# Check the Prometheus resources that were created

root@k8s-master01:~/k8s-prom# kubectl get all -n prom

NAME READY STATUS RESTARTS AGE

pod/prometheus-server-8f7bc69b5-nlcmg 0/1 ContainerCreating 0 9s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/prometheus NodePort 10.108.105.227 <none> 9090:30090/TCP 9s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/prometheus-server 0/1 1 0 9s

NAME DESIRED CURRENT READY AGE

replicaset.apps/prometheus-server-8f7bc69b5 1 1 0 9s

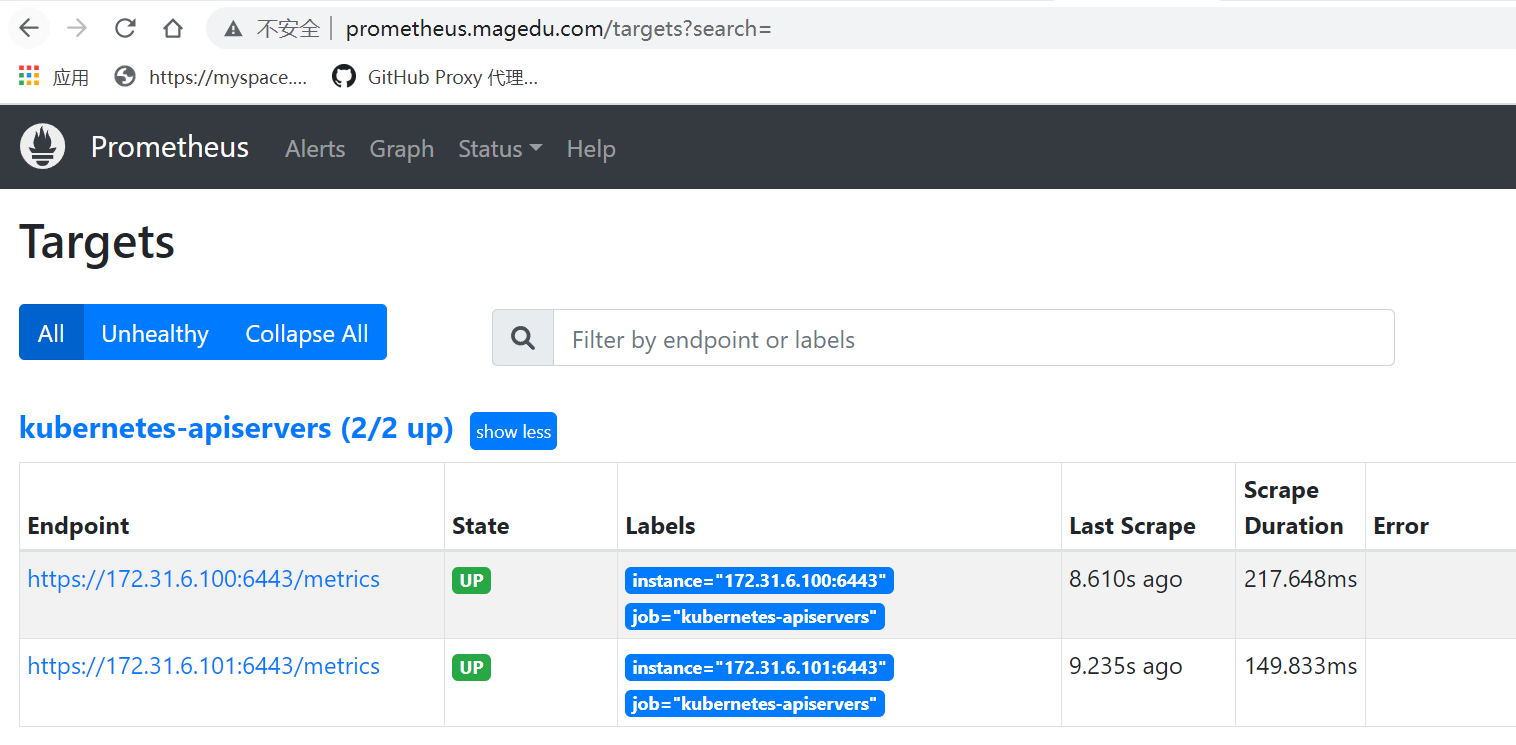

# Access the Prometheus service (NodePort 30090) and verify that it works

-

Deploy Ingress (ingress-nginx)

# Download the ingress-nginx manifest

root@k8s-master01:/# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.6.4/deploy/static/provider/cloud/deploy.yaml

--2023-03-15 19:16:52-- https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.6.4/deploy/static/provider/cloud/deploy.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.111.133, 185.199.109.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... Read error (Connection reset by peer) in headers.

Retrying.

--2023-03-15 19:17:15-- (try: 2) https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.6.4/deploy/static/provider/cloud/deploy.yaml

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 15574 (15K) [text/plain]

Saving to: ‘deploy.yaml’

deploy.yaml 100%[======================================================================>] 15.21K --.-KB/s in 0s

2023-03-15 19:17:15 (55.3 MB/s) - ‘deploy.yaml’ saved [15574/15574]

# Replace the image addresses in the manifest with reachable mirrors

root@k8s-master01:~# vi deploy.yaml

registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20220916-gd32f8c343 --> yang5188/kube-webhook-certgen:v20220916-gd32f8c343

registry.k8s.io/ingress-nginx/controller:v1.6.4 --> registry.aliyuncs.com/google_containers/nginx-ingress-controller:v1.6.4
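The same substitution can be scripted instead of done by hand in vi. This is a minimal sketch built on the two mappings listed above; the function name `rewrite_images` is hypothetical. It also assumes the manifest might pin an image by digest (`@sha256:...`) and drops that pin, since a mirror is not guaranteed to serve the identical manifest digest. Check the resulting file before applying it.

```python
import re
from typing import Dict

# Source image -> mirror image, taken from the mapping listed above.
IMAGE_MAP: Dict[str, str] = {
    "registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20220916-gd32f8c343":
        "yang5188/kube-webhook-certgen:v20220916-gd32f8c343",
    "registry.k8s.io/ingress-nginx/controller:v1.6.4":
        "registry.aliyuncs.com/google_containers/nginx-ingress-controller:v1.6.4",
}


def rewrite_images(manifest: str, mapping: Dict[str, str] = IMAGE_MAP) -> str:
    """Replace each source image (plus an optional @sha256 digest pin) with its mirror."""
    for src, dst in mapping.items():
        pattern = re.escape(src) + r"(@sha256:[0-9a-f]{64})?"
        # Use a function as the replacement so dst is inserted literally.
        manifest = re.sub(pattern, lambda _m, dst=dst: dst, manifest)
    return manifest
```

Usage: `open("deploy.yaml", "w").write(rewrite_images(open("deploy.yaml").read()))`, then `kubectl apply -f deploy.yaml` as below.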

# Create the ingress-nginx resources

root@k8s-master01:~# kubectl apply -f deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

serviceaccount/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

configmap/ingress-nginx-controller created

service/ingress-nginx-controller created

service/ingress-nginx-controller-admission created

deployment.apps/ingress-nginx-controller created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

ingressclass.networking.k8s.io/nginx created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

# Check the ingress-nginx resources that were created

root@k8s-master01:~# kubectl get all -n ingress-nginx

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-admission-create-c76qn 0/1 Completed 0 75s

pod/ingress-nginx-admission-patch-88zbp 0/1 Completed 0 75s

pod/ingress-nginx-controller-66457b7747-f5zxk 1/1 Running 0 75s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller LoadBalancer 10.103.166.101 <pending> 80:31410/TCP,443:30782/TCP 75s

service/ingress-nginx-controller-admission ClusterIP 10.110.202.249 <none> 443/TCP 75s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ingress-nginx-controller 1/1 1 1 75s

NAME DESIRED CURRENT READY AGE

replicaset.apps/ingress-nginx-controller-66457b7747 1 1 1 75s

NAME COMPLETIONS DURATION AGE

job.batch/ingress-nginx-admission-create 1/1 31s 75s

job.batch/ingress-nginx-admission-patch 1/1 49s 75s

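# Set externalIPs on the ingress-nginx Service so it can be reached from outside the cluster

root@k8s-master01:~# kubectl edit svc ingress-nginx-controller -n ingress-nginx

service/ingress-nginx-controller edited

# Fields changed under spec in the editor (externalIPs is a list of addresses):

externalTrafficPolicy: Cluster
externalIPs:
- 192.168.6.100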

root@k8s-master01:~# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.103.166.101 192.168.6.100 80:31410/TCP,443:30782/TCP 28m

ingress-nginx-controller-admission ClusterIP 10.110.202.249 <none> 443/TCP 28m

# Browse to the externalIP address: an nginx page is returned

-

Expose the services outside the cluster with Ingress; Jenkins must be exposed over HTTPS.

## Configure an Ingress for Prometheus

root@k8s-master01:~/k8s-prom/prometheus-ingress# cat ingress-prometheus.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: prometheus
  namespace: prom
  labels:
    app: prometheus
spec:
  ingressClassName: 'nginx'
  rules:
  - host: prom.magedu.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: prometheus
            port:
              number: 9090
  - host: prometheus.magedu.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: prometheus
            port:
              number: 9090

root@k8s-master01:~/k8s-prom/prometheus-ingress# ls

ingress-prometheus.yaml

root@k8s-master01:~/k8s-prom/prometheus-ingress# kubectl apply -f ingress-prometheus.yaml

ingress.networking.k8s.io/prometheus created

root@k8s-master01:~/k8s-prom/prometheus-ingress# kubectl get ingress -n prom

NAME CLASS HOSTS ADDRESS PORTS AGE

prometheus nginx prom.magedu.com,prometheus.magedu.com 80 10s

On your local machine, add the hosts entry "192.168.6.100 prom.magedu.com prometheus.magedu.com", then access Prometheus by domain name.
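The hosts entry works because ingress-nginx picks a backend from the request's Host header and path. The sketch below is a simplified model of that selection for the Prometheus Ingress above; `route` and `PROM_RULES` are hypothetical names. Only `pathType: Prefix` is modelled. Prefix matching is element by element on `/`, so `/foo` matches `/foo/bar` but not `/foobar`, and the longest matching prefix wins. The path is assumed to exclude any query string. A request whose host matches no rule returns `None` here; the real controller would serve its default backend instead.

```python
from typing import List, Optional, Tuple

Backend = Tuple[str, int]  # (service name, port number)

# Mirrors the two rules in ingress-prometheus.yaml.
PROM_RULES = [
    {"host": "prom.magedu.com", "paths": [{"path": "/", "backend": ("prometheus", 9090)}]},
    {"host": "prometheus.magedu.com", "paths": [{"path": "/", "backend": ("prometheus", 9090)}]},
]


def _segments(path: str) -> List[str]:
    return [seg for seg in path.split("/") if seg]


def prefix_match(prefix: str, path: str) -> bool:
    """pathType Prefix: the request's leading path elements must equal the prefix's."""
    want = _segments(prefix)
    return _segments(path)[:len(want)] == want


def route(host: str, path: str, rules: List[dict]) -> Optional[Backend]:
    host = host.split(":", 1)[0].lower()  # the Host header may carry a port
    best: Optional[Tuple[int, Backend]] = None
    for rule in rules:
        if rule["host"] != host:
            continue
        for p in rule["paths"]:
            if prefix_match(p["path"], path):
                length = len(_segments(p["path"]))
                # Prefer the longest matching prefix.
                if best is None or length > best[0]:
                    best = (length, p["backend"])
    return best[1] if best else None
```

For example, `route("prom.magedu.com", "/graph", PROM_RULES)` returns `("prometheus", 9090)`.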

-

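Configure the Jenkins Ingress (nginx) for HTTPS access

# Generate a self-signed certificate for Jenkins (SAN: magedu.com and *.magedu.com)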

root@k8s-master01:~/learning-k8s/jenkins/deploy# mkdir ssl

root@k8s-master01:~/learning-k8s/jenkins/deploy# cd ssl/

root@k8s-master01:~/learning-k8s/jenkins/deploy/ssl# ls

root@k8s-master01:~/learning-k8s/jenkins/deploy/ssl# openssl req \

> -newkey rsa:2048 \

> -x509 \

> -nodes \

> -keyout jenkins.key \

> -new \

> -out jenkins.crt \

> -subj /CN=magedu.com \

> -reqexts SAN \

> -extensions SAN \

> -config <(cat /etc/ssl/openssl.cnf \

> <(printf '[SAN]\nsubjectAltName=DNS:magedu.com,DNS:*.magedu.com')) \

> -sha256 \

> -days 3650

Generating a RSA private key

..............................................................................................................+++++

............................................................+++++

writing new private key to 'jenkins.key'

-----

root@k8s-master01:~/learning-k8s/jenkins/deploy/ssl# ls

jenkins.crt jenkins.key
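The certificate's SAN list (magedu.com and *.magedu.com) decides which Ingress hosts it can serve without a name-mismatch warning. The sketch below uses a hypothetical helper, `covered`, to model the usual rule: a `*` is allowed only as the whole left-most label and stands for exactly one label. Under that rule, cicd.magedu.com and jenkins.magedu.com are covered, but a deeper name such as a.b.magedu.com is not.

```python
from typing import Iterable

JENKINS_SANS = ["magedu.com", "*.magedu.com"]  # from the -config SAN line above


def san_matches(hostname: str, pattern: str) -> bool:
    """Match one DNS SAN entry, allowing a single left-most wildcard label."""
    host = hostname.lower().rstrip(".").split(".")
    pat = pattern.lower().rstrip(".").split(".")
    if len(host) != len(pat):
        return False  # a wildcard never spans more than one label
    if pat[0] == "*":
        return host[0] != "" and host[1:] == pat[1:]
    return host == pat


def covered(hostname: str, sans: Iterable[str] = JENKINS_SANS) -> bool:
    return any(san_matches(hostname, s) for s in sans)
```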

# Create a TLS Secret for Jenkins from the key and certificate

root@k8s-master01:~/learning-k8s/jenkins/deploy/ssl# kubectl create secret tls jenkins-ssl --key jenkins.key --cert jenkins.crt -n jenkins

secret/jenkins-ssl created

# Edit the Jenkins Ingress manifest and add the tls section

root@k8s-master01:~/learning-k8s/jenkins/deploy# vim 07-ingress-jenkins.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: jenkins
  namespace: jenkins
spec:
  ingressClassName: nginx
  rules:
  - host: cicd.magedu.com
    http:
      paths:
      - backend:
          service:
            name: jenkins
            port:
              number: 8080
        path: /
        pathType: Prefix
  - host: jenkins.magedu.com
    http:
      paths:
      - backend:
          service:
            name: jenkins
            port:
              number: 8080
        path: /
        pathType: Prefix
  tls:
  - hosts:
    - cicd.magedu.com
    - jenkins.magedu.com
    secretName: jenkins-ssl

# Apply the Ingress

root@k8s-master01:~/learning-k8s/jenkins/deploy# kubectl apply -f 07-ingress-jenkins.yaml -n jenkins

ingress.networking.k8s.io/jenkins created

# Check the Ingress that was created

root@k8s-master01:~/learning-k8s/jenkins/deploy# kubectl get ingress -n jenkins

NAME CLASS HOSTS ADDRESS PORTS AGE

jenkins nginx cicd.magedu.com,jenkins.magedu.com 192.168.6.100 80, 443 7m17s

-

Use Helm to deploy a MySQL cluster with primary/replica replication, deploy WordPress, and expose it outside the cluster through Ingress.

# Add the Helm repo

root@k8s-master01:/# helm repo add bitnami https://charts.bitnami.com/bitnami

"bitnami" has been added to your repositories

root@k8s-master01:/# helm repo list

NAME URL

mysql-operator https://mysql.github.io/mysql-operator/

bitnami https://charts.bitnami.com/bitnami

# Install a MySQL cluster with one primary and one secondary node

root@k8s-master01:/# helm install mysql \

> --set auth.rootPassword=MageEdu \

> --set global.storageClass=nfs-csi \

> --set architecture=replication \

> --set auth.database=wpdb \

> --set auth.username=wpuser \

> --set auth.password='magedu.com' \

> --set secondary.replicaCount=1 \

> --set auth.replicationPassword='replpass' \

> bitnami/mysql \

> -n blog

NAME: mysql

LAST DEPLOYED: Wed Mar 15 20:39:47 2023

NAMESPACE: blog

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: mysql

CHART VERSION: 9.6.0

APP VERSION: 8.0.32

** Please be patient while the chart is being deployed **

Tip:

Watch the deployment status using the command: kubectl get pods -w --namespace blog

Services:

echo Primary: mysql-primary.blog.svc.cluster.local:3306

echo Secondary: mysql-secondary.blog.svc.cluster.local:3306

Execute the following to get the administrator credentials:

echo Username: root

MYSQL_ROOT_PASSWORD=$(kubectl get secret --namespace blog mysql -o jsonpath="{.data.mysql-root-password}" | base64 -d)

To connect to your database:

1. Run a pod that you can use as a client:

kubectl run mysql-client --rm --tty -i --restart='Never' --image docker.io/bitnami/mysql:8.0.32-debian-11-r14 --namespace blog --env MYSQL_ROOT_PASSWORD=$MYSQL_ROOT_PASSWORD --command -- bash

2. To connect to primary service (read/write):

mysql -h mysql-primary.blog.svc.cluster.local -uroot -p"$MYSQL_ROOT_PASSWORD"

3. To connect to secondary service (read-only):

mysql -h mysql-secondary.blog.svc.cluster.local -uroot -p"$MYSQL_ROOT_PASSWORD"
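Each `--set` flag in the install command is a dotted path into the chart's values. For example, `auth.rootPassword=MageEdu` ends up as `auth: {rootPassword: MageEdu}`. The sketch below is a deliberately simplified model of that mapping (the function `set_values` is hypothetical). It handles only plain dotted keys and infers integers and `true`/`false`. Helm's real parser also supports list indices, escaped dots, and comma-separated pairs, and offers `--set-string` when a value must stay a string.

```python
from typing import Any, Dict, Iterable


def _coerce(raw: str) -> Any:
    # Simplified type inference: booleans and non-negative integers; all else stays a string.
    if raw in ("true", "false"):
        return raw == "true"
    if raw.isdigit():
        return int(raw)
    return raw


def set_values(assignments: Iterable[str]) -> Dict[str, Any]:
    """Turn 'a.b.c=value' strings into a nested dict, like a values.yaml fragment."""
    values: Dict[str, Any] = {}
    for item in assignments:
        key, _, raw = item.partition("=")
        *parents, leaf = key.split(".")
        node = values
        for part in parents:
            node = node.setdefault(part, {})
        node[leaf] = _coerce(raw)
    return values
```

With the flags used above (after the shell strips the quotes), `set_values(...)["secondary"]["replicaCount"]` is the integer `1` and `["auth"]["database"]` is `"wpdb"`. The same settings could equally be kept in a values file passed with `-f`.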

# Check the created resources

root@k8s-master01:/# kubectl get pod,svc,pvc -n blog

NAME READY STATUS RESTARTS AGE

pod/mysql-primary-0 0/1 Running 0 111s

pod/mysql-secondary-0 0/1 Running 0 111s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/mysql-primary ClusterIP 10.109.232.7 <none> 3306/TCP 112s

service/mysql-primary-headless ClusterIP None <none> 3306/TCP 112s

service/mysql-secondary ClusterIP 10.103.40.29 <none> 3306/TCP 112s

service/mysql-secondary-headless ClusterIP None <none> 3306/TCP 112s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/data-mysql-primary-0 Bound pvc-20dc41df-4ab3-439b-ab5b-1f89119b4885 8Gi RWO nfs-csi 111s

persistentvolumeclaim/data-mysql-secondary-0 Bound pvc-a827f3bd-d87f-4734-abda-e07c9fcec2de 8Gi RWO nfs-csi 111s

persistentvolumeclaim/mysql-data Bound pvc-fb7bde59-bc60-4b71-a299-9f43267d8efe 10Gi RWO nfs-csi 16d

persistentvolumeclaim/wordpress-app-data Bound pvc-738de60f-0abe-44c1-beb1-68ec55cdca98 10Gi RWX nfs-csi 16d

# Retrieve the MySQL root password

root@k8s-master01:/# MYSQL_ROOT_PASSWORD=$(kubectl get secret --namespace blog mysql -o jsonpath="{.data.mysql-root-password}" | base64 -d)

root@k8s-master01:/# echo $MYSQL_ROOT_PASSWORD

MageEdu
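Values under a Secret's `.data` are only base64-encoded, not encrypted, so the `jsonpath ... | base64 -d` pipeline above simply decodes them. The sketch below shows the same decoding in Python, assuming the input is the JSON printed by `kubectl get secret mysql -n blog -o json`. The function names are hypothetical.

```python
import base64
import json
from typing import Mapping


def secret_value(secret: Mapping, key: str) -> str:
    """Decode one entry of a Secret's .data map (values are base64 strings)."""
    return base64.b64decode(secret["data"][key]).decode("utf-8")


def secret_value_from_json(text: str, key: str) -> str:
    """Same as secret_value, but takes the raw `kubectl ... -o json` output."""
    return secret_value(json.loads(text), key)
```

For example: `kubectl get secret mysql -n blog -o json | python3 -c 'import sys; ...'`, calling `secret_value_from_json(sys.stdin.read(), "mysql-root-password")`. Anyone with `get` permission on Secrets in the namespace can read these values, which is why RBAC on Secrets matters.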

# Start a MySQL client pod

root@k8s-master01:/# kubectl run mysql-client --rm --tty -i --restart='Never' --image docker.io/bitnami/mysql:8.0.32-debian-11-r14 --namespace blog --env MYSQL_ROOT_PASSWORD=$MYSQL_ROOT_PASSWORD --command -- bash

If you don't see a command prompt, try pressing enter.

I have no name!@mysql-client:/$

# Connect to the primary and create a test database

I have no name!@mysql-client:/$ mysql -h mysql-primary.blog.svc.cluster.local -uroot -p"$MYSQL_ROOT_PASSWORD"

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 28

Server version: 8.0.32 Source distribution

Copyright (c) 2000, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

| wpdb |

+--------------------+

5 rows in set (0.01 sec)

mysql> create database test;

Query OK, 1 row affected (0.02 sec)

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

| test |

| wpdb |

+--------------------+

6 rows in set (0.00 sec)

mysql> exit

Bye

# Connect to the secondary and list the databases: the database created on the primary has been replicated

I have no name!@mysql-client:/$ mysql -h mysql-secondary.blog.svc.cluster.local -uroot -p"$MYSQL_ROOT_PASSWORD"

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 36

Server version: 8.0.32 Source distribution

Copyright (c) 2000, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

| test |

| wpdb |

+--------------------+

6 rows in set (0.00 sec)

-

Use Helm to deploy Harbor and verify that an image can be pushed to it.

# Add the Harbor repo

root@k8s-master01:~# helm repo add harbor https://helm.goharbor.io

"harbor" has been added to your repositories

root@k8s-master01:~# cd learning-k8s/helm/harbor/

root@k8s-master01:~/learning-k8s/helm/harbor# ls

harbor-values.yaml README.md

# Install Harbor with Helm

root@k8s-master01:~/learning-k8s/helm/harbor# helm install harbor -f harbor-values.yaml harbor/harbor -n harbor --create-namespace

NAME: harbor

LAST DEPLOYED: Thu Mar 16 10:17:09 2023

NAMESPACE: harbor

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Please wait for several minutes for Harbor deployment to complete.

Then you should be able to visit the Harbor portal at https://hub.magedu.com

For more details, please visit https://github.com/goharbor/harbor

# Check the Harbor resources

root@k8s-master01:~/learning-k8s/helm/harbor# kubectl get all -n harbor

NAME READY STATUS RESTARTS AGE

pod/harbor-chartmuseum-67c7d66d79-2ng2p 0/1 Error 0 63m

pod/harbor-chartmuseum-67c7d66d79-7p54h 1/1 Running 0 57m

pod/harbor-core-574c7dd8b5-892nk 1/1 Running 0 59m

pod/harbor-core-574c7dd8b5-pdzrd 0/1 Error 2 (60m ago) 63m

pod/harbor-database-0 1/1 Running 0 63m

pod/harbor-jobservice-79c549cdbd-747bb 1/1 Running 3 (58m ago) 60m

pod/harbor-jobservice-79c549cdbd-r2bdp 0/1 Error 0 63m

pod/harbor-notary-server-8bf48d76b-qtpsw 0/1 Error 2 63m

pod/harbor-notary-server-8bf48d76b-zqp6b 1/1 Running 0 60m

pod/harbor-notary-signer-67d7f4c7d7-7b8j8 0/1 ContainerStatusUnknown 3 (60m ago) 63m

pod/harbor-notary-signer-67d7f4c7d7-jwmtr 1/1 Running 0 60m

pod/harbor-portal-687956689b-gtdwq 1/1 Running 0 63m

pod/harbor-redis-0 1/1 Running 0 63m

pod/harbor-registry-6c585cfc59-6rwds 2/2 Running 0 57m

pod/harbor-registry-6c585cfc59-trrvt 0/2 ContainerStatusUnknown 1 63m

pod/harbor-trivy-0 1/1 Running 0 63m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/harbor-chartmuseum ClusterIP 10.103.63.222 <none> 80/TCP 63m

service/harbor-core ClusterIP 10.96.143.168 <none> 80/TCP 63m

service/harbor-database ClusterIP 10.107.85.181 <none> 5432/TCP 63m

service/harbor-jobservice ClusterIP 10.97.152.137 <none> 80/TCP 63m

service/harbor-notary-server ClusterIP 10.100.124.245 <none> 4443/TCP 63m

service/harbor-notary-signer ClusterIP 10.99.17.181 <none> 7899/TCP 63m

service/harbor-portal ClusterIP 10.99.18.209 <none> 80/TCP 63m

service/harbor-redis ClusterIP 10.98.174.55 <none> 6379/TCP 63m

service/harbor-registry ClusterIP 10.104.206.135 <none> 5000/TCP,8080/TCP 63m

service/harbor-trivy ClusterIP 10.104.191.98 <none> 8080/TCP 63m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/harbor-chartmuseum 1/1 1 1 63m

deployment.apps/harbor-core 1/1 1 1 63m

deployment.apps/harbor-jobservice 1/1 1 1 63m

deployment.apps/harbor-notary-server 1/1 1 1 63m

deployment.apps/harbor-notary-signer 1/1 1 1 63m

deployment.apps/harbor-portal 1/1 1 1 63m

deployment.apps/harbor-registry 1/1 1 1 63m

NAME DESIRED CURRENT READY AGE

replicaset.apps/harbor-chartmuseum-67c7d66d79 1 1 1 63m

replicaset.apps/harbor-core-574c7dd8b5 1 1 1 63m

replicaset.apps/harbor-jobservice-79c549cdbd 1 1 1 63m

replicaset.apps/harbor-notary-server-8bf48d76b 1 1 1 63m

replicaset.apps/harbor-notary-signer-67d7f4c7d7 1 1 1 63m

replicaset.apps/harbor-portal-687956689b 1 1 1 63m

replicaset.apps/harbor-registry-6c585cfc59 1 1 1 63m

NAME READY AGE

statefulset.apps/harbor-database 1/1 63m

statefulset.apps/harbor-redis 1/1 63m

statefulset.apps/harbor-trivy 1/1 63m

root@k8s-master01:~/learning-k8s/helm/harbor# kubectl get pvc -n harbor

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

data-harbor-redis-0 Bound pvc-07329d7f-4513-4a05-9f5f-d33399dc27d2 2Gi RWX nfs-csi 64m

data-harbor-trivy-0 Bound pvc-1465c8d4-5e98-493b-8c13-ce5bf615eb93 5Gi RWX nfs-csi 64m

database-data-harbor-database-0 Bound pvc-9d80a4a6-004c-44bc-8a1e-c03a8f04e55d 2Gi RWX nfs-csi 64m

harbor-chartmuseum Bound pvc-01c2fbde-0409-429f-8315-1470438e0699 5Gi RWX nfs-csi 64m

harbor-jobservice Bound pvc-99e1f1e2-28ba-4a12-b521-439b9f8a5ea9 1Gi RWO nfs-csi 64m

harbor-registry Bound pvc-0114fe72-eaec-4abd-b65c-34c7074b4908 5Gi RWX nfs-csi 64m

#添加hosts 域名

在笔记本上hosts里添加 192.168.6.100 hub.magedu.com域名记录信息

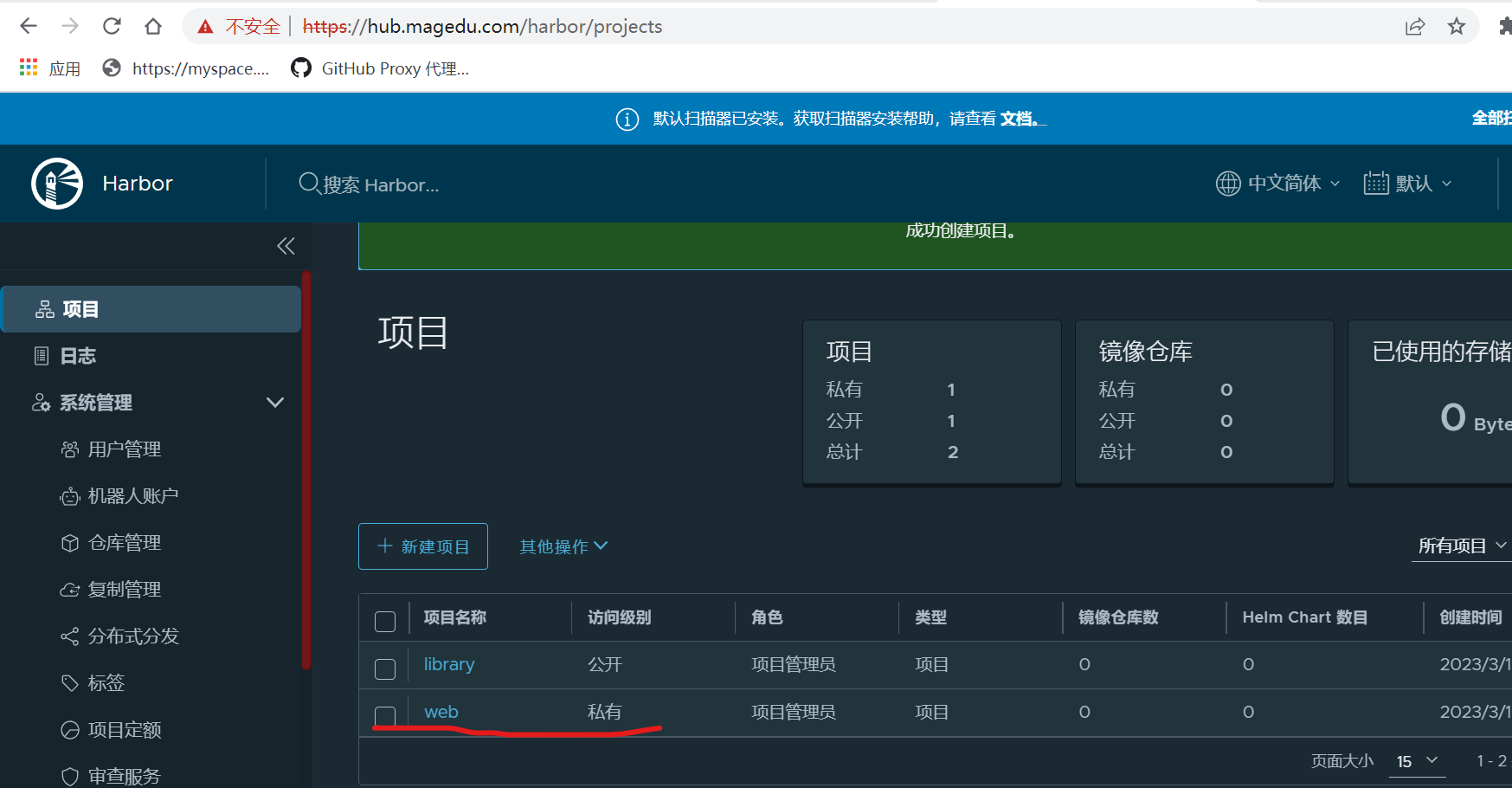

#使用域名访问harbor服务,创建web项目

#修改docker daemon.json文件,添加insecure-registries

root@k8s-master01:~/learning-k8s/helm/harbor# vim /etc/docker/daemon.json

{
  "registry-mirrors": [
    "https://registry.docker-cn.com",
    "https://9916w1ow.mirror.aliyuncs.com"
  ],
  "insecure-registries": ["harbor.magedu.com", "harbor.myserver.com", "172.31.7.105", "hub.magedu.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "200m"
  },
  "storage-driver": "overlay2"
}
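JSON parsers generally do not reject a key that is declared twice in the same object. Python's `json` keeps the last value, and dockerd may likewise silently ignore earlier occurrences of a key such as `registry-mirrors`. The sketch below flags such duplicates before you restart Docker. It relies on the standard `object_pairs_hook` parameter of `json.loads`; the helper name `duplicate_keys` is hypothetical.

```python
import json
from typing import List, Tuple


def duplicate_keys(text: str) -> List[str]:
    """Return keys that appear more than once within the same JSON object."""
    dupes: List[str] = []

    def check(pairs: List[Tuple[str, object]]) -> dict:
        seen = set()
        for key, _ in pairs:
            if key in seen and key not in dupes:
                dupes.append(key)
            seen.add(key)
        return dict(pairs)  # last occurrence wins, as with plain json.loads

    json.loads(text, object_pairs_hook=check)
    return dupes
```

Usage: `duplicate_keys(open("/etc/docker/daemon.json").read())` should return an empty list before running `systemctl restart docker`.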

# Restart the Docker service

root@k8s-master01:~/learning-k8s/helm/harbor# systemctl restart docker

root@k8s-master01:~/learning-k8s/helm/harbor# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)

Active: active (running) since Thu 2023-03-16 11:43:49 CST; 11s ago

TriggeredBy: ● docker.socket

Docs: https://docs.docker.com

Main PID: 228562 (dockerd)

Tasks: 19

Memory: 44.8M

CGroup: /system.slice/docker.service

└─228562 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Mar 16 11:43:49 k8s-master01 dockerd[228562]: time="2023-03-16T11:43:49.169006118+08:00" level=info msg="API listen on /run/docker.sock"

Mar 16 11:43:49 k8s-master01 dockerd[228562]: time="2023-03-16T11:43:49.354907924+08:00" level=warning msg="Published ports are discarded when usin>

Mar 16 11:43:49 k8s-master01 dockerd[228562]: time="2023-03-16T11:43:49.455351381+08:00" level=warning msg="Published ports are discarded when usin>

Mar 16 11:43:50 k8s-master01 dockerd[228562]: time="2023-03-16T11:43:50.337914291+08:00" level=warning msg="Your kernel does not support swap limit>

Mar 16 11:43:50 k8s-master01 dockerd[228562]: time="2023-03-16T11:43:50.376960960+08:00" level=warning msg="Your kernel does not support swap limit>

Mar 16 11:43:51 k8s-master01 dockerd[228562]: time="2023-03-16T11:43:51.327302830+08:00" level=info msg="ignoring event" container=2ee1d4f2256ac472>

Mar 16 11:43:51 k8s-master01 dockerd[228562]: time="2023-03-16T11:43:51.462976462+08:00" level=warning msg="Your kernel does not support swap limit>

Mar 16 11:43:52 k8s-master01 dockerd[228562]: time="2023-03-16T11:43:52.080480043+08:00" level=warning msg="Your kernel does not support swap limit>

Mar 16 11:43:54 k8s-master01 dockerd[228562]: time="2023-03-16T11:43:54.692449234+08:00" level=info msg="ignoring event" container=55339b364b934674>

Mar 16 11:43:58 k8s-master01 dockerd[228562]: time="2023-03-16T11:43:58.758520001+08:00" level=warning msg="Your kernel does not support swap limit>

# Log in to Harbor so images can be pulled from and pushed to it

root@k8s-master01:~/learning-k8s/helm/harbor# docker login https://hub.magedu.com -u admin -p magedu.com

WARNING! Using --password via the CLI is insecure. Use --password-stdin.

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

# Pull the nginx:latest image

root@k8s-master01:~/learning-k8s/helm/harbor# docker pull nginx:latest

latest: Pulling from library/nginx

a2abf6c4d29d: Pull complete

a9edb18cadd1: Pull complete

589b7251471a: Pull complete

186b1aaa4aa6: Pull complete

b4df32aa5a72: Pull complete

a0bcbecc962e: Pull complete

Digest: sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31

Status: Downloaded newer image for nginx:latest

docker.io/library/nginx:latest

# Re-tag the nginx image with the Harbor registry and project

root@k8s-master01:~/learning-k8s/helm/harbor# docker tag nginx:latest hub.magedu.com/web/nginx:latest

# Push the re-tagged nginx image to Harbor

root@k8s-master01:~/learning-k8s/helm/harbor# docker push hub.magedu.com/web/nginx:latest

The push refers to repository [hub.magedu.com/web/nginx]

d874fd2bc83b: Pushed

32ce5f6a5106: Pushed

f1db227348d0: Pushed

b8d6e692a25e: Pushed

e379e8aedd4d: Pushed

2edcec3590a4: Pushed

latest: digest: sha256:ee89b00528ff4f02f2405e4ee221743ebc3f8e8dd0bfd5c4c20a2fa2aaa7ede3 size: 1570
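`docker push` decides where to send an image from the reference itself. If the first path component looks like a host (it contains `.` or `:`, or is `localhost`), that component is the registry; otherwise Docker Hub is assumed. That is why nginx:latest had to be re-tagged as hub.magedu.com/web/nginx:latest. With Harbor, the next component (`web`) is the project, which must already exist. The sketch below models that split; `split_reference` is a hypothetical name, and digest references (`@sha256:`) are not handled.

```python
from typing import Tuple


def split_reference(ref: str) -> Tuple[str, str, str]:
    """Split 'registry/repo:tag' into (registry, repository, tag)."""
    name, tag = ref, "latest"
    # A ':' after the last '/' introduces a tag; an earlier one is a registry port.
    colon, last_slash = ref.rfind(":"), ref.rfind("/")
    if colon > last_slash:
        name, tag = ref[:colon], ref[colon + 1:]
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repo = first, rest
    else:
        registry, repo = "docker.io", name
    if registry == "docker.io" and "/" not in repo:
        repo = "library/" + repo  # official Docker Hub images live under library/
    return registry, repo, tag
```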

# In the Harbor web UI, open the web project and check the pushed nginx image

-

Use Helm to deploy a Redis Cluster to Kubernetes.

# List the Helm repos

root@k8s-master01:~/learning-k8s/helm/harbor# helm repo list

NAME URL

mysql-operator https://mysql.github.io/mysql-operator/

bitnami https://charts.bitnami.com/bitnami

harbor https://helm.goharbor.io

root@k8s-master01:~/learning-k8s/helm/harbor# helm search repo redis

NAME CHART VERSION APP VERSION DESCRIPTION

bitnami/redis 17.8.5 7.0.9 Redis(R) is an open source, advanced key-value ...

bitnami/redis-cluster 8.3.11 7.0.9 Redis(R) is an open source, scalable, distribut...

# Install redis-cluster

root@k8s-master01:~# helm install redis-cluster -f redis-cluster-values.yaml bitnami/redis-cluster -n redis --create-namespace

NAME: redis-cluster

LAST DEPLOYED: Thu Mar 16 11:56:29 2023

NAMESPACE: redis

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: redis-cluster

CHART VERSION: 8.3.11

APP VERSION: 7.0.9

** Please be patient while the chart is being deployed **

To get your password run:

export REDIS_PASSWORD=$(kubectl get secret --namespace "redis" redis-cluster -o jsonpath="{.data.redis-password}" | base64 -d)

You have deployed a Redis® Cluster accessible only from within you Kubernetes Cluster.

INFO: The Job to create the cluster will be created.

To connect to your Redis® cluster:

1. Run a Redis® pod that you can use as a client:

kubectl run --namespace redis redis-cluster-client --rm --tty -i --restart='Never' \

--env REDIS_PASSWORD=$REDIS_PASSWORD \

--image docker.io/bitnami/redis-cluster:7.0.8-debian-11-r14 -- bash

2. Connect using the Redis® CLI:

redis-cli -c -h redis-cluster -a $REDIS_PASSWORD

# Check the Redis resources that were created

root@k8s-master01:~# kubectl get pod,svc,pvc -n redis

NAME READY STATUS RESTARTS AGE

pod/redis-cluster-0 1/1 Running 2 (99s ago) 111s

pod/redis-cluster-1 1/1 Running 1 (6m35s ago) 8m3s

pod/redis-cluster-2 1/1 Running 0 8m3s

pod/redis-cluster-3 1/1 Running 1 (101s ago) 107s

pod/redis-cluster-4 1/1 Running 1 (6m59s ago) 8m3s

pod/redis-cluster-5 1/1 Running 1 (6m30s ago) 8m3s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/redis-cluster ClusterIP 10.106.6.254 <none> 6379/TCP 8m3s

service/redis-cluster-headless ClusterIP None <none> 6379/TCP,16379/TCP 8m3s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/redis-data-redis-cluster-0 Bound pvc-eb8f84d1-92b7-4da5-93b9-962b29a72771 8Gi RWO nfs-csi 8m3s

persistentvolumeclaim/redis-data-redis-cluster-1 Bound pvc-8cc820e1-73ff-45c9-9fbf-0b4ba9c8dbe2 8Gi RWO nfs-csi 8m3s

persistentvolumeclaim/redis-data-redis-cluster-2 Bound pvc-28548ea5-99a8-4a0c-a2da-0b19d5990247 8Gi RWO nfs-csi 8m3s

persistentvolumeclaim/redis-data-redis-cluster-3 Bound pvc-11c7064b-7da4-4354-b3ac-a216072931d3 8Gi RWO nfs-csi 8m3s

persistentvolumeclaim/redis-data-redis-cluster-4 Bound pvc-fc633920-50bf-49b0-a6e6-938a97b67440 8Gi RWO nfs-csi 8m3s

persistentvolumeclaim/redis-data-redis-cluster-5 Bound pvc-1f845b12-fbec-41dd-9e7e-31cbfcbf72fc 8Gi RWO nfs-csi 8m3s

# Log in and verify

root@k8s-master01:~# export REDIS_PASSWORD=$(kubectl get secret --namespace "redis" redis-cluster -o jsonpath="{.data.redis-password}" | base64 -d)

root@k8s-master01:~# kubectl run --namespace redis redis-cluster-client --rm --tty -i --restart='Never' \

> --env REDIS_PASSWORD=$REDIS_PASSWORD \

> --image docker.io/bitnami/redis-cluster:7.0.8-debian-11-r14 -- bash

If you don't see a command prompt, try pressing enter.

I have no name!@redis-cluster-client:/$ redis-cli -c -h redis-cluster -a $REDIS_PASSWORD

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

redis-cluster:6379> set key "Hello World"

-> Redirected to slot [12539] located at 10.244.3.240:6379

OK

10.244.3.240:6379> get key

"Hello World"

10.244.3.240:6379>
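The "Redirected to slot [12539]" line comes from Redis Cluster's key distribution. Every key maps to one of 16384 hash slots, computed as CRC16 (XMODEM variant) of the key modulo 16384. `redis-cli -c` follows the redirect to whichever master owns that slot. If the key contains a non-empty `{...}` hash tag, only the tag is hashed, which lets related keys share a slot. Below is a small standalone sketch of that computation (function names are illustrative). For the key `key` it reproduces the slot seen above.

```python
def crc16(data: bytes) -> int:
    """CRC-16/XMODEM: polynomial 0x1021, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Hash slot for a key, honouring a non-empty {hash tag}."""
    raw = key.encode("utf-8")
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end > start + 1:  # empty "{}" means: hash the whole key
            raw = raw[start + 1:end]
    return crc16(raw) % 16384
```

`key_slot("key")` evaluates to 12539, matching the redirect in the session above. `key_slot("{user1000}.following")` and `key_slot("{user1000}.followers")` land in the same slot, so multi-key commands on them are allowed in cluster mode.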
