K8s Beginner's Detailed Tutorial (Part 2)


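Note: this article continues Part 1. If you have not read it, see K8s Beginner's Detailed Tutorial (Part 1).

Preface

This article picks up where the previous one left off. It covers some small problems encountered when deploying K8s on VirtualBox, plus the deployment of Calico and the dashboard.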

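1. Calico deployment

# Use this command to download the Calico YAML manifest

curl https://docs.projectcalico.org/v3.20/manifests/calico.yaml -O

After the download finishes, the file needs to be modified.

During the init stage in K8s Beginner's Detailed Tutorial (Part 1) we initialized the master node, and stressed that the address passed as --pod-network-cidr=193.31.0.0/16 must not overlap with your own IP network range. The Calico manifest refers to 192.168.0.0/16 instead, so calico.yaml has to be changed to match.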

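vi calico.yaml

# Once calico.yaml is open, run the following in vi

:%s/192.168.0.0/193.31.0.0/gc

Note that the matched line must be uncommented, together with the "- name" line directly above it, and the indentation must stay aligned with the neighbouring entries. When the edit is done, run:

kubectl apply -f calico.yaml

# This command starts deploying the Calico plugin. You can check on it afterwards; if the pods are not Running yet, look again after a while.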
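The manual vi edit described above can also be scripted before running kubectl apply. The following is a minimal sketch, assuming the manifest contains the commented-out pair "# - name: CALICO_IPV4POOL_CIDR" and "#   value: "192.168.0.0/16"" as in the v3.20 file, and that GNU sed is available (as on CentOS). The function name set_calico_cidr is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: uncomment the CALICO_IPV4POOL_CIDR entry in a Calico
# manifest and set it to the given pod CIDR. Assumes GNU sed (for -i).
set_calico_cidr() {
  file="$1"   # path to calico.yaml
  cidr="$2"   # pod CIDR passed to kubeadm init, e.g. 193.31.0.0/16
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e "s|#   value: \"192.168.0.0/16\"|  value: \"${cidr}\"|" \
    "$file"
}

# Usage (not executed here):
#   set_calico_cidr calico.yaml 193.31.0.0/16
```

Removing only the "# " prefix keeps both lines at the same indentation as the other env entries, which is the alignment the note above asks for. It is still worth opening the file afterwards to confirm the result.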

[root@k8s-master fate01]# kubectl get pod -A
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
default       mynginx                                    1/1     Running   0          85m
kube-system   calico-kube-controllers-577f77cb5c-qnjb5   1/1     Running   0          107m
kube-system   calico-node-4qgkl                          1/1     Running   0          107m
kube-system   calico-node-74hf7                          1/1     Running   0          107m
kube-system   calico-node-h5hk4                          1/1     Running   0          107m
kube-system   coredns-5897cd56c4-5zbcn                   1/1     Running   0          3h4m
kube-system   coredns-5897cd56c4-qzfgk                   1/1     Running   0          3h4m
kube-system   etcd-k8s-master                            1/1     Running   0          3h4m
kube-system   kube-apiserver-k8s-master                  1/1     Running   0          3h4m
kube-system   kube-controller-manager-k8s-master         1/1     Running   0          3h4m
kube-system   kube-proxy-4ffq9                           1/1     Running   0          132m
kube-system   kube-proxy-bc7lq                           1/1     Running   0          112m
kube-system   kube-proxy-s4shd                           1/1     Running   0          3h4m
kube-system   kube-scheduler-k8s-master                  1/1     Running   0          3h4m

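When the output looks like the listing above, with every calico pod in the Running state, Calico has been deployed successfully.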
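Scanning the listing by eye gets tedious on a larger cluster. As a small sketch, the filter below reads the output of kubectl get pod -A on standard input and prints only the pods whose STATUS column is not Running. The function name not_running is hypothetical, and the column position assumes the default output format shown above.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pod -A` output on stdin and print
# "namespace/name" for every pod whose STATUS (4th column) is not Running.
not_running() {
  awk 'NR > 1 && $4 != "Running" { print $1 "/" $2 }'
}

# Usage (not executed here):
#   kubectl get pod -A | not_running
```

An empty result means every pod is Running. Pods of finished jobs show up as Completed and would be listed too, so treat the output as a hint rather than a verdict. kubectl -n kube-system rollout status daemonset/calico-node is another way to wait for the Calico nodes specifically.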

2. Dashboard deployment

You can apply the YAML manifest from the official repository:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

Alternatively, here is my own modified YAML file:

vi dashboard.yaml

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 32500
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque

---

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      nodeName: k8s-master
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.0-beta6
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
            # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      nodeName: k8s-master
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.1
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

Setting the access port for the official manifest (if you copied my YAML file you can skip this step, since I have already made the change):

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

Change type: ClusterIP to type: NodePort.

If you are using my file, apply it:

kubectl apply -f dashboard.yaml

Once the deployment has succeeded, check the port mapping:

kubectl get svc -A |grep kubernetes-dashboard

[root@k8s-master fate01]# kubectl get svc -A |grep kubernetes-dashboard
kubernetes-dashboard   dashboard-metrics-scraper   ClusterIP   10.x6.xx.73   <none>   8000/TCP        107m
kubernetes-dashboard   kubernetes-dashboard        NodePort    10.x6.xx.46   <none>   443:32500/TCP   107m

As shown above, my port mapping is 32500.
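The number to use in the browser is the second one in the PORT(S) column, that is, the NodePort. As a minimal sketch, the helpers below extract it from a value such as 443:32500/TCP and build the dashboard URL. The names node_port and dashboard_url are hypothetical, and the IP in the usage comment is only a placeholder.

```shell
#!/bin/sh
# Hypothetical helper: pull the NodePort out of a PORT(S) value such as
# "443:32500/TCP" (service port : node port / protocol).
node_port() {
  printf '%s\n' "$1" | sed -E 's|^[0-9]+:([0-9]+)/.*$|\1|'
}

# Hypothetical helper: build the dashboard URL from a node IP and a PORT(S) value.
dashboard_url() {
  printf 'https://%s:%s\n' "$1" "$(node_port "$2")"
}

# Usage (not executed here):
#   dashboard_url 192.168.56.21 '443:32500/TCP'
```

If you would rather not parse the table at all, kubectl -n kubernetes-dashboard get svc kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}' prints the port directly.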

Access: https://<any cluster node IP>:<port>, for example https://139.198.165.238:32500 (remember to substitute your own IP address)

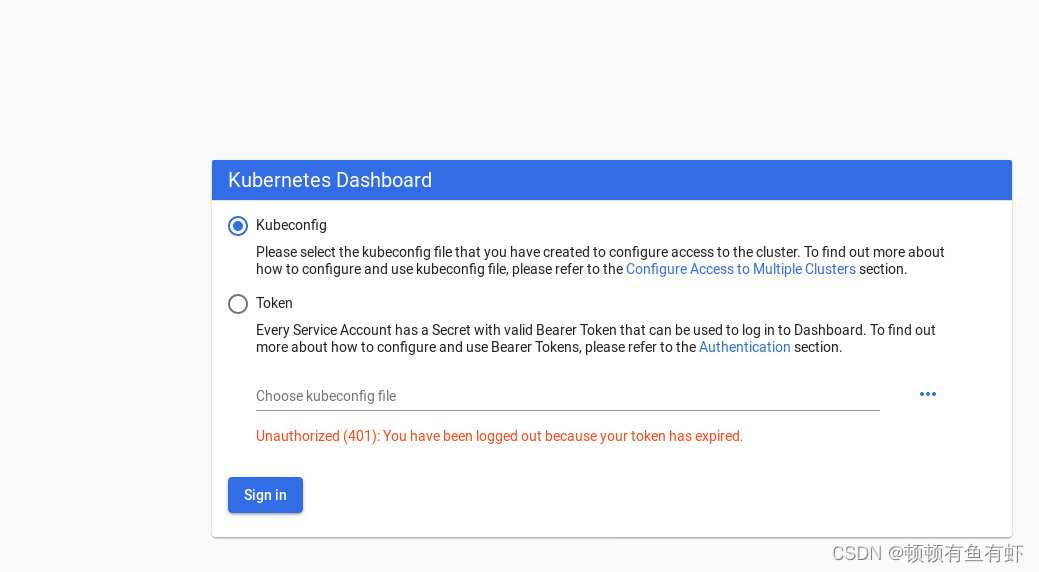

The login page appears as shown above.

As you can see, a token is required to sign in, so the next step is to create a user.

# Create an access account. Prepare a YAML file: vi dash.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard

kubectl apply -f dash.yaml

# Get the access token

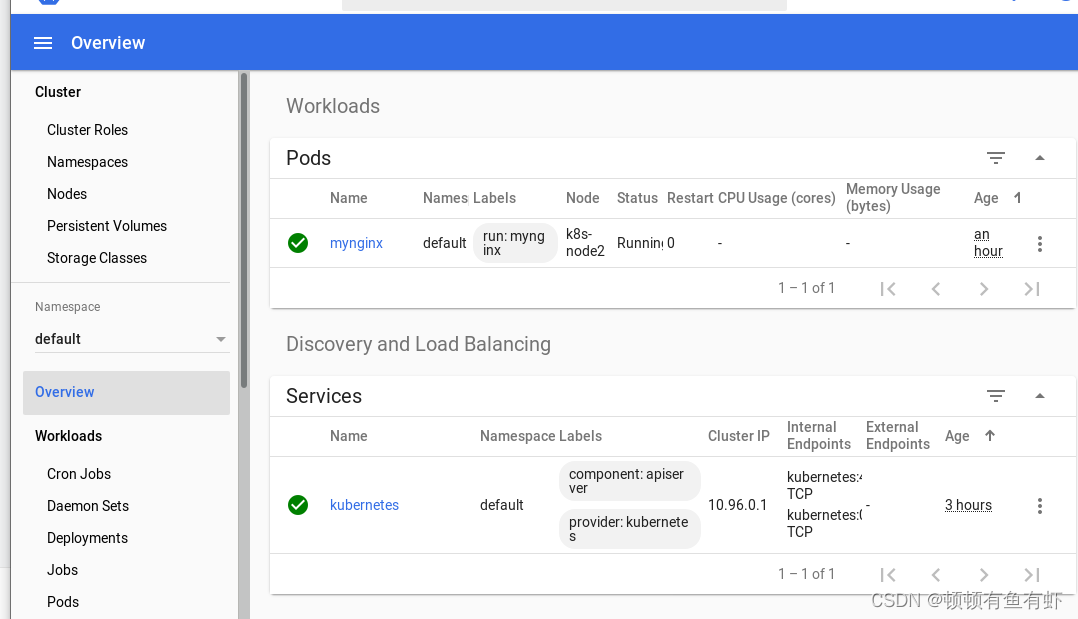

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

Sign in with this token and you can enter the dashboard.
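The command above relies on the ServiceAccount having an automatically created token Secret. Kubernetes 1.24 and later no longer create one, so on newer clusters the lookup returns an empty name. The sketch below tries the Secret first and falls back to kubectl create token, which was added in 1.24. The function name dashboard_token is hypothetical; the namespace and account name are the ones from dash.yaml.

```shell
#!/bin/sh
# Hypothetical helper: print a login token for the admin-user ServiceAccount.
dashboard_token() {
  ns=kubernetes-dashboard
  sa=admin-user
  # Name of the auto-created token Secret; empty on Kubernetes 1.24+.
  secret=$(kubectl -n "$ns" get "sa/$sa" -o jsonpath='{.secrets[0].name}')
  if [ -n "$secret" ]; then
    kubectl -n "$ns" get secret "$secret" \
      -o go-template='{{.data.token | base64decode}}'
  else
    # Request a short-lived token instead (kubectl 1.24 or newer).
    kubectl -n "$ns" create token "$sa"
  fi
}

# Usage (not executed here):
#   dashboard_token
```

Tokens issued by kubectl create token expire, so expect to request a fresh one when the dashboard session ends.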


3. Errors encountered

Because the deployment ran on VirtualBox virtual machines, I hit a few errors along the way. The first was an IP address problem. The command kubectl get nodes -owide shows detailed information for all nodes, and it revealed that all three nodes reported the same IP address. This causes serious trouble: kubectl exec and some other features stop working. For example:

[root@master yaml]# kubectl exec -it db-756759796-gfl8d bash
error: unable to upgrade connection: pod does not exist
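On VirtualBox this usually happens because kubelet picks up the NAT adapter address, which is commonly the same on every VM (often 10.0.2.15). As a rough sketch, the filter below reads the output of kubectl get nodes -o wide on standard input and prints any INTERNAL-IP that appears on more than one node. The function name duplicate_node_ips is hypothetical, and the column position assumes the default wide output.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get nodes -o wide` output on stdin and
# print every INTERNAL-IP (6th column) that is shared by more than one node.
duplicate_node_ips() {
  awk 'NR > 1 { count[$6]++ }
       END { for (ip in count) if (count[ip] > 1) print ip }'
}

# Usage (not executed here):
#   kubectl get nodes -o wide | duplicate_node_ips
```

No output means every node reports a distinct internal IP.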

Solution:

On CentOS, open:

vi /etc/sysconfig/kubelet

# Add the following line (replace the IP with the node's own IP; do this on all three machines)

KUBELET_EXTRA_ARGS=--node-ip=192.168.56.21

After adding it, save and quit with :wq, then restart kubelet by running:

systemctl stop kubelet.service && \
systemctl daemon-reload && \
systemctl start kubelet.service
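Since the same edit has to be repeated on three machines, it may be convenient to script it. The following is a minimal sketch, assuming GNU sed and a kubelet file that holds no other extra arguments you want to keep, because any existing KUBELET_EXTRA_ARGS line is overwritten. The function name set_node_ip is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: set KUBELET_EXTRA_ARGS=--node-ip=<ip> in the kubelet
# sysconfig file. Replaces an existing KUBELET_EXTRA_ARGS line, otherwise appends.
set_node_ip() {
  ip="$1"
  file="${2:-/etc/sysconfig/kubelet}"   # default path used on CentOS
  if grep -q '^KUBELET_EXTRA_ARGS=' "$file" 2>/dev/null; then
    sed -i "s|^KUBELET_EXTRA_ARGS=.*|KUBELET_EXTRA_ARGS=--node-ip=${ip}|" "$file"
  else
    echo "KUBELET_EXTRA_ARGS=--node-ip=${ip}" >> "$file"
  fi
}

# Usage on each node, with that node's own IP (not executed here):
#   set_node_ip 192.168.56.21
#   systemctl daemon-reload && systemctl restart kubelet.service
```

The optional second argument lets you try the function on a scratch file before touching the real configuration.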

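Now run this again:

kubectl get nodes -owide

You will see that the three machines now report different IP addresses.

Summary

The basic K8s features, the dashboard, and Calico are now all installed. From here you can operate K8s from the command line or through the dashboard UI. If you run into new problems during deployment, feel free to leave a comment.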
