A detailed tutorial on building a heterogeneous, highly available k8s cluster with Rancher on Raspberry Pi, IPC, and UP Board devices (step by step: NTP, DNS, static IP, and more)

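Rancher documentation: Rancher Docs: Overview.

Rancher version compatibility: Support matrix | SUSE.

How to generate a self-signed certificate: RKE+helm+Rancher(2.5.9)环境搭建 - 追光D影子

For a project, I built a k8s cluster whose hardware mixes two architectures, amd64 and arm64. In the contents below, step 2 (building the basic system) must be completed on every node: loadbalancer, masters, and workers. If all of your nodes are VMs rather than physical devices like mine, use step 2 to build one basic system and clone the other nodes from it, which makes the rest easy. Step 3 applies only to the loadbalancer. Once steps 2 and 3 are done, all that remains is installing the cluster. The cluster.yml I used is in section 5.4 near the end.

This method works for both physical machines and virtual machines (VMs).

Contents

- Overview

- Build the basic system (loadbalancer, masters, workers)

- Build the loadbalancer

- Install the k8s cluster

- Troubleshooting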

1.Overview

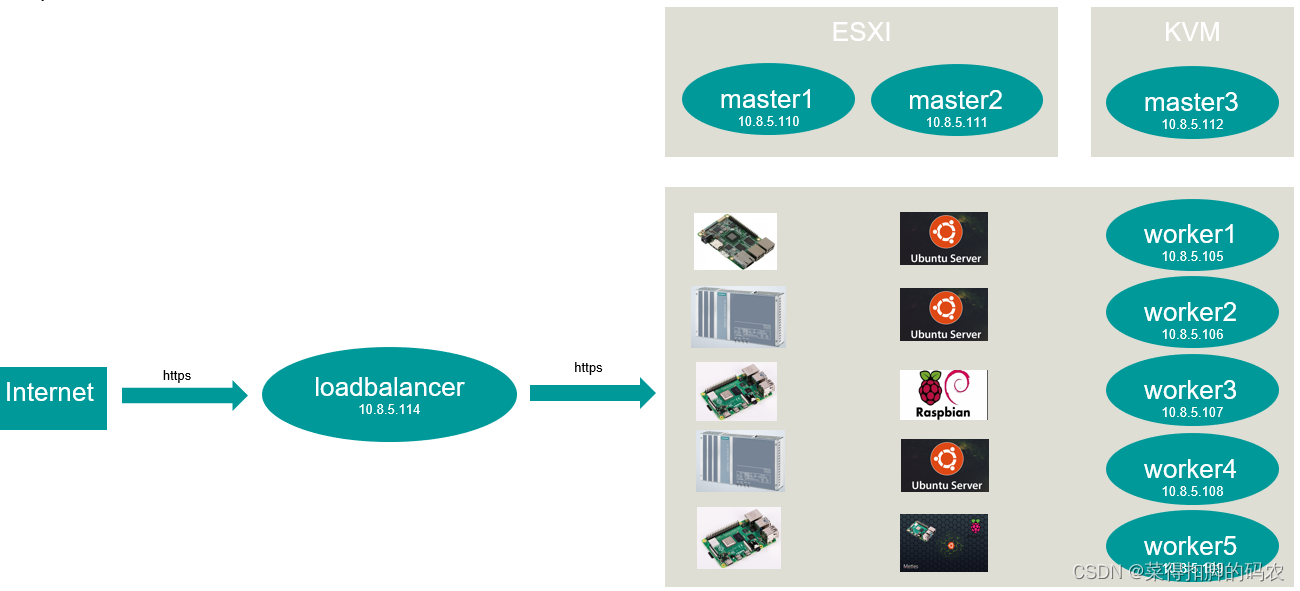

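The heterogeneous k8s cluster has 3 master nodes and 5 worker nodes. The 5 workers are an AMD64 UP Board, 2 ARM64 Raspberry Pis, and 2 AMD64 IPCs. The three masters are Ubuntu Server virtual machines. The operating systems in use are Ubuntu Server 20.04 and Raspbian. My three masters run as VMs on ESXi on server 1 and as KVM VMs on Ubuntu on server 2. I strongly recommend ESXi: it runs smoothly, installs and boots quickly, and VMware offers a free single-host edition that you can apply for.

2. Build a basic system

Perform the following steps on every node, including the masters, the workers, and the loadbalancer.

2.1 Install the required tools

Make sure SSH is installed. If it is not, install it first.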

sudo apt-get update

sudo apt-get install net-tools nmap members dnsutils ntp wget -y

Docker installation guide: Install Docker Engine on Ubuntu | Docker Documentation.

Configure the Docker JSON File logging driver, then restart Docker (sudo systemctl restart docker) so the change takes effect:

cat /etc/docker/daemon.json

{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  }
}

2.2 Update your certificates

How to generate this certificate: RKE+helm+Rancher(2.5.9)环境搭建 - 追光D影子. Download the certificate from https://code.xxxx.com/svc-china/kubeflow/tls

sudo cp ca.cert /usr/local/share/ca-certificates/ca.crt

sudo update-ca-certificates

2.3 Set the hostname

# set the hostname on every node

sudo hostnamectl set-hostname master1

...

sudo hostnamectl set-hostname worker5

cat /etc/hostname

2.4 Disable swap

You can check whether swap is disabled with the top command.
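For a check that can be scripted instead of read off top, the following is a minimal sketch. It assumes a Linux node where /proc/swaps lists active swap areas under a single header line. The function name swap_is_off is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when no swap area is active.
# /proc/swaps has one header line followed by one line per active swap area.
# The optional argument points the check at another file, which is handy for testing.
swap_is_off() {
    swaps_file="${1:-/proc/swaps}"
    # Count the non-empty lines after the header; zero means no active swap.
    active=$(tail -n +2 "$swaps_file" | grep -c .)
    [ "$active" -eq 0 ]
}
```

On a node, `swap_is_off && echo "swap is disabled"` prints the message only when swap is off.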

# disable permanently on Ubuntu 20.04: in /etc/fstab, comment out the swap entries or mark them noauto, then reboot

cat /etc/fstab

# <file system> <mount point> <type> <options> <dump> <pass>

/dev/disk/by-uuid/22f46255-84cf-4611-9afd-1b3a936d32d2 none swap sw,noauto 0 0

# / was on /dev/sda3 during curtin installation

/dev/disk/by-uuid/a52091cb-93d5-4de1-b8dd-ec88b912ee1c / ext4 defaults 0 1

#/swap.img none swap sw 0 0

reboot

# disable temporarily (until the next reboot)

sudo swapoff -a

2.5 Create a new user to manage the k8s cluster and add it to the required groups

# add the current user to the sudo group
sudo /usr/sbin/usermod -aG sudo $current_user

# create a new user (I named mine rancher)
sudo adduser $new_username

# add rancher to the sudo, docker, and $current_user groups
sudo adduser rancher sudo
sudo adduser rancher docker
sudo adduser rancher $current_user

# passwordless sudo: add these lines to /etc/sudoers (edit it with visudo)
cat /etc/sudoers

$username ALL=(ALL) NOPASSWD:ALL
%group_name ALL=(ALL) NOPASSWD:ALL

2.6 Configure the NTP service (time synchronization across all nodes)

# on the NTP server: add the following lines to /etc/ntp.conf
cat /etc/ntp.conf

restrict 10.8.5.114 nomodify notrap nopeer noquery   # IP of the NTP server
restrict 10.8.5.254 mask 255.255.255.0 nomodify notrap   # gateway and netmask of the NTP server's network
server 127.127.1.0   # use the local clock as the time source
fudge 127.127.1.0 stratum 10

# on each NTP client: add the following lines to /etc/ntp.conf
cat /etc/ntp.conf

restrict 10.8.5.109 nomodify notrap nopeer noquery   # IP of this NTP client
restrict 10.8.5.254 mask 255.255.255.0 nomodify notrap   # gateway and netmask of the NTP server's network
server 10.8.5.114   # the NTP server configured above
# no fudge line on clients: fudge only applies to reference clocks such as 127.127.1.0

# restart the NTP service
sudo systemctl restart ntp.service

2.7 Set a static IP

# on Ubuntu
cat /etc/netplan/00-installer-config.yaml

# This is the network config written by 'subiquity'
network:
  ethernets:
    ens160:
      dhcp4: false
      addresses: [10.8.5.110/24]
      gateway4: 10.8.5.254
      nameservers:
        addresses: [139.24.63.66,139.24.63.68]
  version: 2

sudo netplan apply

# on Raspbian
cat /etc/network/interfaces

# interfaces(5) file used by ifup(8) and ifdown(8)
# Include files from /etc/network/interfaces.d:
source /etc/network/interfaces.d/*

auto lo
iface lo inet loopback

auto eth0
iface eth0 inet static
    address 10.8.5.107
    netmask 255.255.255.0
    gateway 10.8.5.254
    dns-nameservers 10.8.5.114 139.24.63.66 139.24.63.68

sudo systemctl restart networking.service

2.8 Configure the DNS client

The DNS server runs on the loadbalancer (IP 10.8.5.114). All other nodes are set up as DNS clients.

# on Ubuntu
cat /etc/systemd/resolved.conf

# See resolved.conf(5) for details
[Resolve]
DNS=10.8.5.114

sudo systemctl restart systemd-resolved.service

# on Raspbian, just list the DNS IP in /etc/network/interfaces
cat /etc/network/interfaces

dns-nameservers 10.8.5.114 139.24.63.66 139.24.63.68

sudo systemctl restart networking.service

The loadbalancer does not need the DNS client configuration, but it does need the DNS server configuration.

3. Build the loadbalancer

3.1 Configure the DNS server

1) Install bind9: sudo apt-get install bind9

2) Declare the zones in /etc/bind/named.conf.local.

#/etc/bind/named.conf.local

cat /etc/bind/named.conf.local

//

// Do any local configuration here

//

// Consider adding the 1918 zones here, if they are not used in your

// organization

//include "/etc/bind/zones.rfc1918";

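zone "svc.lan" {
    type master;
    file "/etc/bind/db.svc.lan";
};

zone "5.8.10.in-addr.arpa" {
    type master;
    file "/etc/bind/db.5.8.10";
};

3) Check the syntax of the BIND9 configuration files with the named-checkconf command. (You can skip this check if you copy my files and only adapt them.)

4) Add the resource record (RR) file for forward name resolution (db.svc.lan).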

#/etc/bind/db.svc.lan

cat /etc/bind/db.svc.lan

;
; Forward zone data for svc.lan
;

$TTL 86400

@ IN SOA localhost. root.localhost. (

6 ; Serial

604800 ; Refresh

86400 ; Retry

2419200 ; Expire

86400 ) ; Negative Cache TTL

;

@ IN NS localhost.

master1.svc.lan. IN A 10.8.5.110

master2.svc.lan. IN A 10.8.5.111

master3.svc.lan. IN A 10.8.5.112

master4.svc.lan. IN A 10.8.5.113

worker1.svc.lan. IN A 10.8.5.105

worker2.svc.lan. IN A 10.8.5.106

worker3.svc.lan. IN A 10.8.5.107

worker4.svc.lan. IN A 10.8.5.108

worker5.svc.lan. IN A 10.8.5.109

rancher.svc.lan. IN A 10.8.5.114

* IN CNAME rancher

5) Add the RR file for reverse name resolution (db.5.8.10).

#/etc/bind/db.5.8.10

cat /etc/bind/db.5.8.10

;

; BIND reverse data file for broadcast zone

;

$TTL 604800

@ IN SOA localhost. root.localhost. (

6 ; Serial

604800 ; Refresh

86400 ; Retry

2419200 ; Expire

604800 ) ; Negative Cache TTL

;

@ IN NS localhost.

110 IN PTR master1.svc.lan.

111 IN PTR master2.svc.lan.

112 IN PTR master3.svc.lan.

113 IN PTR master4.svc.lan.

105 IN PTR worker1.svc.lan.

106 IN PTR worker2.svc.lan.

107 IN PTR worker3.svc.lan.

108 IN PTR worker4.svc.lan.

109 IN PTR worker5.svc.lan.

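114 IN PTR rancher.svc.lan.

6) Check that the owner and group of the RR files are both root (inspect with ll; fix with sudo chown root:root RR_FILE).

7) Before reloading the configuration, you can validate the zone files with the named-checkzone command.

8) Restart BIND9: sudo systemctl restart bind9.service

9) Check the status of BIND9: systemctl status bind9.service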
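The forward and reverse zone files above must list the same hosts, and keeping them in sync by hand is error-prone. As a minimal sketch, the reverse PTR lines can be derived from the forward A records. It assumes every address is in one /24 network, as in this tutorial, so the PTR owner name is just the last octet. The function name a_to_ptr is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: turn "name IN A a.b.c.d" records into "d IN PTR name" lines.
# Reads the files given as arguments, or standard input when none are given.
# Lines that are not plain "IN A" records (SOA, NS, CNAME, comments) are skipped.
a_to_ptr() {
    awk '$2 == "IN" && $3 == "A" {
        n = split($4, octet, ".")
        if (n == 4) printf "%s IN PTR %s\n", octet[4], $1
    }' "$@"
}
```

Running `a_to_ptr /etc/bind/db.svc.lan` prints lines you can compare against the PTR records in /etc/bind/db.5.8.10. The named-checkzone check from step 7 takes the zone name and the file, for example `named-checkzone svc.lan /etc/bind/db.svc.lan`.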

3.2 Install the required cluster tools

The tools are RKE, helm, and kubectl.

# RKE is the tool that bootstraps the k8s cluster
wget https://github.com/rancher/rke/releases/download/v1.3.8/rke_linux-amd64
chmod +x rke_linux-amd64
sudo mv rke_linux-amd64 /usr/local/bin/rke

# Helm is the tool used to deploy Rancher
wget https://get.helm.sh/helm-v3.9.0-linux-amd64.tar.gz
tar -zxvf helm-v3.9.0-linux-amd64.tar.gz
sudo mv linux-amd64/helm /usr/local/bin/helm

# kubectl is the tool for operating the k8s cluster
sudo apt-get update
sudo apt-get install -y apt-transport-https
curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -
cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF
sudo apt-get update
sudo apt-get install -y kubectl

3.3 Configure the nginx service (so that the Rancher UI can be reached from Windows)

#install nginx

sudo apt-get install nginx

cat /etc/nginx/nginx.conf

load_module /usr/lib/nginx/modules/ngx_stream_module.so;

worker_processes 4;

worker_rlimit_nofile 40000;

events {

worker_connections 8192;

}

stream {

upstream k8s_servers_http {

least_conn;

server worker1.svc.lan:80 max_fails=3 fail_timeout=5s;

server worker2.svc.lan:80 max_fails=3 fail_timeout=5s;

server worker3.svc.lan:80 max_fails=3 fail_timeout=5s;

server worker4.svc.lan:80 max_fails=3 fail_timeout=5s;

server worker5.svc.lan:80 max_fails=3 fail_timeout=5s;

}

server {

listen 80;

proxy_pass k8s_servers_http;

}

upstream k8s_servers_https {

least_conn;

server worker1.svc.lan:443 max_fails=3 fail_timeout=5s;

server worker2.svc.lan:443 max_fails=3 fail_timeout=5s;

server worker3.svc.lan:443 max_fails=3 fail_timeout=5s;

server worker4.svc.lan:443 max_fails=3 fail_timeout=5s;

server worker5.svc.lan:443 max_fails=3 fail_timeout=5s;

}

server {

listen 443;

proxy_pass k8s_servers_https;

}

}

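sudo systemctl restart nginx.service

3.4 Set up passwordless SSH login (RKE can only bootstrap the cluster automatically if the loadbalancer can log in to every node without a password)

On the loadbalancer, log in as your new user $new_user.

# su $new_user
su rancher

ssh-keygen -t rsa

ssh-copy-id rancher@master1.svc.lan

...

ssh-copy-id rancher@worker5.svc.lan

4. Install the k8s cluster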

4.1 Check that the preparation is complete

- Does DNS work? → nslookup master1.svc.lan (on all nodes)

- Does passwordless SSH login work? → ssh master1.svc.lan (on the loadbalancer)

- Is nginx.service running? → systemctl status nginx.service

- Has the hostname of every node been changed successfully? → cat /etc/hostname; cat /etc/hosts
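The DNS and SSH checks in this list can be run against all nodes in one pass. The following is a minimal sketch to run on the loadbalancer. It assumes the node names from this tutorial (master1 to master3 and worker1 to worker5 under svc.lan) and the rancher user. The function names node_names and preflight are made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: print the node names used in this tutorial, one per line.
# The optional argument overrides the DNS domain (default: svc.lan).
node_names() {
    domain="${1:-svc.lan}"
    for i in 1 2 3; do echo "master$i.$domain"; done
    for i in 1 2 3 4 5; do echo "worker$i.$domain"; done
}

# Hypothetical helper: report every node that fails the DNS or SSH check.
preflight() {
    for host in $(node_names "$@"); do
        # getent goes through the system resolver, so it exercises the DNS client setup.
        getent hosts "$host" >/dev/null || echo "DNS lookup failed: $host"
        # BatchMode makes ssh fail instead of prompting when key login is not set up.
        ssh -o BatchMode=yes -o ConnectTimeout=5 "rancher@$host" true \
            || echo "passwordless ssh failed: $host"
    done
}
```

Calling `preflight` prints nothing when every node passes both checks.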

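4.2 Install the k8s cluster with RKE

If any of your nodes run on arm64 devices, set the network plugin to flannel when you configure cluster.yml. My full cluster.yml is included in section 5.4, for reference only.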
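Since the flannel setting matters only when arm64 nodes are present, it can help to confirm each node's architecture before writing cluster.yml. The following is a minimal sketch that maps the output of `uname -m` to the amd64/arm64 names used in this tutorial. The function name normalize_arch is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: map a `uname -m` value to amd64 or arm64.
# Any other value prints "unknown" and returns a non-zero status.
normalize_arch() {
    case "$1" in
        x86_64|amd64) echo amd64 ;;
        aarch64|arm64) echo arm64 ;;
        *) echo unknown; return 1 ;;
    esac
}
```

From the loadbalancer, `normalize_arch "$(ssh rancher@worker3.svc.lan uname -m)"` reports the architecture of worker3. A 32-bit Raspbian install reports armv7l, which this sketch treats as unknown.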

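# create a cluster.yml template (answer the prompts)
rke config

# bring up the cluster described by cluster.yml
rke up

4.3 Install Rancher

The tls.crt, tls.key, and cacerts.pem files used below are generated with a tool; see the self-signed certificate article listed at the top of this post.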

# create a new namespace
kubectl create namespace cattle-system

# import the certificates
kubectl -n cattle-system create secret tls tls-rancher-ingress \
  --cert=tls.crt \
  --key=tls.key

kubectl -n cattle-system create secret generic tls-ca \
  --from-file=cacerts.pem

# add the helm repo
helm repo add rancher-stable https://releases.rancher.com/server-charts/stable

# install Rancher with helm
helm install rancher rancher-stable/rancher \
  --namespace cattle-system \
  --set hostname=rancher.svc.lan \
  --set ingress.tls.source=secret \
  --set privateCA=true

# check the pod status in the cattle-system namespace
kubectl get all -n cattle-system

5. Troubleshooting

Rancher's official troubleshooting guide: Rancher Docs: Troubleshooting.

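5.0 After Rancher installs successfully, the Rancher UI cannot be reached from Windows

1) You may not have configured the address of your DNS server on Windows.

2) Otherwise, your bind9 DNS server configuration may be wrong. As a workaround, edit C:\Windows\System32\drivers\etc\hosts by hand and then open https://rancher.svc.lan again. The most common DNS server problem is file ownership: the db.svc.lan, db.5.8.10, and named.conf.local files you created under /etc/bind/ must be owned by root:root.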

5.1 Can't add a node to the k8s cluster

Method 1: set the clock by hand, for example sudo date -s "2022-07-28 12:00:31"

Method 2: systemctl restart ntp.service
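Both methods correct the node's clock. Reading that as a sign that clock skew is behind the failure (an inference, since the article does not state the cause), the skew can be measured before and after the fix. The following is a minimal sketch. The function name clock_skew is made up for this example, and the node name in the usage note is only an illustration.

```shell
#!/bin/sh
# Hypothetical helper: absolute difference in seconds between two epoch timestamps.
clock_skew() {
    diff=$(( $1 - $2 ))
    # Flip the sign when the second timestamp is the later one.
    [ "$diff" -lt 0 ] && diff=$(( 0 - diff ))
    echo "$diff"
}
```

From the loadbalancer, `clock_skew "$(date +%s)" "$(ssh rancher@worker3.svc.lan date +%s)"` prints how far worker3's clock is from the local one, including a second or so of SSH latency.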

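5.2 Docker cannot be installed on the Raspberry Pi

When the system clock is behind, apt update rejects the repositories' Release files as not valid yet, so packages such as docker cannot be installed. The log below reproduces the error by setting the date back:

svc@worker3:/etc $ sudo date -s 2022-01-01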

Sat 01 Jan 2022 12:00:00 AM UTC

svc@worker3:/etc $ sudo apt update

Hit:1 http://archive.raspberrypi.org/debian bullseye InRelease

Hit:2 http://deb.debian.org/debian bullseye InRelease

Hit:3 http://security.debian.org/debian-security bullseye-security InRelease

Hit:4 http://deb.debian.org/debian bullseye-updates InRelease

Reading package lists... Done

E: Release file for http://archive.raspberrypi.org/debian/dists/bullseye/InRelease is not valid yet (invalid for another 198d 21h 15min 7s). Updates for this repository will not be applied.

E: Release file for http://deb.debian.org/debian/dists/bullseye/InRelease is not valid yet (invalid for another 189d 9h 42min 46s). Updates for this repository will not be applied.

E: Release file for http://security.debian.org/debian-security/dists/bullseye-security/InRelease is not valid yet (invalid for another 197d 12h 11min 32s). Updates for this repository will not be applied.

E: Release file for http://deb.debian.org/debian/dists/bullseye-updates/InRelease is not valid yet (invalid for another 199d 2h 14min 28s). Updates for this repository will not be applied.

Method 1: set the clock by hand, for example sudo date -s "2022-07-28 12:00:31"

Method 2: systemctl restart ntp.service

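5.3 After the cluster is built, kubectl get nodes -A fails

kubectl cannot find the kubeconfig file, so the KUBECONFIG environment variable has to be set.

On the loadbalancer, add export KUBECONFIG=/home/rancher/kube_config_cluster.yml to /home/rancher/.bashrc, then run source ~/.bashrc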
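If you script this step, appending the export line blindly adds a duplicate on every run. The following is a minimal sketch of an idempotent version. The function name add_kubeconfig_export is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: append "export KUBECONFIG=<path>" to an rc file
# unless exactly that line is already present.
add_kubeconfig_export() {
    rc_file="$1"
    kubeconfig="$2"
    line="export KUBECONFIG=$kubeconfig"
    touch "$rc_file"
    # -x matches whole lines only, -F treats the pattern as a fixed string.
    grep -qxF "$line" "$rc_file" || echo "$line" >> "$rc_file"
}
```

As the rancher user, `add_kubeconfig_export ~/.bashrc /home/rancher/kube_config_cluster.yml` followed by `source ~/.bashrc` matches the manual steps above.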

5.4 A node deployed on an arm64 device such as a Raspberry Pi is NotReady

Set the network plugin to flannel in cluster.yml, as noted in 4.2. My full cluster.yml follows for reference.

# cat cluster.yml

# If you intended to deploy Kubernetes in an air-gapped environment,
# please consult the documentation on how to configure custom RKE images.
nodes:
- address: master1.svc.lan
  port: "22"
  internal_address: ""
  role:
  - controlplane
  - etcd
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: master2.svc.lan
  port: "22"
  internal_address: ""
  role:
  - controlplane
  - etcd
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: master3.svc.lan
  port: "22"
  internal_address: ""
  role:
  - controlplane
  - etcd
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: worker1.svc.lan
  port: "22"
  internal_address: ""
  role:
  - worker
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: worker2.svc.lan
  port: "22"
  internal_address: ""
  role:
  - worker
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: worker3.svc.lan
  port: "22"
  internal_address: ""
  role:
  - worker
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: worker4.svc.lan
  port: "22"
  internal_address: ""
  role:
  - worker
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: worker5.svc.lan
  port: "22"
  internal_address: ""
  role:
  - worker
  hostname_override: ""
  user: rancher
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
services:
  etcd:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    external_urls: []
    ca_cert: ""
    cert: ""
    key: ""
    path: ""
    uid: 0
    gid: 0
    snapshot: null
    retention: ""
    creation: ""
    backup_config: null
  kube-api:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    service_cluster_ip_range: 10.43.0.0/16
    service_node_port_range: ""
    pod_security_policy: false
    always_pull_images: false
    secrets_encryption_config: null
    audit_log: null
    admission_configuration: null
    event_rate_limit: null
  kube-controller:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_cidr: 10.42.0.0/16
    service_cluster_ip_range: 10.43.0.0/16
  scheduler:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
  kubelet:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_domain: cluster.local
    infra_container_image: ""
    cluster_dns_server: 10.43.0.10
    fail_swap_on: false
    generate_serving_certificate: false
  kubeproxy:
    image: ""
    extra_args: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_binds: []
    win_extra_env: []
network:
  plugin: flannel
  options: {}
  mtu: 0
  node_selector: {}
  update_strategy: null
  tolerations: []
authentication:
  strategy: x509
  sans: []
  webhook: null
addons: ""
addons_include: []
system_images:
  etcd: rancher/mirrored-coreos-etcd:v3.5.0
  alpine: rancher/rke-tools:v0.1.80
  nginx_proxy: rancher/rke-tools:v0.1.80
  cert_downloader: rancher/rke-tools:v0.1.80
  kubernetes_services_sidecar: rancher/rke-tools:v0.1.80
  kubedns: rancher/mirrored-k8s-dns-kube-dns:1.17.4
  dnsmasq: rancher/mirrored-k8s-dns-dnsmasq-nanny:1.17.4
  kubedns_sidecar: rancher/mirrored-k8s-dns-sidecar:1.17.4
  kubedns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.3
  coredns: rancher/mirrored-coredns-coredns:1.8.6
  coredns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.5
  nodelocal: rancher/mirrored-k8s-dns-node-cache:1.21.1
  kubernetes: rancher/hyperkube:v1.22.7-rancher1
  flannel: rancher/mirrored-coreos-flannel:v0.15.1
  flannel_cni: rancher/flannel-cni:v0.3.0-rancher6
  calico_node: rancher/mirrored-calico-node:v3.21.1
  calico_cni: rancher/mirrored-calico-cni:v3.21.1
  calico_controllers: rancher/mirrored-calico-kube-controllers:v3.21.1
  calico_ctl: rancher/mirrored-calico-ctl:v3.21.1
  calico_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.21.1
  canal_node: rancher/mirrored-calico-node:v3.21.1
  canal_cni: rancher/mirrored-calico-cni:v3.21.1
  canal_controllers: rancher/mirrored-calico-kube-controllers:v3.21.1
  canal_flannel: rancher/mirrored-flannelcni-flannel:v0.17.0
  canal_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.21.1
  weave_node: weaveworks/weave-kube:2.8.1
  weave_cni: weaveworks/weave-npc:2.8.1
  pod_infra_container: rancher/mirrored-pause:3.6
  ingress: rancher/nginx-ingress-controller:nginx-1.1.0-rancher1
  ingress_backend: rancher/mirrored-nginx-ingress-controller-defaultbackend:1.5-rancher1
  ingress_webhook: rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.1.1
  metrics_server: rancher/mirrored-metrics-server:v0.5.1
  windows_pod_infra_container: rancher/mirrored-pause:3.6
  aci_cni_deploy_container: noiro/cnideploy:5.1.1.0.1ae238a
  aci_host_container: noiro/aci-containers-host:5.1.1.0.1ae238a
  aci_opflex_container: noiro/opflex:5.1.1.0.1ae238a
  aci_mcast_container: noiro/opflex:5.1.1.0.1ae238a
  aci_ovs_container: noiro/openvswitch:5.1.1.0.1ae238a
  aci_controller_container: noiro/aci-containers-controller:5.1.1.0.1ae238a
  aci_gbp_server_container: noiro/gbp-server:5.1.1.0.1ae238a
  aci_opflex_server_container: noiro/opflex-server:5.1.1.0.1ae238a
ssh_key_path: ~/.ssh/id_rsa
ssh_cert_path: ""
ssh_agent_auth: false
authorization:
  mode: rbac
  options: {}
ignore_docker_version: null
enable_cri_dockerd: null
kubernetes_version: ""
private_registries: []
ingress:
  provider: ""
  options: {}
  node_selector: {}
  extra_args: {}
  dns_policy: ""
  extra_envs: []
  extra_volumes: []
  extra_volume_mounts: []
  update_strategy: null
  http_port: 0
  https_port: 0
  network_mode: ""
  tolerations: []
  default_backend: null
  default_http_backend_priority_class_name: ""
  nginx_ingress_controller_priority_class_name: ""
  default_ingress_class: null
cluster_name: ""
cloud_provider:
  name: ""
prefix_path: ""
win_prefix_path: ""
addon_job_timeout: 0
bastion_host:
  address: ""
  port: ""
  user: ""
  ssh_key: ""
  ssh_key_path: ""
  ssh_cert: ""
  ssh_cert_path: ""
  ignore_proxy_env_vars: false
monitoring:
  provider: ""
  options: {}
  node_selector: {}
  update_strategy: null
  replicas: null
  tolerations: []
  metrics_server_priority_class_name: ""
restore:
  restore: false
  snapshot_name: ""
rotate_encryption_key: false
dns: null

5.5 VMs started by KVM on Ubuntu cannot ssh into other VMs

Set up a network bridge and run the KVM VMs in bridged mode. Make sure every node is on the 10.8.5.* network.
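A quick way to spot a VM that is still on a NAT network is to test its address against the expected prefix. The following is a minimal sketch for a /24 network such as 10.8.5.*. The function name in_subnet24 is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when IPv4 address $1 lies in the /24 network
# whose first three octets are given as $2 (for example 10.8.5).
in_subnet24() {
    case "$1" in
        "$2".*) rest="${1#"$2".}" ;;
        *) return 1 ;;
    esac
    # What remains must be a single octet made of digits only.
    case "$rest" in
        ''|*[!0-9]*) return 1 ;;
    esac
    [ "$rest" -le 255 ]
}
```

For example, `in_subnet24 10.8.5.110 10.8.5` succeeds, while an address such as 192.168.122.15 (the range typically handed out by libvirt's default NAT network) fails and points to a VM that is not yet bridged.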
