- Deploying Using Google Cloud Build
- Deploying Using Azure Pipelines
  - Setting up Google Service Account
  - Storing Security Key
  - Creating CI/CD pipeline
- Final thoughts

Azure DevOps pipelines and the Azure cloud are, unsurprisingly, a natural fit. Deploying to Azure is streamlined by many ready-to-use templates and by the Azure CLI, which is installed by default on Microsoft-hosted agents. In reality, however, many companies have to deal with a multi-cloud environment. It would therefore be useful to manage our builds and deployments in one place, whatever the cloud provider.

In this recipe we look at the deployment options for a Google Cloud Function on GCP and walk through the detailed steps of creating a CI/CD pipeline in Azure DevOps. For this exercise we assume that you have already developed a function. If not, you can create one by following one of the many available tutorials, like this one.

Using Google Cloud Build

One way to deploy a cloud function is to use Google's native Cloud Build. We can set up a repository at https://source.cloud.google.com that is connected to an external repository and kept automatically in sync with our main repo. We can then create a Cloud Build trigger that runs a YAML pipeline not unlike an Azure one. When a new change is pushed to the Git repo, it is synced to the Google repository, which triggers the build and deployment.
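For comparison, a minimal Cloud Build configuration for such a trigger might look like the sketch below. It follows the pattern in Google's Cloud Build documentation of running `gcloud functions deploy` inside the `cloud-sdk` builder image. The function name and region are placeholders, the runtime is an assumption, and the Cloud Build service account will typically need the Cloud Functions Developer and Service Account User roles before the deploy succeeds.

```yaml
# cloudbuild.yaml: a minimal sketch, not a complete production config.
# Assumptions: [FUNCTION_NAME] and [REGION] are placeholders, nodejs20 is an
# assumed runtime, and the function source sits at the repository root.
steps:
  - name: 'gcr.io/google.com/cloudsdktool/cloud-sdk'
    args:
      - 'gcloud'
      - 'functions'
      - 'deploy'
      - '[FUNCTION_NAME]'     # quoted so YAML does not parse it as a list
      - '--region=[REGION]'
      - '--source=.'
      - '--trigger-http'
      - '--runtime=nodejs20'
```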

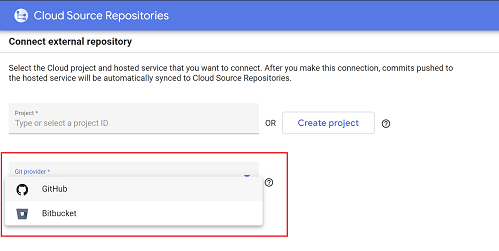

There are, however, several issues with this approach. First, the external repository can only be hosted on GitHub or Bitbucket, as seen in the screenshot. So if your source code is in Azure Repos or anywhere else, you are out of luck.

Most importantly, though, it moves control and auditing out of Azure DevOps, which contradicts our goal of keeping everything under one roof.

Using Azure Pipelines

Fortunately, Azure Pipelines is flexible enough to deploy to practically any environment. Below we outline the basic steps for deploying to Google Cloud Functions on GCP.

Setting up a Service Account

We need a Google service account to secure the communication between Azure Pipelines and GCP. Following the Google documentation, here is how to create one using Google Cloud Shell.

- Log in to the GCP Console.

- Select the project where your function is deployed.

- Activate Cloud Shell.

- Set default configuration values to save some typing. Replace [PROJECT_ID] and [ZONE] with appropriate values.

```bash
gcloud config set project [PROJECT_ID]
gcloud config set compute/zone [ZONE]
```

- Create a service account:

```bash
gcloud iam service-accounts create azure-pipelines-publisher --display-name "Azure Pipelines Publisher"
```

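- Assign IAM roles to the service account. The commands below grant the Storage Admin role, as in the Google documentation, plus the Cloud Functions Developer and Service Account User roles, which deploying a Cloud Function also requires:

```bash
# Look up the current project and the e-mail of the service account created above.
PROJECT_ID=$(gcloud config get-value core/project)
AZURE_PIPELINES_PUBLISHER=$(gcloud iam service-accounts list \
    --filter="displayName:Azure Pipelines Publisher" \
    --format='value(email)')

# Service Account User is granted project-wide here for brevity;
# it can be narrowed to the function's runtime service account.
for ROLE in roles/storage.admin roles/cloudfunctions.developer roles/iam.serviceAccountUser; do
    gcloud projects add-iam-policy-binding "$PROJECT_ID" \
        --member "serviceAccount:$AZURE_PIPELINES_PUBLISHER" \
        --role "$ROLE"
done
```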

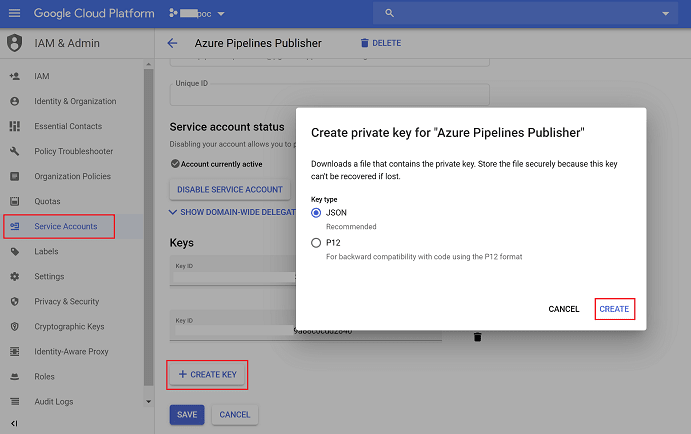

We also need to generate and download a service account key for later use in the Azure pipeline. The easiest way is to open the IAM & Admin / Service Accounts menu and select "Edit" on the azure-pipelines-publisher@[PROJECT_ID].iam.gserviceaccount.com account we just created. Then create a key of type JSON, as shown in the screenshot.

Keep the downloaded file safe, since it grants access to your project. We are going to use it in a moment.

Storing service account key in Azure DevOps

We are pretty much finished with Google Cloud Platform, so let's switch to Azure DevOps and continue there.

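To upload the JSON key file, go to the Library page under Pipelines in the navigation panel and select the "Secure files" tab. This is where we add our key to the library. The pipeline below refers to the file as GoogleServiceAccountKey.json, so either upload it under that name or adjust the secureFile input to match.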

After the key is uploaded, edit it and toggle "Authorize for all pipelines" to be able to use it in our pipeline.

Creating CI/CD pipeline

Our simple pipeline will deploy one cloud function to GCP. Of course, it can be extended with unit tests and other functions, but we want to show the bare minimum.
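If you do want unit tests, one option is to run them in steps placed before the deploy steps. The sketch below assumes a Node.js function with a `package-lock.json` (required by `npm ci`) and a `test` script in `package.json`. The Node.js version is also an assumption and should match your function's runtime.

```yaml
# Hypothetical test steps, to be placed before the deploy steps.
- task: NodeTool@0
  inputs:
    versionSpec: '20.x'   # assumption: match the function's runtime
  displayName: 'Install Node.js'

- script: |
    npm ci
    npm test
  displayName: 'Run unit tests'
```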

- First, let's use the secure key that we uploaded earlier:

```yaml
- task: DownloadSecureFile@1
  name: authkey
  displayName: 'Download Service Account Key'
  inputs:
    secureFile: 'GoogleServiceAccountKey.json'
    retryCount: '2'
```

- Next, we need the Google Cloud SDK to deploy our function.

!!! UPDATE 2020-05: Good news: the Google Cloud SDK (292.0.0) is now installed by default on "ubuntu-latest" hosts, so you can probably skip this step. See this link for more details: Ubuntu1804

The biggest challenge was that the Google Cloud SDK is not installed on Microsoft-hosted agents, which is understandable. There are a couple of ways to install it.

The official Google documentation did not work for me right out of the gate, but you can follow the link if you'd like to install the SDK using apt-get. Alternatively, we can download the package directly from Google's download site:

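```yaml
- script: |
    wget https://dl.google.com/dl/cloudsdk/release/google-cloud-sdk.tar.gz
    tar zxvf google-cloud-sdk.tar.gz && ./google-cloud-sdk/install.sh --quiet --usage-reporting=false --path-update=true
    PATH="$PWD/google-cloud-sdk/bin:${PATH}"
    gcloud --quiet components update
    # PATH changes inside a script step do not carry over to later steps,
    # so prepend the SDK's bin directory for the rest of the job.
    echo "##vso[task.prependpath]$PWD/google-cloud-sdk/bin"
  displayName: 'install gcloud SDK'
```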

- Finally, we are ready to deploy the function:

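```yaml
- script: |
    # Authenticate with the key downloaded by the 'authkey' step.
    gcloud auth activate-service-account --key-file $(authkey.secureFilePath)
    # Replace the [..] placeholders; nodejs8 has since been decommissioned,
    # so use a runtime that Cloud Functions currently supports.
    gcloud functions deploy [FUNCTION_NAME] --runtime nodejs8 --trigger-http --region=[REGION] --project=[PROJECT_ID]
  displayName: 'deploy cloud function'
```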

As usual, here is the full source code of the YAML pipeline:
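Putting the three steps together gives a pipeline along the lines of the sketch below. It assumes a `master` trigger branch and the `ubuntu-latest` image, so the SDK install step is left out in line with the 2020-05 update above. The function name, region, project and runtime are placeholder or assumed values that you will need to adjust. Because script steps start in the repository directory, `gcloud functions deploy` picks up the function source from the repo root by default.

```yaml
# azure-pipelines.yml: a minimal sketch assembled from the steps above.
# Assumptions: 'master' branch, ubuntu-latest image (Google Cloud SDK
# preinstalled), function source at the repository root.
trigger:
  - master

pool:
  vmImage: 'ubuntu-latest'

variables:
  functionName: 'FUNCTION_NAME'   # placeholder
  region: 'REGION'                # placeholder
  projectId: 'PROJECT_ID'         # placeholder
  runtime: 'nodejs20'             # assumption: a currently supported runtime

steps:
  - task: DownloadSecureFile@1
    name: authkey
    displayName: 'Download Service Account Key'
    inputs:
      secureFile: 'GoogleServiceAccountKey.json'
      retryCount: '2'

  - script: |
      gcloud auth activate-service-account --key-file $(authkey.secureFilePath)
      gcloud functions deploy $(functionName) --runtime $(runtime) --trigger-http --region=$(region) --project=$(projectId)
    displayName: 'deploy cloud function'
```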

Final thoughts

When you work in a multi-cloud environment, it is especially important to consolidate DevOps operations for better control, monitoring and auditing. Azure DevOps could be one answer, because it can deploy to multiple platforms and integrates many aspects of the software development life cycle into a cohesive, easy-to-use product. As an example, in this recipe we created a sample CI/CD pipeline that deploys a Google Cloud Function to GCP.

I hope this was useful. Here is a cat (in a cloud!) for you.
