Deploying MCP Server on Tencent Cloud Lighthouse: A Hands-On Guide

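This article is a complete, hands-on guide to deploying MCP Server (Model Container Platform Server) on Tencent Cloud Lighthouse. It first compares Lighthouse with conventional cloud servers. It then covers the prerequisites: preparing the environment, creating and configuring the server, and installing the required software. The core sections explain the MCP Server microservice architecture, deployment with Docker, the configuration files, and how to start the containers. Together they give developers an end-to-end recipe for deploying containerized AI model services from scratch.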

Introduction

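Artificial intelligence is advancing rapidly, and AI capabilities have become a core driver of digital transformation across industries. Tencent Cloud, one of China's leading cloud providers, offers a range of AI products and services. One of them is MCP Server (Model Container Platform Server), a model container platform service that makes it easy for developers to deploy and manage AI models.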

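This article explains how to deploy MCP Server on Tencent Cloud Lighthouse. Using practical scenarios, it shows how this stack can solve real business problems. After working through the guide, you will be able to:

- Understand the core functions and use cases of MCP Server
- Use Tencent Cloud Lighthouse for basic tasks
- Deploy and configure MCP Server on a Lighthouse instance
- Deploy and manage AI models as containers
- Build a complete AI application solution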

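1. Technical Background and Concepts

Tencent Cloud Lighthouse

Tencent Cloud Lighthouse is a new-generation cloud server product designed for lightweight workloads. Its main characteristics are:

- Ready out of the box: a large catalog of application images lets you deploy common applications in one click
- Simplified operations: an integrated management console makes the server easier to administer and maintain
- Cost-effective: it is optimized for lightweight workloads and offers compute at a better price-performance ratio
- Network optimization: it is tuned for the network environment in mainland China for a better access experience
- Secure and reliable: it builds on Tencent Cloud's mature virtualization technology and security framework to keep applications running securely and stably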

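Compared with Cloud Virtual Machine (CVM), deploying MCP Server on Lighthouse offers the following advantages:

- Cost-effectiveness: Lighthouse offers compute at a better price-performance ratio, which suits small and medium-sized AI deployments
- Fast deployment: Lighthouse application images let you complete the initial MCP Server deployment quickly
- Simpler management: the integrated Lighthouse console reduces the operational work of running MCP Server
- Scalability: you can adjust the server configuration as business needs change, to fit AI applications of different sizes
- Ecosystem integration: it works with other Tencent Cloud services (such as COS and CLS) to build a complete AI application solution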

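2. Environment Preparation and Prerequisites

2.1 Account Preparation

Complete the following before you start:

- Register a Tencent Cloud account: sign up on the Tencent Cloud website (https://cloud.tencent.com)
- Verify your identity: complete individual or enterprise real-name verification
- Top up the account: make sure the balance covers the server and related service fees
- Check permissions: make sure the account is allowed to create and manage Lighthouse instances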

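2.2 Local Environment

Prepare the following tools on your local machine:

- SSH client: for connecting to the Lighthouse instance remotely (PuTTY is recommended on Windows; on macOS/Linux use the built-in ssh command)
- Docker: for building and testing model containers locally
- Git: for version control and for fetching deployment scripts
- Text editor: an editor with syntax highlighting, such as VS Code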

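2.3 Required Background Knowledge

Deploying MCP Server assumes familiarity with:

- Linux basics: common commands and file system operations
- Docker: core concepts, building images, and managing containers
- Networking basics: TCP/IP, port mapping, and related concepts
- AI model basics: common model formats and the inference workflow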

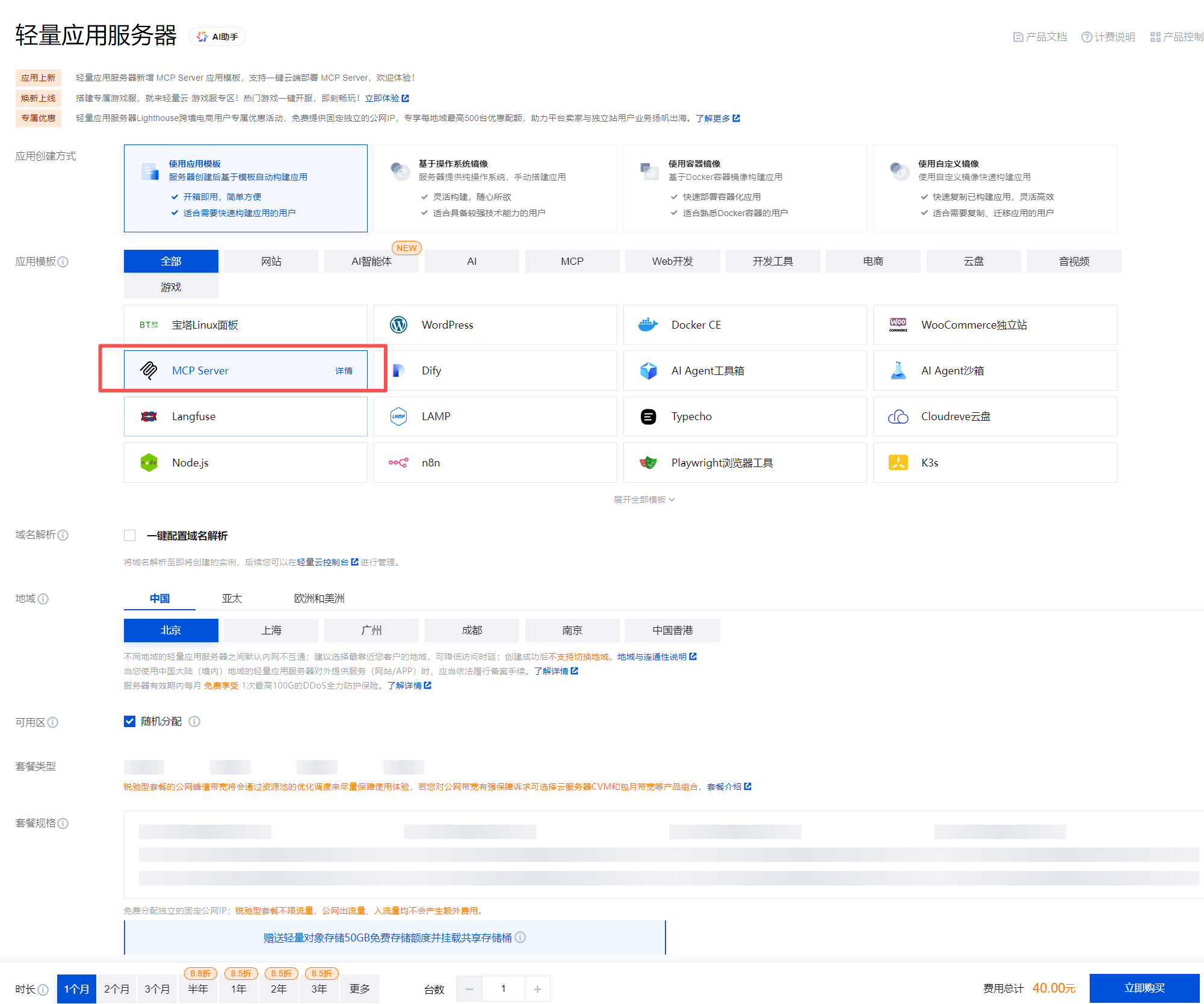

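3. Creating and Configuring the Lighthouse Instance

3.1 Create the Instance

- Log in to the Tencent Cloud console: go to the Tencent Cloud website and sign in with your registered account
- Open the Lighthouse console:
  - Find "Lighthouse" on the console home page
  - Click through to the Lighthouse management console
- Create an instance:
  - Click the "New Instance" button
  - Choose a region, ideally the one where most of your users are
  - Choose an instance plan that matches the compute needs of your AI models
  - Choose an image with a recent Linux distribution such as Ubuntu 20.04 (CentOS 8 reached end of life at the end of 2021, so prefer a maintained distribution)
  - Set the instance name and password
  - Confirm the configuration and complete payment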

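3.2 Basic Server Configuration

After the instance is created, complete the following basic configuration:

- Firewall rules:
  - On the instance details page, open the "Firewall" tab
  - Add rules for the required ports, including:
    - SSH (default 22)
    - MCP Server management port (default 8080)
    - Model inference ports (as your deployment requires)
  - Restrict the allowed source IP ranges where possible to improve security
- Network configuration:
  - Note the instance's public IP address
  - Configure DNS resolution for a domain name (optional, but recommended)
  - Test network connectivity
- System update:

```shell
# Ubuntu/Debian
sudo apt update && sudo apt upgrade -y

# CentOS/RHEL
sudo yum update -y
```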
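You can test network connectivity from your local machine without installing extra tools. The sketch below uses bash's built-in `/dev/tcp` redirection. `check_tcp_port` is a hypothetical helper name, and `timeout` comes from GNU coreutils:

```shell
#!/usr/bin/env bash
# check_tcp_port HOST PORT [TIMEOUT_SECONDS]
# Returns 0 if a TCP connection to HOST:PORT succeeds within the timeout,
# 1 if it fails, and 2 if arguments are missing.
check_tcp_port() {
  local host=$1 port=$2 timeout_s=${3:-3}
  if [[ -z $host || -z $port ]]; then
    echo "usage: check_tcp_port HOST PORT [TIMEOUT_SECONDS]" >&2
    return 2
  fi
  # The connection attempt runs in a child bash so that timeout can kill it
  if timeout "$timeout_s" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
    echo "$host:$port reachable"
    return 0
  fi
  echo "$host:$port NOT reachable" >&2
  return 1
}
```

For example, `check_tcp_port <public-ip> 22` checks the SSH rule. Once MCP Server is running, you can repeat the check for port 8080. A refused or timed-out connection usually means a firewall rule is missing or the service is not listening yet.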

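3.3 Installing Required Software

Install the dependencies before deploying MCP Server:

```shell
# Ubuntu/Debian
sudo apt install -y docker.io docker-compose git curl wget vim nginx

# Start Docker now and enable it at boot
sudo systemctl start docker
sudo systemctl enable docker

# CentOS/RHEL
# Note: docker-compose (and, on some releases, nginx) is not in the default
# CentOS repositories; enable EPEL or install it separately before section 4.
sudo yum install -y docker git curl wget vim nginx
sudo systemctl start docker
sudo systemctl enable docker

# Optional: let the current user run docker without sudo (log out and back in afterwards)
sudo usermod -aG docker "$USER"
```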
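Before moving on, you can confirm that every tool from this step is on PATH. The check below is a small sketch that relies only on bash's `command -v`. `require_cmds` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# require_cmds CMD...
# Prints each command missing from PATH and returns 1 if any is missing.
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# On the server, check the tools installed above:
# require_cmds docker docker-compose git curl wget nginx && echo "all tools present"
```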

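4. Deploying MCP Server

4.1 MCP Server Architecture

MCP Server uses a microservice architecture with the following main components:

- API Gateway: the single entry point, responsible for request routing and load balancing
- Model Manager: registers, updates, and deletes models
- Inference Engine: handles model inference requests
- Storage Service: stores and manages model files
- Monitoring Service: monitors system performance and collects logs

The Docker Compose file in section 4.3 runs the API gateway, model manager, and inference engine as containers, backed by MySQL and Redis. Sections 6.2 and 6.3 add Prometheus/Grafana for monitoring and an ELK stack for logs.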

4.2 Getting the MCP Server Deployment Package

MCP Server can be obtained in several ways. The official Docker images are recommended:

```shell
# Pull the core MCP Server images
docker pull tencentcloud/mcp-server:latest
docker pull tencentcloud/mcp-model-manager:latest
docker pull tencentcloud/mcp-inference-engine:latest

# Pull the images for dependent services
docker pull mysql:8.0
docker pull redis:6.0
docker pull nginx:latest
```

4.3 Preparing the Configuration Files

Create the MCP Server configuration directory and files:

```shell
# Create the working directory and give it to the current user so later steps do not need sudo
sudo mkdir -p /opt/mcp-server/{config,data,logs,scripts}
sudo chown -R "$USER":"$USER" /opt/mcp-server
cd /opt/mcp-server

# Create the Docker Compose file.
# The top-level networks key comes before services so that services appended
# later with `cat >>` (sections 6.2 and 6.3) end up under services.
cat > docker-compose.yml << 'EOF'
version: '3.8'

networks:
  mcp-network:
    driver: bridge

services:
  mysql:
    image: mysql:8.0
    container_name: mcp-mysql
    environment:
      MYSQL_ROOT_PASSWORD: mcp_root_password  # change in production
      MYSQL_DATABASE: mcp_server
      MYSQL_USER: mcp_user
      MYSQL_PASSWORD: mcp_password            # change in production
    volumes:
      - ./data/mysql:/var/lib/mysql
    ports:
      - "3306:3306"
    networks:
      - mcp-network

  redis:
    image: redis:6.0
    container_name: mcp-redis
    ports:
      - "6379:6379"
    networks:
      - mcp-network

  model-manager:
    image: tencentcloud/mcp-model-manager:latest
    container_name: mcp-model-manager
    ports:
      - "8081:8080"
    environment:
      - DATABASE_HOST=mysql
      - DATABASE_PORT=3306
      - DATABASE_NAME=mcp_server
      - DATABASE_USER=mcp_user
      - DATABASE_PASSWORD=mcp_password
      - REDIS_HOST=redis
      - REDIS_PORT=6379
    depends_on:
      - mysql
      - redis
    networks:
      - mcp-network

  inference-engine:
    image: tencentcloud/mcp-inference-engine:latest
    container_name: mcp-inference-engine
    ports:
      - "8082:8080"
    environment:
      - MODEL_MANAGER_HOST=model-manager
      - MODEL_MANAGER_PORT=8080
      - REDIS_HOST=redis
      - REDIS_PORT=6379
    depends_on:
      - model-manager
      - redis
    networks:
      - mcp-network

  api-gateway:
    image: tencentcloud/mcp-server:latest
    container_name: mcp-api-gateway
    ports:
      - "8080:8080"
    environment:
      - MODEL_MANAGER_HOST=model-manager
      - MODEL_MANAGER_PORT=8080
      - INFERENCE_ENGINE_HOST=inference-engine
      - INFERENCE_ENGINE_PORT=8080
    depends_on:
      - model-manager
      - inference-engine
    networks:
      - mcp-network
EOF
```

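4.4 Starting MCP Server

Start the MCP Server services with Docker Compose:

```shell
# Go to the project directory
cd /opt/mcp-server

# Start the services in the background
docker-compose up -d

# Check service status
docker-compose ps

# Follow the service logs (Ctrl+C to stop)
docker-compose logs -f
```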

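4.5 Verifying the Services

Once the services are up, verify that each one is running correctly:

```shell
# Check that the service ports are listening
# (netstat is in the net-tools package; `sudo ss -tlnp` is an alternative)
sudo netstat -tlnp | grep -E "8080|8081|8082|3306|6379"

# Check container status
docker ps | grep mcp

# Check that the API gateway responds
curl -X GET http://localhost:8080/health
```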
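Right after `docker-compose up -d`, the health check can fail simply because the containers (MySQL in particular) are still initializing. The helper below is a minimal sketch that reruns any command until it succeeds or a retry budget runs out. `retry` is a hypothetical name and is not part of MCP Server:

```shell
#!/usr/bin/env bash
# retry ATTEMPTS DELAY_SECONDS CMD [ARGS...]
# Runs CMD until it exits 0, at most ATTEMPTS times, sleeping DELAY_SECONDS
# between attempts. Returns 0 on the first success, 1 if every attempt fails.
retry() {
  local attempts=$1 delay=$2 i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    if ((i < attempts)); then
      echo "attempt $i/$attempts failed, retrying in ${delay}s" >&2
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, `retry 30 10 curl -sf http://localhost:8080/health` waits roughly five minutes for the gateway. The `-f` flag makes curl treat HTTP error responses as failures.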

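5. Deploying AI Models in Practice

5.1 Preparing the Model

Before deploying an AI model, prepare its files. The example below uses an image classification model:

```shell
# Create the model directory
mkdir -p /opt/mcp-server/models/image-classifier

# Prepare the model files (TensorFlow SavedModel format in this example).
# In practice, place your trained model in this directory. TensorFlow Serving
# expects numbered version subdirectories, e.g. image-classifier/1/saved_model.pb.
cd /opt/mcp-server/models/image-classifier

# Create the model description file
cat > model.json << 'EOF'
{
  "name": "image-classifier",
  "version": "1.0.0",
  "framework": "tensorflow",
  "input": {
    "type": "image",
    "format": "jpeg",
    "shape": [224, 224, 3]
  },
  "output": {
    "type": "classification",
    "classes": 1000
  },
  "description": "Image classification model based on ResNet50"
}
EOF
```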
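TensorFlow Serving only loads numbered version subdirectories that contain a `saved_model.pb`. A quick layout check before building the image can therefore prevent a failed deployment. The sketch below assumes that layout. `check_savedmodel_layout` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# check_savedmodel_layout MODEL_DIR
# Prints each numeric version directory under MODEL_DIR that contains
# saved_model.pb; returns 1 if there are none.
check_savedmodel_layout() {
  local model_dir=$1 found=0 d
  for d in "$model_dir"/*/; do
    d=${d%/}
    # Only purely numeric directory names count as model versions
    [[ $(basename "$d") =~ ^[0-9]+$ ]] || continue
    if [[ -f $d/saved_model.pb ]]; then
      echo "version $(basename "$d"): $d"
      found=1
    fi
  done
  if ((found == 0)); then
    echo "no <version>/saved_model.pb found under $model_dir" >&2
    return 1
  fi
}
```

Run it as `check_savedmodel_layout /opt/mcp-server/models/image-classifier` after copying the trained model into place.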

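5.2 Containerizing the Model

To deploy the model in MCP Server, package it as a Docker image:

```shell
# Build from the models directory so that image-classifier/ is inside the build context
cd /opt/mcp-server/models

# Create the Dockerfile
cat > Dockerfile << 'EOF'
FROM tensorflow/serving:2.8.0

# Copy the model files, including the numbered version directories
COPY image-classifier /models/image-classifier

# The base image's entrypoint starts tensorflow_model_server with
# --model_name=${MODEL_NAME} --model_base_path=${MODEL_BASE_PATH}/${MODEL_NAME}
# and REST on port 8501, so these variables are enough. A custom CMD would be
# appended to that command line as extra arguments.
ENV MODEL_NAME=image-classifier
ENV MODEL_BASE_PATH=/models

# Expose the TensorFlow Serving gRPC (8500) and REST (8501) ports
EXPOSE 8500 8501
EOF

# Build the model image
docker build -t mcp-model-image-classifier:1.0 .

# Verify that the image was built
docker images | grep mcp-model-image-classifier
```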

5.3 Registering the Model with MCP Server

Register the model through the MCP Server API:

```shell
# Register the model
curl -X POST http://localhost:8080/api/v1/models \
  -H "Content-Type: application/json" \
  -d '{
    "name": "image-classifier",
    "version": "1.0.0",
    "description": "Image classification model based on ResNet50",
    "framework": "tensorflow",
    "image": "mcp-model-image-classifier:1.0",
    "port": 8501,
    "health_check_path": "/v1/models/image-classifier"
  }'

# List the registered models
curl -X GET http://localhost:8080/api/v1/models
```

5.4 Deploying and Starting the Model

After registration, deploy and start the model:

```shell
# Deploy the model
curl -X POST http://localhost:8080/api/v1/models/image-classifier/deploy

# Check the deployment status
curl -X GET http://localhost:8080/api/v1/models/image-classifier/status

# Test inference (replace base64_encoded_image_data with a real Base64-encoded image)
curl -X POST http://localhost:8080/api/v1/models/image-classifier/predict \
  -H "Content-Type: application/json" \
  -d '{
    "instances": [
      {
        "input": "base64_encoded_image_data"
      }
    ]
  }'
```
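Real images produce long Base64 strings, and Linux typically limits a single command-line argument to 128 KiB. Writing the request body to a file and sending it with `curl -d @file` is therefore more robust. The sketch below assumes the `instances`/`input` request shape used above. `build_predict_payload` is a hypothetical helper, and `base64 -w0` is the GNU coreutils flag that disables line wrapping:

```shell
#!/usr/bin/env bash
# build_predict_payload IMAGE_FILE OUTPUT_JSON
# Writes {"instances":[{"input":"<base64 of IMAGE_FILE>"}]} to OUTPUT_JSON.
build_predict_payload() {
  local image=$1 out=$2
  [[ -r $image ]] || { echo "cannot read $image" >&2; return 1; }
  {
    printf '{"instances":[{"input":"'
    base64 -w0 "$image"   # one unwrapped line, so the JSON string stays valid
    printf '"}]}'
  } > "$out"
}
```

Usage: `build_predict_payload cat.jpg /tmp/payload.json`, then `curl -X POST http://localhost:8080/api/v1/models/image-classifier/predict -H "Content-Type: application/json" -d @/tmp/payload.json`.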

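6. Performance Optimization and Monitoring

6.1 Resource Tuning

To improve MCP Server performance, tune the system resources:

```shell
# Create the system tuning script
mkdir -p /opt/mcp-server/scripts
cat > /opt/mcp-server/scripts/system-tuning.sh << 'EOF'
#!/bin/bash
set -e

# Kernel parameters. This dedicated file under /etc/sysctl.d is overwritten on
# each run, so re-running the script does not append duplicate lines to /etc/sysctl.conf.
cat > /etc/sysctl.d/99-mcp-server.conf << 'SYSCTLEOF'
net.core.somaxconn = 65535
net.ipv4.tcp_max_syn_backlog = 65535
net.ipv4.tcp_fin_timeout = 30
net.ipv4.tcp_keepalive_time = 1200
net.ipv4.tcp_max_tw_buckets = 5000
SYSCTLEOF

# Apply the kernel parameters from all sysctl configuration files
sysctl --system

# Docker daemon configuration (replaces any existing /etc/docker/daemon.json)
mkdir -p /etc/docker
cat > /etc/docker/daemon.json << 'DOCKEREOF'
{
  "registry-mirrors": ["https://mirror.ccs.tencentyun.com"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m",
    "max-file": "3"
  },
  "default-ulimits": {
    "nofile": {
      "Name": "nofile",
      "Hard": 65536,
      "Soft": 65536
    }
  }
}
DOCKEREOF

# Restart Docker to apply the configuration. Containers without a restart
# policy stay stopped, so run `docker-compose up -d` in /opt/mcp-server afterwards.
systemctl restart docker
EOF

# Run the tuning script
chmod +x /opt/mcp-server/scripts/system-tuning.sh
sudo /opt/mcp-server/scripts/system-tuning.sh
```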

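6.2 Monitoring Setup

Set up monitoring to track the runtime status of MCP Server in real time:

```shell
# Pull the monitoring images
docker pull prom/prometheus:latest
docker pull grafana/grafana:latest

# Create the Prometheus configuration
mkdir -p /opt/mcp-server/monitoring/prometheus
cat > /opt/mcp-server/monitoring/prometheus/prometheus.yml << 'EOF'
global:
  scrape_interval: 15s

scrape_configs:
  # Assumes each MCP service exposes Prometheus metrics at the default /metrics path
  - job_name: 'mcp-server'
    static_configs:
      - targets: ['api-gateway:8080', 'model-manager:8080', 'inference-engine:8080']

  # Host metrics from node_exporter, which must be installed separately.
  # Inside the Prometheus container "localhost" is the container itself, so
  # replace this with an address where node_exporter is reachable.
  - job_name: 'system'
    static_configs:
      - targets: ['localhost:9100']
EOF

# Create the Grafana data directory. The Grafana image runs as UID 472,
# so the bind-mounted directory must be writable by that user.
mkdir -p /opt/mcp-server/monitoring/grafana
sudo chown -R 472:472 /opt/mcp-server/monitoring/grafana

# Append the monitoring services to docker-compose.yml
# (they must end up under the top-level services key)
cat >> /opt/mcp-server/docker-compose.yml << 'EOF'

  prometheus:
    image: prom/prometheus:latest
    container_name: mcp-prometheus
    ports:
      - "9090:9090"
    volumes:
      - ./monitoring/prometheus/prometheus.yml:/etc/prometheus/prometheus.yml
    networks:
      - mcp-network

  grafana:
    image: grafana/grafana:latest
    container_name: mcp-grafana
    ports:
      - "3000:3000"
    environment:
      - GF_SECURITY_ADMIN_PASSWORD=admin123  # change in production
    volumes:
      - ./monitoring/grafana:/var/lib/grafana
    depends_on:
      - prometheus
    networks:
      - mcp-network
EOF

# Restart the services to apply the monitoring configuration
cd /opt/mcp-server
docker-compose down
docker-compose up -d
```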

6.3 Log Management

Set up log management to make troubleshooting easier:

```shell
# Pull the ELK Stack images
docker pull docker.elastic.co/elasticsearch/elasticsearch:7.17.0
docker pull docker.elastic.co/logstash/logstash:7.17.0
docker pull docker.elastic.co/kibana/kibana:7.17.0

# Create the logging directories. Elasticsearch runs as UID 1000 in its
# container and needs write access to its bind-mounted data directory.
mkdir -p /opt/mcp-server/logging/{elasticsearch,logstash,kibana}
sudo chown -R 1000:1000 /opt/mcp-server/logging/elasticsearch

# Create the Logstash pipeline configuration
cat > /opt/mcp-server/logging/logstash/logstash.conf << 'EOF'
input {
  file {
    path => "/var/log/mcp-server/*.log"
    start_position => "beginning"
    sincedb_path => "/dev/null"
  }
}

filter {
  json {
    source => "message"
  }
  date {
    match => [ "timestamp", "ISO8601" ]
  }
}

output {
  elasticsearch {
    hosts => ["elasticsearch:9200"]
    index => "mcp-server-%{+YYYY.MM.dd}"
  }
}
EOF

# Append the logging services to docker-compose.yml
# (they must end up under the top-level services key)
cat >> /opt/mcp-server/docker-compose.yml << 'EOF'

  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.17.0
    container_name: mcp-elasticsearch
    environment:
      - discovery.type=single-node
      - ES_JAVA_OPTS=-Xms512m -Xmx512m
    ports:
      - "9200:9200"
      - "9300:9300"
    volumes:
      - ./logging/elasticsearch:/usr/share/elasticsearch/data
    networks:
      - mcp-network

  logstash:
    image: docker.elastic.co/logstash/logstash:7.17.0
    container_name: mcp-logstash
    ports:
      - "5044:5044"
    volumes:
      - ./logging/logstash/logstash.conf:/usr/share/logstash/pipeline/logstash.conf
      - ./logs:/var/log/mcp-server
    depends_on:
      - elasticsearch
    networks:
      - mcp-network

  kibana:
    image: docker.elastic.co/kibana/kibana:7.17.0
    container_name: mcp-kibana
    ports:
      - "5601:5601"
    environment:
      - ELASTICSEARCH_HOSTS=["http://elasticsearch:9200"]
    depends_on:
      - elasticsearch
    networks:
      - mcp-network
EOF

# Restart the services to apply the logging configuration
cd /opt/mcp-server
docker-compose down
docker-compose up -d
```
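The Logstash pipeline above parses each line with the `json` filter and reads the event time from a `timestamp` field in ISO 8601 format. Logs written to `/opt/mcp-server/logs` therefore need to follow that shape. The sketch below is a minimal shell logger that emits such lines. `log_json` and the `level` field are assumptions and are not part of MCP Server; messages are expected to be single-line:

```shell
#!/usr/bin/env bash
# log_json LEVEL MESSAGE
# Prints one JSON object per line:
#   {"timestamp":"<UTC ISO 8601>","level":"...","message":"..."}
log_json() {
  local level=$1 msg=$2
  # Escape backslashes first, then double quotes, so the message stays valid JSON
  msg=$(printf '%s' "$msg" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
  printf '{"timestamp":"%s","level":"%s","message":"%s"}\n' \
    "$(date -u +%Y-%m-%dT%H:%M:%SZ)" "$level" "$msg"
}
```

For example, `log_json INFO "model image-classifier deployed" >> /opt/mcp-server/logs/app.log` produces a line that Logstash indexes into the daily `mcp-server-*` index.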

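7. Security Configuration and Access Control

7.1 Network Security

Configure network security policies to protect MCP Server from unauthorized access:

```shell
# Create the security setup script (Ubuntu/Debian)
cat > /opt/mcp-server/scripts/security-setup.sh << 'EOF'
#!/bin/bash

# Install fail2ban to block brute-force attempts, and iptables-persistent so
# that the rules saved to /etc/iptables/rules.v4 below are restored at boot
apt update
DEBIAN_FRONTEND=noninteractive apt install -y fail2ban iptables-persistent

# Configure fail2ban
cat > /etc/fail2ban/jail.local << 'JAILCONF'
[sshd]
enabled = true
port = ssh
filter = sshd
logpath = /var/log/auth.log
maxretry = 3
bantime = 3600
findtime = 600

[nginx-http-auth]
enabled = true
port = http,https
filter = nginx-http-auth
logpath = /var/log/nginx/error.log
maxretry = 3
bantime = 3600
findtime = 600
JAILCONF

# Restart fail2ban so it picks up jail.local, and enable it at boot
systemctl restart fail2ban
systemctl enable fail2ban

# iptables rules: allow loopback, established connections, SSH, HTTP/HTTPS and
# the API gateway (8080), then drop all other inbound traffic to the host.
# Docker publishes container ports through its own chains, so these INPUT rules
# do not filter published ports such as 3306 or 6379; restrict those with the
# Lighthouse firewall, the DOCKER-USER chain, or by binding them to 127.0.0.1.
iptables -A INPUT -i lo -j ACCEPT
iptables -A INPUT -m state --state ESTABLISHED,RELATED -j ACCEPT
iptables -A INPUT -p tcp --dport 22 -j ACCEPT
iptables -A INPUT -p tcp --dport 80 -j ACCEPT
iptables -A INPUT -p tcp --dport 443 -j ACCEPT
iptables -A INPUT -p tcp --dport 8080 -j ACCEPT
iptables -A INPUT -j DROP

# Save the rules
iptables-save > /etc/iptables/rules.v4
EOF

# Run the security setup
chmod +x /opt/mcp-server/scripts/security-setup.sh
sudo /opt/mcp-server/scripts/security-setup.sh
```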
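Docker publishes container ports through its own iptables chains rather than the INPUT chain, so host firewall rules like the ones above do not filter them. It is worth auditing which published ports listen on all interfaces. The sketch below reads the output of `docker ps --format '{{.Names}}|{{.Ports}}'` and prints every container port bound to `0.0.0.0`. `find_public_bindings` is a hypothetical helper, and the `|` separator is an arbitrary choice:

```shell
#!/usr/bin/env bash
# find_public_bindings
# Reads "NAME|PORTS" lines on stdin and prints "NAME HOST_PORT" for every
# port published on 0.0.0.0 (i.e. reachable on all interfaces).
find_public_bindings() {
  local name ports entry
  local -a entries
  while IFS='|' read -r name ports; do
    # PORTS looks like "0.0.0.0:3306->3306/tcp, :::3306->3306/tcp, 33060/tcp"
    IFS=',' read -ra entries <<< "$ports"
    for entry in "${entries[@]}"; do
      entry=${entry# }   # drop the space that follows each comma
      if [[ $entry =~ ^0\.0\.0\.0:([0-9]+)- ]]; then
        echo "$name ${BASH_REMATCH[1]}"
      fi
    done
  done
}
```

Usage: `docker ps --format '{{.Names}}|{{.Ports}}' | find_public_bindings`. With the Compose file from section 4.3, expect MySQL (3306) and Redis (6379) in the output. Those ports can be closed in the Lighthouse firewall, or published as `"127.0.0.1:3306:3306"`.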

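7.2 API Authentication

Configure API authentication to protect the MCP Server API:

```shell
# Create the JWT authentication middleware (for a Node.js/Express service)
mkdir -p /opt/mcp-server/auth
cat > /opt/mcp-server/auth/jwt-middleware.js << 'EOF'
const jwt = require('jsonwebtoken');
const fs = require('fs');

// Load the RSA key pair generated below. Verification only needs the public
// key; the private key is for whichever component issues tokens.
const privateKey = fs.readFileSync('/opt/mcp-server/auth/private.key', 'utf8');
const publicKey = fs.readFileSync('/opt/mcp-server/auth/public.key', 'utf8');

// JWT authentication middleware: expects "Authorization: Bearer <token>"
function authenticateToken(req, res, next) {
  const authHeader = req.headers['authorization'];
  const token = authHeader && authHeader.split(' ')[1];
  if (token == null) return res.sendStatus(401);

  // Accept only RS256 so tokens signed with any other algorithm are rejected
  jwt.verify(token, publicKey, { algorithms: ['RS256'] }, (err, user) => {
    if (err) return res.sendStatus(403);
    req.user = user;
    next();
  });
}

module.exports = { authenticateToken };
EOF

# Generate the RSA key pair for JWT signing and keep the private key private
openssl genrsa -out /opt/mcp-server/auth/private.key 2048
chmod 600 /opt/mcp-server/auth/private.key
openssl rsa -in /opt/mcp-server/auth/private.key -pubout -out /opt/mcp-server/auth/public.key

# Create the API key management script
cat > /opt/mcp-server/scripts/api-key-manager.sh << 'EOF'
#!/bin/bash
# Simple API key management. Keys are opaque HMAC-SHA256 values, not JWTs:
# the expiry time is only an HMAC input and cannot be read back from the key.

KEYS_FILE=/opt/mcp-server/auth/api-keys.txt
# Replace this placeholder secret with a long random value before use
HMAC_SECRET="mcp-server-secret"

# Generate an API key
generate_api_key() {
  local key_name=$1
  local expiration_days=${2:-365}
  local payload token
  payload="{\"name\":\"$key_name\",\"exp\":$(($(date +%s) + expiration_days * 24 * 3600))}"
  token=$(printf '%s' "$payload" | openssl dgst -sha256 -hmac "$HMAC_SECRET" -binary | base64)
  echo "API Key for $key_name: $token"
  echo "$key_name:$token" >> "$KEYS_FILE"
  chmod 600 "$KEYS_FILE"
}

# Validate an API key
validate_api_key() {
  local provided_key=$1
  if [ -n "$provided_key" ] && grep -q ":$provided_key$" "$KEYS_FILE" 2>/dev/null; then
    echo "Valid API key"
    return 0
  else
    echo "Invalid API key"
    return 1
  fi
}

# Remove expired API keys
cleanup_expired_keys() {
  # Placeholder, not implemented: the expiry is not stored in api-keys.txt,
  # so it would first have to be recorded alongside each key.
  echo "Cleaning up expired API keys..."
}

case "$1" in
  generate)
    generate_api_key "$2" "$3"
    ;;
  validate)
    validate_api_key "$2"
    ;;
  cleanup)
    cleanup_expired_keys
    ;;
  *)
    echo "Usage: $0 {generate|validate|cleanup} [args...]"
    exit 1
    ;;
esac
EOF

chmod +x /opt/mcp-server/scripts/api-key-manager.sh
```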
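The middleware above verifies RS256-signed JWTs against `public.key`. Nothing in this section issues such tokens, however, because the API key script produces opaque HMAC values rather than JWTs. The sketch below mints an RS256 JWT from `private.key` using only `openssl`, `tr`, and `date`. The `mint_jwt` name and the `sub`/`iat`/`exp` claims are assumptions about what your services expect:

```shell
#!/usr/bin/env bash
# b64url: Base64url-encode stdin without padding, as JWTs require
b64url() {
  openssl base64 -A | tr '/+' '_-' | tr -d '='
}

# mint_jwt PRIVATE_KEY SUBJECT [TTL_SECONDS]
# Prints header.payload.signature, signed with RSASSA-PKCS1-v1_5 and SHA-256 (RS256).
mint_jwt() {
  local key=$1 sub=$2 ttl=${3:-3600}
  local now header payload signing_input signature
  now=$(date +%s)
  header=$(printf '%s' '{"alg":"RS256","typ":"JWT"}' | b64url)
  payload=$(printf '{"sub":"%s","iat":%d,"exp":%d}' "$sub" "$now" "$((now + ttl))" | b64url)
  signing_input="$header.$payload"
  signature=$(printf '%s' "$signing_input" | openssl dgst -sha256 -sign "$key" -binary | b64url)
  printf '%s.%s\n' "$signing_input" "$signature"
}
```

Usage: `token=$(mint_jwt /opt/mcp-server/auth/private.key ci-bot 3600)`, then send `-H "Authorization: Bearer $token"` with curl. `jwt.verify` in the middleware also checks the `exp` claim.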

7.3 Data Encryption

Configure data encryption to protect sensitive information:

```shell
# Install the encryption tools (usually preinstalled)
sudo apt install -y openssl

# Create the data encryption script
cat > /opt/mcp-server/scripts/data-encryption.sh << 'EOF'
#!/bin/bash

KEY_FILE="/opt/mcp-server/encryption/keyfile.key"

# Create the encryption key
create_encryption_key() {
  if [ ! -f "$KEY_FILE" ]; then
    mkdir -p "$(dirname "$KEY_FILE")"
    openssl rand -base64 32 > "$KEY_FILE"
    chmod 600 "$KEY_FILE"
    echo "Encryption key created at $KEY_FILE"
  else
    echo "Encryption key already exists"
  fi
}

# Encrypt a file. -pbkdf2 replaces OpenSSL's deprecated default key derivation;
# decryption must use the same options.
encrypt_file() {
  local input_file=$1
  local output_file="${2:-$input_file.enc}"
  if [ ! -f "$KEY_FILE" ]; then
    echo "Encryption key not found. Creating one..."
    create_encryption_key
  fi
  openssl enc -aes-256-cbc -pbkdf2 -salt -in "$input_file" -out "$output_file" -kfile "$KEY_FILE" || return 1
  echo "File encrypted: $output_file"
}

# Decrypt a file
decrypt_file() {
  local input_file=$1
  local output_file="${2:-${input_file%.enc}}"
  openssl enc -aes-256-cbc -pbkdf2 -d -in "$input_file" -out "$output_file" -kfile "$KEY_FILE" || return 1
  echo "File decrypted: $output_file"
}

# Encrypt the database connection settings; the plaintext is deleted only if encryption succeeded
encrypt_db_config() {
  local db_config_file="/opt/mcp-server/config/database.json"
  if [ -f "$db_config_file" ]; then
    encrypt_file "$db_config_file" && rm "$db_config_file" && echo "Database configuration encrypted"
  else
    echo "Database configuration file not found"
  fi
}

case "$1" in
  create-key)
    create_encryption_key
    ;;
  encrypt)
    encrypt_file "$2" "$3"
    ;;
  decrypt)
    decrypt_file "$2" "$3"
    ;;
  encrypt-db)
    encrypt_db_config
    ;;
  *)
    echo "Usage: $0 {create-key|encrypt|decrypt|encrypt-db} [args...]"
    exit 1
    ;;
esac
EOF

chmod +x /opt/mcp-server/scripts/data-encryption.sh
mkdir -p /opt/mcp-server/encryption
/opt/mcp-server/scripts/data-encryption.sh create-key
```
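Deleting a plaintext file after encrypting it is only safe if the ciphertext is known to decrypt back to the same bytes. The sketch below is a verify-before-delete variant that uses `openssl enc -aes-256-cbc -pbkdf2` with a key file passed through `-kfile`. `encrypt_and_verify` is a hypothetical helper, not part of the script above:

```shell
#!/usr/bin/env bash
# encrypt_and_verify INPUT KEY_FILE [OUTPUT]
# Encrypts INPUT, decrypts the result to a pipe, and removes INPUT only if the
# SHA-256 digests of the original and the round-tripped data match.
encrypt_and_verify() {
  local input=$1 key_file=$2 output=${3:-$1.enc}
  local before after
  openssl enc -aes-256-cbc -pbkdf2 -salt -in "$input" -out "$output" -kfile "$key_file" || return 1
  before=$(sha256sum < "$input")
  after=$(openssl enc -aes-256-cbc -pbkdf2 -d -in "$output" -kfile "$key_file" | sha256sum)
  if [[ $before != "$after" ]]; then
    echo "verification failed; keeping $input" >&2
    rm -f "$output"
    return 1
  fi
  rm -- "$input"
  echo "encrypted and verified: $output"
}
```

For example, `encrypt_and_verify /opt/mcp-server/config/database.json /opt/mcp-server/encryption/keyfile.key` leaves only `database.json.enc` behind, and only after a successful round trip.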

8. Automated Deployment and CI/CD Integration

8.1 Deployment Script

Create an automated deployment script to simplify the MCP Server deployment process:

```shell
# Create the deployment script
cat > /opt/mcp-server/scripts/deploy.sh << 'EOF'
#!/bin/bash
# Automated MCP Server deployment script
set -e

# Configuration
PROJECT_DIR="/opt/mcp-server"
COMPOSE_FILE="$PROJECT_DIR/docker-compose.yml"
LOG_FILE="$PROJECT_DIR/logs/deploy.log"
mkdir -p "$PROJECT_DIR/logs"

# Logging helper
log() {
  echo "[$(date '+%Y-%m-%d %H:%M:%S')] $1" | tee -a "$LOG_FILE"
}

# Check prerequisites
check_prerequisites() {
  log "Checking prerequisites..."
  # Is Docker installed?
  if ! command -v docker &> /dev/null; then
    log "ERROR: Docker is not installed"
    exit 1
  fi
  # Is Docker Compose installed?
  if ! command -v docker-compose &> /dev/null; then
    log "ERROR: Docker Compose is not installed"
    exit 1
  fi
  log "Prerequisites check passed"
}

# Pull the latest images
pull_images() {
  log "Pulling latest images..."
  cd "$PROJECT_DIR"
  docker-compose -f "$COMPOSE_FILE" pull
  log "Images pulled successfully"
}

# Start the services
start_services() {
  log "Starting services..."
  cd "$PROJECT_DIR"
  docker-compose -f "$COMPOSE_FILE" up -d
  log "Services started successfully"
}

# Wait until the API gateway health check passes (up to 30 x 10 s)
wait_for_services() {
  log "Waiting for services to be ready..."
  local max_attempts=30
  local attempt=1
  while [ $attempt -le $max_attempts ]; do
    if curl -s -f http://localhost:8080/health > /dev/null; then
      log "Services are ready"
      return 0
    fi
    log "Attempt $attempt/$max_attempts: Services not ready yet, waiting..."
    sleep 10
    attempt=$((attempt + 1))
  done
  log "ERROR: Services failed to start within timeout"
  exit 1
}

# Deploy models
deploy_models() {
  log "Deploying models..."
  # Placeholder: add the model registration and deployment steps here
  log "Models deployed successfully"
}

# Main deployment flow
main() {
  log "Starting MCP Server deployment..."
  check_prerequisites
  pull_images
  start_services
  wait_for_services
  deploy_models
  log "MCP Server deployment completed successfully"
}

main
EOF

chmod +x /opt/mcp-server/scripts/deploy.sh
```

8.2 CI/CD Pipeline

Configure a CI/CD pipeline to automate deployment:

```shell
# Create the GitHub Actions workflow
mkdir -p /opt/mcp-server/.github/workflows
cat > /opt/mcp-server/.github/workflows/deploy.yml << 'EOF'
name: MCP Server Deployment

on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]

jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v2

      # GitHub-hosted Ubuntu runners ship Docker Compose v2 as "docker compose";
      # the standalone docker-compose v1 binary is no longer preinstalled.
      - name: Test Docker Compose
        run: |
          docker compose config
          docker compose pull
          docker compose up -d
          sleep 30
          docker compose ps
          docker compose down

  deploy:
    needs: test
    runs-on: ubuntu-latest
    if: github.ref == 'refs/heads/main'
    steps:
      - uses: actions/checkout@v3

      - name: Deploy to Tencent Cloud Lighthouse
        uses: appleboy/ssh-action@v0.1.5
        with:
          host: ${{ secrets.LIGHTHOUSE_IP }}
          username: ${{ secrets.LIGHTHOUSE_USER }}
          key: ${{ secrets.LIGHTHOUSE_SSH_KEY }}
          script: |
            cd /opt/mcp-server
            git pull origin main
            ./scripts/deploy.sh

  notify:
    needs: deploy
    runs-on: ubuntu-latest
    steps:
      - name: Send notification
        uses: slackapi/slack-github-action@v1.23.0
        with:
          payload: |
            {
              "text": "MCP Server deployment completed successfully",
              "blocks": [
                {
                  "type": "section",
                  "text": {
                    "type": "mrkdwn",
                    "text": ":rocket: *MCP Server Deployment Completed*\nDeployment to Tencent Cloud Lighthouse finished successfully."
                  }
                }
              ]
            }
        env:
          SLACK_WEBHOOK_URL: ${{ secrets.SLACK_WEBHOOK_URL }}
          # Required by v1.x of the action when posting to an incoming webhook
          SLACK_WEBHOOK_TYPE: INCOMING_WEBHOOK
EOF
```

8.3 Monitoring Alerts

Configure monitoring alerts to detect and handle problems promptly:

```shell
# Create the monitoring and alerting script
cat > /opt/mcp-server/scripts/monitoring-alerts.sh << 'EOF'
#!/bin/bash
# MCP Server monitoring and alerting script
# Requires: nc (netcat), mail (e.g. mailutils with a working MTA) and bc

# Configuration
ALERT_EMAIL="admin@example.com"
LOG_FILE="/opt/mcp-server/logs/monitoring.log"

# Logging helper
log() {
  echo "[$(date '+%Y-%m-%d %H:%M:%S')] $1" | tee -a "$LOG_FILE"
}

# Check that a service port accepts TCP connections
check_service_status() {
  local service_name=$1
  local service_port=$2
  if ! nc -z localhost "$service_port"; then
    log "ERROR: Service $service_name on port $service_port is not responding"
    send_alert "Service $service_name is down" "Service $service_name on port $service_port is not responding"
    return 1
  fi
  log "Service $service_name is running"
  return 0
}

# Check disk usage of the root file system
check_disk_usage() {
  local threshold=${1:-80}
  local usage
  usage=$(df / | tail -1 | awk '{print $5}' | sed 's/%//')
  if [ "$usage" -gt "$threshold" ]; then
    log "WARNING: Disk usage is ${usage}%"
    send_alert "High disk usage" "Disk usage is ${usage}%, which exceeds threshold of ${threshold}%"
    return 1
  fi
  log "Disk usage is ${usage}%"
  return 0
}

# Check memory usage
check_memory_usage() {
  local threshold=${1:-80}
  local usage
  usage=$(free | grep Mem | awk '{printf("%.0f", $3/$2 * 100.0)}')
  if [ "$usage" -gt "$threshold" ]; then
    log "WARNING: Memory usage is ${usage}%"
    send_alert "High memory usage" "Memory usage is ${usage}%, which exceeds threshold of ${threshold}%"
    return 1
  fi
  log "Memory usage is ${usage}%"
  return 0
}

# Check CPU usage (the user-space share reported by top)
check_cpu_usage() {
  local threshold=${1:-80}
  local usage
  usage=$(top -bn1 | grep "Cpu(s)" | awk '{print $2}' | sed 's/%us,//')
  if (( $(echo "$usage > $threshold" | bc -l) )); then
    log "WARNING: CPU usage is ${usage}%"
    send_alert "High CPU usage" "CPU usage is ${usage}%, which exceeds threshold of ${threshold}%"
    return 1
  fi
  log "CPU usage is ${usage}%"
  return 0
}

# Send an alert notification
send_alert() {
  local subject=$1
  local message=$2
  # Email alert
  echo "$message" | mail -s "$subject" "$ALERT_EMAIL"
  # Slack notification (only if SLACK_WEBHOOK_URL is set)
  if [ -n "$SLACK_WEBHOOK_URL" ]; then
    curl -X POST -H 'Content-type: application/json' \
      --data "{\"text\":\"$subject: $message\"}" \
      "$SLACK_WEBHOOK_URL"
  fi
  log "Alert sent: $subject"
}

# Run all checks
main() {
  log "Starting monitoring checks..."
  # Service checks
  check_service_status "API Gateway" 8080
  check_service_status "Model Manager" 8081
  check_service_status "Inference Engine" 8082
  check_service_status "MySQL" 3306
  check_service_status "Redis" 6379
  # System resource checks
  check_disk_usage 80
  check_memory_usage 80
  check_cpu_usage 80
  log "Monitoring checks completed"
}

main
EOF

chmod +x /opt/mcp-server/scripts/monitoring-alerts.sh

# Configure scheduled jobs
cat > /opt/mcp-server/scripts/crontab-config.sh << 'EOF'
#!/bin/bash
# Run the monitoring checks every 5 minutes (re-running this script adds a duplicate entry)
(crontab -l 2>/dev/null; echo "*/5 * * * * /opt/mcp-server/scripts/monitoring-alerts.sh") | crontab -

# Log rotation for the MCP Server logs
cat > /etc/logrotate.d/mcp-server << 'LOGROTATE'
/opt/mcp-server/logs/*.log {
    daily
    rotate 30
    compress
    delaycompress
    missingok
    notifempty
    create 644 root root
}
LOGROTATE

echo "Cron jobs and log rotation configured"
EOF

chmod +x /opt/mcp-server/scripts/crontab-config.sh
sudo /opt/mcp-server/scripts/crontab-config.sh
```
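Parsing `top` output is fragile: its layout differs between procps versions, and the field used in `check_cpu_usage` measures only user-space CPU time. The alternative sketch below samples `/proc/stat` twice and computes overall busy time as total minus idle and iowait. `cpu_usage_percent` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# cpu_usage_percent [INTERVAL_SECONDS]
# Prints overall CPU utilization (integer 0-100) over the interval, computed
# from the aggregate "cpu" line of /proc/stat: busy = total - (idle + iowait).
cpu_usage_percent() {
  local interval=${1:-1}
  local t1 i1 t2 i2 dt di
  read -r t1 i1 < <(_cpu_totals)
  sleep "$interval"
  read -r t2 i2 < <(_cpu_totals)
  dt=$((t2 - t1))
  di=$((i2 - i1))
  if ((dt <= 0)); then
    echo 0
    return
  fi
  echo $(((100 * (dt - di)) / dt))
}

# Prints "<total> <idle+iowait>" in jiffies. Fields 2-9 are user, nice, system,
# idle, iowait, irq, softirq, steal; guest time is already counted in user.
_cpu_totals() {
  awk '/^cpu / { t = 0; for (i = 2; i <= 9; i++) t += $i; print t, $5 + $6; exit }' /proc/stat
}
```

Inside `check_cpu_usage`, `usage=$(cpu_usage_percent 1)` gives an integer that can be compared with `[ "$usage" -gt "$threshold" ]`, so the script no longer needs `bc` for this check.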

9. Practical Use Cases

9.1 Deploying an Image Recognition Service

This section uses an image recognition service as an example of how MCP Server is applied in practice:

```shell
# Create the image recognition deployment script
mkdir -p /opt/mcp-server/examples/image-recognition
cat > /opt/mcp-server/examples/image-recognition/deploy.sh << 'EOF'
#!/bin/bash
# Image recognition model deployment script
set -e

# Configuration
MODEL_NAME="image-classifier"
MODEL_VERSION="1.0.0"
MODEL_DIR="/opt/mcp-server/examples/image-recognition/model"

# Build the model image. The SavedModel must already be in
# $MODEL_DIR/model/<version>/, for example $MODEL_DIR/model/1/saved_model.pb.
build_model_image() {
  echo "Building model image..."
  mkdir -p "$MODEL_DIR"

  # Create the Dockerfile. The base image's entrypoint serves
  # ${MODEL_BASE_PATH}/${MODEL_NAME} (here /models/model) with REST on port 8501.
  cat > "$MODEL_DIR/Dockerfile" << 'DOCKERFILE'
FROM tensorflow/serving:2.8.0
COPY model /models/model
ENV MODEL_NAME=model
ENV MODEL_BASE_PATH=/models
EXPOSE 8501
DOCKERFILE

  # Build the image
  cd "$MODEL_DIR"
  docker build -t "mcp-model-$MODEL_NAME:$MODEL_VERSION" .
  echo "Model image built successfully"
}

# Register the model with MCP Server (-f makes curl fail on HTTP errors, which stops the script)
register_model() {
  echo "Registering model to MCP Server..."
  curl -f -X POST http://localhost:8080/api/v1/models \
    -H "Content-Type: application/json" \
    -d "{
      \"name\": \"$MODEL_NAME\",
      \"version\": \"$MODEL_VERSION\",
      \"description\": \"Image classification model\",
      \"framework\": \"tensorflow\",
      \"image\": \"mcp-model-$MODEL_NAME:$MODEL_VERSION\",
      \"port\": 8501,
      \"health_check_path\": \"/v1/models/model\"
    }"
  echo "Model registered successfully"
}

# Deploy the model
deploy_model() {
  echo "Deploying model..."
  curl -f -X POST "http://localhost:8080/api/v1/models/$MODEL_NAME/deploy"
  echo "Model deployed successfully"
}

# Test the model
test_model() {
  echo "Testing model..."
  # Placeholder input: a Base64-encoded 1x1 PNG. Replace it with a real image
  # that matches the model's expected input.
  local test_image="iVBORw0KGgoAAAANSUhEUgAAAAEAAAABCAYAAAAfFcSJAAAADUlEQVR42mP8/5+hHgAHggJ/PchI7wAAAABJRU5ErkJggg=="
  curl -X POST "http://localhost:8080/api/v1/models/$MODEL_NAME/predict" \
    -H "Content-Type: application/json" \
    -d "{\"instances\": [{\"input\": \"$test_image\"}]}"
  echo "Model test completed"
}

main() {
  echo "Starting image recognition model deployment..."
  build_model_image
  register_model
  deploy_model
  test_model
  echo "Image recognition model deployment completed"
}

main
EOF

chmod +x /opt/mcp-server/examples/image-recognition/deploy.sh
```

9.2 Deploying a Natural Language Processing Service

This example walks through deploying a natural language processing model:

```shell
# Create the NLP model deployment script
mkdir -p /opt/mcp-server/examples/nlp-service
cat > /opt/mcp-server/examples/nlp-service/deploy.sh << 'EOF'
#!/bin/bash
# NLP model deployment script
set -e

# Configuration
MODEL_NAME="text-classifier"
MODEL_VERSION="1.0.0"
MODEL_DIR="/opt/mcp-server/examples/nlp-service/model"

# Prepare the model files
prepare_model() {
  echo "Preparing model files..."
  # The Dockerfile copies $MODEL_DIR/model/, so place the real model files
  # there, for example the BERT model files and vocabulary.
  mkdir -p "$MODEL_DIR/model"
  echo "Model files prepared"
}

# Build the model image
build_model_image() {
  echo "Building model image..."

  # Create the Dockerfile
  cat > "$MODEL_DIR/Dockerfile" << 'DOCKERFILE'
FROM python:3.8-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY model/ ./model/
COPY app.py .
EXPOSE 8000
CMD ["python", "app.py"]
DOCKERFILE

  # Create the application code
  cat > "$MODEL_DIR/app.py" << 'PYTHONCODE'
from flask import Flask, request, jsonify
import torch
from transformers import BertTokenizer, BertForSequenceClassification

app = Flask(__name__)

# Load the model and tokenizer from ./model
model = BertForSequenceClassification.from_pretrained('./model')
tokenizer = BertTokenizer.from_pretrained('./model')


@app.route('/predict', methods=['POST'])
def predict():
    data = request.get_json(silent=True)
    if not data or 'text' not in data:
        return jsonify({'error': "request body must be JSON with a 'text' field"}), 400
    text = data['text']

    # Tokenize
    inputs = tokenizer(text, return_tensors='pt', truncation=True, padding=True)

    # Predict class probabilities
    with torch.no_grad():
        outputs = model(**inputs)
    predictions = torch.nn.functional.softmax(outputs.logits, dim=-1)

    return jsonify({'predictions': predictions.tolist()})


@app.route('/health', methods=['GET'])
def health():
    return jsonify({'status': 'healthy'})


if __name__ == '__main__':
    app.run(host='0.0.0.0', port=8000)
PYTHONCODE

  # Create the dependency file
  cat > "$MODEL_DIR/requirements.txt" << 'REQUIREMENTS'
torch==1.9.0
transformers==4.9.2
flask==2.0.1
# Flask 2.0.x fails to import with Werkzeug 3.x, so pin a compatible release
werkzeug==2.0.3
REQUIREMENTS

  # Build the image
  cd "$MODEL_DIR"
  docker build -t "mcp-model-$MODEL_NAME:$MODEL_VERSION" .
  echo "Model image built successfully"
}

# Register and deploy the model
register_and_deploy() {
  echo "Registering and deploying model..."

  # Register the model
  curl -f -X POST http://localhost:8080/api/v1/models \
    -H "Content-Type: application/json" \
    -d "{
      \"name\": \"$MODEL_NAME\",
      \"version\": \"$MODEL_VERSION\",
      \"description\": \"Text classification model based on BERT\",
      \"framework\": \"pytorch\",
      \"image\": \"mcp-model-$MODEL_NAME:$MODEL_VERSION\",
      \"port\": 8000,
      \"health_check_path\": \"/health\"
    }"

  # Deploy the model
  curl -f -X POST "http://localhost:8080/api/v1/models/$MODEL_NAME/deploy"
  echo "Model registered and deployed successfully"
}

main() {
  echo "Starting NLP model deployment..."
  prepare_model
  build_model_image
  register_and_deploy
  echo "NLP model deployment completed"
}

main
EOF

chmod +x /opt/mcp-server/examples/nlp-service/deploy.sh
```

9.3 Inference Performance Testing

Run performance tests against the inference service to evaluate how the system performs:

```shell
# Create the performance test script
cat > /opt/mcp-server/scripts/performance-test.sh << 'EOF'
#!/bin/bash
# MCP Server performance test script (requires bc)

# Configuration
API_ENDPOINT="http://localhost:8080/api/v1/models"
TEST_MODEL="image-classifier"
CONCURRENT_USERS=10
REQUESTS_PER_USER=100
TEST_DURATION=300

# Logging helper
log() {
  echo "[$(date '+%Y-%m-%d %H:%M:%S')] $1"
}

# Prepare test data
prepare_test_data() {
  log "Preparing test data..."
  # Placeholder test image (Base64-encoded 1x1 PNG)
  echo "iVBORw0KGgoAAAANSUhEUgAAAAEAAAABCAYAAAAfFcSJAAAADUlEQVR42mP8/5+hHgAHggJ/PchI7wAAAABJRU5ErkJggg==" > /tmp/test_image.txt
  log "Test data prepared"
}

# Send a single prediction request
single_request_test() {
  local image_data
  image_data=$(cat /tmp/test_image.txt)
  curl -s -X POST "$API_ENDPOINT/$TEST_MODEL/predict" \
    -H "Content-Type: application/json" \
    -d "{\"instances\": [{\"input\": \"$image_data\"}]}"
}

# Concurrency test: CONCURRENT_USERS background loops of REQUESTS_PER_USER requests each
concurrent_test() {
  log "Starting concurrent test with $CONCURRENT_USERS users, $REQUESTS_PER_USER requests each..."
  for i in $(seq 1 "$CONCURRENT_USERS"); do
    {
      for j in $(seq 1 "$REQUESTS_PER_USER"); do
        single_request_test > /dev/null
        echo "User $i, Request $j completed"
      done
    } &
  done
  wait
  log "Concurrent test completed"
}

# Stress test: send requests back to back for TEST_DURATION seconds
stress_test() {
  log "Starting stress test for $TEST_DURATION seconds..."
  # The bash started by timeout does not inherit shell functions or unexported
  # variables, so export everything single_request_test needs
  export -f single_request_test
  export API_ENDPOINT TEST_MODEL
  timeout "$TEST_DURATION" bash -c '
    while true; do
      single_request_test > /dev/null
    done
  ' &
  STRESS_PID=$!

  # Sample system resources while the test runs
  while kill -0 "$STRESS_PID" 2>/dev/null; do
    echo "CPU: $(top -bn1 | grep "Cpu(s)" | awk '{print $2}' | sed 's/%us,//')%"
    echo "Memory: $(free | grep Mem | awk '{printf("%.0f", $3/$2 * 100.0)}')%"
    sleep 5
  done
  log "Stress test completed"
}

# Collect performance metrics
collect_metrics() {
  log "Collecting performance metrics..."
  # API response times
  for i in {1..100}; do
    START_TIME=$(date +%s.%N)
    single_request_test > /dev/null
    END_TIME=$(date +%s.%N)
    RESPONSE_TIME=$(echo "$END_TIME - $START_TIME" | bc)
    echo "Response time: $RESPONSE_TIME seconds"
  done

  # System metrics
  echo "System metrics:"
  echo "CPU usage: $(top -bn1 | grep "Cpu(s)" | awk '{print $2}' | sed 's/%us,//')%"
  echo "Memory usage: $(free | grep Mem | awk '{printf("%.0f", $3/$2 * 100.0)}')%"
  echo "Disk usage: $(df / | tail -1 | awk '{print $5}')"
  log "Metrics collection completed"
}

main() {
  log "Starting MCP Server performance test..."
  prepare_test_data
  concurrent_test
  stress_test
  collect_metrics
  log "Performance test completed"
}

main
EOF

chmod +x /opt/mcp-server/scripts/performance-test.sh
```
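The response times from `collect_metrics` are easier to interpret as latency percentiles than as 100 separate lines. The sketch below reads one number per line on stdin and computes nearest-rank percentiles. `percentile` is a hypothetical helper, and nearest-rank is only one of several common percentile definitions:

```shell
#!/usr/bin/env bash
# percentile P
# Reads one number per line from stdin and prints the P-th percentile
# (integer P in 1..100) using the nearest-rank method: the value at rank ceil(P/100 * N).
percentile() {
  local p=$1
  sort -n | awk -v p="$p" '
    { v[NR] = $1 }
    END {
      if (NR == 0) exit 1
      r = int((p * NR + 99) / 100)   # ceil(p * NR / 100) for integer p and NR
      if (r < 1) r = 1
      print v[r]
    }'
}
```

For example, collect timings with `curl -s -o /dev/null -w '%{time_total}\n' ...` in a loop, redirect them to `times.txt`, then run `percentile 95 < times.txt` to get the p95 latency in seconds.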

10. 故障排查与常见问题解决

10.1 常见部署问题

在部署MCP Server过程中可能遇到的问题及解决方案:

# 创建故障排查脚本

cat > /opt/mcp-server/scripts/troubleshooting.sh << 'EOF'

#!/bin/bash

# MCP Server故障排查脚本

# 日志函数

log() {

echo "[$(date '+%Y-%m-%d %H:%M:%S')] $1"

}

# 检查Docker服务状态

check_docker_status() {

log "Checking Docker service status..."

if ! systemctl is-active --quiet docker; then

log "ERROR: Docker service is not running"

log "Attempting to start Docker service..."

sudo systemctl start docker

if systemctl is-active --quiet docker; then

log "Docker service started successfully"

else

log "ERROR: Failed to start Docker service"

return 1

fi

else

log "Docker service is running"

fi

}

# 检查端口占用

check_port_usage() {

log "Checking port usage..."

local ports=(8080 8081 8082 3306 6379)

for port in "${ports[@]}"; do

if lsof -i :$port > /dev/null 2>&1; then

log "Port $port is in use:"

lsof -i :$port

else

log "Port $port is available"

fi

done

}

# 检查磁盘空间

check_disk_space() {

log "Checking disk space..."

local usage=$(df / | tail -1 | awk '{print $5}' | sed 's/%//')

if [ "$usage" -gt 90 ]; then

log "WARNING: Disk usage is ${usage}%, which is very high"

log "Consider cleaning up unused Docker images and containers:"

log "docker system prune -a"

else

log "Disk usage is ${usage}%"

fi

}

# 检查内存使用

check_memory_usage() {

log "Checking memory usage..."

local usage=$(free | grep Mem | awk '{printf("%.0f", $3/$2 * 100.0)}')

if [ "$usage" -gt 90 ]; then

log "WARNING: Memory usage is ${usage}%, which is very high"

log "Consider checking for memory leaks or increasing server memory"

else

log "Memory usage is ${usage}%"

fi

}

# 检查Docker容器状态

check_container_status() {

log "Checking Docker container status..."

docker ps -a

log "Checking for exited containers..."

local exited_containers=$(docker ps -aq -f status=exited)

if [ -n "$exited_containers" ]; then

log "Found exited containers:"

docker ps -a -f status=exited

log "Consider removing exited containers:"

log "docker rm $exited_containers"

else

log "No exited containers found"

fi

}

# Show the most recent log lines of each MCP service container
check_service_logs() {
    log "Checking service logs..."
    local services=("mcp-api-gateway" "mcp-model-manager" "mcp-inference-engine" "mcp-mysql" "mcp-redis")
    local service
    for service in "${services[@]}"; do
        log "Logs for $service:"
        docker logs --tail 20 "$service" 2>&1 || log "Failed to get logs for $service"
        echo "---"
    done
}

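# Restart all services
restart_all_services() {
    log "Restarting all services..."
    # Stop here if the project directory is missing instead of running compose elsewhere
    cd /opt/mcp-server || return 1
    docker-compose down
    sleep 5
    docker-compose up -d
    log "Waiting for services to start..."
    sleep 30
    log "Services restarted"
}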

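# Clean up unused system resources
cleanup_system() {
    log "Cleaning up system resources..."
    # Remove stopped containers, unused networks, dangling images and build cache
    docker system prune -f
    # Remove unused volumes. Caution: on Docker releases before 23.0 this also deletes
    # named volumes not attached to any container (e.g. after `docker-compose down`),
    # so keep MySQL/Redis data bind-mounted or backed up before running it
    docker volume prune -f
    # Remove unused networks (already covered by `docker system prune`, kept for clarity)
    docker network prune -f
    log "System cleanup completed"
}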

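# Main troubleshooting routine
main() {
    log "Starting MCP Server troubleshooting..."
    check_docker_status
    check_port_usage
    check_disk_space
    check_memory_usage
    check_container_status
    check_service_logs
    log "Troubleshooting completed"
    log "If issues persist, consider running:"
    log "1. $0 restart - to restart all services"
    log "2. $0 cleanup - to clean up system resources"
}

# Run the checks by default; "restart" and "cleanup" run the optional remedies,
# which are otherwise unreachable because they are functions inside this script
case "${1:-}" in
    restart) restart_all_services ;;
    cleanup) cleanup_system ;;
    *) main ;;
esac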

EOF

chmod +x /opt/mcp-server/scripts/troubleshooting.sh
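The disk check in the script above only inspects the root filesystem. If Docker's data directory or the model files sit on a separately mounted disk, that disk can fill up unnoticed. A minimal sketch of a hypothetical helper, `disks_over_threshold`, which is not part of the original script, scans every filesystem in `df -P` output:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of troubleshooting.sh): print "<mount point> <use%>"
# for every filesystem whose usage is strictly above the given threshold.
# Reads POSIX-format `df -P` output on stdin; mount points containing spaces are not handled.
disks_over_threshold() {
    local threshold=$1
    awk -v t="$threshold" '
        NR > 1 {                       # skip the header line
            use = $5
            sub(/%$/, "", use)         # "95%" -> "95"
            if (use + 0 > t + 0)
                print $6, use "%"      # mount point and usage
        }'
}
```

Typical use is `df -P | disks_over_threshold 90`. To see which mount holds Docker's images and volumes, check `docker info --format '{{.DockerRootDir}}'`.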

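10.2 Performance Optimization Recommendations

The following script tunes MCP Server at five levels: the Docker daemon, kernel parameters, MySQL, the inference engine, and the Nginx load balancer. It writes system configuration files, so run it as root. Most changes take effect only after the affected services are restarted.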

# Create the performance optimization script
cat > /opt/mcp-server/scripts/performance-optimization.sh << 'EOF'
#!/bin/bash
# MCP Server performance optimization script

# Logging helper
log() {
    echo "[$(date '+%Y-%m-%d %H:%M:%S')] $1"
}

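# Docker daemon tuning
optimize_docker() {
    log "Optimizing Docker performance..."
    # Back up any existing daemon.json before overwriting it
    mkdir -p /etc/docker
    if [ -f /etc/docker/daemon.json ]; then
        cp /etc/docker/daemon.json /etc/docker/daemon.json.backup
    fi
    # Tencent Cloud registry mirror, log rotation (applies only to containers created
    # after the change), file-descriptor limits and parallel image transfers
    cat > /etc/docker/daemon.json << 'DOCKEREOF'
{
    "registry-mirrors": ["https://mirror.ccs.tencentyun.com"],
    "log-driver": "json-file",
    "log-opts": {
        "max-size": "10m",
        "max-file": "3"
    },
    "default-ulimits": {
        "nofile": {
            "Name": "nofile",
            "Hard": 65536,
            "Soft": 65536
        }
    },
    "live-restore": true,
    "max-concurrent-downloads": 10,
    "max-concurrent-uploads": 10
}
DOCKEREOF
    # Restart Docker to apply the changes; live-restore only protects running
    # containers on later daemon restarts, not on this first one
    systemctl restart docker
    log "Docker performance optimized"
}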

# Kernel parameter tuning
optimize_kernel() {
    log "Optimizing kernel parameters..."
    # Write a dedicated drop-in file instead of appending to /etc/sysctl.conf, so running
    # the script again does not duplicate entries; delete the file to revert
    cat > /etc/sysctl.d/99-mcp-server.conf << 'KERNELEOF'
# Network
net.core.somaxconn = 65535
net.ipv4.tcp_max_syn_backlog = 65535
net.ipv4.tcp_fin_timeout = 30
net.ipv4.tcp_keepalive_time = 1200
net.ipv4.tcp_max_tw_buckets = 5000
net.ipv4.ip_local_port_range = 1024 65535
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_syncookies = 1
# File system
fs.file-max = 1000000
fs.nr_open = 1000000
# Memory
vm.swappiness = 1
vm.dirty_ratio = 15
vm.dirty_background_ratio = 5
KERNELEOF
    # Load the new settings
    sysctl -p /etc/sysctl.d/99-mcp-server.conf
    log "Kernel parameters optimized"
}

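# MySQL tuning
optimize_database() {
    log "Optimizing database performance..."
    # The file takes effect only if it is mounted into the MySQL container's
    # configuration directory (e.g. /etc/mysql/conf.d)
    cat > /opt/mcp-server/config/mysql-optimization.cnf << 'MYSQLEOF'
[mysqld]
# Connections
max_connections = 200
max_connect_errors = 10000
# Memory
innodb_buffer_pool_size = 512M
innodb_log_file_size = 64M
innodb_log_buffer_size = 16M
# 2 = write the redo log on commit but flush it about once per second:
# faster, but up to about 1 s of commits can be lost if the OS crashes
innodb_flush_log_at_trx_commit = 2
# Query cache: removed in MySQL 8.0, where these options prevent the server
# from starting. Uncomment them only on MySQL 5.7.
# query_cache_type = 1
# query_cache_size = 32M
# query_cache_limit = 2M
# Other
table_open_cache = 2000
thread_cache_size = 50
tmp_table_size = 64M
max_heap_table_size = 64M
MYSQLEOF
    log "Database optimization configuration created"
    log "Please restart MySQL container to apply changes"
}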

# Model inference tuning
optimize_inference() {
    log "Optimizing model inference performance..."
    # Write the inference engine tuning parameters
    cat > /opt/mcp-server/config/inference-optimization.json << 'INFERENCEEOF'
{
    "batch_size": 32,
    "max_concurrent_requests": 100,
    "request_timeout": 30,
    "model_cache_size": 1000,
    "enable_model_preloading": true,
    "enable_request_batching": true,
    "batching_timeout_ms": 10
}
INFERENCEEOF
    log "Inference engine optimization configuration created"
}

# Load balancer (Nginx) tuning
optimize_load_balancing() {
    log "Optimizing load balancing..."
    # Write the Nginx configuration with keep-alive connections to the API gateway
    cat > /opt/mcp-server/config/nginx-optimization.conf << 'NGINXEOF'
upstream mcp_backend {
    least_conn;
    keepalive 32;
    server api-gateway:8080 max_fails=3 fail_timeout=30s;
}

server {
    listen 80;

    location / {
        proxy_pass http://mcp_backend;
        # Upstream keepalive requires HTTP/1.1 and an empty Connection header
        proxy_http_version 1.1;
        proxy_set_header Connection "";
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_connect_timeout 30s;
        proxy_send_timeout 30s;
        proxy_read_timeout 30s;
    }
}
NGINXEOF
    log "Load balancing optimization configuration created"
}

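# Main optimization routine
main() {
    # The Docker and kernel steps write under /etc and restart system services
    if [ "$(id -u)" -ne 0 ]; then
        log "ERROR: This script must be run as root"
        exit 1
    fi
    log "Starting MCP Server performance optimization..."
    optimize_docker
    optimize_kernel
    optimize_database
    optimize_inference
    optimize_load_balancing
    log "Performance optimization completed"
    log "Please restart services to apply all changes"
}

# Run the main optimization routine
main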

EOF

chmod +x /opt/mcp-server/scripts/performance-optimization.sh
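The MySQL step hard-codes `innodb_buffer_pool_size = 512M`, which may be too small or too large depending on the instance plan. A minimal sketch that derives the size from the host's RAM instead. The 25% share is an assumption for a host that also runs the API gateway, model manager, inference engine and Redis containers, and `buffer_pool_mb` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: suggest innodb_buffer_pool_size (in MB) as a percentage of total RAM.
# Assumption: MySQL shares the instance with the other MCP containers, so it gets only
# a fraction of memory rather than the larger share typical of a dedicated DB host.
buffer_pool_mb() {
    local total_mb=$1 percent=$2
    local chunk_mb=128                      # default innodb_buffer_pool_chunk_size
    local size=$(( total_mb * percent / 100 ))
    # MySQL adjusts the value to a multiple of chunk size x instances; rounding down
    # here keeps the result predictable and never above the requested share
    size=$(( size / chunk_mb * chunk_mb ))
    # Use at least one chunk
    if (( size < chunk_mb )); then
        size=$chunk_mb
    fi
    echo "$size"
}
```

Pass the host's memory in MB, for example `buffer_pool_mb "$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)" 25`. Then write the result into `mysql-optimization.cnf` with an `M` suffix.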

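11. Best Practices Summary

11.1 Deployment Best Practices

Drawing on the steps above, the following deployment practices are recommended:

- Environment preparation:
  - Choose a Lighthouse plan that matches the expected load, sizing CPU, memory and storage accordingly
  - Make sure network connectivity and security group (firewall) rules are configured correctly
  - Update the system and packages regularly to keep the environment secure
- Containerized deployment:
  - Use Docker Compose to manage the multi-container application, which simplifies deployment and maintenance
  - Run each service in its own container to keep services isolated from one another
  - Set container resource limits so services do not compete for CPU and memory
- Configuration management:
  - Manage configuration through environment variables so the same setup can move between environments
  - Store sensitive values such as passwords and keys in encrypted form
  - Keep configuration files under version control to support rollback and auditing
- Data persistence:
  - Mount important data to host directories so it is not lost when a container is removed
  - Back up critical data and configuration regularly
  - Use database primary-replica replication to improve data reliability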
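The configuration-management practice works best when the stack fails fast on missing settings instead of starting with empty passwords. A minimal sketch of a hypothetical `require_env` helper that checks a list of variable names before `docker-compose up`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if every named environment variable is set and
# non-empty; otherwise list the missing names on stderr and return 1.
require_env() {
    local name
    local -a missing=()
    for name in "$@"; do
        # ${!name} is bash indirect expansion: the value of the variable called $name
        if [ -z "${!name:-}" ]; then
            missing+=("$name")
        fi
    done
    if (( ${#missing[@]} > 0 )); then
        echo "missing required environment variables: ${missing[*]}" >&2
        return 1
    fi
}
```

For example, run `require_env MYSQL_ROOT_PASSWORD MCP_API_KEY || exit 1` before `docker-compose up -d`. Here `MCP_API_KEY` is a hypothetical name. Docker Compose also substitutes variables from a `.env` file in the project directory. Keep that file out of version control and readable only by its owner (`chmod 600 .env`). This check only verifies that values are present. It does not encrypt anything.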

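11.2 Operations Best Practices

- Monitoring and alerting:
  - Deploy a comprehensive monitoring system that tracks service status and system resources in real time
  - Set alert thresholds that catch real anomalies early without firing on normal fluctuations
  - Review and refine monitoring rules regularly to reduce false positives and missed alerts
- Log management:
  - Use a uniform log format to simplify analysis and troubleshooting
  - Configure log rotation to keep log files from growing without bound
  - Centralize log storage for unified analysis and search
- Security:
  - Update the system and software regularly to patch vulnerabilities
  - Configure firewall rules to block access to ports that do not need to be exposed
  - Protect the API with API keys and an authentication mechanism
- Backup and recovery:
  - Define a regular backup schedule covering both data and configuration
  - Test backups regularly to confirm they are complete and can actually be restored
  - Prepare a disaster recovery plan to ensure business continuity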
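A regular backup schedule also needs rotation, or the backup directory eventually fills the disk. A minimal sketch, assuming timestamped dump files named `mcp-backup-YYYYmmdd-HHMMSS.sql.gz` in a single directory. The naming scheme, the backup path and the `prune_backups` function are hypothetical and not part of the original scripts. The helper keeps the newest N files and deletes the rest:

```bash
#!/usr/bin/env bash
# Hypothetical helper: keep only the newest $2 backups in directory $1.
# Assumes names embed a sortable timestamp (mcp-backup-20240101-020000.sql.gz),
# so sorting by name equals sorting by age.
prune_backups() {
    local dir=$1 keep=$2
    local restore_nullglob count
    local -a files=()
    restore_nullglob=$(shopt -p nullglob)   # remember the caller's setting
    shopt -s nullglob                       # no matches -> empty array, not a literal pattern
    files=("$dir"/mcp-backup-*.sql.gz)      # pathname expansion is sorted by name
    eval "$restore_nullglob"
    count=${#files[@]}
    if (( count > keep )); then
        # Delete the oldest (count - keep) files
        rm -f -- "${files[@]:0:count-keep}"
    fi
}

# Example of producing a dump (assumption: the MySQL container is named mcp-mysql,
# as in troubleshooting.sh, and has MYSQL_ROOT_PASSWORD in its environment):
# docker exec mcp-mysql sh -c 'exec mysqldump --all-databases -uroot -p"$MYSQL_ROOT_PASSWORD"' \
#     | gzip > "/opt/mcp-server/backups/mcp-backup-$(date +%Y%m%d-%H%M%S).sql.gz"
# prune_backups /opt/mcp-server/backups 7
```

Rotation alone does not prove the backups are usable. Restoring one into a scratch container from time to time is still how you test recoverability.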

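11.3 Performance Optimization Best Practices

- Resource optimization:
  - Adjust container resource allocations based on the observed load
  - Use connection pooling to reduce database connection overhead
  - Configure caching appropriately to speed up access
- Model optimization:
  - Quantize and compress AI models to reduce inference time, and validate that accuracy remains acceptable
  - Use model parallelism and pipelining to increase throughput
  - Tune the batch size: larger batches raise throughput but add queuing latency to each request
- Network optimization:
  - Use a CDN to speed up delivery of static resources
  - Enable HTTP/2 to improve transfer efficiency
  - Set sensible timeouts and retry policies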
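For the timeout-and-retry point, a common pattern is to bound each attempt with a timeout and retry with exponential backoff. A minimal sketch. The `retry` function is hypothetical, not an existing command:

```bash
#!/usr/bin/env bash
# Hypothetical helper: retry <max_attempts> <initial_delay_seconds> <command...>
# Runs the command until it succeeds, doubling the delay after each failure.
# Returns 0 on success and 1 once max_attempts failures have occurred.
retry() {
    local max_attempts=$1 delay=$2
    shift 2
    local attempt=1
    until "$@"; do
        if (( attempt >= max_attempts )); then
            echo "retry: giving up after $attempt attempts: $*" >&2
            return 1
        fi
        echo "retry: attempt $attempt failed, retrying in ${delay}s" >&2
        sleep "$delay"
        delay=$(( delay * 2 ))          # exponential backoff (integer seconds)
        attempt=$(( attempt + 1 ))
    done
}
```

A possible use is probing the gateway after a restart: `retry 5 1 curl -fsS --max-time 5 http://localhost:8080/health`. The `/health` path is an assumption. Use whatever health endpoint the API gateway actually exposes. Doubling the delay keeps a briefly overloaded service from being hammered. The per-attempt `--max-time` bounds how long any single attempt can hang.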

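Conclusion

This article has walked through deploying and managing MCP Server on a Tencent Cloud Lighthouse instance. It covered environment preparation, service deployment, performance tuning and troubleshooting, which together form a complete platform for serving AI models.

Combining MCP Server with Lighthouse gives small and medium-sized AI applications a cost-effective deployment option. Containerization standardizes how models are deployed and managed. Monitoring and alerting help keep the service stable, and the tuning measures increase the system's processing capacity.

In practice, this setup can serve many kinds of AI workloads, such as image recognition, natural language processing and recommendation systems. As AI technology evolves, MCP Server can give more enterprises and developers a convenient way to deploy and manage AI models and help bring AI into more industries.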

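Possible directions for future work include:

- Edge computing integration: deploy MCP Server on edge nodes to reduce inference latency

- Automated operations: use AI techniques for intelligent monitoring and automatic failure recovery

- Multi-cloud deployment: build MCP Server clusters across cloud platforms to improve service availability

- Model marketplace: build a platform for sharing and trading models to promote their circulation and reuse

Through continued technical innovation and practice, we believe MCP Server will play an increasingly important role in developing and deploying AI applications and will provide solid technical support for digital transformation.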
