Model Hub: A One-Stop, No-Code Model Fine-Tuning and Deployment Platform Built on Amazon SageMaker and CloudFormation


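AI is advancing rapidly, and large language models (LLMs) have become a core driver of innovation across many kinds of applications. As models grow more complex and use cases multiply, however, fine-tuning and deploying them efficiently has become a serious challenge for developers and researchers.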

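To address this challenge, this article explores Model Hub in practice. Model Hub is a one-stop, no-code platform for fine-tuning and deploying models that combines Amazon SageMaker with LlamaFactory. The article covers the platform's advantages, its architecture, and a hands-on walkthrough, so that readers can understand the tool and use it to speed up their AI projects and improve their results.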

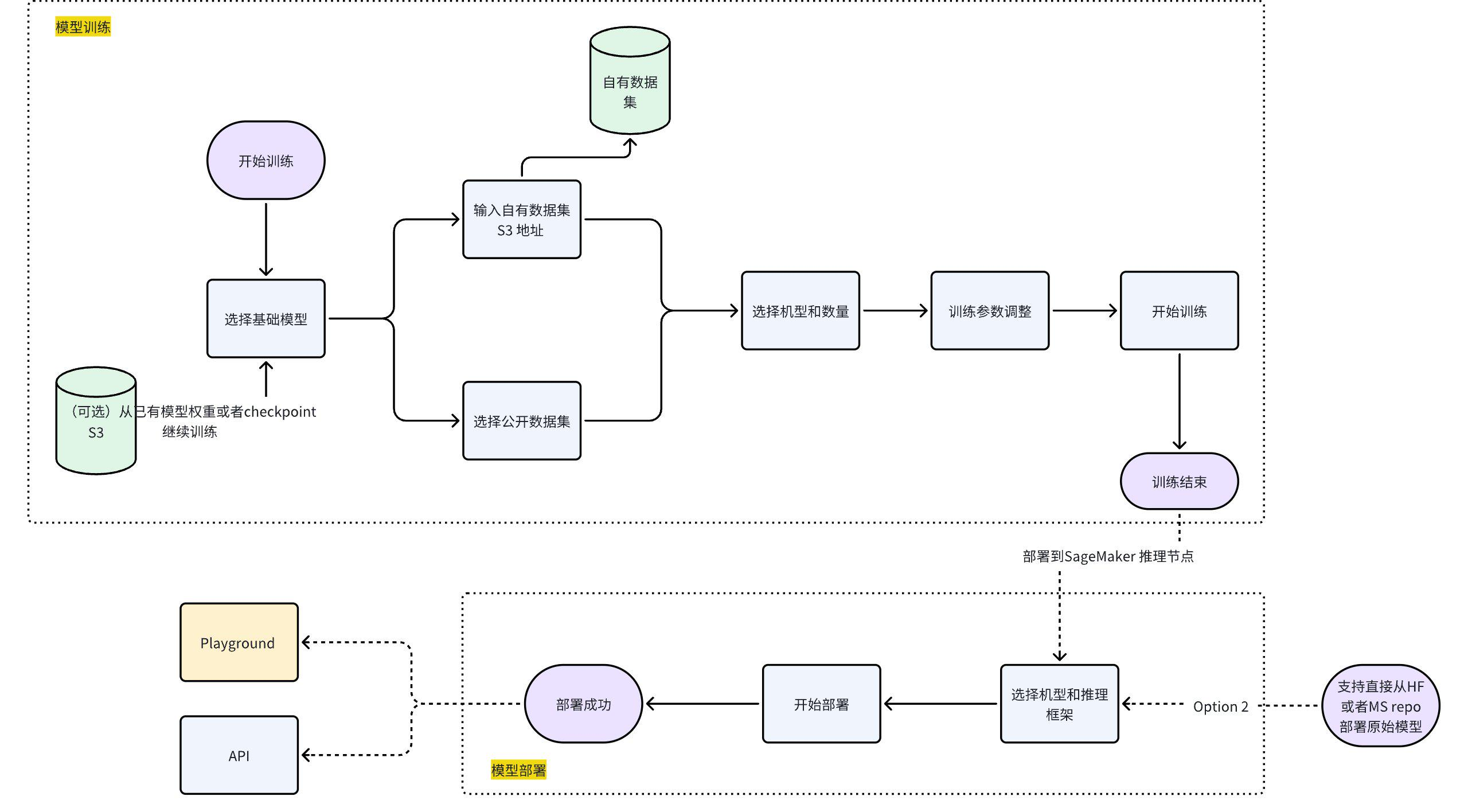

1. Architecture Overview

1.1 LLaMA-Factory Framework Overview

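LLaMA-Factory is a well-designed, easy-to-use open-source framework for training large models. Its user-friendly interface and efficient algorithm implementations substantially lower both the learning curve and the resource cost of training large models. It supports pre-training, SFT (supervised fine-tuning), and RLHF (reinforcement learning from human feedback) for more than a hundred unimodal and multimodal models. It also integrates optimization techniques such as LoRA, QLoRA, Flash Attention, and Liger Kernel. Its main strengths are the following: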

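First, new models are supported quickly. Recently released open-source LLMs can be adopted as base models soon after release, so users can build domain-specific models on the latest pre-trained capabilities. Second, it supports a wide range of data. Users can fine-tune on multi-turn dialogue, human preference data, or plain text, and it also handles multi-image understanding, video understanding, and interleaved image-text data, which broadens the range of applications. Third, it lowers training cost. By combining LoRA, model quantization, and high-performance operators, LLaMA-Factory reduces the cost of training large models by a factor of 3 to 20 while doubling training speed.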
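The sketch below counts trainable parameters for a single weight matrix, as a rough illustration of why LoRA lowers training cost. LoRA freezes a d×k matrix W and trains two low-rank factors, B (d×r) and A (r×k). The trainable count therefore falls from d·k to r·(d+k). The 3072×3072 shape and the ranks are illustrative assumptions. They are not settings taken from LLaMA-Factory or Model Hub.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when fully fine-tuning a d x k weight matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for the LoRA factors B (d x r) and A (r x k)."""
    return r * (d + k)


def lora_fraction(d: int, k: int, r: int) -> float:
    """Share of the full parameter count that LoRA actually trains."""
    return lora_params(d, k, r) / full_params(d, k)


if __name__ == "__main__":
    d = k = 3072  # illustrative matrix shape (assumption)
    for r in (8, 16, 64):  # illustrative LoRA ranks (assumption)
        print(f"rank={r:>3}: {lora_params(d, k, r):>9,} trainable vs "
              f"{full_params(d, k):,} full ({lora_fraction(d, k, r):.2%})")
```

With these assumed dimensions, rank 8 trains 49,152 of the matrix's 9,437,184 parameters (exactly 1/192, about 0.5%). Parameter count is only part of the picture. Frozen weights also need no gradients or optimizer state, and much of the memory saving comes from that. The 3x to 20x figure quoted above is an overall claim and is not derived from this arithmetic.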

1.2 Where Model Hub Fits

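Model Hub is an open-source AWS project on GitHub (aws-samples/llm_model_hub). It is built on LLaMA-Factory and Amazon SageMaker and provides a zero-code visual platform that covers model fine-tuning, deployment, and debugging in one place. It helps users fine-tune a wide range of open-source models quickly and run many experiments and validations in parallel, which substantially improves the engineering efficiency of fine-tuning.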

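Compared with using the open-source LLaMA-Factory framework directly, Model Hub has a distinct advantage. LLaMA-Factory offers a Web UI and a command-line interface, and both run on machines that you provision and manage yourself. Model Hub instead packages LLaMA-Factory's training component into a SageMaker Training Job. For single-node or distributed training, users only choose an instance type and instance count, with no need to build a distributed cluster themselves. When the job finishes, the resources are released automatically, so there is no need to buy capacity in advance. The result is a simpler, more economical, and more consistent experience.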

At its core, Model Hub combines the capabilities of Amazon SageMaker with the flexibility of LlamaFactory to provide a visual, no-code environment for fine-tuning and deploying models.

2. Environment Setup

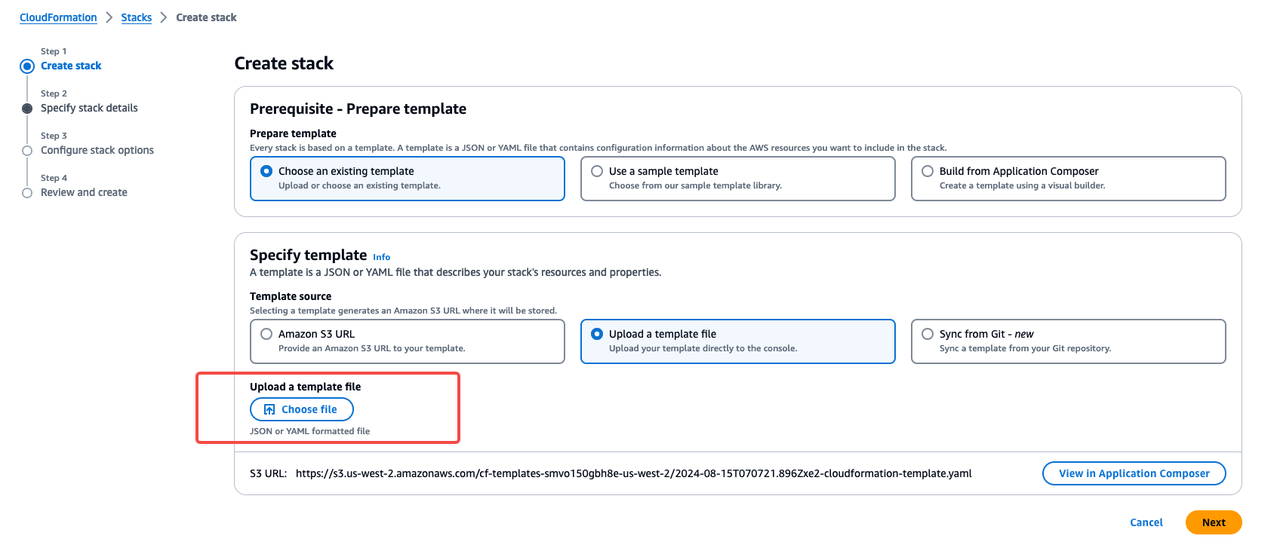

In AWS Regions outside mainland China, Model Hub can be deployed automatically with CloudFormation, which greatly simplifies setup. This section walks through that automated deployment.

First, sign in to the AWS Management Console, open the CloudFormation service, and choose "Create stack".

Then upload the cloudformation-template.yaml deployment file provided by Model Hub. The template is as follows:

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Description: 'CloudFormation template for EC2 instance for ModelHubStack'
Metadata:
  AWS::CloudFormation::Interface:
    StackName:
      Default: "ModelHubStack"
Parameters:
  KeyName:
    Description: Name of an existing EC2 KeyPair to enable SSH access to the instance
    Type: AWS::EC2::KeyPair::KeyName
    ConstraintDescription: Must be the name of an existing EC2 KeyPair.
  InstanceType:
    Type: String
    Default: m5.xlarge
    Description: EC2 instance type
  AMIId:
    Type: AWS::SSM::Parameter::Value<AWS::EC2::Image::Id>
    Default: /aws/service/canonical/ubuntu/server/24.04/stable/current/amd64/hvm/ebs-gp3/ami-id
    Description: Ubuntu 24.04 AMI ID
  HuggingFaceHubToken:
    Type: String
    Description: Optional Hugging Face Hub Token
    Default: ""
  WandbApiKey:
    Type: String
    Description: Optional WANDB API Key for view W&B in wandb.ai
    Default: ""
  WandbBaseUrl:
    Type: String
    Description: Optional WANDB Base URL for view W&B own Wandb portal
    Default: ""
  SwanlabApiKey:
    Type: String
    Description: Optional SWANLAB for view Metrics on https://swanlab.cn/
    Default: ""
Resources:
  EC2Instance:
    Type: AWS::EC2::Instance
    CreationPolicy:
      ResourceSignal:
        Timeout: PT1H30M
    Properties:
      KeyName: !Ref KeyName
      Tags:
        - Key: Name
          Value: ModelHubServer
      InstanceType: !Ref InstanceType
      ImageId: !Ref AMIId
      SecurityGroupIds:
        - !Ref EC2SecurityGroup
      BlockDeviceMappings:
        - DeviceName: /dev/sda1
          Ebs:
            VolumeSize: 500
            VolumeType: gp3
      IamInstanceProfile: !Ref EC2InstanceProfile
      UserData:
        Fn::Base64: !Sub
          - |
            #!/bin/bash
            # Set up logging
            LOG_FILE="/var/log/user-data.log"
            exec > >(tee -a "$LOG_FILE") 2>&1
            echo "$(date '+%Y-%m-%d %H:%M:%S') - Starting UserData script execution"

            # setup cfn-signal
            if [ ! -f /usr/local/bin/cfn-signal ]; then
              echo "Installing cfn-signal for error reporting"
              apt-get update
              apt-get install -y python3-pip python3-venv
              # Install into a Python virtual environment
              python3 -m venv /opt/aws/cfn-bootstrap
              /opt/aws/cfn-bootstrap/bin/pip install https://s3.amazonaws.com/cloudformation-examples/aws-cfn-bootstrap-py3-latest.tar.gz
              # Create symlinks in /usr/local/bin
              ln -s /opt/aws/cfn-bootstrap/bin/cfn-signal /usr/local/bin/cfn-signal
              ln -s /opt/aws/cfn-bootstrap/bin/cfn-init /usr/local/bin/cfn-init
              ln -s /opt/aws/cfn-bootstrap/bin/cfn-get-metadata /usr/local/bin/cfn-get-metadata
              ln -s /opt/aws/cfn-bootstrap/bin/cfn-hup /usr/local/bin/cfn-hup
            fi
            chmod +x /usr/local/bin/cfn-signal

            # Define error handling function
            function error_exit {
              echo "ERROR: $1" | tee -a "$LOG_FILE"
              /usr/local/bin/cfn-signal -e 1 --stack ${AWS::StackName} --resource EC2Instance --region ${AWS::Region}
              exit 1
            }

            # Update and install basic software
            echo "Updating apt and installing required packages"
            sudo apt-get update || error_exit "Failed to update apt"
            sudo apt-get install -y git unzip || error_exit "Failed to install basic packages"

            # Check and install AWS CLI
            if ! command -v aws &> /dev/null; then
              echo "Installing AWS CLI"
              curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip" || error_exit "Failed to download AWS CLI"
              unzip awscliv2.zip || error_exit "Failed to unzip AWS CLI"
              sudo ./aws/install || error_exit "Failed to install AWS CLI"
            else
              echo "AWS CLI already installed"
            fi

            # Install Node.js
            echo "Installing Node.js"
            curl -fsSL https://deb.nodesource.com/setup_20.x | sudo -E bash - || error_exit "Failed to setup Node.js repository"
            sudo apt-get install -y nodejs || error_exit "Failed to install Node.js"
            echo "Installing yarn"
            sudo npm install --global yarn || error_exit "Failed to install yarn"

            # Clone repository
            echo "Cloning repository"
            cd /home/ubuntu/ || error_exit "Failed to change to ubuntu home directory"
            sudo -u ubuntu git clone --recurse-submodule https://github.com/aws-samples/llm_model_hub.git || error_exit "Failed to clone repository"
            cd /home/ubuntu/llm_model_hub || error_exit "Failed to change to repository directory"
            sudo -u ubuntu yarn install || error_exit "Failed to run yarn install"

            # Install pm2
            echo "Installing pm2"
            sudo yarn global add pm2 || error_exit "Failed to install pm2"

            # Wait for instance to fully start
            echo "Waiting for instance to fully start"
            sleep 30

            # Get instance metadata
            echo "Retrieving instance metadata"
            TOKEN=$(curl -X PUT "http://169.254.169.254/latest/api/token" -H "X-aws-ec2-metadata-token-ttl-seconds: 21600") || error_exit "Failed to get metadata token"
            EC2_IP=$(curl -H "X-aws-ec2-metadata-token: $TOKEN" -s http://169.254.169.254/latest/meta-data/public-ipv4) || error_exit "Failed to get public IP"
            REGION=$(curl -H "X-aws-ec2-metadata-token: $TOKEN" -s http://169.254.169.254/latest/meta-data/placement/region) || error_exit "Failed to get region"
            echo "Got IP: $EC2_IP and Region: $REGION"

            # Generate random key
            RANDOM_KEY=$(openssl rand -base64 32 | tr -dc 'a-zA-Z0-9' | fold -w 32 | head -n 1) || error_exit "Failed to generate random key"

            # Write environment variables
            echo "Writing environment variables"
            echo "REACT_APP_API_ENDPOINT=http://$EC2_IP:8000/v1" > /home/ubuntu/llm_model_hub/.env || error_exit "Failed to write frontend env file"
            echo "REACT_APP_API_KEY=$RANDOM_KEY" >> /home/ubuntu/llm_model_hub/.env || error_exit "Failed to append to frontend env file"
            echo "REACT_APP_CALCULATOR=https://aws-gpu-memory-caculator.streamlit.app/" >> /home/ubuntu/llm_model_hub/.env || error_exit "Failed to append to frontend env file"

            # Write backend environment variables
            echo "Writing backend environment variables"
            cat << EOF > /home/ubuntu/llm_model_hub/backend/.env || error_exit "Failed to write backend env file"
            AK=
            SK=
            role=${SageMakerRoleArn}
            region=$REGION
            db_host=127.0.0.1
            db_name=llm
            db_user=llmdata
            db_password=llmdata
            api_keys=$RANDOM_KEY
            HUGGING_FACE_HUB_TOKEN=${HuggingFaceHubToken}
            WANDB_API_KEY=${WandbApiKey}
            WANDB_BASE_URL=${WandbBaseUrl}
            SWANLAB_API_KEY=${SwanlabApiKey}
            EOF

            # Set permissions
            echo "Setting proper permissions"
            sudo chown -R ubuntu:ubuntu /home/ubuntu/ || error_exit "Failed to set permissions"

            # Generate random password and store
            RANDOM_PASSWORD=$(openssl rand -base64 12 | tr -dc 'a-zA-Z0-9' | fold -w 8 | head -n 1) || error_exit "Failed to generate random password"
            aws ssm put-parameter --name "/${AWS::StackName}/RandomPassword" --value "$RANDOM_PASSWORD" --type "SecureString" --overwrite --region ${AWS::Region} || error_exit "Failed to store password in SSM"

            # Run setup script
            echo "Running setup script"
            cd /home/ubuntu/llm_model_hub/backend || error_exit "Failed to change to backend directory"
            sudo -u ubuntu bash 01.setup.sh || error_exit "Failed to run setup script"
            sleep 30

            # Start parallel builds for all Docker images
            echo "Starting parallel builds for all Docker images"

            # Create log files for each build
            VLLM_LOG="/tmp/vllm_build.log"
            SGLANG_LOG="/tmp/sglang_build.log"
            LLAMAFACTORY_LOG="/tmp/llamafactory_build.log"
            EASYR1_LOG="/tmp/easyr1_build.log"

            # Start builds in parallel
            echo "$(date '+%Y-%m-%d %H:%M:%S') - Starting vllm image build"
            (cd /home/ubuntu/llm_model_hub/backend/byoc && sudo -u ubuntu bash build_and_push.sh > "$VLLM_LOG" 2>&1) &
            VLLM_PID=$!
            echo "$(date '+%Y-%m-%d %H:%M:%S') - Starting sglang image build"
            (cd /home/ubuntu/llm_model_hub/backend/byoc && sudo -u ubuntu bash build_and_push_sglang.sh > "$SGLANG_LOG" 2>&1) &
            SGLANG_PID=$!
            echo "$(date '+%Y-%m-%d %H:%M:%S') - Starting llamafactory image build"
            (cd /home/ubuntu/llm_model_hub/backend/docker && sudo -u ubuntu bash build_and_push.sh > "$LLAMAFACTORY_LOG" 2>&1) &
            LLAMAFACTORY_PID=$!
            echo "$(date '+%Y-%m-%d %H:%M:%S') - Starting easyr1 image build"
            (cd /home/ubuntu/llm_model_hub/backend/docker_easyr1 && sudo -u ubuntu bash build_and_push.sh > "$EASYR1_LOG" 2>&1) &
            EASYR1_PID=$!

            # Wait for all builds to complete and collect exit codes
            echo "$(date '+%Y-%m-%d %H:%M:%S') - Waiting for all builds to complete..."
            BUILD_FAILED=0

            # Wait for each process and store exit codes
            echo "$(date '+%Y-%m-%d %H:%M:%S') - Waiting for vllm build..."
            wait $VLLM_PID
            VLLM_EXIT=$?
            echo "$(date '+%Y-%m-%d %H:%M:%S') - Waiting for sglang build..."
            wait $SGLANG_PID
            SGLANG_EXIT=$?
            echo "$(date '+%Y-%m-%d %H:%M:%S') - Waiting for llamafactory build..."
            wait $LLAMAFACTORY_PID
            LLAMAFACTORY_EXIT=$?
            echo "$(date '+%Y-%m-%d %H:%M:%S') - Waiting for easyr1 build..."
            wait $EASYR1_PID
            EASYR1_EXIT=$?

            # Check results for each build
            if [ $VLLM_EXIT -eq 0 ]; then
              echo "$(date '+%Y-%m-%d %H:%M:%S') - vllm build completed successfully"
            else
              echo "$(date '+%Y-%m-%d %H:%M:%S') - ERROR: vllm build failed (exit code: $VLLM_EXIT)"
              echo "Last 20 lines of vllm build log:"
              tail -20 "$VLLM_LOG" || echo "Could not read log file"
              BUILD_FAILED=1
            fi
            if [ $SGLANG_EXIT -eq 0 ]; then
              echo "$(date '+%Y-%m-%d %H:%M:%S') - sglang build completed successfully"
            else
              echo "$(date '+%Y-%m-%d %H:%M:%S') - ERROR: sglang build failed (exit code: $SGLANG_EXIT)"
              echo "Last 20 lines of sglang build log:"
              tail -20 "$SGLANG_LOG" || echo "Could not read log file"
              BUILD_FAILED=1
            fi
            if [ $LLAMAFACTORY_EXIT -eq 0 ]; then
              echo "$(date '+%Y-%m-%d %H:%M:%S') - llamafactory build completed successfully"
            else
              echo "$(date '+%Y-%m-%d %H:%M:%S') - ERROR: llamafactory build failed (exit code: $LLAMAFACTORY_EXIT)"
              echo "Last 20 lines of llamafactory build log:"
              tail -20 "$LLAMAFACTORY_LOG" || echo "Could not read log file"
              BUILD_FAILED=1
            fi
            if [ $EASYR1_EXIT -eq 0 ]; then
              echo "$(date '+%Y-%m-%d %H:%M:%S') - easyr1 build completed successfully"
            else
              echo "$(date '+%Y-%m-%d %H:%M:%S') - ERROR: easyr1 build failed (exit code: $EASYR1_EXIT)"
              echo "Last 20 lines of easyr1 build log:"
              tail -20 "$EASYR1_LOG" || echo "Could not read log file"
              BUILD_FAILED=1
            fi

            # Check if any build failed
            if [ $BUILD_FAILED -eq 1 ]; then
              error_exit "One or more Docker image builds failed. Check the logs above for details."
            fi
            echo "$(date '+%Y-%m-%d %H:%M:%S') - All Docker image builds completed successfully"

            # Upload dummy tar.gz
            echo "Uploading dummy tar.gz"
            cd /home/ubuntu/llm_model_hub/backend/byoc || error_exit "Failed to change to byoc directory"
            sudo -u ubuntu ../../miniconda3/envs/py311/bin/python startup.py || error_exit "Failed to upload dummy tar.gz"

            # Add user to database
            echo "Adding user to database"
            cd /home/ubuntu/llm_model_hub/backend/ || error_exit "Failed to change to backend directory"
            sudo -u ubuntu bash -c "source ../miniconda3/bin/activate py311 && python3 users/add_user.py demo_user $RANDOM_PASSWORD default" || error_exit "Failed to add user to database"

            # Start backend
            echo "Starting backend"
            cd /home/ubuntu/llm_model_hub/backend/ || error_exit "Failed to change to backend directory"
            sudo -u ubuntu bash 02.start_backend.sh || error_exit "Failed to start backend"
            sleep 15

            # Start frontend
            echo "Starting frontend"
            cd /home/ubuntu/llm_model_hub/ || error_exit "Failed to change to repository directory"
            sudo -u ubuntu pm2 start pm2run.config.js || error_exit "Failed to start frontend"

            # Send success signal
            echo "Sending success signal to CloudFormation"
            /usr/local/bin/cfn-signal -e 0 --stack ${AWS::StackName} --resource EC2Instance --region ${AWS::Region} || error_exit "Failed to send success signal"
            echo "RandomPassword=$RANDOM_PASSWORD" >> /etc/environment
            echo "RandomPassword=$RANDOM_PASSWORD"
            echo "UserData script execution completed successfully"
          - SageMakerRoleArn: !GetAtt SageMakerExecutionRole.Arn
            HuggingFaceHubToken: !Ref HuggingFaceHubToken
            WandbApiKey: !Ref WandbApiKey
            WandbBaseUrl: !Ref WandbBaseUrl
            SwanlabApiKey: !Ref SwanlabApiKey
  EC2SecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: Allow SSH, port 8000 and 3000
      SecurityGroupIngress:
        - IpProtocol: tcp
          FromPort: 8000
          ToPort: 8000
          CidrIp: 0.0.0.0/0
        - IpProtocol: tcp
          FromPort: 3000
          ToPort: 3000
          CidrIp: 0.0.0.0/0
        - IpProtocol: tcp
          FromPort: 22
          ToPort: 22
          CidrIp: 0.0.0.0/0
  EC2Role:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service: ec2.amazonaws.com
            Action: sts:AssumeRole
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/CloudWatchLogsFullAccess
        - arn:aws:iam::aws:policy/AmazonEC2ReadOnlyAccess
        - arn:aws:iam::aws:policy/AmazonSageMakerFullAccess
      Policies:
        - PolicyName: CloudFormationSignalPolicy
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action: cloudformation:SignalResource
                Resource: !Sub 'arn:aws:cloudformation:${AWS::Region}:${AWS::AccountId}:stack/${AWS::StackName}/*'
        - PolicyName: SSMParameterAccess
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action:
                  - ssm:PutParameter
                Resource: !Sub 'arn:aws:ssm:${AWS::Region}:${AWS::AccountId}:parameter/${AWS::StackName}/*'
  EC2InstanceProfile:
    Type: AWS::IAM::InstanceProfile
    Properties:
      Path: "/"
      Roles:
        - !Ref EC2Role
  SageMakerExecutionRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service: sagemaker.amazonaws.com
            Action: sts:AssumeRole
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/AmazonSageMakerFullAccess
      Policies:
        - PolicyName: S3AccessPolicy
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action:
                  - s3:GetObject
                  - s3:PutObject
                  - s3:DeleteObject
                  - s3:ListBucket
                  - s3:CreateBucket
                Resource:
                  - arn:aws:s3:::*
        - PolicyName: SSMSessionManagerPolicy
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action:
                  - ssmmessages:CreateControlChannel
                  - ssmmessages:CreateDataChannel
                  - ssmmessages:OpenControlChannel
                  - ssmmessages:OpenDataChannel
                Resource: !Sub 'arn:aws:sagemaker:${AWS::Region}:${AWS::AccountId}:*'
  SSMConsoleAccessRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service: ec2.amazonaws.com
            Action: sts:AssumeRole
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/AmazonSSMFullAccess
      Policies:
        - PolicyName: SSMConsoleAccess
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action:
                  - ssm:StartSession
                  - ssm:DescribeSessions
                  - ssm:TerminateSession
                  - ssm:ResumeSession
                Resource: '*'
Outputs:
  InstanceId:
    Description: ID of the EC2 instance
    Value: !Ref EC2Instance
  PublicIP:
    Description: Public IP of the EC2 instance
    Value: !GetAtt EC2Instance.PublicIp
  SageMakerRoleArn:
    Description: ARN of the SageMaker Execution Role
    Value: !GetAtt SageMakerExecutionRole.Arn
  RandomPasswordParameter:
    Description: AWS Systems Manager Parameter name for the random password
    Value: !Sub '/${AWS::StackName}/RandomPassword'
```

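This template defines the AWS resources that Model Hub needs:

- An EC2 instance running Ubuntu 24.04 (m5.xlarge by default, with a 500 GB gp3 volume).
- IAM roles for the instance and for SageMaker.
- A security group.

The template does not create a separate database resource. The generated backend/.env points the backend at a database on the instance itself (db_host=127.0.0.1). The UserData script installs dependencies, builds and pushes the vLLM, SGLang, LlamaFactory, and EasyR1 Docker images, and then starts the backend and frontend. Note that the security group opens ports 22, 3000, and 8000 to 0.0.0.0/0. You may want to restrict these ports to your own IP range.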

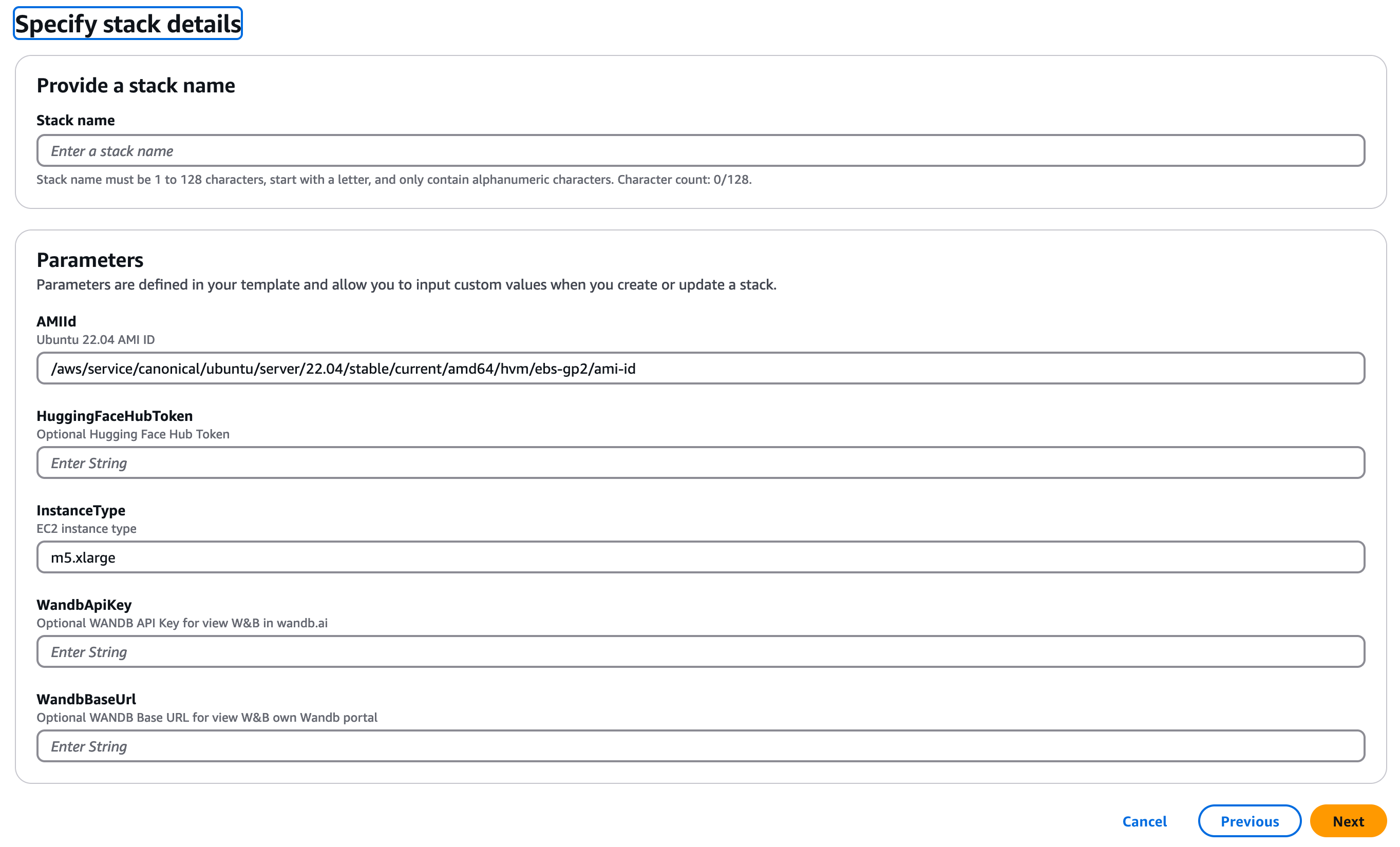

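In the next configuration step, fill in the key parameters that customize the Model Hub deployment:

- Stack name: identifies the deployment.
- KeyName (required): the name of an existing EC2 key pair, used for SSH access to the instance.
- HuggingFaceHubToken (optional, but recommended): needed if you download gated models or datasets from Hugging Face, such as Llama 3 models. Model Hub uses this token to access private or gated resources on Hugging Face.
- WandbApiKey (optional): an API key, needed if you plan to log real-time training metrics with Weights & Biases (W&B). Model Hub then syncs training logs and metrics to your W&B project.
- WandbBaseUrl (optional): the base URL of your self-hosted W&B server, if you use one.
- SwanlabApiKey (optional): an API key for viewing training metrics on SwanLab (https://swanlab.cn/).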
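The same stack can also be created programmatically instead of through the console. The sketch below assumes that boto3 is installed and AWS credentials are configured. The stack name, key pair name, and template path are placeholders. The template creates IAM roles without explicit names, so CloudFormation requires the CAPABILITY_IAM acknowledgement. This corresponds to the confirmation checkbox in the console.

```python
OPTIONAL_KEYS = ("HuggingFaceHubToken", "WandbApiKey", "WandbBaseUrl", "SwanlabApiKey")


def build_create_stack_kwargs(stack_name: str, template_body: str, key_name: str,
                              **optional: str) -> dict:
    """Assemble CreateStack arguments; parameter keys match the Model Hub template."""
    unknown = set(optional) - set(OPTIONAL_KEYS)
    if unknown:
        raise ValueError(f"unknown template parameters: {sorted(unknown)}")
    params = {"KeyName": key_name}
    # Only pass optional values that are set; the template defaults them to "".
    params.update({k: v for k, v in optional.items() if v})
    return {
        "StackName": stack_name,
        "TemplateBody": template_body,
        "Parameters": [{"ParameterKey": k, "ParameterValue": v}
                       for k, v in params.items()],
        # The template creates IAM roles (without explicit names), so this
        # acknowledgement is required, like the checkbox in the console.
        "Capabilities": ["CAPABILITY_IAM"],
    }


def create_model_hub_stack(kwargs: dict, client=None) -> None:
    """Create the stack and block until CREATE_COMPLETE (the waiter raises on failure)."""
    if client is None:
        import boto3  # lazy import: the builder above works without the AWS SDK
        client = boto3.client("cloudformation")
    client.create_stack(**kwargs)
    # The default waiter gives up after about 60 minutes, but the template's
    # CreationPolicy allows up to 90, so allow 30 s x 200 polls here.
    client.get_waiter("stack_create_complete").wait(
        StackName=kwargs["StackName"],
        WaiterConfig={"Delay": 30, "MaxAttempts": 200},
    )

# Example (requires AWS credentials; names are placeholders):
# body = open("cloudformation-template.yaml", encoding="utf-8").read()
# create_model_hub_stack(build_create_stack_kwargs(
#     "modelhub", body, "my-keypair", HuggingFaceHubToken="hf_..."))
```

Optional parameters left empty are simply omitted, so the template's empty-string defaults apply.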

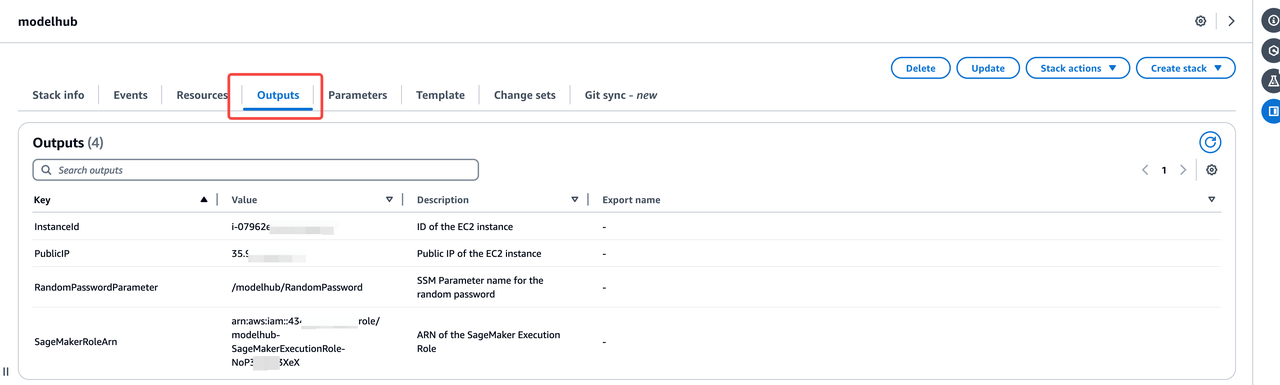

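After entering these settings, continue through the remaining pages. Then select the acknowledgement checkbox (confirming that the template creates IAM resources) and submit. Stack creation takes about 25 minutes, during which CloudFormation creates and configures all the required AWS resources. When creation finishes, find the public IP address in the stack's Outputs tab. Then open http://{ip}:3000 to reach the Model Hub web UI. The default username is demo_user.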

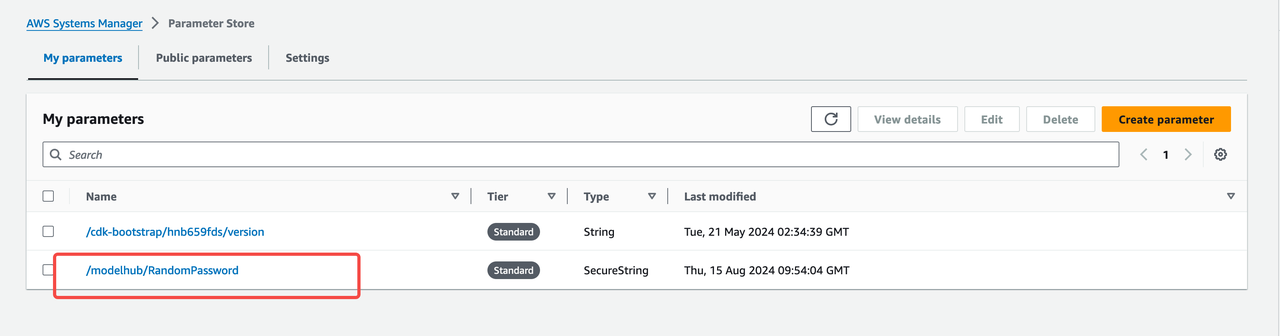

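To get the password, open the AWS Systems Manager console. In Parameter Store, find the parameter named /<stack name>/RandomPassword, for example /modelhub/RandomPassword if the stack is named modelhub. The exact name is also listed as RandomPasswordParameter in the stack's Outputs. Open the parameter and turn on "Show decrypted value" to reveal the generated login password.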
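These lookups can also be scripted. The sketch reads the PublicIP and RandomPasswordParameter outputs that the template defines. It then decrypts the SecureString with the SSM GetParameter call. It assumes that boto3 is installed and credentials are configured. The two clients are injectable so the logic can be exercised without AWS, and the stack name is a placeholder.

```python
def outputs_to_dict(stack: dict) -> dict:
    """Flatten a DescribeStacks 'Outputs' list into {OutputKey: OutputValue}."""
    return {o["OutputKey"]: o["OutputValue"] for o in stack.get("Outputs", [])}


def get_login_info(stack_name: str, cf_client=None, ssm_client=None):
    """Return (web_url, username, password) for a deployed Model Hub stack."""
    if cf_client is None or ssm_client is None:
        import boto3  # lazy import so the function can be tested with stand-ins
        cf_client = cf_client or boto3.client("cloudformation")
        ssm_client = ssm_client or boto3.client("ssm")
    stack = cf_client.describe_stacks(StackName=stack_name)["Stacks"][0]
    outputs = outputs_to_dict(stack)
    # The template stores the password at '/<StackName>/RandomPassword'.
    param = ssm_client.get_parameter(Name=outputs["RandomPasswordParameter"],
                                     WithDecryption=True)
    url = f"http://{outputs['PublicIP']}:3000"
    return url, "demo_user", param["Parameter"]["Value"]

# Example (requires AWS credentials): print(get_login_info("modelhub"))
```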

3. Hands-On Walkthrough

3.1 Model Training Workflow

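Model Hub's core value is its one-stop, no-code workflow, which makes fine-tuning and deploying large models much simpler. The following examples walk through model training, model deployment, and use in a real project, and discuss the main benefits.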

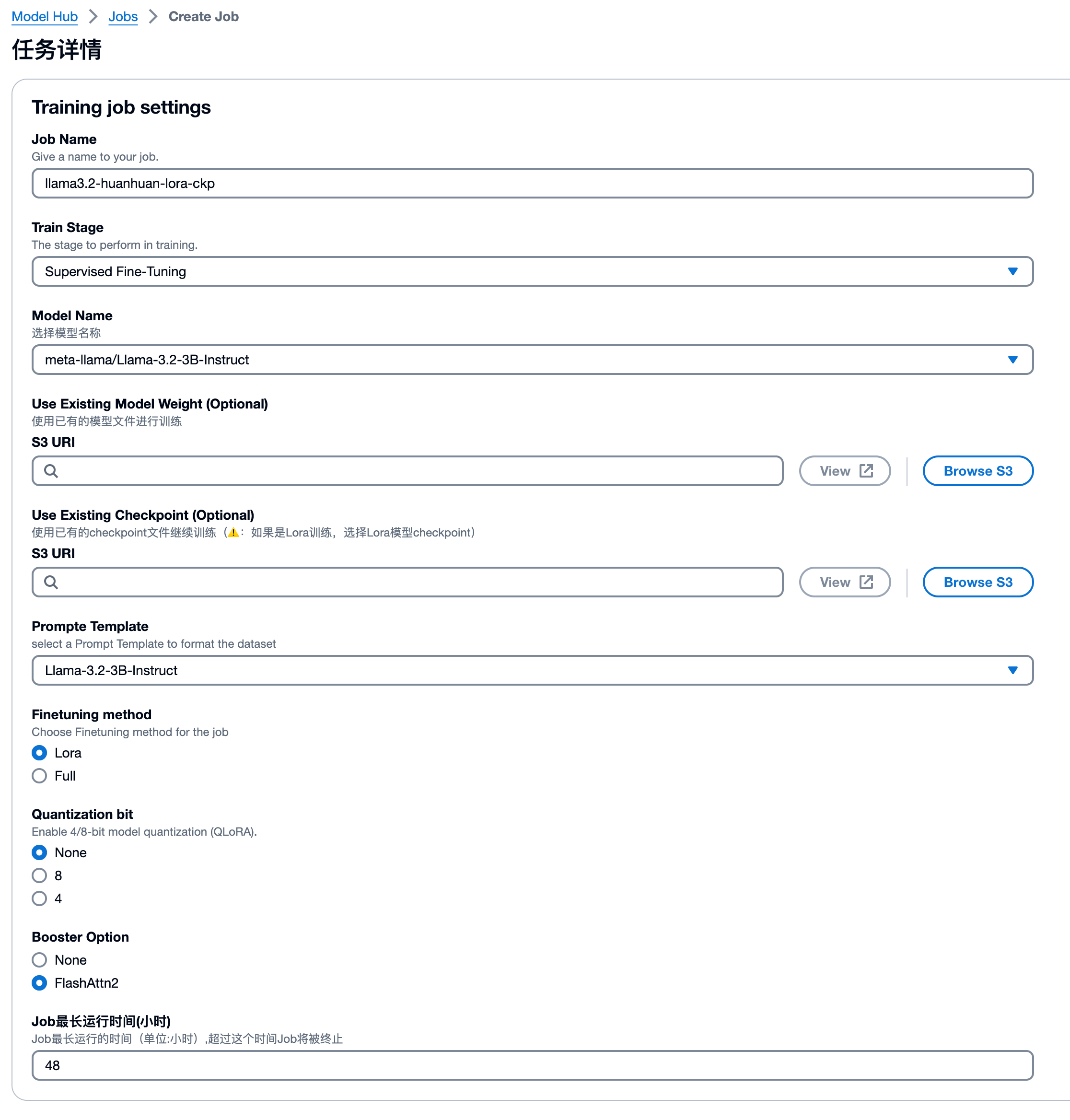

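Model Hub's training workflow is intuitive: users can fine-tune a large model without writing any training code. The process consists of five steps: select a base model, prepare the data, choose the instance type and count, tune the training parameters, and launch training.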

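First, **select a base model**. Users pick an open-source model from Model Hub's preset model list (sourced from Hugging Face or ModelScope) as the base for fine-tuning, for example Llama-3.2-3B-Instruct. The platform can also continue training from existing model weights or a checkpoint, which makes iterative development much more flexible. In the web UI, the "Training Jobs" or model selection page typically provides a drop-down menu or search box for finding the model quickly.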

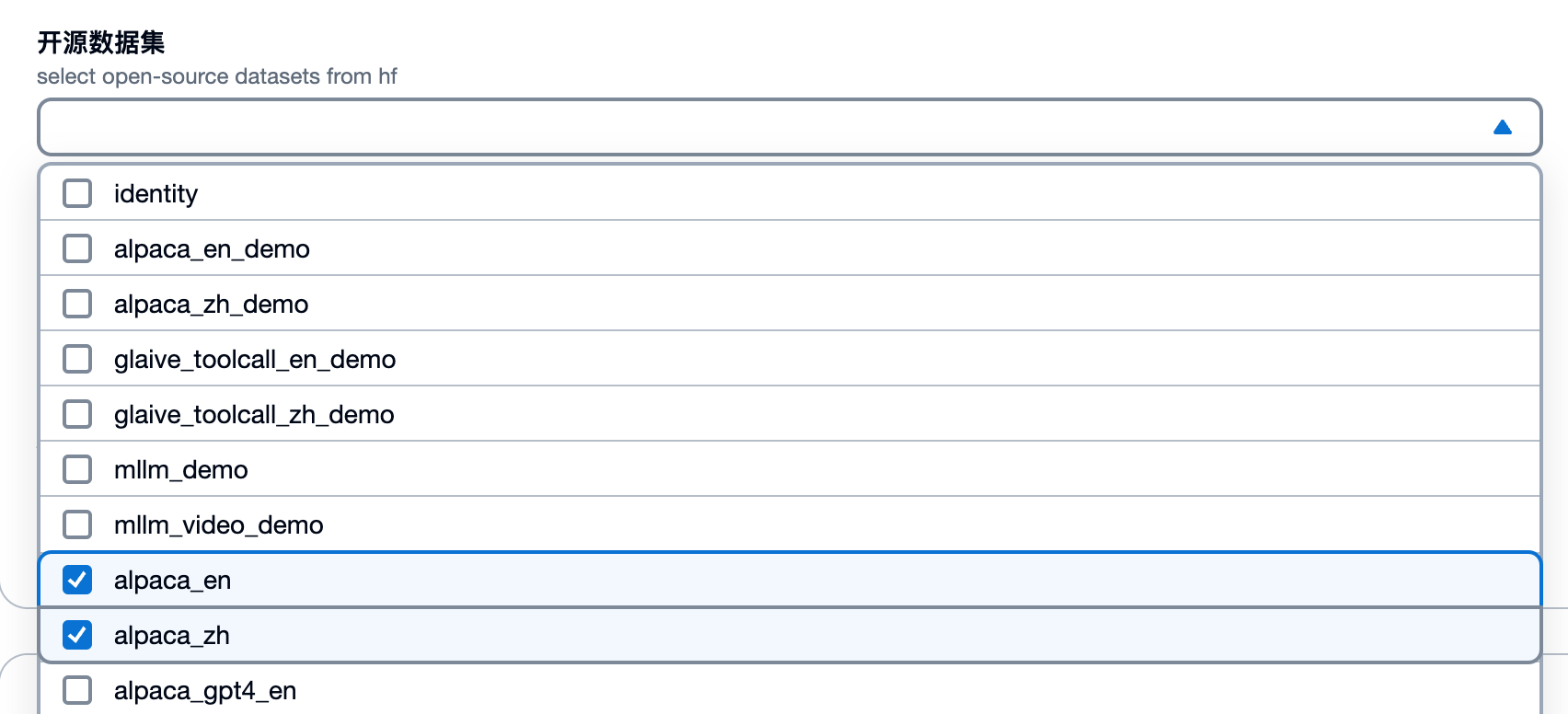

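Next, **prepare the data**. Users can enter the Amazon S3 path of their own dataset or choose one of Model Hub's preset public datasets. Supported data types include multi-turn dialogue, human preference data, and plain text, as well as multi-image understanding, video understanding, and interleaved image-text data. This covers a wide range of fine-tuning scenarios.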

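For example, to fine-tune a Llama-3.2-3B role-play model, users can upload the dialogue of the character Zhen Huan (甄嬛) from the TV drama Empresses in the Palace (甄嬛传) to an S3 bucket as the training set. The dataset usually follows one of the formats in LlamaFactory's official guide, such as Alpaca or ShareGPT.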
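The sketch below converts (question, answer) dialogue pairs into the two layouts named above, for reference. Alpaca-style records use instruction/input/output fields with an optional system prompt. ShareGPT-style records hold a conversations list of human/gpt turns. The field names assume LlamaFactory's default column names. Check them against the data guide in the LlamaFactory repository, including any dataset_info.json column mapping, before uploading. The sample dialogue is a made-up placeholder, not the real dataset.

```python
import json


def to_alpaca(pairs, system=""):
    """One Alpaca-style record per (question, answer) pair."""
    records = []
    for question, answer in pairs:
        rec = {"instruction": question, "input": "", "output": answer}
        if system:
            rec["system"] = system  # optional per-sample system prompt
        records.append(rec)
    return records


def to_sharegpt(dialogue, system=""):
    """One ShareGPT-style record holding a whole multi-turn dialogue."""
    conversations = []
    for question, answer in dialogue:
        conversations.append({"from": "human", "value": question})
        conversations.append({"from": "gpt", "value": answer})
    rec = {"conversations": conversations}
    if system:
        rec["system"] = system
    return rec


# Placeholder dialogue (hypothetical, not taken from the real dataset).
pairs = [("Who are you?", "I am Zhen Huan."),
         ("Where do you live?", "In the palace.")]

# ensure_ascii=False keeps Chinese text readable in the output files.
alpaca_json = json.dumps(to_alpaca(pairs), ensure_ascii=False, indent=2)
sharegpt_json = json.dumps([to_sharegpt(pairs)], ensure_ascii=False, indent=2)
# Save either string as a .json file and upload it to S3 (e.g. `aws s3 cp`).
```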

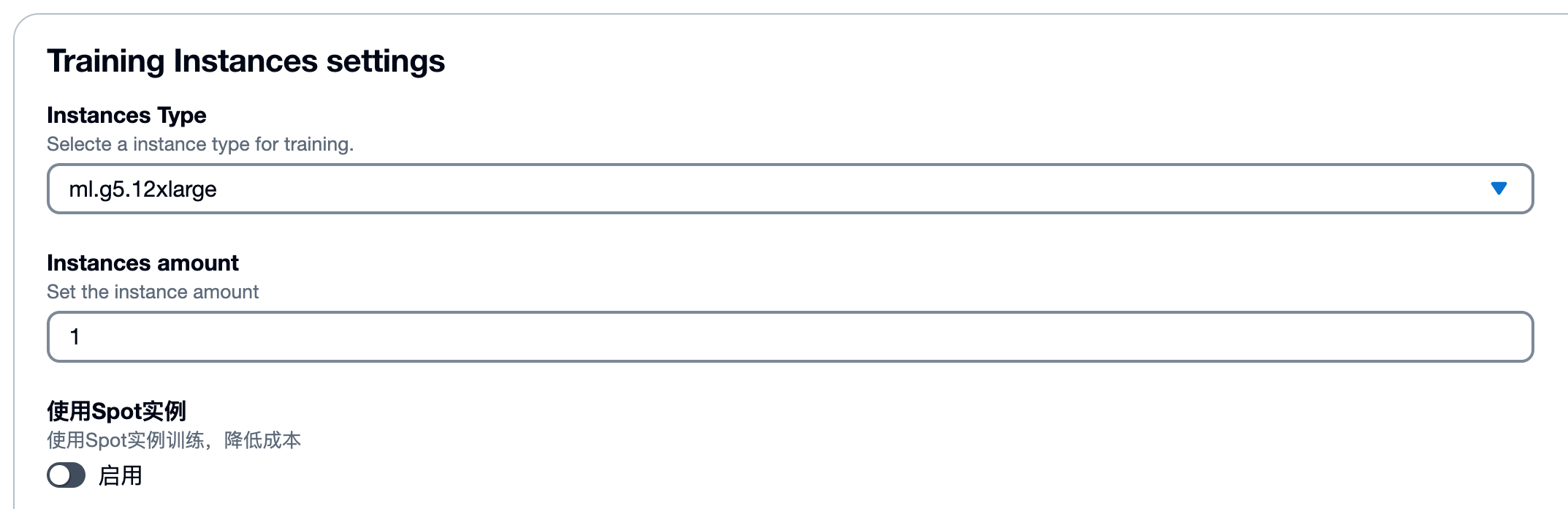

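Then **choose the instance type and count**. Model Hub packages LlamaFactory's training component as a SageMaker Training Job, so users only need to select an instance type and instance count to run single-node or distributed training. For example, a single g5.12xlarge instance has four NVIDIA A10G GPUs and can train with distributed data parallel (DDP). For smaller datasets, a single-GPU g5.2xlarge instance also works. The Model Hub UI provides resource configuration options for choosing the instance type and count.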
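Conceptually, choosing an instance type and count maps to the ResourceConfig of a SageMaker CreateTrainingJob request. The sketch below builds such a request with the field names used by the boto3 SageMaker client. It is not Model Hub's actual code. The image URI, role ARN, S3 paths, job name, and volume size are hypothetical placeholders. In SageMaker, instance types carry an "ml." prefix, for example ml.g5.12xlarge. A training job's instances exist only for the duration of the job, which is why no cleanup is needed afterwards.

```python
def build_training_job_request(job_name, image_uri, role_arn, train_s3_uri,
                               output_s3_uri, instance_type="ml.g5.12xlarge",
                               instance_count=1, hyperparameters=None,
                               max_runtime_hours=24):
    """Assemble a CreateTrainingJob request (all values are placeholders)."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {"TrainingImage": image_uri,
                                   "TrainingInputMode": "File"},
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                "S3DataDistributionType": "FullyReplicated"}},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        # Instance type and count are the only sizing choices the user makes;
        # the 300 GB volume size is an assumption for illustration.
        "ResourceConfig": {"InstanceType": instance_type,
                           "InstanceCount": instance_count,
                           "VolumeSizeInGB": 300},
        "StoppingCondition": {"MaxRuntimeInSeconds": max_runtime_hours * 3600},
        # SageMaker expects hyperparameters as a string-to-string map.
        "HyperParameters": {k: str(v) for k, v in (hyperparameters or {}).items()},
    }

# Example (hypothetical values; requires AWS credentials to actually submit):
# import boto3
# req = build_training_job_request("llama32-3b-zhenhuan", "<ecr-image-uri>", "<role-arn>",
#                                  "s3://<bucket>/data/", "s3://<bucket>/output/")
# boto3.client("sagemaker").create_training_job(**req)
```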

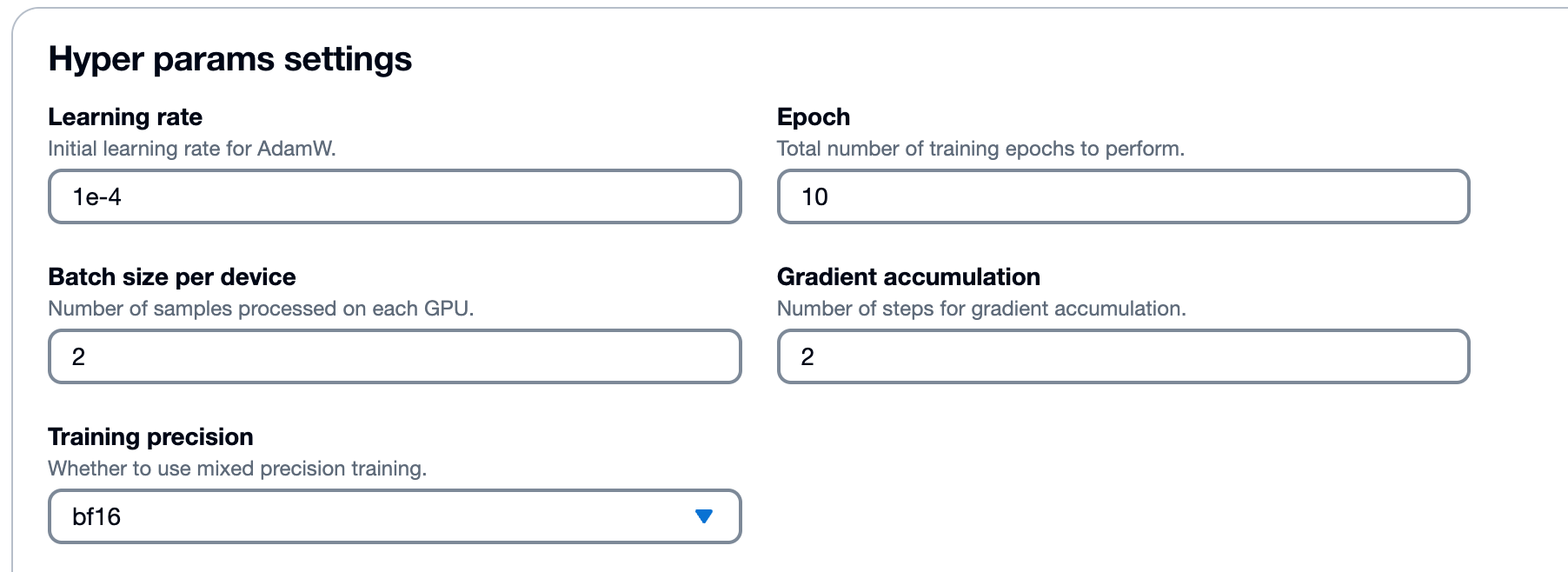

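Next, **tune the training parameters**. Hyperparameters can be set directly in the UI, for example learning rate = 1e-4, epochs = 10, batch size per device = 2, gradient accumulation = 2, and training precision = bf16. This lets users tune each run to the specific task. Acceleration options such as Flashattn2 (FlashAttention-2) can also be enabled to speed up training further.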
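The UI values above correspond to familiar training arguments. The sketch maps them to a configuration dictionary using LlamaFactory-style key names. The exact keys, and the choice of LoRA as the fine-tuning type, are assumptions to verify against the LlamaFactory examples; this is not the config Model Hub generates. The sketch also shows the arithmetic that matters when choosing these values. With DDP, the effective (global) batch size is per-device batch size × gradient accumulation × number of GPUs. On a 4-GPU g5.12xlarge, the example settings therefore give 2 × 2 × 4 = 16 samples per optimizer step.

```python
import math


def effective_batch_size(per_device: int, grad_accum: int, num_gpus: int) -> int:
    """Samples consumed per optimizer step under data-parallel training."""
    return per_device * grad_accum * num_gpus


def optimizer_steps(num_samples: int, epochs: int, global_batch: int) -> int:
    """Approximate total optimizer steps (a final partial batch counts as a step)."""
    return epochs * math.ceil(num_samples / global_batch)


def to_llamafactory_args(lr=1e-4, epochs=10, per_device=2, grad_accum=2,
                         precision="bf16", flash_attn="fa2"):
    """Map UI values to LlamaFactory-style SFT arguments (key names are assumptions)."""
    return {
        "stage": "sft",
        "finetuning_type": "lora",  # assumption: LoRA fine-tuning
        "learning_rate": lr,
        "num_train_epochs": float(epochs),
        "per_device_train_batch_size": per_device,
        "gradient_accumulation_steps": grad_accum,
        "bf16": precision == "bf16",
        "flash_attn": flash_attn,
    }


args = to_llamafactory_args()
global_batch = effective_batch_size(args["per_device_train_batch_size"],
                                    args["gradient_accumulation_steps"], num_gpus=4)
# For a hypothetical 3,000-sample dataset: 10 epochs x ceil(3000 / 16) steps.
total_steps = optimizer_steps(3000, 10, global_batch)
```

If the GPU count or per-device batch size changes, you may want to scale the learning rate or the gradient accumulation so that the global batch size stays comparable across experiments.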

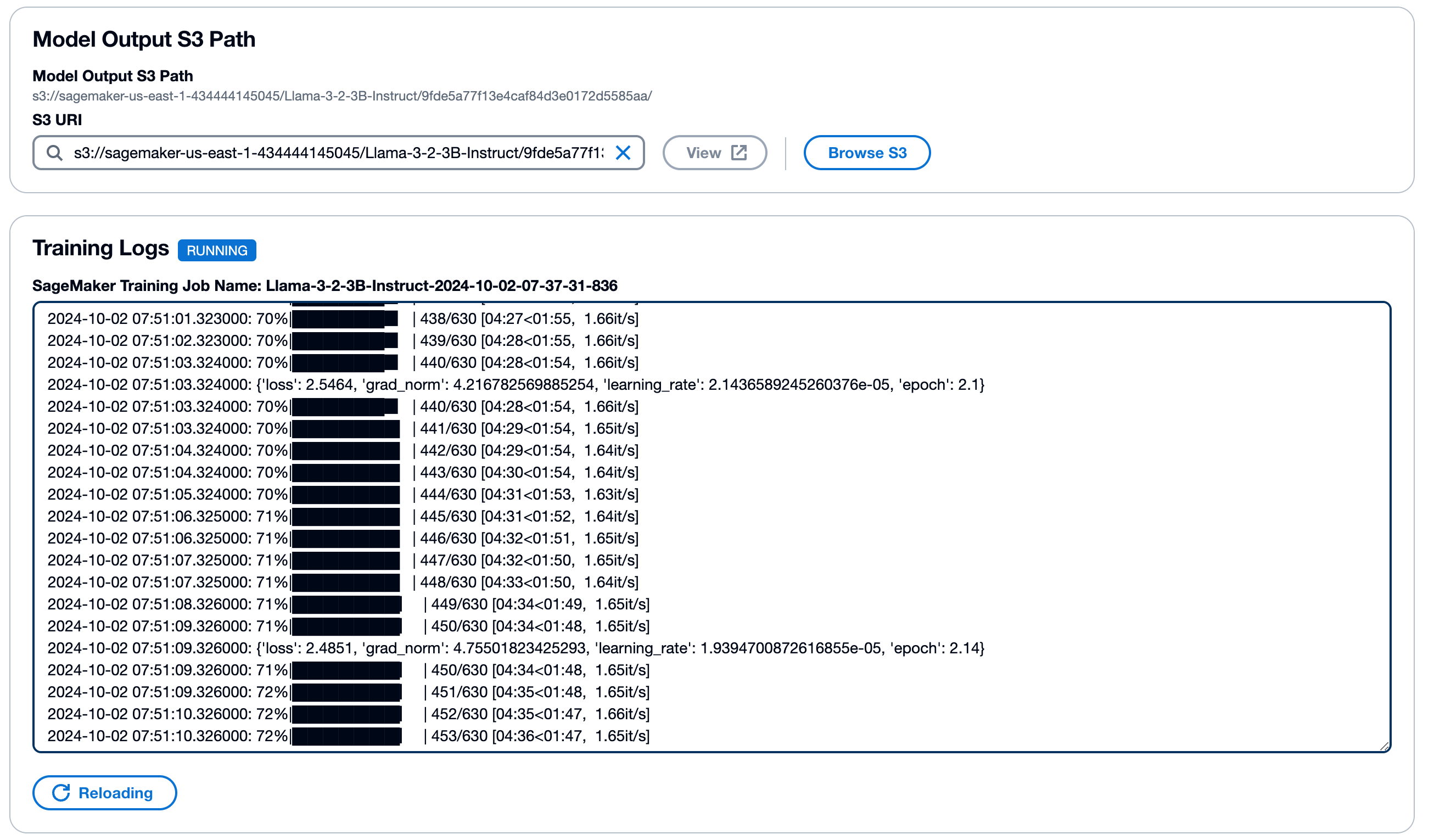

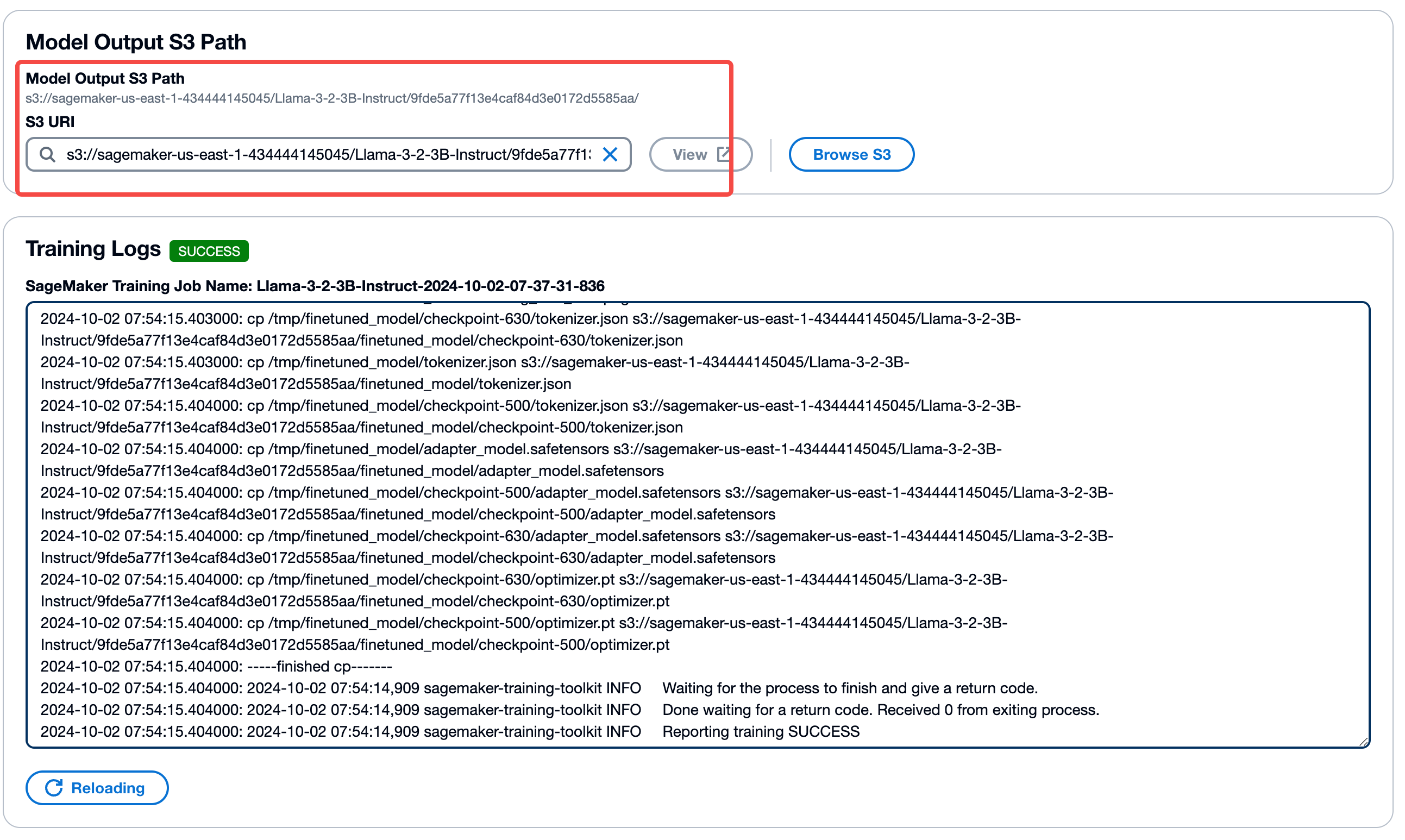

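Finally, **launch training**. Once the job starts, its logs stream in real time at the bottom of the details page so you can monitor progress. When training finishes, the status changes to "SUCCESS", and the page shows the S3 paths where the logs and model files are stored.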
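The job is an ordinary SageMaker training job, so its state can also be checked outside the UI. The sketch polls DescribeTrainingJob until the job reaches a terminal status (Completed, Failed, or Stopped). It then returns the S3 location of the model artifacts. "SUCCESS" is Model Hub's UI label; treating it as equivalent to SageMaker's Completed status is an assumption. The job name is a placeholder. The client and sleep function are injected so the loop can be tested without AWS.

```python
import time

TERMINAL = {"Completed", "Failed", "Stopped"}


def wait_for_training_job(client, job_name, poll_seconds=60, sleep=time.sleep):
    """Poll DescribeTrainingJob; return (status, model_artifacts_s3_uri_or_None)."""
    while True:
        desc = client.describe_training_job(TrainingJobName=job_name)
        status = desc["TrainingJobStatus"]
        if status in TERMINAL:
            if status == "Failed":
                print("FailureReason:", desc.get("FailureReason"))
            artifacts = desc.get("ModelArtifacts", {}).get("S3ModelArtifacts")
            return status, artifacts
        # SecondaryStatus gives finer detail, e.g. Downloading or Training.
        print(f"{job_name}: {status} ({desc.get('SecondaryStatus')})")
        sleep(poll_seconds)

# Example (requires AWS credentials):
# wait_for_training_job(boto3.client("sagemaker"), "<training-job-name>")
```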

The entire training run finishes in about 25 minutes. The completed job looks like this:

3.2 Model Deployment Workflow

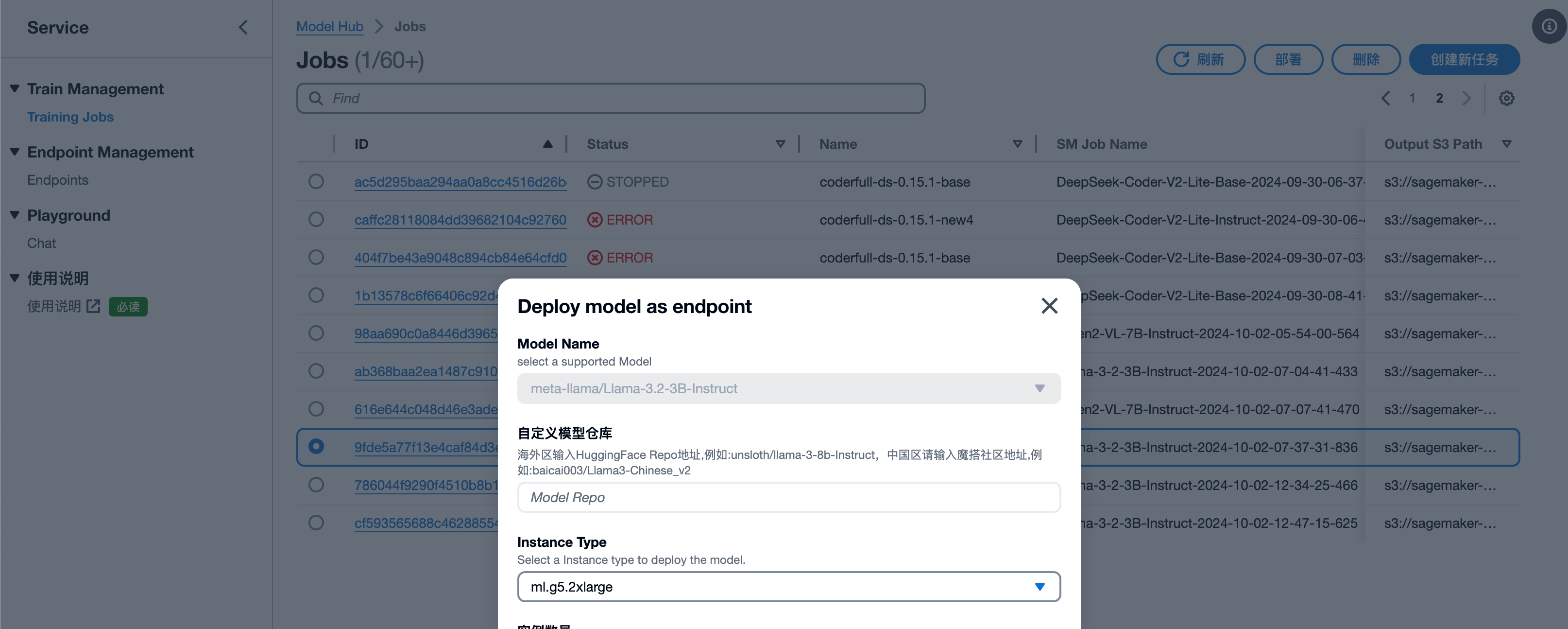

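Model Hub's deployment workflow is equally simple. First, **select the model and inference framework.** You can deploy an original model directly from its Hugging Face or ModelScope repository, or deploy a checkpoint from an earlier training run. Several inference frameworks are supported, which keeps deployment flexible; the template builds vLLM and SGLang serving images. To deploy a fine-tuned model, select the completed job in the "Training Jobs" list and click "Deploy" at the top right. The system then opens the deployment configuration page.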

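Then **start the deployment**. The configuration page typically pre-fills a default inference instance type and configuration, which you can adjust as needed. Here, keep the defaults and click "Confirm" to start the deployment.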
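A SageMaker real-time endpoint is built from three API calls: CreateModel (container image plus model data), CreateEndpointConfig (instance type and count), and CreateEndpoint. The sketch below only assembles those three requests, for orientation. It is not Model Hub's deployment code. The image URI, role ARN, model data path, instance type, environment, and resource names are hypothetical placeholders. The actual vLLM and SGLang image URIs and the environment variables they expect are not covered here.

```python
def build_endpoint_requests(name, image_uri, model_data_s3, role_arn,
                            instance_type="ml.g5.2xlarge", instance_count=1,
                            env=None):
    """Return (create_model, create_endpoint_config, create_endpoint) kwargs."""
    model = {
        "ModelName": f"{name}-model",
        "ExecutionRoleArn": role_arn,
        "PrimaryContainer": {"Image": image_uri,
                             "ModelDataUrl": model_data_s3,
                             "Environment": dict(env or {})},
    }
    config = {
        "EndpointConfigName": f"{name}-config",
        "ProductionVariants": [{
            "VariantName": "AllTraffic",
            "ModelName": model["ModelName"],
            "InstanceType": instance_type,       # placeholder instance type
            "InitialInstanceCount": instance_count,
        }],
    }
    endpoint = {"EndpointName": name,
                "EndpointConfigName": config["EndpointConfigName"]}
    return model, config, endpoint

# Example (hypothetical values; requires AWS credentials):
# sm = boto3.client("sagemaker")
# m, c, e = build_endpoint_requests("zhenhuan-ft", "<image-uri>",
#                                   "s3://<bucket>/model.tar.gz", "<role-arn>")
# sm.create_model(**m); sm.create_endpoint_config(**c); sm.create_endpoint(**e)
```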

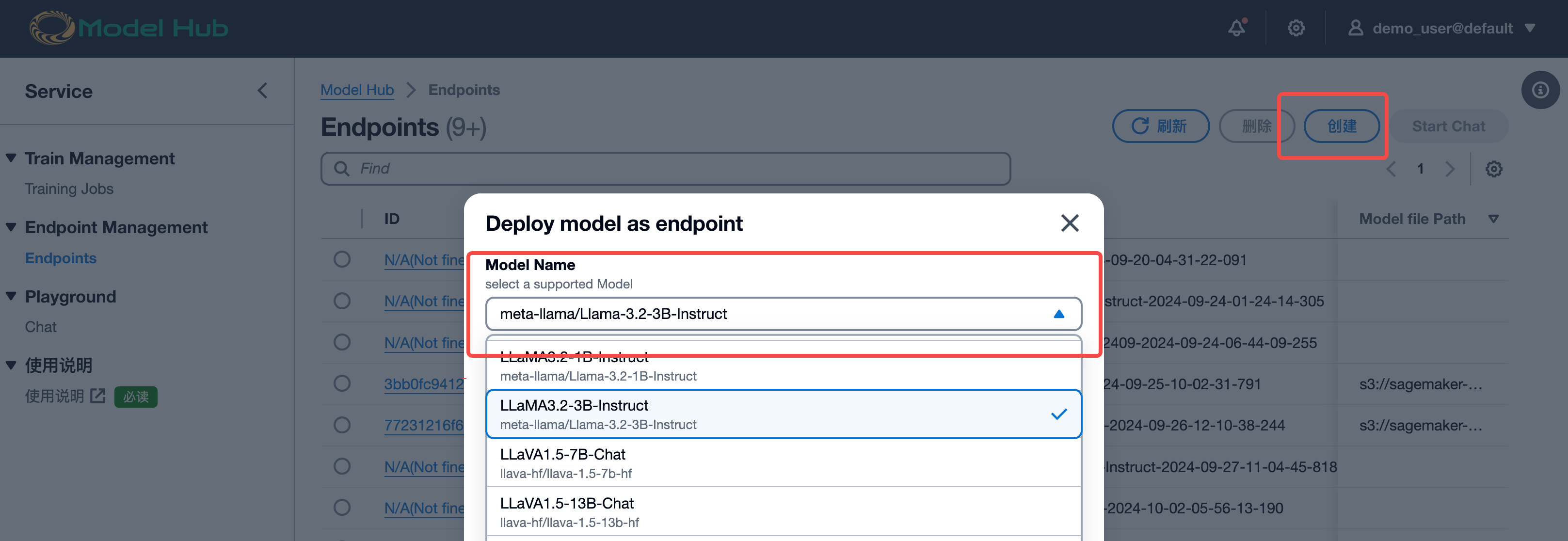

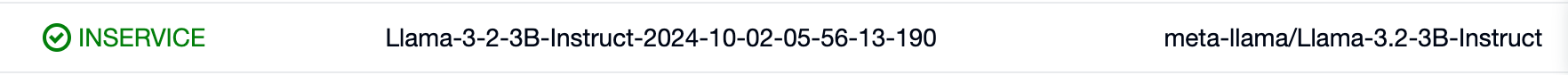

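For comparison, you can also deploy an original model that has not been fine-tuned. In the "Endpoints" menu, click "Create", choose Llama-3.2-3B-Instruct, keep the other defaults, and click "Confirm". Deployment takes about 8 minutes; refresh the list until the status changes to "InService".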
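The "refresh until InService" step can be automated by polling DescribeEndpoint. The sketch below uses an injected client and a placeholder endpoint name. boto3 also ships an endpoint_in_service waiter that does the same job. A hand-written loop just makes the Failed case and the timeout explicit.

```python
import time


def wait_for_endpoint(client, endpoint_name, poll_seconds=30, timeout_seconds=3600,
                      sleep=time.sleep, clock=time.monotonic):
    """Poll DescribeEndpoint until InService; raise on Failed or on timeout."""
    deadline = clock() + timeout_seconds
    while True:
        desc = client.describe_endpoint(EndpointName=endpoint_name)
        status = desc["EndpointStatus"]
        if status == "InService":
            return desc
        if status == "Failed":
            raise RuntimeError(f"{endpoint_name} failed: {desc.get('FailureReason')}")
        if clock() > deadline:
            raise TimeoutError(f"{endpoint_name} still {status} after {timeout_seconds}s")
        sleep(poll_seconds)

# Example (requires AWS credentials):
# wait_for_endpoint(boto3.client("sagemaker"), "<endpoint-name>")
```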

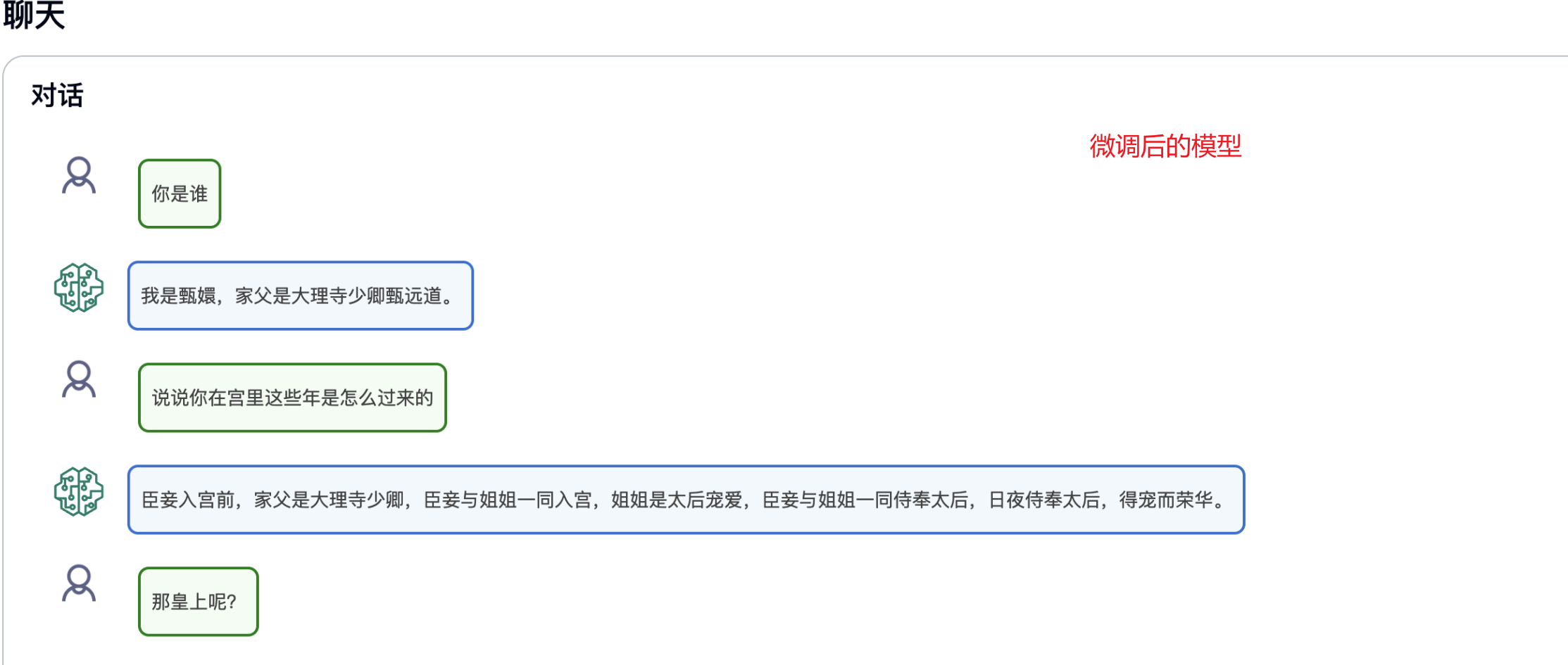

Once a model is deployed, you can interact with it in the Playground. Select the successfully deployed endpoint and click "Start Chat" at the top right to open the Playground.

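Under "More settings", you can enter a system prompt, for example "Suppose you are Zhen Huan, a woman at the Emperor's side". Then type your message in the input box at the bottom of the page and click submit to send it.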
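The same conversation, with a system prompt and a user message, can also be sent from a script outside the Playground. This sketch assumes that the backend on port 8000 accepts OpenAI-style chat-completion requests. The port comes from the template, which writes http://<ip>:8000/v1 as REACT_APP_API_ENDPOINT. The sketch also assumes that requests authenticate with the random API key the template writes to both .env files. The /chat/completions route, the payload shape, and the use of the endpoint name as the model field are all assumptions, so check the repository's API documentation first. Only the standard library is used.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, endpoint_name, system_prompt, user_message,
                       max_tokens=512, temperature=0.7):
    """Build an OpenAI-style chat request (route and fields are assumptions)."""
    payload = {
        "model": endpoint_name,  # assumption: the endpoint is addressed as the model
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload, ensure_ascii=False).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )

# Example (hypothetical values):
# req = build_chat_request("http://<ip>:8000/v1", "<api-key>", "<endpoint-name>",
#                          "Suppose you are Zhen Huan, a woman at the Emperor's side.",
#                          "Who are you?")
# with urllib.request.urlopen(req, timeout=120) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```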

3.3 Real-World Usage and Benefits

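In real projects, Model Hub delivers substantial engineering efficiency. Because it is visual and no-code, project teams do not need to write repetitive code for loading training data, downloading and packaging base models, adapting training scripts, or saving and deploying trained models. A few clicks in Model Hub are enough to train and deploy dozens of models end to end. At deployment time, teams also do not need to care about the underlying inference framework or about differences between models' inference interfaces, because all inference goes through one unified interface. This lets teams experiment quickly across many candidate base models and train the one that best meets their business goals.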

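This design lets developers spend their time on business analysis, data preparation, and experiment design instead of writing and maintaining repetitive code. By supporting many concurrent experiments in a visual, no-code way, Model Hub significantly improves the engineering efficiency of LLM fine-tuning.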

3.4 Summary and Outlook

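In many applications of open-source LLMs, fine-tuning on task-specific data is key to improving model performance and fit. However, fine-tuning involves a large amount of data preparation, training, and validation. Without an effective engineering platform, that workload makes it hard to move quickly. Model Hub was built to address exactly these pain points.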

AWS currently offers a Free Tier. Sign up on the AWS website to try AWS products and services for free; new accounts receive USD 100 in service credits.

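Model Hub is more than a technical platform. It is an efficient way to build LLM applications that lets developers focus on innovation rather than tedious engineering details. We look forward to seeing Model Hub continue to evolve and bring more value to the LLM ecosystem.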

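That's all for this article. One final reminder for everyone: if you no longer need these services, remember to shut them down in the console so you don't incur charges beyond the free tier.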
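Deleting the CloudFormation stack removes the EC2 instance, security group, and IAM roles that the template created. Endpoints launched from Model Hub, however, are created by the application rather than by the template. They are not deleted with the stack and keep billing while InService. The same is true of resources that scripts create at runtime, such as the SSM password parameter written by the UserData script. The sketch below lists InService endpoints whose names contain a substring you choose and deletes them. It defaults to a dry run. The name filter is an assumption, so review the printed list before deleting anything.

```python
def delete_endpoints(client, name_contains, dry_run=True):
    """Delete InService SageMaker endpoints whose names contain `name_contains`."""
    names, token = [], None
    while True:  # follow NextToken until every page has been read
        kwargs = {"NameContains": name_contains, "StatusEquals": "InService"}
        if token:
            kwargs["NextToken"] = token
        page = client.list_endpoints(**kwargs)
        names += [e["EndpointName"] for e in page["Endpoints"]]
        token = page.get("NextToken")
        if not token:
            break
    for name in names:
        print(("would delete " if dry_run else "deleting ") + name)
        if not dry_run:
            client.delete_endpoint(EndpointName=name)
    return names

# Example (requires AWS credentials; names are placeholders):
# delete_endpoints(boto3.client("sagemaker"), "<name-substring>", dry_run=False)
# boto3.client("cloudformation").delete_stack(StackName="<stack-name>")
```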
