Installing a Kubernetes v1.28.x cluster on CentOS 7.9


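1. System overview

Virtualization platform: PVE

System version: centos7.9_2009_x86

Image URL: http://isoredirect.centos.org/centos/7/isos/x86_64/

Configuration: 4 cores, 8 GB RAM (the official minimum is 2 cores, 2 GB)

Host Description

192.168.240.162 master node

192.168.240.163 node1

192.168.240.164 node2

192.168.240.165 Harbor registry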

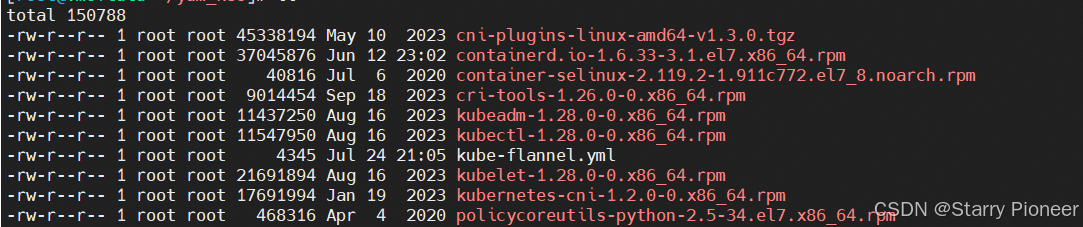

Packages to prepare for an offline installation:

k8s

harbor

For installing the Harbor image registry offline, see:

https://blog.csdn.net/qq_33807380/article/details/137878258

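2. Environment configuration

2.1 Configure the firewall on all nodes

This is a lab environment, so the firewall is simply turned off for convenience. In production, unless the public and internal networks are isolated, leave the firewall running and open only the ports the cluster needs.

systemctl stop firewalld # stop the firewall

systemctl disable firewalld # do not start it at boot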
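For the production case mentioned above, a minimal sketch of opening ports instead of disabling firewalld might look like this. The function name `k8s_firewall_cmds` is hypothetical; the TCP port lists follow the Kubernetes "Ports and Protocols" documentation, and 8472/udp assumes flannel's default VXLAN backend. The function only prints the commands so they can be reviewed before anything is changed.

```shell
#!/usr/bin/env bash
# Print (not run) the firewall-cmd calls for a node role: "master" or "worker".
# 8472/udp is an assumption: it is the flannel VXLAN default port.
k8s_firewall_cmds() {
  local role="$1" ports port
  case "$role" in
    master) ports="6443/tcp 2379-2380/tcp 10250/tcp 10257/tcp 10259/tcp 8472/udp" ;;
    worker) ports="10250/tcp 30000-32767/tcp 8472/udp" ;;
    *) echo "usage: k8s_firewall_cmds master|worker" >&2; return 1 ;;
  esac
  for port in $ports; do
    echo "firewall-cmd --permanent --add-port=${port}"
  done
  # Permanent rules only take effect after a reload.
  echo "firewall-cmd --reload"
}

# Review the printed commands first; pipe them to "sudo sh" to apply them.
k8s_firewall_cmds master
```

Printing rather than executing keeps the sketch safe to try on any machine, including one without firewalld installed.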

2.2 Disable SELinux on all nodes

# Set SELINUX=permissive in /etc/selinux/config

Set SELinux to permissive mode (effectively disabling it)

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

[Screenshot: SELinux disabled]

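2.3 Disable swap on all nodes

# To disable swap permanently, delete or comment out the swap mount entry in /etc/fstab

vim /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

[Screenshot: swap disabled]

Reboot the server after the change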

reboot
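The manual fstab edit above can also be scripted. The following is a sketch, not a tested production script: the function name `disable_swap_in_fstab` is hypothetical, and it takes the file path as a parameter so it can be tried on a scratch copy before touching /etc/fstab. The sample entries in the trial run are made up for illustration.

```shell
#!/usr/bin/env bash
# Comment out every active swap entry in an fstab-style file, keeping a .bak copy.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Match non-comment lines whose third field (filesystem type) is "swap".
  sed -i.bak -E \
    's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' \
    "$fstab"
}

# Trial run on a scratch file with illustrative entries.
tmp="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/centos-root /    xfs  defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$tmp"
disable_swap_in_fstab "$tmp"
cat "$tmp"
```

On a real node, running `sudo swapoff -a` turns swap off for the current boot, while the fstab change keeps it off after the next reboot.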

2.4 Synchronize time on all nodes

yum -y install ntp

systemctl start ntpd

systemctl enable ntpd

2.5 Enable bridge-nf-call-iptables

Run the following commands

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

sudo modprobe overlay

sudo modprobe br_netfilter

Set the required sysctl parameters; they persist across reboots

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF

Apply the sysctl parameters without rebooting

sudo sysctl --system

Confirm that the br_netfilter and overlay modules are loaded by running:

lsmod | grep br_netfilter

lsmod | grep overlay

# Confirm that net.bridge.bridge-nf-call-iptables, net.bridge.bridge-nf-call-ip6tables, and net.ipv4.ip_forward are set to 1 in your sysctl configuration by running:

sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward
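The manual check above can be turned into a pass/fail test, which helps when repeating it on several nodes. This is a small sketch: `all_sysctls_enabled` is a hypothetical helper that reads the `key = value` lines printed by sysctl on stdin and fails if any value is not 1.

```shell
#!/usr/bin/env bash
# Read "key = value" lines on stdin; exit non-zero and name the key
# if any value is not 1.
all_sysctls_enabled() {
  awk -F' *= *' '
    NF == 2 && $2 != "1" { print "not enabled: " $1; bad = 1 }
    END { exit bad + 0 }
  '
}

# On a node:
#   sysctl net.bridge.bridge-nf-call-iptables \
#          net.bridge.bridge-nf-call-ip6tables \
#          net.ipv4.ip_forward | all_sysctls_enabled
# Demonstration with canned input:
printf 'net.ipv4.ip_forward = 1\nnet.bridge.bridge-nf-call-iptables = 1\n' \
  | all_sysctls_enabled && echo "all set"
```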

3. Install containerd on all nodes

3.1 Install containerd

yum install -y yum-utils

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum -y install containerd.io

3.2 Generate the config.toml configuration

containerd config default > /etc/containerd/config.toml

3.3 Configure the systemd cgroup driver

Set the following in /etc/containerd/config.toml

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc] …

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true

The newer way to configure registries is to set only config_path in config.toml and keep the per-registry settings in hosts.toml

sed -i 's|config_path = ""|config_path = "/etc/containerd/certs.d"|g' /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = "/etc/containerd/certs.d"

Change the sandbox_image download address to the Harbor address

sed -i 's|sandbox_image = "registry.k8s.io/pause:3.6"|sandbox_image = "harbor.com/google_containers/pause:3.9"|g' /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri"]

…

sandbox_image = "harbor.com/google_containers/pause:3.9"
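The sed command above matches the literal default `registry.k8s.io/pause:3.6`, and the default tag may differ between containerd releases, in which case the substitution silently does nothing. A sketch of a variant that rewrites whatever value is currently present follows; `set_sandbox_image` is a hypothetical helper, and the sample config line in the trial run is illustrative.

```shell
#!/usr/bin/env bash
# Point sandbox_image at the given image regardless of the existing value.
# Keeps a .bak copy of the config file.
set_sandbox_image() {
  local image="$1" config="${2:-/etc/containerd/config.toml}"
  sed -i.bak -E \
    "s|^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*)\"[^\"]*\"|\1\"${image}\"|" \
    "$config"
}

# Trial run on a scratch file instead of the real config.
tmp="$(mktemp)"
printf '  [plugins."io.containerd.grpc.v1.cri"]\n    sandbox_image = "registry.k8s.io/pause:3.8"\n' > "$tmp"
set_sandbox_image "harbor.com/google_containers/pause:3.9" "$tmp"
grep sandbox_image "$tmp"
```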

Configure the actual Harbor URL in hosts.toml. The default scheme is https; here it is changed to http

mkdir -p /etc/containerd/certs.d/harbor.com

tee /etc/containerd/certs.d/harbor.com/hosts.toml << 'EOF'
server = "http://harbor.com"

[host."http://harbor.com"]
  capabilities = ["pull", "resolve", "push"]
  skip_verify = true
EOF

After making the changes, run:

systemctl daemon-reload

systemctl restart containerd

3.4 Enable start at boot

systemctl enable containerd

Verify that pulling an image succeeds:

ctr images pull --hosts-dir "/etc/containerd/certs.d" harbor.com/google_containers/pause:3.9

4. Configure the Aliyun yum repository for k8s

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name = Kubernetes
baseurl = https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled = 1
gpgcheck = 0
repo_gpgcheck = 0
gpgkey = https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

5. Install kubeadm, kubelet, and kubectl with yum

Install kubeadm, kubelet, and kubectl on every server

5.1 Remove previous versions

Skip this step if they were never installed

yum -y remove kubelet kubeadm kubectl

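5.2 Install kubeadm, kubelet, and kubectl

These instructions apply to Kubernetes 1.28. The Aliyun yum repository only carries kubelet up to version 1.28.0, so the command below must pin the version.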

yum install -y kubelet-1.28.0 kubeadm-1.28.0 kubectl-1.28.0 --disableexcludes=kubernetes

systemctl enable kubelet

6. Initialize the master node

kubeadm init \
  --apiserver-advertise-address=192.168.240.162 \
  --image-repository harbor.com/google_containers \
  --kubernetes-version v1.28.0 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16

Output like the following indicates success

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.240.162:6443 --token xelp6q.zmhxqw2s3ove5iza \

--discovery-token-ca-cert-hash sha256:baf4a716154fc6f59c209312fb681b67e29ebede35767eba1bdf25ac52b6b9e0

Then follow the prompts above and run the commands one by one

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

export KUBECONFIG=/etc/kubernetes/admin.conf

The master node is now visible

kubectl get node

[Screenshot: master node]

7. Join the worker nodes to the master node

kubeadm join 192.168.240.162:6443 --token xelp6q.zmhxqw2s3ove5iza \
  --discovery-token-ca-cert-hash sha256:baf4a716154fc6f59c209312fb681b67e29ebede35767eba1bdf25ac52b6b9e0

[Screenshot: joining the master node]

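A common problem here is that the command hangs. The most likely cause is an expired token; go back to the master node and run

kubeadm token create

to create a new token, then substitute it into the join command and run it again.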
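Substituting a fresh token by hand is easy to get wrong, so a small sketch of assembling the join command may help. `build_join_command` is a hypothetical helper; the token `abcdef.0123456789abcdef` and the hash placeholder are illustrative values, not ones taken from this cluster. It checks the token against the bootstrap token format (six characters, a dot, sixteen characters) before printing anything.

```shell
#!/usr/bin/env bash
# Assemble a "kubeadm join" command line from endpoint, token, and CA hash.
build_join_command() {
  local endpoint="$1" token="$2" ca_hash="$3"
  # Bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}.
  if ! [[ "$token" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]; then
    echo "invalid token format: $token" >&2
    return 1
  fi
  echo "kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash sha256:${ca_hash}"
}

# On the master, kubeadm can also print the complete command itself:
#   kubeadm token create --print-join-command
build_join_command 192.168.240.162:6443 abcdef.0123456789abcdef "<ca-cert-hash>"
```

In practice `kubeadm token create --print-join-command` is usually the simpler route, since it emits the token and the CA certificate hash together.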

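Both the master node and the worker nodes are now visible

kubectl get node

[Screenshot: node information]

8. Deploy the CNI network

The master and worker nodes now exist, but every node is in the NotReady state because no CNI network plugin is installed yet.

8.1 Download the CNI plugins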

wget https://github.com/containernetworking/plugins/releases/download/v1.3.0/cni-plugins-linux-amd64-v1.3.0.tgz

mkdir -pv /opt/cni/bin

tar zxvf cni-plugins-linux-amd64-v1.3.0.tgz -C /opt/cni/bin/

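8.2 Install flannel on the master

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[Screenshot: flannel installed successfully on the master]

Check the node status again

[Screenshot: node status]

All nodes are now Ready. On the master server, run

kubectl get pods -n kube-system

to check the pod status. If it looks like this:

[Screenshot: pod status]

the cluster is ready to use.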
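Since the nodes take a little while to move from NotReady to Ready after flannel starts, the visual check can be replaced by a scripted one. The sketch below assumes the column layout of `kubectl get nodes --no-headers` (name first, status second); `all_nodes_ready` is a hypothetical helper and the sample rows are made up.

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes --no-headers` output on stdin; succeed only if
# at least one node is listed and every STATUS column is exactly "Ready".
all_nodes_ready() {
  awk '
    { seen = 1 }
    $2 != "Ready" { print "not ready: " $1 " (" $2 ")"; bad = 1 }
    END { if (bad || !seen) exit 1 }
  '
}

# On the master:
#   kubectl get nodes --no-headers | all_nodes_ready
# Demonstration with canned rows:
printf 'master Ready control-plane 10m v1.28.0\nnode1 NotReady <none> 2m v1.28.0\n' \
  | all_nodes_ready || echo "cluster not ready yet"
```

A status such as `Ready,SchedulingDisabled` is deliberately treated as not ready here, which is the stricter reading for a freshly built cluster.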

9. Install the dashboard

9.1 Download the recommended.yaml file

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

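Because downloads from the external network are slow, here is the version I currently use. It differs from the original only in the following lines, which expose a node port so the dashboard can be reached directly from outside the cluster.

spec:
  ports:
    - port: 443
      targetPort: 8443
      name: https # not in the original
      nodePort: 32001 # not in the original
  type: NodePort # not in the original

kubectl apply -f [your local path]/recommended.yaml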
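As an alternative to editing the file, the same Service change could probably be applied to an already deployed dashboard with `kubectl patch`. This is an untested sketch under that assumption: `dashboard_nodeport_patch` is a hypothetical helper that only prints the command, and it relies on the default strategic merge patch matching the existing port entry by its `port` value. It also rejects values outside the default NodePort range of 30000-32767.

```shell
#!/usr/bin/env bash
# Print a kubectl command that switches the dashboard Service to NodePort.
dashboard_nodeport_patch() {
  local node_port="$1"
  if ! [[ "$node_port" =~ ^[0-9]+$ ]] || (( node_port < 30000 || node_port > 32767 )); then
    echo "nodePort must be in 30000-32767: $node_port" >&2
    return 1
  fi
  printf 'kubectl -n kubernetes-dashboard patch service kubernetes-dashboard -p %s\n' \
    "'{\"spec\":{\"type\":\"NodePort\",\"ports\":[{\"port\":443,\"nodePort\":${node_port}}]}}'"
}

# Review the printed command before running it on the master.
dashboard_nodeport_patch 32001
```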

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      name: https
      nodePort: 32001
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque

---

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.7.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
            # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.8
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

9.2 Create a sample user

Create dashboard-adminuser.yaml locally

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard

kubectl apply -f [your file path]/dashboard-adminuser.yaml

kubectl -n kubernetes-dashboard create token admin-user

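[Screenshot: generated token]

[Screenshot: entering the token]

[Screenshot: dashboard home page]

The installation is now complete!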
