k8s和deepflow部署与测试

环境详情:当您在上安装登录到主节点并使用在每个节点的/etc/hosts$(-cs部分配置手动修改设置crictl安装一个 Pod 网络插件,例如 Calico 或 Flannel。启动otel-collector和otel-agent需要程序集成API,发送到以DaemonSet运行在每个节点的otel-agent,otel-agent再将数据发送给otel-collector汇总,然后发往可以

Ubuntu-22-LTS部署k8s和deepflow

环境详情:

Static hostname: k8smaster.example.net

Icon name: computer-vm

Chassis: vm

Machine ID: 22349ac6f9ba406293d0541bcba7c05d

Boot ID: 605a74a509724a88940bbbb69cde77f2

Virtualization: vmware

Operating System: Ubuntu 22.04.4 LTS

Kernel: Linux 5.15.0-106-generic

Architecture: x86-64

Hardware Vendor: VMware, Inc.

Hardware Model: VMware Virtual Platform

当您在 Ubuntu 22.04 上安装 Kubernetes 集群时,您可以遵循以下步骤:

-

设置主机名并在 hosts 文件中添加条目:

-

登录到主节点并使用

hostnamectl命令设置主机名:hostnamectl set-hostname "k8smaster.example.net" -

在工作节点上,运行以下命令设置主机名(分别对应第一个和第二个工作节点):

hostnamectl set-hostname "k8sworker1.example.net" # 第一个工作节点 hostnamectl set-hostname "k8sworker2.example.net" # 第二个工作节点 -

在每个节点的

/etc/hosts文件中添加以下条目:10.1.1.70 k8smaster.example.net k8smaster 10.1.1.71 k8sworker1.example.net k8sworker1

-

-

禁用 swap 并添加内核设置:

-

在所有节点上执行以下命令以禁用交换功能:

swapoff -a sed -i '/swap/ s/^\(.*\)$/#\1/g' /etc/fstab -

加载以下内核模块:

tee /etc/modules-load.d/containerd.conf <<EOF overlay br_netfilter EOF modprobe overlay modprobe br_netfilter -

为 Kubernetes 设置以下内核参数:

tee /etc/sysctl.d/kubernetes.conf <<EOF net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 EOF sysctl --system

-

-

安装 containerd 运行时:

-

首先安装 containerd 的依赖项:

apt install -y curl gnupg2 software-properties-common apt-transport-https ca-certificates -

启用 Docker 存储库:

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | apt-key add - add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" -

安装 containerd:

apt update apt install -y containerd.io -

配置 containerd 使用 systemd 作为 cgroup:

containerd config default | tee /etc/containerd/config.toml > /dev/null 2>&1 sed -i 's/SystemdCgroup\\=false/SystemdCgroup\\=true/g' /etc/containerd/config.toml部分配置手动修改

disabled_plugins = [] imports = [] oom_score = 0 plugin_dir = "" required_plugins = [] root = "/var/lib/containerd" state = "/run/containerd" temp = "" version = 2 [cgroup] path = "" [debug] address = "" format = "" gid = 0 level = "" uid = 0 [grpc] address = "/run/containerd/containerd.sock" gid = 0 max_recv_message_size = 16777216 max_send_message_size = 16777216 tcp_address = "" tcp_tls_ca = "" tcp_tls_cert = "" tcp_tls_key = "" uid = 0 [metrics] address = "" grpc_histogram = false [plugins] [plugins."io.containerd.gc.v1.scheduler"] deletion_threshold = 0 mutation_threshold = 100 pause_threshold = 0.02 schedule_delay = "0s" startup_delay = "100ms" [plugins."io.containerd.grpc.v1.cri"] device_ownership_from_security_context = false disable_apparmor = false disable_cgroup = false disable_hugetlb_controller = true disable_proc_mount = false disable_tcp_service = true drain_exec_sync_io_timeout = "0s" enable_selinux = false enable_tls_streaming = false enable_unprivileged_icmp = false enable_unprivileged_ports = false ignore_deprecation_warnings = [] ignore_image_defined_volumes = false max_concurrent_downloads = 3 max_container_log_line_size = 16384 netns_mounts_under_state_dir = false restrict_oom_score_adj = false # 修改以下这行 sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.8" selinux_category_range = 1024 stats_collect_period = 10 stream_idle_timeout = "4h0m0s" stream_server_address = "127.0.0.1" stream_server_port = "0" systemd_cgroup = false tolerate_missing_hugetlb_controller = true unset_seccomp_profile = "" [plugins."io.containerd.grpc.v1.cri".cni] bin_dir = "/opt/cni/bin" conf_dir = "/etc/cni/net.d" conf_template = "" ip_pref = "" max_conf_num = 1 [plugins."io.containerd.grpc.v1.cri".containerd] default_runtime_name = "runc" disable_snapshot_annotations = true discard_unpacked_layers = false ignore_rdt_not_enabled_errors = false no_pivot = false snapshotter = "overlayfs" [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime] base_runtime_spec = "" cni_conf_dir = "" cni_max_conf_num = 0 container_annotations = [] pod_annotations = [] privileged_without_host_devices = false runtime_engine = "" runtime_path = "" runtime_root = "" runtime_type = "" [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options] [plugins."io.containerd.grpc.v1.cri".containerd.runtimes] [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc] base_runtime_spec = "" cni_conf_dir = "" cni_max_conf_num = 0 container_annotations = [] pod_annotations = [] privileged_without_host_devices = false runtime_engine = "" runtime_path = "" runtime_root = "" runtime_type = "io.containerd.runc.v2" [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options] BinaryName = "" CriuImagePath = "" CriuPath = "" CriuWorkPath = "" IoGid = 0 IoUid = 0 NoNewKeyring = false NoPivotRoot = false Root = "" ShimCgroup = "" SystemdCgroup = true [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime] base_runtime_spec = "" cni_conf_dir = "" cni_max_conf_num = 0 container_annotations = [] pod_annotations = [] privileged_without_host_devices = false runtime_engine = "" runtime_path = "" runtime_root = "" runtime_type = "" [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime.options] [plugins."io.containerd.grpc.v1.cri".image_decryption] key_model = "node" [plugins."io.containerd.grpc.v1.cri".registry] config_path = "" [plugins."io.containerd.grpc.v1.cri".registry.auths] [plugins."io.containerd.grpc.v1.cri".registry.configs] [plugins."io.containerd.grpc.v1.cri".registry.headers] [plugins."io.containerd.grpc.v1.cri".registry.mirrors] # 添加如下4行 [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"] endpoint = ["https://docker.mirrors.ustc.edu.cn"] [plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s.gcr.io"] endpoint = ["https://registry.aliyuncs.com/google_containers"] [plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming] tls_cert_file = "" tls_key_file = "" [plugins."io.containerd.internal.v1.opt"] path = "/opt/containerd" [plugins."io.containerd.internal.v1.restart"] interval = "10s" [plugins."io.containerd.internal.v1.tracing"] sampling_ratio = 1.0 service_name = "containerd" [plugins."io.containerd.metadata.v1.bolt"] content_sharing_policy = "shared" [plugins."io.containerd.monitor.v1.cgroups"] no_prometheus = false [plugins."io.containerd.runtime.v1.linux"] no_shim = false runtime = "runc" runtime_root = "" shim = "containerd-shim" shim_debug = false [plugins."io.containerd.runtime.v2.task"] platforms = ["linux/amd64"] sched_core = false [plugins."io.containerd.service.v1.diff-service"] default = ["walking"] [plugins."io.containerd.service.v1.tasks-service"] rdt_config_file = "" [plugins."io.containerd.snapshotter.v1.aufs"] root_path = "" [plugins."io.containerd.snapshotter.v1.btrfs"] root_path = "" [plugins."io.containerd.snapshotter.v1.devmapper"] async_remove = false base_image_size = "" discard_blocks = false fs_options = "" fs_type = "" pool_name = "" root_path = "" [plugins."io.containerd.snapshotter.v1.native"] root_path = "" [plugins."io.containerd.snapshotter.v1.overlayfs"] mount_options = [] root_path = "" sync_remove = false upperdir_label = false [plugins."io.containerd.snapshotter.v1.zfs"] root_path = "" [plugins."io.containerd.tracing.processor.v1.otlp"] endpoint = "" insecure = false protocol = "" [proxy_plugins] [stream_processors] [stream_processors."io.containerd.ocicrypt.decoder.v1.tar"] accepts = ["application/vnd.oci.image.layer.v1.tar+encrypted"] args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"] env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"] path = "ctd-decoder" returns = "application/vnd.oci.image.layer.v1.tar" [stream_processors."io.containerd.ocicrypt.decoder.v1.tar.gzip"] accepts = ["application/vnd.oci.image.layer.v1.tar+gzip+encrypted"] args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"] env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"] path = "ctd-decoder" returns = "application/vnd.oci.image.layer.v1.tar+gzip" [timeouts] "io.containerd.timeout.bolt.open" = "0s" "io.containerd.timeout.shim.cleanup" = "5s" "io.containerd.timeout.shim.load" = "5s" "io.containerd.timeout.shim.shutdown" = "3s" "io.containerd.timeout.task.state" = "2s" [ttrpc] address = "" gid = 0 uid = 0 -

重启并启用容器服务:

systemctl restart containerd systemctl enable containerd -

设置crictl

cat > /etc/crictl.yaml <<EOF runtime-endpoint: unix:///var/run/containerd/containerd.sock image-endpoint: unix:///var/run/containerd/containerd.sock timeout: 10 debug: false pull-image-on-create: false EOF

-

-

添加阿里云的 Kubernetes 源:

-

首先,导入阿里云的 GPG 密钥:

curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add - -

然后,添加阿里云的 Kubernetes 源:

tee /etc/apt/sources.list.d/kubernetes.list <<EOF deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main EOF

-

-

安装 Kubernetes 组件:

-

更新软件包索引并安装 kubelet、kubeadm 和 kubectl:

apt-get update apt-get install -y kubelet kubeadm kubectl -

设置 kubelet 使用 systemd 作为 cgroup 驱动:

# 可忽略 # sed -i 's/cgroup-driver=systemd/cgroup-driver=cgroupfs/g' /var/lib/kubelet/kubeadm-flags.env # systemctl daemon-reload # systemctl restart kubelet

-

-

初始化 Kubernetes 集群:

-

使用 kubeadm 初始化集群,并指定阿里云的镜像仓库:

# kubeadm init --image-repository registry.aliyuncs.com/google_containers I0513 14:16:59.740096 17563 version.go:256] remote version is much newer: v1.30.0; falling back to: stable-1.28 [init] Using Kubernetes version: v1.28.9 [preflight] Running pre-flight checks [preflight] Pulling images required for setting up a Kubernetes cluster [preflight] This might take a minute or two, depending on the speed of your internet connection [preflight] You can also perform this action in beforehand using 'kubeadm config images pull' W0513 14:17:01.440936 17563 checks.go:835] detected that the sandbox image "registry.aliyuncs.com/google_containers/pause:3.8" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.aliyuncs.com/google_containers/pause:3.9" as the CRI sandbox image. [certs] Using certificateDir folder "/etc/kubernetes/pki" [certs] Generating "ca" certificate and key [certs] Generating "apiserver" certificate and key [certs] apiserver serving cert is signed for DNS names [k8smaster.example.net kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc. cluster.local] and IPs [10.96.0.1 10.1.1.70] [certs] Generating "apiserver-kubelet-client" certificate and key [certs] Generating "front-proxy-ca" certificate and key [certs] Generating "front-proxy-client" certificate and key [certs] Generating "etcd/ca" certificate and key [certs] Generating "etcd/server" certificate and key [certs] etcd/server serving cert is signed for DNS names [k8smaster.example.net localhost] and IPs [10.1.1.70 127.0.0.1 ::1] [certs] Generating "etcd/peer" certificate and key [certs] etcd/peer serving cert is signed for DNS names [k8smaster.example.net localhost] and IPs [10.1.1.70 127.0.0.1 ::1] [certs] Generating "etcd/healthcheck-client" certificate and key [certs] Generating "apiserver-etcd-client" certificate and key [certs] Generating "sa" key and public key [kubeconfig] Using kubeconfig folder "/etc/kubernetes" [kubeconfig] Writing "admin.conf" kubeconfig file [kubeconfig] Writing "kubelet.conf" kubeconfig file [kubeconfig] Writing "controller-manager.conf" kubeconfig file [kubeconfig] Writing "scheduler.conf" kubeconfig file [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests" [control-plane] Using manifest folder "/etc/kubernetes/manifests" [control-plane] Creating static Pod manifest for "kube-apiserver" [control-plane] Creating static Pod manifest for "kube-controller-manager" [control-plane] Creating static Pod manifest for "kube-scheduler" [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env" [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml" [kubelet-start] Starting the kubelet [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s [apiclient] All control plane components are healthy after 4.002079 seconds [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace [kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster [upload-certs] Skipping phase. Please see --upload-certs [mark-control-plane] Marking the node k8smaster.example.net as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes. io/exclude-from-external-load-balancers] [mark-control-plane] Marking the node k8smaster.example.net as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule] [bootstrap-token] Using token: m9z4yq.dok89ro6yt23wykr [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials [bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token [bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key [addons] Applied essential addon: CoreDNS [addons] Applied essential addon: kube-proxy Your Kubernetes control-plane has initialized successfully! To start using your cluster, you need to run the following as a regular user: mkdir -p $HOME/.kube sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config Alternatively, if you are the root user, you can run: export KUBECONFIG=/etc/kubernetes/admin.conf You should now deploy a pod network to the cluster. Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at: https://kubernetes.io/docs/concepts/cluster-administration/addons/ Then you can join any number of worker nodes by running the following on each as root: kubeadm join 10.1.1.70:6443 --token m9z4yq.dok89ro6yt23wykr \ --discovery-token-ca-cert-hash sha256:17c3f29bd276592e668e9e6a7a187140a887254b4555cf7d293c3313d7c8a178

-

-

配置 kubectl:

-

为当前用户设置 kubectl 访问:

mkdir -p $HOME/.kube cp -i /etc/kubernetes/admin.conf $HOME/.kube/config chown $(id -u):$(id -g) $HOME/.kube/config

-

-

安装网络插件:

-

安装一个 Pod 网络插件,例如 Calico 或 Flannel。例如,使用 Calico:

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml # 网络插件初始化完毕之后,coredns容器就正常了 kubectl logs -n kube-system -l k8s-app=kube-dns

-

-

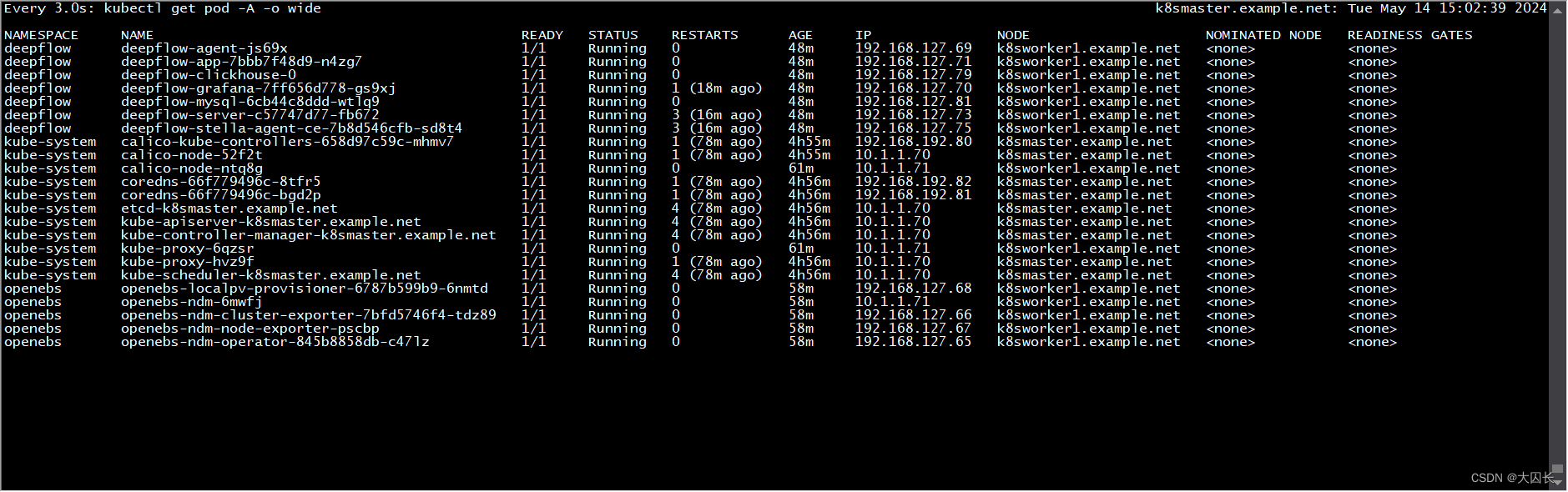

验证集群:

-

启动一个nginx pod:

# vim nginx_pod.yml apiVersion: v1 kind: Pod metadata: name: test-nginx-pod namespace: test labels: app: nginx spec: containers: - name: test-nginx-container image: nginx:latest ports: - containerPort: 80 tolerations: - key: "node-role.kubernetes.io/control-plane" operator: "Exists" effect: "NoSchedule" --- apiVersion: v1 kind: Service # service和pod必须位于同一个namespace metadata: name: nginx-service namespace: test spec: type: NodePort # selector应该匹配pod的labels selector: app: nginx ports: - protocol: TCP port: 80 nodePort: 30007 targetPort: 80启动

kubectl apply -f nginx_pod.yml

-

部署opentelemetry-collector测试

otel-collector和otel-agent需要程序集成API,发送到以DaemonSet运行在每个节点的otel-agent,otel-agent再将数据发送给otel-collector汇总,然后发往可以处理otlp trace数据的后端,如zipkin、jaeger等。

自定义测试yaml文件

apiVersion: v1

kind: ConfigMap

metadata:

name: otel-collector-conf

namespace: default

data:

# 你的配置数据

config.yaml: |

receivers:

otlp:

protocols:

grpc:

http:

processors:

batch:

exporters:

logging:

loglevel: debug

service:

pipelines:

traces:

receivers: [otlp]

processors: [batch]

exporters: [logging]

---

apiVersion: v1

kind: Service

metadata:

name: otel-collector

labels:

app: opentelemetry

spec:

type: NodePort

ports:

- port: 4317

targetPort: 4317

nodePort: 30080

name: otlp-grpc

- port: 8888

targetPort: 8888

name: metrics

selector:

component: otel-collector

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: otel-collector

labels:

app: opentelemetry

spec:

replicas: 1

selector:

matchLabels:

component: otel-collector

template:

metadata:

labels:

component: otel-collector

spec:

tolerations:

- key: node-role.kubernetes.io/control-plane

operator: Exists

effect: NoSchedule

containers:

- name: otel-collector

image: otel/opentelemetry-collector:latest

ports:

- containerPort: 4317

- containerPort: 8888

env:

- name: MY_POD_IP

valueFrom:

fieldRef:

fieldPath: status.podIP

volumeMounts:

- name: otel-collector-config-vol

mountPath: /conf

volumes:

- configMap:

name: otel-collector-conf

name: otel-collector-config-vol

启动

mkdir /conf

kubectl apply -f otel-collector.yaml

kubectl get -f otel-collector.yaml

删除

kubectl delete -f otel-collector.yaml

使用官方提供示例

kubectl apply -f https://raw.githubusercontent.com/open-telemetry/opentelemetry-collector/main/examples/k8s/otel-config.yaml

根据需要修改文件

otel-config.yaml

---

apiVersion: v1

kind: ConfigMap

metadata:

name: otel-agent-conf

labels:

app: opentelemetry

component: otel-agent-conf

data:

otel-agent-config: |

receivers:

otlp:

protocols:

grpc:

endpoint: ${env:MY_POD_IP}:4317

http:

endpoint: ${env:MY_POD_IP}:4318

exporters:

otlp:

endpoint: "otel-collector.default:4317"

tls:

insecure: true

sending_queue:

num_consumers: 4

queue_size: 100

retry_on_failure:

enabled: true

processors:

batch:

memory_limiter:

# 80% of maximum memory up to 2G

limit_mib: 400

# 25% of limit up to 2G

spike_limit_mib: 100

check_interval: 5s

extensions:

zpages: {}

service:

extensions: [zpages]

pipelines:

traces:

receivers: [otlp]

processors: [memory_limiter, batch]

exporters: [otlp]

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: otel-agent

labels:

app: opentelemetry

component: otel-agent

spec:

selector:

matchLabels:

app: opentelemetry

component: otel-agent

template:

metadata:

labels:

app: opentelemetry

component: otel-agent

spec:

tolerations:

- key: node-role.kubernetes.io/control-plane

operator: Exists

effect: NoSchedule

containers:

- command:

- "/otelcol"

- "--config=/conf/otel-agent-config.yaml"

image: otel/opentelemetry-collector:0.94.0

name: otel-agent

resources:

limits:

cpu: 500m

memory: 500Mi

requests:

cpu: 100m

memory: 100Mi

ports:

- containerPort: 55679 # ZPages endpoint.

- containerPort: 4317 # Default OpenTelemetry receiver port.

- containerPort: 8888 # Metrics.

env:

- name: MY_POD_IP

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: status.podIP

- name: GOMEMLIMIT

value: 400MiB

volumeMounts:

- name: otel-agent-config-vol

mountPath: /conf

volumes:

- configMap:

name: otel-agent-conf

items:

- key: otel-agent-config

path: otel-agent-config.yaml

name: otel-agent-config-vol

---

apiVersion: v1

kind: ConfigMap

metadata:

name: otel-collector-conf

labels:

app: opentelemetry

component: otel-collector-conf

data:

otel-collector-config: |

receivers:

otlp:

protocols:

grpc:

endpoint: ${env:MY_POD_IP}:4317

http:

endpoint: ${env:MY_POD_IP}:4318

processors:

batch:

memory_limiter:

# 80% of maximum memory up to 2G

limit_mib: 1500

# 25% of limit up to 2G

spike_limit_mib: 512

check_interval: 5s

extensions:

zpages: {}

exporters:

otlp:

endpoint: "http://someotlp.target.com:4317" # Replace with a real endpoint.

tls:

insecure: true

zipkin:

endpoint: "http://10.1.1.10:9411/api/v2/spans"

format: "proto"

service:

extensions: [zpages]

pipelines:

traces/1:

receivers: [otlp]

processors: [memory_limiter, batch]

exporters: [zipkin]

---

apiVersion: v1

kind: Service

metadata:

name: otel-collector

labels:

app: opentelemetry

component: otel-collector

spec:

ports:

- name: otlp-grpc # Default endpoint for OpenTelemetry gRPC receiver.

port: 4317

protocol: TCP

targetPort: 4317

- name: otlp-http # Default endpoint for OpenTelemetry HTTP receiver.

port: 4318

protocol: TCP

targetPort: 4318

- name: metrics # Default endpoint for querying metrics.

port: 8888

selector:

component: otel-collector

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: otel-collector

labels:

app: opentelemetry

component: otel-collector

spec:

selector:

matchLabels:

app: opentelemetry

component: otel-collector

minReadySeconds: 5

progressDeadlineSeconds: 120

replicas: 1 #TODO - adjust this to your own requirements

template:

metadata:

labels:

app: opentelemetry

component: otel-collector

spec:

tolerations:

- key: node-role.kubernetes.io/control-plane

operator: Exists

effect: NoSchedule

containers:

- command:

- "/otelcol"

- "--config=/conf/otel-collector-config.yaml"

image: otel/opentelemetry-collector:0.94.0

name: otel-collector

resources:

limits:

cpu: 1

memory: 2Gi

requests:

cpu: 200m

memory: 400Mi

ports:

- containerPort: 55679 # Default endpoint for ZPages.

- containerPort: 4317 # Default endpoint for OpenTelemetry receiver.

- containerPort: 14250 # Default endpoint for Jaeger gRPC receiver.

- containerPort: 14268 # Default endpoint for Jaeger HTTP receiver.

- containerPort: 9411 # Default endpoint for Zipkin receiver.

- containerPort: 8888 # Default endpoint for querying metrics.

env:

- name: MY_POD_IP

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: status.podIP

- name: GOMEMLIMIT

value: 1600MiB

volumeMounts:

- name: otel-collector-config-vol

mountPath: /conf

# - name: otel-collector-secrets

# mountPath: /secrets

volumes:

- configMap:

name: otel-collector-conf

items:

- key: otel-collector-config

path: otel-collector-config.yaml

name: otel-collector-config-vol

# - secret:

# name: otel-collector-secrets

# items:

# - key: cert.pem

# path: cert.pem

# - key: key.pem

# path: key.pem

部署deepflow监控单个k8s集群

安装helm

snap install helm --classic

设置pv

kubectl apply -f https://openebs.github.io/charts/openebs-operator.yaml

## config default storage class

kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

部署deepflow

helm repo add deepflow https://deepflowio.github.io/deepflow

helm repo update deepflow # use `helm repo update` when helm < 3.7.0

helm install deepflow -n deepflow deepflow/deepflow --create-namespace

# 显示如下

NAME: deepflow

LAST DEPLOYED: Tue May 14 14:13:50 2024

NAMESPACE: deepflow

STATUS: deployed

REVISION: 1

NOTES:

██████╗ ███████╗███████╗██████╗ ███████╗██╗ ██████╗ ██╗ ██╗

██╔══██╗██╔════╝██╔════╝██╔══██╗██╔════╝██║ ██╔═══██╗██║ ██║

██║ ██║█████╗ █████╗ ██████╔╝█████╗ ██║ ██║ ██║██║ █╗ ██║

██║ ██║██╔══╝ ██╔══╝ ██╔═══╝ ██╔══╝ ██║ ██║ ██║██║███╗██║

██████╔╝███████╗███████╗██║ ██║ ███████╗╚██████╔╝╚███╔███╔╝

╚═════╝ ╚══════╝╚══════╝╚═╝ ╚═╝ ╚══════╝ ╚═════╝ ╚══╝╚══╝

An automated observability platform for cloud-native developers.

# deepflow-agent Port for receiving trace, metrics, and log

deepflow-agent service: deepflow-agent.deepflow

deepflow-agent Host listening port: 38086

# Get the Grafana URL to visit by running these commands in the same shell

NODE_PORT=$(kubectl get --namespace deepflow -o jsonpath="{.spec.ports[0].nodePort}" services deepflow-grafana)

NODE_IP=$(kubectl get nodes -o jsonpath="{.items[0].status.addresses[0].address}")

echo -e "Grafana URL: http://$NODE_IP:$NODE_PORT \nGrafana auth: admin:deepflow"

节点安装deepflow-ctl

curl -o /usr/bin/deepflow-ctl https://deepflow-ce.oss-cn-beijing.aliyuncs.com/bin/ctl/stable/linux/$(arch | sed 's|x86_64|amd64|' | sed 's|aarch64|arm64|')/deepflow-ctl

chmod a+x /usr/bin/deepflow-ctl

访问grafana页面

NODE_PORT=$(kubectl get --namespace deepflow -o jsonpath="{.spec.ports[0].nodePort}" services deepflow-grafana)

NODE_IP=$(kubectl get nodes -o jsonpath="{.items[0].status.addresses[0].address}")

echo -e "Grafana URL: http://$NODE_IP:$NODE_PORT \nGrafana auth: admin:deepflow"

Ubuntu-22-LTS部署k8s和deepflow

环境详情:

Static hostname: k8smaster.example.net

Icon name: computer-vm

Chassis: vm

Machine ID: 22349ac6f9ba406293d0541bcba7c05d

Boot ID: 605a74a509724a88940bbbb69cde77f2

Virtualization: vmware

Operating System: Ubuntu 22.04.4 LTS

Kernel: Linux 5.15.0-106-generic

Architecture: x86-64

Hardware Vendor: VMware, Inc.

Hardware Model: VMware Virtual Platform

当您在 Ubuntu 22.04 上安装 Kubernetes 集群时,您可以遵循以下步骤:

-

设置主机名并在 hosts 文件中添加条目:

-

登录到主节点并使用

hostnamectl命令设置主机名:hostnamectl set-hostname "k8smaster.example.net" -

在工作节点上,运行以下命令设置主机名(分别对应第一个和第二个工作节点):

hostnamectl set-hostname "k8sworker1.example.net" # 第一个工作节点 hostnamectl set-hostname "k8sworker2.example.net" # 第二个工作节点 -

在每个节点的

/etc/hosts文件中添加以下条目:10.1.1.70 k8smaster.example.net k8smaster 10.1.1.71 k8sworker1.example.net k8sworker1

-

-

禁用 swap 并添加内核设置:

-

在所有节点上执行以下命令以禁用交换功能:

swapoff -a sed -i '/swap/ s/^\(.*\)$/#\1/g' /etc/fstab -

加载以下内核模块:

tee /etc/modules-load.d/containerd.conf <<EOF overlay br_netfilter EOF modprobe overlay modprobe br_netfilter -

为 Kubernetes 设置以下内核参数:

tee /etc/sysctl.d/kubernetes.conf <<EOF net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 EOF sysctl --system

-

-

安装 containerd 运行时:

-

首先安装 containerd 的依赖项:

apt install -y curl gnupg2 software-properties-common apt-transport-https ca-certificates -

启用 Docker 存储库:

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | apt-key add - add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" -

安装 containerd:

apt update apt install -y containerd.io -

配置 containerd 使用 systemd 作为 cgroup:

containerd config default | tee /etc/containerd/config.toml > /dev/null 2>&1 sed -i 's/SystemdCgroup\\=false/SystemdCgroup\\=true/g' /etc/containerd/config.toml部分配置手动修改

disabled_plugins = [] imports = [] oom_score = 0 plugin_dir = "" required_plugins = [] root = "/var/lib/containerd" state = "/run/containerd" temp = "" version = 2 [cgroup] path = "" [debug] address = "" format = "" gid = 0 level = "" uid = 0 [grpc] address = "/run/containerd/containerd.sock" gid = 0 max_recv_message_size = 16777216 max_send_message_size = 16777216 tcp_address = "" tcp_tls_ca = "" tcp_tls_cert = "" tcp_tls_key = "" uid = 0 [metrics] address = "" grpc_histogram = false [plugins] [plugins."io.containerd.gc.v1.scheduler"] deletion_threshold = 0 mutation_threshold = 100 pause_threshold = 0.02 schedule_delay = "0s" startup_delay = "100ms" [plugins."io.containerd.grpc.v1.cri"] device_ownership_from_security_context = false disable_apparmor = false disable_cgroup = false disable_hugetlb_controller = true disable_proc_mount = false disable_tcp_service = true drain_exec_sync_io_timeout = "0s" enable_selinux = false enable_tls_streaming = false enable_unprivileged_icmp = false enable_unprivileged_ports = false ignore_deprecation_warnings = [] ignore_image_defined_volumes = false max_concurrent_downloads = 3 max_container_log_line_size = 16384 netns_mounts_under_state_dir = false restrict_oom_score_adj = false # 修改以下这行 sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.8" selinux_category_range = 1024 stats_collect_period = 10 stream_idle_timeout = "4h0m0s" stream_server_address = "127.0.0.1" stream_server_port = "0" systemd_cgroup = false tolerate_missing_hugetlb_controller = true unset_seccomp_profile = "" [plugins."io.containerd.grpc.v1.cri".cni] bin_dir = "/opt/cni/bin" conf_dir = "/etc/cni/net.d" conf_template = "" ip_pref = "" max_conf_num = 1 [plugins."io.containerd.grpc.v1.cri".containerd] default_runtime_name = "runc" disable_snapshot_annotations = true discard_unpacked_layers = false ignore_rdt_not_enabled_errors = false no_pivot = false snapshotter = "overlayfs" [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime] base_runtime_spec = "" cni_conf_dir = "" cni_max_conf_num = 0 container_annotations = [] pod_annotations = [] privileged_without_host_devices = false runtime_engine = "" runtime_path = "" runtime_root = "" runtime_type = "" [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options] [plugins."io.containerd.grpc.v1.cri".containerd.runtimes] [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc] base_runtime_spec = "" cni_conf_dir = "" cni_max_conf_num = 0 container_annotations = [] pod_annotations = [] privileged_without_host_devices = false runtime_engine = "" runtime_path = "" runtime_root = "" runtime_type = "io.containerd.runc.v2" [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options] BinaryName = "" CriuImagePath = "" CriuPath = "" CriuWorkPath = "" IoGid = 0 IoUid = 0 NoNewKeyring = false NoPivotRoot = false Root = "" ShimCgroup = "" SystemdCgroup = true [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime] base_runtime_spec = "" cni_conf_dir = "" cni_max_conf_num = 0 container_annotations = [] pod_annotations = [] privileged_without_host_devices = false runtime_engine = "" runtime_path = "" runtime_root = "" runtime_type = "" [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime.options] [plugins."io.containerd.grpc.v1.cri".image_decryption] key_model = "node" [plugins."io.containerd.grpc.v1.cri".registry] config_path = "" [plugins."io.containerd.grpc.v1.cri".registry.auths] [plugins."io.containerd.grpc.v1.cri".registry.configs] [plugins."io.containerd.grpc.v1.cri".registry.headers] [plugins."io.containerd.grpc.v1.cri".registry.mirrors] # 添加如下4行 [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"] endpoint = ["https://docker.mirrors.ustc.edu.cn"] [plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s.gcr.io"] endpoint = ["https://registry.aliyuncs.com/google_containers"] [plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming] tls_cert_file = "" tls_key_file = "" [plugins."io.containerd.internal.v1.opt"] path = "/opt/containerd" [plugins."io.containerd.internal.v1.restart"] interval = "10s" [plugins."io.containerd.internal.v1.tracing"] sampling_ratio = 1.0 service_name = "containerd" [plugins."io.containerd.metadata.v1.bolt"] content_sharing_policy = "shared" [plugins."io.containerd.monitor.v1.cgroups"] no_prometheus = false [plugins."io.containerd.runtime.v1.linux"] no_shim = false runtime = "runc" runtime_root = "" shim = "containerd-shim" shim_debug = false [plugins."io.containerd.runtime.v2.task"] platforms = ["linux/amd64"] sched_core = false [plugins."io.containerd.service.v1.diff-service"] default = ["walking"] [plugins."io.containerd.service.v1.tasks-service"] rdt_config_file = "" [plugins."io.containerd.snapshotter.v1.aufs"] root_path = "" [plugins."io.containerd.snapshotter.v1.btrfs"] root_path = "" [plugins."io.containerd.snapshotter.v1.devmapper"] async_remove = false base_image_size = "" discard_blocks = false fs_options = "" fs_type = "" pool_name = "" root_path = "" [plugins."io.containerd.snapshotter.v1.native"] root_path = "" [plugins."io.containerd.snapshotter.v1.overlayfs"] mount_options = [] root_path = "" sync_remove = false upperdir_label = false [plugins."io.containerd.snapshotter.v1.zfs"] root_path = "" [plugins."io.containerd.tracing.processor.v1.otlp"] endpoint = "" insecure = false protocol = "" [proxy_plugins] [stream_processors] [stream_processors."io.containerd.ocicrypt.decoder.v1.tar"] accepts = ["application/vnd.oci.image.layer.v1.tar+encrypted"] args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"] env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"] path = "ctd-decoder" returns = "application/vnd.oci.image.layer.v1.tar" [stream_processors."io.containerd.ocicrypt.decoder.v1.tar.gzip"] accepts = ["application/vnd.oci.image.layer.v1.tar+gzip+encrypted"] args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"] env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"] path = "ctd-decoder" returns = "application/vnd.oci.image.layer.v1.tar+gzip" [timeouts] "io.containerd.timeout.bolt.open" = "0s" "io.containerd.timeout.shim.cleanup" = "5s" "io.containerd.timeout.shim.load" = "5s" "io.containerd.timeout.shim.shutdown" = "3s" "io.containerd.timeout.task.state" = "2s" [ttrpc] address = "" gid = 0 uid = 0 -

重启并启用容器服务:

systemctl restart containerd systemctl enable containerd -

设置crictl

cat > /etc/crictl.yaml <<EOF runtime-endpoint: unix:///var/run/containerd/containerd.sock image-endpoint: unix:///var/run/containerd/containerd.sock timeout: 10 debug: false pull-image-on-create: false EOF

-

-

添加阿里云的 Kubernetes 源:

-

首先,导入阿里云的 GPG 密钥:

curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add - -

然后,添加阿里云的 Kubernetes 源:

tee /etc/apt/sources.list.d/kubernetes.list <<EOF deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main EOF

-

-

安装 Kubernetes 组件:

-

更新软件包索引并安装 kubelet、kubeadm 和 kubectl:

apt-get update apt-get install -y kubelet kubeadm kubectl -

设置 kubelet 使用 systemd 作为 cgroup 驱动:

# 可忽略 # sed -i 's/cgroup-driver=systemd/cgroup-driver=cgroupfs/g' /var/lib/kubelet/kubeadm-flags.env # systemctl daemon-reload # systemctl restart kubelet

-

-

初始化 Kubernetes 集群:

-

使用 kubeadm 初始化集群,并指定阿里云的镜像仓库:

# kubeadm init --image-repository registry.aliyuncs.com/google_containers I0513 14:16:59.740096 17563 version.go:256] remote version is much newer: v1.30.0; falling back to: stable-1.28 [init] Using Kubernetes version: v1.28.9 [preflight] Running pre-flight checks [preflight] Pulling images required for setting up a Kubernetes cluster [preflight] This might take a minute or two, depending on the speed of your internet connection [preflight] You can also perform this action in beforehand using 'kubeadm config images pull' W0513 14:17:01.440936 17563 checks.go:835] detected that the sandbox image "registry.aliyuncs.com/google_containers/pause:3.8" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.aliyuncs.com/google_containers/pause:3.9" as the CRI sandbox image. [certs] Using certificateDir folder "/etc/kubernetes/pki" [certs] Generating "ca" certificate and key [certs] Generating "apiserver" certificate and key [certs] apiserver serving cert is signed for DNS names [k8smaster.example.net kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc. cluster.local] and IPs [10.96.0.1 10.1.1.70] [certs] Generating "apiserver-kubelet-client" certificate and key [certs] Generating "front-proxy-ca" certificate and key [certs] Generating "front-proxy-client" certificate and key [certs] Generating "etcd/ca" certificate and key [certs] Generating "etcd/server" certificate and key [certs] etcd/server serving cert is signed for DNS names [k8smaster.example.net localhost] and IPs [10.1.1.70 127.0.0.1 ::1] [certs] Generating "etcd/peer" certificate and key [certs] etcd/peer serving cert is signed for DNS names [k8smaster.example.net localhost] and IPs [10.1.1.70 127.0.0.1 ::1] [certs] Generating "etcd/healthcheck-client" certificate and key [certs] Generating "apiserver-etcd-client" certificate and key [certs] Generating "sa" key and public key [kubeconfig] Using kubeconfig folder "/etc/kubernetes" [kubeconfig] Writing "admin.conf" kubeconfig file [kubeconfig] Writing "kubelet.conf" kubeconfig file [kubeconfig] Writing "controller-manager.conf" kubeconfig file [kubeconfig] Writing "scheduler.conf" kubeconfig file [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests" [control-plane] Using manifest folder "/etc/kubernetes/manifests" [control-plane] Creating static Pod manifest for "kube-apiserver" [control-plane] Creating static Pod manifest for "kube-controller-manager" [control-plane] Creating static Pod manifest for "kube-scheduler" [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env" [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml" [kubelet-start] Starting the kubelet [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s [apiclient] All control plane components are healthy after 4.002079 seconds [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace [kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster [upload-certs] Skipping phase. Please see --upload-certs [mark-control-plane] Marking the node k8smaster.example.net as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes. io/exclude-from-external-load-balancers] [mark-control-plane] Marking the node k8smaster.example.net as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule] [bootstrap-token] Using token: m9z4yq.dok89ro6yt23wykr [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials [bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token [bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key [addons] Applied essential addon: CoreDNS [addons] Applied essential addon: kube-proxy Your Kubernetes control-plane has initialized successfully! To start using your cluster, you need to run the following as a regular user: mkdir -p $HOME/.kube sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config Alternatively, if you are the root user, you can run: export KUBECONFIG=/etc/kubernetes/admin.conf You should now deploy a pod network to the cluster. Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at: https://kubernetes.io/docs/concepts/cluster-administration/addons/ Then you can join any number of worker nodes by running the following on each as root: kubeadm join 10.1.1.70:6443 --token m9z4yq.dok89ro6yt23wykr \ --discovery-token-ca-cert-hash sha256:17c3f29bd276592e668e9e6a7a187140a887254b4555cf7d293c3313d7c8a178

-

-

配置 kubectl:

-

为当前用户设置 kubectl 访问:

mkdir -p $HOME/.kube cp -i /etc/kubernetes/admin.conf $HOME/.kube/config chown $(id -u):$(id -g) $HOME/.kube/config

-

-

安装网络插件:

-

安装一个 Pod 网络插件,例如 Calico 或 Flannel。例如,使用 Calico:

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml # 网络插件初始化完毕之后,coredns容器就正常了 kubectl logs -n kube-system -l k8s-app=kube-dns

-

-

验证集群:

-

启动一个nginx pod:

# vim nginx_pod.yml apiVersion: v1 kind: Pod metadata: name: test-nginx-pod namespace: test labels: app: nginx spec: containers: - name: test-nginx-container image: nginx:latest ports: - containerPort: 80 tolerations: - key: "node-role.kubernetes.io/control-plane" operator: "Exists" effect: "NoSchedule" --- apiVersion: v1 kind: Service # service和pod必须位于同一个namespace metadata: name: nginx-service namespace: test spec: type: NodePort # selector应该匹配pod的labels selector: app: nginx ports: - protocol: TCP port: 80 nodePort: 30007 targetPort: 80启动

kubectl apply -f nginx_pod.yml

-

部署opentelemetry-collector测试

otel-collector和otel-agent需要程序集成API,发送到以DaemonSet运行在每个节点的otel-agent,otel-agent再将数据发送给otel-collector汇总,然后发往可以处理otlp trace数据的后端,如zipkin、jaeger等。

自定义测试yaml文件

apiVersion: v1

kind: ConfigMap

metadata:

name: otel-collector-conf

namespace: default

data:

# 你的配置数据

config.yaml: |

receivers:

otlp:

protocols:

grpc:

http:

processors:

batch:

exporters:

logging:

loglevel: debug

service:

pipelines:

traces:

receivers: [otlp]

processors: [batch]

exporters: [logging]

---

apiVersion: v1

kind: Service

metadata:

name: otel-collector

labels:

app: opentelemetry

spec:

type: NodePort

ports:

- port: 4317

targetPort: 4317

nodePort: 30080

name: otlp-grpc

- port: 8888

targetPort: 8888

name: metrics

selector:

component: otel-collector

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: otel-collector

labels:

app: opentelemetry

spec:

replicas: 1

selector:

matchLabels:

component: otel-collector

template:

metadata:

labels:

component: otel-collector

spec:

tolerations:

- key: node-role.kubernetes.io/control-plane

operator: Exists

effect: NoSchedule

containers:

- name: otel-collector

image: otel/opentelemetry-collector:latest

ports:

- containerPort: 4317

- containerPort: 8888

env:

- name: MY_POD_IP

valueFrom:

fieldRef:

fieldPath: status.podIP

volumeMounts:

- name: otel-collector-config-vol

mountPath: /conf

volumes:

- configMap:

name: otel-collector-conf

name: otel-collector-config-vol

启动

mkdir /conf

kubectl apply -f otel-collector.yaml

kubectl get -f otel-collector.yaml

删除

kubectl delete -f otel-collector.yaml

使用官方提供示例

kubectl apply -f https://raw.githubusercontent.com/open-telemetry/opentelemetry-collector/main/examples/k8s/otel-config.yaml

根据需要修改文件

otel-config.yaml

---

apiVersion: v1

kind: ConfigMap

metadata:

name: otel-agent-conf

labels:

app: opentelemetry

component: otel-agent-conf

data:

otel-agent-config: |

receivers:

otlp:

protocols:

grpc:

endpoint: ${env:MY_POD_IP}:4317

http:

endpoint: ${env:MY_POD_IP}:4318

exporters:

otlp:

endpoint: "otel-collector.default:4317"

tls:

insecure: true

sending_queue:

num_consumers: 4

queue_size: 100

retry_on_failure:

enabled: true

processors:

batch:

memory_limiter:

# 80% of maximum memory up to 2G

limit_mib: 400

# 25% of limit up to 2G

spike_limit_mib: 100

check_interval: 5s

extensions:

zpages: {}

service:

extensions: [zpages]

pipelines:

traces:

receivers: [otlp]

processors: [memory_limiter, batch]

exporters: [otlp]

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: otel-agent

labels:

app: opentelemetry

component: otel-agent

spec:

selector:

matchLabels:

app: opentelemetry

component: otel-agent

template:

metadata:

labels:

app: opentelemetry

component: otel-agent

spec:

tolerations:

- key: node-role.kubernetes.io/control-plane

operator: Exists

effect: NoSchedule

containers:

- command:

- "/otelcol"

- "--config=/conf/otel-agent-config.yaml"

image: otel/opentelemetry-collector:0.94.0

name: otel-agent

resources:

limits:

cpu: 500m

memory: 500Mi

requests:

cpu: 100m

memory: 100Mi

ports:

- containerPort: 55679 # ZPages endpoint.

- containerPort: 4317 # Default OpenTelemetry receiver port.

- containerPort: 8888 # Metrics.

env:

- name: MY_POD_IP

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: status.podIP

- name: GOMEMLIMIT

value: 400MiB

volumeMounts:

- name: otel-agent-config-vol

mountPath: /conf

volumes:

- configMap:

name: otel-agent-conf

items:

- key: otel-agent-config

path: otel-agent-config.yaml

name: otel-agent-config-vol

---

apiVersion: v1

kind: ConfigMap

metadata:

name: otel-collector-conf

labels:

app: opentelemetry

component: otel-collector-conf

data:

otel-collector-config: |

receivers:

otlp:

protocols:

grpc:

endpoint: ${env:MY_POD_IP}:4317

http:

endpoint: ${env:MY_POD_IP}:4318

processors:

batch:

memory_limiter:

# 80% of maximum memory up to 2G

limit_mib: 1500

# 25% of limit up to 2G

spike_limit_mib: 512

check_interval: 5s

extensions:

zpages: {}

exporters:

otlp:

endpoint: "http://someotlp.target.com:4317" # Replace with a real endpoint.

tls:

insecure: true

zipkin:

endpoint: "http://10.1.1.10:9411/api/v2/spans"

format: "proto"

service:

extensions: [zpages]

pipelines:

traces/1:

receivers: [otlp]

processors: [memory_limiter, batch]

exporters: [zipkin]

---

apiVersion: v1

kind: Service

metadata:

name: otel-collector

labels:

app: opentelemetry

component: otel-collector

spec:

ports:

- name: otlp-grpc # Default endpoint for OpenTelemetry gRPC receiver.

port: 4317

protocol: TCP

targetPort: 4317

- name: otlp-http # Default endpoint for OpenTelemetry HTTP receiver.

port: 4318

protocol: TCP

targetPort: 4318

- name: metrics # Default endpoint for querying metrics.

port: 8888

selector:

component: otel-collector

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: otel-collector

labels:

app: opentelemetry

component: otel-collector

spec:

selector:

matchLabels:

app: opentelemetry

component: otel-collector

minReadySeconds: 5

progressDeadlineSeconds: 120

replicas: 1 #TODO - adjust this to your own requirements

template:

metadata:

labels:

app: opentelemetry

component: otel-collector

spec:

tolerations:

- key: node-role.kubernetes.io/control-plane

operator: Exists

effect: NoSchedule

containers:

- command:

- "/otelcol"

- "--config=/conf/otel-collector-config.yaml"

image: otel/opentelemetry-collector:0.94.0

name: otel-collector

resources:

limits:

cpu: 1

memory: 2Gi

requests:

cpu: 200m

memory: 400Mi

ports:

- containerPort: 55679 # Default endpoint for ZPages.

- containerPort: 4317 # Default endpoint for OpenTelemetry receiver.

- containerPort: 14250 # Default endpoint for Jaeger gRPC receiver.

- containerPort: 14268 # Default endpoint for Jaeger HTTP receiver.

- containerPort: 9411 # Default endpoint for Zipkin receiver.

- containerPort: 8888 # Default endpoint for querying metrics.

env:

- name: MY_POD_IP

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: status.podIP

- name: GOMEMLIMIT

value: 1600MiB

volumeMounts:

- name: otel-collector-config-vol

mountPath: /conf

# - name: otel-collector-secrets

# mountPath: /secrets

volumes:

- configMap:

name: otel-collector-conf

items:

- key: otel-collector-config

path: otel-collector-config.yaml

name: otel-collector-config-vol

# - secret:

# name: otel-collector-secrets

# items:

# - key: cert.pem

# path: cert.pem

# - key: key.pem

# path: key.pem

部署deepflow监控单个k8s集群

安装helm

snap install helm --classic

设置pv

kubectl apply -f https://openebs.github.io/charts/openebs-operator.yaml

## config default storage class

kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

部署deepflow

helm repo add deepflow https://deepflowio.github.io/deepflow

helm repo update deepflow # use `helm repo update` when helm < 3.7.0

helm install deepflow -n deepflow deepflow/deepflow --create-namespace

# 显示如下

NAME: deepflow

LAST DEPLOYED: Tue May 14 14:13:50 2024

NAMESPACE: deepflow

STATUS: deployed

REVISION: 1

NOTES:

██████╗ ███████╗███████╗██████╗ ███████╗██╗ ██████╗ ██╗ ██╗

██╔══██╗██╔════╝██╔════╝██╔══██╗██╔════╝██║ ██╔═══██╗██║ ██║

██║ ██║█████╗ █████╗ ██████╔╝█████╗ ██║ ██║ ██║██║ █╗ ██║

██║ ██║██╔══╝ ██╔══╝ ██╔═══╝ ██╔══╝ ██║ ██║ ██║██║███╗██║

██████╔╝███████╗███████╗██║ ██║ ███████╗╚██████╔╝╚███╔███╔╝

╚═════╝ ╚══════╝╚══════╝╚═╝ ╚═╝ ╚══════╝ ╚═════╝ ╚══╝╚══╝

An automated observability platform for cloud-native developers.

# deepflow-agent Port for receiving trace, metrics, and log

deepflow-agent service: deepflow-agent.deepflow

deepflow-agent Host listening port: 38086

# Get the Grafana URL to visit by running these commands in the same shell

NODE_PORT=$(kubectl get --namespace deepflow -o jsonpath="{.spec.ports[0].nodePort}" services deepflow-grafana)

NODE_IP=$(kubectl get nodes -o jsonpath="{.items[0].status.addresses[0].address}")

echo -e "Grafana URL: http://$NODE_IP:$NODE_PORT \nGrafana auth: admin:deepflow"

节点安装deepflow-ctl

curl -o /usr/bin/deepflow-ctl https://deepflow-ce.oss-cn-beijing.aliyuncs.com/bin/ctl/stable/linux/$(arch | sed 's|x86_64|amd64|' | sed 's|aarch64|arm64|')/deepflow-ctl

chmod a+x /usr/bin/deepflow-ctl

访问grafana页面

NODE_PORT=$(kubectl get --namespace deepflow -o jsonpath="{.spec.ports[0].nodePort}" services deepflow-grafana)

NODE_IP=$(kubectl get nodes -o jsonpath="{.items[0].status.addresses[0].address}")

echo -e "Grafana URL: http://$NODE_IP:$NODE_PORT \nGrafana auth: admin:deepflow"

Ubuntu-22-LTS部署k8s和deepflow

环境详情:

Static hostname: k8smaster.example.net

Icon name: computer-vm

Chassis: vm

Machine ID: 22349ac6f9ba406293d0541bcba7c05d

Boot ID: 605a74a509724a88940bbbb69cde77f2

Virtualization: vmware

Operating System: Ubuntu 22.04.4 LTS

Kernel: Linux 5.15.0-106-generic

Architecture: x86-64

Hardware Vendor: VMware, Inc.

Hardware Model: VMware Virtual Platform

当您在 Ubuntu 22.04 上安装 Kubernetes 集群时,您可以遵循以下步骤:

-

设置主机名并在 hosts 文件中添加条目:

-

登录到主节点并使用

hostnamectl命令设置主机名:hostnamectl set-hostname "k8smaster.example.net" -

在工作节点上,运行以下命令设置主机名(分别对应第一个和第二个工作节点):

hostnamectl set-hostname "k8sworker1.example.net" # 第一个工作节点 hostnamectl set-hostname "k8sworker2.example.net" # 第二个工作节点 -

在每个节点的

/etc/hosts文件中添加以下条目:10.1.1.70 k8smaster.example.net k8smaster 10.1.1.71 k8sworker1.example.net k8sworker1

-

-

禁用 swap 并添加内核设置:

-

在所有节点上执行以下命令以禁用交换功能:

swapoff -a sed -i '/swap/ s/^\(.*\)$/#\1/g' /etc/fstab -

加载以下内核模块:

tee /etc/modules-load.d/containerd.conf <<EOF overlay br_netfilter EOF modprobe overlay modprobe br_netfilter -

为 Kubernetes 设置以下内核参数:

tee /etc/sysctl.d/kubernetes.conf <<EOF net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_forward = 1 EOF sysctl --system

-

-

安装 containerd 运行时:

-

首先安装 containerd 的依赖项:

apt install -y curl gnupg2 software-properties-common apt-transport-https ca-certificates -

启用 Docker 存储库:

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | apt-key add - add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" -

安装 containerd:

apt update apt install -y containerd.io -

配置 containerd 使用 systemd 作为 cgroup:

containerd config default | tee /etc/containerd/config.toml > /dev/null 2>&1 sed -i 's/SystemdCgroup\\=false/SystemdCgroup\\=true/g' /etc/containerd/config.toml部分配置手动修改

disabled_plugins = [] imports = [] oom_score = 0 plugin_dir = "" required_plugins = [] root = "/var/lib/containerd" state = "/run/containerd" temp = "" version = 2 [cgroup] path = "" [debug] address = "" format = "" gid = 0 level = "" uid = 0 [grpc] address = "/run/containerd/containerd.sock" gid = 0 max_recv_message_size = 16777216 max_send_message_size = 16777216 tcp_address = "" tcp_tls_ca = "" tcp_tls_cert = "" tcp_tls_key = "" uid = 0 [metrics] address = "" grpc_histogram = false [plugins] [plugins."io.containerd.gc.v1.scheduler"] deletion_threshold = 0 mutation_threshold = 100 pause_threshold = 0.02 schedule_delay = "0s" startup_delay = "100ms" [plugins."io.containerd.grpc.v1.cri"] device_ownership_from_security_context = false disable_apparmor = false disable_cgroup = false disable_hugetlb_controller = true disable_proc_mount = false disable_tcp_service = true drain_exec_sync_io_timeout = "0s" enable_selinux = false enable_tls_streaming = false enable_unprivileged_icmp = false enable_unprivileged_ports = false ignore_deprecation_warnings = [] ignore_image_defined_volumes = false max_concurrent_downloads = 3 max_container_log_line_size = 16384 netns_mounts_under_state_dir = false restrict_oom_score_adj = false # 修改以下这行 sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.8" selinux_category_range = 1024 stats_collect_period = 10 stream_idle_timeout = "4h0m0s" stream_server_address = "127.0.0.1" stream_server_port = "0" systemd_cgroup = false tolerate_missing_hugetlb_controller = true unset_seccomp_profile = "" [plugins."io.containerd.grpc.v1.cri".cni] bin_dir = "/opt/cni/bin" conf_dir = "/etc/cni/net.d" conf_template = "" ip_pref = "" max_conf_num = 1 [plugins."io.containerd.grpc.v1.cri".containerd] default_runtime_name = "runc" disable_snapshot_annotations = true discard_unpacked_layers = false ignore_rdt_not_enabled_errors = false no_pivot = false snapshotter = "overlayfs" [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime] base_runtime_spec = "" cni_conf_dir = "" cni_max_conf_num = 0 container_annotations = [] pod_annotations = [] privileged_without_host_devices = false runtime_engine = "" runtime_path = "" runtime_root = "" runtime_type = "" [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options] [plugins."io.containerd.grpc.v1.cri".containerd.runtimes] [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc] base_runtime_spec = "" cni_conf_dir = "" cni_max_conf_num = 0 container_annotations = [] pod_annotations = [] privileged_without_host_devices = false runtime_engine = "" runtime_path = "" runtime_root = "" runtime_type = "io.containerd.runc.v2" [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options] BinaryName = "" CriuImagePath = "" CriuPath = "" CriuWorkPath = "" IoGid = 0 IoUid = 0 NoNewKeyring = false NoPivotRoot = false Root = "" ShimCgroup = "" SystemdCgroup = true [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime] base_runtime_spec = "" cni_conf_dir = "" cni_max_conf_num = 0 container_annotations = [] pod_annotations = [] privileged_without_host_devices = false runtime_engine = "" runtime_path = "" runtime_root = "" runtime_type = "" [plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime.options] [plugins."io.containerd.grpc.v1.cri".image_decryption] key_model = "node" [plugins."io.containerd.grpc.v1.cri".registry] config_path = "" [plugins."io.containerd.grpc.v1.cri".registry.auths] [plugins."io.containerd.grpc.v1.cri".registry.configs] [plugins."io.containerd.grpc.v1.cri".registry.headers] [plugins."io.containerd.grpc.v1.cri".registry.mirrors] # 添加如下4行 [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"] endpoint = ["https://docker.mirrors.ustc.edu.cn"] [plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s.gcr.io"] endpoint = ["https://registry.aliyuncs.com/google_containers"] [plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming] tls_cert_file = "" tls_key_file = "" [plugins."io.containerd.internal.v1.opt"] path = "/opt/containerd" [plugins."io.containerd.internal.v1.restart"] interval = "10s" [plugins."io.containerd.internal.v1.tracing"] sampling_ratio = 1.0 service_name = "containerd" [plugins."io.containerd.metadata.v1.bolt"] content_sharing_policy = "shared" [plugins."io.containerd.monitor.v1.cgroups"] no_prometheus = false [plugins."io.containerd.runtime.v1.linux"] no_shim = false runtime = "runc" runtime_root = "" shim = "containerd-shim" shim_debug = false [plugins."io.containerd.runtime.v2.task"] platforms = ["linux/amd64"] sched_core = false [plugins."io.containerd.service.v1.diff-service"] default = ["walking"] [plugins."io.containerd.service.v1.tasks-service"] rdt_config_file = "" [plugins."io.containerd.snapshotter.v1.aufs"] root_path = "" [plugins."io.containerd.snapshotter.v1.btrfs"] root_path = "" [plugins."io.containerd.snapshotter.v1.devmapper"] async_remove = false base_image_size = "" discard_blocks = false fs_options = "" fs_type = "" pool_name = "" root_path = "" [plugins."io.containerd.snapshotter.v1.native"] root_path = "" [plugins."io.containerd.snapshotter.v1.overlayfs"] mount_options = [] root_path = "" sync_remove = false upperdir_label = false [plugins."io.containerd.snapshotter.v1.zfs"] root_path = "" [plugins."io.containerd.tracing.processor.v1.otlp"] endpoint = "" insecure = false protocol = "" [proxy_plugins] [stream_processors] [stream_processors."io.containerd.ocicrypt.decoder.v1.tar"] accepts = ["application/vnd.oci.image.layer.v1.tar+encrypted"] args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"] env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"] path = "ctd-decoder" returns = "application/vnd.oci.image.layer.v1.tar" [stream_processors."io.containerd.ocicrypt.decoder.v1.tar.gzip"] accepts = ["application/vnd.oci.image.layer.v1.tar+gzip+encrypted"] args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"] env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"] path = "ctd-decoder" returns = "application/vnd.oci.image.layer.v1.tar+gzip" [timeouts] "io.containerd.timeout.bolt.open" = "0s" "io.containerd.timeout.shim.cleanup" = "5s" "io.containerd.timeout.shim.load" = "5s" "io.containerd.timeout.shim.shutdown" = "3s" "io.containerd.timeout.task.state" = "2s" [ttrpc] address = "" gid = 0 uid = 0 -

重启并启用容器服务:

systemctl restart containerd systemctl enable containerd -

设置crictl

cat > /etc/crictl.yaml <<EOF runtime-endpoint: unix:///var/run/containerd/containerd.sock image-endpoint: unix:///var/run/containerd/containerd.sock timeout: 10 debug: false pull-image-on-create: false EOF

-

-

添加阿里云的 Kubernetes 源:

-

首先,导入阿里云的 GPG 密钥:

curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add - -

然后,添加阿里云的 Kubernetes 源:

tee /etc/apt/sources.list.d/kubernetes.list <<EOF deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main EOF

-

-

安装 Kubernetes 组件:

-

更新软件包索引并安装 kubelet、kubeadm 和 kubectl:

apt-get update apt-get install -y kubelet kubeadm kubectl -

设置 kubelet 使用 systemd 作为 cgroup 驱动:

# 可忽略 # sed -i 's/cgroup-driver=systemd/cgroup-driver=cgroupfs/g' /var/lib/kubelet/kubeadm-flags.env # systemctl daemon-reload # systemctl restart kubelet

-

-

初始化 Kubernetes 集群:

-

使用 kubeadm 初始化集群,并指定阿里云的镜像仓库:

# kubeadm init --image-repository registry.aliyuncs.com/google_containers I0513 14:16:59.740096 17563 version.go:256] remote version is much newer: v1.30.0; falling back to: stable-1.28 [init] Using Kubernetes version: v1.28.9 [preflight] Running pre-flight checks [preflight] Pulling images required for setting up a Kubernetes cluster [preflight] This might take a minute or two, depending on the speed of your internet connection [preflight] You can also perform this action in beforehand using 'kubeadm config images pull' W0513 14:17:01.440936 17563 checks.go:835] detected that the sandbox image "registry.aliyuncs.com/google_containers/pause:3.8" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.aliyuncs.com/google_containers/pause:3.9" as the CRI sandbox image. [certs] Using certificateDir folder "/etc/kubernetes/pki" [certs] Generating "ca" certificate and key [certs] Generating "apiserver" certificate and key [certs] apiserver serving cert is signed for DNS names [k8smaster.example.net kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc. cluster.local] and IPs [10.96.0.1 10.1.1.70] [certs] Generating "apiserver-kubelet-client" certificate and key [certs] Generating "front-proxy-ca" certificate and key [certs] Generating "front-proxy-client" certificate and key [certs] Generating "etcd/ca" certificate and key [certs] Generating "etcd/server" certificate and key [certs] etcd/server serving cert is signed for DNS names [k8smaster.example.net localhost] and IPs [10.1.1.70 127.0.0.1 ::1] [certs] Generating "etcd/peer" certificate and key [certs] etcd/peer serving cert is signed for DNS names [k8smaster.example.net localhost] and IPs [10.1.1.70 127.0.0.1 ::1] [certs] Generating "etcd/healthcheck-client" certificate and key [certs] Generating "apiserver-etcd-client" certificate and key [certs] Generating "sa" key and public key [kubeconfig] Using kubeconfig folder "/etc/kubernetes" [kubeconfig] Writing "admin.conf" kubeconfig file [kubeconfig] Writing "kubelet.conf" kubeconfig file [kubeconfig] Writing "controller-manager.conf" kubeconfig file [kubeconfig] Writing "scheduler.conf" kubeconfig file [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests" [control-plane] Using manifest folder "/etc/kubernetes/manifests" [control-plane] Creating static Pod manifest for "kube-apiserver" [control-plane] Creating static Pod manifest for "kube-controller-manager" [control-plane] Creating static Pod manifest for "kube-scheduler" [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env" [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml" [kubelet-start] Starting the kubelet [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s [apiclient] All control plane components are healthy after 4.002079 seconds [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace [kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster [upload-certs] Skipping phase. Please see --upload-certs [mark-control-plane] Marking the node k8smaster.example.net as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes. io/exclude-from-external-load-balancers] [mark-control-plane] Marking the node k8smaster.example.net as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule] [bootstrap-token] Using token: m9z4yq.dok89ro6yt23wykr [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials [bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token [bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key [addons] Applied essential addon: CoreDNS [addons] Applied essential addon: kube-proxy Your Kubernetes control-plane has initialized successfully! To start using your cluster, you need to run the following as a regular user: mkdir -p $HOME/.kube sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config Alternatively, if you are the root user, you can run: export KUBECONFIG=/etc/kubernetes/admin.conf You should now deploy a pod network to the cluster. Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at: https://kubernetes.io/docs/concepts/cluster-administration/addons/ Then you can join any number of worker nodes by running the following on each as root: kubeadm join 10.1.1.70:6443 --token m9z4yq.dok89ro6yt23wykr \ --discovery-token-ca-cert-hash sha256:17c3f29bd276592e668e9e6a7a187140a887254b4555cf7d293c3313d7c8a178

-

-

配置 kubectl:

-

为当前用户设置 kubectl 访问:

mkdir -p $HOME/.kube cp -i /etc/kubernetes/admin.conf $HOME/.kube/config chown $(id -u):$(id -g) $HOME/.kube/config

-

-

安装网络插件:

-

安装一个 Pod 网络插件,例如 Calico 或 Flannel。例如,使用 Calico:

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml # 网络插件初始化完毕之后,coredns容器就正常了 kubectl logs -n kube-system -l k8s-app=kube-dns

-

-

验证集群:

-

启动一个nginx pod:

# vim nginx_pod.yml apiVersion: v1 kind: Pod metadata: name: test-nginx-pod namespace: test labels: app: nginx spec: containers: - name: test-nginx-container image: nginx:latest ports: - containerPort: 80 tolerations: - key: "node-role.kubernetes.io/control-plane" operator: "Exists" effect: "NoSchedule" --- apiVersion: v1 kind: Service # service和pod必须位于同一个namespace metadata: name: nginx-service namespace: test spec: type: NodePort # selector应该匹配pod的labels selector: app: nginx ports: - protocol: TCP port: 80 nodePort: 30007 targetPort: 80启动

kubectl apply -f nginx_pod.yml

-

部署opentelemetry-collector测试

otel-collector和otel-agent需要程序集成API,发送到以DaemonSet运行在每个节点的otel-agent,otel-agent再将数据发送给otel-collector汇总,然后发往可以处理otlp trace数据的后端,如zipkin、jaeger等。

自定义测试yaml文件

apiVersion: v1

kind: ConfigMap

metadata:

name: otel-collector-conf

namespace: default

data:

# 你的配置数据

config.yaml: |

receivers:

otlp:

protocols:

grpc:

http:

processors:

batch:

exporters:

logging:

loglevel: debug

service:

pipelines:

traces:

receivers: [otlp]

processors: [batch]

exporters: [logging]

---

apiVersion: v1

kind: Service

metadata:

name: otel-collector

labels:

app: opentelemetry

spec:

type: NodePort

ports:

- port: 4317

targetPort: 4317

nodePort: 30080

name: otlp-grpc

- port: 8888

targetPort: 8888

name: metrics

selector:

component: otel-collector

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: otel-collector

labels:

app: opentelemetry

spec:

replicas: 1

selector:

matchLabels:

component: otel-collector

template:

metadata:

labels:

component: otel-collector

spec:

tolerations:

- key: node-role.kubernetes.io/control-plane

operator: Exists

effect: NoSchedule

containers:

- name: otel-collector

image: otel/opentelemetry-collector:latest

ports:

- containerPort: 4317

- containerPort: 8888

env:

- name: MY_POD_IP

valueFrom:

fieldRef:

fieldPath: status.podIP

volumeMounts:

- name: otel-collector-config-vol

mountPath: /conf

volumes:

- configMap:

name: otel-collector-conf

name: otel-collector-config-vol

启动

mkdir /conf

kubectl apply -f otel-collector.yaml

kubectl get -f otel-collector.yaml

删除

kubectl delete -f otel-collector.yaml

使用官方提供示例

kubectl apply -f https://raw.githubusercontent.com/open-telemetry/opentelemetry-collector/main/examples/k8s/otel-config.yaml

根据需要修改文件

otel-config.yaml

---

apiVersion: v1

kind: ConfigMap

metadata:

name: otel-agent-conf

labels:

app: opentelemetry

component: otel-agent-conf

data:

otel-agent-config: |

receivers:

otlp:

protocols:

grpc:

endpoint: ${env:MY_POD_IP}:4317

http:

endpoint: ${env:MY_POD_IP}:4318

exporters:

otlp:

endpoint: "otel-collector.default:4317"

tls:

insecure: true

sending_queue:

num_consumers: 4

queue_size: 100

retry_on_failure:

enabled: true

processors:

batch:

memory_limiter:

# 80% of maximum memory up to 2G

limit_mib: 400

# 25% of limit up to 2G

spike_limit_mib: 100

check_interval: 5s

extensions:

zpages: {}

service:

extensions: [zpages]

pipelines:

traces:

receivers: [otlp]

processors: [memory_limiter, batch]

exporters: [otlp]

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: otel-agent

labels:

app: opentelemetry

component: otel-agent

spec:

selector:

matchLabels:

app: opentelemetry

component: otel-agent

template:

metadata:

labels:

app: opentelemetry

component: otel-agent

spec:

tolerations:

- key: node-role.kubernetes.io/control-plane

operator: Exists

effect: NoSchedule

containers:

- command:

- "/otelcol"

- "--config=/conf/otel-agent-config.yaml"

image: otel/opentelemetry-collector:0.94.0

name: otel-agent

resources:

limits:

cpu: 500m

memory: 500Mi

requests:

cpu: 100m

memory: 100Mi

ports:

- containerPort: 55679 # ZPages endpoint.

- containerPort: 4317 # Default OpenTelemetry receiver port.

- containerPort: 8888 # Metrics.

env:

- name: MY_POD_IP

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: status.podIP

- name: GOMEMLIMIT

value: 400MiB

volumeMounts:

- name: otel-agent-config-vol

mountPath: /conf

volumes:

- configMap:

name: otel-agent-conf

items:

- key: otel-agent-config

path: otel-agent-config.yaml

name: otel-agent-config-vol

---

apiVersion: v1

kind: ConfigMap

metadata:

name: otel-collector-conf

labels:

app: opentelemetry

component: otel-collector-conf

data:

otel-collector-config: |

receivers:

otlp:

protocols:

grpc:

endpoint: ${env:MY_POD_IP}:4317

http:

endpoint: ${env:MY_POD_IP}:4318

processors:

batch:

memory_limiter:

# 80% of maximum memory up to 2G

limit_mib: 1500

# 25% of limit up to 2G

spike_limit_mib: 512

check_interval: 5s

extensions:

zpages: {}

exporters:

otlp:

endpoint: "http://someotlp.target.com:4317" # Replace with a real endpoint.

tls:

insecure: true

zipkin:

endpoint: "http://10.1.1.10:9411/api/v2/spans"

format: "proto"

service:

extensions: [zpages]

pipelines:

traces/1:

receivers: [otlp]

processors: [memory_limiter, batch]

exporters: [zipkin]

---

apiVersion: v1

kind: Service

metadata:

name: otel-collector

labels:

app: opentelemetry

component: otel-collector

spec:

ports:

- name: otlp-grpc # Default endpoint for OpenTelemetry gRPC receiver.

port: 4317

protocol: TCP

targetPort: 4317

- name: otlp-http # Default endpoint for OpenTelemetry HTTP receiver.

port: 4318

protocol: TCP

targetPort: 4318

- name: metrics # Default endpoint for querying metrics.

port: 8888

selector:

component: otel-collector

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: otel-collector

labels:

app: opentelemetry

component: otel-collector

spec:

selector:

matchLabels:

app: opentelemetry

component: otel-collector

minReadySeconds: 5

progressDeadlineSeconds: 120

replicas: 1 #TODO - adjust this to your own requirements

template:

metadata:

labels:

app: opentelemetry

component: otel-collector

spec:

tolerations:

- key: node-role.kubernetes.io/control-plane

operator: Exists

effect: NoSchedule

containers:

- command:

- "/otelcol"

- "--config=/conf/otel-collector-config.yaml"

image: otel/opentelemetry-collector:0.94.0

name: otel-collector

resources:

limits:

cpu: 1

memory: 2Gi

requests:

cpu: 200m

memory: 400Mi

ports:

- containerPort: 55679 # Default endpoint for ZPages.

- containerPort: 4317 # Default endpoint for OpenTelemetry receiver.

- containerPort: 14250 # Default endpoint for Jaeger gRPC receiver.

- containerPort: 14268 # Default endpoint for Jaeger HTTP receiver.

- containerPort: 9411 # Default endpoint for Zipkin receiver.

- containerPort: 8888 # Default endpoint for querying metrics.

env:

- name: MY_POD_IP

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: status.podIP

- name: GOMEMLIMIT

value: 1600MiB

volumeMounts:

- name: otel-collector-config-vol

mountPath: /conf

# - name: otel-collector-secrets

# mountPath: /secrets

volumes:

- configMap:

name: otel-collector-conf

items:

- key: otel-collector-config

path: otel-collector-config.yaml

name: otel-collector-config-vol

# - secret:

# name: otel-collector-secrets

# items:

# - key: cert.pem

# path: cert.pem

# - key: key.pem

# path: key.pem

部署deepflow监控单个k8s集群

安装helm

snap install helm --classic

设置pv

kubectl apply -f https://openebs.github.io/charts/openebs-operator.yaml

## config default storage class

kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

部署deepflow

helm repo add deepflow https://deepflowio.github.io/deepflow

helm repo update deepflow # use `helm repo update` when helm < 3.7.0

helm install deepflow -n deepflow deepflow/deepflow --create-namespace

# 显示如下

NAME: deepflow

LAST DEPLOYED: Tue May 14 14:13:50 2024

NAMESPACE: deepflow

STATUS: deployed

REVISION: 1

NOTES:

██████╗ ███████╗███████╗██████╗ ███████╗██╗ ██████╗ ██╗ ██╗

██╔══██╗██╔════╝██╔════╝██╔══██╗██╔════╝██║ ██╔═══██╗██║ ██║

██║ ██║█████╗ █████╗ ██████╔╝█████╗ ██║ ██║ ██║██║ █╗ ██║

██║ ██║██╔══╝ ██╔══╝ ██╔═══╝ ██╔══╝ ██║ ██║ ██║██║███╗██║

██████╔╝███████╗███████╗██║ ██║ ███████╗╚██████╔╝╚███╔███╔╝

╚═════╝ ╚══════╝╚══════╝╚═╝ ╚═╝ ╚══════╝ ╚═════╝ ╚══╝╚══╝

An automated observability platform for cloud-native developers.

# deepflow-agent Port for receiving trace, metrics, and log

deepflow-agent service: deepflow-agent.deepflow

deepflow-agent Host listening port: 38086

# Get the Grafana URL to visit by running these commands in the same shell

NODE_PORT=$(kubectl get --namespace deepflow -o jsonpath="{.spec.ports[0].nodePort}" services deepflow-grafana)

NODE_IP=$(kubectl get nodes -o jsonpath="{.items[0].status.addresses[0].address}")

echo -e "Grafana URL: http://$NODE_IP:$NODE_PORT \nGrafana auth: admin:deepflow"

节点安装deepflow-ctl

curl -o /usr/bin/deepflow-ctl https://deepflow-ce.oss-cn-beijing.aliyuncs.com/bin/ctl/stable/linux/$(arch | sed 's|x86_64|amd64|' | sed 's|aarch64|arm64|')/deepflow-ctl

chmod a+x /usr/bin/deepflow-ctl

访问grafana页面

NODE_PORT=$(kubectl get --namespace deepflow -o jsonpath="{.spec.ports[0].nodePort}" services deepflow-grafana)