0110.Kubeadm. Installing K8s 1.28

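This article walks through deploying a Kubernetes 1.28 cluster with kubeadm on Rocky Linux, using containerd as the container runtime, and then installing the Dashboard and the k9s tool and registering services with the Consul service registry.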


1. Environment preparation

1. Operating system: Rocky Linux 9.3

2. Kubernetes 1.28

| K8s cluster role | IP | Hostname | Installed components |
|---|---|---|---|
| Control plane | 192.168.8.21 | k8s-master01 | apiserver, controller-manager, scheduler, etcd, kube-proxy, kubelet, container runtime |
| Worker | 192.168.8.22 | k8s-node01 | kube-proxy, calico, coredns, container runtime, kubelet |
| Worker | 192.168.8.23 | k8s-node02 | kube-proxy, calico, coredns, container runtime, kubelet |

2. Environment setup

2.1 Network and hostname configuration of the rocky-k8s-template VM

# rocky-k8s-template

[root@localhost ~]# hostnamectl hostname rocky-k8s-template && bash

[root@rocky-k8s-template ~]#

[root@rocky-k8s-template ~]# systemctl is-active network.service

inactive

[root@rocky-k8s-template ~]# nmcli connection modify ens160 ipv4.addresses 192.168.8.20/24 ipv4.gateway 192.168.8.2 ipv4.dns 8.8.8.8 ipv4.method manual autoconnect yes

[root@rocky-k8s-template ~]# reboot

[root@rocky-k8s-template ~]# ip add show | grep -i ens160

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

inet 192.168.8.20/24 brd 192.168.8.255 scope global noprefixroute ens160

[root@rocky-k8s-template ~]# cat /etc/NetworkManager/system-connections/ens160.nmconnection

[connection]

id=ens160

uuid=435a1f8b-75a9-38c2-ba68-ee4de06d45cb

type=ethernet

autoconnect-priority=-999

interface-name=ens160

timestamp=1705154755

[ethernet]

[ipv4]

address1=192.168.8.20/24,192.168.8.2

dns=8.8.8.8;

method=manual

[ipv6]

addr-gen-mode=eui64

method=auto

[proxy]

[root@rocky-k8s-template ~]#

2.2 Configure the hosts file

[root@rocky-k8s-template ~]# echo "192.168.8.21 k8s-master01

192.168.8.22 k8s-node01

192.168.8.23 k8s-node02 " >> /etc/hosts

[root@rocky-k8s-template ~]# more /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.8.21 k8s-master01

192.168.8.22 k8s-node01

192.168.8.23 k8s-node02

[root@rocky-k8s-template ~]#
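Appending with `echo ... >>` duplicates the entries if this step is ever re-run (for example when the template is rebuilt). A minimal idempotent sketch; the function name `add_host_entry` is hypothetical, and the target file is a parameter so it can be tried on a copy before touching `/etc/hosts`:

```shell
#!/usr/bin/env bash
# Append "IP HOSTNAME" to a hosts file only if that hostname is not already mapped.
# Hypothetical helper; pass /etc/hosts as the file on the real machine.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Look for the hostname as a whole field (fields 2..NF) on non-comment lines.
  if ! awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

On the template it would be called once per node, e.g. `add_host_entry /etc/hosts 192.168.8.21 k8s-master01`; running it a second time leaves the file unchanged.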

2.3 Disable swap (by default the kubelet refuses to start while swap is enabled)

[root@rocky-k8s-template ~]# sed -ri 's/.*swap.*/#&/' /etc/fstab

[root@rocky-k8s-template ~]# more /etc/fstab

#

# /etc/fstab

# Created by anaconda on Thu Jan 11 08:20:26 2024

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/rl-root / xfs defaults 0 0

UUID=dbc0d512-4e18-4e58-b225-07fd8c9f929f /boot xfs defaults 0 0

/dev/mapper/rl-home /home xfs defaults 0 0

#/dev/mapper/rl-swap none swap defaults 0 0

[root@rocky-k8s-template ~]#
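The `sed` edit above only takes effect after the next reboot, and its `.*swap.*` pattern comments out any line that merely mentions swap. A narrower sketch that comments out only the entries whose filesystem type (third field) is `swap`; the helper name is hypothetical, and the commented commands at the end show how swap can be turned off immediately with `swapoff -a`:

```shell
#!/usr/bin/env bash
# Comment out fstab entries whose third field (filesystem type) is "swap".
# Hypothetical helper; run it against /etc/fstab on the real node.
comment_swap_entries() {
  local fstab="$1" tmp
  tmp=$(mktemp) || return 1
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" \
    && cat "$tmp" > "$fstab"   # cat keeps the original file's owner and permissions
  rm -f "$tmp"
}

# On the node itself (not executed here):
#   comment_swap_entries /etc/fstab
#   swapoff -a        # disable all active swap now, without rebooting
#   swapon --show     # prints nothing once no swap is active
```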

2.4 Adjust kernel parameters

[root@rocky-k8s-template ~]# modprobe br_netfilter

[root@rocky-k8s-template ~]# cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

[root@rocky-k8s-template ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

[root@rocky-k8s-template ~]#
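`modprobe br_netfilter` only loads the module for the current boot, and the template is rebooted in 2.5, after which the `net.bridge.*` keys are missing until the module is loaded again. A sketch that makes the module load persistent through systemd's `modules-load.d` directory; also loading `overlay` follows the upstream container-runtime prerequisites, and the function name and its directory parameter are assumptions for illustration:

```shell
#!/usr/bin/env bash
# Write a modules-load.d file so systemd-modules-load loads these modules at every boot.
# Hypothetical helper; the directory defaults to the standard systemd location.
persist_k8s_modules() {
  local dir="${1:-/etc/modules-load.d}"
  mkdir -p "$dir" || return 1
  printf '%s\n' overlay br_netfilter > "$dir/k8s.conf"
}

# On the node itself (not executed here):
#   persist_k8s_modules
#   modprobe overlay && modprobe br_netfilter   # load now, for the current boot
#   sysctl --system                             # re-apply every file under /etc/sysctl.d
```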

2.5 Disable firewalld and SELinux

[root@rocky-k8s-template ~]# systemctl --now disable firewalld

Removed "/etc/systemd/system/multi-user.target.wants/firewalld.service".

Removed "/etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service".

[root@rocky-k8s-template ~]# systemctl is-active firewalld.service

inactive

[root@rocky-k8s-template ~]#

[root@rocky-k8s-template ~]# sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

[root@rocky-k8s-template ~]# reboot

[root@rocky-k8s-template ~]# getenforce

Disabled

[root@rocky-k8s-template ~]#
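The `sed` pattern above only matches a line that is exactly `SELINUX=enforcing`, so a template already set to `permissive` is left unchanged. A sketch that sets the mode whatever its current value is; the helper name is hypothetical, and `setenforce 0` (shown commented) switches a running system to permissive mode without waiting for the reboot:

```shell
#!/usr/bin/env bash
# Set SELINUX=<mode> in an SELinux config file, regardless of the current value.
# Hypothetical helper; on the node the file is /etc/selinux/config.
set_selinux_mode() {
  local cfg="$1" mode="$2"
  case "$mode" in
    enforcing|permissive|disabled) ;;
    *) echo "invalid mode: $mode" >&2; return 1 ;;
  esac
  # "^SELINUX=" does not match the SELINUXTYPE= line.
  sed -i -E "s/^SELINUX=.*/SELINUX=${mode}/" "$cfg"
}

# On the node itself (not executed here):
#   set_selinux_mode /etc/selinux/config disabled
#   setenforce 0   # permissive right away; "disabled" applies after the reboot
```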

2.6 Configure the Aliyun mirrors and install base packages

[root@rocky-k8s-template ~]# sed -e 's|^mirrorlist=|#mirrorlist=|g' \

-e 's|^#baseurl=http://dl.rockylinux.org/$contentdir|baseurl=https://mirrors.aliyun.com/rockylinux|g' \

-i.bak \

/etc/yum.repos.d/rocky-*.repo

[root@rocky-k8s-template ~]# more /etc/yum.repos.d/rocky-addons.repo

[highavailability]

name=Rocky Linux $releasever - High Availability

#mirrorlist=https://mirrors.rockylinux.org/mirrorlist?arch=$basearch&repo=HighAvailability-$releasever$rltype

baseurl=https://mirrors.aliyun.com/rockylinux/$releasever/HighAvailability/$basearch/os/

gpgcheck=1

enabled=0

countme=1

metadata_expire=6h

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-Rocky-9

[highavailability-debug]

name=Rocky Linux $releasever - High Availability - Debug

#mirrorlist=https://mirrors.rockylinux.org/mirrorlist?arch=$basearch&repo=HighAvailability-$releasever-debug$rltype

baseurl=https://mirrors.aliyun.com/rockylinux/$releasever/HighAvailability/$basearch/debug/tree/

gpgcheck=1

enabled=0

metadata_expire=6h

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-Rocky-9

[highavailability-source]

name=Rocky Linux $releasever - High Availability - Source

#mirrorlist=https://mirrors.rockylinux.org/mirrorlist?arch=source&repo=HighAvailability-$releasever-source$rltype

baseurl=https://mirrors.aliyun.com/rockylinux/$releasever/HighAvailability/source/tree/


[root@rocky-k8s-template ~]# dnf makecache

Rocky Linux 9 - BaseOS 2.2 kB/s | 4.1 kB 00:01

Rocky Linux 9 - BaseOS 313 kB/s | 2.2 MB 00:07

Rocky Linux 9 - AppStream 1.3 MB/s | 7.4 MB 00:05

Rocky Linux 9 - Extras 1.8 kB/s | 14 kB 00:07

Metadata cache created.

[root@rocky-k8s-template ~]#

# Install the packages directly (ntp is left out: Rocky Linux 9 no longer ships it, and time sync is handled by chrony in 2.8)

dnf install -y device-mapper-persistent-data lvm2 wget net-tools nfs-utils

dnf install -y lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel

dnf install -y curl curl-devel unzip sudo libaio-devel vim

dnf install -y ncurses-devel autoconf automake zlib-devel python-devel epel-release

dnf install -y openssh-server socat ipvsadm conntrack telnet yum-utils

[root@rocky-k8s-template ~]# dnf config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Adding repo from: http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@rocky-k8s-template ~]#

2.7 Configure the Aliyun repo for the Kubernetes components

[root@rocky-k8s-template ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[root@rocky-k8s-template ~]#
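The `kubernetes-el7-x86_64` path mirrors the legacy Google-hosted package repository, which the Kubernetes project deprecated and froze in September 2023 in favor of the community-owned `pkgs.k8s.io` repositories (one repository per minor version). If the mirror stops serving the packages you need, the sketch below writes the equivalent definition based on the upstream install documentation. The helper name is hypothetical; because of the `exclude=` line, the install in 2.11 then needs `--disableexcludes=kubernetes`:

```shell
#!/usr/bin/env bash
# Write a dnf repo file pointing at the community-owned pkgs.k8s.io repository
# for one Kubernetes minor version (v1.28 here). Hypothetical helper.
write_k8s_repo() {
  local file="${1:-/etc/yum.repos.d/kubernetes.repo}" minor="${2:-v1.28}"
  cat > "$file" <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/${minor}/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/${minor}/rpm/repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF
}

# On the node itself (not executed here):
#   write_k8s_repo
#   dnf install -y kubelet-1.28.1 kubeadm-1.28.1 kubectl-1.28.1 --disableexcludes=kubernetes
```

The `exclude=` line keeps a routine `dnf update` from upgrading the cluster components behind kubeadm's back.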

2.8 Configure time synchronization

[root@rocky-k8s-template ~]# dnf install -y chrony

Docker CE Stable - x86_64 16 kB/s | 33 kB 00:02

Kubernetes 287 B/s | 454 B 00:01

Kubernetes 473 B/s | 2.6 kB 00:05

Importing GPG key 0x13EDEF05:

Userid: "Rapture Automatic Signing Key (cloud-rapture-signing-key-2022-03-07-08_01_01.pub)"

Fingerprint: A362 B822 F6DE DC65 2817 EA46 B53D C80D 13ED EF05

From: https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

Importing GPG key 0xDC6315A3:

Userid: "Artifact Registry Repository Signer <artifact-registry-repository-signer@google.com>"

Fingerprint: 35BA A0B3 3E9E B396 F59C A838 C0BA 5CE6 DC63 15A3

From: https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

Kubernetes 1.1 kB/s | 975 B 00:00

Importing GPG key 0x3E1BA8D5:

Userid: "Google Cloud Packages RPM Signing Key <gc-team@google.com>"

Fingerprint: 3749 E1BA 95A8 6CE0 5454 6ED2 F09C 394C 3E1B A8D5

From: https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Kubernetes 88 kB/s | 182 kB 00:02

Package chrony-4.3-1.el9.x86_64 is already installed.

Dependencies resolved.

Nothing to do.

Complete!

[root@rocky-k8s-template ~]#

[root@rocky-k8s-template ~]# vim /etc/chrony.conf

[root@rocky-k8s-template ~]# head -n 5 /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (https://www.pool.ntp.org/join.html).

server ntp.aliyun.com iburst

# Use NTP servers from DHCP.

[root@rocky-k8s-template ~]#
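Editing `/etc/chrony.conf` does not affect the running daemon; chronyd has to be restarted before it uses the new server. A sketch that makes the edit non-interactively (dropping the default `pool`/`server` lines and adding the Aliyun server) and then restarts and checks chronyd; the helper name is hypothetical:

```shell
#!/usr/bin/env bash
# Point chrony at a single NTP server: drop existing pool/server lines, add one server line.
# Hypothetical helper; on the node the file is /etc/chrony.conf.
set_chrony_server() {
  local cfg="$1" server="$2"
  sed -i -E '/^(pool|server)[[:space:]]/d' "$cfg" || return 1
  printf 'server %s iburst\n' "$server" >> "$cfg"
}

# On the node itself (not executed here):
#   set_chrony_server /etc/chrony.conf ntp.aliyun.com
#   systemctl enable chronyd && systemctl restart chronyd
#   chronyc sources -v   # the currently selected source is marked with '*'
```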

2.9 Install and configure containerd

[root@rocky-k8s-template ~]# dnf install containerd.io-1.6.6 -y

Last metadata expiration check: 0:05:16 ago on Sat 13 Jan 2024 10:59:52 PM.

Dependencies resolved.

===================================================================================================================================

Package Architecture Version Repository Size

===================================================================================================================================

Installing:

containerd.io x86_64 1.6.6-3.1.el9 docker-ce-stable 32 M

Transaction Summary

===================================================================================================================================

Install 1 Package

Total download size: 32 M

Installed size: 125 M

Downloading Packages:

containerd.io-1.6.6-3.1.el9.x86_64.rpm 3.6 MB/s | 32 MB 00:08

-----------------------------------------------------------------------------------------------------------------------------------

Total 3.6 MB/s | 32 MB 00:08

Docker CE Stable - x86_64 2.2 kB/s | 1.6 kB 00:00

Importing GPG key 0x621E9F35:

Userid: "Docker Release (CE rpm) <docker@docker.com>"

Fingerprint: 060A 61C5 1B55 8A7F 742B 77AA C52F EB6B 621E 9F35

From: https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

Key imported successfully

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : containerd.io-1.6.6-3.1.el9.x86_64 1/1

Running scriptlet: containerd.io-1.6.6-3.1.el9.x86_64 1/1

Verifying : containerd.io-1.6.6-3.1.el9.x86_64 1/1

Installed:

containerd.io-1.6.6-3.1.el9.x86_64

Complete!

[root@rocky-k8s-template ~]#

[root@rocky-k8s-template ~]# mkdir -p /etc/containerd

[root@rocky-k8s-template ~]# containerd config default > /etc/containerd/config.toml

[root@rocky-k8s-template ~]# vim /etc/containerd/config.toml

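Change SystemdCgroup = false to SystemdCgroup = true

Change sandbox_image = "k8s.gcr.io/pause:3.6" to sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.7" (kubeadm 1.28 expects pause:3.9 and warns about the mismatch during kubeadm init, so pause:3.9 can be used here instead)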
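The two `vim` edits can also be scripted, which helps when the template is rebuilt. A sketch that applies both with `sed` to the file generated by `containerd config default`; the helper name is hypothetical, and its default pause image is `pause:3.9` (the version kubeadm 1.28 recommends in its `kubeadm init` warning), which can be overridden with the 3.7 image used above:

```shell
#!/usr/bin/env bash
# Enable the systemd cgroup driver for runc and set the CRI sandbox (pause) image
# in a config.toml generated by `containerd config default`.
# Hypothetical helper; on the node the file is /etc/containerd/config.toml.
containerd_set_k8s_opts() {
  local cfg="$1"
  local pause="${2:-registry.aliyuncs.com/google_containers/pause:3.9}"
  # Keep each line's original indentation (captured in \1).
  sed -i -E \
    -e 's/^([[:space:]]*)SystemdCgroup = false/\1SystemdCgroup = true/' \
    -e "s#^([[:space:]]*)sandbox_image = \".*\"#\\1sandbox_image = \"${pause}\"#" \
    "$cfg"
}

# On the node itself (not executed here):
#   containerd_set_k8s_opts /etc/containerd/config.toml
#   systemctl restart containerd
```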

[root@rocky-k8s-template ~]# cat > /etc/crictl.yaml <<EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

EOF

[root@rocky-k8s-template ~]#

[root@rocky-k8s-template ~]# systemctl enable containerd --now

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /usr/lib/systemd/system/containerd.service.

[root@rocky-k8s-template ~]#

[root@rocky-k8s-template ~]# vim /etc/containerd/config.toml

# In the [plugins."io.containerd.grpc.v1.cri".registry] section, set:

config_path = "/etc/containerd/certs.d"

[root@rocky-k8s-template ~]# mkdir /etc/containerd/certs.d/docker.io/ -p

[root@rocky-k8s-template ~]# vim /etc/containerd/certs.d/docker.io/hosts.toml

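# Write the following content (one [host."..."] table per mirror; a single header listing two hosts is not valid TOML):

server = "https://registry-1.docker.io"

[host."https://ws81rcka.mirror.aliyuncs.com"]

capabilities = ["pull", "resolve"]

[host."https://registry.docker-cn.com"]

capabilities = ["pull", "resolve"]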

[root@rocky-k8s-template ~]# systemctl restart containerd

[root@rocky-k8s-template ~]#
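Because `hosts.toml` needs a separate `[host."..."]` table for every mirror, generating it avoids hand-editing mistakes. A sketch that prints the file for an upstream server and any number of mirrors (containerd tries the listed hosts first, in order); the helper name is hypothetical, and only `pull` and `resolve` are granted since pushing to a pull-through mirror is not meaningful:

```shell
#!/usr/bin/env bash
# Print a containerd hosts.toml: one upstream server plus one [host."..."] table per mirror.
# Hypothetical helper.
gen_hosts_toml() {
  local upstream="$1" m
  shift
  printf 'server = "%s"\n' "$upstream"
  for m in "$@"; do
    printf '\n[host."%s"]\n  capabilities = ["pull", "resolve"]\n' "$m"
  done
}

# On the node itself (not executed here):
#   gen_hosts_toml https://registry-1.docker.io https://ws81rcka.mirror.aliyuncs.com \
#     > /etc/containerd/certs.d/docker.io/hosts.toml
#   systemctl restart containerd
```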

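2.10 Install Docker (not required by Kubernetes when containerd is the runtime; useful for building and testing images)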

[root@rocky-k8s-template ~]# dnf install docker-ce -y

Last metadata expiration check: 0:16:58 ago on Sat 13 Jan 2024 10:59:52 PM.

Dependencies resolved.

===================================================================================================================================

Package Architecture Version Repository Size

===================================================================================================================================

Installing:

docker-ce x86_64 3:24.0.7-1.el9 docker-ce-stable 24 M

Installing dependencies:

docker-ce-cli x86_64 1:24.0.7-1.el9 docker-ce-stable 7.1 M

Installing weak dependencies:

docker-buildx-plugin x86_64 0.11.2-1.el9 docker-ce-stable 13 M

docker-ce-rootless-extras x86_64 24.0.7-1.el9 docker-ce-stable 3.9 M

docker-compose-plugin x86_64 2.21.0-1.el9 docker-ce-stable 13 M

Transaction Summary

===================================================================================================================================

Install 5 Packages

Total download size: 60 M

Installed size: 252 M

Downloading Packages:

(1/5): docker-ce-cli-24.0.7-1.el9.x86_64.rpm 786 kB/s | 7.1 MB 00:09

(2/5): docker-buildx-plugin-0.11.2-1.el9.x86_64.rpm 997 kB/s | 13 MB 00:13

(3/5): docker-ce-rootless-extras-24.0.7-1.el9.x86_64.rpm 642 kB/s | 3.9 MB 00:06

(4/5): docker-ce-24.0.7-1.el9.x86_64.rpm 1.1 MB/s | 24 MB 00:20

(5/5): docker-compose-plugin-2.21.0-1.el9.x86_64.rpm 1.6 MB/s | 13 MB 00:08

-----------------------------------------------------------------------------------------------------------------------------------

Total 2.8 MB/s | 60 MB 00:21

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : docker-compose-plugin-2.21.0-1.el9.x86_64 1/5

Running scriptlet: docker-compose-plugin-2.21.0-1.el9.x86_64 1/5

Installing : docker-buildx-plugin-0.11.2-1.el9.x86_64 2/5

Running scriptlet: docker-buildx-plugin-0.11.2-1.el9.x86_64 2/5

Installing : docker-ce-cli-1:24.0.7-1.el9.x86_64 3/5

Running scriptlet: docker-ce-cli-1:24.0.7-1.el9.x86_64 3/5

Installing : docker-ce-rootless-extras-24.0.7-1.el9.x86_64 4/5

Running scriptlet: docker-ce-rootless-extras-24.0.7-1.el9.x86_64 4/5

Installing : docker-ce-3:24.0.7-1.el9.x86_64 5/5

Running scriptlet: docker-ce-3:24.0.7-1.el9.x86_64 5/5

Verifying : docker-buildx-plugin-0.11.2-1.el9.x86_64 1/5

Verifying : docker-ce-3:24.0.7-1.el9.x86_64 2/5

Verifying : docker-ce-cli-1:24.0.7-1.el9.x86_64 3/5

Verifying : docker-ce-rootless-extras-24.0.7-1.el9.x86_64 4/5

Verifying : docker-compose-plugin-2.21.0-1.el9.x86_64 5/5

Installed:

docker-buildx-plugin-0.11.2-1.el9.x86_64 docker-ce-3:24.0.7-1.el9.x86_64 docker-ce-cli-1:24.0.7-1.el9.x86_64

docker-ce-rootless-extras-24.0.7-1.el9.x86_64 docker-compose-plugin-2.21.0-1.el9.x86_64

Complete!

[root@rocky-k8s-template ~]#

[root@rocky-k8s-template ~]# systemctl enable docker --now

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /usr/lib/systemd/system/docker.service.

[root@rocky-k8s-template ~]#

[root@rocky-k8s-template ~]# echo '{

"registry-mirrors":["https://ws81rcka.mirror.aliyuncs.com","https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hub-mirror.c.163.com","http://qtid6917.mirror.aliyuncs.com", "https://rncxm540.mirror.aliyuncs.com"]

}' > /etc/docker/daemon.json

[root@rocky-k8s-template ~]# more /etc/docker/daemon.json

{

"registry-mirrors":["https://ws81rcka.mirror.aliyuncs.com","https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hub-mirror.c.163.com","http://qtid6917.mirror.aliyuncs.com", "https://rncxm540.mirror.aliyuncs.com"]

}

[root@rocky-k8s-template ~]#

[root@rocky-k8s-template ~]# systemctl restart docker
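dockerd refuses to start when `/etc/docker/daemon.json` is not valid JSON, so checking the file before `systemctl restart docker` saves a failed restart. A sketch using Python's built-in `json.tool` module; it assumes `python3` is on the PATH (dnf itself depends on it on Rocky Linux 9), and the helper name is hypothetical:

```shell
#!/usr/bin/env bash
# Return 0 if the file parses as JSON; otherwise non-zero, with Python's error on stderr.
# Hypothetical helper; assumes python3 is installed.
check_json() {
  python3 -m json.tool "$1" > /dev/null
}

# On the node itself (not executed here):
#   check_json /etc/docker/daemon.json && systemctl restart docker
#   docker info | grep -A 7 'Registry Mirrors'   # confirm the mirrors were picked up
```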

2.11 Install the Kubernetes packages (kubelet, kubeadm, kubectl)


[root@rocky-k8s-template ~]# dnf install -y kubelet-1.28.1 kubeadm-1.28.1 kubectl-1.28.1

Last metadata expiration check: 0:02:16 ago on Sat 13 Jan 2024 11:17:45 PM.

Dependencies resolved.

===================================================================================================================================

Package Architecture Version Repository Size

===================================================================================================================================

Installing:

kubeadm x86_64 1.28.1-0 kubernetes 11 M

kubectl x86_64 1.28.1-0 kubernetes 11 M

kubelet x86_64 1.28.1-0 kubernetes 21 M

Installing dependencies:

cri-tools x86_64 1.26.0-0 kubernetes 8.6 M

kubernetes-cni x86_64 1.2.0-0 kubernetes 17 M

Transaction Summary

===================================================================================================================================

Install 5 Packages

Total download size: 68 M

Installed size: 290 M

Downloading Packages:

(1/5): 3f5ba2b53701ac9102ea7c7ab2ca6616a8cd5966591a77577585fde1c434ef74-cri-tools-1.26.0-0.x86_64. 1.0 MB/s | 8.6 MB 00:08

(2/5): 2e9cd5948c00117eb7bb23870ecc7c550c63bebe9d7e96c2879727a96f16d9f3-kubeadm-1.28.1-0.x86_64.rp 906 kB/s | 11 MB 00:12

(3/5): 29fac258a278e5eceae13f53eb196fb17f29c5d4df1f130b79070efdf1d44ded-kubectl-1.28.1-0.x86_64.rp 902 kB/s | 11 MB 00:12

(4/5): e4eb1fb2afc8e3bf3701b8c2d1e1aff5d1b6112c4993f4068d9eb4058c5394a1-kubelet-1.28.1-0.x86_64.rp 2.0 MB/s | 21 MB 00:10

(5/5): 0f2a2afd740d476ad77c508847bad1f559afc2425816c1f2ce4432a62dfe0b9d-kubernetes-cni-1.2.0-0.x86 1.4 MB/s | 17 MB 00:11

-----------------------------------------------------------------------------------------------------------------------------------

Total 2.8 MB/s | 68 MB 00:24

Kubernetes 3.3 kB/s | 2.6 kB 00:00

Importing GPG key 0x13EDEF05:

Userid: "Rapture Automatic Signing Key (cloud-rapture-signing-key-2022-03-07-08_01_01.pub)"

Fingerprint: A362 B822 F6DE DC65 2817 EA46 B53D C80D 13ED EF05

From: https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

Key imported successfully

Importing GPG key 0xDC6315A3:

Userid: "Artifact Registry Repository Signer <artifact-registry-repository-signer@google.com>"

Fingerprint: 35BA A0B3 3E9E B396 F59C A838 C0BA 5CE6 DC63 15A3

From: https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

Key imported successfully

Kubernetes 1.2 kB/s | 975 B 00:00

Importing GPG key 0x3E1BA8D5:

Userid: "Google Cloud Packages RPM Signing Key <gc-team@google.com>"

Fingerprint: 3749 E1BA 95A8 6CE0 5454 6ED2 F09C 394C 3E1B A8D5

From: https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Key imported successfully

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : kubernetes-cni-1.2.0-0.x86_64 1/5

Installing : kubelet-1.28.1-0.x86_64 2/5

Installing : kubectl-1.28.1-0.x86_64 3/5

Installing : cri-tools-1.26.0-0.x86_64 4/5

Installing : kubeadm-1.28.1-0.x86_64 5/5

Running scriptlet: kubeadm-1.28.1-0.x86_64 5/5

Verifying : cri-tools-1.26.0-0.x86_64 1/5

Verifying : kubeadm-1.28.1-0.x86_64 2/5

Verifying : kubectl-1.28.1-0.x86_64 3/5

Verifying : kubelet-1.28.1-0.x86_64 4/5

Verifying : kubernetes-cni-1.2.0-0.x86_64 5/5

Installed:

cri-tools-1.26.0-0.x86_64 kubeadm-1.28.1-0.x86_64 kubectl-1.28.1-0.x86_64 kubelet-1.28.1-0.x86_64 kubernetes-cni-1.2.0-0.x86_64

Complete!

[root@rocky-k8s-template ~]# systemctl enable kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

[root@rocky-k8s-template ~]#

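2.12 Finish the template

Take a snapshot.

Create a linked clone for the k8s-master01 VM.

Create a linked clone for the k8s-node01 VM.

Create a linked clone for the k8s-node02 VM.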

2.13 Customize k8s-master01

1. Change the hostname and IP, and set up passwordless SSH login between hosts

[root@rocky-k8s-template ~]# hostnamectl hostname k8s-master01 && bash

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# nmcli connection modify ens160 ipv4.addresses 192.168.8.21/24 ipv4.gateway 192.168.8.2 ipv4.dns 8.8.8.8 ipv4.method manual autoconnect yes

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa

Your public key has been saved in /root/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:xT2LsFhmujlLh5G9hdby6GrA5nSBSw0NNEIbrKBxU4E root@k8s-master01

The key's randomart image is:

+---[RSA 3072]----+

| o++*+ |

|o E+... . . |

|o+.. + = o o |

|o o oO * . o |

| o .=.S + . |

| * .* * |

| + o* + . |

| ...= |

| .o.. |

+----[SHA256]-----+

[root@k8s-master01 ~]#

[root@k8s-node01 ~]# ssh-copy-id k8s-master01

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'k8s-master01 (192.168.8.21)' can't be established.

ED25519 key fingerprint is SHA256:yiAsdeU/IZl0MnnicAdE9OYKCRLeQ1yUFt1J8yKrAfo.

This host key is known by the following other names/addresses:

~/.ssh/known_hosts:1: k8s-node01

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-master01's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-master01'"

and check to make sure that only the key(s) you wanted were added.

[root@k8s-node01 ~]# reboot

# Take a snapshot

2.14 Customize k8s-node01

1. Change the hostname and IP, and set up passwordless SSH login between hosts

[root@rocky-k8s-template ~]# hostnamectl hostname k8s-node01 && bash

[root@k8s-node01 ~]#

[root@k8s-node01 ~]# nmcli connection modify ens160 ipv4.addresses 192.168.8.22/24 ipv4.gateway 192.168.8.2 ipv4.dns 8.8.8.8 ipv4.method manual autoconnect yes

[root@k8s-node01 ~]#

[root@k8s-node01 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa

Your public key has been saved in /root/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:qhxs57BvQwFGEOj+8tOI6mZcVliW7CZ4w66o9a/zjUQ root@k8s-node01

The key's randomart image is:

+---[RSA 3072]----+

| .o+o . |

|. o= |

|. o.=. |

| o * +. |

|. o = E.S |

| . = ... |

|o B B.+ |

|.O B.Xoo |

|O.o.BB*.. |

+----[SHA256]-----+

[root@k8s-node01 ~]#

[root@k8s-node01 ~]# ssh-copy-id k8s-node01

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'k8s-node01 (192.168.8.22)' can't be established.

ED25519 key fingerprint is SHA256:yiAsdeU/IZl0MnnicAdE9OYKCRLeQ1yUFt1J8yKrAfo.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-node01's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-node01'"

and check to make sure that only the key(s) you wanted were added.

[root@k8s-node01 ~]# reboot

# Take a snapshot

2.15 Customize k8s-node02

1. Change the hostname and IP, and set up passwordless SSH login between hosts

[root@rocky-k8s-template ~]# hostnamectl hostname k8s-node02 && bash

[root@k8s-node02 ~]#

[root@k8s-node02 ~]# nmcli connection modify ens160 ipv4.addresses 192.168.8.23/24 ipv4.gateway 192.168.8.2 ipv4.dns 8.8.8.8 ipv4.method manual autoconnect yes

[root@k8s-node02 ~]#

[root@k8s-node02 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa

Your public key has been saved in /root/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:nRI3t0tgIpB3lhn+U9Gd1pxEsYWoz7jg31uybJEWM74 root@k8s-node02

The key's randomart image is:

+---[RSA 3072]----+

| .. .+ .ooB*|

| ....= ..o+*|

| ..o+ =.o .. |

| . B.*+. |

| S ==o= |

| ...oB. |

| . . ooo. |

| . .oE+ |

| ...=. |

+----[SHA256]-----+

[root@k8s-node02 ~]#

[root@k8s-node02 ~]# ssh-copy-id k8s-node02

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'k8s-node02 (192.168.8.23)' can't be established.

ED25519 key fingerprint is SHA256:yiAsdeU/IZl0MnnicAdE9OYKCRLeQ1yUFt1J8yKrAfo.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-node02's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-node02'"

and check to make sure that only the key(s) you wanted were added.

[root@k8s-node02 ~]# reboot

# Take a snapshot
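In the transcripts above each key is copied to a single host, so root still needs a password to reach the other machines. A sketch that pushes the local key to all three hosts (run it on every node that needs passwordless access, at least k8s-master01). The helper name and the `SSH_COPY_ID` override are hypothetical; the override only exists so the loop can be dry-run with `echo`:

```shell
#!/usr/bin/env bash
# Copy the local root public key to every given host with ssh-copy-id.
# Hypothetical helper; set SSH_COPY_ID=echo to print the targets instead of copying.
distribute_ssh_key() {
  local cmd="${SSH_COPY_ID:-ssh-copy-id}" host rc=0
  for host in "$@"; do
    $cmd "root@${host}" || rc=1   # keep going, but remember any failure
  done
  return "$rc"
}

# On a node (not executed here):
#   distribute_ssh_key k8s-master01 k8s-node01 k8s-node02
```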

2.16 Initialize the Kubernetes cluster with kubeadm

[root@k8s-master01 ~]# kubeadm config print init-defaults > kubeadm.yaml

[root@k8s-master01 ~]# vim kubeadm.yaml

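# Change the following settings

advertiseAddress: 192.168.8.21 # under localAPIEndpoint: the control-plane node's IP

criSocket: unix:///run/containerd/containerd.sock # under nodeRegistration: use containerd as the container runtime

name: k8s-master01 # under nodeRegistration: this node's hostname

imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers

kubernetesVersion: 1.28.1

# Add this under networking:

podSubnet: 10.244.0.0/16

# Append the following configuration at the end of the file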

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

cgroupDriver: systemd
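For reference, the edited `kubeadm.yaml` can be reduced to the fields that matter for this cluster. A sketch that writes it with a heredoc; it assumes the `kubeadm.k8s.io/v1beta3` API that `kubeadm config print init-defaults` prints in 1.28, and leaves every unlisted field (bootstrap token, service subnet, DNS domain) to kubeadm's defaults, so a random bootstrap token is generated instead of `abcdef.0123456789abcdef`. The helper name is hypothetical:

```shell
#!/usr/bin/env bash
# Write a minimal kubeadm config: control plane 192.168.8.21, containerd, IPVS, systemd cgroups.
# Hypothetical helper; unlisted fields fall back to kubeadm defaults.
write_kubeadm_config() {
  local file="${1:-kubeadm.yaml}"
  cat > "$file" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.8.21
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///run/containerd/containerd.sock
  name: k8s-master01
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: 1.28.1
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}

# On k8s-master01 (not executed here):
#   write_kubeadm_config kubeadm.yaml
#   kubeadm config images pull --config kubeadm.yaml   # pre-pull the control-plane images
```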


[root@k8s-master01 ~]# kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification

[init] Using Kubernetes version: v1.28.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

W0114 00:36:01.648821 2721 checks.go:835] detected that the sandbox image "registry.aliyuncs.com/google_containers/pause:3.7" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9" as the CRI sandbox image.

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.8.21]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master01 localhost] and IPs [192.168.8.21 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master01 localhost] and IPs [192.168.8.21 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 16.505449 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.8.21:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:dd52fc0b3dc4caec737282930abd191b92ae7a96003b620b94cafaf3ea8700fa

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# mkdir -p $HOME/.kube

[root@k8s-master01 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master01 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane 18m v1.28.1

[root@k8s-master01 ~]#

2.17 Scale out the cluster: add the first worker node

[root@k8s-master01 ~]# kubeadm token create --print-join-command

kubeadm join 192.168.8.21:6443 --token cb9i30.s9gpcklxol2ewz3j --discovery-token-ca-cert-hash sha256:dd52fc0b3dc4caec737282930abd191b92ae7a96003b620b94cafaf3ea8700fa

[root@k8s-master01 ~]#
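If the join command printed by kubeadm init has been lost, `kubeadm token create --print-join-command` (as above) prints a fresh one. The `--discovery-token-ca-cert-hash` part can also be recomputed by hand. The function below is a minimal sketch that wraps the openssl pipeline given in the kubeadm join documentation; the function name is my own, and it assumes the default CA path /etc/kubernetes/pki/ca.crt and an RSA CA key (kubeadm's default).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SHA-256 hash of the CA certificate's public key
# (SubjectPublicKeyInfo, DER-encoded), i.e. the value kubeadm join expects after "sha256:".
ca_cert_hash() {
  local cert=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # keep only the hex digest after "...(stdin)= "
}

# Usage on k8s-master01:
#   echo "sha256:$(ca_cert_hash)"
```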

[root@k8s-node01 ~]# kubeadm join 192.168.8.21:6443 --token cb9i30.s9gpcklxol2ewz3j --discovery-token-ca-cert-hash sha256:dd52fc0b3dc4caec737282930abd191b92ae7a96003b620b94cafaf3ea8700fa --ignore-preflight-errors=SystemVerification

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@k8s-node01 ~]#

[root@k8s-master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane 22m v1.28.1

k8s-node01 NotReady <none> 68s v1.28.1

[root@k8s-master01 ~]# kubectl label nodes k8s-node01 node-role.kubernetes.io/work=worknode

node/k8s-node01 labeled

[root@k8s-master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane 28m v1.28.1

k8s-node01 NotReady work 6m54s v1.28.1

[root@k8s-master01 ~]#

2.18 Scale out the cluster: add the second worker node

[root@k8s-node02 ~]# kubeadm join 192.168.8.21:6443 --token cb9i30.s9gpcklxol2ewz3j --discovery-token-ca-cert-hash sha256:dd52fc0b3dc4caec737282930abd191b92ae7a96003b620b94cafaf3ea8700fa --ignore-preflight-errors=SystemVerification

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@k8s-master01 ~]# kubectl label nodes k8s-node02 node-role.kubernetes.io/work=worknode

node/k8s-node02 labeled

[root@k8s-master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane 28m v1.28.1

k8s-node01 NotReady work 6m54s v1.28.1

k8s-node02 NotReady work 3m46s v1.28.1

[root@k8s-master01 ~]#

2.19 Install the Kubernetes network plugin Calico

[root@k8s-master01 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6554b8b87f-2zv9c 0/1 Pending 0 36m

coredns-6554b8b87f-9cv9c 0/1 Pending 0 36m

etcd-k8s-master01 1/1 Running 1 (22m ago) 36m

kube-apiserver-k8s-master01 1/1 Running 1 (22m ago) 36m

kube-controller-manager-k8s-master01 1/1 Running 1 (22m ago) 36m

kube-proxy-6d7dr 1/1 Running 0 12m

kube-proxy-br956 1/1 Running 1 (22m ago) 36m

kube-proxy-rdh9g 1/1 Running 0 15m

kube-scheduler-k8s-master01 1/1 Running 1 (22m ago) 36m

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl get ns

NAME STATUS AGE

default Active 11h

kube-node-lease Active 11h

kube-public Active 11h

kube-system Active 11h

[root@k8s-master01 ~]#

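# Official docs: https://docs.tigera.io/calico/latest/getting-started/kubernetes/quickstart

# Note: the transcript below applies both the Tigera operator (tigera-operator.yaml + custom-resources.yaml, Pods in calico-system) and the standalone calico.yaml manifest (Pods in kube-system). Calico documents these as alternative installation methods, so normally only one of them should be used.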

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.27.0/manifests/tigera-operator.yaml

[root@k8s-master01 ~]# kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.27.0/manifests/tigera-operator.yaml

namespace/tigera-operator created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/apiservers.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/imagesets.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/installations.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/tigerastatuses.operator.tigera.io created

serviceaccount/tigera-operator created

clusterrole.rbac.authorization.k8s.io/tigera-operator created

clusterrolebinding.rbac.authorization.k8s.io/tigera-operator created

deployment.apps/tigera-operator created

[root@k8s-master01 ~]# ls

公共 模板 视频 图片 文档 下载 音乐 桌面 anaconda-ks.cfg calico.yaml kubeadm.yaml 'New Folder'

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl get ns

NAME STATUS AGE

default Active 11h

kube-node-lease Active 11h

kube-public Active 11h

kube-system Active 11h

tigera-operator Active 84s

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl get pods -n tigera-operator

NAME READY STATUS RESTARTS AGE

tigera-operator-55585899bf-gq5lq 1/1 Running 0 6m15s

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# wget --no-check-certificate https://raw.githubusercontent.com/projectcalico/calico/v3.27.0/manifests/custom-resources.yaml

--2024-01-14 12:27:14-- https://raw.githubusercontent.com/projectcalico/calico/v3.27.0/manifests/custom-resources.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.109.133, 185.199.110.133, 185.199.108.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.109.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 810 [text/plain]

Saving to: ‘custom-resources.yaml’

custom-resources.yaml 100%[=========================================================>] 810 --.-KB/s in 0s

2024-01-14 12:27:21 (1.61 MB/s) - ‘custom-resources.yaml’ saved [810/810]

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# cp custom-resources.yaml custom-resources.yaml.bak

[root@k8s-master01 ~]# vim custom-resources.yaml

# Change the following line (the manifest's default is cidr: 192.168.0.0/16):

cidr: 10.244.0.0/16 # changed
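The vim edit can also be scripted. This is a minimal sketch, assuming the v3.27.0 custom-resources.yaml in which the IP pool is declared on a single `cidr:` line; the helper name set_calico_cidr is hypothetical. The pod CIDR should match the podSubnet in kubeadm.yaml (assumed here to be 10.244.0.0/16, which is consistent with the 10.244.x.x Pod IPs shown later); Calico's default 192.168.0.0/16 would also overlap the hosts' 192.168.8.0/24 network.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite every "cidr:" line in a Calico custom-resources.yaml
# to the given pod CIDR, keeping the untouched original as <file>.bak.
set_calico_cidr() {
  local file=$1 cidr=$2
  cp -- "$file" "$file.bak"
  # Keep the indentation (and an optional "- ") in front of "cidr:", replace only the value.
  sed -i -E "s#^([[:space:]]*-?[[:space:]]*cidr:[[:space:]]*).*#\1${cidr}#" "$file"
}

# Usage on k8s-master01:
#   set_calico_cidr custom-resources.yaml 10.244.0.0/16
```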

[root@k8s-master01 ~]# kubectl apply -f custom-resources.yaml

installation.operator.tigera.io/default created

apiserver.operator.tigera.io/default created

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl get ns

NAME STATUS AGE

calico-system Active 15s

default Active 11h

kube-node-lease Active 11h

kube-public Active 11h

kube-system Active 11h

tigera-operator Active 51m

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# scp calico.yaml root@192.168.8.22:~/

The authenticity of host '192.168.8.22 (192.168.8.22)' can't be established.

ED25519 key fingerprint is SHA256:yiAsdeU/IZl0MnnicAdE9OYKCRLeQ1yUFt1J8yKrAfo.

This host key is known by the following other names/addresses:

~/.ssh/known_hosts:1: k8s-master01

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.8.22' (ED25519) to the list of known hosts.

root@192.168.8.22's password:

calico.yaml 100% 239KB 26.3MB/s 00:00

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# scp calico.yaml root@192.168.8.23:~/

The authenticity of host '192.168.8.23 (192.168.8.23)' can't be established.

ED25519 key fingerprint is SHA256:yiAsdeU/IZl0MnnicAdE9OYKCRLeQ1yUFt1J8yKrAfo.

This host key is known by the following other names/addresses:

~/.ssh/known_hosts:1: k8s-master01

~/.ssh/known_hosts:4: 192.168.8.22

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.8.23' (ED25519) to the list of known hosts.

root@192.168.8.23's password:

calico.yaml 100% 239KB 29.1MB/s 00:00

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

serviceaccount/calico-cni-plugin created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrole.rbac.authorization.k8s.io/calico-cni-plugin created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

[root@k8s-master01 ~]#

# Note: this step needs a good network connection; otherwise pulling the images can take a long time, so be patient.

[root@k8s-master01 ~]# kubectl get pods -n calico-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-846b7b4574-fs4kk 0/1 ContainerCreating 0 85s

calico-node-gcxdz 0/1 Init:ErrImagePull 0 85s

calico-node-mp7gb 0/1 Init:ImagePullBackOff 0 85s

calico-node-nl4jx 0/1 Init:0/2 0 85s

calico-typha-76977b894c-c2dg2 0/1 ImagePullBackOff 0 85s

calico-typha-76977b894c-tgrmx 0/1 ErrImagePull 0 82s

csi-node-driver-27rll 0/2 ContainerCreating 0 85s

csi-node-driver-4cz4t 0/2 ContainerCreating 0 85s

csi-node-driver-zprng 0/2 ContainerCreating 0 85s

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6554b8b87f-2zv9c 1/1 Running 1 (84m ago) 13h

coredns-6554b8b87f-9cv9c 1/1 Running 1 (84m ago) 13h

etcd-k8s-master01 1/1 Running 3 (84m ago) 13h

kube-apiserver-k8s-master01 1/1 Running 3 (84m ago) 13h

kube-controller-manager-k8s-master01 1/1 Running 4 (84m ago) 13h

kube-proxy-6d7dr 1/1 Running 2 (84m ago) 12h

kube-proxy-br956 1/1 Running 3 (84m ago) 13h

kube-proxy-rdh9g 1/1 Running 2 (84m ago) 12h

kube-scheduler-k8s-master01 1/1 Running 4 (84m ago) 13h

[root@k8s-master01 ~]#

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl get pods -n kube-system -l k8s-app=calico-node --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-node-kkgmc 1/1 Running 0 9m11s

kube-system calico-node-wvds9 1/1 Running 0 9m11s

kube-system calico-node-zqgkw 1/1 Running 0 9m11s

[root@k8s-master01 ~]#

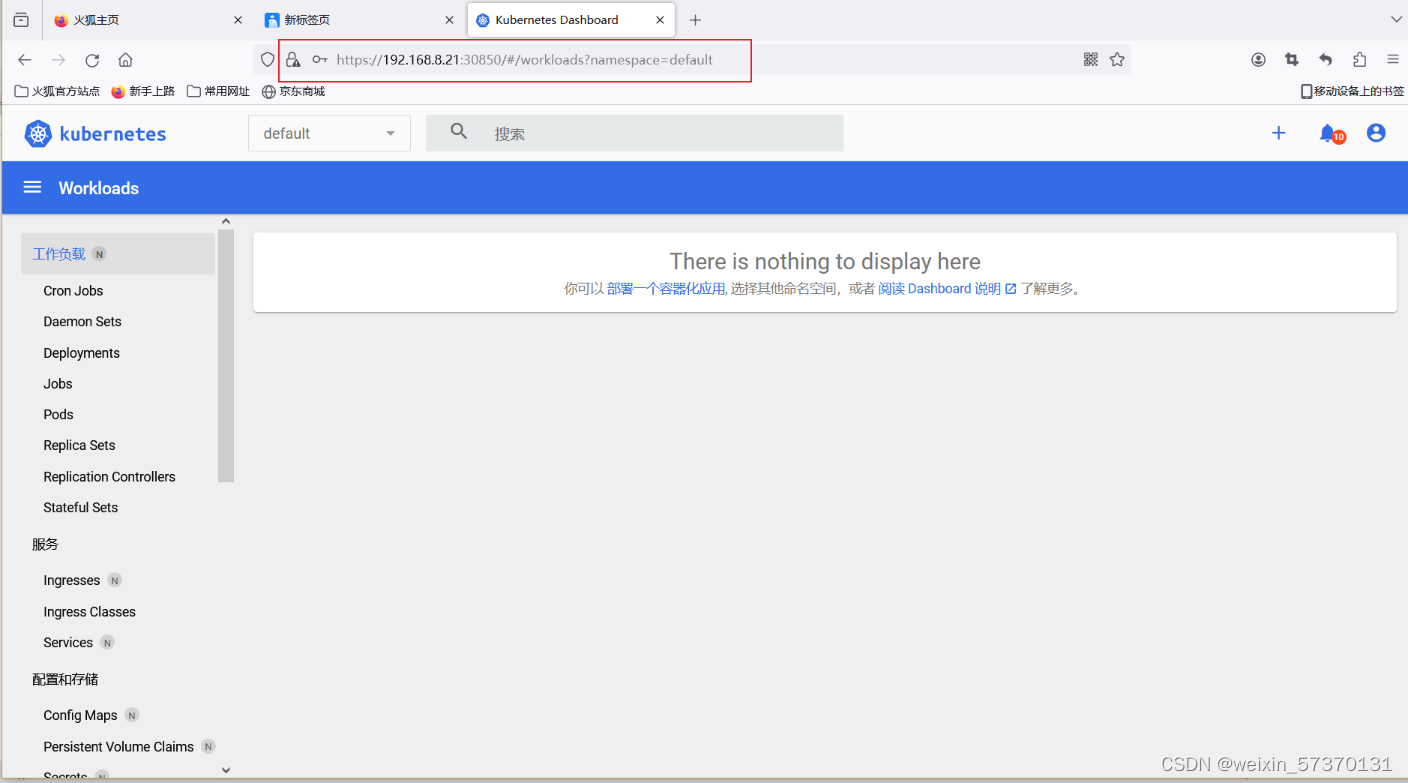
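Once calico-node is Running on every node, the nodes should switch from NotReady to Ready. A small hypothetical helper to check this from a script: it reads `kubectl get nodes --no-headers` output, where the second column is STATUS. It deliberately treats a cordoned node (Ready,SchedulingDisabled) and an empty node list as "not ready".

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if at least one node is listed on stdin
# and every node's STATUS column is exactly "Ready".
all_nodes_ready() {
  awk '$2 != "Ready" { bad = 1 } END { exit (NR == 0 || bad) }'
}

# Usage on k8s-master01:
#   kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"
```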

2.20 Install the Dashboard on the master node

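# My master node could not reach raw.githubusercontent.com (the connection was refused), so I downloaded the manifest on node01 and copied it to the master with scp.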

[root@k8s-master01 ~]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

The connection to the server raw.githubusercontent.com was refused - did you specify the right host or port?

[root@k8s-master01 ~]#

[root@k8s-node01 ~]# wget --no-check-certificate https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

--2024-01-14 13:57:42-- https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.110.133, 185.199.111.133, 185.199.108.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.110.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 7621 (7.4K) [text/plain]

Saving to: ‘recommended.yaml’

recommended.yaml 100%[=========================================================>] 7.44K 3.77KB/s in 2.0s

2024-01-14 13:57:47 (3.77 KB/s) - ‘recommended.yaml’ saved [7621/7621]

[root@k8s-node01 ~]#

[root@k8s-node01 ~]# scp recommended.yaml root@192.168.8.21:~/

recommended.yaml 100% 7621 4.2MB/s 00:00

[root@k8s-node01 ~]#

[root@k8s-master01 ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl get pods -n kubernetes-dashboard -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dashboard-metrics-scraper-5657497c4c-zqqrv 1/1 Running 1 (89s ago) 5m7s 10.244.85.194 k8s-node01 <none> <none>

kubernetes-dashboard-78f87ddfc-rlm7c 1/1 Running 0 5m7s 10.244.58.194 k8s-node02 <none> <none>

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl -n kubernetes-dashboard get service kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard ClusterIP 10.99.254.186 <none> 443/TCP 4m44s

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl -n kubernetes-dashboard edit service kubernetes-dashboard

# Change the line type: ClusterIP to type: NodePort

service/kubernetes-dashboard edited

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl -n kubernetes-dashboard get service kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.97.79.12 <none> 443:31746/TCP 14m

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# curl 10.99.254.115:443

Client sent an HTTP request to an HTTPS server.

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl -n kubernetes-dashboard get service kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.99.254.115 <none> 443:30850/TCP 2m59s

[root@k8s-master01 ~]#
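The curl reply above (Client sent an HTTP request to an HTTPS server) shows that the Dashboard only accepts HTTPS, so in the browser use https://<node IP>:<NodePort>, for example port 30850 from the output above. A hypothetical helper that builds that URL from a node IP and the PORT(S) column; the jsonpath query in the comment is a standard kubectl way to read the port directly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a node IP and a PORT(S) value such as "443:30850/TCP"
# into the Dashboard URL. The scheme is https because the Dashboard serves TLS only.
dashboard_url() {
  local node_ip=$1 ports=$2 node_port
  node_port=${ports#*:}        # 443:30850/TCP -> 30850/TCP
  node_port=${node_port%%/*}   # 30850/TCP     -> 30850
  printf 'https://%s:%s/\n' "$node_ip" "$node_port"
}

# The NodePort can also be read directly on k8s-master01:
#   kubectl -n kubernetes-dashboard get service kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}'
# Usage:
#   dashboard_url 192.168.8.21 443:30850/TCP
```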

[root@k8s-master01 ~]# kubectl create serviceaccount dashboard-admin -n kubernetes-dashboard

serviceaccount/dashboard-admin created

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl create clusterrolebinding dashboard-admin-rb --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin-rb created

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl get secrets -n kubernetes-dashboard | grep dashboard-admin

[root@k8s-master01 ~]#


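No token Secret is listed (since Kubernetes 1.24 a ServiceAccount no longer gets a long-lived token Secret automatically), so create one with the following method: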

[root@k8s-master01 ~]# echo 'apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin-sa
  namespace: kubernetes-dashboard' >> dashboard-admin-sa.yaml

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl apply -f dashboard-admin-sa.yaml

serviceaccount/dashboard-admin-sa created

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# more dashboard-admin-sa.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin-sa
  namespace: kubernetes-dashboard

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# echo 'apiVersion: v1
kind: Secret
metadata:
  name: dashboard-admin-token
  namespace: kubernetes-dashboard
type: kubernetes.io/service-account-token' >> dashboard-admin-token.yaml

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubectl apply -f dashboard-admin-token.yaml

The Secret "dashboard-admin-token" is invalid: metadata.annotations[kubernetes.io/service-account.name]: Required value

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# more dashboard-admin-token.yaml

apiVersion: v1
kind: Secret
metadata:
  name: dashboard-admin-token
  namespace: kubernetes-dashboard
type: kubernetes.io/service-account-token

[root@k8s-master01 ~]#

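[root@k8s-master01 ~]# vim dashboard-admin-token.yaml

# Under metadata, add the annotation the error message asks for, naming the ServiceAccount:
#   annotations:
#     kubernetes.io/service-account.name: dashboard-admin-sa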

[root@k8s-master01 ~]# kubectl apply -f dashboard-admin-token.yaml

secret/dashboard-admin-token created
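Note that the clusterrolebinding created earlier (dashboard-admin-rb) grants cluster-admin to the ServiceAccount dashboard-admin, while the token stored in this Secret belongs to dashboard-admin-sa, and the transcript shows no binding for that account. Logging in with it would therefore most likely give a Dashboard without permissions. A minimal sketch of a complete manifest covering both points follows; the file name dashboard-admin-sa-token.yaml and the binding name dashboard-admin-sa-rb are hypothetical choices. On Kubernetes 1.24 and later, `kubectl create token dashboard-admin -n kubernetes-dashboard` is a simpler alternative that prints a short-lived token for the already-bound dashboard-admin account.

```shell
#!/usr/bin/env bash
# Sketch: write the annotated token Secret plus a ClusterRoleBinding for
# dashboard-admin-sa into one manifest file (names are hypothetical).
cat > dashboard-admin-sa-token.yaml <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: dashboard-admin-token
  namespace: kubernetes-dashboard
  annotations:
    # Required for this Secret type: the ServiceAccount the token is issued for.
    kubernetes.io/service-account.name: dashboard-admin-sa
type: kubernetes.io/service-account-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin-sa-rb
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin-sa
  namespace: kubernetes-dashboard
EOF

# Then, on k8s-master01:
#   kubectl apply -f dashboard-admin-sa-token.yaml
```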

[root@k8s-master01 ~]# TOKEN=$(kubectl get secret dashboard-admin-token -n kubernetes-dashboard -o jsonpath="{.data.token}" | base64 --decode)

echo $TOKEN

eyJhbGciOiJSUzI1NiIsImtpZCI6IkpyT29ObFhKUzNiVF9tMTBMcF81WE52QUhjYVpPWGQwTnk0UFFVeUZDWDAifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluLXNhIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiN2U4MjQ0ZjYtZGJjNC00ZTJjLTkzNjctMTJlNDRmOWZhYTBjIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbi1zYSJ9.NkUajc7zmilZkQbjFCzO_3XyfcooB9xeQz64VwI7qwne7ZNmHrrvM8CTstoezFFYgpbHYAzfKpnPIcRi46910FG02KVhxIDE8D9jZI3syOdchVox3iMoTgVp3nMPQV1ILIdRiS7sheEFsTJEkIznRdCkaPajC7NmdIIRce5X77d86Iye6FTOIFNkATnViaELk11TzeU288PEMjfc8WT8yjUc75pWBqCxADgyZU1jCyXfJcR5lRMMQCmc89kpbAL2uMSP44buFLX6t4jGELBIlqlJQ7wqrgBzKmFuEmbf0X2SbylkYM46n7pq8eBttOyg_7SMz52wj4weWru3pZ78w

[root@k8s-master01 ~]#
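To double-check which ServiceAccount a token belongs to, its payload (the middle part of the JWT, base64url-encoded without padding) can be decoded locally. The helper below is a hypothetical convenience, not a kubectl feature. For the token above, the `sub` claim should read system:serviceaccount:kubernetes-dashboard:dashboard-admin-sa.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload of a JWT (header.payload.signature).
jwt_payload() {
  local p
  # Take the payload part and map the base64url alphabet back to standard base64.
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the '=' padding; restore it so base64 -d accepts the input.
  case $(( ${#p} % 4 )) in
    2) p="${p}==" ;;
    3) p="${p}=" ;;
  esac
  printf '%s' "$p" | base64 -d
  echo
}

# Usage on k8s-master01:
#   jwt_payload "$TOKEN"
```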

2.21 Deploy the Consul service registry

2.21.1 Pull the image first (on all three nodes)

[root@k8s-master01 ~]# crictl pull docker.io/library/consul:1.11.1

[root@k8s-master01 ~]# crictl images

[root@k8s-node01 ~]# crictl pull docker.io/library/consul:1.11.1

[root@k8s-node01 ~]# crictl images

[root@k8s-node02 ~]# crictl pull docker.io/library/consul:1.11.1

[root@k8s-node02 ~]# crictl images

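2.21.2 Create the required directories (on all three nodes)

Note: the manifests below mount a ConfigMap and an emptyDir (or a PVC) at /consul/userconfig and /consul/data inside the containers rather than hostPath volumes, so these host directories are not actually used by the Pods.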

[root@k8s-master01 ~]# mkdir -p /consul/userconfig

[root@k8s-master01 ~]# mkdir -p /consul/data

[root@k8s-node01 ~]# mkdir -p /consul/userconfig

[root@k8s-node01 ~]# mkdir -p /consul/data

[root@k8s-node02 ~]# mkdir -p /consul/userconfig

[root@k8s-node02 ~]# mkdir -p /consul/data

2.21.3 Create the namespace

[root@k8s-master01 ~]# kubectl create namespace public-service

2.21.4 Create the YAML files

Reference: https://blog.csdn.net/WuLex/article/details/127738998

# On k8s-master01: vim public-service-ns.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: public-service

# On k8s-master01: vim consul-client.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: consul-client-config
  namespace: public-service
data:
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: consul
  namespace: public-service
spec:
  selector:
    matchLabels:
      app: consul
      component: client
  template:
    metadata:
      labels:
        app: consul
        component: client
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: "component"
                operator: In
                values:
                - client
            topologyKey: "kubernetes.io/hostname"
      terminationGracePeriodSeconds: 10
      containers:
      - name: consul
        image: consul:1.11.1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8500
          name: http
        - containerPort: 8600
          name: dns-tcp
          protocol: TCP
        - containerPort: 8600
          name: dns-udp
          protocol: UDP
        - containerPort: 8301
          name: serflan
        - containerPort: 8302
          name: serfwan
        - containerPort: 8300
          name: server
        env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        args:
        - "agent"
        - "-advertise=$(POD_IP)"
        - "-bind=0.0.0.0"
        - "-datacenter=dc1"
        - "-config-dir=/consul/userconfig"
        - "-data-dir=/consul/data"
        - "-disable-host-node-id=true"
        - "-domain=cluster.local"
        - "-retry-join=consul-server-0.consul-server.$(NAMESPACE).svc.cluster.local"
        - "-retry-join=consul-server-1.consul-server.$(NAMESPACE).svc.cluster.local"
        - "-retry-join=consul-server-2.consul-server.$(NAMESPACE).svc.cluster.local"
        - "-client=0.0.0.0"
        resources:
          limits:
            cpu: "50m"
            memory: "32Mi"
          requests:
            cpu: "50m"
            memory: "32Mi"
        lifecycle:
          preStop:
            exec:
              command:
              - /bin/sh
              - -c
              - consul leave
        volumeMounts:
        - name: data
          mountPath: /consul/data
        - name: user-config
          mountPath: /consul/userconfig
      volumes:
      - name: user-config
        configMap:
          name: consul-client-config
      - name: data
        emptyDir: {}
      securityContext:
        fsGroup: 1000
  # volumeClaimTemplates:
  # - metadata:
  #     name: data
  #   spec:
  #     accessModes:
  #     - ReadWriteMany
  #     storageClassName: "gluster-heketi-2"
  #     resources:
  #       requests:
  #         storage: 10Gi

# On k8s-master01: vim consul-server.yaml
# extensions/v1beta1 Ingress and policy/v1beta1 PodDisruptionBudget were removed in
# Kubernetes 1.22 and 1.25 respectively, so the v1 APIs are used here. The Ingress only
# takes effect if an Ingress controller is installed (not covered in this guide).

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: consul
  namespace: public-service
spec:
  rules:
  # An IP address (192.168.8.21) is not accepted as "host", so this rule matches any host name.
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: consul-ui
            port:
              number: 80
---
apiVersion: v1
kind: Service
metadata:
  name: consul-ui
  namespace: public-service
  labels:
    app: consul
    component: server
spec:
  selector:
    app: consul
    component: server
  ports:
  - name: http
    port: 80
    targetPort: 8500
---
apiVersion: v1
kind: Service
metadata:
  name: consul-dns
  namespace: public-service
  labels:
    app: consul
    component: dns
spec:
  selector:
    app: consul
  ports:
  - name: dns-tcp
    protocol: TCP
    port: 53
    targetPort: dns-tcp
  - name: dns-udp
    protocol: UDP
    port: 53
    targetPort: dns-udp
---
apiVersion: v1
kind: Service
metadata:
  name: consul-server
  namespace: public-service
  labels:
    app: consul
    component: server
spec:
  selector:
    app: consul
    component: server
  ports:
  - name: http
    port: 8500
    targetPort: 8500
  - name: dns-tcp
    protocol: TCP
    port: 8600
    targetPort: dns-tcp
  - name: dns-udp
    protocol: "UDP"
    port: 8600
    targetPort: dns-udp
  - name: serflan-tcp
    protocol: TCP
    port: 8301
    targetPort: 8301
  - name: serflan-udp
    protocol: UDP
    port: 8301
    targetPort: 8301
  - name: serfwan-tcp
    protocol: TCP
    port: 8302
    targetPort: 8302
  - name: serfwan-udp
    protocol: UDP
    port: 8302
    targetPort: 8302
  - name: server
    port: 8300
    targetPort: 8300
  publishNotReadyAddresses: true
  clusterIP: None
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: consul-server-config
  namespace: public-service
data:
---
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: consul-server
  namespace: public-service
spec:
  selector:
    matchLabels:
      app: consul
      component: server
  minAvailable: 2
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: consul-server
  namespace: public-service
spec:
  serviceName: consul-server
  replicas: 3
  updateStrategy:
    type: RollingUpdate
  selector:
    matchLabels:
      app: consul
      component: server
  template:
    metadata:
      labels:
        app: consul
        component: server
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: "component"
                operator: In
                values:
                - server
            topologyKey: "kubernetes.io/hostname"
      # The anti-affinity above needs one node per server, but k8s-master01 carries the
      # control-plane NoSchedule taint; uncomment this if the third replica stays Pending.
      # tolerations:
      # - key: node-role.kubernetes.io/control-plane
      #   operator: Exists
      #   effect: NoSchedule
      terminationGracePeriodSeconds: 10
      containers:
      - name: consul
        image: consul:1.11.1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8500
          name: http
        - containerPort: 8600
          name: dns-tcp
          protocol: TCP
        - containerPort: 8600
          name: dns-udp
          protocol: UDP
        - containerPort: 8301
          name: serflan
        - containerPort: 8302
          name: serfwan
        - containerPort: 8300
          name: server
        env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        args:
        - "agent"
        - "-server"
        - "-advertise=$(POD_IP)"
        - "-bind=0.0.0.0"
        - "-bootstrap-expect=3"
        - "-datacenter=dc1"
        - "-config-dir=/consul/userconfig"
        - "-data-dir=/consul/data"
        - "-disable-host-node-id"
        - "-domain=cluster.local"
        - "-retry-join=consul-server-0.consul-server.$(NAMESPACE).svc.cluster.local"
        - "-retry-join=consul-server-1.consul-server.$(NAMESPACE).svc.cluster.local"
        - "-retry-join=consul-server-2.consul-server.$(NAMESPACE).svc.cluster.local"
        - "-client=0.0.0.0"
        - "-ui"
        resources:
          limits:
            cpu: "100m"
            memory: "128Mi"
          requests:
            cpu: "100m"
            memory: "128Mi"
        lifecycle:
          preStop:
            exec:
              command:
              - /bin/sh
              - -c
              - consul leave
        volumeMounts:
        - name: data
          mountPath: /consul/data
        - name: user-config
          mountPath: /consul/userconfig
      volumes:
      - name: user-config
        configMap:
          name: consul-server-config
      - name: data
        emptyDir: {}
      securityContext:
        fsGroup: 1000
  # volumeClaimTemplates:
  # - metadata:
  #     name: data
  #   spec:
  #     accessModes:
  #     - ReadWriteMany
  #     storageClassName: "gluster-heketi-2"
  #     resources:
  #       requests:
  #         storage: 10Gi

# On k8s-master01: vim stafulset.yaml
# This standalone variant runs in the default namespace. It assumes that a
# PersistentVolumeClaim named consul-data-pvc and a headless Service named consul
# (needed for the consul-N.consul names used by -retry-join) already exist; this
# guide creates neither. With hostNetwork, at most one replica fits on each node.

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: consul
spec:
  serviceName: consul
  replicas: 3
  selector:
    matchLabels:
      app: consul
  template:
    metadata:
      labels:
        app: consul
    spec:
      hostNetwork: true
      # hostNetwork Pods need this policy to resolve *.svc.cluster.local names via cluster DNS.
      dnsPolicy: ClusterFirstWithHostNet
      terminationGracePeriodSeconds: 10
      volumes:
      - name: data-volume
        persistentVolumeClaim:
          claimName: consul-data-pvc
      - name: config-volume
        emptyDir: {}
      containers:
      - name: consul
        image: consul:1.11.1
        imagePullPolicy: IfNotPresent
        args:
        - "agent"
        - "-server"
        - "-bootstrap-expect=3"
        - "-ui"
        - "-data-dir=/consul/data"
        - "-bind=0.0.0.0"
        - "-client=0.0.0.0"
        - "-retry-join=consul-0.consul.$(NAMESPACE).svc.cluster.local"
        - "-retry-join=consul-1.consul.$(NAMESPACE).svc.cluster.local"
        - "-retry-join=consul-2.consul.$(NAMESPACE).svc.cluster.local"
        - "-domain=cluster.local"
        - "-disable-host-node-id"
        env:
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        ports:
        - containerPort: 8500
          name: ui-port
        - containerPort: 8443
          name: https-port
        volumeMounts:
        - mountPath: /consul/data
          name: data-volume
        - mountPath: /consul/userconfig
          name: config-volume
        readinessProbe:
          httpGet:
            path: /v1/status/leader   # Consul's HTTP API has no /health endpoint
            port: 8500
          initialDelaySeconds: 15
          periodSeconds: 10
        livenessProbe:
          httpGet:
            path: /v1/status/leader
            port: 8500
          initialDelaySeconds: 15
          periodSeconds: 10
---
apiVersion: v1
kind: Service
metadata:
  name: consul-ui
spec:
  type: NodePort
  selector:
    app: consul   # the Pods above carry no "role" label, so selecting on it would match nothing
  ports:
  - name: ui
    port: 8500
    targetPort: 8500
    nodePort: 30003

2.21.5 Apply the YAML files

# On k8s-master01

kubectl apply -f public-service-ns.yaml

kubectl apply -f consul-server.yaml

kubectl apply -f consul-client.yaml

kubectl apply -f stafulset.yaml

2.21.6 Verify

# On k8s-master01

kubectl get pod -n public-service

kubectl get svc -n public-service

kubectl exec -n public-service consul-server-0 -- consul members
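To check the result from a script, a hypothetical helper can count the members reported as alive servers, assuming the default table output of `consul members` (columns Node, Address, Status, Type, ...). Because the servers run with -bootstrap-expect=3, a leader is only elected once three servers have joined, so the expected count is 3.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count "alive" members of type "server" in `consul members`
# output read from stdin (the first line is the table header).
alive_consul_servers() {
  awk 'NR > 1 && $3 == "alive" && $4 == "server" { n++ } END { print n + 0 }'
}

# Usage on k8s-master01:
#   kubectl exec -n public-service consul-server-0 -- consul members | alive_consul_servers
```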

2.22 Let node01 and node02 run the same kubectl management commands as k8s-master01

[root@k8s-node01 ~]# mkdir -p $HOME/.kube

[root@k8s-node01 ~]#

[root@k8s-node02 ~]# mkdir -p $HOME/.kube

[root@k8s-node02 ~]#

[root@k8s-master01 ~]# scp /etc/kubernetes/admin.conf root@192.168.8.22:~/.kube/config

root@192.168.8.22's password:

admin.conf 100% 5648 4.4MB/s 00:00

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# scp /etc/kubernetes/admin.conf root@192.168.8.23:~/.kube/config

root@192.168.8.23's password:

admin.conf 100% 5648 5.6MB/s 00:00

[root@k8s-master01 ~]#

[root@k8s-node01 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-node01 ~]#

[root@k8s-node02 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-node02 ~]#

2.23 Using the k9s tool

[root@k8s-master01 ~]# docker --version

Docker version 24.0.7, build afdd53b

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# docker pull derailed/k9s

Using default tag: latest

latest: Pulling from derailed/k9s

97518928ae5f: Pull complete

4cc49068a57b: Pull complete

7c5d7b7e79a7: Pull complete

Digest: sha256:f2e0df2763f4c5bd52acfd02bb9c8158c91b8b748acbb7df890b9ee39dbeeb61

Status: Downloaded newer image for derailed/k9s:latest

docker.io/derailed/k9s:latest

[root@k8s-master01 ~]# docker run -it --rm -v $HOME/.kube/config:/root/.kube/config derailed/k9s

3. Configuration summary

3.1 Network address and hostname configuration

nmcli connection modify ens160 ipv4.addresses 192.168.8.21/24 ipv4.gateway 192.168.8.2 ipv4.dns 8.8.8.8 ipv4.method manual autoconnect yes

hostnamectl hostname k8s-master01 && bash

3.2 Configure the hosts file

echo "192.168.8.21 k8s-master01

192.168.8.22 k8s-node01

192.168.8.23 k8s-node02 " >> /etc/hosts
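The `echo ... >> /etc/hosts` above appends the entries again every time it is run. A minimal idempotent sketch that adds each line only when it is not already present; `HOSTS_FILE` is a hypothetical override introduced for this example (defaults to `/etc/hosts`):

```shell
# Append each cluster entry only if an identical line is not already there.
add_cluster_hosts() {
  hosts_file="${HOSTS_FILE:-/etc/hosts}"
  for entry in "192.168.8.21 k8s-master01" \
               "192.168.8.22 k8s-node01" \
               "192.168.8.23 k8s-node02"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    grep -qxF "$entry" "$hosts_file" || echo "$entry" >> "$hosts_file"
  done
}

# Example (as root): add_cluster_hosts
```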

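3.3 Disable the swap partition (by default the kubelet will not start while swap is enabled)

# Disable temporarily (until the next reboot):

swapoff -a

# Disable permanently: comment out the swap mount by adding # at the start of the swap line in /etc/fstab

sed -ri 's/.*swap.*/#&/' /etc/fstab

Or edit the file by hand: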

vim /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0
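The `sed` above comments out every line that merely contains the word "swap". A more targeted sketch comments out only active entries whose filesystem type (third field) is `swap`, and keeps a backup; `FSTAB` is a hypothetical override introduced for this example (defaults to `/etc/fstab`):

```shell
# Comment out active swap entries in an fstab-format file.
# Lines that are already comments are left untouched.
disable_swap_in_fstab() {
  fstab="${FSTAB:-/etc/fstab}"
  cp -p "$fstab" "${fstab}.bak"
  awk '!/^[[:space:]]*#/ && $3 == "swap" { $0 = "#" $0 } { print }' "${fstab}.bak" > "$fstab"
}

# Example (as root): swapoff -a && disable_swap_in_fstab
# Verify: `swapon --show` prints nothing when no swap is active.
```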

3.4 Adjust kernel parameters

modprobe br_netfilter

cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf
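`modprobe br_netfilter` only loads the module for the current boot; after a reboot the `net.bridge.*` sysctls above have nothing to apply to. A sketch that registers the modules with systemd-modules-load, listing `overlay` and `br_netfilter` as in the Kubernetes container-runtime prerequisites; `MODULES_LOAD_DIR` is a hypothetical override (defaults to `/etc/modules-load.d`):

```shell
# Write a modules-load.d file so the modules are loaded automatically at boot.
persist_k8s_modules() {
  dir="${MODULES_LOAD_DIR:-/etc/modules-load.d}"
  mkdir -p "$dir"
  printf '%s\n' overlay br_netfilter > "$dir/k8s.conf"
}

# Example (as root):
# persist_k8s_modules && modprobe overlay && modprobe br_netfilter
# sysctl net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward   # both should print = 1
```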

3.5 Disable firewalld and SELinux

systemctl --now disable firewalld

systemctl is-active firewalld.service

sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

reboot

getenforce
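The `sed` above only matches the exact line `SELINUX=enforcing`, so a system already set to `permissive` is left unchanged. A sketch that rewrites whatever value is present (the pattern requires `=` directly after `SELINUX`, so `SELINUXTYPE=` is not touched); `SELINUX_CONFIG` is a hypothetical override (defaults to `/etc/selinux/config`):

```shell
# Set SELINUX=disabled regardless of the current value.
disable_selinux_config() {
  cfg="${SELINUX_CONFIG:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Example (as root); setenforce 0 switches to permissive right away,
# without waiting for the reboot:
# disable_selinux_config && setenforce 0
```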

3.6 Configure the Alibaba Cloud mirrors and install base packages

sed -e 's|^mirrorlist=|#mirrorlist=|g' \
-e 's|^#baseurl=http://dl.rockylinux.org/$contentdir|baseurl=https://mirrors.aliyun.com/rockylinux|g' \
-i.bak \
/etc/yum.repos.d/rocky*.repo

dnf makecache

dnf install -y device-mapper-persistent-data lvm2 wget net-tools nfs-utils

dnf install -y lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel

dnf install -y curl curl-devel unzip sudo libaio-devel vim

dnf install -y ncurses-devel autoconf automake zlib-devel python3-devel epel-release

dnf install -y openssh-server socat ipvsadm conntrack telnet yum-utils

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

3.7 Configure the Alibaba Cloud repo for the Kubernetes packages

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Reference: https://developer.aliyun.com/mirror/kubernetes

3.8 Configure time synchronization

yum install -y chrony

vim /etc/chrony.conf

server ntp.aliyun.com iburst

timedatectl set-timezone Asia/Shanghai

systemctl restart chronyd && systemctl enable chronyd

chronyc tracking
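The `vim` edit can be scripted. A sketch that adds the `ntp.aliyun.com` server line once and, as an added assumption (the original only adds the server line), comments out the distribution's default `pool` lines so Alibaba Cloud is the only time source; `CHRONY_CONF` is a hypothetical override (defaults to `/etc/chrony.conf`):

```shell
# Point chronyd at ntp.aliyun.com; safe to run more than once.
use_aliyun_ntp() {
  conf="${CHRONY_CONF:-/etc/chrony.conf}"
  # Assumption: disable the default pool entries.
  sed -i 's/^pool /#pool /' "$conf"
  grep -qxF 'server ntp.aliyun.com iburst' "$conf" \
    || echo 'server ntp.aliyun.com iburst' >> "$conf"
}

# Example (as root): use_aliyun_ntp && systemctl restart chronyd && chronyc sources
```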

3.9 Install and configure the containerd service

yum install containerd.io-1.6.6 -y

# Generate the default configuration file

mkdir -p /etc/containerd

containerd config default > /etc/containerd/config.toml

# Edit the configuration file:

# Open /etc/containerd/config.toml

vim /etc/containerd/config.toml

Change SystemdCgroup = false to SystemdCgroup = true

Change sandbox_image = "k8s.gcr.io/pause:3.6" to sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.7"
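The two `vim` edits can be applied with `sed` instead, which is handy when repeating them on every node. A sketch that keeps the pause image value used above; `CONTAINERD_CONFIG` is a hypothetical override (defaults to `/etc/containerd/config.toml`). The patterns are unanchored, so the indentation of the generated file is preserved:

```shell
# Switch containerd to the systemd cgroup driver and replace the sandbox image.
patch_containerd_config() {
  cfg="${CONTAINERD_CONFIG:-/etc/containerd/config.toml}"
  sed -i \
    -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
    -e 's|sandbox_image = ".*"|sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.7"|' \
    "$cfg"
}

# Example (as root):
# patch_containerd_config && grep -E 'SystemdCgroup|sandbox_image' /etc/containerd/config.toml
```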

# Create the /etc/crictl.yaml file

cat > /etc/crictl.yaml <<EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

EOF

# Enable containerd at boot and start it now

systemctl enable containerd --now

# Configure a registry mirror for containerd (apply this on all k8s nodes):

# Edit /etc/containerd/config.toml

# Find config_path = "" and change it to the following directory:

config_path = "/etc/containerd/certs.d"

mkdir /etc/containerd/certs.d/docker.io/ -p

vim /etc/containerd/certs.d/docker.io/hosts.toml

# Write the following content (one [host."..."] table per mirror):

[host."https://ws81rcka.mirror.aliyuncs.com"]

capabilities = ["pull", "resolve"]

[host."https://registry.docker-cn.com"]

capabilities = ["pull", "resolve"]

# Restart containerd:

systemctl restart containerd
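The whole mirror setup can be scripted as well. A sketch that sets `config_path` and writes `hosts.toml` with one `[host."..."]` table per mirror, following the format in the containerd registry-host documentation (`server` is the upstream used when no mirror answers). `CONTAINERD_ROOT` is a hypothetical override (defaults to `/etc/containerd`), and it assumes `config.toml` still contains the default line `config_path = ""`:

```shell
# Usage: setup_dockerio_mirrors MIRROR_URL...
setup_dockerio_mirrors() {
  root="${CONTAINERD_ROOT:-/etc/containerd}"
  dir="$root/certs.d/docker.io"
  sed -i "s|config_path = \"\"|config_path = \"$root/certs.d\"|" "$root/config.toml"
  mkdir -p "$dir"
  {
    echo 'server = "https://registry-1.docker.io"'
    for mirror in "$@"; do
      printf '\n[host."%s"]\n  capabilities = ["pull", "resolve"]\n' "$mirror"
    done
  } > "$dir/hosts.toml"
}

# Example (as root), then restart containerd:
# setup_dockerio_mirrors https://ws81rcka.mirror.aliyuncs.com https://registry.docker-cn.com
```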

3.10 Install Docker

# Docker is installed as well. It does not conflict with containerd; it is only needed to build images from Dockerfiles.

dnf install docker-ce -y

systemctl enable docker --now

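# Configure Docker registry mirrors (create /etc/docker first in case it does not exist yet)

mkdir -p /etc/docker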

echo '{

"registry-mirrors":["https://ws81rcka.mirror.aliyuncs.com","https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hub-mirror.c.163.com","http://qtid6917.mirror.aliyuncs.com", "https://rncxm540.mirror.aliyuncs.com"]

}' > /etc/docker/daemon.json

# Restart Docker

systemctl restart docker
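dockerd refuses to start when `daemon.json` is not valid JSON, and a stray comma in the mirror list is an easy mistake. A sketch of a pre-restart check, assuming `python3` is available (it is on a default Rocky Linux 9 install, since dnf depends on it); `DOCKER_DAEMON_JSON` is a hypothetical override (defaults to `/etc/docker/daemon.json`):

```shell
# Return non-zero (and print the parse error) if daemon.json is not valid JSON.
check_daemon_json() {
  f="${DOCKER_DAEMON_JSON:-/etc/docker/daemon.json}"
  python3 -m json.tool "$f" > /dev/null
}

# Example (as root): check_daemon_json && systemctl restart docker
# Afterwards, `docker info` lists the configured mirrors under "Registry Mirrors".
```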

3.11 Install the Kubernetes packages

yum install -y kubelet-1.28.1 kubeadm-1.28.1 kubectl-1.28.1

systemctl enable kubelet

3.12 Template complete

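Take a snapshot of the template VM.

Create a linked clone for the k8s-master01 VM.

Create a linked clone for the k8s-node01 VM.

Create a linked clone for the k8s-node02 VM.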

3.13 Customize k8s-master01

1. Set the hostname and IP address, and configure passwordless SSH login between hosts

hostnamectl hostname k8s-master01 && bash

nmcli connection modify ens160 ipv4.addresses 192.168.8.21/24 ipv4.gateway 192.168.8.2 ipv4.dns 8.8.8.8 ipv4.method manual autoconnect yes

ssh-keygen # press Enter at every prompt; leave the passphrase empty

ssh-copy-id k8s-master01
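Passwordless SSH *between* hosts needs the public key on every node, not only on the local one. A sketch, assuming the hostnames resolve through the `/etc/hosts` entries from 3.2 and root password login is still allowed for the first copy; `DRY_RUN` is a hypothetical variable, and with `DRY_RUN=1` the commands are only printed:

```shell
# Push this host's public key to all three cluster nodes.
copy_ssh_key_to_all() {
  run=""
  if [ "${DRY_RUN:-0}" = 1 ]; then run="echo"; fi
  for host in k8s-master01 k8s-node01 k8s-node02; do
    $run ssh-copy-id "root@${host}"
  done
}

# Example: copy_ssh_key_to_all   (asks for each node's root password once)
```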

# Run on master01

kubeadm config print init-defaults > kubeadm.yaml

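# Adjust the configuration to our needs, e.g. change the value of imageRepository and set the kube-proxy mode to ipvs. Note that because containerd is the container runtime, the kubelet's cgroupDriver must be set to systemd when initializing the node, to match SystemdCgroup = true in containerd.

The kubeadm.yaml configuration file is as follows: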

apiVersion: kubeadm.k8s.io/v1beta3
# ...
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.8.21 # control-plane node IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///run/containerd/containerd.sock # use containerd as the container runtime
  imagePullPolicy: IfNotPresent
  name: k8s-master01 # control-plane node hostname
  taints: null
---
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
# Use the Alibaba Cloud image registry so that kubeadm pulls the k8s images from it during installation
kind: ClusterConfiguration
kubernetesVersion: 1.28.1 # k8s version
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16 # pod CIDR; this field must be added
  serviceSubnet: 10.96.0.0/12 # Service CIDR
scheduler: {}
# Append the following configuration
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd

# Initialize the cluster

kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification

# Set up kubectl's config file. This effectively authorizes kubectl: it uses the admin credentials in this file to manage the k8s cluster.

[root@k8s-master01 ~]# mkdir -p $HOME/.kube

[root@k8s-master01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane 101m v1.28.1
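The control-plane node reports NotReady until a CNI network plugin (Calico, per the component table) is installed. A sketch of a hypothetical helper that lists every node whose STATUS column is not exactly `Ready`, reading `kubectl get nodes` output on stdin (note that it also reports cordoned nodes, whose status is `Ready,SchedulingDisabled`):

```shell
# Print the NAME of each node whose STATUS (2nd column) is not "Ready".
nodes_not_ready() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example: kubectl get nodes | nodes_not_ready
```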

3.14 Customize k8s-node01

hostnamectl hostname k8s-node01 && bash

nmcli connection modify ens160 ipv4.addresses 192.168.8.22/24 ipv4.gateway 192.168.8.2 ipv4.dns 8.8.8.8 ipv4.method manual autoconnect yes

ssh-keygen # press Enter at every prompt; leave the passphrase empty

ssh-copy-id k8s-node01

3.15 Customize k8s-node02

hostnamectl hostname k8s-node02 && bash

nmcli connection modify ens160 ipv4.addresses 192.168.8.23/24 ipv4.gateway 192.168.8.2 ipv4.dns 8.8.8.8 ipv4.method manual autoconnect yes

ssh-keygen # press Enter at every prompt; leave the passphrase empty

ssh-copy-id k8s-node02
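Sections 3.13 to 3.15 repeat the same hostname and address commands with different values. A sketch of a hypothetical helper that does both and applies the new address with `nmcli connection up` instead of the reboot used in 2.1; run it from the VM console, because an SSH session on the old address drops. With `DRY_RUN=1` it only prints the commands:

```shell
# Usage: customize_node HOSTNAME IP
customize_node() {
  name="$1"; ip="$2"; run=""
  if [ "${DRY_RUN:-0}" = 1 ]; then run="echo"; fi
  $run hostnamectl hostname "$name"
  $run nmcli connection modify ens160 ipv4.addresses "${ip}/24" \
    ipv4.gateway 192.168.8.2 ipv4.dns 8.8.8.8 ipv4.method manual autoconnect yes
  # Re-activate the profile so the new address takes effect immediately.
  $run nmcli connection up ens160
}

# Example, on the second clone: customize_node k8s-node01 192.168.8.22
```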

3.16 Initialize the k8s cluster with kubeadm

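# Run on master01

kubeadm config print init-defaults > kubeadm.yaml

# Adjust the configuration to our needs, e.g. change the value of imageRepository and set the kube-proxy mode to ipvs. Note that because containerd is the container runtime, the kubelet's cgroupDriver must be set to systemd when initializing the node, to match SystemdCgroup = true in containerd.

The kubeadm.yaml configuration file is as follows: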

apiVersion: kubeadm.k8s.io/v1beta3
# ...
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.8.21 # control-plane node IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///run/containerd/containerd.sock # use containerd as the container runtime
  imagePullPolicy: IfNotPresent
  name: k8s-master01 # control-plane node hostname
  taints: null
---
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki