利用pytorch搭建VGG16、VGG19卷积神经网络对cifar10数据集进行训练(置顶有详细注释源码,免费下载,仅供学习参考,欢迎大家在评论区学习交流)

数据集大小:60000张彩色图片,其中训练集50000张,测试集10000张;图像大小:32*32*3,3通道RGB,32*32个像素点;

1.CIFAR10数据集简介

数据集大小:60000张彩色图片,其中训练集50000张,测试集10000张;

图像大小:32*32*3,3通道RGB,32*32个像素点;

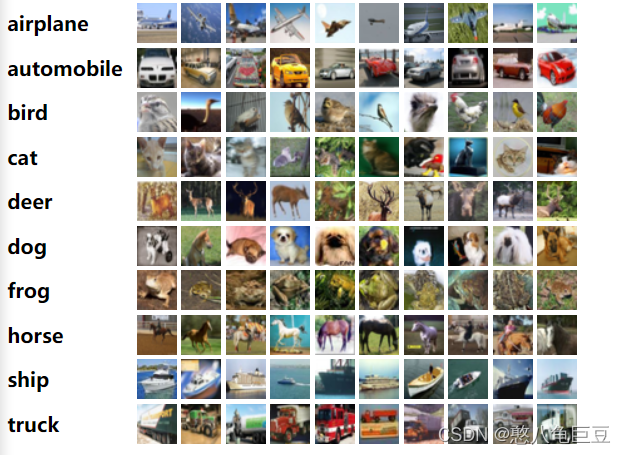

标签:一共有10个,按顺序依次是{“airplane”, "automobile", "bird", "cat", "deer", "dog", "frog", "horse", "ship", "truck"},在数据集中随机选取的10*10张图像如下所示:

CIFAR10数据集官方简介:

CIFAR-10 and CIFAR-100 datasets (toronto.edu)

CIFAR10数据集官方用法及示例:

CIFAR10 — Torchvision 0.16 documentation (pytorch.org)

2.VGG16和VGG19卷积神经网络

VGG卷积神经网络在提出之时便证明了在一定程度上加深网络深度能够提升网络性能,VGG卷积神经网络包括VGG11、VGG13、VGG16以及VGG19,在这里,主要就VGG16和VGG19两种较为常用的VGG神经网络进行相关讲解和CIFAR10模型训练。

VGG卷积神经网络官方用法:

VGG — Torchvision 0.16 documentation (pytorch.org)

2.1 VGG16卷积神经网络

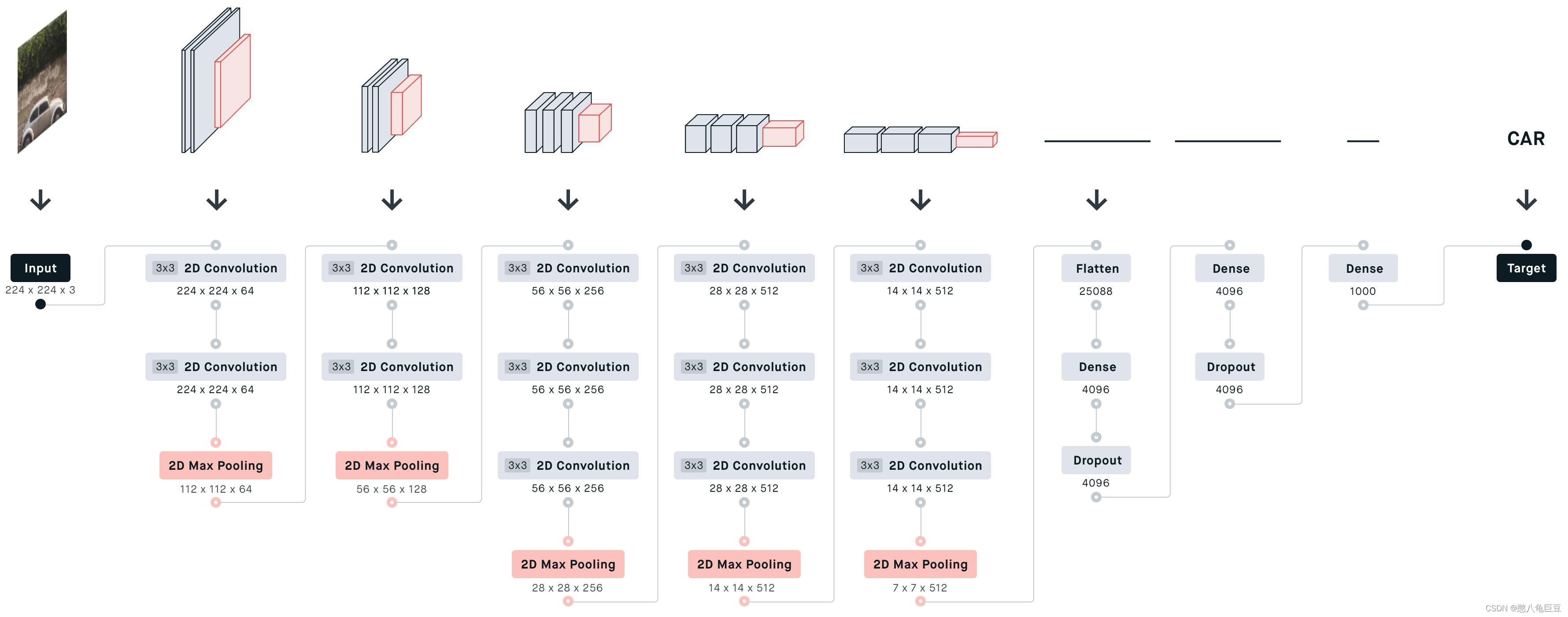

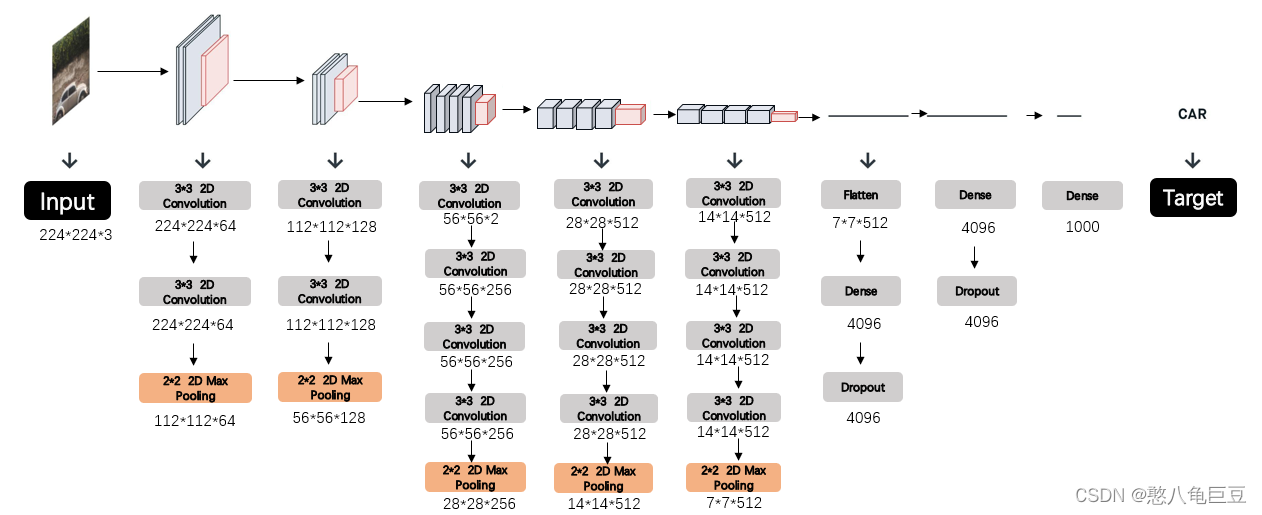

假设输入图像大小为224*224*3:

VGG16神经网络有两个特点:

①采用3*3卷积核,相比于传统的5*5的卷积核,其使用两个3*3便可以得到与一个5*5卷积核相同的感受野,还减少了参数量和计算量(感受野表示一个像素点中所包含的输入图像像素点的量);

②卷积层数更深,在VGG16中一共有13层卷积层,在每次池化后会进行多层卷积再进行池化,所提取的特征数更多。

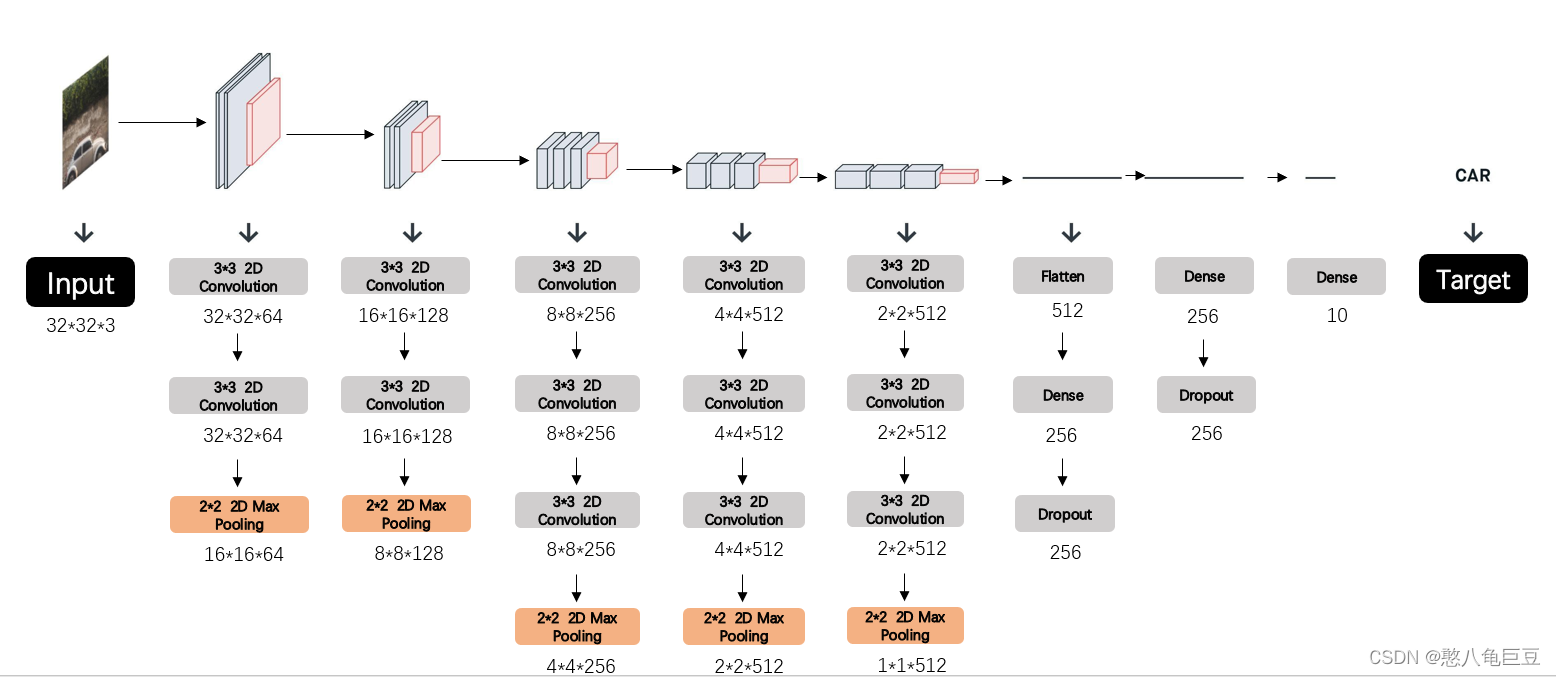

结合CIFAR10模型:(输入为32*32*3,输出为标签数10)

2.2 VGG19卷积神经网络

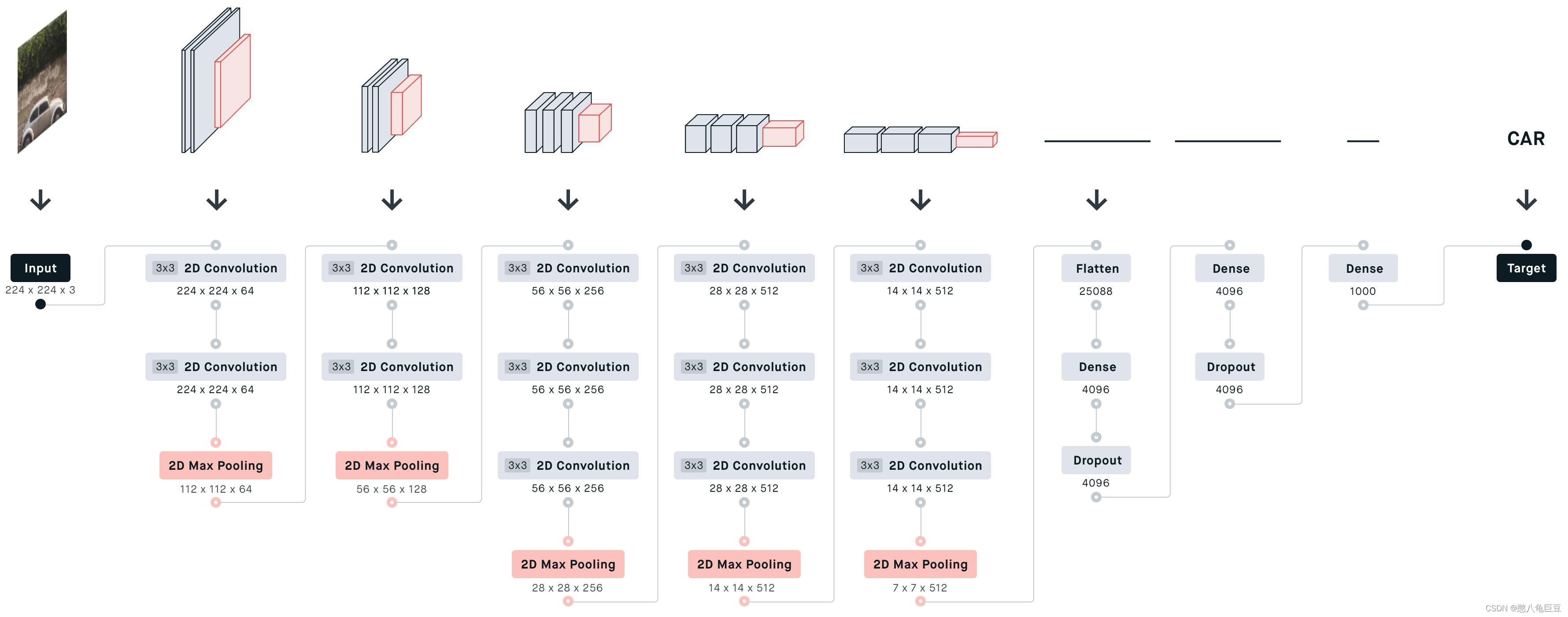

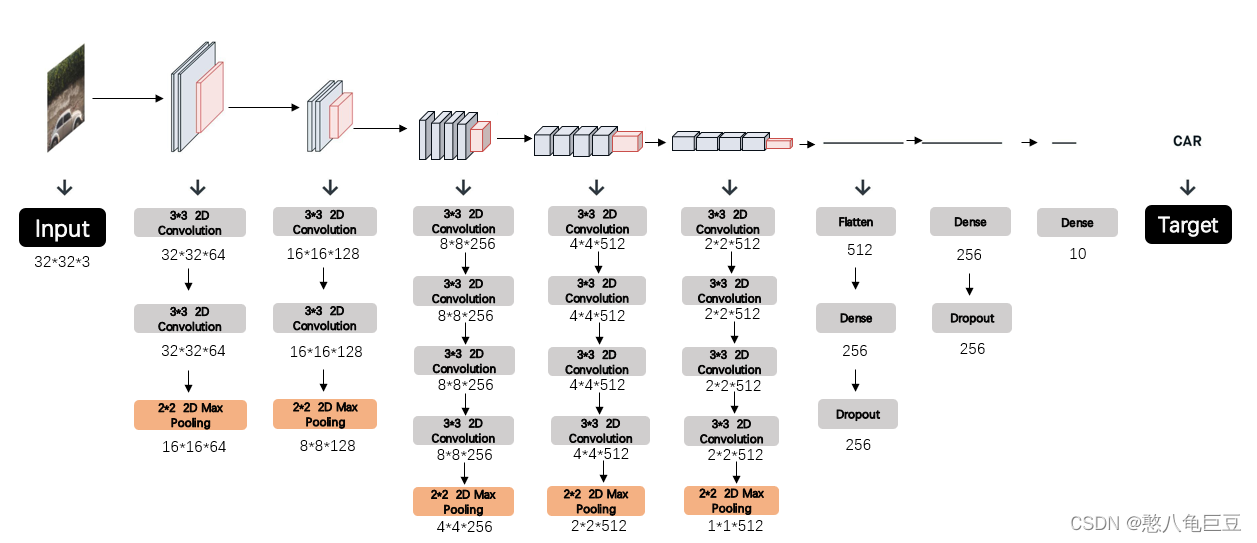

同样的,假设输入图像大小为224*224*3:

相比于VGG16卷积神经网络,VGG19的特点:

VGG19进一步加深了上图所示Layer3、Layer4和Layer4的卷积层数量(增加了一层卷积),并且取得了比VGG16更好的效果,整个VGG卷积神经网络的发展历程就是在最初的VGG11的基础上逐渐深度化,并且剔除掉一些被证明没有实际效果的层级(LRN层):

结合CIFAR10数据集:(输入为32*32*3,输出为标签数10)

3. 利用pytorch搭建VGG16和VGG19模型对CIFAR10数据集进行训练

3. 利用pytorch搭建VGG16和VGG19模型对CIFAR10数据集进行训练

3.1 搭建VGG16对CIFAR10进行训练

新建模型文件model.py,按照VGG16结构图,搭建卷积 神经网络,并在每一次卷积后添加正则化层和ReLU激活层,正则化的目的是降低数据的复杂程度,减少过拟合,ReLU激活层用以防止梯度消失和梯度爆炸现象,缓解过拟合,且在反向传播参数时求导简单,代码中进行了详细的注释:

# 搭建cifar10的vgg16模型

import torch

from torch import nn

class cifar10_Model(nn.Module):

def __init__(self):

super(cifar10_Model, self).__init__()

self.layer1 = nn.Sequential(

nn.Conv2d(3, 64, 3, 1, 1), # 32*32*3->32*32*64

nn.BatchNorm2d(num_features=64),

nn.ReLU(),

nn.Conv2d(64, 64, 3, 1, 1), # 32*32*64->32*32*64

nn.BatchNorm2d(64),

nn.ReLU(),

nn.MaxPool2d(2, 2) # 32*32*64->16*16*64

)

self.layer2 = nn.Sequential(

nn.Conv2d(64, 128, 3, 1, 1), # 16*16*64->16*16*128

nn.BatchNorm2d(128),

nn.ReLU(),

nn.Conv2d(128, 128, 3, 1, 1), # 16*16*128->16*16*128

nn.BatchNorm2d(128),

nn.ReLU(),

nn.MaxPool2d(2, 2) # 16*16*128->8*8*128

)

self.layer3 = nn.Sequential(

nn.Conv2d(128, 256, 3, 1, 1), # 8*8*128->8*8*256

nn.BatchNorm2d(256),

nn.ReLU(),

nn.Conv2d(256, 256, 3, 1, 1), # 8*8*256->8*8*256

nn.BatchNorm2d(256),

nn.ReLU(),

nn.Conv2d(256, 256, 3, 1, 1), # 8*8*256->8*8*256

nn.BatchNorm2d(256),

nn.ReLU(),

nn.MaxPool2d(2, 2) # 8*8*256->4*4*256

)

self.layer4 = nn.Sequential(

nn.Conv2d(256, 512, 3, 1, 1), # 4*4*256->4*4*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.Conv2d(512, 512, 3, 1, 1), # 4*4*512->4*4*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.Conv2d(512, 512, 3, 1, 1), # 4*4*512->4*4*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.MaxPool2d(2, 2) # 4*4*512->2*2*512

)

self.layer5 = nn.Sequential(

nn.Conv2d(512, 512, 3, 1, 1), # 2*2*512->2*2*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.Conv2d(512, 512, 3, 1, 1), # 2*2*512->2*2*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.Conv2d(512, 512, 3, 1, 1), # 2*2*512->2*2*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.MaxPool2d(2, 2) # 2*2*512->1*1*512

)

self.layer6 = nn.Sequential(

nn.Flatten(),

nn.Linear(512, 256),

nn.Linear(256, 256),

nn.Dropout2d(p=0.5),

nn.ReLU(),

nn.Linear(256, 256),

nn.Dropout2d(p=0.5),

nn.ReLU(),

)

self.layer7 = nn.Sequential(

nn.Linear(256, 10)

)

self.model = nn.Sequential(

self.layer1,

self.layer2,

self.layer3,

self.layer4,

self.layer5,

self.layer6,

self.layer7,

)

def forward(self, x):

x = self.model(x)

return x新建模型train.py,导入上述模型,下载数据集进行训练和测试,代码中有详细注释 :

import torchvision

from torch.optim.lr_scheduler import StepLR

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

from model import*

import time

writer = SummaryWriter("cifar10_vgg16") # tensorboard查看运行结果

train_dataset_transform = torchvision.transforms.Compose([ # 将以下几种处理图片的方式组合处理训练集

torchvision.transforms.RandomCrop(32, padding=4), # 上下左右填充4层0并做随机裁剪,防止过拟合

torchvision.transforms.RandomHorizontalFlip(), # 以默认概率0.5对图像进行随机水平翻转,防止过拟合

torchvision.transforms.ToTensor(), # 将图像转化为tensor数据类型

torchvision.transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)) # 对图像进行正则化处理,减小数据量,数据方便处理

])

test_dataset_transform = torchvision.transforms.Compose([ # 将以下几种处理图片的方式组合处理测试集

torchvision.transforms.ToTensor(),

torchvision.transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))

])

device = torch.device("cuda" if torch.cuda.is_available() else "cpu") # 有gpu在gpu上跑,否则在cpu上跑

train_data = torchvision.datasets.CIFAR10("../dataset", train=True, transform=train_dataset_transform, download=True) # 下载训练数据并做转化

test_data = torchvision.datasets.CIFAR10("../dataset", train=False, transform=test_dataset_transform, download=True) # 下载测试数据并做转化

train_data_size = len(train_data) # 输出训练集和测试集数据长度

test_data_size = len(test_data)

print("训练集的长度为:{}".format(train_data_size))

print("测试集的长度为:{}".format(test_data_size))

train_dataloader = DataLoader(train_data, batch_size=64) # 一批放入64张图片

test_dataloader = DataLoader(test_data, batch_size=64)

cifar10_model = cifar10_Model() # 卷积神经网络模型

cifar10_model = cifar10_model.to(device) # 模型在gpu上跑

loss_fn = nn.CrossEntropyLoss() # 定义损失函数为交叉熵

loss_fn = loss_fn.to(device) # 损失函数在gpu上跑

learning_rate = 0.1 # 学习率

optimizer = torch.optim.SGD(cifar10_model.parameters(), lr=learning_rate) # 定义模型参数优化器

scheduler = StepLR(optimizer, step_size=10, gamma=0.1) # 自适应改变学习率,每经过10轮训练,学习率减少90%

train_step = 0 # 定义训练次数

test_step = 0

epoch = 30 # 定义训练轮数

start_time = time.time() # 开始时间

for i in range(epoch): # 30轮训练

print("第{}轮训练开始".format(i+1))

cifar10_model.train() # 将模型置于训练模式下

for data in train_dataloader: # 取图片

imgs, targets = data # 取图片本身及其标签值

writer.add_images("train_data", imgs, train_step) # 在tensorboard中显示图片

imgs = imgs.to(device) # 让图片和标签在gpu上跑

targets = targets.to(device)

outputs = cifar10_model(imgs) # 将图片经过模型

loss = loss_fn(outputs, targets) # 计算输出和标签的损失函数

optimizer.zero_grad() # 优化器梯度置0

loss.backward() # 反向传播

optimizer.step() # 更新参数

train_step += 1

if train_step % 100 == 0:

end_time = time.time() # 结束一张图片训练时间

print("训练次数:{},Loss:{},所用时间:{}".format(train_step, loss, end_time-start_time))

scheduler.step() # 更新学习率

cifar10_model.eval() # 将模型置于测试状态

test_loss = 0 # 定义测试集损失值

test_accuracy = 0 # 定义测试集准确率

with torch.no_grad(): # 测试集不参与模型训练

for data in test_dataloader: # 取数据

imgs, targets = data

imgs = imgs.to(device)

targets = targets.to(device)

outputs = cifar10_model(imgs)

loss = loss_fn(outputs, targets)

test_loss += loss # 计算总损失值

accuracy = (outputs.argmax(1) == targets).sum() # 计算10个输出的最大值是否对应正确的标签,是则置1,否则置0,最后求总和得到10000张图片中预测正确的图片数

test_accuracy += accuracy

print("整体测试集上的Loss:{},Accuracy:{}".format(test_loss, test_accuracy/test_data_size))

writer.add_scalar("test_accuracy", test_accuracy / test_data_size, i+1) # 在tensorboard中显示正确率随训练轮数的曲线

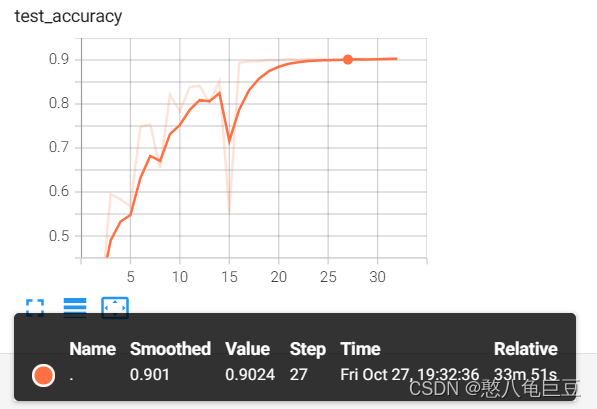

test_step += 1测试集准确率与测试轮数的图像如图所示:

模型在第25轮训练时的测试集准确率已经达到了90%以上,此时准确率便难以再上升,这是多次改变学习率调整步长的结果中最好的。考虑到训练集大小以及训练轮数会耗费大量时间,或许可以采用其它类型的卷积神经网络来训练数据集。

3.2 搭建VGG19对CIFAR10模型进行训练

新建模型文件model.py,相比于VGG16模型,VGG19仅仅在Layer3~Layer5上加深了一层卷积神经网络,只需要在上述模型文件中添加卷积层、正则化层、激活函数层即可:

from torch import nn

class cifar10_Model(nn.Module):

def __init__(self):

super(cifar10_Model, self).__init__()

self.layer1 = nn.Sequential(

nn.Conv2d(3, 64, 3, 1, 1), # 32*32*3->32*32*64

nn.BatchNorm2d(64),

nn.ReLU(),

nn.Conv2d(64, 64, 3, 1, 1), # 32*32*64->32*32*64

nn.BatchNorm2d(64),

nn.ReLU(),

nn.MaxPool2d(2, 2), # 32*32*64->16*16*64

)

self.layer2 = nn.Sequential(

nn.Conv2d(64, 128, 3, 1, 1), # 16*16*64->16*16*128

nn.BatchNorm2d(128),

nn.ReLU(),

nn.Conv2d(128, 128, 3, 1, 1), # 16*16*128->16*16*128

nn.BatchNorm2d(128),

nn.ReLU(),

nn.MaxPool2d(2, 2) # 16*16*128->8*8*128

)

self.layer3 = nn.Sequential(

nn.Conv2d(128, 256, 3, 1, 1), # 8*8*128->8*8*256

nn.BatchNorm2d(256),

nn.ReLU(),

nn.Conv2d(256, 256, 3, 1, 1), # 8*8*256->8*8*256

nn.BatchNorm2d(256),

nn.ReLU(),

nn.Conv2d(256, 256, 3, 1, 1), # 8*8*256->8*8*256

nn.BatchNorm2d(256),

nn.ReLU(),

nn.Conv2d(256, 256, 3, 1, 1), # 8*8*256->8*8*256

nn.BatchNorm2d(256),

nn.ReLU(),

nn.MaxPool2d(2, 2) # 8*8*256->4*4*256

)

self.layer4 = nn.Sequential(

nn.Conv2d(256, 512, 3, 1, 1), # 4*4*256->4*4*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.Conv2d(512, 512, 3, 1, 1), # 4*4*512->4*4*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.Conv2d(512, 512, 3, 1, 1), # 4*4*512->4*4*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.Conv2d(512, 512, 3, 1, 1), # 4*4*512->4*4*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.Conv2d(512, 512, 3, 1, 1), # 4*4*512->4*4*512

nn.MaxPool2d(2, 2) # 4*4*512->2*2*512

)

self.layer5 = nn.Sequential(

nn.Conv2d(512, 512, 3, 1, 1), # 2*2*512->2*2*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.Conv2d(512, 512, 3, 1, 1), # 2*2*512->2*2*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.Conv2d(512, 512, 3, 1, 1), # 2*2*512->2*2*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.Conv2d(512, 512, 3, 1, 1), # 2*2*512->8*8*512

nn.BatchNorm2d(512),

nn.ReLU(),

nn.MaxPool2d(2, 2) # 2*2*512->1*1*512

)

self.layer6 = nn.Sequential(

nn.Flatten(),

nn.Linear(512, 256),

nn.ReLU(),

nn.Linear(256, 256),

nn.ReLU(),

nn.Dropout2d(p=0.5),

nn.Linear(256, 256),

nn.Dropout2d(p=0.5),

)

self.layer7 = nn.Sequential(

nn.Linear(256, 10)

)

self.model = nn.Sequential(

self.layer1,

self.layer2,

self.layer3,

self.layer4,

self.layer5,

self.layer6,

self.layer7,

)

def forward(self, x):

x = self.model(x)

return x模型训练train.py文件与VGG16基本相同:

import torchvision

from torch.optim.lr_scheduler import StepLR

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

from model import*

import time

writer = SummaryWriter("cifar10_vgg19") # tensorboard查看运行结果

train_dataset_transform = torchvision.transforms.Compose([ # 将以下几种处理图片的方式组合处理训练集

torchvision.transforms.RandomCrop(32, padding=4), # 上下左右填充4层0并做随机裁剪,防止过拟合

torchvision.transforms.RandomHorizontalFlip(), # 以默认概率0.5对图像进行随机水平翻转,防止过拟合

torchvision.transforms.ToTensor(), # 将图像转化为tensor数据类型

torchvision.transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)) # 对图像进行正则化处理,减小数据量,数据方便处理

])

test_dataset_transform = torchvision.transforms.Compose([ # 将以下几种处理图片的方式组合处理测试集

torchvision.transforms.ToTensor(),

torchvision.transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))

])

device = torch.device("cuda" if torch.cuda.is_available() else "cpu") # 有gpu在gpu上跑,否则在cpu上跑

train_data = torchvision.datasets.CIFAR10("../dataset", train=True, transform=train_dataset_transform, download=True) # 下载训练数据并做转化

test_data = torchvision.datasets.CIFAR10("../dataset", train=False, transform=test_dataset_transform, download=True) # 下载测试数据并做转化

train_data_size = len(train_data) # 输出训练集和测试集数据长度

test_data_size = len(test_data)

print("训练集的长度为:{}".format(train_data_size))

print("测试集的长度为:{}".format(test_data_size))

train_dataloader = DataLoader(train_data, batch_size=64) # 一批放入64张图片

test_dataloader = DataLoader(test_data, batch_size=64)

cifar10_model = cifar10_Model() # 卷积神经网络模型

cifar10_model = cifar10_model.to(device) # 模型在gpu上跑

loss_fn = nn.CrossEntropyLoss() # 定义损失函数为交叉熵

loss_fn = loss_fn.to(device) # 损失函数在gpu上跑

learning_rate = 0.1 # 学习率

optimizer = torch.optim.SGD(cifar10_model.parameters(), lr=learning_rate) # 定义模型参数优化器

scheduler = StepLR(optimizer, step_size=10, gamma=0.1) # 自适应改变学习率,每经过10轮训练,学习率减少90%

train_step = 0 # 定义训练次数

test_step = 0

epoch = 50 # 定义训练轮数

start_time = time.time() # 开始时间

for i in range(epoch): # 30轮训练

print("第{}轮训练开始".format(i+1))

cifar10_model.train() # 将模型置于训练模式下

for data in train_dataloader: # 取图片

imgs, targets = data # 取图片本身及其标签值

writer.add_images("train_data", imgs, train_step) # 在tensorboard中显示图片

imgs = imgs.to(device) # 让图片和标签在gpu上跑

targets = targets.to(device)

outputs = cifar10_model(imgs) # 将图片经过模型

loss = loss_fn(outputs, targets) # 计算输出和标签的损失函数

optimizer.zero_grad() # 优化器梯度置0

loss.backward() # 反向传播

optimizer.step() # 更新参数

train_step += 1

if train_step % 100 == 0:

end_time = time.time() # 结束一张图片训练时间

print("训练次数:{},Loss:{},所用时间:{}".format(train_step, loss, end_time-start_time))

scheduler.step() # 更新学习率

cifar10_model.eval() # 将模型置于测试状态

test_loss = 0 # 定义测试集损失值

test_accuracy = 0 # 定义测试集准确率

with torch.no_grad(): # 测试集不参与模型训练

for data in test_dataloader: # 取数据

imgs, targets = data

imgs = imgs.to(device)

targets = targets.to(device)

outputs = cifar10_model(imgs)

loss = loss_fn(outputs, targets)

test_loss += loss # 计算总损失值

accuracy = (outputs.argmax(1) == targets).sum() # 计算10个输出的最大值是否对应正确的标签,是则置1,否则置0,最后求总和得到10000张图片中预测正确的图片数

test_accuracy += accuracy

print("整体测试集上的Loss:{},Accuracy:{}".format(test_loss, test_accuracy/test_data_size))

writer.add_scalar("test_accuracy", test_accuracy / test_data_size, i+1) # 在tensorboard中显示正确率随训练轮数的曲线

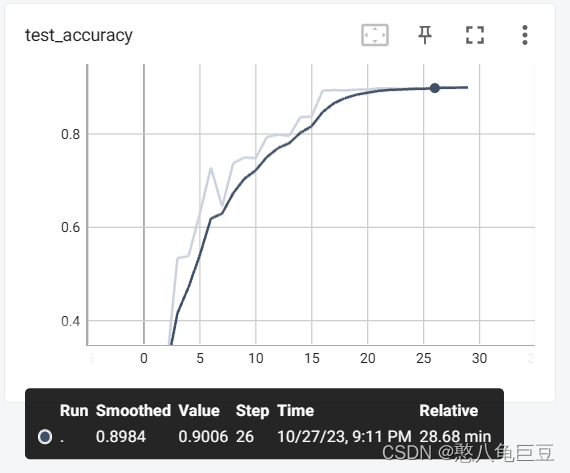

test_step += 1VGG19正确率随训练轮数图像如下所示:

改善程度不是很好,和VGG16的效果差不多,这里本文也尝试过更改许多次学习率步长,基本上和上面的结果相仿,说明此时训练集的大小和训练轮数是主要制约正确率的因素。

4. 总结

优点:VGG卷积神经网络在AlextNet的基础上提出了用3*3卷积层代替5*5卷积层的巧妙想法,在这方面上确实减少了计算量,也证明了加深网络深度可以在一定程度上增加网络的正确率(但当训练数据量和训练轮数不够成为主要制约因素时并不一定)。

缺点:VGG卷积神经网络的全连接层过多,后面有人证明其全连接层并不能起到增加网络正确率的作用,且VGG网络参数、复杂度相较于其它的卷积神经网络都要大,故而对其进行训练和测试比较麻烦,在这里也可以采用pytorch官网上已经训练好的VGG神经网络对测试集进行测试。

更多推荐

已为社区贡献1条内容

已为社区贡献1条内容

所有评论(0)