Building an Enterprise-Grade High-Availability Cluster on K8s

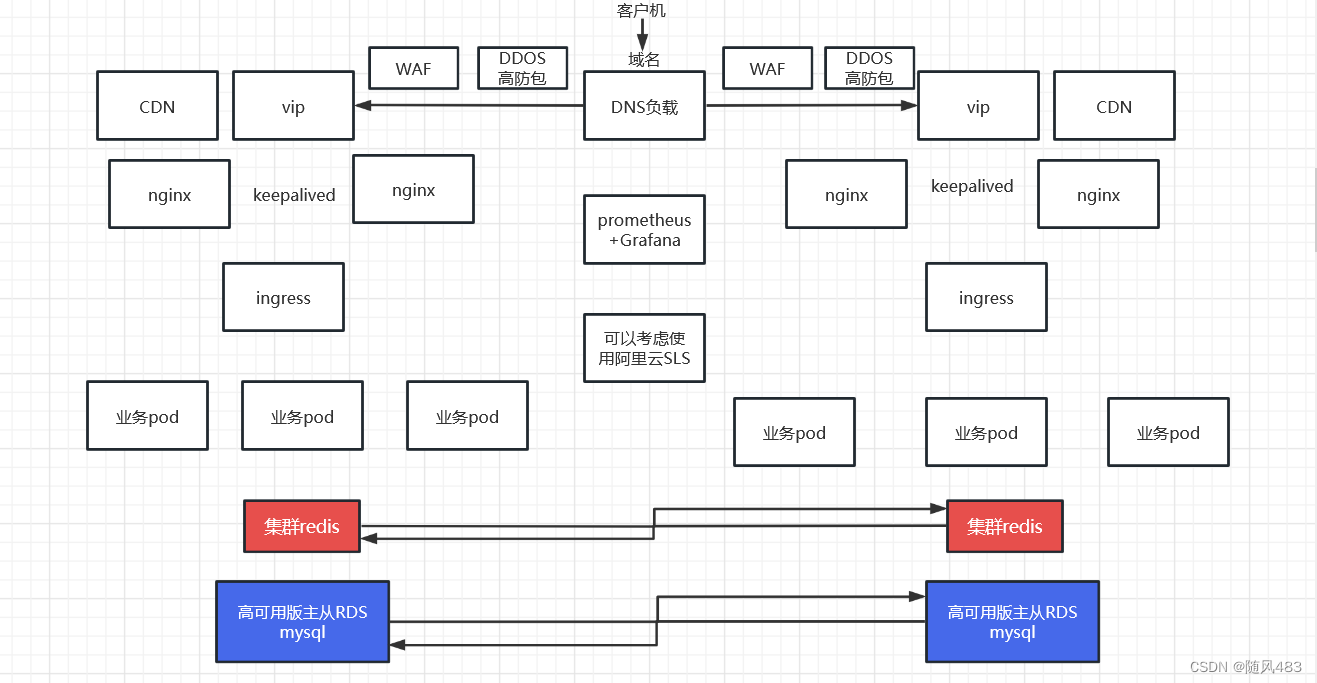


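Contents

1. Plan and design the overall cluster architecture; deploy a single-master k8s cluster (1 master, 2 nodes) and the supporting servers.

3. Package the self-developed web API service as an image and deploy it to k8s as the web application; use HPA to scale horizontally when CPU utilization reaches 50%, with a minimum of 20 and a maximum of 40 pods.

4. Deploy an NFS server that provides data for the whole web cluster, so that every web pod can access it through PV, PVC and volume mounts.

5. Deploy a MySQL cluster with master-slave replication, plus a Redis cluster, to provide database services.

6. Install Prometheus to monitor all clusters (CPU, memory, network bandwidth, web services, database services, disk I/O, etc.), including the k8s cluster.

8. Use probes (liveness, readiness, startup) with the httpGet and exec methods to monitor the web pods and restart them as soon as a problem appears, improving pod reliability.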

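1. Project architecture diagram

1.1 Project overview

The architecture is designed around four concerns: security, stability, efficiency and cost.

1. Security: at the business entry point, Alibaba Cloud WAF and the Alibaba Cloud Anti-DDoS package are used. Together they block most common attacks, such as SQL injection and DDoS (roughly 95% by the author's estimate).

2. Stability: if the project ran in a single data center, a failure there would make the whole project unavailable. The design therefore uses two data centers in the same region, with the databases and Redis instances connected to each other. When one data center fails, traffic can be switched quickly to the other and service restored in the shortest possible time.

3. Efficiency: suppose the business data center is in Guangzhou. As the user base grows and spreads across the country, users in distant areas such as Xinjiang and Hebei may experience slow access. Purchasing Alibaba Cloud CDN avoids this regional latency problem. Monitoring and alerting are unified on Prometheus + Grafana, and Alibaba Cloud SLS collects the logs of both data centers so that problems can be located quickly.

4. Cost: keep costs down and reduce losses while still guaranteeing high availability.

1.2 Project notes

Because this project is built on local virtual machines, CDN, WAF, Anti-DDoS and SLS were not purchased; only the k8s cluster itself is built here. In a production environment those services can be added.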

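2. Project steps

1. Plan and design the overall cluster architecture; deploy a single-master k8s cluster (1 master, 2 nodes) and the supporting servers.

1.1 Deploy the k8s cluster

Note: each step below is marked as either "every machine" or "master only".

1. Disable the firewall and SELinux (every machine)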

[root@scmaster ~]# service firewalld stop

Redirecting to /bin/systemctl stop firewalld.service

[root@scmaster ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

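[root@scmaster ~]# vim /etc/selinux/config

SELINUX=disabled

[root@scmaster ~]# getenforce

Enforcing

The change to /etc/selinux/config only takes effect after a reboot, which is why getenforce still reports Enforcing here. Run setenforce 0 to switch to permissive mode immediately.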

2. Install Docker (every machine)

Run on every server, both the master and the nodes

Remove any previously installed Docker packages; skip this if Docker was never installed

yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine

Install the yum utilities and download the docker-ce.repo file

[root@cali ~]# yum install -y yum-utils

[root@cali ~]# yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo

The downloaded docker-ce.repo file is stored in /etc/yum.repos.d

[root@cali yum.repos.d]# pwd

/etc/yum.repos.d

[root@cali yum.repos.d]# ls

CentOS-Base.repo CentOS-Debuginfo.repo CentOS-Media.repo CentOS-Vault.repo docker-ce.repo

CentOS-CR.repo CentOS-fasttrack.repo CentOS-Sources.repo CentOS-x86_64-kernel.repo nginx.repo

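Install the docker-ce packages

[root@cali yum.repos.d]# yum install docker-ce-20.10.18 docker-ce-cli-20.10.18 containerd.io docker-compose-plugin -y

Docker is a container engine, that is, software for managing containers:

docker-ce is the server side (the daemon)

docker-ce-cli is the client side (the command-line client)

docker-compose-plugin is the Compose plugin, used to start many containers in one batch on a single machine

containerd.io is the low-level runtime that actually starts the containers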

[root@scmaster ~]# docker --version

Docker version 20.10.18, build b40c2f6

4. Start the Docker service (every machine)

[root@scmaster ~]# systemctl start docker

[root@scmaster ~]# ps aux|grep docker

root 53288 1.5 2.3 1149960 43264 ? Ssl 15:11 0:00 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

root 53410 0.0 0.0 112824 984 pts/0 S+ 15:11 0:00 grep --color=auto docker

5. Enable the Docker service at boot

[root@scmaster ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

3. Configure Docker to use systemd as the default cgroup driver

Run the script below on every server (master and nodes); it creates the file /etc/docker/daemon.json

cat <<EOF > /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

# Restart Docker

[root@scmaster docker]# systemctl restart docker

[root@web1 yum.repos.d]# cat /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

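Disable swap

By default the kubelet refuses to start while swap is enabled, and swapping also degrades performance.

Run on every server

swapoff -a # disable swap until the next reboot

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab # disable permanently by commenting out the swap entry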
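The sed command above comments out every line containing " swap ", so running it twice adds a second "#" to the same line. The sketch below is one possible idempotent variant, not the author's command. The function name comment_out_swap and the sample fstab contents are made up for illustration, and GNU sed (for -i) is assumed. It works on a throwaway file rather than the real /etc/fstab.

```shell
#!/bin/sh
# Comment out active swap entries in an fstab-style file.
# Lines that already start with '#' are skipped, so re-running is harmless.
comment_out_swap() {
    # $1: path of the file to edit in place (GNU sed -i assumed)
    sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Demonstration on a temporary file with hypothetical contents.
demo_fstab=$(mktemp)
cat > "$demo_fstab" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

comment_out_swap "$demo_fstab"
comment_out_swap "$demo_fstab"   # second run changes nothing
cat "$demo_fstab"
```

On a real host the call would be comment_out_swap /etc/fstab, run as root after swapoff -a.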

Add hostname mappings to /etc/hosts (every machine)

[root@prometheus ~]# cat >> /etc/hosts << EOF
192.168.159.137 prometheus
192.168.159.131 web1
192.168.159.132 web2
192.168.159.133 web3
EOF

Adjust kernel parameters (every machine)

cat <<EOF >> /etc/sysctl.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_nonlocal_bind = 1
net.ipv4.ip_forward = 1
vm.swappiness=0
EOF

cat /etc/sysctl.conf (every machine)

# sysctl settings are defined through files in

# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.

#

# Vendors settings live in /usr/lib/sysctl.d/.

# To override a whole file, create a new file with the same in

# /etc/sysctl.d/ and put new settings there. To override

# only specific settings, add a file with a lexically later

# name in /etc/sysctl.d/ and put new settings there.

#

# For more information, see sysctl.conf(5) and sysctl.d(5).

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

vm.swappiness=0

Run on every machine

[root@prometheus ~]# sysctl -p

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

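6. Install kubeadm, kubelet and kubectl (every machine)

kubeadm: the cluster bootstrapping tool. kubeadm init is run on the master to create the cluster and kubeadm join is run on each node to add it; behind the scenes it performs a large number of steps to bring k8s up.

kubelet: an agent that runs on every node in the cluster. It makes sure that containers are running in Pods, by telling the container runtime (Docker here) to start and manage them.

kubectl: the command-line tool for operating the cluster. It sends requests to the API server on the master, which in turn tells the nodes what to do.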

# Add the Kubernetes yum repository (every machine)

cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# Install kubeadm, kubelet and kubectl at a pinned version (every machine)

yum install -y kubelet-1.23.6 kubeadm-1.23.6 kubectl-1.23.6

Pinning the version is recommended: starting with 1.24, Kubernetes removed dockershim, so Docker is no longer the default container runtime.

The following page describes a workaround:

https://www.docker.com/blog/dockershim-not-needed-docker-desktop-with-kubernetes-1-24/

# Enable kubelet at boot: it is the k8s agent on each node and must always be running (every machine)

systemctl enable kubelet

# Pull the coredns:1.8.4 image in advance on every machine; it is needed later

[root@master ~]# docker pull coredns/coredns:1.8.4

[root@master ~]# docker tag coredns/coredns:1.8.4 registry.aliyuncs.com/google_containers/coredns:v1.8.4

Deploy the Kubernetes master

Run on the master host

# The initialization is performed on the master server

[root@master ~]# kubeadm init \
    --apiserver-advertise-address=192.168.237.180 \
    --image-repository registry.aliyuncs.com/google_containers \
    --service-cidr=10.1.0.0/16 \
    --pod-network-cidr=10.244.0.0/16

# --apiserver-advertise-address must be the master's IP

# --service-cidr string Use alternative range of IP address for service VIPs. (default "10.96.0.0/12") These virtual IPs are what services are exposed on (implemented with DNAT)

# --pod-network-cidr string Specify range of IP addresses for the pod network. If set, the control plane will automatically allocate CIDRs for every node.

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user

(run these three commands on the master)

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

(as root, this command can be run as well)

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on eac

(finally, copy the generated command below and run it on each node)

kubeadm join 192.168.159.137:6443 --token 4aw7ag.a8qzacdm0jcd3hxs \

--discovery-token-ca-cert-hash sha256:09511c561866693a9e7f574c1162b3bc28c7
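The kubeadm init command above passes two address ranges, --service-cidr and --pod-network-cidr. They should not overlap each other or the network the nodes live on. The following is a minimal sketch of such a check in plain shell arithmetic. The helper names ip_to_int, cidr_range and cidrs_overlap are invented for this illustration, and the /24 node network is an assumption, since the post only gives the master's address.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    local IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print "start end" (as integers) of the address range covered by a CIDR.
cidr_range() {
    local ip bits mask start
    ip=$(ip_to_int "${1%/*}")
    bits=${1#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    start=$(( ip & mask ))
    echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Succeed (exit status 0) when the two CIDRs share at least one address.
cidrs_overlap() {
    # After set --: $1/$2 are start/end of the first range, $3/$4 of the second.
    set -- $(cidr_range "$1") $(cidr_range "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

service_cidr=10.1.0.0/16        # from --service-cidr above
pod_cidr=10.244.0.0/16          # from --pod-network-cidr above
node_cidr=192.168.237.0/24      # assumption: a /24 around the advertise address

for pair in "$service_cidr $pod_cidr" "$service_cidr $node_cidr" "$pod_cidr $node_cidr"; do
    set -- $pair
    if cidrs_overlap "$1" "$2"; then
        echo "OVERLAP: $1 and $2"
    else
        echo "ok: $1 and $2 do not overlap"
    fi
done
```

With the values used in this post, all three pairs are reported as not overlapping.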

Join the k8s cluster (on each node server)

2. Deploy the JumpServer bastion host and connect the nodes to it

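1. Download the one-step installer provided by the official JumpServer release (configure the Alibaba yum mirror beforehand)

curl -sSL https://github.com/jumpserver/jumpserver/releases/download/v2.24.0/quick_start.sh | bash

2. Start the JumpServer service

cd /opt/jumpserver-installer-v2.24.0 ## (default installation directory)

# Start

./jmsctl.sh start ## or use ./jmsctl.sh restart/down/uninstall to manage the service

3. Check that the images and containers exist

docker images

## Verification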

REPOSITORY TAG IMAGE ID CREATED SIZE

jumpserver/mariadb 10.6 aac2cf878de9 2 months ago 405MB

jumpserver/redis 6.2 48da0c367062 2 months ago 113MB

jumpserver/web v2.24.0 a9046484de3d 6 months ago 416MB

jumpserver/core v2.24.0 83b8321cf9e0 6 months ago 1.84GB

jumpserver/koko v2.24.0 708386a1290e 6 months ago 770MB

jumpserver/lion v2.24.0 81602523a0ac 6 months ago 351MB

jumpserver/magnus v2.24.0 e0a90a2217ad 6 months ago 157MB

docker ps | grep jumpser

## Verification: check that the containers are healthy

1c26e0acbc8e jumpserver/core:v2.24.0 "./entrypoint.sh sta…" 8 hours ago Up 8 hours (healthy) 8070/tcp, 8080/tcp

d544ec4a155d jumpserver/magnus:v2.24.0 "./entrypoint.sh" 8 hours ago Up 8 hours (healthy) 15211-15212/tcp, 0.0.0.0:33060-33061->33060-33061/tcp, :::33060-33061->33060-33061/tcp, 54320/tcp, 0.0.0.0:63790->63790/tcp, :::63790->63790/tcp jms_magnus

1d409d8d4a62 jumpserver/lion:v2.24.0 "./entrypoint.sh" 8 hours ago Up 8 hours (healthy) 4822/tcp

b6bbd8bf21e8 jumpserver/koko:v2.24.0 "./entrypoint.sh" 8 hours ago Up 8 hours (healthy) 0.0.0.0:2222->2222/tcp, :::2222->2222/tcp, 5000/tcp

5774d0475eef jumpserver/web:v2.24.0 "/docker-entrypoint.…" 8 hours ago Up 8 hours (healthy) 0.0.0.0:80->80/tcp, :::80->80/tcp

18c1f9eecbaf jumpserver/core:v2.24.0 "./entrypoint.sh sta…" 8 hours ago Up 8 hours (healthy) 8070/tcp, 8080/tcp

2767e8938563 jumpserver/mariadb:10.6 "docker-entrypoint.s…" 19 hours ago Up 8 hours (healthy) 3306/tcp

635f74cc0e43 jumpserver/redis:6.2 "docker-entrypoint.s…" 19 hours ago Up 8 hours (healthy) 6379/tcp

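4. Browse to the IP address of the virtual machine where JumpServer is deployed

http://192.168.xxx.xxx

Default user: admin; default password: admin (superuser)

5. Connect the master and node machines (see the JumpServer user guide)

3. Package the self-developed web API service as an image and deploy it to k8s as the web application; use HPA to scale horizontally when CPU utilization reaches 50%, with a minimum of 20 and a maximum of 40 pods.

3.1 The HPA configuration files are as follows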

[root@k8s-master HPA]# cat hpa.yaml

apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  minReplicas: 20
  maxReplicas: 40
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50

[root@k8s-master HPA]# cat nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest # replace it with your exact <image_name:tag>
        ports:
        - containerPort: 80
        resources:
          requests: # required: without a CPU request the HPA cannot compute utilization
            cpu: 500m

[root@k8s-master HPA]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

nginx-hpa Deployment/nginx 0%/50% 20 40 3 20h
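To get a feel for how the 50% target, the minimum of 20 and the maximum of 40 interact, the scaling rule documented for the HPA, desired = ceil(currentReplicas * currentUtilization / targetUtilization), can be sketched in shell arithmetic. This is a simplified illustration: the function name desired_replicas is made up, and the real controller additionally applies a tolerance band and stabilization behavior that are not modeled here.

```shell
#!/bin/sh
# desired = ceil(current_replicas * current_util / target_util), clamped to [min, max]
desired_replicas() {
    # $1 current replicas, $2 current average CPU utilization (%),
    # $3 target utilization (%), $4 minReplicas, $5 maxReplicas
    local want=$(( ($1 * $2 + $3 - 1) / $3 ))   # integer ceiling division
    [ "$want" -lt "$4" ] && want=$4
    [ "$want" -gt "$5" ] && want=$5
    echo "$want"
}

desired_replicas 20 80 50 20 40   # 32: 20 pods at 80% need 32 pods to reach 50%
desired_replicas 20 10 50 20 40   # 20: the raw result 4 is raised to minReplicas
desired_replicas 30 90 50 20 40   # 40: the raw result 54 is capped at maxReplicas
```

Utilization is measured against the CPU request (500m in nginx.yaml above), which is why the request must be set.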

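4. Deploy an NFS server that provides data for the whole web cluster, so that every web pod can access it through PV, PVC and volume mounts.

4.1 Set up the NFS server

Data path when using NFS:

pod-->volume-->pvc-->pv-->nfs

Install nfs-utils on every node (the nodes need the NFS client in order to mount the share)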

yum install nfs-utils -y

[root@master 55]# service nfs restart

2. Configure the shared directory

[root@master 55]# vim /etc/exports

[root@master 55]# cat /etc/exports

/sc/web 192.168.2.0/24(rw,no_root_squash,sync)

[root@master 55]#

3. Create the shared directory and an index.html page

[root@master 55]# mkdir /sc/web -p

[root@master 55]# cd /sc/web/

[root@master web]# echo "welcome to sanchuang" >index.html

[root@master web]# ls

index.html

[root@master web]# cat index.html

welcome to sanchuang

[root@master web]#

4. Refresh NFS or re-export the shared directories

[root@master web]# exportfs -a # export all shared directories

[root@master web]# exportfs -v # list the exported directories

/sc/web 192.168.2.0/24(sync,wdelay,hide,no_subtree_check,sec=sys,rw,secure,no_root_squash,no_all_squash)

[root@master web]# exportfs -r # re-export all shared directories

[root@master web]# exportfs -v

/sc/web 192.168.2.0/24(sync,wdelay,hide,no_subtree_check,sec=sys,rw,secure,no_root_squash,no_all_squash)

[root@master web]#

[root@master web]# service nfs restart # restart the NFS service

Redirecting to /bin/systemctl restart nfs.service

Test that the share can be mounted

[root@web1 ~]# mkdir /sc

[root@web1 ~]# mount 192.168.159.134:/web /sc

[root@web1 ~]# df -Th

192.168.159.134:/web nfs4 17G 2.6G 15G 16% /sc

5. Create a PV that uses the shared directory on the NFS server

[root@master 55]# vim nfs-pv.yaml

[root@master 55]# cat nfs-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: sc-nginx-pv
  labels:
    type: sc-nginx-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  storageClassName: nfs # storage class name; the PVC must request the same one
  nfs:
    path: "/sc/web" # directory exported by the NFS server
    server: 192.168.2.130 # IP address of the NFS server
    readOnly: false # mount read-write

[root@prometheus pv]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

sc-nginx-pv 10Gi RWX Retain Available nfs 32s

6. Create a PVC that binds to the PV

[root@master 55]# vim pvc-nfs.yaml

[root@master 55]# cat pvc-nfs.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: sc-nginx-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs # bind to a PV whose storage class is nfs

[root@prometheus pv]# kubectl apply -f pvc-nfs.yaml

persistentvolumeclaim/sc-nginx-pvc created

[root@prometheus pv]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

sc-nginx-pvc Bound sc-nginx-pv 10Gi RWX nfs 4s

7. Create a pod that uses the PVC

[root@master 55]# cat pod-nfs.yaml

apiVersion: v1
kind: Pod
metadata:
  name: sc-pv-pod-nfs
spec:
  volumes:
  - name: sc-pv-storage-nfs
    persistentVolumeClaim:
      claimName: sc-nginx-pvc
  containers:
  - name: sc-pv-container-nfs
    image: nginx
    ports:
    - containerPort: 80
      name: "http-server"
    volumeMounts:
    - mountPath: "/usr/share/nginx/html"
      name: sc-pv-storage-nfs

[root@prometheus pv]# kubectl apply -f pod.yaml

pod/sc-pv-pod-nfs created

[root@prometheus pv]# kubectl get pod

sc-pv-pod-nfs 1/1 Running 0 28s

Test: the page returned is the content stored on the NFS share

[root@prometheus pv]# curl 10.244.3.84

wel come to qiuchao feizi feizi

Modify the content on the NFS server and access it again

[root@prometheus pv]# curl 10.244.3.84

wel come to qiuchao feizi feizi

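qiuchaochao

5. Deploy a MySQL cluster with master-slave replication, plus a Redis cluster, to provide database services

Master-slave replication experiment

Machines used: web1 and web3

web3 already has binary logging enabled, so web3 is chosen as the master and web1 as the slave

Binary log configuration on web3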

[root@web3 backup]# cat /etc/my.cnf

[mysqld_safe]

[client]

socket=/data/mysql/mysql.sock

[mysqld]

socket=/data/mysql/mysql.sock

port = 3306

open_files_limit = 8192

innodb_buffer_pool_size = 512M

character-set-server=utf8

log_bin

server_id = 1

expire_logs_days = 3

[mysql]

auto-rehash

prompt=\u@\d \R:\m mysql>

Check that binary logging is enabled

root@(none) 11:44 mysql>show variables like 'log_bin';

+---------------+-------+

| Variable_name | Value |

+---------------+-------+

| log_bin | ON |

+---------------+-------+

1 row in set (0.02 sec)

Keep the master and slave data consistent

Back up the data on the master

[root@web3 backup]# mysqldump -uroot -p'Sanchuang123#' --all-databases > all_db.SAQL

mysqldump: [Warning] Using a password on the command line interface can be insecure.

[root@web3 backup]# ls

all_db.SAQL check_pwd.sh my.cnf passwd qiuchao.sql

Copy the dump to the /root directory on the slave

[root@web3 backup]# scp all_db.SAQL root@192.168.159.131:/root

all_db.SAQL 100% 885KB 66.2MB/s 00:00

On the slave, import the dump

[root@web1 ~]# mysql -uroot -p'Sanchuang123#' < all_db.SAQL

mysql: [Warning] Using a password on the command line interface can be insecure.

Purge the binary logs on the master

root@(none) 15:17 mysql>reset master;

Query OK, 0 rows affected (0.01 sec)

root@(none) 15:18 mysql>show master status;

+-----------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+-----------------+----------+--------------+------------------+-------------------+

| web3-bin.000001 | 154 | | | |

+-----------------+----------+--------------+------------------+-------------------+

1 row in set (0.00 sec)

On the master, create an authorized user that the slave uses to replicate the binary log

grant replication slave on *.* to 'renxj'@'192.168.159.%' identified by 'Sanchuang123#';

Query OK, 0 rows affected, 1 warning (0.00 sec)

On the slave, configure the master info

root@(none) 15:16 mysql>CHANGE MASTER TO MASTER_HOST='192.168.159.133',
MASTER_USER='renxj',
MASTER_PASSWORD='Sanchuang123#',
MASTER_PORT=3306,
MASTER_LOG_FILE='web3-bin.000001',
MASTER_LOG_POS=154;

Query OK, 0 rows affected, 2 warnings (0.02 sec)

Check the slave status on the slave

show slave status\G;

Slave_IO_State:

Master_Host: 192.168.159.133

Master_User: renxj

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: web3-bin.000001

Read_Master_Log_Pos: 154

Relay_Log_File: web1-relay-bin.000001

Relay_Log_Pos: 4

Relay_Master_Log_File: web3-bin.000001

Slave_IO_Running: No

Slave_SQL_Running: No
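Both Slave_IO_Running and Slave_SQL_Running stay at No until the slave threads are started; once replication is working, both should read Yes. Checking this by eye is easy to get wrong, so a script can do it instead. The helper below is a small sketch for illustration (the name replication_healthy is made up): it reads the text of SHOW SLAVE STATUS\G on standard input and succeeds only when both threads report Yes.

```shell
#!/bin/sh
# Read "SHOW SLAVE STATUS\G" output on stdin.
# Exit status 0 only if both replication threads report Yes.
replication_healthy() {
    awk -F': *' '
        { sub(/[ \t\r]+$/, "", $2) }                 # drop trailing whitespace
        $1 ~ /Slave_IO_Running$/  { io = $2 }
        $1 ~ /Slave_SQL_Running$/ { sql = $2 }       # does not match Slave_SQL_Running_State
        END { if (io == "Yes" && sql == "Yes") exit 0; exit 1 }
    '
}

# Sample input mirroring the fields shown in this post.
stopped='Slave_IO_Running: No
Slave_SQL_Running: No'
running='Slave_IO_Running: Yes
Slave_SQL_Running: Yes'

for state in "$stopped" "$running"; do
    if printf '%s\n' "$state" | replication_healthy; then
        echo "replication OK"
    else
        echo "replication NOT running"
    fi
done
```

On the slave it could be fed from the client, for example mysql -uroot -p -e 'SHOW SLAVE STATUS\G' | replication_healthy.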

Start the slave threads on the slave

root@(none) 15:31 mysql> start slave;

root@(none) 15:31 mysql>show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.159.133

Master_User: renxj

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: web3-bin.000001

Read_Master_Log_Pos: 450

Relay_Log_File: web1-relay-bin.000002

Relay_Log_Pos: 615

Relay_Master_Log_File: web3-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

6. Install Prometheus to monitor all clusters (CPU, memory, network bandwidth, web services, database services, disk I/O, etc.), including the k8s cluster

[root@k8s-master prometheus-grafana]# kubectl get pod -n monitor-sa

NAME READY STATUS RESTARTS AGE

monitoring-grafana-7bf44d7b64-9hsks 1/1 Running 1 (5h36m ago) 3d1h

node-exporter-bz8x5 1/1 Running 6 (5h36m ago) 4d1h

node-exporter-hrh2m 1/1 Running 3 (5h36m ago) 4d1h

node-exporter-mf5d6 1/1 Running 4 (5h36m ago) 4d1h

prometheus-server-6885985cfc-fr7k9 1/1 Running 1 (5h36m ago) 3d1h

[root@k8s-master prometheus-grafana]# kubectl get service -n monitor-sa

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager ClusterIP 10.1.245.239 <none> 80/TCP 4d5h

monitoring-grafana NodePort 10.1.92.54 <none> 80:30503/TCP 4d1h

prometheus NodePort 10.1.240.51 <none> 9090:31066/TCP 4d23h

Prometheus + Grafana dashboard (screenshot)

7. Use Ingress to load-balance the web service

Install and deploy the ingress controller from a YAML file

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.7.0/deploy/static/provider/cloud/deploy.yaml

[root@prometheus ingress]# ls

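deploy.yaml (the newer manifest)

The images were downloaded on Teacher Feng's server in Singapore, exported, and transferred back to mainland China for use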

[root@k8smaster 4-4]# ls

ingress-controller-deploy.yaml

kube-webhook-certgen-v1.1.0.tar.gz

sc-ingress.yaml

ingress-nginx-controllerv1.1.0.tar.gz

nginx-deployment-nginx-svc-2.yaml

sc-nginx-svc-1.yaml

ingress-controller-deploy.yaml: the YAML file used to deploy the ingress controller

ingress-nginx-controllerv1.1.0.tar.gz: the ingress-nginx-controller image

kube-webhook-certgen-v1.1.0.tar.gz: the kube-webhook-certgen image

sc-ingress.yaml: the configuration file that creates the Ingress

sc-nginx-svc-1.yaml: YAML that starts the sc-nginx-svc service and its pods

nginx-deployment-nginx-svc-2.yaml: YAML that starts the sc-nginx-svc-2 service and its pods

1. Copy the images to all node machines

2. Load the images (all machines)

[root@web1 ~]# docker images|grep nginx-ingress

registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller v1.1.0 ae1a7201ec95 16 months ago 285MB

[root@web1 ~]# docker images|grep kube-webhook

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen v1.1.1 c41e9fcadf5a 18 months ago 47.7MB

3. Start the ingress controller with ingress-controller-deploy.yaml

[root@prometheus 2]# kubectl apply -f ingress-controller-deploy.yaml

namespace/ingress-nginx unchanged

serviceaccount/ingress-nginx unchanged

configmap/ingress-nginx-controller unchanged

clusterrole.rbac.authorization.k8s.io/ingress-nginx unchanged

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx unchanged

role.rbac.authorization.k8s.io/ingress-nginx unchanged

rolebinding.rbac.authorization.k8s.io/ingress-nginx unchanged

service/ingress-nginx-controller-admission unchanged

service/ingress-nginx-controller unchanged

deployment.apps/ingress-nginx-controller configured

ingressclass.networking.k8s.io/nginx unchanged

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission configured

serviceaccount/ingress-nginx-admission unchanged

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

role.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

job.batch/ingress-nginx-admission-create unchanged

job.batch/ingress-nginx-admission-patch unchanged

[root@prometheus 2]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.1.11.51 <none> 80:31493/TCP,443:30366/TCP 103s

ingress-nginx-controller-admission ClusterIP 10.1.243.244 <none> 443/TCP 103s

[root@prometheus 2]# kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-g4vcz 0/1 Completed 0 2m24s

ingress-nginx-admission-patch-wqlh6 0/1 Completed 1 2m24s

ingress-nginx-controller-7cd558c647-b5pzx 0/1 Pending 0 2m25s

ingress-nginx-controller-7cd558c647-wl9n4 1/1 Running 0 2m25s

[root@prometheus 2]# kubectl get service -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.1.82.223 <none> 80:32222/TCP,443:31427/TCP 112s

ingress-nginx-controller-admission ClusterIP 10.1.35.142 <none> 443/TCP 113s

[root@prometheus 2]# cat sc-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sc-ingress
  annotations:
    kubernets.io/ingress.class: nginx # misspelled key ("kubernets"), so it is ignored; spec.ingressClassName selects the class
spec:
  ingressClassName: nginx
  rules:
  - host: www.feng.com
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: sc-nginx-svc # the service to bind to
            port:
              number: 80
  - host: www.zhang.com
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: sc-nginx-svc-2
            port:
              number: 80

Create the pods and publish service 1

[root@k8smaster 4-4]# cat sc-nginx-svc-1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: sc-nginx-deploy
  labels:
    app: sc-nginx-feng
spec:
  replicas: 3
  selector:
    matchLabels:
      app: sc-nginx-feng
  template:
    metadata:
      labels:
        app: sc-nginx-feng
    spec:
      containers:
      - name: sc-nginx-feng
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: sc-nginx-svc
  labels:
    app: sc-nginx-svc
spec:
  selector:
    app: sc-nginx-feng
  ports:
  - name: name-of-service-port
    protocol: TCP
    port: 80
    targetPort: 80

[root@prometheus 2]# kubectl apply -f sc-nginx-svc-1.yaml

deployment.apps/sc-nginx-deploy created

service/sc-nginx-svc created

[root@prometheus 2]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 33m

sc-nginx-svc ClusterIP 10.1.29.219 <none> 80/TCP 12s

[root@prometheus 2]# kubectl apply -f nginx-deployment-nginx-svc-2.yaml

deployment.apps/nginx-deployment created

service/sc-nginx-svc-2 created

[root@prometheus 2]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 51m

sc-nginx-svc ClusterIP 10.1.29.219 <none> 80/TCP 17m

sc-nginx-svc-2 ClusterIP 10.1.183.100 <none> 80/TCP 7s

[root@prometheus 2]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

sc-ingress nginx www.feng.com,www.zhang.com 192.168.92.140,192.168.92.142 80 20m

[root@prometheus 2]# kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-7l4v5 0/1 Completed 0 13m

ingress-nginx-admission-patch-bxpfq 0/1 Completed 1 13m

ingress-nginx-controller-7cd558c647-jctwf 1/1 Running 0 13m

ingress-nginx-controller-7cd558c647-sws4b 1/1 Running 0 4m29s

Look up the host port exposed by the ingress controller's Service; accessing a node on that port verifies whether the ingress controller is load balancing

[root@prometheus 2]# kubectl get service -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.1.236.56 <none> 80:30910/TCP,443:30609/TCP 26m

ingress-nginx-controller-admission ClusterIP 10.1.187.223 <none> 443/TCP 26m

Access the sites by domain name from a host machine

[root@nfs ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.159.134 NFS

192.168.92.140 www.feng.com

192.168.92.142 www.zhang.com

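Because the load balancing is configured per domain name, the sites must be accessed by domain name, not by IP address

The ingress controller also load-balances at the HTTP level, that is, layer 7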

[root@nfs ~]# curl www.feng.com

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For

online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Access www.zhang.com

[root@nfs ~]# curl www.zhang.com

<html>

<head><title>503 Service Temporarily Unavailable</title></head>

<body>

<center><h1>503 Service Temporarily Unavailable</h1></center>

<hr><center>nginx</center>

</body>

</html>

Service 2 uses PV + PVC + NFS

[root@prometheus 2]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

sc-nginx-pv 10Gi RWX Retain Bound default/sc-nginx-pvc nfs 21h

task-pv-volume 10Gi RWO Retain Bound default/task-pv-claim manual 25h

After refreshing the NFS server, the request finally succeeds

[root@nfs ~]# curl www.zhang.com

wel come to qiuchao feizi feizi

qiuchaochao

8. Use probes (liveness, readiness, startup) with the httpGet and exec methods to monitor the web pods and restart them as soon as a problem appears, improving pod reliability.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest # replace it with your exact <image_name:tag>
        ports:
        - containerPort: 80
        resources:
          requests: # required: without a CPU request the HPA cannot compute utilization
            cpu: 500m
        livenessProbe:
          tcpSocket:
            port: 80
          initialDelaySeconds: 15
          periodSeconds: 20

9. Stress-test the whole k8s cluster and the related servers

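Stress testing with ab

Install ab

[root@lb conf]# yum install httpd-tools -y

-n: total number of requests

-c: concurrency (number of requests in flight at once)

[root@lb conf]# ab -c 100 -n 1000 http://192.168.159.135/

Concurrency 100, 1000 requests in total, sent to the load balancer

That is, 100 simulated concurrent clients issuing 1000 requests altogether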

Requests per second: 3206.62 [#/sec] (mean)

Time per request: 31.185 [ms] (mean)

Time per request: 0.312 [ms] (mean, across all concurrent requests)
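The three figures reported by ab are tied together: the first "Time per request" is concurrency * 1000 / requests-per-second, and the second (across all concurrent requests) is 1000 / requests-per-second. The sketch below recomputes both from the numbers in this run as a sanity check. The function names are made up for illustration, and awk is used for the floating-point arithmetic.

```shell
#!/bin/sh
# Mean time per request (ms) as seen by one client: concurrency * 1000 / rps
time_per_request() {
    # $1 concurrency, $2 requests per second
    awk -v c="$1" -v rps="$2" 'BEGIN { printf "%.3f\n", c * 1000 / rps }'
}

# Mean time per request (ms) across all concurrent clients: 1000 / rps
time_per_request_all() {
    # $1 requests per second
    awk -v rps="$1" 'BEGIN { printf "%.3f\n", 1000 / rps }'
}

time_per_request 100 3206.62     # 31.185, matching the first "Time per request" line
time_per_request_all 3206.62     # 0.312, matching the second line
```

Because of this relationship, raising -c without a matching rise in requests per second shows up directly as a longer per-client time per request.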
