Installing the Longhorn storage controller on k8s with helm

The Longhorn storage controller depends on the iSCSI tools, so they must be installed on every node in the k8s cluster.


1. Install helm

# Install on the master node

yum -y install wget

wget https://get.helm.sh/helm-v3.6.3-linux-amd64.tar.gz # download

scp helm-v3.6.3-linux-amd64.tar.gz ip:/root # copy to the other servers
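When there are several other servers, the copy step can be wrapped in a loop. This is only a sketch: `distribute_helm` is a hypothetical helper name, and the host names passed to it are placeholders for your own machines.

```shell
# Hypothetical helper: copy the helm tarball to /root on each host given
# as an argument. Stops at the first failed copy.
distribute_helm() {
  tarball="$1"
  shift
  for host in "$@"; do
    scp "$tarball" "${host}:/root/" || return 1
  done
}
```

For example, `distribute_helm helm-v3.6.3-linux-amd64.tar.gz master2 master3` would copy the archive to two hosts named master2 and master3 (placeholder names).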

2. Extract and distribute to the other machines (distribution is not needed if the cluster is not set up for high availability)

tar -xf helm-v3.6.3-linux-amd64.tar.gz

cp linux-amd64/helm /usr/local/bin/

mkdir -p $HOME/.cache/helm

mkdir -p $HOME/.config/helm

mkdir -p $HOME/.local/share/helm

helm version

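3. Prepare the base environment

The Longhorn storage controller depends on the iSCSI tools, so they must be installed on every node in the k8s cluster.

## Install on all nodes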

yum -y install iscsi-initiator-utils
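Installing the package does not by itself guarantee that the iSCSI daemon is running. The following is a minimal sketch, assuming a systemd-based node where the service is named iscsid; `ensure_iscsi` is a hypothetical helper name, not part of any tool.

```shell
# Sketch: install the initiator tools if iscsiadm is missing, then enable
# and start the iscsid service (assumes systemd and yum are available).
ensure_iscsi() {
  if ! command -v iscsiadm >/dev/null 2>&1; then
    yum -y install iscsi-initiator-utils || return 1
  fi
  systemctl enable --now iscsid
}
```

Running `ensure_iscsi` as root on each node would then leave the daemon enabled across reboots.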

4. Install longhorn

4.0 Pull the images on every worker node in advance to speed up deployment

docker pull longhornio/csi-attacher:v3.4.0

docker pull longhornio/csi-provisioner:v2.1.2

docker pull longhornio/csi-resizer:v1.3.0

docker pull longhornio/longhorn-engine:v1.4.2

docker pull longhornio/longhorn-instance-manager:v1.4.2

docker pull longhornio/csi-node-driver-registrar:v2.5.0

docker pull longhornio/longhorn-manager:v1.4.2

docker pull longhornio/longhorn-ui:v1.4.2
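The same list can be pulled in a loop, which makes it easier to repeat on each node. This is a sketch only: the image tags are copied from the commands above, and `pull_longhorn_images` is a hypothetical helper name.

```shell
# Image list copied from the docker pull commands above.
LONGHORN_IMAGES="
longhornio/csi-attacher:v3.4.0
longhornio/csi-provisioner:v2.1.2
longhornio/csi-resizer:v1.3.0
longhornio/longhorn-engine:v1.4.2
longhornio/longhorn-instance-manager:v1.4.2
longhornio/csi-node-driver-registrar:v2.5.0
longhornio/longhorn-manager:v1.4.2
longhornio/longhorn-ui:v1.4.2
"

# Hypothetical helper: pull every image in the list, stopping at the first failure.
pull_longhorn_images() {
  for image in $LONGHORN_IMAGES; do
    docker pull "$image" || return 1
  done
}
```

Calling `pull_longhorn_images` on a node pulls all eight images in order.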

4.1 Add the helm chart repository

## Run on the master

helm repo add longhorn https://charts.longhorn.io

helm repo update

4.2 Install longhorn

kubectl create namespace longhorn-system # create the namespace that will hold all longhorn pods

helm search repo longhorn

helm install longhorn longhorn/longhorn -n longhorn-system

##helm uninstall longhorn -n longhorn-system # uninstall (helm uninstall takes the release name, not the chart)
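Without a version flag, helm install uses the newest chart in the repository, which may not match the v1.4.2 images pulled in step 4.0. A sketch of pinning the chart version follows; it assumes that chart version 1.4.2 corresponds to those images, which is worth confirming with `helm search repo longhorn --versions`. The name `install_longhorn` is a hypothetical helper.

```shell
# Sketch: install a pinned chart version into the longhorn-system namespace.
# The default of 1.4.2 is an assumption based on the image tags in step 4.0.
install_longhorn() {
  chart_version="${1:-1.4.2}"
  helm install longhorn longhorn/longhorn \
    --namespace longhorn-system \
    --version "$chart_version"
}
```

Calling `install_longhorn` with no argument uses the assumed default; passing another version string overrides it.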

4.3 List the releases installed by helm

helm list -A

4.4 Check pod status

kubectl get pod -n longhorn-system

[root@master ~]# kubectl get pod -n longhorn-system -o wide # every pod should be Running, with both numbers in the READY column equal

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

csi-attacher-7bf4b7f996-ffhxm 1/1 Running 0 45m 10.244.166.146 node1 <none> <none>

csi-attacher-7bf4b7f996-kts4l 1/1 Running 0 45m 10.244.104.20 node2 <none> <none>

csi-attacher-7bf4b7f996-vtzdb 1/1 Running 0 45m 10.244.166.142 node1 <none> <none>

csi-provisioner-869bdc4b79-bjf8q 1/1 Running 0 45m 10.244.166.150 node1 <none> <none>

csi-provisioner-869bdc4b79-m2swk 1/1 Running 0 45m 10.244.104.18 node2 <none> <none>

csi-provisioner-869bdc4b79-pmkfq 1/1 Running 0 45m 10.244.166.143 node1 <none> <none>

csi-resizer-869fb9dd98-6ndgb 1/1 Running 0 8m47s 10.244.104.28 node2 <none> <none>

csi-resizer-869fb9dd98-czvzh 1/1 Running 0 45m 10.244.104.16 node2 <none> <none>

csi-resizer-869fb9dd98-pq2p5 1/1 Running 0 45m 10.244.166.149 node1 <none> <none>

csi-snapshotter-7d59d56b5c-85cr6 1/1 Running 0 45m 10.244.104.19 node2 <none> <none>

csi-snapshotter-7d59d56b5c-dlwjk 1/1 Running 0 45m 10.244.166.148 node1 <none> <none>

csi-snapshotter-7d59d56b5c-xsc6s 1/1 Running 0 45m 10.244.166.147 node1 <none> <none>

engine-image-ei-f9e7c473-ld6zp 1/1 Running 0 46m 10.244.104.15 node2 <none> <none>

engine-image-ei-f9e7c473-qw96n 1/1 Running 1 (6m5s ago) 8m13s 10.244.166.154 node1 <none> <none>

instance-manager-e-16be548a213303f54febe8742dc8e307 1/1 Running 0 8m19s 10.244.104.29 node2 <none> <none>

instance-manager-e-1e8d2b6ac4bdab53558aa36fa56425b5 1/1 Running 0 7m58s 10.244.166.155 node1 <none> <none>

instance-manager-r-16be548a213303f54febe8742dc8e307 1/1 Running 0 46m 10.244.104.14 node2 <none> <none>

instance-manager-r-1e8d2b6ac4bdab53558aa36fa56425b5 1/1 Running 0 7m31s 10.244.166.156 node1 <none> <none>

longhorn-admission-webhook-69979b57c4-rt7rh 1/1 Running 0 52m 10.244.166.136 node1 <none> <none>

longhorn-admission-webhook-69979b57c4-s2bmd 1/1 Running 0 52m 10.244.104.11 node2 <none> <none>

longhorn-conversion-webhook-966d775f5-st2ld 1/1 Running 0 52m 10.244.166.135 node1 <none> <none>

longhorn-conversion-webhook-966d775f5-z5m4h 1/1 Running 0 52m 10.244.104.12 node2 <none> <none>

longhorn-csi-plugin-gcngb 3/3 Running 0 45m 10.244.166.145 node1 <none> <none>

longhorn-csi-plugin-z4pr6 3/3 Running 0 45m 10.244.104.17 node2 <none> <none>

longhorn-driver-deployer-5d74696c6-g2p7p 1/1 Running 0 52m 10.244.104.9 node2 <none> <none>

longhorn-manager-j69pn 1/1 Running 0 52m 10.244.166.139 node1 <none> <none>

longhorn-manager-rnzwr 1/1 Running 0 52m 10.244.104.10 node2 <none> <none>

longhorn-recovery-backend-6576b4988d-l4lmb 1/1 Running 0 52m 10.244.104.8 node2 <none> <none>

longhorn-recovery-backend-6576b4988d-v79b4 1/1 Running 0 52m 10.244.166.138 node1 <none> <none>

longhorn-ui-596d5f6876-ms4dn 1/1 Running 0 52m 10.244.166.137 node1 <none> <none>

longhorn-ui-596d5f6876-td7ww 1/1 Running 0 52m 10.244.104.7 node2 <none> <none>

[root@master ~]#
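With this many pods, the check "STATUS is Running and both READY numbers match" can be automated. Below is a minimal sketch that reads `kubectl get pod` output (including its header line) from standard input and prints the names of pods that fail the check; `not_ready_pods` is a hypothetical helper name.

```shell
# Sketch: print the name of every pod whose STATUS is not Running or whose
# READY counts differ. Skips the header line and any blank lines.
not_ready_pods() {
  awk 'NR > 1 && NF >= 3 {
         split($2, ready, "/")
         if ($3 != "Running" || ready[1] != ready[2]) print $1
       }'
}
```

Used as `kubectl get pod -n longhorn-system | not_ready_pods`, it prints nothing once all pods are healthy.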

4.5 Configure the svc

kubectl get svc -n longhorn-system

[root@master ~]# kubectl get svc -n longhorn-system|grep longhorn-frontend # show only the frontend service

longhorn-frontend ClusterIP 10.106.154.54 <none> 80/TCP 55m

[root@master ~]#

## After installation, the svc named longhorn-frontend defaults to ClusterIP,

## so it cannot be reached from outside the cluster network; change this svc to NodePort

kubectl edit svc longhorn-frontend -n longhorn-system

##type: NodePort

# Change type from ClusterIP to NodePort, then save and exit

[root@master ~]# kubectl get svc -n longhorn-system|grep longhorn-frontend # look up the port exposed by the NodePort change

longhorn-frontend NodePort 10.106.154.54 <none> 80:32146/TCP 58m

[root@master ~]#
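The same change can be made without opening an editor, which is handy in scripts. This is a sketch using `kubectl patch` and a jsonpath query; `expose_longhorn_ui` is a hypothetical helper name, and the port Kubernetes assigns will differ from cluster to cluster.

```shell
# Sketch: switch longhorn-frontend to NodePort, then print the assigned port.
expose_longhorn_ui() {
  kubectl patch svc longhorn-frontend -n longhorn-system \
    -p '{"spec":{"type":"NodePort"}}' || return 1
  kubectl get svc longhorn-frontend -n longhorn-system \
    -o jsonpath='{.spec.ports[0].nodePort}'
}
```

Running `expose_longhorn_ui` on the master prints the patch result followed by the node port number.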

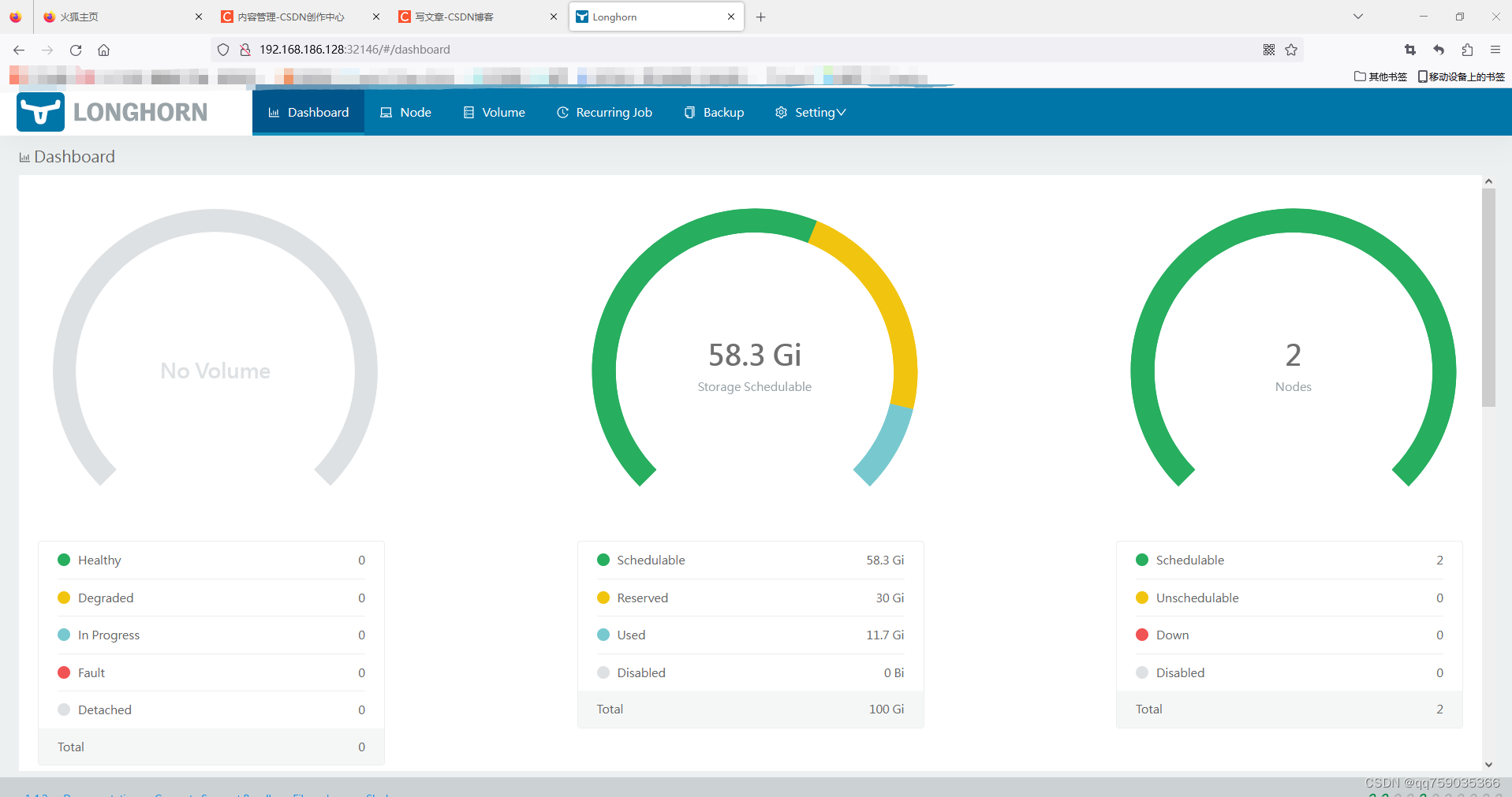

5. Open this port in a browser

http://ip:32146 # replace ip with the address of one of your k8s hosts
