[k8s] Offline deployment of a k8s cluster on Ubuntu 22.04: setting up the software repository and image registry (repo node)


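The previous two posts covered approaches for deploying k8s offline on CentOS 7. This post continues with the second approach, implemented on Ubuntu 22.04.

(The overall approach is, of course, largely the same as in the previous post.)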


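1. Overall approach

Inside the LAN (but outside the k8s cluster), set up one repository node that serves as both the software repository and the image registry. The other nodes fetch everything needed to install k8s from it.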

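Partial references:

- Software repository: building a local source on Ubuntu with dpkg-scanpackages and nginx

- Installing docker: installing docker on Ubuntu

- Deploying a k8s cluster on Ubuntu: installing a k8s cluster on Ubuntu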

2. Installation environment

Virtual machine: VMware Pro 16

Operating system: ubuntu-22.04.2-desktop-amd64

k8s version: v1.27

repo: 192.168.253.176

master: 192.168.253.177

node1: 192.168.253.178

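3. Preparation

This covers setting a static IP, resetting the root password, editing the hosts file, and connecting with Xshell. These steps are not the focus of this post, so they are not described in detail; the relevant commands are listed here for reference.

(Run on all three machines)

# Reset the root password

sudo passwd root

# Enter the new password when prompted (this walkthrough uses 123)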

# Edit the hosts file

echo "192.168.253.177 master" >> /etc/hosts

echo "192.168.253.178 node1" >> /etc/hosts
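Running the `echo ... >> /etc/hosts` lines a second time appends duplicate entries. A minimal sketch of an idempotent variant follows; `add_host` is a hypothetical helper name, not part of the original setup, and it assumes host names without regex metacharacters.

```shell
# add_host IP NAME [FILE]: append "IP NAME" only if NAME is not mapped yet.
# Hypothetical helper; FILE defaults to /etc/hosts.
add_host() {
    ip="$1"; name="$2"; file="${3:-/etc/hosts}"
    # Look for NAME as a whole field on a line that is not commented out.
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage (as root, on all three machines):
#   add_host 192.168.253.177 master
#   add_host 192.168.253.178 node1
```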

# Connect with Xshell (enable SSH access)

apt-get install -y openssh-server ssh vim

service ssh start

ps -e|grep ssh

vim /etc/ssh/sshd_config

# In sshd_config, set the following two options:

PermitRootLogin yes

StrictModes yes

/etc/init.d/ssh restart
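If you prefer not to edit `sshd_config` by hand in vim on three machines, the two options can be set from a script. This is only a sketch: `set_sshd_option` is a hypothetical helper, and it assumes each option appears on its own line, either active or commented out with a leading `#`.

```shell
# set_sshd_option KEY VALUE [FILE]: set KEY to VALUE in an sshd_config-style file.
# Hypothetical helper; FILE defaults to /etc/ssh/sshd_config.
set_sshd_option() {
    key="$1"; value="$2"; file="${3:-/etc/ssh/sshd_config}"
    tmp="$(mktemp)"
    if grep -Eq "^[[:space:]]*#?[[:space:]]*${key}[[:space:]]" "$file"; then
        # Replace the existing line (commented or not) with the new setting.
        sed -E "s|^[[:space:]]*#?[[:space:]]*${key}[[:space:]].*|${key} ${value}|" "$file" > "$tmp"
        cat "$tmp" > "$file"
    else
        # The option is not present at all: append it.
        printf '%s %s\n' "$key" "$value" >> "$file"
    fi
    rm -f "$tmp"
}

# Usage (as root), followed by the ssh restart shown above:
#   set_sshd_option PermitRootLogin yes
#   set_sshd_option StrictModes yes
```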


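4. Software repository: building a local source with dpkg-scanpackages and nginx

Note that Ubuntu and CentOS manage packages differently: the former uses apt (apt-get), the latter uses yum.

Disable swap

First, disable swap on all machines.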

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

free -m
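The `sed` command above comments out every line that contains the word `swap`, including lines that are already comments. As a sketch of a narrower alternative, the hypothetical helper `disable_swap_entries` below only touches active entries whose filesystem type (third field) is `swap`, so it can be run repeatedly without stacking `#` characters.

```shell
# disable_swap_entries [FILE]: comment out active swap entries in an fstab-style file.
# Hypothetical helper; FILE defaults to /etc/fstab.
disable_swap_entries() {
    file="${1:-/etc/fstab}"
    tmp="$(mktemp)"
    # Skip lines that are already comments; prefix "#" where field 3 is "swap".
    awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$file" > "$tmp"
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Usage (as root, on all machines):
#   swapoff -a && disable_swap_entries
```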

Build the local source on the repository node

Note: run all of the following commands on the repository node only!

Install dpkg-dev and nginx.

apt-get install -y dpkg-dev nginx

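Create the directories.

mkdir -p /var/debs/ubuntu/software/ # where the deb packages are stored

mkdir -p /var/debs/ubuntu/dists/jammy/main/binary-i386/ # jammy is the release codename of Ubuntu 22.04

mkdir -p /var/debs/ubuntu/dists/jammy/main/binary-amd64/ # binary-amd64 holds the index for 64-bit (amd64) systems

Before the k8s packages can be downloaded, add the Kubernetes apt signing key and write the package source entry.

# Add the Kubernetes apt signing key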

apt-get install -y apt-transport-https

apt install curl

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

# Write the package source entry

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

apt-get update

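Download the packages.

# Download the packages on the repository node

apt clean

# Install aptitude first if it is not already present

apt-get install -y aptitude

aptitude install -y docker.io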

aptitude --download-only -y install kubeadm kubelet kubectl

# Packages are downloaded to /var/cache/apt/archives/ by default; move the deb files into the repository directory created above

mv /var/cache/apt/archives/*.deb /var/debs/ubuntu/software

ll /var/debs/ubuntu/software/

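Change into the newly created /var/debs/ubuntu/ directory and use dpkg-scanpackages to generate Packages.gz.

# Generate the package index (including dependency information)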

cd /var/debs/ubuntu/

dpkg-scanpackages software/ /dev/null | gzip > dists/jammy/main/binary-i386/Packages.gz

dpkg-scanpackages software/ /dev/null | gzip > dists/jammy/main/binary-amd64/Packages.gz

cd ~
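Before pointing the nodes at the repository, it can help to confirm that the generated index actually lists the packages. A small sketch, where `count_indexed_packages` is a hypothetical helper that counts the `Package:` stanzas in a `Packages.gz` file:

```shell
# count_indexed_packages FILE: print the number of packages listed in a Packages.gz index.
# Hypothetical helper, not part of dpkg-dev.
count_indexed_packages() {
    gzip -dc "$1" | grep -c '^Package: '
}

# Usage on the repository node; the two numbers should normally match:
#   count_indexed_packages /var/debs/ubuntu/dists/jammy/main/binary-amd64/Packages.gz
#   ls /var/debs/ubuntu/software/*.deb | wc -l
```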

Create a default.conf file under /etc/nginx/conf.d/ with the following nginx configuration.

# Configure nginx

cd ~

vim /etc/nginx/conf.d/default.conf

server {

listen 8088;

location / {

autoindex on;

root /var/debs;

}

}

Restart the nginx service.

systemctl restart nginx

Configure the apt source on the k8s nodes (master + node)

Add the repository node as an apt source in /etc/apt/sources.list.

# Configure the apt source on master & node

vim /etc/apt/sources.list

deb [trusted=yes] http://192.168.253.176:8088/ubuntu/ jammy main

apt-get update
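As an alternative to editing `/etc/apt/sources.list` in vim, the same entry can be generated and written to a separate file under `/etc/apt/sources.list.d/`. This is a sketch only: `local_repo_line` and the file name `local-repo.list` are assumptions for illustration, while the port and suite defaults are taken from the nginx configuration and directory layout above.

```shell
# local_repo_line HOST [PORT] [SUITE]: print the apt source line for the local repository.
# Hypothetical helper; PORT defaults to 8088 and SUITE to jammy, as used in this post.
local_repo_line() {
    host="$1"; port="${2:-8088}"; suite="${3:-jammy}"
    printf 'deb [trusted=yes] http://%s:%s/ubuntu/ %s main\n' "$host" "$port" "$suite"
}

# Usage (as root, on master and node):
#   local_repo_line 192.168.253.176 > /etc/apt/sources.list.d/local-repo.list
#   apt-get update
```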

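5. Image registry: building an image registry with the Registry image

Setting up the image registry on Ubuntu follows the same process as on CentOS in the previous post.

Install docker on all machines (run on the repository node and the k8s nodes)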

apt install docker.io

After installing docker, start it (run on the repository node and the k8s nodes)

systemctl start docker

systemctl enable docker

systemctl status docker

Create the image registry from the registry image (run on the repository node)

docker pull registry

docker save -o registry.tar registry

docker load -i registry.tar

docker run -d -p 5000:5000 --restart=always --name registry registry

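Edit the docker configuration to set the cgroup driver and allow the insecure local registry (run on the repository node and the k8s nodes)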

vim /etc/docker/daemon.json

{

"registry-mirrors":["https://registry.docker-cn.com"],

"insecure-registries":["192.168.253.176:5000"],

"exec-opts":["native.cgroupdriver=systemd"]

}

Restart docker (run on the repository node and the k8s nodes)

systemctl daemon-reload

systemctl restart docker

docker info | grep Cgroup

Pull the images (run on the repository node)

docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.27.1

docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.27.1

docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.27.1

docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.27.1

docker pull registry.aliyuncs.com/google_containers/pause:3.9

docker pull registry.aliyuncs.com/google_containers/etcd:3.5.7-0

docker pull registry.aliyuncs.com/google_containers/coredns:v1.10.1

Re-tag the images (run on the repository node)

docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.27.1 192.168.253.176:5000/kube-apiserver:v1.27.1

docker tag registry.aliyuncs.com/google_containers/kube-controller-manager:v1.27.1 192.168.253.176:5000/kube-controller-manager:v1.27.1

docker tag registry.aliyuncs.com/google_containers/kube-scheduler:v1.27.1 192.168.253.176:5000/kube-scheduler:v1.27.1

docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.27.1 192.168.253.176:5000/kube-proxy:v1.27.1

docker tag registry.aliyuncs.com/google_containers/pause:3.9 192.168.253.176:5000/pause:3.9

docker tag registry.aliyuncs.com/google_containers/etcd:3.5.7-0 192.168.253.176:5000/etcd:3.5.7-0

docker tag registry.aliyuncs.com/google_containers/coredns:v1.10.1 192.168.253.176:5000/coredns:v1.10.1

Push the images (run on the repository node)

docker push 192.168.253.176:5000/kube-apiserver:v1.27.1

docker push 192.168.253.176:5000/kube-controller-manager:v1.27.1

docker push 192.168.253.176:5000/kube-scheduler:v1.27.1

docker push 192.168.253.176:5000/kube-proxy:v1.27.1

docker push 192.168.253.176:5000/pause:3.9

docker push 192.168.253.176:5000/etcd:3.5.7-0

docker push 192.168.253.176:5000/coredns:v1.10.1
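The pull, tag, and push steps above repeat the same three commands for seven images, which makes typos easy. A minimal sketch of the same sequence as a loop follows. `mirror_images` and the `DRY_RUN` switch are hypothetical names introduced here; the image list and registry addresses are copied from the commands above.

```shell
SRC=registry.aliyuncs.com/google_containers   # upstream mirror used in this post
DST=192.168.253.176:5000                      # local registry on the repo node

# Image names and tags as used in the commands above.
IMAGES="kube-apiserver:v1.27.1
kube-controller-manager:v1.27.1
kube-scheduler:v1.27.1
kube-proxy:v1.27.1
pause:3.9
etcd:3.5.7-0
coredns:v1.10.1"

# mirror_images: pull each image, re-tag it for the local registry, and push it.
# With DRY_RUN=1 the commands are only printed, not executed.
mirror_images() {
    for img in $IMAGES; do
        for cmd in "docker pull $SRC/$img" \
                   "docker tag $SRC/$img $DST/$img" \
                   "docker push $DST/$img"; do
            if [ "${DRY_RUN:-0}" = "1" ]; then
                echo "$cmd"
            else
                $cmd || return 1   # stop at the first failing command
            fi
        done
    done
}

# Usage on the repository node:
#   DRY_RUN=1 mirror_images   # preview the 21 commands
#   mirror_images             # run them
```

The dry-run mode is there so the generated commands can be compared with the manual ones before anything is pulled or pushed.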

Pull the images (run on the k8s nodes)

docker pull 192.168.253.176:5000/kube-apiserver:v1.27.1

docker pull 192.168.253.176:5000/kube-controller-manager:v1.27.1

docker pull 192.168.253.176:5000/kube-scheduler:v1.27.1

docker pull 192.168.253.176:5000/kube-proxy:v1.27.1

docker pull 192.168.253.176:5000/pause:3.9

docker pull 192.168.253.176:5000/etcd:3.5.7-0

docker pull 192.168.253.176:5000/coredns:v1.10.1

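6. Deploying the k8s cluster offline on Ubuntu

Note: the following steps do not involve the repository node.

Modify the configuration files

(Run on master + node)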

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

Install the required packages

Install and start kubectl, kubeadm, and kubelet (run on master + node)

apt-get install -y kubeadm kubelet kubectl

systemctl start kubelet

systemctl enable kubelet

systemctl status kubelet

# kubelet may need several restarts before it comes up (it typically keeps restarting until kubeadm init/join has run)

systemctl restart kubelet

Configure the master node

List the images that k8s requires.

kubeadm config images list

The images pulled from the local registry must be re-tagged so that their names match the ones in the list printed above.

docker tag 192.168.253.176:5000/kube-apiserver:v1.27.1 registry.k8s.io/kube-apiserver:v1.27.1

docker tag 192.168.253.176:5000/kube-controller-manager:v1.27.1 registry.k8s.io/kube-controller-manager:v1.27.1

docker tag 192.168.253.176:5000/kube-scheduler:v1.27.1 registry.k8s.io/kube-scheduler:v1.27.1

docker tag 192.168.253.176:5000/kube-proxy:v1.27.1 registry.k8s.io/kube-proxy:v1.27.1

docker tag 192.168.253.176:5000/pause:3.9 registry.k8s.io/pause:3.9

docker tag 192.168.253.176:5000/etcd:3.5.7-0 registry.k8s.io/etcd:3.5.7-0

docker tag 192.168.253.176:5000/coredns:v1.10.1 registry.k8s.io/coredns/coredns:v1.10.1
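The target names must match the output of `kubeadm config images list` exactly. With the default registry, kubeadm v1.27 lists CoreDNS under a nested path (`registry.k8s.io/coredns/coredns`), while the other images sit directly under `registry.k8s.io/`. The sketch below encodes that mapping; `upstream_name` and `retag_all` are hypothetical helpers, and the mapping should be checked against the list printed on your own master.

```shell
LOCAL=192.168.253.176:5000   # local registry on the repo node

# upstream_name NAME:TAG: print the name kubeadm expects for a locally stored image.
# Assumption: only coredns uses a nested path; verify with `kubeadm config images list`.
upstream_name() {
    case "$1" in
        coredns:*) echo "registry.k8s.io/coredns/$1" ;;
        *)         echo "registry.k8s.io/$1" ;;
    esac
}

# retag_all NAME:TAG...: re-tag every given local image to its expected name.
retag_all() {
    for img in "$@"; do
        docker tag "$LOCAL/$img" "$(upstream_name "$img")" || return 1
    done
}

# Usage on the master:
#   retag_all kube-apiserver:v1.27.1 kube-controller-manager:v1.27.1 \
#             kube-scheduler:v1.27.1 kube-proxy:v1.27.1 \
#             pause:3.9 etcd:3.5.7-0 coredns:v1.10.1
```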

Run kubeadm init to set up the master node.

# Initialize the master node

kubeadm init --apiserver-advertise-address=192.168.253.177 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16

Configure the kubectl tool.

# kubectl needs the admin kubeconfig before it can access the master

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check the status of the k8s master node.

kubectl get nodes

Install and deploy the CNI network plugin.

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl get pods -n kube-system

Configure the worker node

Join the node to the cluster.

# Run on node1

kubeadm join 192.168.253.177:6443 --token yiva0e.hr2huwgjhj7h4gba \

--discovery-token-ca-cert-hash sha256:8052d2489725f3165d9a470dd792fb696c43d4d7e2986e3f469fd887e9ca190b

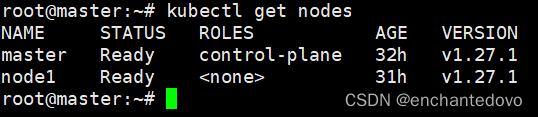
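The token and CA hash in the join command are specific to the cluster created above, so copy them from the output of your own `kubeadm init` run; on the master, `kubeadm token create --print-join-command` prints a fresh join command if the original token has expired. As a sketch, the hypothetical helper `check_join_args` catches copy-and-paste damage by checking both values against the formats kubeadm uses before `kubeadm join` is run.

```shell
# check_join_args TOKEN CA_HASH: verify the shape of the two join parameters.
# Hypothetical helper; it checks the format only, not whether the values are valid
# for a particular cluster.
check_join_args() {
    token="$1"; ca_hash="$2"
    # Bootstrap tokens look like "abcdef.0123456789abcdef".
    if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
        echo "token has an unexpected format" >&2
        return 1
    fi
    # The CA cert hash is "sha256:" followed by 64 hex digits.
    if ! printf '%s\n' "$ca_hash" | grep -Eq '^sha256:[0-9a-f]{64}$'; then
        echo "CA cert hash has an unexpected format" >&2
        return 1
    fi
}

# Usage on the node:
#   check_join_args "$TOKEN" "$CA_HASH" && \
#     kubeadm join 192.168.253.177:6443 --token "$TOKEN" \
#       --discovery-token-ca-cert-hash "$CA_HASH"
```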

Verify the deployment

Restart the kubelet service on the master and the node.

systemctl restart kubelet

Check the status of the k8s cluster on the master node.

kubectl get nodes
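Nodes usually report `NotReady` for a while after joining, until the network plugin pods are running. A small sketch for scripting the check: `all_nodes_ready` is a hypothetical helper that reads the output of `kubectl get nodes --no-headers` on standard input and succeeds only if every node's STATUS column is exactly `Ready`.

```shell
# all_nodes_ready: read `kubectl get nodes --no-headers` output from stdin.
# Returns 0 only if at least one node is listed and every STATUS is "Ready".
# Note: a cordoned node ("Ready,SchedulingDisabled") counts as not ready here.
all_nodes_ready() {
    awk '$2 != "Ready" { bad = 1 } END { if (NR == 0 || bad) exit 1 }'
}

# Usage on the master:
#   until kubectl get nodes --no-headers | all_nodes_ready; do sleep 5; done
#   echo "all nodes are Ready"
```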

If the nodes are listed as Ready, the deployment has succeeded 🎉!
