Kubernetes Deployment

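Introduction to k8s

Kubernetes (k8s) is an open-source system for automating the deployment, scaling, and management of containerized applications.

It is cluster-management software for containers across multiple hosts: it orchestrates the containers running on many machines.

k8s began as a Google project and was later donated to the CNCF (Cloud Native Computing Foundation).

Prometheus was the second project to join the CNCF.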

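k8s installation methods

1. kubeadm

2. Binary installation

Download the binaries for every component and install them on each host.

3. Third-party platform tools

Rancher is a complete software stack for teams adopting containers. It addresses the operational and security challenges of managing multiple Kubernetes clusters and gives DevOps teams integrated tools for running containerized workloads.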

Environment preparation

Three freshly installed CentOS 7.9 systems, each with 2 CPU cores, 2 GB of memory, and a 200 GB disk.

k8s cluster roles and IPs

| Role | Hostname | IP address |
| --- | --- | --- |
| Control-plane node | k8smaster | 192.168.102.136 |
| Worker node | k8snode1 | 192.168.102.137 |
| Worker node | k8snode2 | 192.168.102.138 |
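
Later steps reuse this name-to-address mapping, for example when /etc/hosts is edited in step 4. The following is a minimal sketch of adding those entries idempotently. `add_host_entry` and `populate_cluster_hosts` are hypothetical helper names, not part of any tool, and the target file is passed as an argument so the sketch can be tried on a scratch file first.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless NAME is already present as a whole word.
add_host_entry() {
    file=$1
    ip=$2
    name=$3
    # -w keeps "k8snode1" from matching a longer name such as "k8snode10".
    if grep -qw -- "$name" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# populate_cluster_hosts FILE
# Inventory copied from the role table; FILE would be /etc/hosts on a real node.
populate_cluster_hosts() {
    add_host_entry "$1" 192.168.102.136 k8smaster
    add_host_entry "$1" 192.168.102.137 k8snode1
    add_host_entry "$1" 192.168.102.138 k8snode2
}
```

Running `populate_cluster_hosts /etc/hosts` twice leaves the file unchanged the second time, which makes it safe to rerun on all three hosts.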

Installing a single-master k8s environment

Perform the following steps on all 3 hosts

Step 1: Configure a static IP

vim /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE="Ethernet"

PROXY_METHOD="none"

BROWSER_ONLY="no"

BOOTPROTO="static"

DEFROUTE="yes"

NAME="ens33"

DEVICE="ens33"

ONBOOT="yes"

IPADDR="192.168.102.136"

PREFIX=24

GATEWAY="192.168.102.2"

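DNS1=114.114.114.114

IPADDR differs per host (see the role table above). After editing the file, restart the network service for the change to take effect:

service network restart

Step 2: Set the hostname on each host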

hostnamectl set-hostname k8smaster # run on the control-plane node

hostnamectl set-hostname k8snode1 # run on the first worker node

hostnamectl set-hostname k8snode2 # run on the second worker node

su - root

[root@k8smaster ~]#

[root@k8snode1 ~]#

[root@k8snode2 ~]#

Step 3: Disable SELinux and the firewall

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

A change to the SELinux configuration file only takes effect permanently after a reboot.

Run: reboot
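
The sed expression above only matches a file that currently says `SELINUX=enforcing`; a host already set to `permissive` would be left as it is. As a sketch under that observation, the variant below rewrites the `SELINUX=` line whatever its current value. `disable_selinux_in_config` is a hypothetical helper name, and the file path is a parameter so it can be tried on a copy before touching /etc/selinux/config.

```shell
#!/bin/sh
# disable_selinux_in_config FILE
# Set the SELINUX= line to "disabled" regardless of its current value.
# The "=" right after SELINUX keeps the SELINUXTYPE= line untouched.
disable_selinux_in_config() {
    file=$1
    tmp=$(mktemp) || return 1
    status=0
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$file" > "$tmp" \
        && cat "$tmp" > "$file" || status=1
    rm -f "$tmp"
    return $status
}
```

Until the reboot happens, `setenforce 0` switches a running system to permissive mode, which is usually enough to continue with the remaining steps.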

[root@k8smaster ~]# service firewalld stop

Redirecting to /bin/systemctl stop firewalld.service

[root@k8smaster ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@k8smaster ~]# reboot

[root@k8smaster ~]# getenforce

Disabled

Step 4: Edit /etc/hosts to map the cluster's hostnames to their IP addresses

[root@k8smaster ~]# vim /etc/hosts

[root@k8smaster ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.102.136 k8smaster

192.168.102.137 k8snode1

192.168.102.138 k8snode2

Step 5: Set up passwordless SSH

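ssh-keygen # press Enter at every prompt; leave the passphrase empty

ssh-copy-id k8smaster # answer yes to the host-key prompt

ssh-copy-id k8snode1 # answer yes

ssh-copy-id k8snode2 # answer yes

Step 6: Disable swap to improve performance (by default the kubelet also refuses to start while swap is enabled)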

Temporarily:

swapoff -a

Permanently: comment out the swap entry in /etc/fstab by adding # at the start of that line
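
Editing the file by hand works; for three hosts it can also be scripted. The sketch below comments out every active entry whose filesystem type (third field) is `swap`. `comment_swap_entries` is a hypothetical helper name, and the file is passed as an argument so the change can be tested on a copy of /etc/fstab first.

```shell
#!/bin/sh
# comment_swap_entries FILE
# Prefix each uncommented line whose third field is "swap" with '#'.
# Lines that are already commented are left alone, so reruns are harmless.
comment_swap_entries() {
    file=$1
    tmp=$(mktemp) || return 1
    status=0
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$file" > "$tmp" && cat "$tmp" > "$file" || status=1
    rm -f "$tmp"
    return $status
}
```

After `comment_swap_entries /etc/fstab`, the file should look like the listing that follows, with only the swap line commented.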

[root@k8smaster ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Thu Mar 23 15:22:20 2023

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=00236222-82bd-4c15-9c97-e55643144ff3 /boot xfs defaults 0 0

/dev/mapper/centos-home /home xfs defaults 0 0

#/dev/mapper/centos-swap swap swap defaults 0 0

Step 7: Load the required kernel module and set kernel parameters

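modprobe br_netfilter # load the module now; the net.bridge.* keys below exist only once it is loaded

echo "modprobe br_netfilter" >> /etc/profile # load it again in every login shell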

cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

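sysctl -p /etc/sysctl.d/k8s.conf

Step 8: Install base packages and configure the Aliyun repo source

yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet

Note: docker-ce (step 11) is not in the stock CentOS repositories, so a Docker CE repo must be added first. Assuming the Aliyun mirror is used, as for the other repos here:

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Step 9: Configure the Aliyun repo source needed to install the k8s components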

[root@k8smaster ~]# vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

Step 10: Configure time synchronization

The ntpdate command synchronizes the clock with a time server on the internet.

yum install ntpdate -y

ntpdate cn.pool.ntp.org

[root@k8smaster ~]# crontab -e

0 */1 * * * /usr/sbin/ntpdate cn.pool.ntp.org
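
In a crontab entry the first field is the minute: a `*` there fires every minute, while `0` fires once at the top of each hour. As a sketch of adding an hourly sync without opening an editor, the filter below copies a crontab from stdin to stdout and appends the wanted line only if it is not already there. `ensure_cron_line` is a hypothetical helper name.

```shell
#!/bin/sh
# ensure_cron_line LINE
# Copy stdin to stdout; append LINE at the end if no identical line was seen.
ensure_cron_line() {
    line=$1
    awk -v want="$line" '
        { print }
        $0 == want { found = 1 }
        END { if (!found) print want }
    '
}

# Possible use on a real host (not executed here):
#   crontab -l 2>/dev/null \
#     | ensure_cron_line '0 * * * * /usr/sbin/ntpdate cn.pool.ntp.org' \
#     | crontab -
```

Because the filter is idempotent, the same command can be run on all three hosts more than once without duplicating the entry.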

# restart the crond service

[root@k8smaster ~]# service crond restart

Step 11: Install Docker

yum install docker-ce-20.10.6 -y

systemctl start docker && systemctl enable docker.service

Step 12: Configure Docker registry mirrors and the cgroup driver

vim /etc/docker/daemon.json

{

"registry-mirrors":["https://rsbud4vc.mirror.aliyuncs.com","https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hub-mirror.c.163.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}
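
The `exec-opts` entry matters because the kubelet configuration later in this guide also uses the systemd cgroup driver, and the two have to agree. A minimal sketch of checking the file before restarting Docker follows; `uses_systemd_cgroup` is a hypothetical helper name, and the check is a plain text match, not a JSON parser.

```shell
#!/bin/sh
# uses_systemd_cgroup FILE
# Succeed (exit 0) if FILE contains the systemd cgroup-driver option.
uses_systemd_cgroup() {
    grep -q '"native.cgroupdriver=systemd"' "$1"
}

# Possible use on a real host (not executed here):
#   uses_systemd_cgroup /etc/docker/daemon.json || echo "cgroup driver not set to systemd"
```

Once Docker has been restarted, `docker info --format '{{.CgroupDriver}}'` reports the driver that is actually in effect.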

# restart Docker

systemctl daemon-reload && systemctl restart docker

Step 13: Install the packages needed to initialize k8s

yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6

systemctl enable kubelet

Step 14: Initialize the k8s cluster with kubeadm

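Upload the offline image bundle needed for cluster initialization to k8smaster, k8snode1, and k8snode2, then load it manually on each host:

docker load -i k8simage-1-20-6.tar.gz

Run the following on k8smaster

Initialize the k8s cluster with kubeadm

[root@k8smaster ~]# kubeadm config print init-defaults > kubeadm.yaml

Edit the kubeadm.yaml configuration file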

[root@k8smaster ~]# cat kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 192.168.102.136

  bindPort: 6443

nodeRegistration:

  criSocket: /var/run/dockershim.sock

  name: k8smaster

  taints:

  - effect: NoSchedule

    key: node-role.kubernetes.io/master

---

apiServer:

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers # changed: use the Aliyun image registry

kind: ClusterConfiguration

kubernetesVersion: v1.20.0

networking:

  dnsDomain: cluster.local

  serviceSubnet: 10.96.0.0/12

  podSubnet: 10.244.0.0/16 # added: the Pod network CIDR

scheduler: {}

# append the following lines

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

cgroupDriver: systemd

Initialize k8s from the kubeadm.yaml file

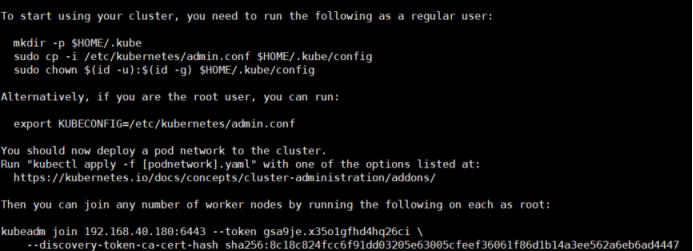
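
Before running the init command, it may be worth confirming that the edits made to the generated defaults are really in kubeadm.yaml. The sketch below greps for the four settings this guide changes or adds. `check_kubeadm_config` is a hypothetical helper name, and the check is a literal text match, not a YAML validation.

```shell
#!/bin/sh
# check_kubeadm_config FILE
# Print "missing: ..." for each expected setting that is absent from FILE.
# Returns 0 when all settings are present, 1 otherwise.
check_kubeadm_config() {
    file=$1
    missing=0
    for pattern in \
        'podSubnet: 10.244.0.0/16' \
        'imageRepository: registry.aliyuncs.com/google_containers' \
        'mode: ipvs' \
        'cgroupDriver: systemd'
    do
        # -F treats the pattern as a fixed string, so dots and slashes are literal.
        if ! grep -qF -- "$pattern" "$file"; then
            echo "missing: $pattern"
            missing=1
        fi
    done
    return $missing
}
```

A call such as `check_kubeadm_config kubeadm.yaml && echo ok` prints `ok` only when nothing was reported as missing.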

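[root@k8smaster ~]# kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification

When the output ends with the following instructions, initialization has succeeded; run them to set up kubectl access: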

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# list the nodes

[root@k8smaster ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster NotReady control-plane,master 52s v1.20.6

Scaling out the k8s cluster: adding the worker nodes

On k8smaster, print the join command:

[root@k8smaster ~]# kubeadm token create --print-join-command

kubeadm join 192.168.102.136:6443 --token plwkyt.3fiqdky2m9bftqmr --discovery-token-ca-cert-hash sha256:d6c9b3290f5bda7bc9b43d6591082e2a90136081596826f69c88ff146d3b234f
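
The value after `--discovery-token-ca-cert-hash` is the SHA-256 digest of the cluster CA's public key. If the join command was copied by hand, the hash can be recomputed on k8smaster and compared. The pipeline below follows the one given in the kubeadm documentation; `ca_cert_hash` is a hypothetical wrapper name, and the sketch assumes an RSA CA key (the kubeadm default) and that openssl is installed.

```shell
#!/bin/sh
# ca_cert_hash CERT
# Print the hex SHA-256 digest of the DER-encoded public key in CERT.
ca_cert_hash() {
    openssl x509 -pubkey -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Possible use on the control-plane node (not executed here):
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```

The printed value should equal the hash in the join command; a mismatch means the command belongs to a different cluster or was altered in transit.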

[root@k8snode1 ~]# kubeadm join 192.168.102.136:6443 --token plwkyt.3fiqdky2m9bftqmr --discovery-token-ca-cert-hash sha256:d6c9b3290f5bda7bc9b43d6591082e2a90136081596826f69c88ff146d3b234f --ignore-preflight-errors=SystemVerification

[root@k8snode2 ~]# kubeadm join 192.168.102.136:6443 --token plwkyt.3fiqdky2m9bftqmr --discovery-token-ca-cert-hash sha256:d6c9b3290f5bda7bc9b43d6591082e2a90136081596826f69c88ff146d3b234f --ignore-preflight-errors=SystemVerification

On k8smaster, check the state of the cluster nodes:

[root@k8smaster ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster NotReady control-plane,master 52s v1.20.6

k8snode1 NotReady <none> 24s v1.20.6

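k8snode2 NotReady <none> 20s v1.20.6

The ROLES column for k8snode1 and k8snode2 is empty: <none> means no role label is set, which is the case for worker nodes.

To make ROLES show worker for k8snode1 and k8snode2, label them as follows: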

[root@k8smaster ~]# kubectl label node k8snode1 node-role.kubernetes.io/worker=worker

node/k8snode1 labeled

[root@k8smaster ~]# kubectl label node k8snode2 node-role.kubernetes.io/worker=worker

node/k8snode2 labeled

[root@k8smaster ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster NotReady control-plane,master 2m43s v1.20.6

k8snode1 NotReady worker 2m15s v1.20.6

k8snode2 NotReady worker 2m11s v1.20.6

Note: every node is still NotReady because no network plugin has been installed yet.

Installing the Kubernetes network plugin: Calico

wget https://docs.projectcalico.org/v3.23/manifests/calico.yaml --no-check-certificate

This downloads calico.yaml; apply it to install the Calico network plugin.

kubectl apply -f calico.yaml

Check the cluster state again

[root@k8smaster ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8smaster Ready control-plane,master 46h v1.20.6

k8snode1 Ready worker 46h v1.20.6

k8snode2 Ready worker 46h v1.20.6

STATUS is Ready on every node, so the k8s cluster is running normally.
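
Reading the table by eye is fine for three nodes. As a sketch for scripted checks, the filter below reads `kubectl get nodes` output and prints the name of every node whose STATUS column is not exactly `Ready`. `not_ready_nodes` is a hypothetical helper name; a node shown as `Ready,SchedulingDisabled` would also be listed, which may or may not be what is wanted.

```shell
#!/bin/sh
# not_ready_nodes
# Read `kubectl get nodes` output on stdin, skip the header row,
# and print the NAME of each node whose STATUS is not exactly "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Possible use on the control-plane node (not executed here):
#   kubectl get nodes | not_ready_nodes
```

Empty output means every node reported `Ready`, so the command can serve as a simple gate in a deployment script.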
