Distributed Deployment of the gpmall Web Application on a Kubernetes Cluster

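[Note]

gpmall is an open-source e-commerce platform built on SpringBoot and Dubbo. Mr. Xu fixed some of the project's bugs that surfaced when deploying to a k8s cluster and republished the project on gitee, so downloading it from his gitee repository is recommended. More about gpmall and the source download link: gpmall

The deployment procedure below draws on Mr. Xu's Youdao Cloud notes, with optimizations and added detail.

Because the internal private cloud restricts access to external networks, every image used in this deployment comes from an internally hosted Harbor registry. This document records in detail the deployment on a kubernetes cluster running in an internal Huawei private cloud.

For the manual deployment of a high-performance kubernetes cluster, see: High-performance kubernetes cluster deployment

For the automated deployment of a high-performance kubernetes cluster, see: Automated k8s deployment with ansible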

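1 Building the Project

1.1 Preparing the Build Environment

gpmall separates the front end from the back end. The front end requires a Node environment; for installation, see: Node installation.

The back-end code requires IDEA and Maven; for setup, see: IDEA installation, Maven installation.

1.2 Compiling the Modules

gpmall is microservice-based: each module must be compiled separately, and the order matters. Compile the modules in the following order: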

- gpmall-parent

- gpmall-commons

- user-service

- shopping-service

- order-service

- pay-service

- market-service

- comment-service

- search-service

这边以第一个模块为例说明如何利用IDEA进行模块编译,其他模块编译流程一样。

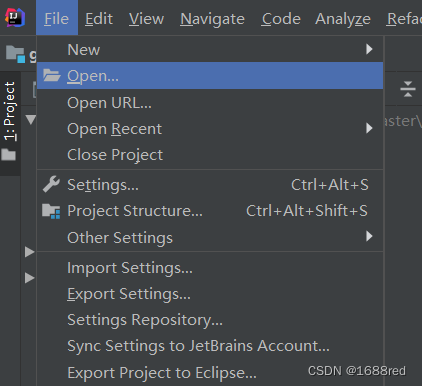

1、打开模块

2、选择要编译的模块

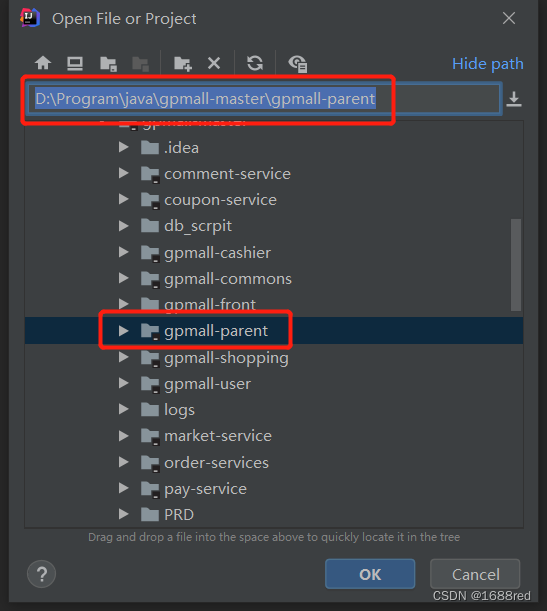

3、打开后双击右侧Maven下Lifecycle的install进行编译

如果有的模块里面有root项目,只需编译root那个项目即可。

4、编译完成后在仓库目录会有jar包,后续部署就可以用这个jar包了,jar包具体生成目录可以查看install的日志信息

按上述的编译顺序依次编译各个模块,最终得到以下jar包用于后续部署:

(1)user-provider-0.0.1-SNAPSHOT.jar

(2)gpmall-user-0.0.1-SNAPSHOT.jar

(3)shopping-provider-0.0.1-SNAPSHOT.jar

(4)order-provider-0.0.1-SNAPSHOT.jar

(5)comment-provider-0.0.1-SNAPSHOT.jar

(6)search-provider-1.0-SNAPSHOT.jar

(7)gpmall-shopping-0.0.1-SNAPSHOT.jar

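These seven jars must be uploaded to a Linux host so that Docker images can be built from them; the ansible controller node is used here.

Transfer the seven jars to the ansible controller host with a tool such as xftp or sftp and store each in its own directory, as listed below.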

[zhangsan@controller ~]$ ls -ld /data/zhangsan/gpmall/gpmall-jar/*

drwxr-xr-x 2 root root 4096 3月 24 17:48 /data/zhangsan/gpmall/gpmall-jar/comment-provider

drwxr-xr-x 2 root root 4096 3月 24 17:48 /data/zhangsan/gpmall/gpmall-jar/gpmall-shopping

drwxr-xr-x 2 root root 4096 3月 24 17:48 /data/zhangsan/gpmall/gpmall-jar/gpmall-user

drwxr-xr-x 2 root root 4096 3月 24 17:48 /data/zhangsan/gpmall/gpmall-jar/order-provider

drwxr-xr-x 2 root root 4096 3月 24 17:49 /data/zhangsan/gpmall/gpmall-jar/search-provider

drwxr-xr-x 2 root root 4096 3月 24 17:49 /data/zhangsan/gpmall/gpmall-jar/shopping-provider

drwxr-xr-x 2 root root 4096 3月 24 17:49 /data/zhangsan/gpmall/gpmall-jar/user-provider

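1.3 Building Docker Images

Kubernetes removed dockershim in version 1.24 and no longer uses Docker Engine as a container runtime out of the box, but images built with docker still run in a k8s cluster. Docker images are therefore built here for each of the seven jars compiled above.

1.3.1 Environment Preparation

1. Install docker

Docker images can be built on any Linux host. An openEuler host is used here; the steps below are performed on the ansible controller node (controller).

openEuler does not install docker by default; install it with the following command.

# Install docker
[zhangsan@controller ~]$ sudo dnf -y install docker

2. Configure the docker service

By default docker pulls images from the official registry. The registry settings can be changed in the configuration file; here the private registry is added as an insecure registry, as shown below.

# Create and edit the /etc/docker/daemon.json configuration file
[zhangsan@controller ~]$ sudo vim /etc/docker/daemon.json
{
  "insecure-registries": ["192.168.18.18:9999"]
}

3. Restart the docker service

# Restart the docker service
[zhangsan@controller ~]$ sudo systemctl restart docker.service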

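1.3.2 Writing the Dockerfile

A Dockerfile must be created in each jar's directory. Taking the first jar (user-provider-0.0.1-SNAPSHOT.jar) as the example, create and edit a Dockerfile in its directory with the content below; for the other jars, adapt it as the comments indicate. Paths are written out in full because WORKDIR is a Dockerfile instruction rather than a variable, and the exec form of CMD does not expand variables.

[zhangsan@controller user-provider]$ sudo vim Dockerfile
FROM 192.168.18.18:9999/common/java:openjdk-8u111-alpine
# Remember to change zhangsan below to your own directory
WORKDIR /data/zhangsan/gpmall
# Change the jar name below to match the module
ADD user-provider-0.0.1-SNAPSHOT.jar /data/zhangsan/gpmall
ENTRYPOINT ["java","-jar"]
# Change the jar name below to match the module
CMD ["/data/zhangsan/gpmall/user-provider-0.0.1-SNAPSHOT.jar"]

1.3.3 Building the Image from the Dockerfile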

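To avoid editing image names in the yaml files during the k8s deployment, use the module name as the image name and latest as the tag throughout. The image name for each module is listed below:

| Module | Docker image name | Notes |
| --- | --- | --- |
| user-provider | user-provider:latest | |
| gpmall-user | gpmall-user:latest | |
| shopping-provider | shopping-provider:latest | |
| gpmall-shopping | gpmall-shopping:latest | |
| order-provider | order-provider:latest | |
| comment-provider | comment-provider:latest | |
| search-provider | search-provider:latest | |
| gpmall-front | gpmall-front:latest | Front end; its image is built separately later |

Run the following command to build the image:

### The docker build command has the following format (the trailing dot is required):
### docker build -t image-name:tag .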

[zhangsan@controller user-provider]$ sudo docker build -t user-provider:latest .

Sending build context to Docker daemon 62.39MB

Step 1/5 : FROM 192.168.18.18:9999/common/java:openjdk-8u111-alpine

openjdk-8u111-alpine: Pulling from common/java

53478ce18e19: Pull complete

d1c225ed7c34: Pull complete

887f300163b6: Pull complete

Digest: sha256:f0506aad95c0e03473c0d22aaede25402584ecdab818f0aeee8ddc317f7145ed

Status: Downloaded newer image for 192.168.18.18:9999/common/java:openjdk-8u111-alpine

---> 3fd9dd82815c

Step 2/5 : WORKDIR /data/zhangsan/gpmall

---> Running in bb5239c3d849

Removing intermediate container bb5239c3d849

---> e791422cdb40

Step 3/5 : ADD user-provider-0.0.1-SNAPSHOT.jar /data/zhangsan/gpmall

---> 61ece5f0c8fe

Step 4/5 : ENTRYPOINT ["java","-jar"]

---> Running in 8e1a6a0d6f30

Removing intermediate container 8e1a6a0d6f30

---> beac96264c93

Step 5/5 : CMD ["/data/zhangsan/gpmall/user-provider-0.0.1-SNAPSHOT.jar"]

---> Running in a5993541334a

Removing intermediate container a5993541334a

---> 502d57ed4303

Successfully built 502d57ed4303

Successfully tagged user-provider:latest

1.3.4 Viewing the Image

[zhangsan@controller user-provider]$ sudo docker images
REPOSITORY TAG IMAGE ID CREATED SIZE
user-provider latest 502d57ed4303 24 seconds ago 207MB
192.168.18.18:9999/common/java openjdk-8u111-alpine 3fd9dd82815c 6 years ago 145MB

Build the images for the other jars one by one in the same way.
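The remaining images can be built in a loop rather than by hand. The following is a minimal sketch, assuming every module directory under /data/zhangsan/gpmall/gpmall-jar already contains its jar and its own Dockerfile. The `run` helper and the `DRY_RUN` variable are illustrative names introduced here, not part of the original procedure; by default the script only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Sketch: build one image per backend module, named after the module.
# Assumption: each module directory holds its jar and a matching Dockerfile.
JAR_ROOT=/data/zhangsan/gpmall/gpmall-jar
MODULES="user-provider gpmall-user shopping-provider gpmall-shopping order-provider comment-provider search-provider"

# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Emit (or execute) one docker build per module.
build_all() {
  for m in $MODULES; do
    run sudo docker build -t "$m:latest" "$JAR_ROOT/$m"
  done
}

build_all
```

Setting DRY_RUN=0 before running the script executes the builds instead of printing them.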

1.4 创建前端镜像

1.4.1 依赖安装

前端代码位于gpmall-front文件夹下,因此需在该目录下执行命令【npm install】,如下图所示。

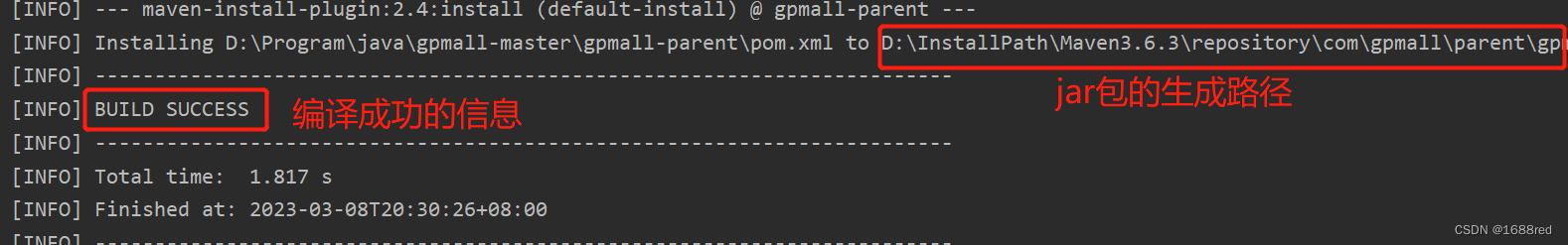

1.4.2 打包发布

执行【npm run build】命令打包,完成后会在目录下生成dist文件夹,如下图所示。

利用xftp或sftp等工具将该文件夹拷贝到前面构建Docker镜像的主机上,并配置好docker环境的机器上,如下所示。

[zhangsan@controller ~]$ ls /data/zhangsan/gpmall/frontend/

dist1.4.3 配置Web服务

gpmall项目采用前后端分离开发,前端需要独立部署,这里选择Nginx作为Web服务器,将以上生成的dist文件夹添加到nginx的镜像中并配置代理,所以首先需要准备nginx的配置文件nginx.conf,文件内容如下,将该文件保存到dist所在目录:

[zhangsan@controller ~]$ cd /data/zhangsan/gpmall/frontend/
[zhangsan@controller frontend]$ sudo vim nginx.conf
worker_processes auto;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    default_type application/octet-stream;
    sendfile on;
    #tcp_nopush on;
    keepalive_timeout 65;
    client_max_body_size 20m;

    server {
        listen 9999;
        server_name localhost;

        location / {
            root /usr/share/nginx/html;
            index index.html index.htm;
            try_files $uri $uri/ /index.html;
        }

        # Important: every request to /user must be forwarded to the matching service port inside the cluster, otherwise the front end cannot display data
        location /user {
            proxy_pass http://gpmall-user-svc:8082;
            proxy_redirect off;
            proxy_cookie_path / /user;
        }

        # Important: every request to /shopping must be forwarded to the matching service port inside the cluster, otherwise the front end cannot display data
        location /shopping {
            proxy_pass http://gpmall-shopping-svc:8081;
            proxy_redirect off;
            proxy_cookie_path / /shopping;
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }
}

1.4.4 Writing the Dockerfile

In the directory that contains dist, create the Dockerfile used to build the front-end image, with the following content:

[zhangsan@controller frontend]$ sudo vim Dockerfile
FROM 192.168.18.18:9999/common/nginx:latest
COPY dist/ /usr/share/nginx/html/
COPY nginx.conf /etc/nginx/nginx.conf

Finally, confirm that the dist directory, the nginx.conf file, and the Dockerfile are all in the same directory.

1.4.5 Building the Front-End Image

Build the front-end image from the Dockerfile.

[zhangsan@controller frontend]$ sudo docker build -t gpmall-front:latest .

Sending build context to Docker daemon 11.01MB

Step 1/3 : FROM 192.168.18.18:9999/common/nginx:latest

latest: Pulling from common/nginx

a2abf6c4d29d: Pull complete

a9edb18cadd1: Pull complete

589b7251471a: Pull complete

186b1aaa4aa6: Pull complete

b4df32aa5a72: Pull complete

a0bcbecc962e: Pull complete

Digest: sha256:ee89b00528ff4f02f2405e4ee221743ebc3f8e8dd0bfd5c4c20a2fa2aaa7ede3

Status: Downloaded newer image for 192.168.18.18:9999/common/nginx:latest

---> 605c77e624dd

Step 2/3 : COPY dist/ /usr/share/nginx/html/

---> 1b2bfaf186a0

Step 3/3 : COPY nginx.conf /etc/nginx/nginx.conf

---> a504c7bbf947

Successfully built a504c7bbf947

Successfully tagged gpmall-front:latest

1.4.6 Viewing the Front-End Image

# List images

[zhangsan@controller frontend]$ sudo docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

gpmall-front latest a504c7bbf947 2 minutes ago 152MB

192.168.18.18:9999/common/nginx latest 605c77e624dd 14 months ago 141MB

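1.5 Pushing Images to the Private Registry

To simplify later deployment, the images built above must be pushed to an image registry. Because of internal network restrictions, all images are pushed to the private registry here. For setting up the private registry, see: Setting up a Harbor registry

The Harbor private registry used in this deployment is at: http://192.168.18.18:9999/

After the private registry is set up, create a member account, for example admin.

Pushing a Docker image to a registry normally takes three operations: logging in, tagging the image, and pushing it.

1.5.1 Logging In to the Registry

Log in to the private registry with your account on it:

[zhangsan@controller ~]$ sudo docker login 192.168.18.18:9999
Username: admin
Password:

1.5.2 Tagging the Image

The syntax for tagging an image is:

docker tag source-image:source-tag registry-address/project-name/new-image-name:new-tag

For example, to tag the front-end image:

sudo docker tag gpmall-front:latest 192.168.18.18:9999/gpmall/gpmall-front:latest

1.5.3 Pushing the Image

The syntax for pushing an image is:

docker push registry-address/project-name/new-image-name:new-tag

For example, to push the front-end image to the gpmall project in the private registry: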

[zhangsan@controller frontend]$ sudo docker push 192.168.18.18:9999/gpmall/gpmall-front:latest

The push refers to repository [192.168.18.18:9999/gpmall/gpmall-front]

2e5e73a63813: Pushed

75176abf2ccb: Pushed

d874fd2bc83b: Mounted from common/nginx

32ce5f6a5106: Mounted from common/nginx

f1db227348d0: Mounted from common/nginx

b8d6e692a25e: Mounted from common/nginx

e379e8aedd4d: Mounted from common/nginx

2edcec3590a4: Mounted from common/nginx

latest: digest: sha256:fc5389e7c056d95c5d269932c191312034b1c34b7486325853edf5478bf8f1b8 size: 1988

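Push every image built earlier to the private registry in the same way so that it is available later; once pushed, the images are listed under the gpmall project in Harbor.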
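Tagging and pushing all eight images follows the same two commands each time. A minimal sketch, assuming the local images are named as in the table in 1.3.3 and that `docker login` has already succeeded; the `run` helper and `DRY_RUN` variable are illustrative names, and by default the commands are only printed.

```shell
#!/usr/bin/env bash
# Sketch: tag each local image for the private registry and push it.
REGISTRY=192.168.18.18:9999
PROJECT=gpmall
IMAGES="user-provider gpmall-user shopping-provider gpmall-shopping order-provider comment-provider search-provider gpmall-front"

# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Emit (or execute) a tag and a push for every image.
push_all() {
  for img in $IMAGES; do
    target="$REGISTRY/$PROJECT/$img:latest"
    run sudo docker tag "$img:latest" "$target"
    run sudo docker push "$target"
  done
}

push_all
```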

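1.5.4 Removing Local Images

Once every image has been pushed successfully, the local docker images can be removed. Note that the example commands below act on all containers and images on the host, not only the gpmall ones:

# Stop all running containers first
[zhangsan@controller ~]$ sudo docker stop $(sudo docker ps -a -q)

# Remove all containers
[zhangsan@controller ~]$ sudo docker rm $(sudo docker ps -a -q)

# Remove all images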

[zhangsan@controller ~]$ sudo docker rmi $(sudo docker images -a -q) --force

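2 Deploying the Runtime Environment

The following operations can be performed on any k8s host; k8s-master01 is used here.

2.1 Creating a Namespace

A namespace is not strictly required, but creating one separates this deployment from others and eases later management. Create a namespace named after your own name in pinyin, for example zhangsan:

sudo kubectl create namespace zhangsan

To omit the namespace option from later kubectl commands, switch the default namespace to your own:

sudo kubectl config set-context --current --namespace=zhangsan
# To switch back, replace zhangsan above with default

2.2 Configuring the NFS Service

The deployment relies on NFS shares, so the NFS service must be configured first.

For convenient file storage, it is recommended to create another partition on the current disk of k8s-master01, format it, and mount it permanently at /data.

1. Create the NFS shared directory

[zhangsan@k8s-master01 ~]$ sudo mkdir -p /data/zhangsan/gpmall/nfsdata

2. Configure the nfs service

openEuler normally ships with nfs-utils; the install command below either installs it or confirms that it is already present. Then edit the main nfs configuration file /etc/exports to grant any host (*) the rw, sync, and no_root_squash options.

[zhangsan@k8s-master01 ~]$ sudo dnf -y install nfs-utils
[zhangsan@k8s-master01 ~]$ sudo vim /etc/exports
/data/zhangsan/gpmall/nfsdata *(rw,sync,no_root_squash)

3. Restart the services and enable them at boot

[zhangsan@k8s-master01 ~]$ sudo systemctl restart rpcbind.service
[zhangsan@k8s-master01 ~]$ sudo systemctl restart nfs-server.service
[zhangsan@k8s-master01 ~]$ sudo systemctl enable nfs-server.service
[zhangsan@k8s-master01 ~]$ sudo systemctl enable rpcbind.service

4. Verify

On any internal Linux host, run showmount -e followed by the NFS server's IP address. If the NFS server's shared directory is listed, as below, the NFS service is configured correctly.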

[root@k8s-master03 ~]# showmount -e 192.168.218.100

Export list for 192.168.218.100:

/data/zhangsan/gpmall/nfsdata *

2.3 Deploying the Middleware

gpmall uses Elasticsearch, zookeeper, kafka, MySQL, Rabbitmq, and Redis as middleware, so these must be deployed in advance.

2.3.1 Deploying Elasticsearch

For easier file management, create a dedicated directory for yaml files; every yaml file used during the deployment is stored there.

# Create a dedicated directory for yaml files
[zhangsan@k8s-master01 ~]$ sudo mkdir -p /data/zhangsan/gpmall/yaml

# Switch to the yaml directory
[zhangsan@k8s-master01 ~]$ cd /data/zhangsan/gpmall/yaml

1. Create the es persistent volume (pv)

Elasticsearch needs to persist its data, so a persistent volume (pv) must be created in K8s. This requires a directory named es under the NFS share to back the pv, with its access permissions opened up.

[zhangsan@k8s-master01 yaml]$ sudo mkdir -p /data/zhangsan/gpmall/nfsdata/es

# Open up the permissions
[zhangsan@k8s-master01 yaml]$ sudo chmod 777 /data/zhangsan/gpmall/nfsdata/es

(1) Write the yaml file that creates the pv; remember to change name, path, and the server IP address.

# Write es-pv.yaml
[zhangsan@k8s-master01 yaml]$ sudo vim es-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /data/zhangsan/gpmall/nfsdata/es
    server: 192.168.218.100 # IP of the host that holds the directory above

(2) Create the es-pv object

# Create
[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f es-pv.yaml
persistentvolume/es-pv created

# View

[zhangsan@k8s-master01 yaml]$ sudo kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

es-pv 1Gi RWO Retain Bound zhangsan/es-pvc nfs 10h

2. Create the pvc

After es-pv is created, a pvc is also needed so that the pod can obtain storage from the specified pv.

(1) Write the yaml file that creates the pvc; remember to change the namespace.

[zhangsan@k8s-master01 yaml]$ sudo vim es-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: es-pvc
  namespace: zhangsan
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs

(2) Create es-pvc

# Create
[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f es-pvc.yaml
persistentvolumeclaim/es-pvc created

# View
[zhangsan@k8s-master01 yaml]$ sudo kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
es-pvc Bound es-pv 1Gi RWO nfs 10h

3. Create the es Service

(1) Write the yaml file that creates the es service; remember to change the namespace and nodePort.

By default, nodePort must fall in the range 30000-32767.

Here nodePort must be set to 3XY, where X and Y are each two digits: X is your class ID and Y is the last two digits of your student number. For example, 31888 below is the value for student 88 of class 18.
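The nodePort numbering used throughout this document (3XY here, with offsets such as Y+1, Y+2, and Y+10 for later services) can be computed rather than worked out by hand. A minimal sketch, assuming the scheme is read arithmetically as 30000 + X*100 + Y + offset; the function name `node_port` is illustrative. It also rejects results outside the default 30000-32767 range.

```shell
#!/usr/bin/env bash
# node_port CLASS_ID STUDENT_NO [OFFSET]
# Assumption: "3XY" means 30000 + CLASS_ID*100 + STUDENT_NO, plus an optional offset.
node_port() {
  # Strip one leading zero so two-digit inputs such as "08" are not read as octal.
  class=${1#0}; class=${class:-0}
  student=${2#0}; student=${student:-0}
  offset=${3:-0}
  port=$((30000 + class * 100 + student + offset))
  if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
    echo "nodePort $port is outside 30000-32767" >&2
    return 1
  fi
  echo "$port"
}

node_port 18 88      # class 18, student 88, no offset
node_port 18 88 10   # same student with an offset of 10
```

For class 18, student 88, the two calls print 31888 and 31898, matching the values used for the Elasticsearch and MySQL services in this document.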

[zhangsan@k8s-master01 yaml]$ sudo vim es-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: es-svc
  namespace: zhangsan
spec:
  type: NodePort
  ports:
    - name: kibana
      port: 5601
      targetPort: 5601
      nodePort: 31888
    - name: rest
      port: 9200
      targetPort: 9200
    - name: inter
      port: 9300
      targetPort: 9300
  selector:
    app: es

(2) Create es-service

# Create
[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f es-service.yaml
service/es-svc created

# View
[zhangsan@k8s-master01 yaml]$ sudo kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
es-svc NodePort 10.108.214.56 <none> 5601:31888/TCP,9200:31532/TCP,9300:31548/TCP 10h

4. Create the Deployment for the Elasticsearch service

(1) Write the deployment yaml file; remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim es-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: es
  namespace: zhangsan
spec:
  selector:
    matchLabels:
      app: es
  template:
    metadata:
      labels:
        app: es
    spec:
      containers:
        - image: 192.168.18.18:9999/common/elasticsearch:6.6.1
          name: es
          env:
            - name: cluster.name
              value: elasticsearch
            - name: bootstrap.memory_lock
              value: "false"
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
          ports:
            - containerPort: 9200
              name: rest
            - containerPort: 9300
              name: inter-node
          volumeMounts:
            - name: es-data
              mountPath: /usr/share/elasticsearch/data
        - image: 192.168.18.18:9999/common/kibana:6.6.1
          name: kibana
          env:
            - name: ELASTICSEARCH_HOSTS
              value: http://es-svc:9200
          ports:
            - containerPort: 5601
              name: kibana
      volumes:
        - name: es-data
          persistentVolumeClaim:
            claimName: es-pvc

(2) Create the deployment

# Create
[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f es-deployment.yaml
deployment.apps/es created

# View

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

es 1/1 1 1 10h

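Note: if the READY column shows 0/1, something is wrong. Run sudo kubectl get pod to check the pod status; if a pod is abnormal, run sudo kubectl describe pod pod_name to view that pod's Events, or sudo kubectl logs -f pod/pod_name to view its logs.

If the pod whose name starts with es reports an error and its log contains the following: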

ERROR: [1] bootstrap checks failed

[1]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

[2023-06-05T07:43:42,415][INFO ][o.e.n.Node ] [C1xLTO-] stopping ...

[2023-06-05T07:43:42,428][INFO ][o.e.n.Node ] [C1xLTO-] stopped

[2023-06-05T07:43:42,429][INFO ][o.e.n.Node ] [C1xLTO-] closing ...

[2023-06-05T07:43:42,446][INFO ][o.e.n.Node ] [C1xLTO-] closed

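The fix is as follows:

On every k8s node, edit /etc/sysctl.conf and append vm.max_map_count=262144 at the end of the file, then run sudo sysctl -p (or reboot the node) so that the setting takes effect.

sudo vim /etc/sysctl.conf
... existing file content omitted here ...
vm.max_map_count=262144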
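Before redeploying, it can help to confirm whether a node already meets the threshold. A minimal sketch, assuming a Linux node where the current value is readable from /proc/sys/vm/max_map_count; the function name `map_count_ok` is illustrative. The script only reports and prints a suggested command, it does not change any setting.

```shell
#!/usr/bin/env bash
# Sketch: check a node's vm.max_map_count against the value Elasticsearch asks for.
REQUIRED=262144

# Returns 0 when the given value satisfies the Elasticsearch bootstrap check.
map_count_ok() {
  [ "$1" -ge "$REQUIRED" ]
}

# Read the live kernel value; fall back to 0 if the file is not readable.
current=$(cat /proc/sys/vm/max_map_count 2>/dev/null || echo 0)

if map_count_ok "$current"; then
  echo "vm.max_map_count=$current is sufficient"
else
  echo "vm.max_map_count=$current is too low; apply the new value with:"
  echo "  sudo sysctl -w vm.max_map_count=$REQUIRED"
fi
```

`sysctl -w` changes the running kernel only; the /etc/sysctl.conf entry above is what keeps the value across reboots.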

2.3.2 Deploying zookeeper

1. Create the zookeeper service

(1) Write the yaml file that creates the zookeeper Service object; remember to change the namespace.

[zhangsan@k8s-master01 yaml]$ sudo vim zk-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: zk-svc
  namespace: zhangsan
spec:
  ports:
    - name: zkport
      port: 2181
      targetPort: 2181
  selector:
    app: zk

(2) Create and view the zookeeper service

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f zk-service.yaml
service/zk-svc created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
es-svc NodePort 10.108.214.56 <none> 5601:31888/TCP,9200:31532/TCP,9300:31548/TCP 10h
zk-svc ClusterIP 10.107.4.169 <none> 2181/TCP 11s

2. Deploy the zookeeper service

(1) Write the yaml file that deploys the zookeeper service; remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim zk-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: zk
  name: zk
  namespace: zhangsan
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zk
  template:
    metadata:
      labels:
        app: zk
    spec:
      containers:
        - image: 192.168.18.18:9999/common/zookeeper:latest
          imagePullPolicy: IfNotPresent
          name: zk
          ports:
            - containerPort: 2181

(2) Create and view the zookeeper deployment

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f zk-deployment.yaml

deployment.apps/zk created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

es 1/1 1 1 116s

zk 1/1 1 1 4s

2.3.3 Deploying kafka

1. Create the kafka service

(1) Write the yaml file that creates the kafka service; remember to change the namespace.

[zhangsan@k8s-master01 yaml]$ sudo vim kafka-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: kafka-svc
  namespace: zhangsan
spec:
  ports:
    - name: kafkaport
      port: 9092
      targetPort: 9092
  selector:
    app: kafka

(2) Create and view the kafka service

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f kafka-service.yaml
service/kafka-svc created

# View the services

[zhangsan@k8s-master01 yaml]$ sudo kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

es-svc NodePort 10.96.114.80 <none> 5601:31888/TCP,9200:32530/TCP,9300:32421/TCP 8m39s

kafka-svc ClusterIP 10.108.28.89 <none> 9092/TCP 9s

zk-svc ClusterIP 10.107.4.169 <none> 2181/TCP 3h27m

2. Deploy the kafka service

(1) Write the yaml file that deploys the kafka service; remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim kafka-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kafka
  namespace: zhangsan
spec:
  selector:
    matchLabels:
      app: kafka
  template:
    metadata:
      labels:
        app: kafka
    spec:
      containers:
        - image: 192.168.18.18:9999/common/kafka:latest
          name: kafka
          env:
            - name: KAFKA_ADVERTISED_HOST_NAME
              value: kafka-svc
            - name: KAFKA_ADVERTISED_PORT
              value: "9092"
            - name: KAFKA_ZOOKEEPER_CONNECT
              value: zk-svc:2181
          ports:
            - containerPort: 9092

(2) Create and view the kafka deployment

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f kafka-deployment.yaml
deployment.apps/kafka created

# View

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment | grep kafka

kafka 1/1 1 1 30s

2.3.4 Deploying MySQL

MySQL also needs to persist its data, so it requires a pv as well, along with a storage directory whose permissions are opened up.

# Create the directory
[zhangsan@k8s-master01 yaml]$ sudo mkdir /data/zhangsan/gpmall/nfsdata/mysql
[zhangsan@k8s-master01 yaml]$ sudo chmod 777 /data/zhangsan/gpmall/nfsdata/mysql/

1. Create the MySQL persistent volume (pv)

(1) Write the yaml file that creates the pv; remember to change name, path, and the server IP address.

# Create the yaml file
[zhangsan@k8s-master01 yaml]$ sudo vim mysql-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /data/zhangsan/gpmall/nfsdata/mysql
    server: 192.168.218.100 # IP address of the host that holds the directory above

(2) Create and view mysql-pv

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f mysql-pv.yaml
persistentvolume/mysql-pv created

# View

[zhangsan@k8s-master01 yaml]$ sudo kubectl get pv/mysql-pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

mysql-pv 1Gi RWO Retain Available nfs 32s
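The es-pv and mysql-pv manifests differ only in the volume name and the NFS sub-directory, so they can be generated from one template. A minimal sketch, assuming the NFS server address and share root used in this document as defaults; the function name `pv_manifest` is illustrative.

```shell
#!/usr/bin/env bash
# pv_manifest NAME [NFS_SERVER] [NFS_ROOT]
# Prints a PersistentVolume manifest named NAME-pv backed by NFS_ROOT/NAME.
pv_manifest() {
  name=$1
  server=${2:-192.168.218.100}
  root=${3:-/data/zhangsan/gpmall/nfsdata}
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: ${root}/${name}
    server: ${server}
EOF
}

# Print the manifest for the mysql volume.
pv_manifest mysql
```

The output can be saved to a file, or piped straight into kubectl with `pv_manifest mysql | sudo kubectl create -f -`.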

2. Create the pvc

(1) Write the yaml file that creates the pvc; remember to change the namespace.

[zhangsan@k8s-master01 yaml]$ sudo vim mysql-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pvc
  namespace: zhangsan
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs

(2) Create and view mysql-pvc

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f mysql-pvc.yaml

persistentvolumeclaim/mysql-pvc created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get pvc/mysql-pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-pvc Bound mysql-pv 1Gi RWO nfs 17s

3. Create the MySQL service

(1) Write the yaml file that creates the mysql service; remember to change the namespace and the nodePort value. nodePort must fall in the range 30000-32767 and is to be set to 3X(Y+10), where X is your class ID and Y is the last two digits of your 12-digit student number. For student 88 of class 18, for example, the nodePort value is 31898.

[zhangsan@k8s-master01 yaml]$ sudo vim mysql-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql-svc
  namespace: zhangsan
spec:
  type: NodePort
  ports:
    - port: 3306
      targetPort: 3306
      nodePort: 31898
  selector:
    app: mysql

(2) Create and view the MySQL service

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f mysql-svc.yaml
service/mysql-svc created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get svc/mysql-svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
mysql-svc NodePort 10.96.70.204 <none> 3306:31898/TCP 24s

4. Deploy the MySQL service

(1) Write the deployment yaml file for the mysql service; remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim mysql-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
  namespace: zhangsan
spec:
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - image: 192.168.18.18:9999/common/mysql:latest
          name: mysql
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: root
          ports:
            - containerPort: 3306
              name: mysql
          volumeMounts:
            - name: mysql-persistent-storage
              mountPath: /var/lib/mysql
      volumes:
        - name: mysql-persistent-storage
          persistentVolumeClaim:
            claimName: mysql-pvc

(2) Create and view the mysql deployment

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f mysql-deployment.yaml

deployment.apps/mysql created

# 查看

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment/mysql

NAME READY UP-TO-DATE AVAILABLE AGE

mysql 1/1 1 1 39s

2.3.5 Deploying Rabbitmq

Each k8s node needs at least 4 cores and 8 GB of memory.

1. Create the Rabbitmq service

(1) Write the yaml file; remember to change the namespace and nodePort.

nodePort must be 3X(Y+1), where X is the two-digit class ID and Y is the last two digits of the student number. In the example below, 31889 is the nodePort value for student 88 of class 18.

[zhangsan@k8s-master01 yaml]$ sudo vim rabbitmq-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: rabbitmq-svc
  namespace: zhangsan
spec:
  type: NodePort
  ports:
    - name: managementport
      port: 15672
      targetPort: 15672
      nodePort: 31889
    - name: rabbitmqport
      port: 5672
      targetPort: 5672
  selector:
    app: rabbitmq

(2) Create and view the Rabbitmq service

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f rabbitmq-svc.yaml
service/rabbitmq-svc created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get svc/rabbitmq-svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
rabbitmq-svc NodePort 10.102.83.214 <none> 15672:31889/TCP,5672:32764/TCP 4s

2. Deploy the Rabbitmq service

(1) Write the yaml file; remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim rabbitmq-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: rabbitmq
  name: rabbitmq
  namespace: zhangsan
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rabbitmq
  template:
    metadata:
      labels:
        app: rabbitmq
    spec:
      containers:
        - image: 192.168.18.18:9999/common/rabbitmq:management
          imagePullPolicy: IfNotPresent
          name: rabbitmq
          ports:
            - containerPort: 5672
              name: rabbitmqport
            - containerPort: 15672
              name: managementport

(2) Create and view the Rabbitmq deployment

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f rabbitmq-deployment.yaml

deployment.apps/rabbitmq created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment/rabbitmq

NAME READY UP-TO-DATE AVAILABLE AGE

rabbitmq 1/1 1 1 24s

3. Working around a pitfall

The gpmall code relies on a queue in rabbitmq, but the code appears to have a bug that prevents the queue from being created automatically, so the queue has to be created by hand inside the rabbitmq container. The steps are:

(1) Find and enter the pod

# Find the name of the rabbitmq pod
[zhangsan@k8s-master01 yaml]$ sudo kubectl get pod | grep rabbitmq
rabbitmq-77f54bdd4f-xndb4 1/1 Running 0 6m1s

# Enter the pod
[zhangsan@k8s-master01 yaml]$ sudo kubectl exec -it rabbitmq-77f54bdd4f-xndb4 -- /bin/bash
root@rabbitmq-77f54bdd4f-xndb4:/#

(2) Declare the queue inside the pod

root@rabbitmq-77f54bdd4f-xndb4:/# rabbitmqadmin declare queue name=delay_queue auto_delete=false durable=false --username=guest --password=guest
queue declared

(3) Check that the queue exists

root@rabbitmq-77f54bdd4f-xndb4:/# rabbitmqctl list_queues
Timeout: 60.0 seconds ...
Listing queues for vhost / ...
name messages
delay_queue 0

(4) Exit the pod

root@rabbitmq-77f54bdd4f-xndb4:/# exit
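Because the queue is declared with durable=false, it does not survive a restart of the rabbitmq pod and has to be declared again afterwards. The interactive steps above can be collapsed into a single non-interactive command, sketched below under the assumption that the Deployment is named rabbitmq in the zhangsan namespace as in this document. The `run` helper and `DRY_RUN` variable are illustrative names; by default the command is only printed.

```shell
#!/usr/bin/env bash
# Sketch: declare delay_queue without opening a shell in the pod.
NAMESPACE=zhangsan

# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Target the Deployment so the generated pod name does not need to be looked up.
declare_delay_queue() {
  run sudo kubectl exec -n "$NAMESPACE" deploy/rabbitmq -- \
    rabbitmqadmin declare queue name=delay_queue auto_delete=false durable=false \
    --username=guest --password=guest
}

declare_delay_queue
```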

2.3.6 Deploying redis

1. Create the redis service

(1) Write the yaml file that creates the redis service; remember to change the namespace.

[zhangsan@k8s-master01 yaml]$ sudo vim redis-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: redis-svc
  namespace: zhangsan
spec:
  ports:
    - name: redisport
      port: 6379
      targetPort: 6379
  selector:
    app: redis

(2) Create and view the redis service

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f redis-svc.yaml

service/redis-svc created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get svc redis-svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

redis-svc ClusterIP 10.108.200.204 <none> 6379/TCP 14s

2. Deploy the redis service

(1) Write the yaml file that deploys the redis service; remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim redis-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: redis
  name: redis
  namespace: zhangsan
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
        - image: 192.168.18.18:9999/common/redis:latest
          imagePullPolicy: IfNotPresent
          name: redis
          ports:
            - containerPort: 6379

(2) Create and view the redis deployment

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f redis-deployment.yaml

deployment.apps/redis created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment/redis

NAME READY UP-TO-DATE AVAILABLE AGE

redis 1/1 1 1 13s

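3 Deploying the System Modules

The system modules depend on one another, so deploy them in the order below.

3.1 Deploying the User Module

3.1.1 Creating the user-provider Service

1. Write the yaml file that creates the user-provider service; remember to change the namespace.

[zhangsan@k8s-master01 yaml]$ sudo vim user-provider-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: user-provider-svc
  namespace: zhangsan
spec:
  ports:
    - name: port
      port: 80
      targetPort: 80
  selector:
    app: user-provider

2. Create and view the user-provider service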

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f user-provider-svc.yaml

service/user-provider-svc created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get svc/user-provider-svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

user-provider-svc ClusterIP 10.109.120.197 <none> 80/TCP 19s

3.1.2 Deploying the user-provider Service

1. Write the yaml file that deploys the user-provider service; remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim user-provider-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: user-provider
  name: user-provider
  namespace: zhangsan
spec:
  replicas: 1
  selector:
    matchLabels:
      app: user-provider
  template:
    metadata:
      labels:
        app: user-provider
    spec:
      containers:
        - image: 192.168.18.18:9999/gpmall/user-provider:latest
          imagePullPolicy: IfNotPresent
          name: user-provider

2. Deploy and view the user-provider deployment

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f user-provider-deployment.yaml

deployment.apps/user-provider created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment/user-provider

NAME READY UP-TO-DATE AVAILABLE AGE

user-provider 1/1 1 1 21s

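Note: if READY shows 0/1, the deployment has a problem. Run sudo kubectl get pod to check the pod status; if a pod is abnormal, run sudo kubectl logs -f pod_name to view its logs. If the docker image built earlier turns out to be faulty, the nodes keep using their cached copy because imagePullPolicy is IfNotPresent, so run sudo crictl images on every k8s node to list the images and sudo crictl rmi followed by the image ID to remove the faulty one. Then rebuild and push the Docker image and redeploy.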
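Rather than polling `kubectl get deployment` by hand, the check described in the note can be scripted with `kubectl rollout status`, falling back to recent logs when the rollout does not complete. A minimal sketch, assuming the zhangsan namespace and a 120-second timeout chosen for illustration; the `run` helper, `check_deployment` function, and `DRY_RUN` variable are illustrative names, and by default the command is only printed.

```shell
#!/usr/bin/env bash
# Sketch: wait for a Deployment to become ready, show logs if it does not.
NAMESPACE=zhangsan

# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# check_deployment NAME
check_deployment() {
  if ! run sudo kubectl rollout status "deployment/$1" -n "$NAMESPACE" --timeout=120s; then
    # The rollout did not finish in time: print the last log lines for diagnosis.
    run sudo kubectl logs "deployment/$1" -n "$NAMESPACE" --tail=50
    return 1
  fi
}

check_deployment user-provider
```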

3.2 Deploying the gpmall-user Service

3.2.1 Creating the gpmall-user Service

1. Write the yaml file that creates the gpmall-user service; remember to change the namespace.

[zhangsan@k8s-master01 yaml]$ sudo vim gpmall-user-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: gpmall-user-svc
  namespace: zhangsan
spec:
  ports:
    - name: port
      port: 8082
      targetPort: 8082
  selector:
    app: gpmall-user

2. Create and view the gpmall-user service

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f gpmall-user-svc.yaml

service/gpmall-user-svc created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get svc gpmall-user-svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

gpmall-user-svc ClusterIP 10.107.12.83 <none> 8082/TCP 17s

3.2.2 Deploying the gpmall-user Service

1. Write the yaml file that deploys the gpmall-user service; remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim gpmall-user-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: gpmall-user
  name: gpmall-user
  namespace: zhangsan
spec:
  replicas: 1
  selector:
    matchLabels:
      app: gpmall-user
  template:
    metadata:
      labels:
        app: gpmall-user
    spec:
      containers:
        - image: 192.168.18.18:9999/gpmall/gpmall-user:latest
          imagePullPolicy: IfNotPresent
          name: gpmall-user
          ports:
            - containerPort: 8082

2. Deploy and view the gpmall-user deployment

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f gpmall-user-deployment.yaml

deployment.apps/gpmall-user created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment gpmall-user

NAME READY UP-TO-DATE AVAILABLE AGE

gpmall-user 1/1 1 1 22s

3.3 Deploying the Search Module

3.3.1 Writing the yaml File for the search-provider Module

Remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim search-provider-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: search-provider
  name: search-provider
  namespace: zhangsan
spec:
  replicas: 1
  selector:
    matchLabels:
      app: search-provider
  template:
    metadata:
      labels:
        app: search-provider
    spec:
      containers:
        - image: 192.168.18.18:9999/gpmall/search-provider:latest
          imagePullPolicy: IfNotPresent
          name: search-provider

3.3.2 Deploying and Viewing the search-provider Deployment

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f search-provider-deployment.yaml

deployment.apps/search-provider created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment search-provider

NAME READY UP-TO-DATE AVAILABLE AGE

search-provider 1/1 1 1 27s

3.4 Deploying the Order Module

3.4.1 Writing the yaml File for the order-provider Module

Remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim order-provider-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: order-provider
  name: order-provider
  namespace: zhangsan
spec:
  replicas: 1
  selector:
    matchLabels:
      app: order-provider
  template:
    metadata:
      labels:
        app: order-provider
    spec:
      containers:
        - image: 192.168.18.18:9999/gpmall/order-provider:latest
          imagePullPolicy: IfNotPresent
          name: order-provider

3.4.2 Deploying and Viewing the order-provider Deployment

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f order-provider-deployment.yaml

deployment.apps/order-provider created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment order-provider

NAME READY UP-TO-DATE AVAILABLE AGE

order-provider 1/1 1 1 97s

3.5 Deploying the Shopping Module

3.5.1 Deploying the shopping-provider Module

1. Write the yaml file for the shopping-provider module; remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim shopping-provider-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: shopping-provider
  name: shopping-provider
  namespace: zhangsan
spec:
  replicas: 1
  selector:
    matchLabels:
      app: shopping-provider
  template:
    metadata:
      labels:
        app: shopping-provider
    spec:
      containers:
        - image: 192.168.18.18:9999/gpmall/shopping-provider:latest
          imagePullPolicy: IfNotPresent
          name: shopping-provider

2. Deploy and view the shopping-provider deployment

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f shopping-provider-deployment.yaml

deployment.apps/shopping-provider created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment shopping-provider

NAME READY UP-TO-DATE AVAILABLE AGE

shopping-provider 1/1 1 1 14s

3.5.2 Creating the Shopping Service

1. Write the yaml file that creates the gpmall-shopping service; remember to change the namespace.

[zhangsan@k8s-master01 yaml]$ sudo vim gpmall-shopping-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: gpmall-shopping-svc
  namespace: zhangsan
spec:
  ports:
    - name: port
      port: 8081
      targetPort: 8081
  selector:
    app: gpmall-shopping

2. Create and view the gpmall-shopping service

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f gpmall-shopping-svc.yaml

service/gpmall-shopping-svc created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get svc gpmall-shopping-svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

gpmall-shopping-svc ClusterIP 10.105.229.200 <none> 8081/TCP 7m10s

3.5.3 Deploying the gpmall-shopping Service

1. Write the yaml file that deploys the gpmall-shopping service; remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim gpmall-shopping-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: gpmall-shopping
  name: gpmall-shopping
  namespace: zhangsan
spec:
  replicas: 1
  selector:
    matchLabels:
      app: gpmall-shopping
  template:
    metadata:
      labels:
        app: gpmall-shopping
    spec:
      containers:
        - image: 192.168.18.18:9999/gpmall/gpmall-shopping:latest
          imagePullPolicy: IfNotPresent
          name: gpmall-shopping
          ports:
            - containerPort: 8081

2. Deploy and view the gpmall-shopping deployment

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f gpmall-shopping-deployment.yaml

deployment.apps/gpmall-shopping created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment gpmall-shopping

NAME              READY   UP-TO-DATE   AVAILABLE   AGE
gpmall-shopping   1/1     1            1           18s

3.6 Deploy the comment module

3.6.1 Write the YAML file that deploys comment-provider

Remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim comment-provider-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: comment-provider
  name: comment-provider
  namespace: zhangsan
spec:
  replicas: 1
  selector:
    matchLabels:
      app: comment-provider
  template:
    metadata:
      labels:
        app: comment-provider
    spec:
      containers:
      - image: 192.168.18.18:9999/gpmall/comment-provider
        imagePullPolicy: IfNotPresent
        name: comment-provider

3.6.2 Deploy the comment-provider module and check its deployment

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f comment-provider-deployment.yaml

deployment.apps/comment-provider created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment comment-provider

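NAME               READY   UP-TO-DATE   AVAILABLE   AGE
comment-provider   1/1     1            1           65s

3.7 Deploy the frontend module

3.7.1 Create the frontend service

1. Write the YAML file that creates the gpmall-frontend Service; remember to change the namespace.

The nodePort must be set to 3X(Y+2), where X is the two-digit class ID and Y is the last two digits of the student number. For example, the 31890 used below is the value for student No. 88 of class 18: 3, then 18, then 88 + 2 = 90.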
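The 3X(Y+2) rule can be sketched as a small shell function. The helper name node_port is hypothetical; the range check assumes the cluster keeps the Kubernetes default NodePort range of 30000-32767, which the source does not state explicitly.

```shell
# Hypothetical helper: derive the nodePort "3X(Y+2)" from the class ID X
# and the last two digits Y of the student number.
node_port() {
  local class_id=$1 student_no=$2 port
  # Strip one leading zero so that inputs such as "08" are not read as octal.
  port=$(printf '3%02d%02d' "${class_id#0}" "$(( ${student_no#0} + 2 ))")
  # Assumption: the default NodePort range 30000-32767 is in effect.
  if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
    echo "nodePort $port is outside the range 30000-32767" >&2
    return 1
  fi
  echo "$port"
}

# Student No. 88 of class 18, as in the example above.
node_port 18 88
```

Under the default range, the rule cannot produce a valid port for every combination (for instance a class ID above 27), so a check like this surfaces the problem before kubectl rejects the Service.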

[zhangsan@k8s-master01 yaml]$ sudo vim gpmall-frontend-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: gpmall-frontend-svc
  namespace: zhangsan
spec:
  type: NodePort
  ports:
  - port: 9999
    targetPort: 9999
    nodePort: 31890
  selector:
    app: gpmall-frontend

2. Create the gpmall-frontend Service and check it

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f gpmall-frontend-svc.yaml

service/gpmall-frontend-svc created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get svc gpmall-frontend-svc

NAME                  TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
gpmall-frontend-svc   NodePort   10.99.154.113   <none>        9999:31890/TCP   15s

3.7.2 Deploy the gpmall-frontend service

1. Write the YAML file that deploys the gpmall-frontend service; remember to change the namespace and the image address.

[zhangsan@k8s-master01 yaml]$ sudo vim gpmall-frontend-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: gpmall-frontend
  namespace: zhangsan
spec:
  selector:
    matchLabels:
      app: gpmall-frontend
  template:
    metadata:
      labels:
        app: gpmall-frontend
    spec:
      containers:
      - image: 192.168.18.18:9999/gpmall/gpmall-front:latest
        imagePullPolicy: IfNotPresent
        name: gpmall-frontend
        ports:
        - containerPort: 9999

2. Deploy the gpmall-frontend service and check its deployment

[zhangsan@k8s-master01 yaml]$ sudo kubectl create -f gpmall-frontend-deployment.yaml

deployment.apps/gpmall-frontend created

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment gpmall-frontend

NAME              READY   UP-TO-DATE   AVAILABLE   AGE
gpmall-frontend   1/1     1            1           17s

3.8 Confirm the status

3.8.1 Confirm the status of all pods

The STATUS of every pod must be Running.

[zhangsan@k8s-master01 yaml]$ sudo kubectl get pod -o wide

NAME                                READY   STATUS    RESTARTS   AGE   IP           NODE           NOMINATED NODE   READINESS GATES
comment-provider-59cb4fd467-84fbh   1/1     Running   0          15h   10.0.3.205   k8s-master01   <none>           <none>
es-bb896c98-6ggf4                   2/2     Running   0          23h   10.0.0.103   k8s-node02     <none>           <none>
gpmall-frontend-6486fb87f6-7gsxn    1/1     Running   0          15h   10.0.0.221   k8s-node02     <none>           <none>
gpmall-shopping-fc7d766b4-dzlgb     1/1     Running   0          15h   10.0.1.135   k8s-master02   <none>           <none>
gpmall-user-6ddcf889bb-5w58x        1/1     Running   0          20h   10.0.2.23    k8s-master03   <none>           <none>
kafka-7c6cdc8647-rx5tb              1/1     Running   0          22h   10.0.1.236   k8s-master02   <none>           <none>
mysql-8976b8bb4-2sfkq               1/1     Running   0          14h   10.0.3.131   k8s-master01   <none>           <none>
order-provider-74bbcd6dd4-f8k87     1/1     Running   0          16h   10.0.4.41    k8s-node01     <none>           <none>
rabbitmq-77f54bdd4f-xndb4           1/1     Running   0          21h   10.0.4.1     k8s-node01     <none>           <none>
redis-bc8ff7957-2xn8z               1/1     Running   0          20h   10.0.2.15    k8s-master03   <none>           <none>
search-provider-f549c8d9d-ng4dv     1/1     Running   0          15h   10.0.3.115   k8s-master01   <none>           <none>
shopping-provider-75b7cd5d6-6767x   1/1     Running   0          17h   10.0.1.55    k8s-master02   <none>           <none>
user-provider-7f6d7f8b85-hj5m5      1/1     Running   0          20h   10.0.4.115   k8s-node01     <none>           <none>
zk-84bfd67c77-llk5w                 1/1     Running   0          24h   10.0.1.18    k8s-master02   <none>           <none>
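With fourteen pods, scanning the STATUS column by eye is error-prone. As a minimal sketch, the filter below reads the output of kubectl get pod from standard input and prints only the pods that are not Running; the function name not_running_pods is hypothetical, and it assumes the default column layout shown above, where STATUS is the third column.

```shell
# Hypothetical helper: list pods whose STATUS column is not "Running".
# Reads `kubectl get pod` output (default or -o wide layout) from stdin.
not_running_pods() {
  # NR > 1 skips the header row; $1 is NAME and $3 is STATUS.
  awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}

# Usage against the cluster (needs kubectl access):
#   sudo kubectl get pod | not_running_pods
```

Empty output means that every pod passes the requirement of this step.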

3.8.2 Check the status of all services

[zhangsan@k8s-master01 yaml]$ sudo kubectl get svc

NAME                  TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                                        AGE
es-svc                NodePort    10.96.114.80     <none>        5601:31888/TCP,9200:32530/TCP,9300:32421/TCP   7h22m
gpmall-frontend-svc   NodePort    10.99.154.113    <none>        9999:31890/TCP                                 4m23s
gpmall-shopping-svc   ClusterIP   10.98.89.99      <none>        8081/TCP                                       77m
gpmall-user-svc       ClusterIP   10.107.12.83     <none>        8082/TCP                                       4h48m
kafka-svc             ClusterIP   10.108.28.89     <none>        9092/TCP                                       7h13m
mysql-svc             NodePort    10.98.41.1       <none>        3306:30306/TCP                                 5h58m
rabbitmq-svc          NodePort    10.102.83.214    <none>        15672:31889/TCP,5672:32764/TCP                 5h37m
redis-svc             ClusterIP   10.108.200.204   <none>        6379/TCP                                       5h17m
user-provider-svc     ClusterIP   10.109.120.197   <none>        80/TCP                                         5h6m
zk-svc                ClusterIP   10.107.4.169     <none>        2181/TCP                                       10h

3.8.3 Check the status of all deployments

AVAILABLE must be 1 for every deployment.

[zhangsan@k8s-master01 yaml]$ sudo kubectl get deployment

NAME                READY   UP-TO-DATE   AVAILABLE   AGE
comment-provider    1/1     1            1           19m
es                  1/1     1            1           7h22m
gpmall-frontend     1/1     1            1           2m49s
gpmall-shopping     1/1     1            1           14m
gpmall-user         1/1     1            1           4h45m
kafka               1/1     1            1           7h8m
mysql               1/1     1            1           5h58m
order-provider      1/1     1            1           49m
rabbitmq            1/1     1            1           5h32m
redis               1/1     1            1           5h16m
search-provider     1/1     1            1           16m
shopping-provider   1/1     1            1           84m
user-provider       1/1     1            1           4h55m
zk                  1/1     1            1           8h
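The "AVAILABLE is 1" rule holds because every deployment here runs a single replica. A slightly more general check, sketched below, compares the two halves of the READY column instead; the function name unready_deployments is hypothetical and the default kubectl get deployment column layout is assumed.

```shell
# Hypothetical helper: list deployments whose READY column "ready/desired"
# shows fewer ready replicas than desired.
# Reads `kubectl get deployment` output from stdin.
unready_deployments() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1, $2 }'
}

# Usage against the cluster (needs kubectl access):
#   sudo kubectl get deployment | unready_deployments
```

For a single deployment, kubectl rollout status deployment/name gives the same information and waits until the rollout finishes.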

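4 Test access

At this point gpmall is essentially deployed. Use 【kubectl get pod -o wide】 to find the node on which gpmall-frontend runs, then access the site through that node's IP address and the corresponding port; the address has the form IP-address:3X(Y+2). With the default settings, a NodePort Service is also reachable through the IP address of any other node. For a K8S cluster, the IP address used here is usually that of the leader node or the VIP that serves external traffic.

4.1 Connect to the database

After the first deployment, the page opens but shows no product information, because the database still has to be created and populated. Connect to the database as follows:

4.1.1 Create a MySQL connection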

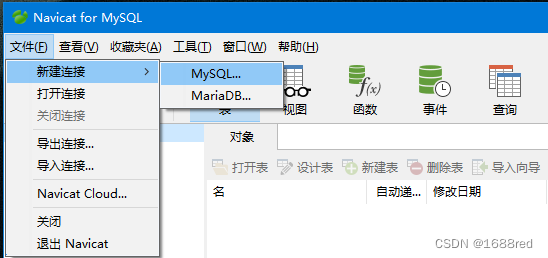

Use Navicat to create a new MySQL connection, as shown below.

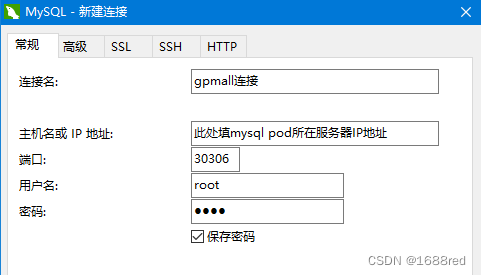

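In the window that opens, enter the host IP address or host name. This is usually the IP address of the host on which the mysql pod runs, which 【kubectl get pod -o wide】 shows. If the k8s cluster has several master nodes, enter the IP address of the leader node or the VIP that serves external traffic.

The port is 30306 by default; it can also be checked with 【kubectl get svc】. As shown below, port 3306 of the Service is mapped to node port 30306.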

[zhangsan@k8s-master01 yaml]$ sudo kubectl get svc | grep mysql

mysql-svc NodePort 10.98.41.1 <none> 3306:30306/TCP 21h
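The PORT(S) column uses the form port:nodePort/protocol, with several mappings separated by commas. As a minimal sketch, the function below extracts the node port that belongs to a given Service port from such a field; the name mapped_node_port is hypothetical.

```shell
# Hypothetical helper: print the nodePort mapped to a Service port in a
# PORT(S) field such as "15672:31889/TCP,5672:32764/TCP".
# Prints nothing for ClusterIP entries such as "8081/TCP".
mapped_node_port() {
  local ports=$1 service_port=$2
  echo "$ports" | tr ',' '\n' |
    awk -F'[:/]' -v p="$service_port" 'NF == 3 && $1 == p { print $2 }'
}

# The mysql-svc mapping shown above.
mapped_node_port "3306:30306/TCP" 3306
```

When kubectl access is available, kubectl get svc mysql-svc -o jsonpath='{.spec.ports[0].nodePort}' returns the same value without any text parsing.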

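The default account/password is root/root.

4.1.2 Open the connection

(1) Double-click the newly created MySQL connection on the left to open it. If error 2003 appears as shown below (2003 - Can't connect to MySQL server on '10.200.7.99' (10038)), the host IP address entered earlier is probably wrong. Correct the host address in the connection's "Connection Properties" and try to open the connection again.

(2) If error 1151 appears as shown below, either update Navicat or run the following commands:

- Enter the mysql pod: kubectl exec -it mysql-pod-name -- /bin/bash

- Log in to mysql with the default account root/root: mysql -u root -p

- Set the root password so that it never expires: ALTER USER 'root'@'%' IDENTIFIED BY 'root' PASSWORD EXPIRE NEVER;

- Switch root to the mysql_native_password authentication plugin: ALTER USER 'root'@'%' IDENTIFIED WITH mysql_native_password BY 'root';

- Reload the privileges: FLUSH PRIVILEGES;

The execution process is as follows: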

[root@k8s-master-1 yaml]# kubectl exec -it mysql-8976b8bb4-wdnzz -- /bin/bash

root@mysql-8976b8bb4-wdnzz:/# mysql -u root -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 21

Server version: 8.0.27 MySQL Community Server - GPL

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> ALTER USER 'root'@'%' IDENTIFIED BY 'root' PASSWORD EXPIRE NEVER;

Query OK, 0 rows affected (0.01 sec)

mysql> ALTER USER 'root'@'%' IDENTIFIED WITH mysql_native_password BY 'root';

Query OK, 0 rows affected (0.00 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.01 sec)

mysql> exit;

Bye
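The interactive session above can also be run non-interactively. The sketch below only generates the two statements that change the authentication plugin; the function name native_password_sql is hypothetical, and the account values are the defaults used in this document.

```shell
# Hypothetical helper: print the SQL that switches an account to the
# mysql_native_password plugin, as done interactively above.
# Arguments: user, host pattern, password.
native_password_sql() {
  local user=$1 host=$2 password=$3
  cat <<SQL
ALTER USER '$user'@'$host' IDENTIFIED WITH mysql_native_password BY '$password';
FLUSH PRIVILEGES;
SQL
}

# Show the statements for the default root/root account.
native_password_sql root '%' root
```

Assuming the pod name from kubectl get pod, the output can be piped into the container, for example: native_password_sql root '%' root | sudo kubectl exec -i mysql-pod-name -- mysql -uroot -proot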

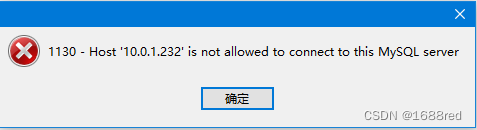

(3) If error 1130 appears as shown below (1130 - Host '10.0.1.232' is not allowed to connect to this MySQL server), MySQL probably only allows access from localhost.

This can be fixed as follows.

(1) Look up the name of the mysql pod

[zhangsan@k8s-master01 yaml]$ sudo kubectl get pod | grep mysql

mysql-8976b8bb4-2sfkq 1/1 Running 0 14h

(2) Enter the mysql pod container

[zhangsan@k8s-master01 yaml]$ sudo kubectl exec -it mysql-8976b8bb4-2sfkq -- /bin/bash

root@mysql-8976b8bb4-2sfkq:/#

(3) Log in to mysql inside the container; the default account/password is root/root

root@mysql-8976b8bb4-2sfkq:/# mysql -u root -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 10232

Server version: 8.0.27 MySQL Community Server - GPL

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

(4) Run the following 3 statements at the mysql prompt inside the container

mysql> use mysql;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> update user set host = '%' where user = 'root';

Query OK, 0 rows affected (0.00 sec)

Rows matched: 1 Changed: 0 Warnings: 0

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

4.1.3 Create a new database

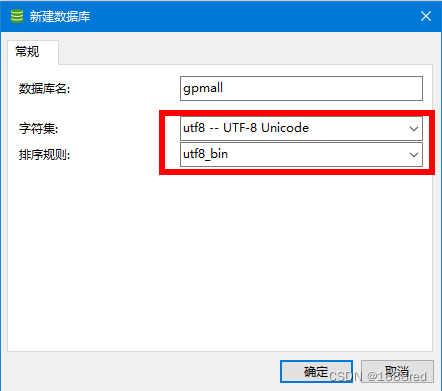

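Once the connection opens successfully, create the database: right-click the database connection and choose 【New Database】, as shown below.

In the window that opens, enter the database name and set the character set and collation as shown below.

4.1.4 Import the database tables

The gpmall project author already provides the table script: the gpmall.sql file in the db_script directory of the source code. Import this file with Navicat as follows: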

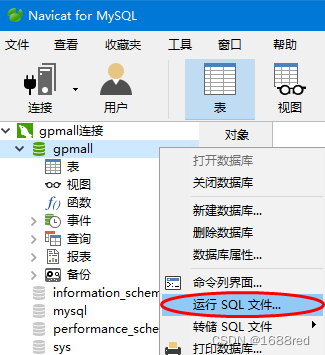

Double-click the database just created, then right-click it and choose 【Execute SQL File...】, as shown below.

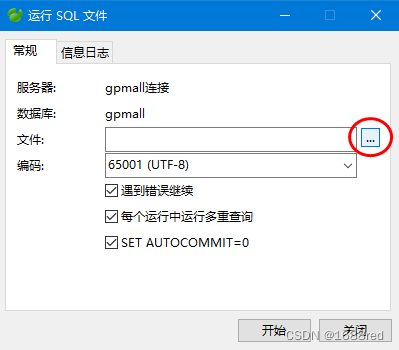

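In the window that opens, click the button after 【File】 and select the gpmall.sql file in the db_script directory of the source code.

Click 【Start】 to begin the import. The screen after a successful import is shown below.

After the import succeeds, right-click the tables node on the left and choose 【Refresh】 to see the database tables.

4.2 Test access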

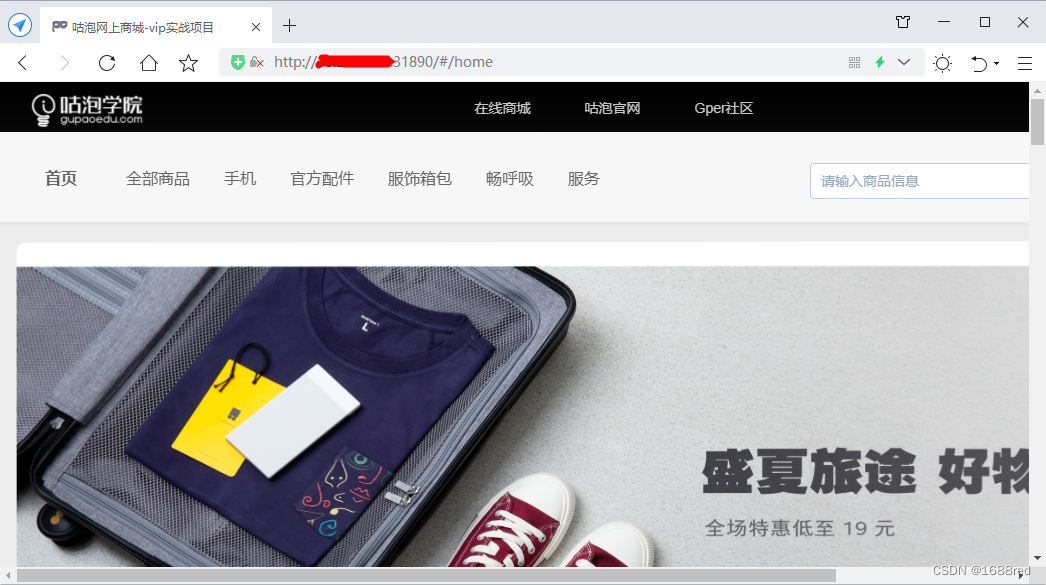

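Normally, refreshing the page now shows the page below.

The default test account is test/test; log in with it to try the site.

If the products still do not appear, run the following commands in order to redeploy shopping-provider and gpmall-shopping.

# First delete the existing deployments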

sudo kubectl delete -f shopping-provider-deployment.yaml

sudo kubectl delete -f gpmall-shopping-deployment.yaml

# Redeploy

sudo kubectl create -f shopping-provider-deployment.yaml

sudo kubectl create -f gpmall-shopping-deployment.yaml

Appendix: FAQ

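(1) A K8S node reports NotReady

If some nodes turn NotReady a while after K8S has been deployed successfully, log in to the affected node and run 【systemctl restart kubelet.service】 to restart the kubelet service.

(2) How to delete all resources in a given namespace

During or after the experiment, if you need to delete all resources in a namespace, run 【kubectl delete all --all -n namespace-name】. Note that "all" covers the common workload resources such as pods, services and deployments; ConfigMaps, Secrets and PVCs, for example, are not included.

(3) The rabbitmq container keeps restarting with status CrashLoopBackOff

If 【kubectl logs -f pod/pod-name -n namespace-name】 shows an empty log, the cause is usually insufficient resources. Consider a larger ECS flavor; in practice, 4 cores and 8 GB of memory or more made the problem disappear.

(4) If the es pod is in an abnormal state and the log command prints "Defaulted container "es" out of: es, kibana" followed by an error, as shown below: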

[root@k8s-master-1 ~]# kubectl logs -f es-84b85675cd-l4qk2

Defaulted container "es" out of: es, kibana

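Error from server (BadRequest): container "es" in pod "es-84b85675cd-l4qk2" is waiting to start: ContainerCreating

The cause may be a wrong NFS shared directory (in the /etc/exports configuration file), an NFS service that was not restarted after the change, or a wrong path in es-pv.yaml. Check each of these carefully.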
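The first of these causes can be checked mechanically. As a minimal sketch, the function below tests whether a directory is listed in an exports file; the name is_exported and the example directory are hypothetical, and the real directory must be taken from es-pv.yaml.

```shell
# Hypothetical helper: succeed if a directory is listed as an export.
# Arguments: directory, optional exports file (defaults to /etc/exports).
is_exported() {
  local dir=$1 exports_file=${2:-/etc/exports}
  # The first field of each exports line is the exported directory.
  awk -v d="$dir" '$1 == d { found = 1 } END { exit !found }' "$exports_file"
}

# Usage on the NFS server (the directory is an assumed example):
#   is_exported /data/nfs/es && echo "exported" || echo "missing from /etc/exports"
```

After /etc/exports is corrected, exportfs -ra on the NFS server reloads the export list, and kubectl describe pod on the es pod shows the mount events that explain why the container is stuck in ContainerCreating.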
