K8S Series: Building a Kubernetes Cluster

Deploying a Kubernetes cluster with one master and two worker nodes on CentOS

Installation environment

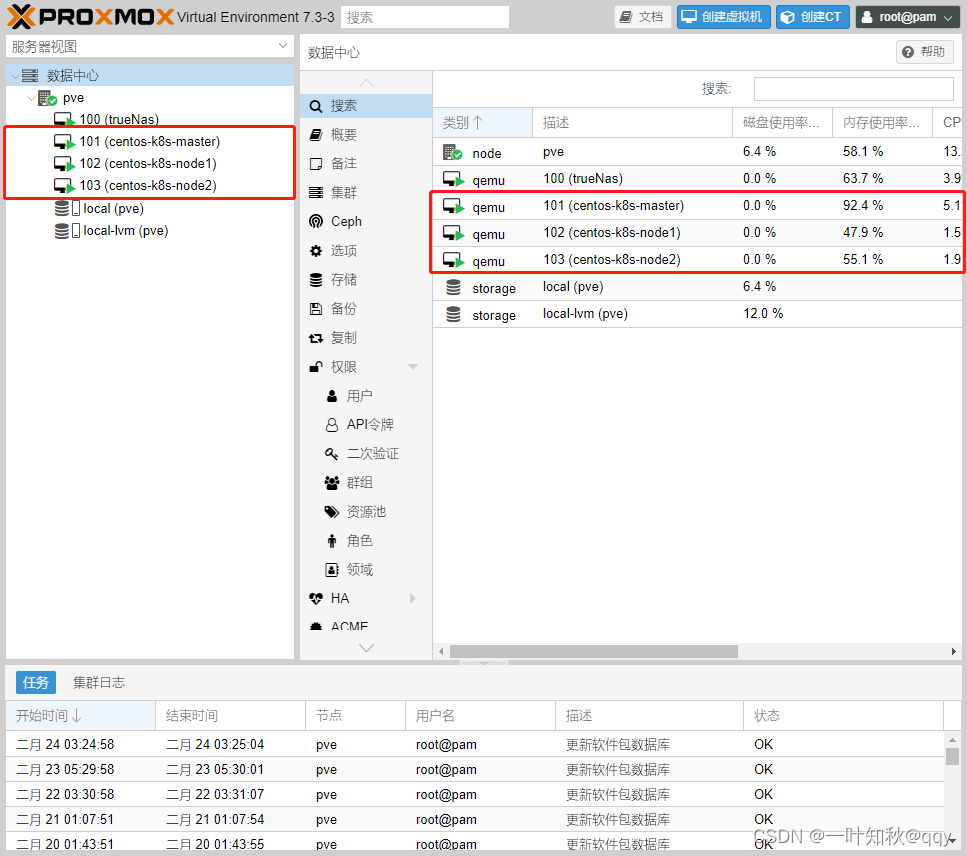

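Three CentOS machines are created as PVE virtual machines; for the setup procedure, see: Centos篇-Centos Minimal安装

Hardware configuration used for this installation

CPU: 2 cores

Memory: 2 GB

Storage: 64 GB

Environment

Operating system: CentOS 7.9

Kernel version: 6.2.0-1.el7.elrepo.x86_64

master: 192.168.1.12

node1: 192.168.1.13

node2: 192.168.1.14

SSH public-key login is already configured on all three target machines; for how to set it up, see: Centos篇-Centos ssh公钥登录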

Environment preparation

Disable the firewall (firewalld) and SELinux

Command to disable the firewall:

systemctl stop firewalld && systemctl disable firewalld && iptables -F

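firewalld and iptables are the newer and older generations of firewall tooling. Both can be turned off first; if you later decide to use iptables when deploying k8s, it can be turned back on, and if you use another approach, both firewalld and iptables can simply stay off.

Command to disable SELinux: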

sed -i 's/enforcing/disabled/' /etc/selinux/config && setenforce 0

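SELinux is a kernel-level security module that controls the permissions of application services through security contexts, acting as an access-control layer between applications and the operating system. Not every program is adapted to it, and its rules can interfere with otherwise normal services (permission problems, for example), so it is usually disabled. Note that setenforce 0 only switches to permissive mode until the next reboot; the change in /etc/selinux/config is what makes it permanent.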
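
The sed expression above replaces every occurrence of "enforcing" in the file, including the ones in its comment lines. A minimal sketch of a narrower variant that only rewrites the SELINUX= directive is shown below; the function name disable_selinux_config and the idea of passing the file path as an argument are illustrative assumptions, not part of the original procedure.

```shell
# Hypothetical helper: set SELINUX=disabled in the given config file,
# leaving comments and other directives (such as SELINUXTYPE) untouched.
disable_selinux_config() {
    file=$1
    tmp=$(mktemp) || return 1
    # Only a line that starts with "SELINUX=" is rewritten.
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$file" > "$tmp" &&
        cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return $status
}

# Usage on a real host (as root):
#   disable_selinux_config /etc/selinux/config && setenforce 0
```

Writing through a temporary file and copying the result back keeps the permissions of the original file unchanged.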

Disable the swap partition

Command to disable it temporarily:

swapoff -a

Command to disable it permanently:

sed -ri 's/.*swap.*/#&/' /etc/fstab

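Disable it temporarily first, then permanently.

By default kubelet refuses to start while swap is enabled (its fail-swap-on setting defaults to true), and swapping also degrades container performance, so disabling swap has become the standard practice.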
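
The sed command above prefixes "#" to every line that contains "swap", including lines that are already commented, so running it twice stacks comment markers. As a sketch of a re-runnable alternative (the function name comment_out_swap is hypothetical), the following only comments out active entries whose filesystem type is swap:

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Lines that are already commented are left alone, so it is safe to re-run.
comment_out_swap() {
    file=$1
    tmp=$(mktemp) || return 1
    # Field 3 of an fstab entry is the filesystem type.
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$file" > "$tmp" && cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return $status
}

# Usage on a real host (as root):
#   swapoff -a && comment_out_swap /etc/fstab
```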

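Edit the hosts file

Many hosts end up with the same name, either because it is the installer default or because the virtual machines were cloned, so set a distinct hostname on each one. If, like me, you already gave each machine its own hostname during installation, this step can be skipped.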

master:

hostnamectl set-hostname centos-k8s-master.local

node1:

hostnamectl set-hostname centos-k8s-node1.local

node2:

hostnamectl set-hostname centos-k8s-node2.local

Open /etc/hosts (vim /etc/hosts) and add the following:

192.168.1.12 centos-k8s-master.local

192.168.1.13 centos-k8s-node1.local

192.168.1.14 centos-k8s-node2.local

Each host's IP address can now be resolved from its hostname.
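
Since the same three entries have to be added on every machine, the edit can also be scripted. The sketch below is one possible way to do it without creating duplicates; add_host_entry is a hypothetical helper name, and it takes the file path as an argument so it can be tried on a copy first.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME
# already appears as a hostname on a non-comment line.
add_host_entry() {
    file=$1
    ip=$2
    name=$3
    if ! awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage on a real host (as root), with the addresses from this article:
#   add_host_entry /etc/hosts 192.168.1.12 centos-k8s-master.local
#   add_host_entry /etc/hosts 192.168.1.13 centos-k8s-node1.local
#   add_host_entry /etc/hosts 192.168.1.14 centos-k8s-node2.local
```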

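Adjust kernel parameters

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

sysctl --system

The net.bridge.* keys exist only while the br_netfilter kernel module is loaded; if sysctl --system reports them as missing, run modprobe br_netfilter first and apply the settings again.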

Load the ip_vs kernel modules

These modules are required only if kube-proxy runs in ipvs mode; this article uses iptables.

modprobe ip_vs

modprobe ip_vs_rr

modprobe ip_vs_wrr

modprobe ip_vs_sh

modprobe nf_conntrack_ipv4

Load them automatically at boot:

cat > /etc/modules-load.d/ip_vs.conf << EOF
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack_ipv4
EOF
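
One caveat, stated as my understanding rather than something from the original article: from Linux 4.19 onward nf_conntrack_ipv4 was merged into nf_conntrack, so on a newer kernel such as the 6.2 kernel used here, modprobe nf_conntrack_ipv4 is expected to fail. The sketch below picks the module name from a kernel release string; conntrack_module_for is a hypothetical helper name.

```shell
# Hypothetical helper: print the conntrack module name for a kernel release
# string such as the output of `uname -r`.
conntrack_module_for() {
    release=$1
    major=${release%%.*}          # "6" from "6.2.0-1.el7..."
    rest=${release#*.}            # "2.0-1.el7..."
    minor=${rest%%[!0-9]*}        # "2"
    if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
        echo nf_conntrack
    else
        echo nf_conntrack_ipv4
    fi
}

# Usage on a real host (as root):
#   modprobe "$(conntrack_module_for "$(uname -r)")"
```

The same name would then go into /etc/modules-load.d/ip_vs.conf in place of the fixed nf_conntrack_ipv4 entry.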

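Update the kernel (optional)

I updated the kernel on my own systems, but this is not required and the step can be skipped.

Enable the ELRepo repository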

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm

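Once the ELRepo repository is enabled, list the available kernel packages with:

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

Install the kernel

Install the latest mainline kernel (kernel-ml):

yum --enablerepo=elrepo-kernel install kernel-ml -y

Set the default kernel

Set the default GRUB kernel entry:

grub2-set-default 0

Reboot

init 6

Verify

Check that the kernel was updated with:

uname -r

Make the new kernel the default

Open /etc/default/grub and set GRUB_DEFAULT=0, which makes the first kernel entry on the GRUB menu the default kernel: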

GRUB_TIMEOUT=5

GRUB_DEFAULT=0

GRUB_DISABLE_SUBMENU=true

GRUB_TERMINAL_OUTPUT="console"

GRUB_CMDLINE_LINUX="rd.lvm.lv=centos/root rd.lvm.lv=centos/swap crashkernel=auto rhgb quiet"

GRUB_DISABLE_RECOVERY="true"

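Regenerate the GRUB configuration so that the change takes effect (on BIOS systems this is typically grub2-mkconfig -o /boot/grub2/grub.cfg), then reboot once more to finish.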
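
GRUB_DEFAULT=0 only helps if the new kernel really is the first menu entry. As a rough sketch for checking that, the following prints each top-level menu entry with its index; list_grub_entries is a hypothetical helper name and the path in the usage comment is the usual CentOS 7 BIOS location, which is an assumption about your system.

```shell
# Hypothetical helper: print "INDEX : TITLE" for each top-level menuentry
# in a GRUB 2 configuration file.
list_grub_entries() {
    # Splitting on single quotes puts the entry title in field 2.
    awk -F\' '$1 == "menuentry " { printf "%d : %s\n", n++, $2 }' "$1"
}

# Usage on a CentOS 7 BIOS host (path is an assumption):
#   list_grub_entries /boot/grub2/grub.cfg
```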

Install Docker

Configure the Alibaba Cloud yum repository

yum install wget -y

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

Install a specific Docker version

List all available Docker versions:

yum list docker-ce.x86_64 --showduplicates |sort

Pick a version to install; I installed 23.0.1-1.el7.

yum -y install docker-ce-23.0.1-1.el7 docker-ce-cli-23.0.1-1.el7

Edit the Docker configuration file

Edit /etc/docker/daemon.json

mkdir -p /etc/docker/

cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://gqs7xcfd.mirror.aliyuncs.com","https://hub-mirror.c.163.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF

Start the Docker service

systemctl daemon-reload && systemctl enable docker && systemctl start docker

Install kubeadm, kubelet and kubectl

Configure the Alibaba Cloud yum repository for k8s

cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

List the available kubelet versions

kubeadm, kubelet and kubectl must be installed at the same version, so checking kubelet is usually enough; use the same version for the other two.

yum list kubelet --showduplicates

Install a specific version of kubeadm, kubelet and kubectl

yum install -y kubelet-1.23.6 kubeadm-1.23.6 kubectl-1.23.6

Enable kubelet at boot

systemctl enable kubelet

Deploy the k8s master node

Initialize the master node

kubeadm init \
  --kubernetes-version 1.23.6 \
  --apiserver-advertise-address=192.168.1.12 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=10.245.0.0/16 \
  --image-repository registry.aliyuncs.com/google_containers

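--kubernetes-version must match the version installed above; here it is 1.23.6.

--service-cidr sets the Service network; it must not conflict with the node network.

--pod-network-cidr sets the Pod network; it must not conflict with the node network or the Service network.

--image-repository registry.aliyuncs.com/google_containers sets the image registry. The default registry, k8s.gcr.io, is not reachable from mainland China, so the Alibaba Cloud mirror is used instead.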
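
To make the "must not conflict" requirement concrete, here is a small sketch that checks whether two IPv4 CIDR ranges overlap. Two ranges overlap exactly when they agree on the bits of the shorter prefix. The function names are hypothetical, and 192.168.1.0/24 in the usage comment is only my assumption about the node network, inferred from the node addresses above.

```shell
# Hypothetical helper: convert a dotted IPv4 address to an integer.
ip_to_int() (
    IFS=.
    # Word-splitting on "." yields the four octets as $1..$4.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Hypothetical helper: succeed (exit 0) if the two CIDR ranges overlap.
cidrs_overlap() (
    a_ip=${1%/*}; a_len=${1#*/}
    b_ip=${2%/*}; b_len=${2#*/}
    # Compare both networks under the shorter of the two prefixes.
    len=$a_len
    if [ "$b_len" -lt "$len" ]; then len=$b_len; fi
    mask=$(( (4294967295 << (32 - len)) & 4294967295 ))
    a=$(ip_to_int "$a_ip")
    b=$(ip_to_int "$b_ip")
    [ $(( a & mask )) -eq $(( b & mask )) ]
)

# Usage with the values from this article (node network is an assumption):
#   cidrs_overlap 10.96.0.0/16 10.245.0.0/16   || echo "service/pod: no overlap"
#   cidrs_overlap 192.168.1.0/24 10.96.0.0/16  || echo "node/service: no overlap"
#   cidrs_overlap 192.168.1.0/24 10.245.0.0/16 || echo "node/pod: no overlap"
```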

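Wait for the images to be pulled

The images can also be pulled in advance with:

kubeadm config images pull --kubernetes-version 1.23.6 --image-repository registry.aliyuncs.com/google_containers

With the Alibaba Cloud mirror the pull is usually reasonably fast.

When initialization finishes, two important messages are printed:

1. Something like "initialized successfully!"

2. kubeadm join xxx.xxx.xxx.xxx:6443 --token …

Message 1 confirms that the master node was initialized successfully. Message 2 is the command that worker nodes use to join the cluster; it is not reproduced here.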

Configure kubectl

Three more commands are printed after a successful initialization; they create the kubeconfig file for the cluster:

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

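Join the worker nodes to the cluster

Every worker node must also go through the steps above (except initializing the master node).

Run the join command on the worker nodes

Use the join command printed after the master node was initialized to add the worker nodes to the cluster. Mine was:

kubeadm join 192.168.1.12:6443 --token 37xfed.jqnw7nsll8jhdfl8 \
  --discovery-token-ca-cert-hash sha256:27b08986844ca2306353287507370ce7ba0caef7d4608860db4c85c762149bf6

Run this command on every worker node.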
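
Bootstrap tokens expire after 24 hours by default, so if the join command is lost or stale, a fresh one can be printed on the master with kubeadm token create --print-join-command. Copy-paste mistakes in the token or hash are a common reason for a failed join; the sketch below is a simple shape check before running it. valid_join_args is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if TOKEN and HASH have the shape kubeadm expects:
# a token of the form [a-z0-9]{6}.[a-z0-9]{16} and a sha256: hash of 64 hex digits.
valid_join_args() {
    token=$1
    hash=$2
    printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' &&
        printf '%s\n' "$hash" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# Usage:
#   valid_join_args "$TOKEN" "$HASH" || echo "token or hash looks malformed"
```

This only validates the format; it does not tell you whether the token still exists on the master.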

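List the cluster nodes on the master node

kubectl get nodes

The output lists the three nodes. Mine was captured after the cluster was already up and running; before the network plugin is installed, all nodes should be in the NotReady state.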

Install the network plugin: flannel

Install flannel

Download the flannel YAML file from the official repository:

wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

Or use mine directly:

apiVersion: v1
kind: Namespace
metadata:
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
  name: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - clustercidrs
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.245.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
kind: ConfigMap
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-cfg
  namespace: kube-flannel
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-ds
  namespace: kube-flannel
spec:
  selector:
    matchLabels:
      app: flannel
      k8s-app: flannel
  template:
    metadata:
      labels:
        app: flannel
        k8s-app: flannel
        tier: node
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      containers:
      - args:
        - --ip-masq
        - --kube-subnet-mgr
        command:
        - /opt/bin/flanneld
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        image: docker.io/flannel/flannel:v0.21.2
        name: kube-flannel
        resources:
          requests:
            cpu: 100m
            memory: 50Mi
        securityContext:
          capabilities:
            add:
            - NET_ADMIN
            - NET_RAW
          privileged: false
        volumeMounts:
        - mountPath: /run/flannel
          name: run
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
        - mountPath: /run/xtables.lock
          name: xtables-lock
      hostNetwork: true
      initContainers:
      - args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        command:
        - cp
        image: docker.io/flannel/flannel-cni-plugin:v1.1.2
        name: install-cni-plugin
        volumeMounts:
        - mountPath: /opt/cni/bin
          name: cni-plugin
      - args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        command:
        - cp
        image: docker.io/flannel/flannel:v0.21.2
        name: install-cni
        volumeMounts:
        - mountPath: /etc/cni/net.d
          name: cni
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
      priorityClassName: system-node-critical
      serviceAccountName: flannel
      tolerations:
      - effect: NoSchedule
        operator: Exists
      volumes:
      - hostPath:
          path: /run/flannel
        name: run
      - hostPath:
          path: /opt/cni/bin
        name: cni-plugin
      - hostPath:
          path: /etc/cni/net.d
        name: cni
      - configMap:
          name: kube-flannel-cfg
        name: flannel-cfg
      - hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
        name: xtables-lock

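If you use the YAML file downloaded from the official repository, note that Network inside net-conf.json must match the pod-network-cidr used when initializing the master node.

The upstream default is: 10.244.0.0/16

I changed it to: 10.245.0.0/16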
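
The edit can also be scripted rather than done by hand. The following is a sketch under the assumption that the manifest contains a single "Network": "..." line, as the one shown in this article does; both function names are hypothetical.

```shell
# Hypothetical helper: print the CIDR configured under "Network" in the manifest.
flannel_network() {
    sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1"
}

# Hypothetical helper: rewrite the "Network" value in the manifest to CIDR.
set_flannel_network() {
    file=$1
    cidr=$2
    tmp=$(mktemp) || return 1
    # "#" is used as the sed delimiter because the CIDR contains "/".
    sed 's#\("Network": *"\)[^"]*"#\1'"$cidr"'"#' "$file" > "$tmp" &&
        cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return $status
}

# Usage, with the pod-network-cidr passed to kubeadm init:
#   set_flannel_network kube-flannel.yml 10.245.0.0/16
#   flannel_network kube-flannel.yml
```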

Apply the YAML file:

kubectl apply -f kube-flannel.yml

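Check the flannel deployment

kubectl -n kube-flannel get pods -o wide

The manifest above creates flannel in the kube-flannel namespace; older manifests deployed it to kube-system (as in the pod listing later in this article), in which case use -n kube-system instead.

Wait for the flannel pods to become ready.

Check the status of the worker nodes

Once flannel is deployed successfully, all nodes should be Ready.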
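
Rather than re-running kubectl get nodes by eye, the check can be scripted. kubectl itself offers kubectl wait --for=condition=Ready nodes --all --timeout=300s for this; the sketch below is a smaller building block that counts nodes whose STATUS column is not exactly Ready. count_not_ready is a hypothetical helper name.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print how many nodes are not plainly "Ready".
# A status such as "Ready,SchedulingDisabled" is counted as not ready.
count_not_ready() {
    awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage on the master node:
#   kubectl get nodes --no-headers | count_not_ready
```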

Set the kube-proxy mode to iptables

If the CentOS kernel version is higher than 4, this step can be skipped:

kubectl get cm kube-proxy -n kube-system -o yaml | sed 's/mode: ""/mode: "iptables"/' | kubectl apply -f -

kubectl -n kube-system rollout restart daemonsets.apps kube-proxy

kubectl -n kube-flannel rollout restart daemonsets.apps kube-flannel-ds

(If your flannel DaemonSet lives in kube-system instead, use -n kube-system in the last command.)

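Deploy metrics-server

Modify the API server

First check whether the cluster's API server has the API Aggregator routing flag (enable-aggregator-routing) turned on:

ps -ef |grep apiserver

Compare with:

ps -ef | grep apiserver | grep enable-aggregator-routing

If the second command prints nothing, the API server needs to be modified: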

[root@centos-k8s-master ~]# ps -ef | grep apiserver

root 772 20181 0 12:17 pts/0 00:00:00 grep --color=auto apiserver

root 1999 1909 2 Feb22 ? 01:39:39 kube-apiserver --advertise-address=192.168.1.12 --allow-privileged=true --authorization-mode=Node,RBAC --client-ca-file=/etc/kubernetes/pki/ca.crt --enable-admission-plugins=NodeRestriction --enable-bootstrap-token-auth=true --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key --etcd-servers=https://127.0.0.1:2379 --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key --requestheader-allowed-names=front-proxy-client --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-group-headers=X-Remote-Group --requestheader-username-headers=X-Remote-User --secure-port=6443 --service-account-issuer=https://kubernetes.default.svc.cluster.local --service-account-key-file=/etc/kubernetes/pki/sa.pub --service-account-signing-key-file=/etc/kubernetes/pki/sa.key --service-cluster-ip-range=10.96.0.0/16 --tls-cert-file=/etc/kubernetes/pki/apiserver.crt --tls-private-key-file=/etc/kubernetes/pki/apiserver.key

[root@centos-k8s-master ~]# ps -ef | grep apiserver | grep enable-aggregator-routing

[root@centos-k8s-master ~]#

It is not enabled by default, so edit the k8s API server manifest:

sudo vim /etc/kubernetes/manifests/kube-apiserver.yaml

Add --enable-aggregator-routing=true under spec.containers.command.

The API server restarts automatically; verify shortly afterwards with:

[root@centos-k8s-master ~]# ps -ef | grep apiserver | grep enable-aggregator-routing

root 4010 3984 11 12:22 ? 00:00:03 kube-apiserver --advertise-address=192.168.1.12 --allow-privileged=true --authorization-mode=Node,RBAC --client-ca-file=/etc/kubernetes/pki/ca.crt --enable-admission-plugins=NodeRestriction --enable-bootstrap-token-auth=true --enable-aggregator-routing=true --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key --etcd-servers=https://127.0.0.1:2379 --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key --requestheader-allowed-names=front-proxy-client --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-group-headers=X-Remote-Group --requestheader-username-headers=X-Remote-User --secure-port=6443 --service-account-issuer=https://kubernetes.default.svc.cluster.local --service-account-key-file=/etc/kubernetes/pki/sa.pub --service-account-signing-key-file=/etc/kubernetes/pki/sa.key --service-cluster-ip-range=10.96.0.0/16 --tls-cert-file=/etc/kubernetes/pki/apiserver.crt --tls-private-key-file=/etc/kubernetes/pki/apiserver.key

[root@centos-k8s-master ~]#
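
As the first listing shows, a plain ps -ef | grep apiserver also matches the grep process itself. An alternative sketch is to check the static pod manifest directly; aggregator_routing_enabled is a hypothetical helper name, and the manifest path in the usage comment is the one edited above.

```shell
# Hypothetical helper: succeed if the manifest passes
# --enable-aggregator-routing=true as a command-line item.
aggregator_routing_enabled() {
    grep -Eq '^[[:space:]]*-[[:space:]]+--enable-aggregator-routing=true[[:space:]]*$' "$1"
}

# Usage on the master node:
#   aggregator_routing_enabled /etc/kubernetes/manifests/kube-apiserver.yaml \
#       && echo enabled || echo "not enabled"
```

This confirms what is configured, not what is running, so the ps check remains useful after the API server has restarted.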

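Download and modify the installation file

Download the installation file first, using the latest version directly:

wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Edit the downloaded components.yaml (renamed to metrics-server.yaml): add --kubelet-insecure-tls, and replace the default official image with the Alibaba Cloud mirror, because pulling from the official registry kept failing.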

image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.2
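
Both changes can be applied with a small filter instead of a manual edit. This is only a sketch: patch_metrics_server is a hypothetical helper, it assumes the manifest has a "- --metric-resolution=..." argument line to anchor on (as the file shown in this article does), and it rewrites any image: line that mentions metrics-server. It is not idempotent, so run it on the freshly downloaded file.

```shell
# Hypothetical helper: print the manifest FILE with --kubelet-insecure-tls added
# after the --metric-resolution argument and the metrics-server image set to IMAGE.
patch_metrics_server() {
    awk -v img="$2" '
        # Replace the container image, keeping the original indentation.
        /^[[:space:]]*image:.*metrics-server/ { sub(/image:.*/, "image: " img) }
        { print }
        # Emit the extra argument right after --metric-resolution,
        # reusing that line so the indentation matches.
        /^[[:space:]]*- --metric-resolution=/ {
            sub(/- --metric-resolution=.*/, "- --kubelet-insecure-tls")
            print
        }
    ' "$1"
}

# Usage:
#   patch_metrics_server components.yaml \
#       registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.2 \
#       > metrics-server.yaml
```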

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.2
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

Run the deployment command:

kubectl apply -f metrics-server.yaml

A quick test:

[root@centos-k8s-master ~]# kubectl get pods -n kube-system
NAME                                              READY   STATUS    RESTARTS        AGE
coredns-6d8c4cb4d-874c6                           1/1     Running   0               2d14h
coredns-6d8c4cb4d-vrxjv                           1/1     Running   0               2d14h
etcd-centos-k8s-master.local                      1/1     Running   1 (2d13h ago)   2d14h
kube-apiserver-centos-k8s-master.local            1/1     Running   0               26m
kube-controller-manager-centos-k8s-master.local   1/1     Running   2 (26m ago)     2d14h
kube-flannel-ds-bv4rv                             1/1     Running   0               2d13h
kube-flannel-ds-tv254                             1/1     Running   0               2d13h
kube-flannel-ds-v9hjg                             1/1     Running   0               2d13h
kube-proxy-5wfn9                                  1/1     Running   0               2d13h
kube-proxy-lwwzf                                  1/1     Running   0               2d13h
kube-proxy-w6vxq                                  1/1     Running   0               2d13h
kube-scheduler-centos-k8s-master.local            1/1     Running   2 (26m ago)     2d14h
metrics-server-55db4f88bc-rbddg                   1/1     Running   0               5m46s

[root@centos-k8s-master ~]# kubectl top nodes
NAME                      CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
centos-k8s-master.local   85m          4%     1329Mi          70%
centos-k8s-node1.local    28m          1%     581Mi           30%
centos-k8s-node2.local    28m          1%     614Mi           32%

[root@centos-k8s-master ~]#

Copy the credentials to the jump host

Command: scp -r root@192.168.1.12:/root/.kube/ /root/

Command format: scp [OPTIONS] [[user@]src_host:]file [[user@]dest_host:]file
