[kubernetes] k8s v1.20 High-Availability Deployment with Multiple Master Nodes


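I. Installation environment

1. Hardware requirements

Memory: 2 GB of RAM or more

CPU: 2 cores or more

Disk: 30 GB or more

2. Environment used in this guide:

Operating system: CentOS 7.9

Kernel version: 3.10.0-1160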

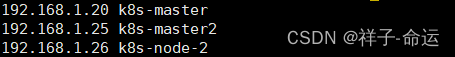

| VM | IP address | Role |
|---|---|---|
| k8s-master | 192.168.1.20 | master |
| k8s-master2 | 192.168.1.25 | master |
| k8s-node-2 | 192.168.1.26 | node |

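II. Preliminary environment preparation (run on all three machines)

1. Disable the firewall

```bash
systemctl stop firewalld && systemctl disable firewalld
```

2. Disable SELinux

`setenforce 0` switches SELinux to permissive mode immediately. The `disabled` setting written to the config file takes effect after the next reboot.

```bash
sed -i 's/enforcing/disabled/' /etc/selinux/config && setenforce 0
```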

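3. Disable swap

```bash
# Turn swap off now, then comment out the swap entries in /etc/fstab so it stays off after a reboot
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab
```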
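To confirm that swap is really off on each machine, here is a minimal sketch that counts the active swap devices listed in /proc/swaps. The first line of that file is a header. The function name `count_active_swap` is hypothetical. The optional file argument exists only so the function can be tried on a sample file.

```bash
# Hypothetical helper: print the number of active swap devices.
# /proc/swaps has one header line followed by one line per active device.
count_active_swap() {
  local swaps_file="${1:-/proc/swaps}"
  # Skip the header (NR > 1) and count the remaining non-empty lines
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$swaps_file"
}

# Usage on each machine: prints 0 once swap is off
# count_active_swap
```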

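4. Set the hostnames

Run the matching command on each host:

```bash
# On 192.168.1.20
hostnamectl set-hostname k8s-master && bash
# On 192.168.1.25
hostnamectl set-hostname k8s-master2 && bash
# On 192.168.1.26
hostnamectl set-hostname k8s-node-2 && bash
```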

5. Edit the hosts file

On every machine, add the IP address and hostname of all three hosts to /etc/hosts.
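Here is a minimal sketch of this step. It assumes the three addresses and hostnames from the table in Section I. `add_host_entries` is a hypothetical helper that appends an entry only when the hostname is not already present, so running it twice does not create duplicate lines.

```bash
# Hypothetical helper: append "IP hostname" lines to a hosts file,
# skipping any hostname that already appears in it (so reruns are harmless).
add_host_entries() {
  local hosts_file="$1"; shift
  local entry ip name
  for entry in "$@"; do
    ip="${entry%% *}"    # text before the first space
    name="${entry#* }"   # text after the first space
    # Exact match against the hostname columns (field 2 onward) of each line
    if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}

# Usage (as root, on all three machines):
# add_host_entries /etc/hosts \
#   "192.168.1.20 k8s-master" "192.168.1.25 k8s-master2" "192.168.1.26 k8s-node-2"
```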

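6. Load br_netfilter and set the kernel parameters for bridged traffic and IP forwarding

```bash
modprobe br_netfilter
# Reload the module at every login. On systemd hosts, listing it in a file under
# /etc/modules-load.d/ also loads it at boot, without needing a login.
echo "modprobe br_netfilter" >> /etc/profile

cat > /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
```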

7. Apply the configuration

```bash
sysctl -p /etc/sysctl.d/k8s.conf
```
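To check that the three parameters took effect, here is a small sketch that reads them from /proc/sys, the same place `sysctl -n` reads them from. `read_sysctl` and `check_k8s_sysctls` are hypothetical names. The bridge keys exist only after br_netfilter is loaded.

```bash
# Hypothetical helper: print a kernel parameter the way `sysctl -n` would,
# by reading /proc/sys (net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward).
read_sysctl() {
  cat "/proc/sys/$(printf '%s' "$1" | tr . /)"
}

# Hypothetical helper: print every listed parameter whose value is not 1;
# returns non-zero if any of them is missing or disabled.
check_k8s_sysctls() {
  local key status=0
  for key in "$@"; do
    if [ "$(read_sysctl "$key" 2>/dev/null)" != "1" ]; then
      echo "not enabled: $key"
      status=1
    fi
  done
  return "$status"
}

# Usage:
# check_k8s_sysctls net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward
```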

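8. Time synchronization

```bash
yum install ntpdate -y
ntpdate cn.pool.ntp.org
# Resync at minute 0 of every hour ("* */1 * * *" would run every minute)
echo "0 */1 * * * /usr/sbin/ntpdate cn.pool.ntp.org >> /tmp/tmp.txt" >> /var/spool/cron/root
service crond restart
```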

9. Install the base packages

```bash
yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz \
    gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel \
    vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server \
    socat ipvsadm conntrack ntpdate telnet openssh-clients
```
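As a quick check that tools such as socat, conntrack and ipvsadm ended up on PATH, here is a sketch with a hypothetical `missing_commands` helper. It prints each name it cannot find.

```bash
# Hypothetical helper: print each command from the argument list that is not on PATH.
missing_commands() {
  local cmd
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
  done
}

# Usage: no output means every listed tool was found
# missing_commands wget socat ipvsadm conntrack telnet
```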

10. Install Docker

```bash
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum install -y docker-ce-20.10.7-3.el7
systemctl start docker && systemctl enable docker

# registry-mirrors: image pull accelerator; exec-opts: use the systemd cgroup driver
cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": ["https://k73dxl89.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

systemctl daemon-reload && systemctl restart docker
```
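After Docker restarts, it should report systemd as its cgroup driver, matching the exec-opts above. Here is a minimal sketch. `uses_systemd_cgroup` is a hypothetical helper that does a plain text check on daemon.json rather than a JSON parse. `docker info --format '{{.CgroupDriver}}'` asks the running daemon directly.

```bash
# Hypothetical helper: succeed if a Docker daemon.json selects the systemd cgroup driver.
# This is a plain text check of the exec-opts value, not a full JSON parse.
uses_systemd_cgroup() {
  grep -q '"native.cgroupdriver=systemd"' "${1:-/etc/docker/daemon.json}"
}

# Usage on each machine; the docker command should then print: systemd
# uses_systemd_cgroup && docker info --format '{{.CgroupDriver}}'
```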

III. Installing k8s

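1. Back up the original repo files and switch to the Aliyun yum mirrors (run on all three machines; the worker node also needs kubelet and kubeadm for the kubeadm join in step 5)

```bash
mkdir /root/repo.bak
# This also moves epel.repo (added by epel-release in Section II, step 9). nginx and
# nginx-mod-stream in Section IV come from EPEL, so copy it back from /root/repo.bak if needed.
mv /etc/yum.repos.d/* /root/repo.bak/
cd /etc/yum.repos.d/
wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
EOF

yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6
systemctl enable kubelet
```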
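To confirm that all three packages were installed at 1.20.6, here is a sketch with a hypothetical `extract_version` helper. It pulls the vX.Y.Z part out of each tool's version string.

```bash
# Hypothetical helper: print the first vX.Y.Z token found in a version string.
extract_version() {
  grep -oE 'v[0-9]+\.[0-9]+\.[0-9]+' <<<"$1" | head -n 1
}

# Usage: each line should print v1.20.6
# extract_version "$(kubelet --version)"
# extract_version "$(kubeadm version -o short)"
# extract_version "$(kubectl version --client --short)"
```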

2. Create the kubeadm-config.yaml file (on k8s-master)

```yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.6
controlPlaneEndpoint: 192.168.1.27:16443   # VIP address
imageRepository: registry.aliyuncs.com/google_containers
apiServer:
  certSANs:
  - 192.168.1.20
  - 192.168.1.25
  - 192.168.1.26
  - 192.168.1.27   # keepalived virtual IP
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs   # ipvs mode, for better performance
```
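If your addresses differ from the ones above, you can generate the YAML instead of editing it by hand. This is a sketch with a hypothetical `render_kubeadm_config` function. It takes the VIP, the nginx port and the certificate SANs as arguments and prints the same configuration as above, without the comments.

```bash
# Hypothetical helper: print the kubeadm configuration above with the VIP, the nginx
# port and the certificate SANs passed as arguments instead of hard-coded.
render_kubeadm_config() {
  local vip="$1" port="$2"; shift 2
  local san
  cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.6
controlPlaneEndpoint: ${vip}:${port}
imageRepository: registry.aliyuncs.com/google_containers
apiServer:
  certSANs:
EOF
  # One SAN per machine IP, plus the VIP itself
  for san in "$@" "$vip"; do
    printf '  - %s\n' "$san"
  done
  cat <<'EOF'
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}

# Usage on k8s-master:
# render_kubeadm_config 192.168.1.27 16443 192.168.1.20 192.168.1.25 192.168.1.26 > kubeadm-config.yaml
```

Optionally, `kubeadm config images pull --config kubeadm-config.yaml` pre-pulls the control-plane images from the Aliyun mirror before running kubeadm init.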

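3. Run the following on k8s-master. nginx and keepalived from Section IV must already be running, because controlPlaneEndpoint points at the VIP 192.168.1.27:16443.

```bash
kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=SystemVerification
```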

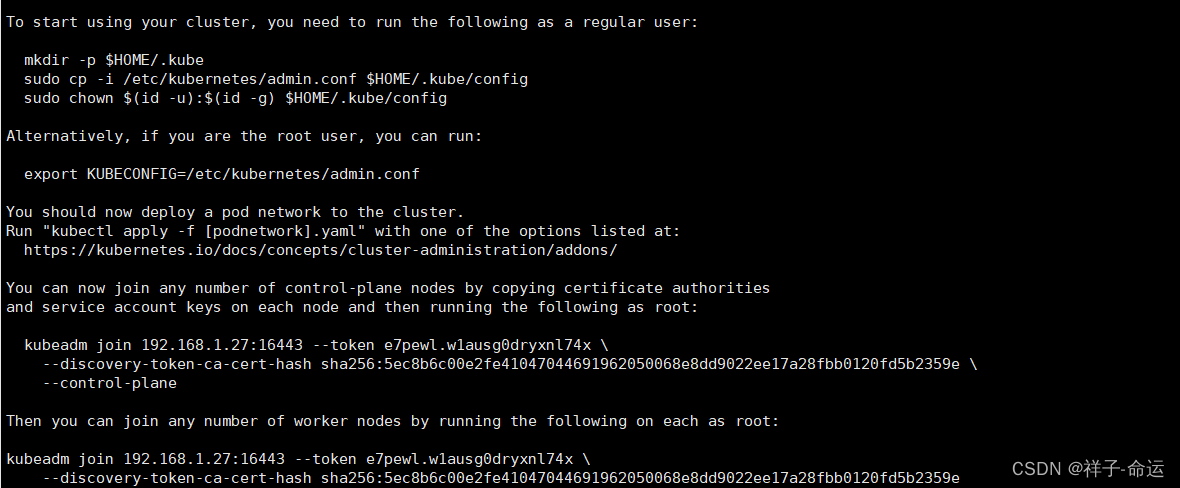

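If you see this output, the initialization succeeded.

Then run the following commands, as the output instructs:

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```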

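4. Add the k8s-master2 node (the certificate copy runs on k8s-master; everything else runs on k8s-master2)

On k8s-master2, create the directories for the certificates:

```bash
cd /root && mkdir -p /etc/kubernetes/pki/etcd && mkdir -p ~/.kube/
```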

On k8s-master, copy the certificates to k8s-master2:

```bash
scp /etc/kubernetes/pki/ca.crt k8s-master2:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/ca.key k8s-master2:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/sa.key k8s-master2:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/sa.pub k8s-master2:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-master2:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/front-proxy-ca.key k8s-master2:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/etcd/ca.crt k8s-master2:/etc/kubernetes/pki/etcd/
scp /etc/kubernetes/pki/etcd/ca.key k8s-master2:/etc/kubernetes/pki/etcd/
```
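The eight scp commands follow one pattern, so they can also be written as a loop. Here is a sketch with a hypothetical `copy_control_plane_certs` function. Setting `SCP=echo` prints the commands instead of copying, which is useful for a dry run. As an alternative to copying by hand, kubeadm also supports `kubeadm init --upload-certs` together with `kubeadm join --certificate-key`, which distributes these files through the cluster.

```bash
# Hypothetical helper: copy the shared CA and service-account files listed above
# to another master. Set SCP=echo to print the commands instead of running them.
copy_control_plane_certs() {
  local host="$1" pki=/etc/kubernetes/pki f dest
  for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key etcd/ca.crt etcd/ca.key; do
    case "$f" in
      etcd/*) dest="$pki/etcd/" ;;   # the etcd CA goes into the etcd subdirectory
      *)      dest="$pki/" ;;
    esac
    "${SCP:-scp}" "$pki/$f" "$host:$dest"
  done
}

# Usage on k8s-master:
# copy_control_plane_certs k8s-master2
```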

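On k8s-master2, run kubeadm join to join the cluster as a control-plane node. The token and hash below come from the author's kubeadm init output. Use the values printed by your own kubeadm init.

```bash
kubeadm join 192.168.1.27:16443 --token e7pewl.w1ausg0dryxnl74x \
    --discovery-token-ca-cert-hash sha256:5ec8b6c00e2fe41047044691962050068e8dd9022ee17a28fbb0120fd5b2359e \
    --control-plane --ignore-preflight-errors=SystemVerification
```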
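By default, the bootstrap token printed by kubeadm init expires after 24 hours. Running `kubeadm token create --print-join-command` on k8s-master prints a fresh worker join command, and you can append `--control-plane` to it when joining a master. The hash can also be recomputed from the cluster CA. The pipeline below is the one given in the kubeadm join documentation, wrapped in a hypothetical `ca_cert_hash` function. It assumes an RSA CA key, which is what kubeadm generates by default.

```bash
# Hypothetical wrapper around the documented pipeline: print the hex value that follows
# "sha256:" in --discovery-token-ca-cert-hash for the given CA certificate.
ca_cert_hash() {
  local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on k8s-master:
# echo "sha256:$(ca_cert_hash)"
```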

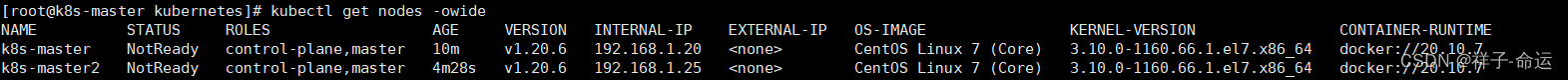

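Check the node status. Nodes report NotReady until the Calico network plugin from step 6 is installed.

```bash
kubectl get nodes -owide
```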

5. Add the worker node (run on k8s-node-2, with the token and hash from your own kubeadm init)

```bash
kubeadm join 192.168.1.27:16443 --token e7pewl.w1ausg0dryxnl74x \
    --discovery-token-ca-cert-hash sha256:5ec8b6c00e2fe41047044691962050068e8dd9022ee17a28fbb0120fd5b2359e
```

6. Install the Calico network plugin (calico.yaml)

```bash
wget -O calico.yaml https://docs.projectcalico.org/manifests/calico.yaml
kubectl apply -f calico.yaml
kubectl get nodes -owide
```
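Nodes stay NotReady until the Calico pods are running. `kubectl wait --for=condition=Ready nodes --all --timeout=300s` waits for that directly. The sketch below shows another approach: a hypothetical `not_ready_nodes` filter that picks out the nodes that are not Ready from `kubectl get nodes --no-headers` output.

```bash
# Hypothetical filter: read `kubectl get nodes --no-headers` output on stdin and
# print the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage: poll until every node reports Ready
# until [ -z "$(kubectl get nodes --no-headers | not_ready_nodes)" ]; do sleep 5; done
```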

IV. keepalived + nginx for kube-apiserver high availability (on both master nodes)

1. Install keepalived + nginx

```bash
yum install nginx keepalived -y
```

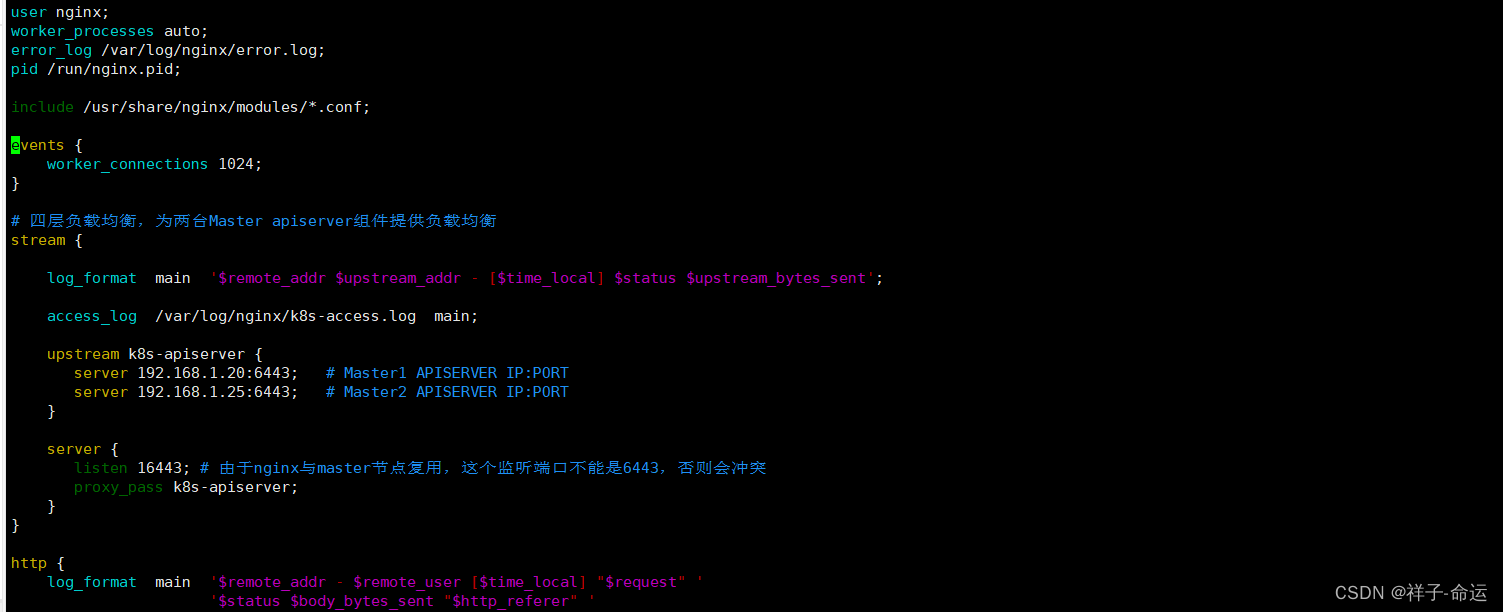

Edit /etc/nginx/nginx.conf and add the following stream block at the top level of the file, outside the http { } block:

```nginx
# Layer-4 load balancing across the kube-apiservers on the two masters
stream {
    log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
    access_log /var/log/nginx/k8s-access.log main;

    upstream k8s-apiserver {
        server 192.168.1.20:6443;   # Master1 APISERVER IP:PORT
        server 192.168.1.25:6443;   # Master2 APISERVER IP:PORT
    }

    server {
        listen 16443;   # nginx shares the master nodes with kube-apiserver, so this must not be 6443 or the ports would conflict
        proxy_pass k8s-apiserver;
    }
}
```
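Before you start nginx, `nginx -t` validates the configuration. The stream block requires nginx-mod-stream, which is installed in step 4. Once nginx is running, port 16443 should be listening. Here is a sketch with a hypothetical `port_listening` filter over `ss -lnt` output.

```bash
# Hypothetical filter: read `ss -lnt` output on stdin and succeed if something
# is listening on the given TCP port (the local address is the 4th column).
port_listening() {
  awk -v p="$1" '$4 ~ (":" p "$") { found = 1 } END { exit !found }'
}

# Usage on each master, after nginx is started in step 4:
# nginx -t && ss -lnt | port_listening 16443 && echo "nginx is listening on 16443"
```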

2. Configure the primary keepalived (MASTER)

```bash
cat > /etc/keepalived/keepalived.conf <<EOF
global_defs {
   notification_email {
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_MASTER
}

vrrp_script check_nginx {
   script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
   state MASTER
   interface ens33          # change to the actual NIC name
   virtual_router_id 51     # VRRP router ID: the same on MASTER and BACKUP, unique per VRRP instance
   priority 100             # priority; the backup server uses 90
   advert_int 1             # VRRP advertisement (heartbeat) interval, default 1 second
   authentication {
      auth_type PASS
      auth_pass 1111
   }
   # Virtual IP
   virtual_ipaddress {
      192.168.1.27/24
   }
   track_script {
      check_nginx
   }
}
EOF
```

3. Configure the backup keepalived (BACKUP)

```bash
cat > /etc/keepalived/keepalived.conf <<EOF
global_defs {
   notification_email {
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_BACKUP
}

vrrp_script check_nginx {
   script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
   state BACKUP
   interface ens33          # change to the actual NIC name
   virtual_router_id 51     # VRRP router ID: the same on MASTER and BACKUP, unique per VRRP instance
   priority 90              # priority; lower than the MASTER's 100
   advert_int 1             # VRRP advertisement (heartbeat) interval, default 1 second
   authentication {
      auth_type PASS
      auth_pass 1111
   }
   # Virtual IP
   virtual_ipaddress {
      192.168.1.27/24
   }
   track_script {
      check_nginx
   }
}
EOF
```
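The primary and backup files must agree on virtual_router_id, authentication and the VIP, and they differ in state and priority. Here is a sketch of a hypothetical `render_keepalived_conf` function that prints either file from a role name, so the shared settings cannot drift apart. It omits the empty notification_email/SMTP settings. It also gives the backup its own router_id (NGINX_BACKUP), which is only a local label for the node.

```bash
# Hypothetical helper: print keepalived.conf for the "master" or "backup" role.
# Optional arguments: interface name (default ens33) and VIP (default 192.168.1.27/24).
render_keepalived_conf() {
  local role="$1" iface="${2:-ens33}" vip="${3:-192.168.1.27/24}"
  local state priority router_id
  case "$role" in
    master) state=MASTER; priority=100; router_id=NGINX_MASTER ;;
    backup) state=BACKUP; priority=90;  router_id=NGINX_BACKUP ;;
    *) echo "role must be master or backup" >&2; return 1 ;;
  esac
  cat <<EOF
global_defs {
   router_id ${router_id}
}
vrrp_script check_nginx {
   script "/etc/keepalived/check_nginx.sh"
}
vrrp_instance VI_1 {
   state ${state}
   interface ${iface}
   virtual_router_id 51
   priority ${priority}
   advert_int 1
   authentication {
      auth_type PASS
      auth_pass 1111
   }
   virtual_ipaddress {
      ${vip}
   }
   track_script {
      check_nginx
   }
}
EOF
}

# Usage: on the primary, render_keepalived_conf master > /etc/keepalived/keepalived.conf
```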

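4. nginx health-check script (on both masters)

```bash
# 'EOF' is quoted so that $(...), $$ and $counter are written into the script as-is,
# instead of being expanded once when the file is created
cat > /etc/keepalived/check_nginx.sh <<'EOF'
#!/bin/bash
# Count nginx master processes (their command line contains /usr/sbin/nginx),
# excluding grep itself and this script's PID
counter=$(ps -ef | grep nginx | grep sbin | egrep -cv "grep|$$")
if [ "$counter" -eq 0 ]; then
    # nginx is down: try to start it once
    service nginx start
    sleep 2
    counter=$(ps -ef | grep nginx | grep sbin | egrep -cv "grep|$$")
    if [ "$counter" -eq 0 ]; then
        # Still down: stop keepalived so the VIP fails over to the other master
        service keepalived stop
    fi
fi
EOF
```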

```bash
chmod +x /etc/keepalived/check_nginx.sh

# Start the services (nginx-mod-stream provides the stream module used in nginx.conf)
systemctl daemon-reload
yum install nginx-mod-stream -y
systemctl start nginx
systemctl start keepalived
systemctl enable nginx keepalived
```
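The ps/grep counting in check_nginx.sh has to filter out grep and the script itself. `pgrep -x` does the same job more directly, because it matches the exact process name and never reports its own process. This swaps in a different technique from the script above. Here is a sketch with a hypothetical `proc_running` helper.

```bash
# Hypothetical helper: succeed if at least one process named exactly $1 is running.
proc_running() {
  pgrep -x "$1" >/dev/null
}

# The check_nginx.sh logic written with this helper:
# proc_running nginx || { service nginx start; sleep 2; proc_running nginx || service keepalived stop; }
```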

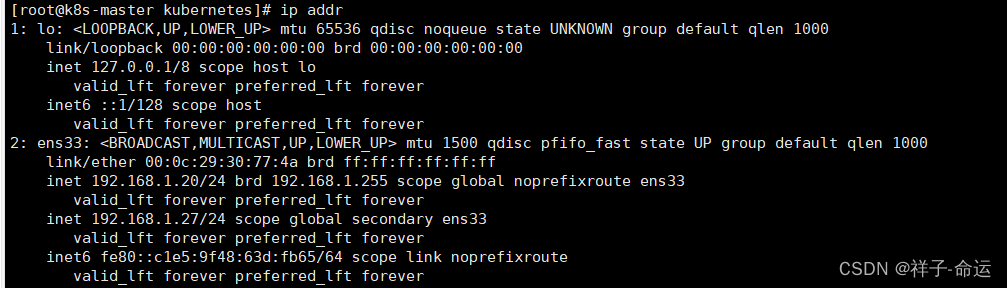

5. Check whether the VIP is bound

```bash
ip addr
```
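The VIP should appear only on the node that currently holds the MASTER role. Here is a sketch with a hypothetical `has_ip` filter over the one-line-per-address output of `ip -o -4 addr show`. To test failover, run `systemctl stop keepalived` on the MASTER node. The check should then succeed on the BACKUP node instead.

```bash
# Hypothetical filter: read `ip -o -4 addr show` output on stdin and succeed if the
# given address (without prefix length) is assigned to any interface.
has_ip() {
  awk -v ip="$1" '{ split($4, a, "/"); if (a[1] == ip) found = 1 } END { exit !found }'
}

# Usage:
# ip -o -4 addr show | has_ip 192.168.1.27 && echo "VIP is on this node"
```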

V. Like river water flowing on, may we meet

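At this point the k8s multi-master HA cluster is installed. To run three master nodes, set up nginx + keepalived on the third master as above (and add it to the nginx upstream list on every master), then add it with kubeadm join. Note that with kubeadm's default stacked etcd, a two-master cluster cannot survive the loss of either master, because etcd needs both of its 2 members for quorum. Three masters tolerate one failure.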

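If you spot a problem or an omission, please leave a comment.

I wrote this post to record our experience learning Kubernetes. I hope to meet more like-minded friends and share the problems we run into and what we learn along the way.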
