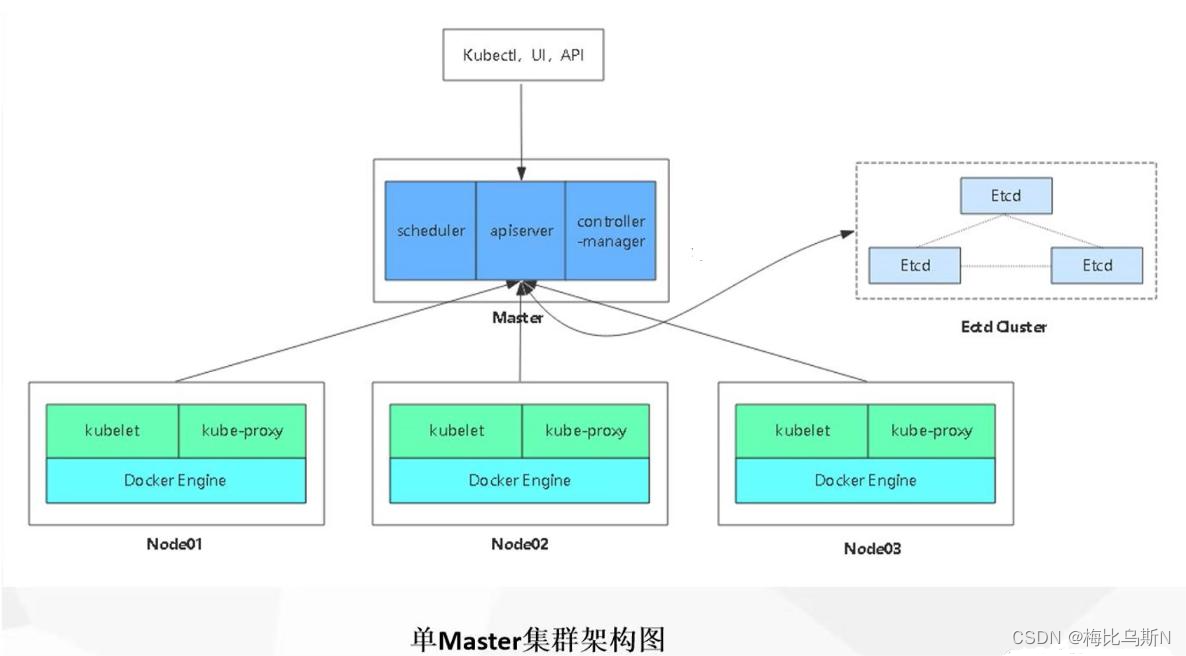

二进制部署K8S集群(单master)

二进制部署K8S集群

·

目录

一、架构图

二、部署步骤

1、实验环境

| 服务器类型 | IP地址 |

|---|---|

| master | 192.168.80.5 |

| node01 | 192.168.80.8 |

| node02 | 192.168.80.9 |

2、操作系统初始化配置

#关闭防火墙

systemctl stop firewalld

systemctl disable firewalld

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

#关闭selinux

setenforce 0

sed -i 's/enforcing/disabled/' /etc/selinux/config

#关闭swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

#根据规划设置主机名

hostnamectl set-hostname master01

hostnamectl set-hostname node01

hostnamectl set-hostname node02

#在master添加hosts

cat >> /etc/hosts << EOF

192.168.80.5 master01

192.168.80.7 master02

192.168.80.8 node01

192.168.80.9 node02

EOF

#调整内核参数

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv6.conf.all.disable_ipv6=1

net.ipv4.ip_forward=1

EOF

sysctl --system

#时间同步

yum install ntpdate -y

ntpdate time.windows.com

3、部署 docker引擎

*所有node节点部署docker引擎

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce docker-ce-cli containerd.io

systemctl start docker.service

systemctl enable docker.service 4、部署 etcd 集群

在master01节点上操作

#准备cfssl证书生成工具

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/local/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/local/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl*

#生成Etcd证书

mkdir /opt/k8s

cd /opt/k8s/

#上传 etcd-cert.sh 和 etcd.sh 到 /opt/k8s/ 目录中

chmod +x etcd-cert.sh etcd.sh

#创建用于生成CA证书、etcd 服务器证书以及私钥的目录

mkdir /opt/k8s/etcd-cert

mv etcd-cert.sh etcd-cert/

cd /opt/k8s/etcd-cert/

./etcd-cert.sh #生成CA证书、etcd 服务器证书以及私钥

ls

ca-config.json ca-csr.json ca.pem server.csr server-key.pem

ca.csr ca-key.pem etcd-cert.sh server-csr.json server.pem

#上传 etcd-v3.4.9-linux-amd64.tar.gz 到 /opt/k8s 目录中,启动etcd服务

https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

cd /opt/k8s/

tar zxvf etcd-v3.4.9-linux-amd64.tar.gz

ls etcd-v3.4.9-linux-amd64

Documentation etcd etcdctl README-etcdctl.md README.md READMEv2-etcdctl.md

#创建用于存放 etcd 配置文件,命令文件,证书的目录

mkdir -p /opt/etcd/{cfg,bin,ssl}

cd /opt/k8s/etcd-v3.4.9-linux-amd64/

mv etcd etcdctl /opt/etcd/bin/

cp /opt/k8s/etcd-cert/*.pem /opt/etcd/ssl/

cd /opt/k8s/

./etcd.sh etcd01 192.168.80.5 etcd02=https://192.168.80.8:2380,etcd03=https://192.168.80.9:2380

#进入卡住状态等待其他节点加入,这里需要三台etcd服务同时启动,如果只启动其中一台后,服务会卡在那里,直到集群中所有etcd节点都已启动,可忽略这个情况

#可另外打开一个窗口查看etcd进程是否正常

ps -ef | grep etcd

#把etcd相关证书文件、命令文件和服务管理文件全部拷贝到另外两个etcd集群节点

scp -r /opt/etcd/ root@192.168.80.8:/opt/

scp -r /opt/etcd/ root@192.168.80.9:/opt/

scp /usr/lib/systemd/system/etcd.service root@192.168.80.8:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/etcd.service root@192.168.80.9:/usr/lib/systemd/system/在node01节点上操作

vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd02" #修改

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.80.8:2380" #修改

ETCD_LISTEN_CLIENT_URLS="https://192.168.80.8:2379" #修改

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.80.8:2380" #修改

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.80.8:2379" #修改

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.80.5:2380,etcd02=https://192.168.80.8:2380,etcd03=https://192.168.80.9:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

#启动etcd服务

systemctl start etcd

systemctl enable etcd

systemctl status etcd

在node02节点上操作

vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd03" #修改

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.80.9:2380" #修改

ETCD_LISTEN_CLIENT_URLS="https://192.168.80.9:2379" #修改

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.80.9:2380" #修改

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.80.9:2379" #修改

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.80.5:2380,etcd02=https://192.168.80.8:2380,etcd03=https://192.168.80.9:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

#启动etcd服务

systemctl start etcd

systemctl enable etcd

systemctl status etcd检查etcd群集状态和查看成员列表

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.80.5:2379,https://192.168.80.8:2379,https://192.168.80.9:2379" endpoint health --write-out=table

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.80.5:2379,https://192.168.80.8:2379,https://192.168.80.9:2379" --write-out=table member list

5、部署 Master 组件

在master01节点上操作

#上传 master.zip 和 k8s-cert.sh 到 /opt/k8s 目录中,解压 master.zip 压缩包

cd /opt/k8s/

unzip master.zip

chmod +x *.sh

#创建kubernetes工作目录

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs} -p

#创建用于生成CA证书、相关组件的证书和私钥的目录

mkdir /opt/k8s/k8s-cert

mv /opt/k8s/k8s-cert.sh /opt/k8s/k8s-cert

cd /opt/k8s/k8s-cert/

vim k8s-cert.sh

./k8s-cert.sh #生成CA证书、相关组件的证书和私钥

ls *pem

admin-key.pem apiserver-key.pem ca-key.pem kube-proxy-key.pem

admin.pem apiserver.pem ca.pem kube-proxy.pem

#复制CA证书、apiserver相关证书和私钥到 kubernetes工作目录的 ssl 子目录中

cp ca*pem apiserver*pem /opt/kubernetes/ssl/

#上传 kubernetes-server-linux-amd64.tar.gz 到 /opt/k8s/ 目录中,解压 kubernetes 压缩包

#下载地址:https://github.com/kubernetes/kubernetes/blob/release-1.20/CHANGELOG/CHANGELOG-1.20.md

#注:打开链接你会发现里面有很多包,下载一个server包就够了,包含了Master和Worker Node二进制文件。

cd /opt/k8s/

tar zxvf kubernetes-server-linux-amd64.tar.gz

#复制master组件的关键命令文件到 kubernetes工作目录的 bin 子目录中

cd /opt/k8s/kubernetes/server/bin

cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/

ln -s /opt/kubernetes/bin/* /usr/local/bin/

#创建 bootstrap token 认证文件,apiserver 启动时会调用,然后就相当于在集群内创建了一个这个用户,接下来就可以用 RBAC 给他授权

cd /opt/k8s/

vim token.sh

#!/bin/bash

BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

cat > /opt/kubernetes/cfg/token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

chmod +x token.sh

./token.sh

cat /opt/kubernetes/cfg/token.csv

#二进制文件、token、证书都准备好后,开启 apiserver 服务

cd /opt/k8s/

./apiserver.sh 192.168.80.5 https://192.168.80.5:2379,https://192.168.80.8:2379,https://192.168.80.9:2379

#检查进程是否启动成功

ps aux | grep kube-apiserver

netstat -natp | grep 6443 #安全端口6443用于接收HTTPS请求,用于基于Token文件或客户端证书等认证

#启动 scheduler 服务

cd /opt/k8s/

./scheduler.sh

ps aux | grep kube-scheduler

#启动 controller-manager 服务

./controller-manager.sh

ps aux | grep kube-controller-manager

#生成kubectl连接集群的kubeconfig文件

./admin.sh

#通过kubectl工具查看当前集群组件状态

kubectl get cs

更多推荐

已为社区贡献4条内容

已为社区贡献4条内容

所有评论(0)