Deploying Zabbix 6.2.6 on K8S (Detailed Walkthrough)

Introduction

In this walkthrough, the PV and zabbix-server are deployed from YAML manifests, and zabbix-proxy and zabbix-agent are deployed with Helm.

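Environment

| Name | Version | Operating system | IP | Notes |
|---|---|---|---|---|
| K8S cluster | 1.20.15 | CentOS 7.9 | 192.168.11.21 192.168.11.22 192.168.11.23 | 21: k8s-master, 22: k8s-node01, 23: k8s-node02 |
| Zabbix | 6.2.6 | CentOS 7.9 | Inside containers | All Zabbix containers run in the zabbix namespace |
| MySQL | 8.0.30-glibc | CentOS 7.9 | 192.168.11.24 | MySQL is installed under /home/application |
| NFS | | CentOS 7.9 | 192.168.11.24 | The shared directory is /nfs |

Building the K8S cluster itself is not covered here; set up the cluster first, then follow the steps below.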

一、部署MySQL服务

1.1、准备软件包

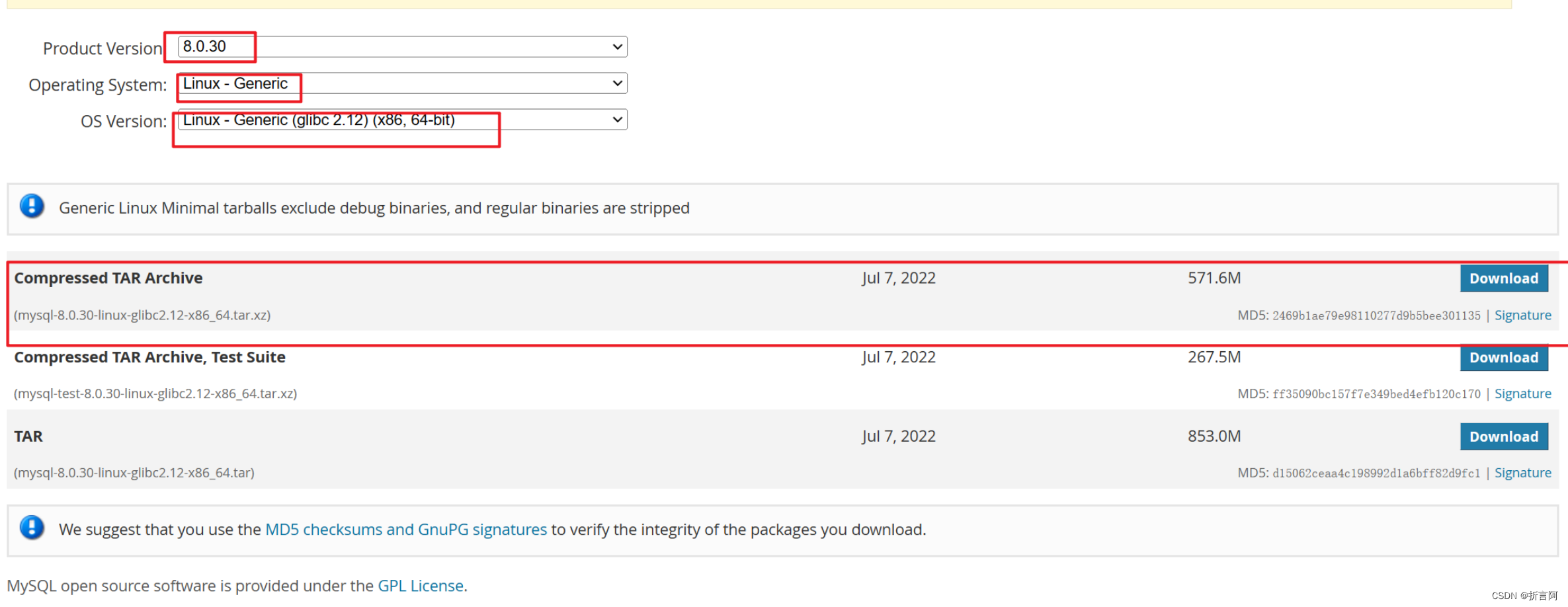

本次实验使用8.0.30-glibc版本:https://downloads.mysql.com/archives/community/

1.2、卸载mariadb

#查看是否存在MariaDB

rpm -qa|grep mariadb

#卸载mariadb

yum remove mariadb*

1.3、安装MySQL

[root@mysql-nfs-server]─[~]
# Create the MySQL working directory
mkdir -p /home/application/mysql
tar -xf mysql-8.0.30-linux-glibc2.12-x86_64.tar.xz
# Move the extracted directory into place and rename it
mv mysql-8.0.30-linux-glibc2.12-x86_64 /home/application/mysql/app
# Permanently add the MySQL binaries to PATH
# (single quotes keep $PATH from being expanded at the moment the line is written)
echo 'export PATH=$PATH:/home/application/mysql/app/bin' >> /etc/profile
# Reload the profile so the change takes effect in the current shell
. /etc/profile
# Create the mysql user that runs the MySQL process
useradd -s /sbin/nologin mysql -M
# Create the MySQL data directory
mkdir -p /home/application/mysql/data
# Set the owner and group of both directories
chown -Rf mysql:mysql /home/application/mysql/app
chown -Rf mysql:mysql /home/application/mysql/data
# Install the MySQL dependencies
yum -y install wget cmake gcc gcc-c++ ncurses ncurses-devel libaio libaio-devel openssl openssl-devel
# Initialize MySQL (root is created with an empty password)
mysqld --initialize-insecure --user=mysql --basedir=/home/application/mysql/app --datadir=/home/application/mysql/data
# Create the MySQL log directory
mkdir -p /home/application/mysql/data/logs
# Set the owner and group of the log directory
chown -Rf mysql:mysql /home/application/mysql/data/logs
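The PATH line above is appended to /etc/profile every time the step is repeated. A minimal sketch of an idempotent variant follows; the helper name `append_path_once` is an assumption made for illustration and does not come from the original post.

```shell
#!/bin/sh
# Hypothetical helper (not part of the original post): append a PATH export
# line to a profile file only if that exact line is not already present.
append_path_once() {
    profile_file=$1
    bin_dir=$2
    # \$PATH stays literal so it is expanded at login time, not now
    line="export PATH=\$PATH:${bin_dir}"
    # -F fixed string, -x whole-line match, -q quiet
    if ! grep -Fxq "$line" "$profile_file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$profile_file"
    fi
}

# Usage on the MySQL host (as root):
#   append_path_once /etc/profile /home/application/mysql/app/bin
```

Running the helper twice leaves a single export line in the file, so re-running the installation steps does not keep growing PATH.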

Edit the MySQL configuration file

vim /etc/my.cnf

[mysqld]
user=mysql
basedir=/home/application/mysql/app
datadir=/home/application/mysql/data
character_set_server=utf8
collation-server=utf8_general_ci
# Skip DNS resolution: accounts are matched by IP address only, not by host name
skip-name-resolve=1
# Write log timestamps in the system time zone
log_timestamps=SYSTEM
# Slow query log
long_query_time=3
slow_query_log=ON
slow_query_log_file=/home/application/mysql/data/logs/slow_query.log
# General query log (records every statement; consider turning it off outside a lab)
general_log=1
general_log_file=/home/application/mysql/data/logs/mysql_general.log
# Error log
log-error=/home/application/mysql/data/logs/mysql-error.log
# Default storage engine
default-storage-engine=INNODB
# Default authentication plugin
default_authentication_plugin=mysql_native_password
port=3306
socket=/tmp/mysql.sock
max_connections=1000
sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION
max_allowed_packet=300M

[mysql]
socket=/tmp/mysql.sock

Manage MySQL with systemctl

vim /etc/systemd/system/mysqld.service

[Unit]
Description=MySQL Server
Documentation=man:mysqld(8)
Documentation=http://dev.mysql.com/doc/refman/en/using-systemd.html
After=network.target
After=syslog.target

[Install]
WantedBy=multi-user.target

[Service]
User=mysql
Group=mysql
ExecStart=/home/application/mysql/app/bin/mysqld --defaults-file=/etc/my.cnf
LimitNOFILE=5000

Start the service

[15:47:30 root@mysql-server]─[~]
systemctl daemon-reload
systemctl enable --now mysqld.service
systemctl status mysqld.service

1.4 Log in to MySQL and create the user, the database, and the privileges

[15:47:30 root@mysql-NFS-server]─[~]
mysql -uroot
## Set the root password to zabbix
mysql> ALTER USER 'root'@'localhost' IDENTIFIED WITH mysql_native_password BY 'zabbix';
## Create the zabbix database
mysql> CREATE DATABASE zabbix DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_bin;
## Create the zabbix user with the password zabbix
mysql> CREATE USER 'zabbix'@'%' IDENTIFIED BY 'zabbix';
## Grant the zabbix user all privileges on every database
mysql> GRANT ALL PRIVILEGES ON *.* TO 'zabbix'@'%';
## Reload the privilege tables
mysql> FLUSH PRIVILEGES;
mysql> \q
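Before moving on, it can help to confirm from another host that the zabbix account can log in remotely and that the database really uses utf8mb4 / utf8mb4_bin. The sketch below assumes a mysql client is available on that host; the helper name `charset_ok` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: reads one "<charset> <collation>" line on stdin
# (tab or space separated) and succeeds only for the values used in the
# CREATE DATABASE statement above.
charset_ok() {
    read -r charset collation
    [ "$charset" = "utf8mb4" ] && [ "$collation" = "utf8mb4_bin" ]
}

# Usage from a host that can reach the database (prompts for the password):
#   mysql -h 192.168.11.24 -uzabbix -p -N -B -e \
#     "SELECT default_character_set_name, default_collation_name
#        FROM information_schema.schemata WHERE schema_name='zabbix'" \
#     | charset_ok && echo "zabbix database looks right"
```

The -N and -B options make the client print a bare tab-separated row, which is what the helper expects.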

2. Set up NFS

[16:23:51 root@mysql-nfs-server]─[~]
# Create the directory to be shared over NFS
mkdir -p /nfs
# Install NFS and rpcbind
yum -y install nfs-utils rpcbind
echo "/nfs *(rw,sync,no_root_squash,no_subtree_check)" >> /etc/exports
systemctl start rpcbind && systemctl start nfs-server
systemctl enable rpcbind && systemctl enable nfs-server
# The output should list "/nfs *"
showmount -e
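The same check is worth repeating from each K8S node, since the nodes are the machines that will actually mount the export (they need nfs-utils installed for that). A minimal sketch, with the hypothetical helper `export_listed` parsing the `showmount -e` output:

```shell
#!/bin/sh
# Hypothetical helper: reads `showmount -e` output on stdin and succeeds
# if the given path appears in the export list (the first line is a header).
export_listed() {
    awk -v path="$1" 'NR > 1 && $1 == path { found = 1 } END { exit !found }'
}

# Usage from a K8S node:
#   showmount -e 192.168.11.24 | export_listed /nfs && echo "export visible"
```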

4. Create the PV

[15:43:11 root@k8s-master1 ~]#
# Create a directory for the YAML files
mkdir -p /webapp
cd /webapp

4.1 Create the NFS provisioner Deployment

[16:29:02 root@k8s-master1 webapp]#
vim nfs-client.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-beijing.aliyuncs.com/xngczl/nfs-subdir-external-provisione:v4.0.0
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs # custom name; the StorageClass provisioner field must match it
            - name: NFS_SERVER
              value: 192.168.11.24 # NFS server IP; change it for your environment
            - name: NFS_PATH
              value: /nfs # shared directory; change it for your environment
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.11.24 # NFS server IP; change it for your environment
            path: /nfs # shared directory; change it for your environment

4.2 Create the NFS provisioner RBAC resources

[16:30:42 root@k8s-master1 webapp]#

vim nfs-client-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

4.3 Create the NFS StorageClass

[16:32:54 root@k8s-master1 webapp]#
vim nfs-client-class.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: course-nfs-storage
provisioner: fuseim.pri/ifs

4.4 Deploy the provisioner and the StorageClass

[16:34:12 root@k8s-master1 webapp]#
# Apply the RBAC objects first so the ServiceAccount exists when the Deployment starts
kubectl apply -f nfs-client-rbac.yaml
kubectl apply -f nfs-client.yaml
kubectl apply -f nfs-client-class.yaml
kubectl get po,sc
NAME                                          READY   STATUS    RESTARTS   AGE
pod/nfs-client-provisioner-8579c9d69b-m6vp4   1/1     Running   0          13m

NAME                                             PROVISIONER      RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
storageclass.storage.k8s.io/course-nfs-storage   fuseim.pri/ifs   Delete          Immediate           false                  13m
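A running provisioner pod does not yet prove that dynamic provisioning works. One way to check is to create a throwaway PVC against the new StorageClass and see whether it becomes Bound. The sketch below only prints the manifest; the claim name `test-claim` and the function name are assumptions for illustration.

```shell
#!/bin/sh
# Hypothetical smoke test: print a tiny PVC manifest that uses the
# course-nfs-storage StorageClass. "test-claim" is an illustrative name.
test_pvc_manifest() {
    cat <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim
  namespace: default
spec:
  storageClassName: course-nfs-storage
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
EOF
}

# Usage on the master node:
#   test_pvc_manifest | kubectl apply -f -
#   kubectl get pvc test-claim          # STATUS should become Bound
#   test_pvc_manifest | kubectl delete -f -
```

If the claim stays Pending, the provisioner log (kubectl logs on the nfs-client-provisioner pod) is usually the first place to look.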

5. Create zabbix-server

5.1 Create zabbix-server.yaml

[16:36:54 root@k8s-master1 webapp]#
vim zabbix-server.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: zabbix
---
apiVersion: v1
kind: Service
metadata:
  name: zabbix-server
  namespace: zabbix
  labels:
    app: zabbix-server
spec:
  selector:
    app: zabbix-server
  ports:
    - name: zabbix-server
      port: 10051
      nodePort: 30051
  type: NodePort
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zabbix-scripts
  namespace: zabbix
spec:
  storageClassName: "course-nfs-storage"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: zabbix-server
  name: zabbix-server
  namespace: zabbix
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zabbix-server
  template:
    metadata:
      labels:
        app: zabbix-server
    spec:
      nodeSelector:
        zabbix-server: "true"
      hostNetwork: true
      containers:
        - image: zabbix/zabbix-server-mysql:6.2.6-centos
          imagePullPolicy: IfNotPresent
          name: zabbix-server-mysql
          volumeMounts:
            - mountPath: /usr/lib/zabbix/alertscripts
              name: zabbix-scripts
          env:
            - name: DB_SERVER_HOST
              value: 192.168.11.24
            - name: DB_SERVER_PORT
              value: "3306"
            - name: MYSQL_DATABASE
              value: zabbix
            - name: MYSQL_USER
              value: zabbix
            - name: MYSQL_PASSWORD
              value: zabbix
            - name: ZBX_CACHESIZE
              value: "512M"
            - name: ZBX_HISTORYCACHESIZE
              value: "128M"
            - name: ZBX_HISTORYINDEXCACHESIZE
              value: "128M"
            - name: ZBX_TRENDCACHESIZE
              value: "128M"
            - name: ZBX_VALUECACHESIZE
              value: "256M"
            - name: ZBX_TIMEOUT
              value: "30"
          resources:
            requests:
              cpu: 500m
              memory: 500Mi
            limits:
              cpu: 1000m
              memory: 1Gi
      volumes:
        - name: zabbix-scripts
          persistentVolumeClaim:
            claimName: zabbix-scripts

5.2 Start zabbix-server

[16:39:33 root@k8s-master1 webapp]#
# The Deployment only schedules onto nodes labeled zabbix-server=true, so label a node first
kubectl label node k8s-node01 zabbix-server=true
kubectl apply -f zabbix-server.yaml
kubectl get all -n zabbix
NAME                                 READY   STATUS    RESTARTS   AGE
pod/zabbix-server-69d5fdb86d-z6h58   1/1     Running   0          47s

NAME                    TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)           AGE
service/zabbix-server   NodePort   10.101.70.252   <none>        10051:30051/TCP   47s

NAME                            READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/zabbix-server   1/1     1            1           47s

NAME                                       DESIRED   CURRENT   READY   AGE
replicaset.apps/zabbix-server-69d5fdb86d   1         1         1       47s

5.3 Create zabbix-web.yaml

[16:47:03 root@k8s-master1 webapp]#
vim zabbix-web.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: zabbix-web
  name: zabbix-web
  namespace: zabbix
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zabbix-web
  template:
    metadata:
      labels:
        app: zabbix-web
    spec:
      containers:
        - image: zabbix/zabbix-web-nginx-mysql:6.2.6-centos
          imagePullPolicy: IfNotPresent
          name: zabbix-web-nginx-mysql
          env:
            - name: DB_SERVER_HOST
              value: 192.168.11.24 # database IP
            - name: MYSQL_USER
              value: zabbix # database login user
            - name: MYSQL_PASSWORD
              value: zabbix # database password
            - name: ZBX_SERVER_HOST
              value: zabbix-server
            - name: PHP_TZ
              value: Asia/Shanghai # time zone names are case-sensitive
          resources:
            requests:
              cpu: 500m
              memory: 500Mi
            limits:
              cpu: 1000m
              memory: 1Gi
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: zabbix-web
  name: zabbix-web
  namespace: zabbix
spec:
  ports:
    - name: web
      port: 8080
      protocol: TCP
      targetPort: 8080
      nodePort: 30008 # port used to reach the frontend from outside the cluster
  selector:
    app: zabbix-web
  type: NodePort

5.4 Start the Zabbix web frontend

[16:51:25 root@k8s-master1 webapp]#
kubectl apply -f zabbix-web.yaml
kubectl get all -n zabbix
NAME                                 READY   STATUS    RESTARTS   AGE
pod/zabbix-server-69d5fdb86d-z6h58   1/1     Running   0          3m20s
pod/zabbix-web-fdb545749-dhk7b       1/1     Running   0          72s

NAME                    TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)           AGE
service/zabbix-server   NodePort   10.101.70.252   <none>        10051:30051/TCP   3m20s
service/zabbix-web      NodePort   10.110.39.191   <none>        8080:30008/TCP    72s

NAME                            READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/zabbix-server   1/1     1            1           3m20s
deployment.apps/zabbix-web      1/1     1            1           72s

NAME                                       DESIRED   CURRENT   READY   AGE
replicaset.apps/zabbix-server-69d5fdb86d   1         1         1       3m20s
replicaset.apps/zabbix-web-fdb545746       1         1         1       72s

5.5 Access the Zabbix web frontend

Open 192.168.11.21:30008 in a browser. The default Zabbix login is Admin / zabbix.
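If no browser is at hand, the NodePort can also be probed from the command line. The sketch below assumes curl is installed; `status_is_up` is a hypothetical helper that only classifies the HTTP status code that curl reports.

```shell
#!/bin/sh
# Hypothetical helper: treat any 2xx or 3xx HTTP status code as
# "the frontend is answering". curl reports 000 when it cannot connect.
status_is_up() {
    case $1 in
        2[0-9][0-9]|3[0-9][0-9]) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage:
#   code=$(curl -s -o /dev/null -w '%{http_code}' http://192.168.11.21:30008/)
#   status_is_up "$code" && echo "web frontend reachable"
```

Because the Service is of type NodePort, the same port should answer on every node IP, not only on 192.168.11.21.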

6. Deploy zabbix-proxy and zabbix-agent with Helm

6.1 Install Helm

[17:00:37 root@k8s-master1 webapp]
mkdir -p /webapp/helm
cd /webapp/helm
[17:02:10 root@k8s-master1 helm]
# Download and install Helm
wget https://get.helm.sh/helm-v3.8.1-linux-amd64.tar.gz
tar zxvf helm-v3.8.1-linux-amd64.tar.gz
cp linux-amd64/helm /usr/local/bin/helm

6.2 Add the Helm chart repository

[17:02:33 root@k8s-master1 helm]#
helm repo add zabbix-chart-6.2 https://cdn.zabbix.com/zabbix/integrations/kubernetes-helm/6.2/
helm repo list
NAME               URL
zabbix-chart-6.2   https://cdn.zabbix.com/zabbix/integrations/kubernetes-helm/6.2

6.3 Download the Zabbix Helm chart and extract it

[17:04:38 root@k8s-master1 helm]#
# Download the chart
helm pull zabbix-chart-6.2/zabbix-helm-chrt
tar -xf zabbix-helm-chrt-1.1.1.tgz

6.4 Configure the chart

[17:07:19 root@k8s-master1 helm]#
cd /webapp/helm/zabbix-helm-chrt/
[17:07:41 root@k8s-master1 zabbix-helm-chrt]#
vim Chart.yaml

apiVersion: v2
appVersion: 6.2.0
dependencies:
- condition: kubeStateMetrics.enabled
  name: kube-state-metrics
  repository: https://charts.bitnami.com/bitnami
  version: 3.5.*
description: A Helm chart for deploying Zabbix agent and proxy
home: https://www.zabbix.com/
icon: https://assets.zabbix.com/img/logo/zabbix_logo_500x131.png
name: zabbix-helm-chrt
type: application
version: 1.1.1

6.5 Configure values.yaml

[17:10:49 root@k8s-master1 zabbix-helm-chrt]#
vim values.yaml

## nameOverride -- Override name of app
nameOverride: ""
## fullnameOverride -- Override the full qualified app name
fullnameOverride: "zabbix"
## kubeStateMetricsEnabled -- If true, deploys the kube-state-metrics deployment
kubeStateMetricsEnabled: true

## Service account for Kubernetes API
rbac:
  ## rbac.create Specifies whether the RBAC resources should be created
  create: true
  additionalRulesForClusterRole: []
  ##  - apiGroups: [ "" ]
  ##    resources:
  ##      - nodes/proxy
  ##    verbs: [ "get", "list", "watch" ]
serviceAccount:
  ## serviceAccount.create Specifies whether a service account should be created
  create: true
  ## serviceAccount.name The name of the service account to use. If not set name is generated using the fullname template
  name: zabbix-service-account

## **Zabbix proxy** configurations
zabbixProxy:
  ## Enables use of **Zabbix proxy**
  enabled: true
  containerSecurityContext: {}
  resources: {}
  image:
    ## Zabbix proxy Docker image name
    repository: zabbix/zabbix-proxy-sqlite3
    ## Tag of Docker image of Zabbix proxy
    tag: 6.2.6-centos
    pullPolicy: IfNotPresent
    ## List of dockerconfig secrets names to use when pulling images
    pullSecrets: []
  env:
    ## The variable allows to switch Zabbix proxy mode. By default, value is 0 - active proxy. Allowed values are 0 and 1.
    - name: ZBX_PROXYMODE
      value: 0
    ## Zabbix proxy hostname
    - name: ZBX_HOSTNAME
      value: zabbix-proxy-k8s
    ## Zabbix server host
    ## If ProxyMode is set to active mode:
    ## IP address or DNS name of Zabbix server to get configuration data from and send data to.
    ## If ProxyMode is set to passive mode:
    ## List of comma delimited IP addresses, optionally in CIDR notation, or DNS names of Zabbix server. Incoming connections will be accepted only from the addresses listed here. If IPv6 support is enabled then '127.0.0.1', '::127.0.0.1', '::ffff:127.0.0.1' are treated equally and '::/0' will allow any IPv4 or IPv6 address. '0.0.0.0/0' can be used to allow any IPv4 address.
    ## Example: Server=127.0.0.1,192.168.1.0/24,::1,2001:db8::/32,zabbix.example.com
    ## Replace the value below with the address of your own Zabbix server. In this walkthrough
    ## zabbix-server runs with hostNetwork on k8s-node01, which would presumably be 192.168.11.22.
    - name: ZBX_SERVER_HOST
      value: "172.16.201.31"
    ## Zabbix server port
    - name: ZBX_SERVER_PORT
      value: 10051
    ## The variable is used to specify debug level. By default, value is 3
    - name: ZBX_DEBUGLEVEL
      value: 3
    ## Cache size
    - name: ZBX_CACHESIZE
      value: 128M
    ## The variable enable communication with Zabbix Java Gateway to collect Java related checks
    - name: ZBX_JAVAGATEWAY_ENABLE
      value: false
    ## How often proxy retrieves configuration data from Zabbix server in seconds. Active proxy parameter. Ignored for passive proxies.
    - name: ZBX_CONFIGFREQUENCY
      value: 60
    ## List can be extended with other environment variables listed here: https://github.com/zabbix/zabbix-docker/tree/5.4/agent/alpine#other-variables
    ## For example:
    ## The variable is list of comma separated loadable Zabbix modules.
    ## - name: ZBX_LOADMODULE
    ##   value : dummy1.so,dummy2.so
  service:
    annotations: {}
    labels: {}
    ## Type of service for Zabbix proxy
    type: ClusterIP
    ## Port to expose service
    port: 10051
    ## Port of application pod
    targetPort: 10051
    ## Zabbix proxy Ingress externalIPs with optional path
    ## Ref: https://kubernetes.io/docs/user-guide/services/#external-ips
    ## Must be provided if ProxyMode is set to passive mode
    externalIPs: []
    ## Loadbalancer IP
    ## Only use if service.type is "LoadBalancer"
    ##
    loadBalancerIP: ""
    loadBalancerSourceRanges: []
  ## Node selector for Zabbix proxy
  nodeSelector: {}
  ## Tolerations configurations for Zabbix proxy
  tolerations: {}
  ## Affinity configurations for Zabbix proxy
  affinity: {}
  persistentVolume:
    ## If true, Zabbix proxy will create/use a Persistent Volume Claim
    ##
    enabled: false
    ## Zabbix proxy data Persistent Volume access modes
    ## Must match those of existing PV or dynamic provisioner
    ## Ref: http://kubernetes.io/docs/user-guide/persistent-volumes/
    ##
    accessModes:
      - ReadWriteOnce
    ## Zabbix proxy data Persistent Volume Claim annotations
    ##
    annotations: {}
    ## Zabbix proxy data Persistent Volume existing claim name
    ## Requires zabbixProxy.persistentVolume.enabled: true
    ## If defined, PVC must be created manually before volume will be bound
    existingClaim: ""
    ## Zabbix proxy data Persistent Volume mount root path
    ##
    mountPath: /data
    ## Zabbix proxy data Persistent Volume size
    ##
    size: 2Gi
    ## Zabbix proxy data Persistent Volume Storage Class
    ## If defined, storageClassName: <storageClass>
    ## If set to "-", storageClassName: "", which disables dynamic provisioning
    ## If undefined (the default) or set to null, no storageClassName spec is
    ## set, choosing the default provisioner. (gp2 on AWS, standard on
    ## GKE, AWS & OpenStack)
    ##
    storageClass: "-"
    ## Zabbix proxy data Persistent Volume Binding Mode
    ## If defined, volumeBindingMode: <volumeBindingMode>
    ## If undefined (the default) or set to null, no volumeBindingMode spec is
    ## set, choosing the default mode.
    ##
    volumeBindingMode: ""
    ## Subdirectory of Zabbix proxy data Persistent Volume to mount
    ## Useful if the volume's root directory is not empty
    ##
    subPath: ""

## **Zabbix agent** configurations
zabbixAgent:
  ## Enables use of Zabbix agent
  enabled: true
  resources: {}
  ##  requests:
  ##    cpu: 100m
  ##    memory: 54Mi
  ##  limits:
  ##    cpu: 100m
  ##    memory: 54Mi
  securityContext: {}
  #  fsGroup: 65534
  #  runAsGroup: 65534
  #  runAsNonRoot: true
  #  runAsUser: 65534
  containerSecurityContext: {}
  ##  capabilities:
  ##    add:
  ##    - SYS_TIME
  ## Expose the service to the host network
  hostNetwork: true
  # Specify dns configuration options for agent containers e.g ndots
  ## ref: https://kubernetes.io/docs/concepts/services-networking/dns-pod-service/#pod-dns-config
  dnsConfig: {}
  #  options:
  #    - name: ndots
  #      value: "1"
  ## Share the host process ID namespace
  hostPID: true
  ## If true, agent pods mounts host / at /host/root
  ##
  hostRootFsMount: true
  extraHostVolumeMounts: []
  ##  - name: <mountName>
  ##    hostPath: <hostPath>
  ##    mountPath: <mountPath>
  ##    readOnly: true|false
  ##    mountPropagation: None|HostToContainer|Bidirectional
  image:
    ## Zabbix agent Docker image name
    repository: zabbix/zabbix-agent2
    ## Tag of Docker image of Zabbix agent
    tag: 6.2.6-centos
    pullPolicy: IfNotPresent
    ## List of dockerconfig secrets names to use when pulling images
    pullSecrets: []
  env:
    ## Zabbix server host
    - name: ZBX_SERVER_HOST
      value: 0.0.0.0/0
    ## Zabbix server port
    - name: ZBX_SERVER_PORT
      value: 10051
    ## This variable is boolean (true or false) and enables or disables feature of passive checks. By default, value is true
    - name: ZBX_PASSIVE_ALLOW
      value: true
    ## The variable is comma separated list of allowed Zabbix server or proxy hosts for connections to Zabbix agent container.
    - name: ZBX_PASSIVESERVERS
      value: 0.0.0.0/0
    ## This variable is boolean (true or false) and enables or disables feature of active checks
    - name: ZBX_ACTIVE_ALLOW
      value: false
    ## The variable is used to specify debug level, from 0 to 5
    - name: ZBX_DEBUGLEVEL
      value: 3
    ## The variable is used to specify timeout for processing checks. By default, value is 4.
    - name: ZBX_TIMEOUT
      value: 4
    ## List can be extended with other environment variables listed here: https://github.com/zabbix/zabbix-docker/tree/5.4/agent/alpine#other-variables
    ## For example:
    ## The variable is comma separated list of allowed Zabbix server or proxy hosts for connections to Zabbix agent container. You may specify port.
    ## - name: ZBX_ACTIVESERVERS
    ##   value: ''
    ## The variable is list of comma separated loadable Zabbix modules. It works with volume /var/lib/zabbix/modules.
    ## - name: ZBX_LOADMODULE
    ##   value: ''
  ## Node selector for Agent. Only supports Linux.
  nodeSelector:
    kubernetes.io/os: linux
  ## Tolerations configurations
  tolerations:
    - effect: NoSchedule
      key: node-role.kubernetes.io/master
  ## Affinity configurations
  affinity: {}
  serviceAccount:
    ## Specifies whether a ServiceAccount should be created
    create: true
    ## The name of the ServiceAccount to use.
    ## If not set and create is true, a name is generated using the fullname template
    name: zabbix-agent-service-account
    annotations: {}
    imagePullSecrets: []
    automountServiceAccountToken: false
  service:
    type: ClusterIP
    port: 10050
    targetPort: 10050
    nodePort: 10050
    portName: zabbix-agent
    listenOnAllInterfaces: true
    annotations:
      agent.zabbix/monitor: "true"
  rbac:
    ## If true, create & use RBAC resources
    ##
    create: true
    ## If true, create & use Pod Security Policy resources
    ## https://kubernetes.io/docs/concepts/policy/pod-security-policy/
    ## PodSecurityPolicies disabled by default because they are deprecated in Kubernetes 1.21 and will be removed in Kubernetes 1.25.
    ## If you are using PodSecurityPolicies you can enable the previous behaviour by setting `rbac.pspEnabled: true`
    pspEnabled: false
    pspAnnotations: {}

6.6 Adjust the parameters of the kube-state-metrics dependency chart

[17:12:29 root@k8s-master1 zabbix-helm-chrt]#

cd charts/kube-state-metrics/

[17:14:05 root@k8s-master1 kube-state-metrics]#

vim values.yaml

6.7 Install the Zabbix chart with Helm

[17:17:04 root@k8s-master1 kube-state-metrics]#
cd /webapp/helm/zabbix-helm-chrt/
[17:17:32 root@k8s-master1 zabbix-helm-chrt]#
helm install zabbix . --dependency-update -n zabbix
NAME: zabbix
LAST DEPLOYED: Fri Dec 30 17:18:22 2023
NAMESPACE: zabbix
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
Thank you for installing zabbix-helm-chrt.
Your release is named zabbix.
Zabbix agent installed: "zabbix/zabbix-agent2:6.2.6-centos"
Zabbix proxy installed: "zabbix/zabbix-proxy-sqlite3:6.2.6-centos"
Annotations:
app.kubernetes.io/name: zabbix
helm.sh/chart: zabbix-helm-chrt-1.1.1
app.kubernetes.io/version: "6.2.0"
app.kubernetes.io/managed-by: Helm
Service account created:
zabbix-service-account
To learn more about the release, try:
$ helm status zabbix
$ helm get all zabbix

Check the pods

[17:19:38 root@k8s-master1 zabbix-helm-chrt]#
kubectl get pods -n zabbix
NAME                                        READY   STATUS    RESTARTS   AGE
zabbix-agent-cm7fv                          1/1     Running   0          2m40s
zabbix-agent-mj6hb                          1/1     Running   0          2m40s
zabbix-agent-nqtfc                          1/1     Running   0          2m40s
zabbix-kube-state-metrics-f96fb757b-dhlth   1/1     Running   0          2m40s
zabbix-proxy-6b86dfd8df-sk7s6               1/1     Running   0          2m40s
zabbix-server-69d5fdb86-z5h58               1/1     Running   0          34m
zabbix-web-fdb545749-dhk7b                  1/1     Running   0          32m
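With seven pods in the namespace, scanning the listing by eye gets error-prone. The sketch below filters the `kubectl get pods` output down to the pods that still need attention; `pods_not_ready` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get pods` output on stdin and prints
# the name of every pod that is not Running with all containers ready.
pods_not_ready() {
    awk 'NR > 1 {
        # READY column looks like "1/1": ready containers / total containers
        split($2, ready, "/")
        if ($3 != "Running" || ready[1] != ready[2]) print $1
    }'
}

# Usage:
#   kubectl get pods -n zabbix | pods_not_ready
```

An empty result means every pod in the listing is Running and fully ready.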

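7. Log in to the web frontend and configure the proxy

7.1 Create the proxy

Proxy name: zabbix-proxy-k8s

7.2 Verify the proxy status

Wait until the proxy is no longer shown in red before continuing. If it is still red after several minutes, try deleting the proxy pod (it is recreated automatically), using the actual pod name from kubectl get pods -n zabbix: kubectl delete pod -n zabbix zabbix-proxy-79dcdc48bd-m5kf8

7.3 Create a host group

7.4 Add a host

7.5 Look up the IP and the token

[17:29:16 root@k8s-master1 ~]#
# Look up the endpoint IPs
kubectl get endpoints -n zabbix
# Print the token; it is needed when configuring Zabbix
kubectl get secret zabbix-service-account -n zabbix -o jsonpath='{.data.token}' | base64 -d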
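A Kubernetes service account token is a JWT, so a correctly decoded token consists of three dot-separated parts. If the secret name is wrong or the base64 step is skipped, the pasted value will not have that shape. A small sanity check, with the hypothetical helper `looks_like_jwt`:

```shell
#!/bin/sh
# Hypothetical helper: succeeds if stdin holds exactly one line made of
# three non-empty dot-separated parts (the general shape of a JWT).
# It does not verify the signature or the claims.
looks_like_jwt() {
    awk -F. 'NR == 1 { ok = (NF == 3 && $1 != "" && $2 != "" && $3 != "") }
             END { exit !(ok && NR == 1) }'
}

# Usage:
#   kubectl get secret zabbix-service-account -n zabbix \
#     -o jsonpath='{.data.token}' | base64 -d | looks_like_jwt \
#     && echo "token has the expected shape"
```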

7.6 Define the macros

{$KUBE.API.ENDPOINT.URL}: https://192.168.11.21:6443/api

{$KUBE.API.TOKEN}: XXXXXXXX [the token obtained above]

{$KUBE.NODES.ENDPOINT.NAME}: zabbix-agent [taken from kubectl get ep -n zabbix]

7.7 Create the k8s-cluster host, used to discover the cluster's service components automatically

7.7.1 Create the host

Host name: k8s-cluster

Templates: select the "Kubernetes cluster state by HTTP" template under Templates; it discovers the K8S node hosts automatically

Host groups: K8S Server

Monitored by proxy: select the zabbix-proxy-k8s proxy

7.7.2 Define the macros

{$KUBE.API.HOST}: 192.168.11.21

{$KUBE.API.PORT}: 6443

{$KUBE.API.TOKEN}: XXXXX [the token obtained above]

{$KUBE.API.URL}: https://192.168.11.21:6443

{$KUBE.API_SERVER.PORT}: 6443

{$KUBE.API_SERVER.SCHEME}: https

{$KUBE.CONTROLLER_MANAGER.PORT}: 10252

{$KUBE.CONTROLLER_MANAGER.SCHEME}: http

{$KUBE.KUBELET.PORT}: 10250

{$KUBE.KUBELET.SCHEME}: https

{$KUBE.SCHEDULER.PORT}: 10251

{$KUBE.SCHEDULER.SCHEME}: http

{$KUBE.STATE.ENDPOINT.NAME}: zabbix-kube-state-metrics [taken from kubectl get ep -n zabbix]

Use the IPs and ports of your own environment.
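Several of the macros above are derived from the same API server host and port, so a typo in one of them is easy to miss. The sketch below prints the four API-related values from a single host/port pair so they can be copied into the Zabbix macro form; the function name and the "macro=value" output format are assumptions for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print the API-related macro values for a given
# API server host and port (default 6443), one "macro=value" per line.
kube_api_macros() {
    host=$1
    port=${2:-6443}
    printf '{$KUBE.API.HOST}=%s\n' "$host"
    printf '{$KUBE.API.PORT}=%s\n' "$port"
    printf '{$KUBE.API.URL}=https://%s:%s\n' "$host" "$port"
    printf '{$KUBE.API.ENDPOINT.URL}=https://%s:%s/api\n' "$host" "$port"
}

# Usage:
#   kube_api_macros 192.168.11.21
```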

7.7.3 Verify the host
