Step-by-step guide: installing a highly available k8s cluster from binaries (1.23.8)



Ways to set up k8s

There are many options on the market, but they all boil down to two approaches: kubeadm and binaries.

kubeadm-based:

- sealos

- kuboard-spray

- rancher

- other tools

kubeadm is a K8s deployment tool that provides kubeadm init and kubeadm join for quickly deploying a Kubernetes cluster.

Official docs: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

Binary-based:

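- kubeasz (installs via Ansible playbooks), recommended

- manual setup

Download the release binaries from GitHub and deploy each component by hand to form a Kubernetes cluster.

kubeadm lowers the barrier to entry but hides many details, which makes problems hard to troubleshoot. If you want more control, deploy Kubernetes from binary packages: manual deployment takes more effort, but you learn a lot about how things work along the way, and later maintenance is easier.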

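Prerequisites

- OS: CentOS 7

- Upgrade the kernel to 5.4

- Disable swap

- Set the time zone, synchronize time, and set up passwordless SSH across the cluster

- Disable the firewall and enable kernel IP forwarding

- Install base packages (see install.sh for details)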

- …

Cluster plan

| IP | Hostname | Spec | Components | Role |
|---|---|---|---|---|
| 10.50.10.31 | master1 | 8C16G 40GB | kube-apiserver / kubelet / kube-proxy / kube-scheduler / kube-controller-manager / etcd / docker | master |
| 10.50.10.32 | master2 | 8C16G 40GB | kube-apiserver / kubelet / kube-proxy / kube-scheduler / kube-controller-manager / etcd / docker | master |
| 10.50.10.33 | master3 | 8C16G 40GB | kube-apiserver / kubelet / kube-proxy / kube-scheduler / kube-controller-manager / etcd / docker | master |
| 10.50.10.34 | node1 | 8C16G 40GB | kubelet / kube-proxy / docker | node |
| 10.50.10.35 | node2 | 8C16G 40GB | kubelet / kube-proxy / nginx / keepalived / docker | node |
| 10.50.10.36 | node3 | 8C16G 40GB | kubelet / kube-proxy / nginx / keepalived / docker | node |
| 10.50.10.108 | VIP | | haproxy + keepalived | API server high availability |

Reserved IPs

10.50.10.28

10.50.10.29

10.50.10.30 (held in reserve for now)

10.50.10.241

10.50.10.242

10.50.10.250

10.50.10.251

Machine preparation

1. master Vagrantfile

# -*- mode: ruby -*-

Vagrant.configure("2") do |config|

config.vm.box_check_update = false

config.vm.provider 'virtualbox' do |vb|

end

$num_instances = 3

(1..$num_instances).each do |i|

config.vm.define "node#{i}" do |node|

node.vm.box = "centos-7"

node.vm.hostname = "master#{i}"

ip = "10.50.10.#{i+30}"

node.vm.network "public_network", ip: ip, bridge: "bond0"

node.vm.provider "virtualbox" do |vb|

vb.memory = "16384"

vb.cpus = 8

vb.name = "master#{i}"

end

node.vm.provision "shell", path: "install.sh"

end

end

end

2. master install.sh

#!/usr/bin/env bash

# install net-tools and update the default route

cd /tmp && curl -O 10.50.10.25/pigsty/net-tools-2.0-0.25.20131004git.el7.x86_64.rpm

yum -y install net-tools-2.0-0.25.20131004git.el7.x86_64.rpm

route add default gw 10.50.10.254 eth1

route -n

# allow SSH password authentication (PasswordAuthentication yes)

sed -ri '/^PasswordAuthentication/cPasswordAuthentication yes' /etc/ssh/sshd_config

systemctl restart sshd

# change time zone

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

timedatectl set-timezone Asia/Shanghai

rm /etc/yum.repos.d/CentOS-Base.repo

curl 10.50.10.25/pigsty/Centos-Base.repo -o /etc/yum.repos.d/CentOS-Base.repo

# install base tools (including kmod, which rook needs)

yum install -y wget curl conntrack-tools vim net-tools telnet tcpdump bind-utils socat ntp kmod dos2unix

kubernetes_release="/opt/kubernetes-server-linux-amd64.tar.gz"

# Download Kubernetes

#if [[ $(hostname) == "master1" ]] && [[ ! -f "$kubernetes_release" ]]; then

if [[ ! -f "$kubernetes_release" ]]; then

wget 10.50.10.25/pigsty/kubernetes-server-linux-amd64.tar.gz -P /opt/

fi

echo 'disable selinux'

setenforce 0

sed -i 's/=enforcing/=disabled/g' /etc/selinux/config

echo 'enable iptable kernel parameter'

cat >> /etc/sysctl.conf <<EOF

net.ipv4.ip_forward=1

EOF

sysctl -p

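# pass bridged IPv4 traffic to the iptables chains (the net.bridge.* keys exist only after br_netfilter is loaded)

modprobe br_netfilter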

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

echo 'set host name resolution'

cat >> /etc/hosts <<EOF

10.50.10.31 master1

10.50.10.32 master2

10.50.10.33 master3

10.50.10.34 node1

10.50.10.35 node2

10.50.10.36 node3

EOF

cat /etc/hosts

echo 'disable swap'

swapoff -a

sed -i '/swap/s/^/#/' /etc/fstab

#install docker

#yum -y install docker-ce.x86_64

#systemctl daemon-reload && systemctl enable --now docker

#download etcd

#mkdir -p /opt/etcd/ && curl 10.50.10.25/pigsty/etcd-v3.4.9-linux-amd64.tar.gz -o /opt/etcd/etcd-v3.4.9-linux-amd64.tar.gz

# start node exporter for monitoring (do this before the reboot below; commands placed after reboot may never run)

curl -O 10.50.10.25/pigsty/node_exporter-1.3.1-1.el7.x86_64.rpm && rpm -ivh node_exporter-1.3.1-1.el7.x86_64.rpm && rm -rf node_exporter-1.3.1-1.el7.x86_64.rpm && systemctl enable node_exporter.service --now

# download and install the 5.4 kernel

cd /tmp && curl -O http://10.50.10.25/pigsty/kernel-lt-5.4.200-1.el7.elrepo.x86_64.rpm && rpm -Uvh kernel-lt-5.4.200-1.el7.elrepo.x86_64.rpm

# GRUB boot entries are numbered from 0 and the new kernel is inserted at the top (position 0; the previous kernel moves to 1), so select 0.

grub2-set-default 0

# reboot so the kernel upgrade takes effect

reboot
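Both install.sh scripts append the six host entries with cat >>. Provisioning a second time therefore leaves duplicate lines in /etc/hosts, and typing the entries by hand is easy to get wrong. Below is a minimal sketch of an idempotent alternative. It assumes bash and grep, and add_cluster_hosts is a hypothetical helper name that is not part of the original scripts:

```shell
# Hypothetical helper: append the cluster host entries to a hosts file,
# skipping any entry that is already present (safe to run repeatedly).
add_cluster_hosts() {
  local hosts_file=${1:-/etc/hosts}
  local entries=(
    "10.50.10.31 master1"
    "10.50.10.32 master2"
    "10.50.10.33 master3"
    "10.50.10.34 node1"
    "10.50.10.35 node2"
    "10.50.10.36 node3"
  )
  local entry
  for entry in "${entries[@]}"; do
    # -x: match the whole line, -F: fixed string, so the dots are literal
    if ! grep -qxF "$entry" "$hosts_file" 2>/dev/null; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}

# Usage (as root): add_cluster_hosts /etc/hosts
```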

3. node Vagrantfile

Vagrant.configure("2") do |config|

config.vm.box_check_update = false

config.vm.provider 'virtualbox' do |vb|

end

$num_instances = 3

(1..$num_instances).each do |i|

config.vm.define "node#{i+3}" do |node|

node.vm.box = "centos-7"

node.vm.hostname = "node#{i}"

ip = "10.50.10.#{i+33}"

node.vm.network "public_network", ip: ip,bridge: "bond0"

node.vm.provider "virtualbox" do |vb|

vb.memory = "16384"

vb.cpus = 8

vb.name = "node#{i+3}"

end

node.vm.provision "shell", path: "install.sh"

end

end

end

4. node install.sh

Compared with the master script, the main addition is the conntrack installation.

#!/usr/bin/env bash

# install net-tools and update the default route

cd /tmp && curl -O 10.50.10.25/pigsty/net-tools-2.0-0.25.20131004git.el7.x86_64.rpm

yum -y install net-tools-2.0-0.25.20131004git.el7.x86_64.rpm

route add default gw 10.50.10.254 eth1

route -n

# allow SSH password authentication (PasswordAuthentication yes)

sed -ri '/^PasswordAuthentication/cPasswordAuthentication yes' /etc/ssh/sshd_config

systemctl restart sshd

# change time zone

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

timedatectl set-timezone Asia/Shanghai

rm /etc/yum.repos.d/CentOS-Base.repo

curl 10.50.10.25/pigsty/Centos-Base.repo -o /etc/yum.repos.d/CentOS-Base.repo

# install base tools (including kmod, which rook needs)

yum install -y wget curl conntrack-tools net-tools telnet tcpdump bind-utils socat ntp kmod dos2unix

echo 'disable selinux'

setenforce 0

sed -i 's/=enforcing/=disabled/g' /etc/selinux/config

echo 'enable iptable kernel parameter'

cat >> /etc/sysctl.conf <<EOF

net.ipv4.ip_forward=1

EOF

sysctl -p

echo 'set host name resolution'

cat >> /etc/hosts <<EOF

10.50.10.31 master1

10.50.10.32 master2

10.50.10.33 master3

10.50.10.34 node1

10.50.10.35 node2

10.50.10.36 node3

EOF

cat /etc/hosts

echo 'disable swap'

swapoff -a

sed -i '/swap/s/^/#/' /etc/fstab

#install docker

#yum -y install docker-ce.x86_64

#systemctl daemon-reload && systemctl enable --now docker

# enable password login and restart sshd

sed -i 's/PasswordAuthentication no/PasswordAuthentication yes/' /etc/ssh/sshd_config && systemctl restart sshd

# 启动node exporter 监控

curl -O 10.50.10.25/pigsty/node_exporter-1.3.1-1.el7.x86_64.rpm && rpm -ivh node_exporter-1.3.1-1.el7.x86_64.rpm && rm -rf node_exporter-1.3.1-1.el7.x86_64.rpm && systemctl enable node_exporter.service --now

#install conntrack

mkdir -p /opt/conntrack

rpms=(

bash-4.2.46-35.el7_9.x86_64.rpm

conntrack-tools-1.4.4-7.el7.x86_64.rpm

glibc-2.17-326.el7_9.i686.rpm

glibc-2.17-326.el7_9.x86_64.rpm

libmnl-1.0.3-7.el7.x86_64.rpm

libnetfilter_conntrack-1.0.6-1.el7_3.i686.rpm

libnetfilter_conntrack-1.0.6-1.el7_3.x86_64.rpm

libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm

libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm

libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm

libnfnetlink-1.0.1-4.el7.x86_64.rpm

systemd-219-78.el7_9.5.x86_64.rpm )

for rpm in ${rpms[@]} ; do

curl 10.50.10.25/pigsty/$rpm -o /opt/conntrack/$rpm

done

cd /opt/conntrack && rpm -Uvh --force --nodeps *.rpm

# download kernel

cd /tmp && curl -O http://10.50.10.25/pigsty/kernel-lt-5.4.200-1.el7.elrepo.x86_64.rpm && rpm -Uvh kernel-lt-5.4.200-1.el7.elrepo.x86_64.rpm

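# GRUB boot entries are numbered from 0 and the new kernel is inserted at the top (position 0; the previous kernel moves to 1), so select 0.

grub2-set-default 0

# reboot so the kernel upgrade takes effect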

reboot

5. Time synchronization (a crontab entry)

30 10 * * * /usr/sbin/ntpdate 10.56.5.240

Vagrant startup script

## master

tee ./startk8s.sh <<-'EOF'

#!/bin/bash

paths=(

/spkshare1/vagrant/k8s/master1

/spkshare1/vagrant/k8s/node

)

for pathName in ${paths[@]} ; do

{

cd $pathName

vagrant reload --no-tty

for node in node{1..6};do

vagrant ssh $node -c "sudo route add default gw 10.50.10.254 eth1" # add the default gateway

done

}

done

EOF

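Notes on vagrant up

Vagrant's shell provisioner runs only when a machine is first created.

Running vagrant reload or vagrant up on an existing machine does not run it again (unless you pass --provision).

Provisioning 3 machines takes about 5 minutes.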

Install the conntrack tools

These tools help troubleshoot k8s networking problems.

#!/bin/bash

mkdir -p /opt/conntrack

rpms=(

bash-4.2.46-35.el7_9.x86_64.rpm

conntrack-tools-1.4.4-7.el7.x86_64.rpm

glibc-2.17-326.el7_9.i686.rpm

glibc-2.17-326.el7_9.x86_64.rpm

libmnl-1.0.3-7.el7.x86_64.rpm

libnetfilter_conntrack-1.0.6-1.el7_3.i686.rpm

libnetfilter_conntrack-1.0.6-1.el7_3.x86_64.rpm

libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm

libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm

libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm

libnfnetlink-1.0.1-4.el7.x86_64.rpm

systemd-219-78.el7_9.5.x86_64.rpm )

for rpm in ${rpms[@]} ; do

curl 10.50.10.25/pigsty/$rpm -o /opt/conntrack/$rpm

done

cd /opt/conntrack && rpm -Uvh --force --nodeps *.rpm

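Install IPVS

# on all nodes

# install the IPVS tooling, which makes it easier to work with ipvs, ipset, conntrack, etc.

yum install ipvsadm ipset sysstat conntrack libseccomp -y

# on all nodes, load the IPVS modules with the commands below. On kernel 4.19+ the conntrack module is nf_conntrack; on 4.18 and earlier use nf_conntrack_ipv4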

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

# load the modules at boot: put the following in ipvs.conf

vi /etc/modules-load.d/ipvs.conf

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp


nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

# apply

systemctl enable --now systemd-modules-load.service #--now = enable+start

# check that the modules are loaded

lsmod | grep -e ip_vs -e nf_conntrack
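The kernel-version rule in the comment above (nf_conntrack on 4.19+, nf_conntrack_ipv4 on older kernels) can be applied in a script instead of by hand. Below is a small sketch. conntrack_module is a hypothetical helper, and it only looks at the major and minor numbers of the kernel release string:

```shell
# Hypothetical helper: print the conntrack module name for a kernel release
# (defaults to the running kernel). 4.19+ -> nf_conntrack, older -> nf_conntrack_ipv4.
conntrack_module() {
  local release=${1:-$(uname -r)}
  local major minor
  IFS=. read -r major minor _ <<< "$release"
  if (( major > 4 || (major == 4 && minor >= 19) )); then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# Usage: modprobe -- "$(conntrack_module)"
```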

## on all nodes

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

net.ipv4.conf.all.route_localnet = 1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16768

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16768

EOF

sysctl --system

Take VM snapshots with VBoxManage snapshot

"master1" {7a83b753-149a-44c1-a69e-d701b2c47f50}

"master2" {b90f4050-37c2-4330-997d-338ad641ec09}

"master3" {7e7e16c2-43e0-4d4e-995c-ec653d999fbb}

"node4" {31d58233-11de-43a2-8a2d-c31d0f4dcb8c}

"node5" {d9de0220-2bae-4511-864f-d340b9c62fd1}

"node6" {8196e54c-7612-4c1f-89b5-eafceec1f795}

Script to take the snapshots

for snap in 7a83b753-149a-44c1-a69e-d701b2c47f50 b90f4050-37c2-4330-997d-338ad641ec09 7e7e16c2-43e0-4d4e-995c-ec653d999fbb 31d58233-11de-43a2-8a2d-c31d0f4dcb8c d9de0220-2bae-4511-864f-d340b9c62fd1 8196e54c-7612-4c1f-89b5-eafceec1f795;do

VBoxManage snapshot $snap take base0630-k8s --description="k8s-ha-20220630";

done

Base environment snapshot:

k8s HA snapshot: 2022-06-30 14:13:57

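Deploying etcd

etcd is a distributed key-value store, and Kubernetes keeps its data in etcd, so we set up an etcd database first. To avoid a single point of failure, deploy etcd as a cluster. Here we use 3 machines, which tolerate 1 machine failure. You can also use 5 machines, which tolerate 2 failures.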
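The 3-member and 5-member figures come from etcd's Raft majority quorum. A cluster of n members needs floor(n/2)+1 members alive, so it tolerates floor((n-1)/2) failures. Below is a quick sketch of that arithmetic. The function names are illustrative only:

```shell
# Illustrative helpers: Raft majority quorum for an n-member etcd cluster.
etcd_quorum()          { echo $(( $1 / 2 + 1 )); }   # members that must stay up
etcd_fault_tolerance() { echo $(( ($1 - 1) / 2 )); } # members that may fail

for n in 1 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerates=$(etcd_fault_tolerance "$n")"
done
```

Note that 4 members still tolerate only 1 failure, the same as 3. This is why odd cluster sizes are the usual choice.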

- For larger clusters, use SSDs to speed up etcd access.

- Here etcd shares the master machines (10.50.10.31-33); in production, deploy it on separate machines.

| Node name | IP |
|---|---|
| etcd-1 | 10.50.10.31 |
| etcd-2 | 10.50.10.32 |
| etcd-3 | 10.50.10.33 |

The cfssl certificate tool

cfssl is an open-source certificate management tool. It generates certificates from JSON files and is easier to use than openssl.

Run these steps on any one server; here we use a master node.

CFSSL consists of:

- a toolkit for building a custom TLS PKI

- the cfssl program, which is the canonical command line utility using the CFSSL packages.

- the multirootca program, which is a certificate authority server that can use multiple signing keys.

- the mkbundle program, which is used to build certificate pool bundles.

- the cfssljson program, which takes the JSON output from the cfssl and multirootca programs and writes certificates, keys, CSRs, and bundles to disk.

Installation: download the three binaries cfssl_linux-amd64, cfssljson_linux-amd64 and cfssl-certinfo_linux-amd64 from the official site.

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

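Generate the etcd certificates

Self-signed certificate authority (CA)

Create the working directories

mkdir -p ~/TLS/{etcd,k8s}

cd ~/TLS/etcd

Self-sign the CA

You can change the certificate lifetime; here it is set to 10 years (87600h = 3650 days).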

#

cat > ca-config.json << EOF

{

"signing":{

"default":{

"expiry":"87600h"

},

"profiles":{

"www":{

"expiry":"87600h",

"usages":[

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

#

cat > ca-csr.json << EOF

{

"CN":"etcd CA",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"Beijing",

"ST":"Beijing"

}

]

}

EOF

Generate the certificate

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

[root@master1 etcd]# ll *pem

-rw------- 1 root root 1675 Jun 23 23:09 ca-key.pem

-rw-r--r-- 1 root root 1265 Jun 23 23:09 ca.pem

Use the self-signed CA to issue the etcd HTTPS certificate

Create the certificate signing request (CSR) file

cat > server-csr.json << EOF

{

"CN":"etcd",

"hosts":[

"10.50.10.31",

"10.50.10.32",

"10.50.10.33"

],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing"

}

]

}

EOF

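Note: the hosts field above must list the internal cluster IP of every etcd node, and none can be missing! To make future scale-out easier, you can add a few reserved IPs.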
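A missing IP usually surfaces only later, as a TLS verification error between etcd members. It can therefore help to check the CSR before signing. Below is a rough sketch. It assumes the IPs appear as quoted strings in server-csr.json, and it is a plain text search rather than a JSON parser. csr_missing_hosts is a hypothetical name. After signing, openssl x509 -in server.pem -noout -text shows the Subject Alternative Name entries that actually ended up in the certificate.

```shell
# Hypothetical helper: report any IP not found (as a quoted string) in a CSR
# JSON file. Prints "missing: <ip>" for each one and returns 1 if any is missing.
csr_missing_hosts() {
  local csr=$1; shift
  local ip missing=0
  for ip in "$@"; do
    if ! grep -qF "\"$ip\"" "$csr"; then
      echo "missing: $ip"
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
# csr_missing_hosts server-csr.json 10.50.10.31 10.50.10.32 10.50.10.33 && echo "all etcd IPs present"
```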

Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

2022/06/24 14:37:26 [INFO] generate received request

2022/06/24 14:37:26 [INFO] received CSR

2022/06/24 14:37:26 [INFO] generating key: rsa-2048

2022/06/24 14:37:27 [INFO] encoded CSR

2022/06/24 14:37:27 [INFO] signed certificate with serial number 404918322157143866097023273814021931823617937124

2022/06/24 14:37:27 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@master1 etcd]#

[root@master1 etcd]# ls *server*pem

server-key.pem server.pem

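Install etcd

Download the software:

# This was already downloaded from the private repository when Vagrant provisioned the VMs; if it is missing, download it yourself.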

[root@master1 etcd]# cd /opt/etcd/

[root@master1 etcd]# ll

total 16960

-rw-r--r--. 1 root root 17364053 Jun 23 16:15 etcd-v3.4.9-linux-amd64.tar.gz

Create the working directories and extract the binary package

mkdir /opt/etcd/{bin,cfg,ssl} -p

tar zxvf etcd-v3.4.9-linux-amd64.tar.gz

mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

Create the configuration file

cat > /opt/etcd/cfg/etcd.conf << EOF

#[Member]

ETCD_NAME="etcd-1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://10.50.10.31:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.50.10.31:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.50.10.31:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.50.10.31:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://10.50.10.31:2380,etcd-2=https://10.50.10.32:2380,etcd-3=https://10.50.10.33:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

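Notes:

ETCD_NAME: node name, unique within the cluster

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) traffic

ETCD_LISTEN_CLIENT_URLS: listen address for client access

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster

ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

ETCD_INITIAL_CLUSTER: addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: the state when joining; new means a new cluster, existing means joining an existing cluster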

Manage etcd with systemd

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/opt/etcd/cfg/etcd.conf

ExecStart=/opt/etcd/bin/etcd --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --peer-cert-file=/opt/etcd/ssl/server.pem --peer-key-file=/opt/etcd/ssl/server-key.pem --trusted-ca-file=/opt/etcd/ssl/ca.pem --peer-trusted-ca-file=/opt/etcd/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

Copy the certificates generated earlier

cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/

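Start etcd and enable it at boot (on the first node, systemctl start may block until another member comes up; this is expected)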

systemctl daemon-reload

systemctl start etcd

systemctl enable etcd

Copy the configuration and certificates to the other etcd nodes

scp -r /opt/etcd/ root@10.50.10.32:/opt/

scp /usr/lib/systemd/system/etcd.service root@10.50.10.32:/usr/lib/systemd/system/

scp -r /opt/etcd/ root@10.50.10.33:/opt/

scp /usr/lib/systemd/system/etcd.service root@10.50.10.33:/usr/lib/systemd/system/

Settings to change in etcd.conf on each of the other nodes

vi /opt/etcd/cfg/etcd.conf

#[Member]

ETCD_NAME="etcd-1" # change this: etcd-2 on node 2, etcd-3 on node 3

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://10.50.10.31:2380" # change to this server's IP

ETCD_LISTEN_CLIENT_URLS="https://10.50.10.31:2379" # change to this server's IP

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.50.10.31:2380" # change to this server's IP

ETCD_ADVERTISE_CLIENT_URLS="https://10.50.10.31:2379" # change to this server's IP

ETCD_INITIAL_CLUSTER="etcd-1=https://10.50.10.31:2380,etcd-2=https://10.50.10.32:2380,etcd-3=https://10.50.10.33:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"
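Editing five values by hand on every node is easy to get wrong. Below is a sketch that renders the whole file from the member name and IP instead. render_etcd_conf is a hypothetical helper, and it simply reproduces the values used in this section:

```shell
# Hypothetical helper: print etcd.conf for one member, given its name and IP.
# The member list and cluster token mirror the configuration above.
render_etcd_conf() {
  local name=$1 ip=$2
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://10.50.10.31:2380,etcd-2=https://10.50.10.32:2380,etcd-3=https://10.50.10.33:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Usage on 10.50.10.32:
# render_etcd_conf etcd-2 10.50.10.32 > /opt/etcd/cfg/etcd.conf
```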

Start etcd and enable it at boot

systemctl daemon-reload

systemctl enable --now etcd.service # start now and enable at boot

systemctl status etcd

Verify the cluster

Export the etcd variables

export ETCDCTL_API=3

HOST_1=10.50.10.31

HOST_2=10.50.10.32

HOST_3=10.50.10.33

ENDPOINTS=$HOST_1:2379,$HOST_2:2379,$HOST_3:2379

## export environment variables to make testing easier; see https://github.com/etcd-io/etcd/tree/main/etcdctl

export ETCDCTL_DIAL_TIMEOUT=3s

export ETCDCTL_CACERT=/opt/etcd/ssl/ca.pem

export ETCDCTL_CERT=/opt/etcd/ssl/server.pem

export ETCDCTL_KEY=/opt/etcd/ssl/server-key.pem

export ETCDCTL_ENDPOINTS=$HOST_1:2379,$HOST_2:2379,$HOST_3:2379

[root@master1 /opt/etcd/ssl]#etcdctl member list --write-out=table

+------------------+---------+--------+--------------------------+--------------------------+------------+

| ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS | IS LEARNER |

+------------------+---------+--------+--------------------------+--------------------------+------------+

| 2b87b52cfcf8b337 | started | etcd-2 | https://10.50.10.32:2380 | https://10.50.10.32:2379 | false |

| 3a95f82ff174fcd6 | started | etcd-1 | https://10.50.10.31:2380 | https://10.50.10.31:2379 | false |

| 483410476ae339e9 | started | etcd-3 | https://10.50.10.33:2380 | https://10.50.10.33:2379 | false |

+------------------+---------+--------+--------------------------+--------------------------+------------+

[root@master1 /opt/etcd/ssl]#etcdctl endpoint status -w table

+------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| 10.50.10.31:2379 | 3a95f82ff174fcd6 | 3.4.9 | 16 kB | false | false | 6 | 16 | 16 | |

| 10.50.10.32:2379 | 2b87b52cfcf8b337 | 3.4.9 | 20 kB | true | false | 6 | 16 | 16 | |

| 10.50.10.33:2379 | 483410476ae339e9 | 3.4.9 | 20 kB | false | false | 6 | 16 | 16 | |

+------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
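To pick out the leader in a script rather than by eye, you can filter the table with awk. Below is a sketch. It assumes the column layout shown above: ENDPOINT is the 2nd |-separated field and IS LEADER is the 6th. etcd_leader is a hypothetical name. For anything more robust, etcdctl's -w json output is a better input:

```shell
# Hypothetical helper: read `etcdctl endpoint status -w table` output on stdin
# and print the endpoint whose IS LEADER column is true.
etcd_leader() {
  awk -F'|' '$6 ~ /true/ { gsub(/ /, "", $2); print $2 }'
}

# Usage: etcdctl endpoint status -w table | etcd_leader
```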

Install Docker

Download: https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz

Extract the binary package

tar zxvf docker-19.03.9.tgz

mv docker/* /usr/bin

Manage the Docker daemon with systemd

cat > /usr/lib/systemd/system/docker.service << EOF

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP \$MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

EOF

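Configure a registry mirror, and set Docker's cgroup driver to systemd so it matches cgroupDriver: systemd in the kubelet configuration

mkdir -p /etc/docker

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF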

If the machines have no internet access, push the images to a Harbor registry first.

Start Docker and enable it at boot

systemctl daemon-reload

systemctl enable docker --now

Deploy the masters

Generate the kube-apiserver certificates

Self-signed certificate authority (CA)

#

cat > /root/TLS/k8s/ca-config.json << EOF

{

"signing":{

"default":{

"expiry":"87600h"

},

"profiles":{

"kubernetes":{

"expiry":"87600h",

"usages":[

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

#

cat >/root/TLS/k8s/ca-csr.json << EOF

{

"CN":"kubernetes",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"Beijing",

"ST":"Beijing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

Generate the certificate

cd ~/TLS/k8s

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

ls *pem

ca-key.pem ca.pem

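Use the self-signed CA to issue the kube-apiserver HTTPS certificate

The hosts field must include every Master/LB/VIP IP, plus the Service IP 10.96.0.1 and the kubernetes.default.* names

cd ~/TLS/k8s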

cat > server-csr.json << EOF

{

"CN":"kubernetes",

"hosts":[

"10.96.0.1",

"127.0.0.1",

"10.50.10.28",

"10.50.10.29",

"10.50.10.30",

"10.50.10.31",

"10.50.10.32",

"10.50.10.33",

"10.50.10.34",

"10.50.10.35",

"10.50.10.36",

"10.50.10.108",

"10.50.10.242",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

ls server*pem

server-key.pem server.pem
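The 10.96.0.1 entry in hosts is the first address of the Service range used later (--service-cluster-ip-range=10.96.0.0/16). The apiserver assigns this address to the default kubernetes Service, so if you change the range, this entry must change too. Below is a sketch of that calculation in bash arithmetic. first_service_ip is a hypothetical helper:

```shell
# Hypothetical helper: print the first usable IP of an IPv4 CIDR
# (network address + 1), e.g. the ClusterIP of the default "kubernetes" Service.
first_service_ip() {
  local cidr=$1
  local ip=${cidr%/*} prefix=${cidr#*/}
  local a b c d
  IFS=. read -r a b c d <<< "$ip"
  # pack the four octets into one integer (bash arithmetic is 64-bit)
  local n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Usage: first_service_ip 10.96.0.0/16
```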

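Notes:

kube-apiserver later uses RBAC to authorize requests from clients such as kubelet, kube-proxy and Pods.

kube-apiserver predefines some RoleBindings for RBAC. For example, the cluster-admin ClusterRoleBinding binds the Group system:masters to the ClusterRole cluster-admin, which grants permission to call every kube-apiserver API. The O field sets the certificate's Group (k8s here). A client that presents a certificate signed by the CA passes authentication. If the certificate's Group has already been authorized (for example system:masters), the client is also granted access to all APIs.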

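Note:

This kubernetes certificate will later be used to generate the administrator's kubeconfig. We generally recommend controlling roles and permissions in Kubernetes with RBAC. Kubernetes takes the certificate's CN field as the User and the O field as the Group.

"O": "k8s" must be exactly k8s; otherwise the later kubectl create clusterrolebinding fails.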

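Download the binaries

Download: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.18.md#v1183 (this is the v1.18.3 entry; for this guide, take kubernetes-server-linux-amd64.tar.gz from the v1.23.8 section of CHANGELOG-1.23.md instead)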

Extract the binary package

mkdir -p /etc/kubernetes/ssl /var/log/kubernetes

tar zxvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin

cp kube-apiserver kube-scheduler kube-controller-manager /usr/local/bin/

cp kubelet kube-proxy /usr/local/bin/ # kubelet and kube-proxy also run on the masters

cp kubectl /usr/bin/

Deploy kube-apiserver

Create the configuration file

cat > /etc/kubernetes/kube-apiserver.conf << EOF

KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--bind-address=10.50.10.31 \

--secure-port=6443 \

--advertise-address=10.50.10.31 \

--insecure-port=0 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all=true \

--enable-bootstrap-token-auth \

--service-cluster-ip-range=10.96.0.0/16 \

--token-auth-file=/etc/kubernetes/token.csv \

--service-node-port-range=30000-32767 \

--tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \

--kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-issuer=api \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem \

--etcd-servers=https://10.50.10.31:2379,https://10.50.10.32:2379,https://10.50.10.33:2379 \

--enable-swagger-ui=true \

--allow-privileged=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/log/kube-apiserver-audit.log \

--event-ttl=1h \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=4"

EOF

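About the trailing backslashes: inside an unquoted heredoc, a \ at the end of a line is a line continuation. The shell therefore joins the lines and writes the whole KUBE_APISERVER_OPTS value on a single line. If you write \\ instead, the first backslash escapes the second, and the file keeps a literal \ followed by a line break after each option.

--logtostderr: log to stderr instead of to files (false here, so logs go to --log-dir)

--v: log verbosity level

--log-dir: log directory

--etcd-servers: etcd cluster addresses

--bind-address: listen address

--secure-port: HTTPS secure port

--advertise-address: address advertised to the cluster

--allow-privileged: allow privileged containers

--service-cluster-ip-range: virtual IP range for Services

--enable-admission-plugins: admission control plugins

--authorization-mode: authorization modes; enables RBAC and Node authorization (node self-management)

--enable-bootstrap-token-auth: enable bootstrap token authentication, used by TLS bootstrapping

--token-auth-file: bootstrap token file

--service-node-port-range: default port range allocated to NodePort Services

--kubelet-client-xxx: client certificate apiserver uses to access kubelet

--tls-xxx-file: apiserver HTTPS certificate

--etcd-xxxfile: certificates for connecting to the etcd cluster

--audit-log-xxx: audit log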

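Copy the certificates generated earlier (renaming the server certificate to the names used in kube-apiserver.conf)

cp ~/TLS/k8s/ca*pem /etc/kubernetes/ssl/

cp ~/TLS/k8s/server.pem /etc/kubernetes/ssl/kube-apiserver.pem

cp ~/TLS/k8s/server-key.pem /etc/kubernetes/ssl/kube-apiserver-key.pem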

Enable the TLS bootstrapping mechanism

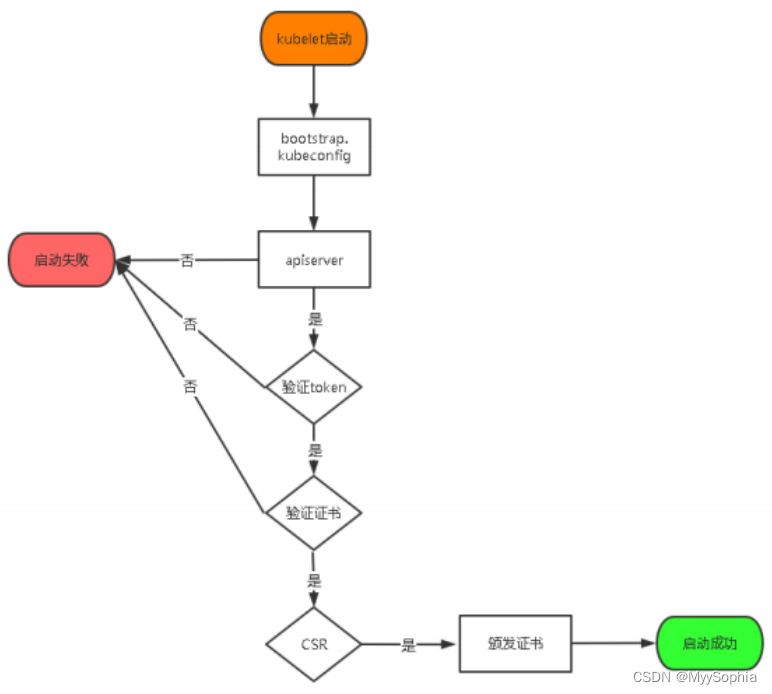

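TLS bootstrapping: once the master's apiserver enables TLS authentication, kubelet and kube-proxy on every node must use a valid CA-signed certificate to communicate with kube-apiserver. With many nodes, issuing these client certificates takes a lot of work and also makes scaling the cluster more complex. To simplify this, Kubernetes introduced TLS bootstrapping to issue client certificates automatically. kubelet requests a certificate from apiserver as a low-privilege user, and its certificate is then signed dynamically through apiserver's certificate signing request (CSR) API. This approach is strongly recommended on nodes. It is currently used mainly for kubelet; for kube-proxy we still issue a single certificate ourselves.

TLS bootstrapping workflow

k8s TLS

# generate a token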

head -c 16 /dev/urandom | od -An -t x | tr -d ' '

Create the token file referenced in the configuration above:

# token, user name, UID, user group

cat > /etc/kubernetes/token.csv << EOF

c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"

EOF
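The two steps above (generate a token, then write token.csv) can be combined so that the file always contains the token you just generated. Below is a sketch. It assumes the file lives at /etc/kubernetes/token.csv, as referenced by --token-auth-file in kube-apiserver.conf. write_token_csv is a hypothetical name. It also prints the token so you can record it:

```shell
# Hypothetical helper: generate a 32-hex-character token and write it to
# token.csv in the "token,user,uid,group" format used above.
write_token_csv() {
  local out=${1:-/etc/kubernetes/token.csv}
  local token
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$token" > "$out"
  echo "$token"
}

# Usage (as root): write_token_csv
```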

Manage kube-apiserver with systemd

cat > /etc/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=etcd.service

Wants=etcd.service

[Service]

EnvironmentFile=-/etc/kubernetes/kube-apiserver.conf

ExecStart=/usr/local/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

Start kube-apiserver and enable it at boot

systemctl daemon-reload

systemctl enable kube-apiserver --now

Authorize the kubelet-bootstrap user to request certificates

kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

--user=kubelet-bootstrap

Deploy kube-controller-manager

Create the configuration file

cat > /etc/kubernetes/kube-controller-manager.conf << EOF

KUBE_CONTROLLER_MANAGER_OPTS="--bind-address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \

--service-cluster-ip-range=10.96.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--allocate-node-cidrs=true \

--cluster-cidr=10.244.0.0/16 \

--experimental-cluster-signing-duration=87600h \

--root-ca-file=/etc/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \

--leader-elect=true \

--feature-gates=RotateKubeletServerCertificate=true \

--controllers=*,bootstrapsigner,tokencleaner \

--tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \

--use-service-account-credentials=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2"

EOF

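--leader-elect: when several instances of the component run, they elect a leader automatically (HA)

--cluster-signing-cert-file/--cluster-signing-key-file: the CA used to issue kubelet certificates automatically; must match the apiserver's CA

Manage kube-controller-manager with systemd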

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-controller-manager.conf

ExecStart=/usr/local/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

Start it and enable it at boot

systemctl daemon-reload

systemctl enable kube-controller-manager --now

Install kube-scheduler

Configuration file

cat > /etc/kubernetes/kube-scheduler.conf << EOF

KUBE_SCHEDULER_OPTS="--bind-address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \

--leader-elect=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2"

EOF

Manage kube-scheduler with systemd

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-scheduler.conf

ExecStart=/usr/local/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

Start it and enable it at boot

systemctl daemon-reload

systemctl enable kube-scheduler --now

Check the cluster status

kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

scheduler Healthy ok

controller-manager Healthy ok

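Deploy the workers

Worker nodes only need the kubelet and kube-proxy components.

Deploy kubelet

kube.config is kubectl's configuration file. It holds everything needed to reach apiserver, such as the apiserver address, the CA certificate and the client's own certificate.

Create the configuration file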

cat > /etc/kubernetes/kubelet.conf << EOF

kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 0
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.2
clusterDomain: cluster.local
healthzBindAddress: 127.0.0.1
healthzPort: 10248
rotateCertificates: true
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110

EOF

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.50.10.108:6443 --kubeconfig=kube.config

kubectl config set-credentials admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=kube.config

kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kube.config

kubectl config use-context kubernetes --kubeconfig=kube.config

Deploy kube-proxy

Create the kube-proxy certificate signing request file

cat > kube-proxy-csr.json << "EOF"

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "kubemsb",

"OU": "CN"

}

]

}

EOF

Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

# ls kube-proxy*

kube-proxy.csr kube-proxy-csr.json kube-proxy-key.pem kube-proxy.pem

Create the kubeconfig file

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.50.10.31:6443 --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy --client-certificate=kube-proxy.pem --client-key=kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Create the service configuration file

cat > kube-proxy.yaml << "EOF"

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 10.50.10.31
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.244.0.0/16
healthzBindAddress: 10.50.10.31:10256
kind: KubeProxyConfiguration
metricsBindAddress: 10.50.10.31:10249
mode: "ipvs"

EOF

Create the systemd unit file

cat > kube-proxy.service << "EOF"

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

WorkingDirectory=/var/lib/kube-proxy

ExecStart=/usr/local/bin/kube-proxy \

--config=/etc/kubernetes/kube-proxy.yaml \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

Sync the files to the other cluster nodes

cp kube-proxy*.pem /etc/kubernetes/ssl/

cp kube-proxy.kubeconfig kube-proxy.yaml /etc/kubernetes/

cp kube-proxy.service /usr/lib/systemd/system/

for i in master2 master3 node1 node2 node3;do scp kube-proxy.kubeconfig kube-proxy.yaml $i:/etc/kubernetes/;done

for i in master2 master3 node1 node2 node3;do scp kube-proxy.service $i:/usr/lib/systemd/system/;done

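Notes:

On each host, change the IP addresses in kube-proxy.yaml to that host's own IP (10.50.10.32, 10.50.10.33, 10.50.10.34, 10.50.10.35, 10.50.10.36):

sed -i 's/10\.50\.10\.31/10.50.10.32/g' /etc/kubernetes/kube-proxy.yaml && more /etc/kubernetes/kube-proxy.yaml # on master2

sed -i 's/10\.50\.10\.31/10.50.10.33/g' /etc/kubernetes/kube-proxy.yaml && more /etc/kubernetes/kube-proxy.yaml # on master3

sed -i 's/10\.50\.10\.31/10.50.10.34/g' /etc/kubernetes/kube-proxy.yaml && more /etc/kubernetes/kube-proxy.yaml # on node1

sed -i 's/10\.50\.10\.31/10.50.10.35/g' /etc/kubernetes/kube-proxy.yaml && more /etc/kubernetes/kube-proxy.yaml # on node2

sed -i 's/10\.50\.10\.31/10.50.10.36/g' /etc/kubernetes/kube-proxy.yaml && more /etc/kubernetes/kube-proxy.yaml # on node3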
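Instead of patching a copied file with sed, you can render each host's kube-proxy.yaml from its IP. Below is a sketch. render_kube_proxy_yaml is hypothetical, and the values mirror the kube-proxy.yaml above. The commented loop assumes passwordless SSH as root to each host, as set up in the prerequisites:

```shell
# Hypothetical helper: print kube-proxy.yaml for the given host IP.
render_kube_proxy_yaml() {
  local ip=$1
  cat <<EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: ${ip}
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.244.0.0/16
healthzBindAddress: ${ip}:10256
kind: KubeProxyConfiguration
metricsBindAddress: ${ip}:10249
mode: "ipvs"
EOF
}

# Example: push a per-host file to every other node over SSH
# for h in master2:10.50.10.32 master3:10.50.10.33 node1:10.50.10.34 \
#          node2:10.50.10.35 node3:10.50.10.36; do
#   render_kube_proxy_yaml "${h#*:}" | ssh "${h%%:*}" 'cat > /etc/kubernetes/kube-proxy.yaml'
# done
```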

Troubleshooting

kube-apiserver fails to start

Jun 25 23:25:45 master1 kube-apiserver: W0625 23:25:45.149384 6982 services.go:37] No CIDR for service cluster IPs specified. Default value which was 10.0.0.0/24 is deprecated and will be removed in future releases. Please specify it using --service-cluster-ip-range on kube-apiserver.

Jun 25 23:25:45 master1 kube-apiserver: I0625 23:25:45.149484 6982 server.go:565] external host was not specified, using 10.50.10.31

Jun 25 23:25:45 master1 kube-apiserver: W0625 23:25:45.149495 6982 authentication.go:523] AnonymousAuth is not allowed with the AlwaysAllow authorizer. Resetting AnonymousAuth to false. You should use a different authorizer

Jun 25 23:25:45 master1 kube-apiserver: E0625 23:25:45.149752 6982 run.go:74] "command failed" err="[--etcd-servers must be specified, service-account-issuer is a required flag, --service-account-signing-key-file and --service-account-issuer are required flags]"

Jun 25 23:25:45 master1 systemd: kube-apiserver.service: main process exited, code=exited, status=1/FAILURE

Jun 25 23:25:45 master1 systemd: Unit kube-apiserver.service entered failed state.

Jun 25 23:25:45 master1 systemd: kube-apiserver.service failed.

Jun 25 23:25:45 master1 systemd: kube-apiserver.service holdoff time over, scheduling restart.

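Fix:

When /usr/lib/systemd/system/kube-apiserver.service was written with cat and a heredoc, $KUBE_APISERVER_OPTS was not escaped. The shell replaced it with an empty string, so kube-apiserver never received its flags. Write it as \$KUBE_APISERVER_OPTS (or quote the heredoc delimiter: << 'EOF'). (Surprisingly, k8s 1.23.8 and 1.18 behave differently here.)

Troubleshooting command: journalctl -xe -u kube-apiserver | more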

https://github.com/opsnull/follow-me-install-kubernetes-cluster/issues/179
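You can reproduce the difference in any bash shell. Below is a sketch that compares an unquoted delimiter, an escaped \$, and a quoted delimiter. The variable is deliberately unset, as it is in the shell that writes the unit file:

```shell
# Sketch: how a heredoc treats $KUBE_APISERVER_OPTS when writing a unit file.
unset KUBE_APISERVER_OPTS

# unquoted delimiter: the shell expands the (unset) variable to nothing
unquoted=$(cat <<EOF
ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS
EOF
)

# escaped \$: the literal text $KUBE_APISERVER_OPTS is kept for systemd
escaped=$(cat <<EOF
ExecStart=/usr/local/bin/kube-apiserver \$KUBE_APISERVER_OPTS
EOF
)

# quoted delimiter: no expansion at all inside the heredoc
quoted=$(cat <<'EOF'
ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS
EOF
)

printf '%s\n' "$unquoted" "$escaped" "$quoted"
```

Only the last two lines leave systemd a variable to substitute from the EnvironmentFile.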

The connection to the server localhost:8080

[root@master1 /opt/kubernetes/cfg]#kubectl get cs

The connection to the server localhost:8080 was refused - did you specify the right host or port?

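kubectl falls back to localhost:8080 when it finds no kubeconfig. Point it at one, for example by copying kube.config to ~/.kube/config.

Reference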

https://www.iuskye.com/2021/03/04/k8s-bin.html

Cannot enable a service at boot

systemctl disable kube-apiserver.service

systemctl disable kube-controller-manager.service

systemctl disable kube-scheduler.service

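If a configuration attempt fails, you often have to configure the service a second time. If you forget to remove the previous setup first, you get odd errors such as "File exists". In that case, run systemctl disable (service name) first.

This is because systemctl enable (service name) actually creates a symlink (for these units, under /etc/systemd/system/multi-user.target.wants/).

References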

https://blog.51cto.com/zero01/2529035?source=drh

https://ost.51cto.com/posts/13116

https://blog.csdn.net/a13568hki/article/details/123581346

https://blog.csdn.net/eagle89/article/details/123786607

Quickly building a k8s cluster with Vagrant

https://jimmysong.io/kubernetes-handbook/cloud-native/cloud-native-local-quick-start.html

Officially recommended tool for HA clusters

https://github.com/kubernetes-sigs/kubespray
