1. Preparation

1.1 Cilium cluster node information

All machines are virtual machines with 8 CPU cores, 8 GB of RAM, and a 100 GB disk.

| IP | Hostname |
|---|---|
| 10.31.188.1 | tiny-cilium-master-188-1.k8s.tcinternal |
| 10.31.188.11 | tiny-cilium-worker-188-11.k8s.tcinternal |
| 10.31.188.12 | tiny-cilium-worker-188-12.k8s.tcinternal |
| 10.188.0.0/18 | serviceSubnet |

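1.2 Check the MAC address and product_uuid

Every node in the same Kubernetes cluster must have a unique MAC address and product_uuid, so check both before initializing the cluster.

# Check the MAC address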

ip link

ifconfig -a

# Check the product_uuid

sudo cat /sys/class/dmi/id/product_uuid

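Comparing these values by eye becomes tedious with more than a few nodes. The following is a minimal sketch of one way to spot repeats, assuming the values have already been collected into `hostname value` lines (for example over ssh). The `find_duplicate_ids` helper and the sample UUIDs are hypothetical and not part of the original procedure:

```shell
#!/bin/sh
# find_duplicate_ids: read "hostname value" lines on stdin and print every
# value that appears on more than one line (hypothetical helper).
find_duplicate_ids() {
    awk '{ count[$2]++ } END { for (v in count) if (count[v] > 1) print v }' | sort
}

# Made-up sample data standing in for product_uuid values from each node.
sample_ids='tiny-cilium-master-188-1 11111111-aaaa-bbbb-cccc-000000000001
tiny-cilium-worker-188-11 11111111-aaaa-bbbb-cccc-000000000002
tiny-cilium-worker-188-12 11111111-aaaa-bbbb-cccc-000000000002'

duplicates=$(printf '%s\n' "$sample_ids" | find_duplicate_ids)
if [ -n "$duplicates" ]; then
    echo "duplicate values found: $duplicates"
else
    echo "all values are unique"
fi
```

On a real cluster, the sample data would be replaced with the `product_uuid` (or MAC address) collected from each node.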

1.3 Configure passwordless SSH login (optional)

If the cluster nodes have multiple network interfaces, make sure every node can reach the others through the correct interface.

# As root, generate a shared key and allow passwordless login with that key

su root

ssh-keygen

cd /root/.ssh/

cat id_rsa.pub >> authorized_keys

chmod 600 authorized_keys

cat >> ~/.ssh/config <<EOF

Host tiny-cilium-master-188-1.k8s.tcinternal

HostName 10.31.188.1

User root

Port 22

IdentityFile ~/.ssh/id_rsa

Host tiny-cilium-worker-188-11.k8s.tcinternal

HostName 10.31.188.11

User root

Port 22

IdentityFile ~/.ssh/id_rsa

Host tiny-cilium-worker-188-12.k8s.tcinternal

HostName 10.31.188.12

User root

Port 22

IdentityFile ~/.ssh/id_rsa

EOF

1.4 Update the hosts file

cat >> /etc/hosts <<EOF

10.31.188.1 tiny-cilium-master-188-1 tiny-cilium-master-188-1.k8s.tcinternal

10.31.188.11 tiny-cilium-worker-188-11 tiny-cilium-worker-188-11.k8s.tcinternal

10.31.188.12 tiny-cilium-worker-188-12 tiny-cilium-worker-188-12.k8s.tcinternal

EOF

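Because `cat >>` appends unconditionally, running the command above twice leaves duplicate entries. A minimal sketch of an idempotent alternative follows; the `add_host_entry` helper is hypothetical, and the demo writes to a scratch file rather than the real /etc/hosts:

```shell
#!/bin/sh
# add_host_entry FILE IP NAME...: append "IP NAME..." to FILE unless a line
# whose first field is IP already exists (hypothetical helper).
add_host_entry() (
    file=$1; ip=$2; shift 2
    if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$*" >> "$file"
    fi
)

# Demonstrate on a scratch file instead of /etc/hosts.
hosts_file=$(mktemp)
add_host_entry "$hosts_file" 10.31.188.1 tiny-cilium-master-188-1 tiny-cilium-master-188-1.k8s.tcinternal
add_host_entry "$hosts_file" 10.31.188.11 tiny-cilium-worker-188-11 tiny-cilium-worker-188-11.k8s.tcinternal
# A repeated call for an address that is already present changes nothing.
add_host_entry "$hosts_file" 10.31.188.1 tiny-cilium-master-188-1 tiny-cilium-master-188-1.k8s.tcinternal
cat "$hosts_file"
```

The exact-match test on the first field avoids treating 10.31.188.1 as a prefix of 10.31.188.11.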

1.5 Disable swap

# Turn swap off immediately

swapoff -a

# Comment out the swap entry in fstab so the swap partition is not mounted at boot

sed -i '/swap / s/^\(.*\)$/#\1/g' /etc/fstab

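The sed command above prepends `#` to every line containing `swap `, so running it a second time adds a second `#`. The sketch below shows a variation that skips lines already commented out, together with a hypothetical `swap_is_off` check. It assumes GNU sed and works on scratch files, not on the real /etc/fstab or /proc/swaps:

```shell
#!/bin/sh
# Work on a scratch copy so the real /etc/fstab is never touched.
fstab_copy=$(mktemp)
cat > "$fstab_copy" <<'EOF'
/dev/mapper/centos-root /    xfs  defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
EOF

# Comment out swap entries, but only on lines that are not comments yet,
# so that running the command twice does not add a second '#'.
comment_out_swap() {
    sed -i '/^[^#].*swap / s/^/#/' "$1"
}
comment_out_swap "$fstab_copy"
comment_out_swap "$fstab_copy"
cat "$fstab_copy"

# swap_is_off FILE: succeed when FILE, in /proc/swaps format (one header
# line followed by one line per active swap device), lists no devices.
swap_is_off() {
    awk 'NR > 1 { n++ } END { exit (n > 0) }' "$1"
}
```

On a node, `swap_is_off /proc/swaps && echo "swap is off"` would confirm the effect of `swapoff -a`.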

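1.6 Configure time synchronization

Either ntp or chrony works here, so pick whichever you prefer. For the time source you can use Alibaba Cloud's ntp1.aliyun.com or the National Time Service Center's ntp.ntsc.ac.cn.

Synchronize with ntp

# Install the ntpdate tool with yum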

yum install ntpdate -y

# Synchronize the time against the National Time Service Center source

ntpdate ntp.ntsc.ac.cn

# Finally, check the time

hwclock

Synchronize with chrony

# Install chrony with yum

yum install chrony -y

# Enable chronyd at boot, start it, and check its status

systemctl enable chronyd.service

systemctl start chronyd.service

systemctl status chronyd.service

# Optionally, configure a custom time server

vim /etc/chrony.conf

# Before the change

$ grep server /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

# After the change

$ grep server /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

server ntp.ntsc.ac.cn iburst

# Restart the service to apply the configuration

systemctl restart chronyd.service

# Check the status of chrony's NTP sources

chronyc sourcestats -v

chronyc sources -v

1.7 Disable SELinux

# Switch to permissive mode immediately (lasts until the next reboot)

setenforce 0

# Disable SELinux permanently by editing /etc/selinux/config (takes effect after a reboot)

sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

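1.8 Configure the firewall

Kubernetes uses many ports for communication between cluster components and for exposing services, so for convenience we simply disable the firewall.

# On CentOS 7, use systemctl to stop the default firewalld service and disable it at boot

systemctl stop firewalld.service

systemctl disable firewalld.service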

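1.9 Configure netfilter parameters

The main task here is to have the kernel load br_netfilter and to let iptables process bridged IPv4 and IPv6 traffic, so that containers in the cluster can communicate normally.

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf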

br_netfilter

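EOF

# Load the module now; the modules-load.d entry only takes effect at boot

sudo modprobe br_netfilter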

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sudo sysctl --system

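1.10 Disable IPv6 (not recommended)

Unlike the other CNIs deployed previously, many Cilium services listen on dual-stack addresses by default (when driven through cilium-cli), so it is recommended to keep IPv6 support enabled on the system, even if no IPv6 route is available.

A system without IPv6 also works; the only downside is that some cilium-cli commands that start a port-forward will report errors.

# Add the IPv6-disable parameter to the kernel command line

grubby --update-kernel=ALL --args=ipv6.disable=1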

1.11 Configure IPVS (recommended)

IPVS is a component designed specifically for load balancing. The IPVS implementation in kube-proxy improves scalability by relying less on iptables: instead of hooking PREROUTING in the iptables input chain, it creates a dummy interface called kube-ipvs0. As the number of load-balancing rules in a cluster grows, IPVS forwards traffic more efficiently than iptables.

Because Cilium requires a kernel upgrade, the kernel used here is newer than 4.19.

Note that from kernel 4.19 onward the nf_conntrack module replaces the former nf_conntrack_ipv4 module.

(Notes: use nf_conntrack instead of nf_conntrack_ipv4 for Linux kernel 4.19 and later)

# Make sure ipset and ipvsadm are installed before using IPVS mode

sudo yum install ipset ipvsadm -y

# Load the IPVS-related modules manually

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

# Load the IPVS-related modules automatically at boot

cat <<EOF | sudo tee /etc/modules-load.d/ipvs.conf

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

EOF

sudo sysctl --system

# Ideally, reboot once to confirm that the modules are loaded at boot

$ lsmod | grep -e ip_vs -e nf_conntrack

nf_conntrack_netlink 49152 0

nfnetlink 20480 2 nf_conntrack_netlink

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 159744 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 159744 5 xt_conntrack,nf_nat,nf_conntrack_netlink,xt_MASQUERADE,ip_vs

nf_defrag_ipv4 16384 1 nf_conntrack

nf_defrag_ipv6 24576 2 nf_conntrack,ip_vs

libcrc32c 16384 4 nf_conntrack,nf_nat,xfs,ip_vs

$ cut -f1 -d " " /proc/modules | grep -e ip_vs -e nf_conntrack

nf_conntrack_netlink

ip_vs_sh

ip_vs_wrr

ip_vs_rr

ip_vs

nf_conntrack

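The `lsmod` check above can also be scripted. This is a minimal sketch, assuming a file in the /proc/modules layout (module name in the first column); the `missing_modules` helper and the sample module list are hypothetical:

```shell
#!/bin/sh
# missing_modules FILE NAME...: print each NAME that is not listed in FILE,
# where FILE uses the /proc/modules layout (hypothetical helper).
missing_modules() (
    file=$1; shift
    for name in "$@"; do
        awk -v m="$name" '$1 == m { found = 1 } END { exit !found }' "$file" || echo "$name"
    done
)

# Sample standing in for /proc/modules; ip_vs_wrr is left out on purpose.
modules_file=$(mktemp)
cat > "$modules_file" <<'EOF'
ip_vs_sh 16384 0 - Live 0x0000000000000000
ip_vs_rr 16384 0 - Live 0x0000000000000000
ip_vs 159744 4 ip_vs_rr,ip_vs_sh, Live 0x0000000000000000
nf_conntrack 159744 1 ip_vs, Live 0x0000000000000000
EOF

missing=$(missing_modules "$modules_file" ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack)
echo "missing modules: ${missing:-none}"
```

On a real node, passing /proc/modules as the first argument checks the modules that are actually loaded.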

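1.12 Configure the Linux kernel (required for Cilium)

The biggest difference between Cilium and other CNI plugins is that it is built on eBPF, which places fairly high demands on the Linux kernel version. The complete requirements are listed in the official documentation; here we focus on two parts, the kernel version and the kernel configuration options.

Linux kernel version

As a starting point, we can refer to the summary of system requirements published by Cilium. Because we are deploying into a Kubernetes cluster (using containers), only the Linux kernel and etcd versions matter. From earlier deployments we know that Kubernetes 1.23.6 uses etcd 3.5.x by default, so the focus falls on the Linux kernel version.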

| Requirement | Minimum Version | In cilium container |
|---|---|---|
| Linux kernel | >= 4.9.17 | no |
| Key-Value store (etcd) | >= 3.1.0 | no |
| clang+LLVM | >= 10.0 | yes |
| iproute2 | >= 5.9.0 | yes |

This requirement is only needed if you run cilium-agent natively. If you are using the Cilium container image cilium/cilium, clang+LLVM is included in the container image.

iproute2 is only needed if you run cilium-agent directly on the host machine. iproute2 is included in the cilium/cilium container image.

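The stock 3.10.x kernel shipped with CentOS 7 clearly does not meet this requirement, but before upgrading, let's look at a few other requirements.

Cilium also publishes a list of the kernel versions required by its advanced features:

To satisfy everything on that list, the kernel must be newer than 5.10. Since we are upgrading anyway, and in the spirit of learning and experimentation, we might as well move to a fairly recent version. The elrepo repository lets us upgrade directly to a newer kernel.

# List the kernel versions available in the elrepo repository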

$ yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

Available Packages

elrepo-release.noarch 7.0-5.el7.elrepo elrepo-kernel

kernel-lt.x86_64 5.4.192-1.el7.elrepo elrepo-kernel

kernel-lt-devel.x86_64 5.4.192-1.el7.elrepo elrepo-kernel

kernel-lt-doc.noarch 5.4.192-1.el7.elrepo elrepo-kernel

kernel-lt-headers.x86_64 5.4.192-1.el7.elrepo elrepo-kernel

kernel-lt-tools.x86_64 5.4.192-1.el7.elrepo elrepo-kernel

kernel-lt-tools-libs.x86_64 5.4.192-1.el7.elrepo elrepo-kernel

kernel-lt-tools-libs-devel.x86_64 5.4.192-1.el7.elrepo elrepo-kernel

kernel-ml.x86_64 5.17.6-1.el7.elrepo elrepo-kernel

kernel-ml-devel.x86_64 5.17.6-1.el7.elrepo elrepo-kernel

kernel-ml-doc.noarch 5.17.6-1.el7.elrepo elrepo-kernel

kernel-ml-headers.x86_64 5.17.6-1.el7.elrepo elrepo-kernel

kernel-ml-tools.x86_64 5.17.6-1.el7.elrepo elrepo-kernel

kernel-ml-tools-libs.x86_64 5.17.6-1.el7.elrepo elrepo-kernel

kernel-ml-tools-libs-devel.x86_64 5.17.6-1.el7.elrepo elrepo-kernel

perf.x86_64 5.17.6-1.el7.elrepo elrepo-kernel

python-perf.x86_64 5.17.6-1.el7.elrepo elrepo-kernel

# The ml (mainline) kernel fits our needs, so install it with yum

sudo yum --enablerepo=elrepo-kernel install kernel-ml -y

# Use grubby to list the kernels installed on the system

sudo grubby --info=ALL

# Make the newly installed 5.17.6 kernel the default; the index (0 here) must match the entry shown by the previous command

sudo grubby --set-default-index=0

# Confirm that the default kernel was changed

sudo grubby --default-kernel

# Reboot into the new kernel

init 6

# After rebooting, check that the running kernel is the new 5.17.6

uname -a

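After the reboot, the kernel version can also be compared against a threshold in a script. A minimal sketch, assuming GNU `sort -V` is available; the `version_ge` helper is hypothetical, and 5.10 is the threshold discussed in this section:

```shell
#!/bin/sh
# version_ge A B: succeed when version A is greater than or equal to B.
# Relies on GNU sort -V for version-aware ordering (hypothetical helper).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Strip the release suffix, e.g. 5.17.6-1.el7.elrepo.x86_64 -> 5.17.6
current=$(uname -r | cut -d- -f1)
if version_ge "$current" 5.10; then
    echo "kernel $current meets the 5.10 threshold"
else
    echo "kernel $current is older than 5.10"
fi
```

The comparison sorts the two versions and checks that the required one comes first, which avoids parsing the dotted components by hand.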

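Linux kernel configuration options

First, look at the build configuration of the running kernel. Each option takes one of three values: y, n, or m.

- y: yes, "Build directly into the kernel." The feature is compiled into the kernel and enabled by default.
- n: no, "Leave entirely out of the kernel." The feature is not compiled into the kernel and is unavailable.
- m: module, "Build as a module, to be loaded if needed." The feature is compiled as a module and loaded on demand.

# Show the build configuration of the running kernel

cat /boot/config-$(uname -r)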

Cilium lists the kernel configuration options required by each feature. Its documentation states:

In order for the eBPF feature to be enabled properly, the following kernel configuration options must be enabled. This is typically the case with distribution kernels. When an option can be built as a module or statically linked, either choice is valid.

For now we only look at the Base Requirements:

CONFIG_BPF=y

CONFIG_BPF_SYSCALL=y

CONFIG_NET_CLS_BPF=y

CONFIG_BPF_JIT=y

CONFIG_NET_CLS_ACT=y

CONFIG_NET_SCH_INGRESS=y

CONFIG_CRYPTO_SHA1=y

CONFIG_CRYPTO_USER_API_HASH=y

CONFIG_CGROUPS=y

CONFIG_CGROUP_BPF=y

Comparing against the 5.17.6-1.el7.elrepo.x86_64 kernel we are using, two of these options are built as modules (m):

$ egrep "^CONFIG_BPF=|^CONFIG_BPF_SYSCALL=|^CONFIG_NET_CLS_BPF=|^CONFIG_BPF_JIT=|^CONFIG_NET_CLS_ACT=|^CONFIG_NET_SCH_INGRESS=|^CONFIG_CRYPTO_SHA1=|^CONFIG_CRYPTO_USER_API_HASH=|^CONFIG_CGROUPS=|^CONFIG_CGROUP_BPF=" /boot/config-5.17.6-1.el7.elrepo.x86_64

CONFIG_BPF=y

CONFIG_BPF_SYSCALL=y

CONFIG_BPF_JIT=y

CONFIG_CGROUPS=y

CONFIG_CGROUP_BPF=y

CONFIG_NET_SCH_INGRESS=m

CONFIG_NET_CLS_BPF=m

CONFIG_NET_CLS_ACT=y

CONFIG_CRYPTO_SHA1=y

CONFIG_CRYPTO_USER_API_HASH=y

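The same comparison can be expressed as a small helper that reports the value of each required option. This is a sketch rather than an official tool; `check_kernel_options` is a hypothetical name, and the sample file stands in for /boot/config-$(uname -r):

```shell
#!/bin/sh
# check_kernel_options FILE OPTION...: print "OPTION=<value>" for each option,
# using "missing" when FILE has no OPTION=... line (hypothetical helper).
check_kernel_options() (
    file=$1; shift
    for opt in "$@"; do
        value=$(sed -n "s/^${opt}=//p" "$file" | head -n 1)
        echo "${opt}=${value:-missing}"
    done
)

# Abbreviated sample config; a real file has thousands of entries.
config_file=$(mktemp)
cat > "$config_file" <<'EOF'
CONFIG_BPF=y
CONFIG_BPF_SYSCALL=y
CONFIG_NET_SCH_INGRESS=m
CONFIG_NET_CLS_BPF=m
# CONFIG_EXAMPLE_DISABLED is not set
EOF

report=$(check_kernel_options "$config_file" CONFIG_BPF CONFIG_NET_CLS_BPF CONFIG_CGROUP_BPF)
echo "$report"
```

Options reported as `m` need their module loaded, and options reported as `missing` are not available in that kernel build.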

The two options built as modules, sch_ingress and cls_bpf, can be found under /usr/lib/modules/$(uname -r):

$ realpath ./kernel/net/sched/sch_ingress.ko

/usr/lib/modules/5.17.6-1.el7.elrepo.x86_64/kernel/net/sched/sch_ingress.ko

$ realpath ./kernel/net/sched/cls_bpf.ko

/usr/lib/modules/5.17.6-1.el7.elrepo.x86_64/kernel/net/sched/cls_bpf.ko

Once we have confirmed that the modules exist, we can simply load them:

# Load them directly with modprobe

$ modprobe cls_bpf

$ modprobe sch_ingress

$ lsmod | egrep "cls_bpf|sch_ingress"

sch_ingress 16384 0

cls_bpf 24576 0

# Load the modules Cilium needs automatically at boot

cat <<EOF | sudo tee /etc/modules-load.d/cilium-base-requirements.conf

cls_bpf

sch_ingress

EOF

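The kernel features required by Cilium's other advanced features are handled in the same way, so they are not covered here.

2. Install the container runtime

2.1 Install Docker

See the official documentation for details. The recently released Kubernetes 1.24 removed dockershim, so the container runtime must be chosen carefully when installing version 1.24 or later. We are installing a version below 1.24, so we continue to use Docker.

For the detailed Docker installation steps, see my earlier article; they are not repeated here.

# Install the required dependencies and add Docker's official yum repository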

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# Install the latest version of Docker

sudo yum install -y docker-ce docker-ce-cli containerd.io

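2.2 Configure the cgroup driver

CentOS 7 uses systemd as its init system and process manager. The init process creates and uses a root control group (cgroup) and acts as a cgroup manager. systemd is tightly integrated with cgroups and allocates a cgroup to each systemd unit. The container runtime and the kubelet can also be configured to use cgroupfs, but using cgroupfs alongside systemd means there are two different cgroup managers. A system that runs both cgroupfs and systemd tends to become unstable, so it is better to configure the container runtime and the kubelet to use systemd as the cgroup driver. For Docker, this means setting native.cgroupdriver=systemd.

Official documentation:

Container Runtimes | Kubernetes

Chinese version of the same page:

容器运行时 | Kubernetes

sudo mkdir -p /etc/docker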

cat <<EOF | sudo tee /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

sudo systemctl enable docker

sudo systemctl daemon-reload

sudo systemctl restart docker

# Finally, check that the Cgroup Driver is systemd

$ docker info | grep systemd

Cgroup Driver: systemd

2.3 The kubelet cgroup driver

The Kubernetes documentation explains in detail how to set the kubelet's cgroup driver. Note in particular that starting with v1.22, if the kubelet's cgroup driver is not set explicitly, kubeadm defaults it to systemd.

Note: In v1.22, if the user is not setting the cgroupDriver field under KubeletConfiguration, kubeadm will default it to systemd.

A simple way to set the kubelet's cgroup driver is to add the cgroupDriver field to kubeadm-config.yaml:

# kubeadm-config.yaml

kind: ClusterConfiguration

apiVersion: kubeadm.k8s.io/v1beta3

kubernetesVersion: v1.21.0

---

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

cgroupDriver: systemd

After initialization, the cluster's kubeadm-config can be inspected directly through the ConfigMap.

$ kubectl describe configmaps kubeadm-config -n kube-system

Name: kubeadm-config

Namespace: kube-system

Labels: <none>

Annotations: <none>

Data

====

ClusterConfiguration:

----

apiServer:

extraArgs:

authorization-mode: Node,RBAC

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers

kind: ClusterConfiguration

kubernetesVersion: v1.23.6

networking:

dnsDomain: cali-cluster.tclocal

serviceSubnet: 10.88.0.0/18

scheduler: {}

BinaryData

====

Events: <none>

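Since the version we are installing is newer than 1.22.0 and we already use systemd, there is no need to configure this again.

3. Install kubeadm, kubelet, and kubectl

The corresponding official documentation is here:

Installing kubeadm | Kubernetes

The three tools are kubeadm, kubelet, and kubectl. Their roles are:

- kubeadm: the command that bootstraps the cluster.
- kubelet: runs on every node in the cluster and starts Pods and containers.
- kubectl: the command-line tool for talking to the cluster.

Keep the following in mind:

- kubeadm does not manage kubelet or kubectl for us, and the same holds the other way around. The three tools are independent of each other; none of them manages the others.
- The kubelet version must not be newer than the API server version, otherwise compatibility problems are likely.
- kubectl does not need to be installed on every node, or on a cluster node at all. It can be installed on a local machine and, together with a kubeconfig file, used to manage the cluster remotely.

Installation on CentOS 7 is straightforward with the official yum repository. The official instructions also set the SELinux mode at this point, but we already disabled SELinux earlier, so that step is skipped.

# Add Google's official yum repository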

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

# If Google's repository is unreachable, consider the Alibaba Cloud mirror instead

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Then install the three packages

sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

# If gpgcheck fails because of network problems and the repository cannot be read, consider disabling repo_gpgcheck for it

sed -i 's/repo_gpgcheck=1/repo_gpgcheck=0/g' /etc/yum.repos.d/kubernetes.repo

# Or skip the GPG check at install time

sudo yum install -y kubelet kubeadm kubectl --nogpgcheck --disableexcludes=kubernetes

# To install a specific version, list the available versions first

sudo yum list --nogpgcheck kubelet kubeadm kubectl --showduplicates --disableexcludes=kubernetes

# To keep using dockershim, we install 1.23.6, the last release before 1.24.0

sudo yum install -y kubelet-1.23.6-0 kubeadm-1.23.6-0 kubectl-1.23.6-0 --nogpgcheck --disableexcludes=kubernetes

# After installation, enable kubelet at boot and start it

sudo systemctl enable --now kubelet

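4. Initialize the cluster

4.1 Write the configuration file

Once every node in the cluster has completed the three sections above, we can start creating the cluster. This deployment is not highly available, so initialization is run directly on the target master node.

# First, use kubeadm to list the versions of the main images

# Because we installed the older 1.23.6 release, the image versions fall back to match it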

$ kubeadm config images list

I0509 12:06:03.036544 3593 version.go:255] remote version is much newer: v1.24.0; falling back to: stable-1.23

k8s.gcr.io/kube-apiserver:v1.23.6

k8s.gcr.io/kube-controller-manager:v1.23.6

k8s.gcr.io/kube-scheduler:v1.23.6

k8s.gcr.io/kube-proxy:v1.23.6

k8s.gcr.io/pause:3.6

k8s.gcr.io/etcd:3.5.1-0

k8s.gcr.io/coredns/coredns:v1.8.6

# To make editing and management easier, export the default init parameters to a configuration file

$ kubeadm config print init-defaults > kubeadm-cilium.conf

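- Since the k8s.gcr.io registry is usually unreachable from networks in mainland China, change the imageRepository parameter in the configuration file to the Alibaba Cloud mirror.
- The kubernetesVersion field specifies the Kubernetes version to install.
- The localAPIEndpoint parameters must be set to the IP and port of the master node; this becomes the apiserver address of the cluster after initialization.
- podSubnet, serviceSubnet, and dnsDomain can be left at their defaults; here I changed them to suit my needs.
- The name parameter under nodeRegistration is changed to the hostname of the master node.
- A new configuration block is added to enable IPVS; see the official documentation for details.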

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.31.188.1
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  imagePullPolicy: IfNotPresent
  name: tiny-cilium-master-188-1.k8s.tcinternal
  taints: null

---

apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.23.6
networking:
  dnsDomain: cili-cluster.tclocal
  serviceSubnet: 10.188.0.0/18
  podSubnet: 10.188.64.0/18
scheduler: {}

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
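One thing worth checking in this file is that serviceSubnet and podSubnet do not overlap. The sketch below does that check for IPv4 with plain shell arithmetic; the helper names (`ip_to_int`, `cidr_range`, `cidrs_overlap`) are hypothetical and not part of kubeadm:

```shell
#!/bin/sh
# ip_to_int A.B.C.D: print the IPv4 address as an integer.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# cidr_range CIDR: print "first last" as integers for the given IPv4 block.
cidr_range() (
    base=$(ip_to_int "${1%/*}")
    prefix=${1#*/}
    size=$(( 1 << (32 - $prefix) ))
    first=$(( $base / $size * $size ))
    echo "$first $(( $first + $size - 1 ))"
)

# cidrs_overlap CIDR1 CIDR2: succeed when the two blocks share any address.
cidrs_overlap() (
    set -- $(cidr_range "$1") $(cidr_range "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

# Values taken from the configuration file above.
service_subnet=10.188.0.0/18
pod_subnet=10.188.64.0/18
if cidrs_overlap "$service_subnet" "$pod_subnet"; then
    echo "serviceSubnet and podSubnet overlap"
else
    echo "serviceSubnet and podSubnet do not overlap"
fi
```

The same helper can be pointed at the node network to make sure neither subnet collides with the hosts' own addresses.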

4.2 Initialize the cluster

Listing the images with this configuration file now shows that they come from the Alibaba Cloud mirror.

# List the image versions to confirm that the configuration file takes effect

$ kubeadm config images list --config kubeadm-cilium.conf

registry.aliyuncs.com/google_containers/kube-apiserver:v1.23.6

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.23.6

registry.aliyuncs.com/google_containers/kube-scheduler:v1.23.6

registry.aliyuncs.com/google_containers/kube-proxy:v1.23.6

registry.aliyuncs.com/google_containers/pause:3.6

registry.aliyuncs.com/google_containers/etcd:3.5.1-0

registry.aliyuncs.com/google_containers/coredns:v1.8.6

# Once everything looks right, pull the images

$ kubeadm config images pull --config kubeadm-cilium.conf

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.23.6

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.23.6

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.23.6

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.23.6

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.6

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.1-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.8.6

# Initialize the cluster

$ kubeadm init --config kubeadm-cilium.conf

[init] Using Kubernetes version: v1.23.6

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

... (output omitted) ...

When the following output appears, the cluster has been initialized successfully.

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.31.188.1:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:fbe33f0dbda199b487a78948a4c693660d742d0dfc270bad2963a035b4971ade

4.3 Configure kubeconfig

Right after initialization we cannot query the cluster yet; kubeconfig must be set up before kubectl can connect to the apiserver and read cluster information.

# For a non-root user

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# For root, simply export the environment variable

export KUBECONFIG=/etc/kubernetes/admin.conf

# Enable kubectl shell completion

echo "source <(kubectl completion bash)" >> ~/.bashrc

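As mentioned earlier, kubectl does not have to be installed inside the cluster. Any machine that can reach the apiserver can have kubectl installed and kubeconfig configured as described above, and can then manage the cluster from the command line.

Once this is configured, the usual commands show the cluster information.

$ kubectl cluster-info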

Kubernetes control plane is running at https://10.31.188.1:6443

CoreDNS is running at https://10.31.188.1:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

tiny-cilium-master-188-1.k8s.tcinternal NotReady control-plane,master 84s v1.23.6 10.31.188.1 <none> CentOS Linux 7 (Core) 5.17.6-1.el7.elrepo.x86_64 docker://20.10.14

$ kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-6d8c4cb4d-285cl 0/1 Pending 0 78s <none> <none> <none> <none>

kube-system coredns-6d8c4cb4d-6zntv 0/1 Pending 0 78s <none> <none> <none> <none>

kube-system etcd-tiny-cilium-master-188-1.k8s.tcinternal 1/1 Running 0 90s 10.31.188.1 tiny-cilium-master-188-1.k8s.tcinternal <none> <none>

kube-system kube-apiserver-tiny-cilium-master-188-1.k8s.tcinternal 1/1 Running 0 92s 10.31.188.1 tiny-cilium-master-188-1.k8s.tcinternal <none> <none>

kube-system kube-controller-manager-tiny-cilium-master-188-1.k8s.tcinternal 1/1 Running 0 90s 10.31.188.1 tiny-cilium-master-188-1.k8s.tcinternal <none> <none>

kube-system kube-proxy-m7q5n 1/1 Running 0 78s 10.31.188.1 tiny-cilium-master-188-1.k8s.tcinternal <none> <none>

kube-system kube-scheduler-tiny-cilium-master-188-1.k8s.tcinternal 1/1 Running 0 91s 10.31.188.1 tiny-cilium-master-188-1.k8s.tcinternal <none> <none>

4.4 Add worker nodes

Next, add the remaining two nodes as workers to run workloads. Running the join command printed at the end of a successful initialization on each of them is enough to join the cluster.

$ kubeadm join 10.31.188.1:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:fbe33f0dbda199b487a78948a4c693660d742d0dfc270bad2963a035b4971ade

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

If the output of a successful initialization was not saved, that is not a problem: the kubeadm tool can list existing tokens or create new ones.

# List the existing tokens

$ kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

abcdef.0123456789abcdef 23h 2022-05-10T05:46:11Z authentication,signing <none> system:bootstrappers:kubeadm:default-node-token

# If the token has expired, create a new one

$ kubeadm token create

xd468t.co8ye3su70bojo2k

$ kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

abcdef.0123456789abcdef 23h 2022-05-10T05:46:11Z authentication,signing <none> system:bootstrappers:kubeadm:default-node-token

xd468t.co8ye3su70bojo2k 23h 2022-05-10T05:58:40Z authentication,signing <none> system:bootstrappers:kubeadm:default-node-token

# If the --discovery-token-ca-cert-hash value is lost, compute it on the master node with openssl

$ openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

0d68339d3e5a045dc093470321f8f6334223e97f360542477c4f480bda34d72a

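Putting the pieces together, the join command consists of the apiserver endpoint, a token, and the CA certificate hash. A minimal sketch that assembles it and rejects malformed tokens follows; `build_join_command` is a hypothetical helper, and the sample values are the ones printed above:

```shell
#!/bin/sh
# build_join_command ENDPOINT TOKEN CA_HASH: print a kubeadm join command.
# Fails when TOKEN does not have the "6 characters . 16 characters"
# bootstrap-token shape (hypothetical helper).
build_join_command() {
    if ! printf '%s\n' "$2" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
        echo "invalid token format: $2" >&2
        return 1
    fi
    echo "kubeadm join $1 --token $2 --discovery-token-ca-cert-hash sha256:$3"
}

build_join_command 10.31.188.1:6443 xd468t.co8ye3su70bojo2k \
    0d68339d3e5a045dc093470321f8f6334223e97f360542477c4f480bda34d72a
```

kubeadm can also print a ready-made command with `kubeadm token create --print-join-command`, which avoids assembling the pieces by hand.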

After the workers have joined, listing the nodes shows two more entries, but all nodes are still NotReady. The next step is to deploy the CNI.

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

tiny-cilium-master-188-1.k8s.tcinternal NotReady control-plane,master 2m47s v1.23.6

tiny-cilium-worker-188-11.k8s.tcinternal NotReady <none> 41s v1.23.6

tiny-cilium-worker-188-12.k8s.tcinternal NotReady <none> 30s v1.23.6

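Node readiness is what changes once a CNI is running, so it can be handy to count the nodes that are not yet Ready. A minimal sketch, assuming the input comes from `kubectl get nodes --no-headers`; `count_not_ready` is a hypothetical helper and the sample reuses the output shown above:

```shell
#!/bin/sh
# count_not_ready: read `kubectl get nodes --no-headers` output on stdin and
# print how many nodes report a STATUS other than exactly "Ready".
count_not_ready() {
    awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

sample_nodes='tiny-cilium-master-188-1.k8s.tcinternal NotReady control-plane,master 2m47s v1.23.6
tiny-cilium-worker-188-11.k8s.tcinternal NotReady <none> 41s v1.23.6
tiny-cilium-worker-188-12.k8s.tcinternal NotReady <none> 30s v1.23.6'

printf '%s\n' "$sample_nodes" | count_not_ready
```

Against a live cluster, `kubectl get nodes --no-headers | count_not_ready` should print 0 once the CNI is up and every node is schedulable.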

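5. Install the CNI

5.1 Install Cilium

The quick-installation guide is in the official documentation. The basic approach is to download Cilium's official CLI tool and then use it to perform the installation.

The advantage of this method is that it is quick and simple. The drawback is that it offers no custom parameters and uses only the upstream defaults, which makes it a good fit for quickly bringing up a usable environment for learning and testing.

# The Cilium CLI is a single binary executable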

$ curl -L --remote-name-all https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz{,.sha256sum}

$ sha256sum --check cilium-linux-amd64.tar.gz.sha256sum

cilium-linux-amd64.tar.gz: OK

$ sudo tar xzvfC cilium-linux-amd64.tar.gz /usr/local/bin

cilium

# This single command installs Cilium

$ cilium install

ℹ️ using Cilium version "v1.11.3"

🔮 Auto-detected cluster name: kubernetes

🔮 Auto-detected IPAM mode: cluster-pool

ℹ️ helm template --namespace kube-system cilium cilium/cilium --version 1.11.3 --set cluster.id=0,cluster.name=kubernetes,encryption.nodeEncryption=false,ipam.mode=cluster-pool,kubeProxyReplacement=disabled,operator.replicas=1,serviceAccounts.cilium.name=cilium,serviceAccounts.operator.name=cilium-operator

ℹ️ Storing helm values file in kube-system/cilium-cli-helm-values Secret

🔑 Created CA in secret cilium-ca

🔑 Generating certificates for Hubble...

🚀 Creating Service accounts...

🚀 Creating Cluster roles...

🚀 Creating ConfigMap for Cilium version 1.11.3...

🚀 Creating Agent DaemonSet...

level=warning msg="spec.template.spec.affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution.nodeSelectorTerms[1].matchExpressions[0].key: beta.kubernetes.io/os is deprecated since v1.14; use \"kubernetes.io/os\" instead" subsys=klog

level=warning msg="spec.template.metadata.annotations[scheduler.alpha.kubernetes.io/critical-pod]: non-functional in v1.16+; use the \"priorityClassName\" field instead" subsys=klog

🚀 Creating Operator Deployment...

⌛ Waiting for Cilium to be installed and ready...

✅ Cilium was successfully installed! Run 'cilium status' to view installation health

# Check the status of Cilium

$ cilium status

/¯¯\

/¯¯\__/¯¯\ Cilium: OK

\__/¯¯\__/ Operator: OK

/¯¯\__/¯¯\ Hubble: disabled

\__/¯¯\__/ ClusterMesh: disabled

\__/

DaemonSet cilium Desired: 3, Ready: 3/3, Available: 3/3

Deployment cilium-operator Desired: 1, Ready: 1/1, Available: 1/1

Containers: cilium-operator Running: 1

cilium Running: 3

Cluster Pods: 2/2 managed by Cilium

Image versions cilium quay.io/cilium/cilium:v1.11.3@sha256:cb6aac121e348abd61a692c435a90a6e2ad3f25baa9915346be7b333de8a767f: 3

cilium-operator quay.io/cilium/operator-generic:v1.11.3@sha256:5b81db7a32cb7e2d00bb3cf332277ec2b3be239d9e94a8d979915f4e6648c787: 1

5.2 Configure Hubble

# First deploy Hubble into the cluster with cilium-cli; a single command is enough

$ cilium hubble enable

🔑 Found CA in secret cilium-ca

ℹ️ helm template --namespace kube-system cilium cilium/cilium --version 1.11.3 --set cluster.id=0,cluster.name=kubernetes,encryption.nodeEncryption=false,hubble.enabled=true,hubble.relay.enabled=true,hubble.tls.ca.cert=LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUNGRENDQWJxZ0F3SUJBZ0lVSDRQcit1UU0xSXZtdWQvVlV3YWlycGllSEZBd0NnWUlLb1pJemowRUF3SXcKYURFTE1Ba0dBMVVFQmhNQ1ZWTXhGakFVQmdOVkJBZ1REVk5oYmlCR2NtRnVZMmx6WTI4eEN6QUpCZ05WQkFjVApBa05CTVE4d0RRWURWUVFLRXdaRGFXeHBkVzB4RHpBTkJnTlZCQXNUQmtOcGJHbDFiVEVTTUJBR0ExVUVBeE1KClEybHNhWFZ0SUVOQk1CNFhEVEl5TURVd09UQTVNREF3TUZvWERUSTNNRFV3T0RBNU1EQXdNRm93YURFTE1Ba0cKQTFVRUJoTUNWVk14RmpBVUJnTlZCQWdURFZOaGJpQkdjbUZ1WTJselkyOHhDekFKQmdOVkJBY1RBa05CTVE4dwpEUVlEVlFRS0V3WkRhV3hwZFcweER6QU5CZ05WQkFzVEJrTnBiR2wxYlRFU01CQUdBMVVFQXhNSlEybHNhWFZ0CklFTkJNRmt3RXdZSEtvWkl6ajBDQVFZSUtvWkl6ajBEQVFjRFFnQUU3Z21EQ05WOERseEIxS3VYYzhEdndCeUoKWUxuSENZNjVDWUhBb3ZBY3FUM3drcitLVVNwelcyVjN0QW9IaFdZV0UyQ2lUNjNIOXZLV1ZRY3pHeXp1T0tOQwpNRUF3RGdZRFZSMFBBUUgvQkFRREFnRUdNQThHQTFVZEV3RUIvd1FGTUFNQkFmOHdIUVlEVlIwT0JCWUVGQmMrClNDb3F1Y0JBc09sdDBWaEVCbkwyYjEyNE1Bb0dDQ3FHU000OUJBTUNBMGdBTUVVQ0lRRDJsNWVqaDVLVTkySysKSHJJUXIweUwrL05pZ3NSUHRBblA5T3lDcHExbFJBSWdYeGY5a2t5N2xYU0pOYmpkREFjbnBrNlJFTFp2eEkzbQpKaG9JRkRlbER0dz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=,hubble.tls.ca.key=[--- REDACTED WHEN PRINTING TO TERMINAL (USE --redact-helm-certificate-keys=false TO PRINT) ---],ipam.mode=cluster-pool,kubeProxyReplacement=disabled,operator.replicas=1,serviceAccounts.cilium.name=cilium,serviceAccounts.operator.name=cilium-operator

✨ Patching ConfigMap cilium-config to enable Hubble...

🚀 Creating ConfigMap for Cilium version 1.11.3...

♻️ Restarted Cilium pods

⌛ Waiting for Cilium to become ready before deploying other Hubble component(s)...

✨ Generating certificates...

🔑 Generating certificates for Relay...

✨ Deploying Relay...

⌛ Waiting for Hubble to be installed...

ℹ️ Storing helm values file in kube-system/cilium-cli-helm-values Secret

✅ Hubble was successfully enabled!

# Install the hubble CLI; the procedure mirrors that of cilium-cli

$ export HUBBLE_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/hubble/master/stable.txt)

$ curl -L --remote-name-all https://github.com/cilium/hubble/releases/download/$HUBBLE_VERSION/hubble-linux-amd64.tar.gz{,.sha256sum}

$ sha256sum --check hubble-linux-amd64.tar.gz.sha256sum

hubble-linux-amd64.tar.gz: OK

$ sudo tar xzvfC hubble-linux-amd64.tar.gz /usr/local/bin

hubble

# Expose the Hubble API first by starting a port-forward with cilium-cli

$ cilium hubble port-forward&

[1] 15512

$ kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hubble-relay ClusterIP 10.188.55.197 <none> 80/TCP 16h

hubble-ui ClusterIP 10.188.17.78 <none> 80/TCP 16h

kube-dns ClusterIP 10.188.0.10 <none> 53/UDP,53/TCP,9153/TCP 17h

$ netstat -ntulp | grep 4245

tcp 0 0 0.0.0.0:4245 0.0.0.0:* LISTEN 15527/kubectl

tcp6 0 0 :::4245 :::* LISTEN 15527/kubectl

# This is equivalent to the following command

# kubectl port-forward -n kube-system svc/hubble-relay --address 0.0.0.0 --address :: 4245:80

# Test connectivity to the Hubble API

$ hubble status

Healthcheck (via localhost:4245): Ok

Current/Max Flows: 12,285/12,285 (100.00%)

Flows/s: 28.58

Connected Nodes: 3/3

# Use the hubble command to observe traffic flows

$ hubble observe

Handling connection for 4245

May 9 09:33:25.861: 10.0.1.47:44484 -> cilium-test/echo-same-node-5767b7b99d-xhzpb:8080 to-endpoint FORWARDED (TCP Flags: ACK, PSH)

May 9 09:33:25.863: 10.0.1.47:44484 <- cilium-test/echo-same-node-5767b7b99d-xhzpb:8080 to-stack FORWARDED (TCP Flags: ACK, PSH)

May 9 09:33:25.864: 10.0.1.47:44484 -> cilium-test/echo-same-node-5767b7b99d-xhzpb:8080 to-endpoint FORWARDED (TCP Flags: ACK, FIN)

... (output omitted) ...

# Enable the Hubble UI component

$ cilium hubble enable --ui

🔑 Found CA in secret cilium-ca

ℹ️ helm template --namespace kube-system cilium cilium/cilium --version 1.11.3 --set cluster.id=0,cluster.name=kubernetes,encryption.nodeEncryption=false,hubble.enabled=true,hubble.relay.enabled=true,hubble.tls.ca.cert=LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUNGRENDQWJxZ0F3SUJBZ0lVSDRQcit1UU0xSXZtdWQvVlV3YWlycGllSEZBd0NnWUlLb1pJemowRUF3SXcKYURFTE1Ba0dBMVVFQmhNQ1ZWTXhGakFVQmdOVkJBZ1REVk5oYmlCR2NtRnVZMmx6WTI4eEN6QUpCZ05WQkFjVApBa05CTVE4d0RRWURWUVFLRXdaRGFXeHBkVzB4RHpBTkJnTlZCQXNUQmtOcGJHbDFiVEVTTUJBR0ExVUVBeE1KClEybHNhWFZ0SUVOQk1CNFhEVEl5TURVd09UQTVNREF3TUZvWERUSTNNRFV3T0RBNU1EQXdNRm93YURFTE1Ba0cKQTFVRUJoTUNWVk14RmpBVUJnTlZCQWdURFZOaGJpQkdjbUZ1WTJselkyOHhDekFKQmdOVkJBY1RBa05CTVE4dwpEUVlEVlFRS0V3WkRhV3hwZFcweER6QU5CZ05WQkFzVEJrTnBiR2wxYlRFU01CQUdBMVVFQXhNSlEybHNhWFZ0CklFTkJNRmt3RXdZSEtvWkl6ajBDQVFZSUtvWkl6ajBEQVFjRFFnQUU3Z21EQ05WOERseEIxS3VYYzhEdndCeUoKWUxuSENZNjVDWUhBb3ZBY3FUM3drcitLVVNwelcyVjN0QW9IaFdZV0UyQ2lUNjNIOXZLV1ZRY3pHeXp1T0tOQwpNRUF3RGdZRFZSMFBBUUgvQkFRREFnRUdNQThHQTFVZEV3RUIvd1FGTUFNQkFmOHdIUVlEVlIwT0JCWUVGQmMrClNDb3F1Y0JBc09sdDBWaEVCbkwyYjEyNE1Bb0dDQ3FHU000OUJBTUNBMGdBTUVVQ0lRRDJsNWVqaDVLVTkySysKSHJJUXIweUwrL05pZ3NSUHRBblA5T3lDcHExbFJBSWdYeGY5a2t5N2xYU0pOYmpkREFjbnBrNlJFTFp2eEkzbQpKaG9JRkRlbER0dz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=,hubble.tls.ca.key=[--- REDACTED WHEN PRINTING TO TERMINAL (USE --redact-helm-certificate-keys=false TO PRINT) ---],hubble.ui.enabled=true,hubble.ui.securityContext.enabled=false,ipam.mode=cluster-pool,kubeProxyReplacement=disabled,operator.replicas=1,serviceAccounts.cilium.name=cilium,serviceAccounts.operator.name=cilium-operator

✨ Patching ConfigMap cilium-config to enable Hubble...

🚀 Creating ConfigMap for Cilium version 1.11.3...

♻️ Restarted Cilium pods

⌛ Waiting for Cilium to become ready before deploying other Hubble component(s)...

✅ Relay is already deployed

✨ Deploying Hubble UI and Hubble UI Backend...

⌛ Waiting for Hubble to be installed...

ℹ️ Storing helm values file in kube-system/cilium-cli-helm-values Secret

✅ Hubble was successfully enabled!

# If we check the k8s cluster again at this point, we can see that a deployment named hubble-ui has been created

$ kubectl get deployment -n kube-system | grep hubble

hubble-relay 1/1 1 1 17h

hubble-ui 1/1 1 1 17h

$ kubectl get svc -n kube-system | grep hubble

hubble-relay ClusterIP 10.188.55.197 <none> 80/TCP 17h

hubble-ui ClusterIP 10.188.17.78 <none> 80/TCP 17h

# Expose port 80 of the hubble-ui service on port 12000 of the host

$ cilium hubble ui&

[2] 5809

ℹ️ Opening "http://localhost:12000" in your browser...

# This is equivalent to running the following command

# kubectl port-forward -n kube-system svc/hubble-ui --address 0.0.0.0 --address :: 12000:80


|

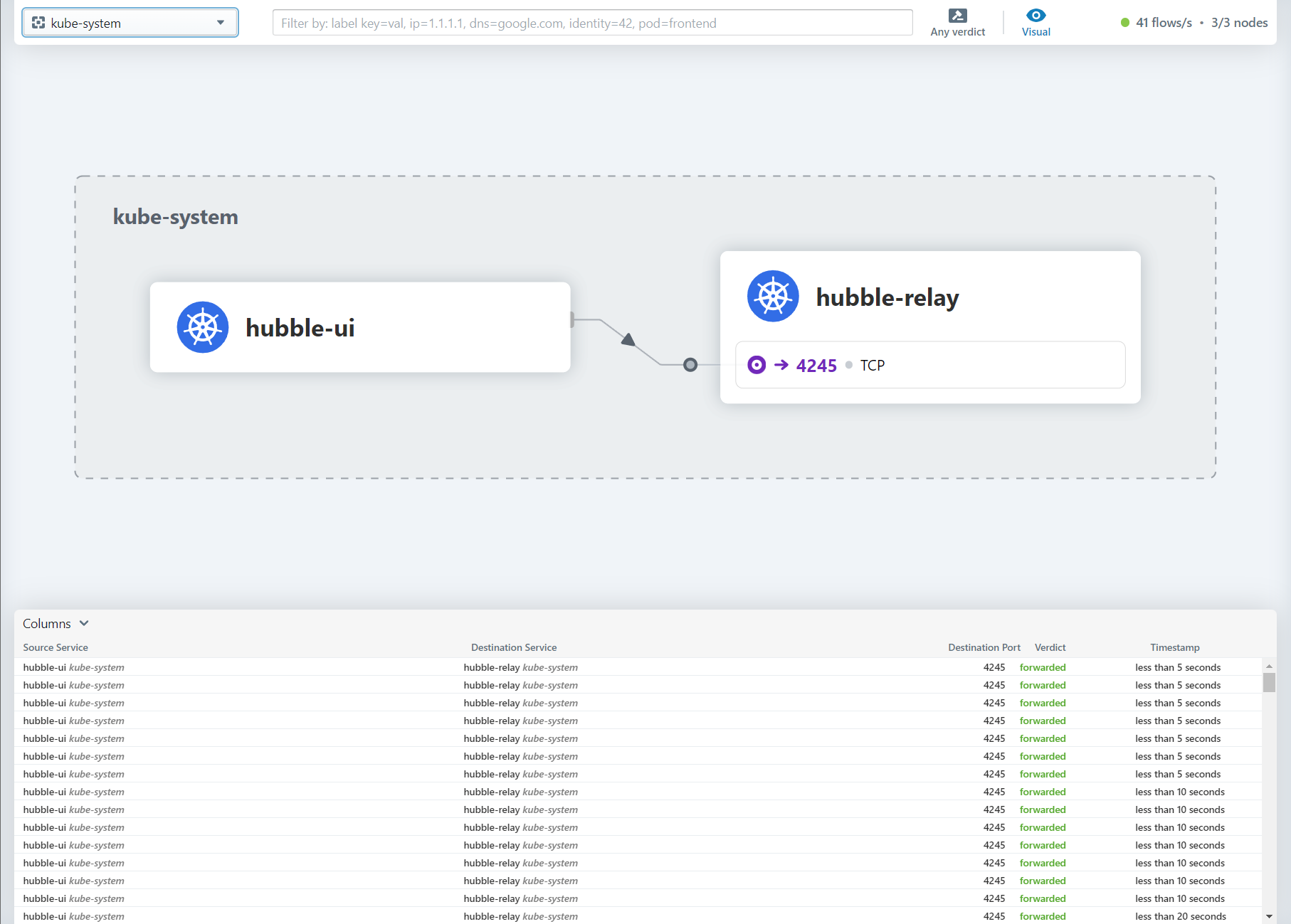

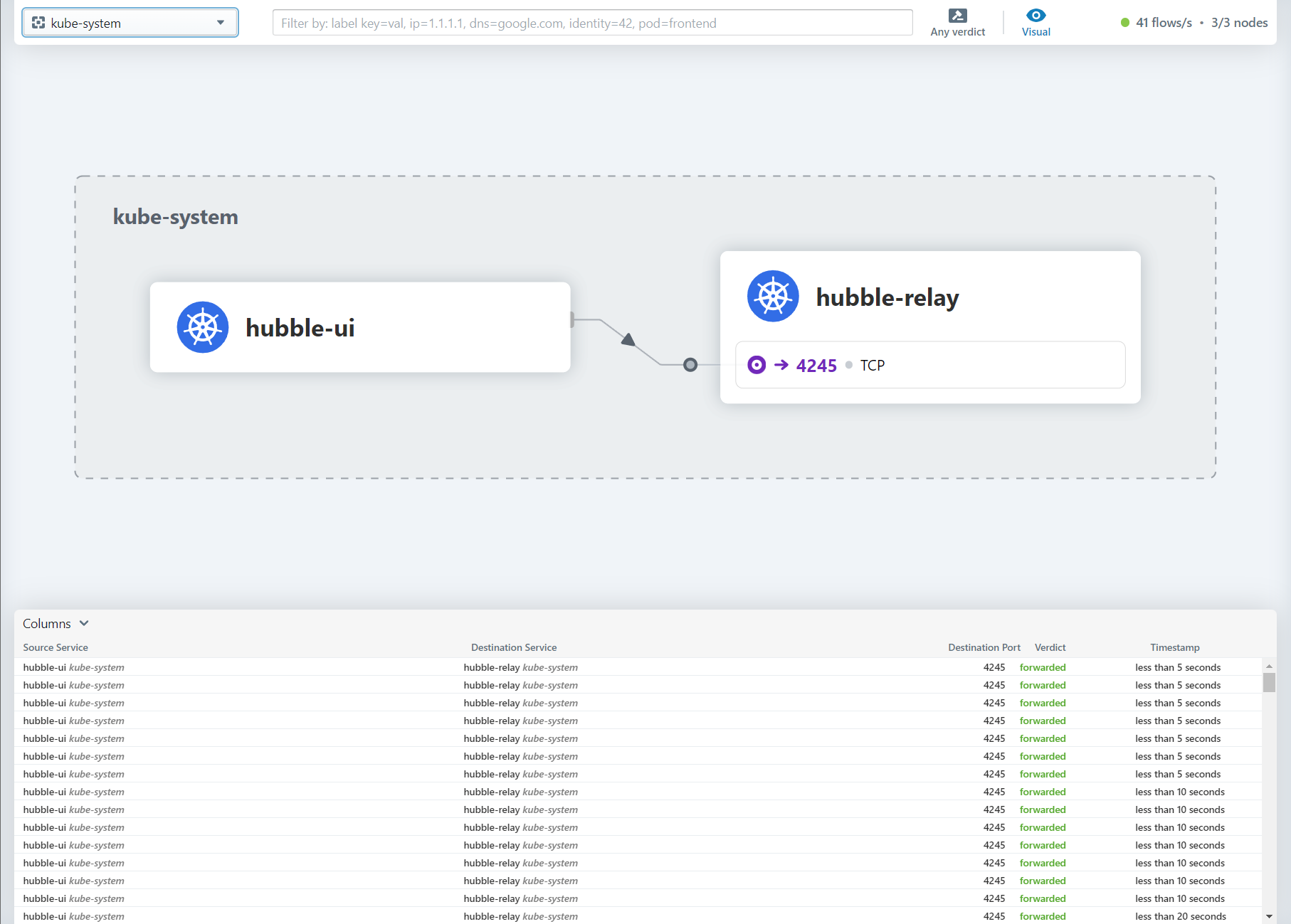

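Open the IP address of the k8s host node plus the port (12000 here) in a browser to see the Hubble UI.

Finally, once all the related services have been deployed, we check the overall Cilium status again.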
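The equivalent `kubectl port-forward` command shown above binds to `0.0.0.0` and `::`, so the UI is reachable from other machines. As a minimal sketch, assuming you sometimes want a narrower bind address, the hypothetical helper `port_forward_cmd` below only prints the command (it does not run it), defaulting to `127.0.0.1`. The helper name and its argument order are illustrative, not part of cilium or kubectl.

```shell
#!/bin/sh
# Print (do not run) a kubectl port-forward command for a Service.
# $1 namespace, $2 service name, $3 local port, $4 service port,
# $5 optional bind address (assumed default: 127.0.0.1).
port_forward_cmd() {
  printf 'kubectl port-forward -n %s svc/%s --address %s %s:%s\n' \
    "$1" "$2" "${5:-127.0.0.1}" "$3" "$4"
}

# Local-only forward for the hubble-ui service from this article.
port_forward_cmd kube-system hubble-ui 12000 80
```

Passing `0.0.0.0` as the fifth argument reproduces the wider bind used in the article, minus the extra `--address ::`.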


| $ cilium status
    /¯¯\
 /¯¯\__/¯¯\    Cilium:         OK
 \__/¯¯\__/    Operator:       OK
 /¯¯\__/¯¯\    Hubble:         OK
 \__/¯¯\__/    ClusterMesh:    disabled
    \__/

Deployment        cilium-operator    Desired: 1, Ready: 1/1, Available: 1/1
Deployment        hubble-relay       Desired: 1, Ready: 1/1, Available: 1/1
Deployment        hubble-ui          Desired: 1, Ready: 1/1, Available: 1/1
DaemonSet         cilium             Desired: 3, Ready: 3/3, Available: 3/3
Containers:       cilium             Running: 3
                  cilium-operator    Running: 1
                  hubble-relay       Running: 1
                  hubble-ui          Running: 1
Cluster Pods:     8/8 managed by Cilium
Image versions    cilium             quay.io/cilium/cilium:v1.11.3@sha256:cb6aac121e348abd61a692c435a90a6e2ad3f25baa9915346be7b333de8a767f: 3
                  cilium-operator    quay.io/cilium/operator-generic:v1.11.3@sha256:5b81db7a32cb7e2d00bb3cf332277ec2b3be239d9e94a8d979915f4e6648c787: 1
                  hubble-relay       quay.io/cilium/hubble-relay:v1.11.3@sha256:7256ec111259a79b4f0e0f80ba4256ea23bd472e1fc3f0865975c2ed113ccb97: 1
                  hubble-ui          quay.io/cilium/hubble-ui:v0.8.5@sha256:4eaca1ec1741043cfba6066a165b3bf251590cf4ac66371c4f63fbed2224ebb4: 1
                  hubble-ui          quay.io/cilium/hubble-ui-backend:v0.8.5@sha256:2bce50cf6c32719d072706f7ceccad654bfa907b2745a496da99610776fe31ed: 1
                  hubble-ui          docker.io/envoyproxy/envoy:v1.18.4@sha256:e5c2bb2870d0e59ce917a5100311813b4ede96ce4eb0c6bfa879e3fbe3e83935: 1
|
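If you want to check this status from a script instead of reading it by eye, one possible approach is to scan the summary lines for anything other than `OK`. The sketch below assumes the plain-text layout shown above (component name, colon, state as the last word on the line). The function name `cilium_components_ok` is hypothetical, and other cilium-cli versions may print different lines.

```shell
#!/bin/sh
# Read `cilium status` output on stdin and succeed only if the Cilium,
# Operator and Hubble summary lines all end in "OK".
# Assumption: the state is the last whitespace-separated word on each line.
cilium_components_ok() {
  awk '
    /(Cilium|Operator|Hubble):/ { seen++; if ($NF != "OK") bad = 1 }
    END { if (bad || seen == 0) exit 1 }
  '
}

# Usage against a live cluster (not executed here):
#   cilium status | cilium_components_ok && echo "cilium looks healthy"
```

`ClusterMesh: disabled` is deliberately ignored, since this cluster does not use ClusterMesh.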

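6. Deploying a test workload

With the cluster deployed, we deploy nginx in the k8s cluster to check that everything works. First we create a namespace named nginx-quic, then a deployment named nginx-quic-deployment in that namespace to run the pods, and finally a service to expose them. For now we expose the port with a NodePort service, which makes testing easier.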


| $ cat nginx-quic.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: nginx-quic
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-quic-deployment
  namespace: nginx-quic
spec:
  selector:
    matchLabels:
      app: nginx-quic
  replicas: 4
  template:
    metadata:
      labels:
        app: nginx-quic
    spec:
      containers:
      - name: nginx-quic
        image: tinychen777/nginx-quic:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-quic-service
  namespace: nginx-quic
spec:
  externalTrafficPolicy: Cluster
  selector:
    app: nginx-quic
  ports:
  - protocol: TCP
    port: 8080 # match for service access port
    targetPort: 80 # match for pod access port
    nodePort: 30088 # match for external access port
  type: NodePort
|
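The three port fields in the Service are easy to mix up. `kubectl get service` prints them in its PORT(S) column as `port:nodePort/protocol` for a NodePort service, or `port/protocol` for a ClusterIP service. As a small illustration, the hypothetical helper `split_ports` below takes such a value apart. `targetPort` does not appear in that column, so it is not covered here.

```shell
#!/bin/sh
# Split a PORT(S) value such as "8080:30088/TCP" into its parts.
# Prints "nodePort=none" when the value has no nodePort, e.g. "80/TCP".
split_ports() {
  spec="${1%/*}"      # part before the slash, e.g. 8080:30088
  proto="${1##*/}"    # part after the slash, e.g. TCP
  case "$spec" in
    *:*) node_port="${spec##*:}" ;;
    *)   node_port="none" ;;
  esac
  echo "port=${spec%%:*} nodePort=${node_port} protocol=${proto}"
}

# The value this manifest produces for nginx-quic-service.
split_ports 8080:30088/TCP
```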

Once the deployment is complete, we check the status directly.


| # Deploy directly
$ kubectl apply -f nginx-quic.yaml
namespace/nginx-quic created
deployment.apps/nginx-quic-deployment created
service/nginx-quic-service created

# Check the status of the deployment
$ kubectl get deployment -o wide -n nginx-quic
NAME                    READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES                          SELECTOR
nginx-quic-deployment   4/4     4            4           17h   nginx-quic   tinychen777/nginx-quic:latest   app=nginx-quic

# Check the status of the service
$ kubectl get service -o wide -n nginx-quic
NAME                 TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE   SELECTOR
nginx-quic-service   NodePort   10.188.0.200   <none>        8080:30088/TCP   17h   app=nginx-quic

# Check the status of the pods
$ kubectl get pods -o wide -n nginx-quic
NAME                                     READY   STATUS    RESTARTS      AGE   IP           NODE                                       NOMINATED NODE   READINESS GATES
nginx-quic-deployment-696d959797-26dzh   1/1     Running   1 (17h ago)   17h   10.0.2.58    tiny-cilium-worker-188-12.k8s.tcinternal   <none>           <none>
nginx-quic-deployment-696d959797-kw6bn   1/1     Running   1 (17h ago)   17h   10.0.2.207   tiny-cilium-worker-188-12.k8s.tcinternal   <none>           <none>
nginx-quic-deployment-696d959797-mdw99   1/1     Running   1 (17h ago)   17h   10.0.1.247   tiny-cilium-worker-188-11.k8s.tcinternal   <none>           <none>
nginx-quic-deployment-696d959797-x42zn   1/1     Running   1 (17h ago)   17h   10.0.1.60    tiny-cilium-worker-188-11.k8s.tcinternal   <none>           <none>

# Check the IPVS rules
$ ipvsadm -ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  172.17.0.1:30088 rr
  -> 10.0.1.60:80                 Masq    1      0          0
  -> 10.0.1.247:80                Masq    1      0          0
  -> 10.0.2.58:80                 Masq    1      0          0
  -> 10.0.2.207:80                Masq    1      0          0
TCP  10.0.0.226:30088 rr
  -> 10.0.1.60:80                 Masq    1      0          0
  -> 10.0.1.247:80                Masq    1      0          0
  -> 10.0.2.58:80                 Masq    1      0          0
  -> 10.0.2.207:80                Masq    1      0          0
TCP  10.31.188.1:30088 rr
  -> 10.0.1.60:80                 Masq    1      0          0
  -> 10.0.1.247:80                Masq    1      0          0
  -> 10.0.2.58:80                 Masq    1      0          0
  -> 10.0.2.207:80                Masq    1      0          0
TCP  10.188.0.200:8080 rr
  -> 10.0.1.60:80                 Masq    1      0          0
  -> 10.0.1.247:80                Masq    1      0          0
  -> 10.0.2.58:80                 Masq    1      0          0
  -> 10.0.2.207:80                Masq    1      0          0
|
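In the output above, every virtual server (the ClusterIP and each NodePort address) lists the same four pod IPs as backends. A rough way to verify that from a script is to pull the backend list for one virtual server out of the `ipvsadm -ln` output and compare it with the pod IPs. The helper name `ipvs_backends` is hypothetical, and the sketch assumes the `ipvsadm -ln` layout shown in this article.

```shell
#!/bin/sh
# Print the RemoteAddress:Port of every backend behind one IPVS virtual
# server. Reads `ipvsadm -ln` output on stdin; $1 is the virtual server
# address in ip:port form, e.g. 10.188.0.200:8080.
ipvs_backends() {
  awk -v vip="$1" '
    # A TCP/UDP line starts a new virtual server section.
    $1 == "TCP" || $1 == "UDP" { in_vip = ($2 == vip); next }
    # Backend lines start with "->" and follow their virtual server.
    in_vip && $1 == "->" { print $2 }
  '
}

# Usage against a live node (not executed here):
#   ipvsadm -ln | ipvs_backends 10.188.0.200:8080 | sort
#   kubectl get pods -n nginx-quic \
#     -o jsonpath='{range .items[*]}{.status.podIP}{":80\n"}{end}' | sort
```

If both commands print the same sorted list, the service is routing to exactly the pods of the deployment.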

Finally we run the tests. By default, this nginx-quic image returns the client IP and port of the request as seen from inside the nginx container.


| # First, test from inside the cluster
# Access a pod directly
$ curl 10.0.2.58:80
10.0.0.226:52312

# Access the service's ClusterIP; the request is forwarded to a pod
$ curl 10.188.0.200:8080
10.0.0.226:36228

# Access the NodePort directly; the request is forwarded to a pod without going through the ClusterIP
# The IP returned depends on whether the backend pod that receives the request is on the current k8s node
$ curl 10.31.188.1:30088
10.0.0.226:38034
$ curl 10.31.188.11:30088
10.31.188.11:24371
$ curl 10.31.188.12:30088
10.31.188.12:56919
$ curl 10.31.188.12:30088
10.0.2.151:49222

# Next, test from outside the cluster
# Access the NodePort on the three nodes directly; the request is forwarded to a pod without going through the ClusterIP
# The IP returned depends on whether the backend pod that receives the request is on the current k8s node
$ curl 10.31.188.11:30088
10.0.1.47:6318
$ curl 10.31.188.11:30088
10.31.188.11:63944
$ curl 10.31.188.12:30088
10.31.188.12:53882
$ curl 10.31.188.12:30088
10.0.2.151:6366
$ curl 10.31.188.12:30088
10.0.2.151:6368
$ curl 10.31.188.12:30088
10.31.188.12:48174
|
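Two kinds of source address show up in the responses above: addresses from the node network (10.31.188.x) and addresses from the 10.0.x.x range that the pods in this cluster use. To see the pattern over many requests, the responses can be bucketed by range. This is only a sketch: `classify_source` is a hypothetical helper, and the two ranges are taken from this article's output and would differ in another cluster.

```shell
#!/bin/sh
# Classify an "ip:port" response from the nginx-quic image by address range.
# Assumed ranges (from this article): nodes 10.31.188.x, pods 10.0.x.x.
classify_source() {
  ip="${1%%:*}"   # drop the ":port" suffix
  case "$ip" in
    10.31.188.*) echo "node" ;;
    10.0.*)      echo "pod-range" ;;
    *)           echo "other" ;;
  esac
}

# Two of the responses recorded above.
classify_source 10.31.188.11:24371
classify_source 10.0.2.151:49222

# Usage against a live cluster (not executed here):
#   for i in 1 2 3 4 5 6 7 8; do
#     classify_source "$(curl -s 10.31.188.12:30088)"
#   done | sort | uniq -c
```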
