Deploying a Kubernetes cluster with RKE (including cleanup)


Node environment preparation

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# Set the required sysctl parameters; they persist across reboots

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply the sysctl parameters without rebooting

sudo sysctl --system

lsmod | grep br_netfilter

lsmod | grep overlay   # check that both modules are loaded
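The three sysctl values set above can also be checked one by one after `sysctl --system` has run. The following is a minimal sketch, not part of the original procedure: `check_sysctl` is a hypothetical helper name, and its third argument (an alternative root directory) exists only so the function can be tried against a scratch directory.

```shell
# Hypothetical helper: compare one kernel parameter with an expected value.
# $1 = dotted key, $2 = expected value, $3 = root directory (default /proc/sys)
check_sysctl() {
    key=$1
    expected=$2
    root=${3:-/proc/sys}
    # net.ipv4.ip_forward -> net/ipv4/ip_forward
    path="$root/$(printf '%s' "$key" | tr . /)"
    if [ ! -r "$path" ]; then
        # For the bridge keys this usually means br_netfilter is not loaded
        echo "MISSING $key"
        return 1
    fi
    actual=$(tr -d '[:space:]' < "$path")
    if [ "$actual" = "$expected" ]; then
        echo "OK $key = $actual"
    else
        echo "BAD $key = $actual (expected $expected)"
        return 1
    fi
}

# Usage on a node:
#   check_sysctl net.bridge.bridge-nf-call-iptables 1
#   check_sysctl net.bridge.bridge-nf-call-ip6tables 1
#   check_sysctl net.ipv4.ip_forward 1
```

A `MISSING` result for the two bridge keys is a hint that `modprobe br_netfilter` did not take effect.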

1. Download the rke tool

From https://github.com/rancher/rke/releases choose the matching release, then rename the binary to rke

2. Disable swap usage

vm.swappiness=0
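Note that `vm.swappiness=0` only tells the kernel to avoid swapping; it does not remove swap devices. If swap should be off entirely, one common approach is `swapoff -a` plus commenting out the swap entries in `/etc/fstab`. The sketch below assumes a conventional fstab layout; `comment_out_swap` is a hypothetical helper name, and the file name `99-swappiness.conf` in the usage comment is likewise an assumption.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# A backup of the original is kept next to it with a .bak suffix.
comment_out_swap() {
    # Match lines that are not already comments and have "swap" as a field
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Usage on a node (run as root):
#   swapoff -a
#   comment_out_swap /etc/fstab
#   echo 'vm.swappiness = 0' > /etc/sysctl.d/99-swappiness.conf
#   sysctl --system
```

The template later in this article sets `fail_swap_on: false`, so the kubelet also starts when swap is still enabled.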

3. Enable the following option in the SSH daemon configuration file (sshd_config)

AllowTcpForwarding yes
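A quick way to confirm the setting is to read it back from the configuration file. The sketch below is a simplified check under stated assumptions: `tcp_forwarding_enabled` is a hypothetical helper name, it looks only at the first `AllowTcpForwarding` line (OpenSSH uses the first value it finds), and it ignores `Match` blocks and included files.

```shell
# Hypothetical helper: succeed if the given sshd_config allows TCP forwarding.
tcp_forwarding_enabled() {
    # First uncommented AllowTcpForwarding line wins; keywords are case-insensitive
    value=$(awk 'tolower($1) == "allowtcpforwarding" { print tolower($2); exit }' "$1")
    # OpenSSH defaults to "yes" when the keyword is absent; "all" is a synonym
    [ -z "$value" ] || [ "$value" = "yes" ] || [ "$value" = "all" ]
}

# Usage on a node:
#   tcp_forwarding_enabled /etc/ssh/sshd_config && echo "forwarding allowed"
#   systemctl restart sshd    # after editing the file
```

For the effective value including `Match` blocks and includes, `sshd -T | grep -i allowtcpforwarding` (run as root) is the more reliable check.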

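4. Install docker-ce

5. Add the user to the docker group (on CentOS the root user cannot be used, and NetworkManager should be disabled)

usermod -aG docker <user_name> (set up passwordless SSH for user_name from every master node to all nodes)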
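The passwordless SSH setup mentioned in step 5 is usually done with `ssh-keygen` and `ssh-copy-id`. The sketch below only prints the `ssh-copy-id` commands so they can be reviewed before running; `ssh_copy_cmds` is a hypothetical helper name, and the user `ops` and the two addresses in the usage comment are taken from the template later in this article.

```shell
# Hypothetical helper: print one ssh-copy-id command per target host.
# $1 = remote user, remaining arguments = host addresses
ssh_copy_cmds() {
    user=$1
    shift
    for host in "$@"; do
        printf 'ssh-copy-id %s@%s\n' "$user" "$host"
    done
}

# Usage on each master node:
#   ssh-keygen -t ed25519                              # skip if a key already exists
#   ssh_copy_cmds ops 10.10.10.4 10.10.10.5            # review the commands
#   ssh_copy_cmds ops 10.10.10.4 10.10.10.5 | sh       # then run them
```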

6. Run ./rke config --list-version --all to list the Kubernetes versions supported by this RKE release

7. Run ./rke config --name xxx.yml

and enter the node information at the interactive prompts

8. Run ./rke up to start the installation, or use the template below:

9. Copy the generated kube_config_cluster.yml to ~/.kube/config (the credentials file that kubectl reads when it runs commands)
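Step 9 can be sketched as a small function that also keeps a backup of any existing kubeconfig and restricts the file permissions, since the file contains cluster admin credentials. `install_kubeconfig` is a hypothetical helper name and the `.bak` backup is an added convenience, not something RKE does itself.

```shell
# Hypothetical helper: copy the RKE-generated kubeconfig into place.
# $1 = source file (default kube_config_cluster.yml), $2 = destination (default ~/.kube/config)
install_kubeconfig() {
    src=${1:-kube_config_cluster.yml}
    dest=${2:-$HOME/.kube/config}
    if [ ! -f "$src" ]; then
        echo "not found: $src" >&2
        return 1
    fi
    mkdir -p "$(dirname "$dest")"
    # Keep the previous kubeconfig, if any
    if [ -f "$dest" ]; then
        cp "$dest" "$dest.bak"
    fi
    cp "$src" "$dest"
    chmod 600 "$dest"
}

# Usage, from the directory where rke up was run:
#   install_kubeconfig
#   kubectl get nodes
```

An alternative that leaves `~/.kube/config` untouched is `export KUBECONFIG=$PWD/kube_config_cluster.yml`.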

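10. To add or remove nodes, edit the node entries in cluster.yml, then run rke up --update-only --config cluster.yml (--update-only applies changes to worker nodes only; omit it when changing controlplane or etcd nodes)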

11. kubectl completion

yum install -y bash-completion

mkdir -p /etc/bash_completion.d/

kubectl completion bash > /etc/bash_completion.d/kubectl # add command-line completion

source /etc/bash_completion.d/kubectl

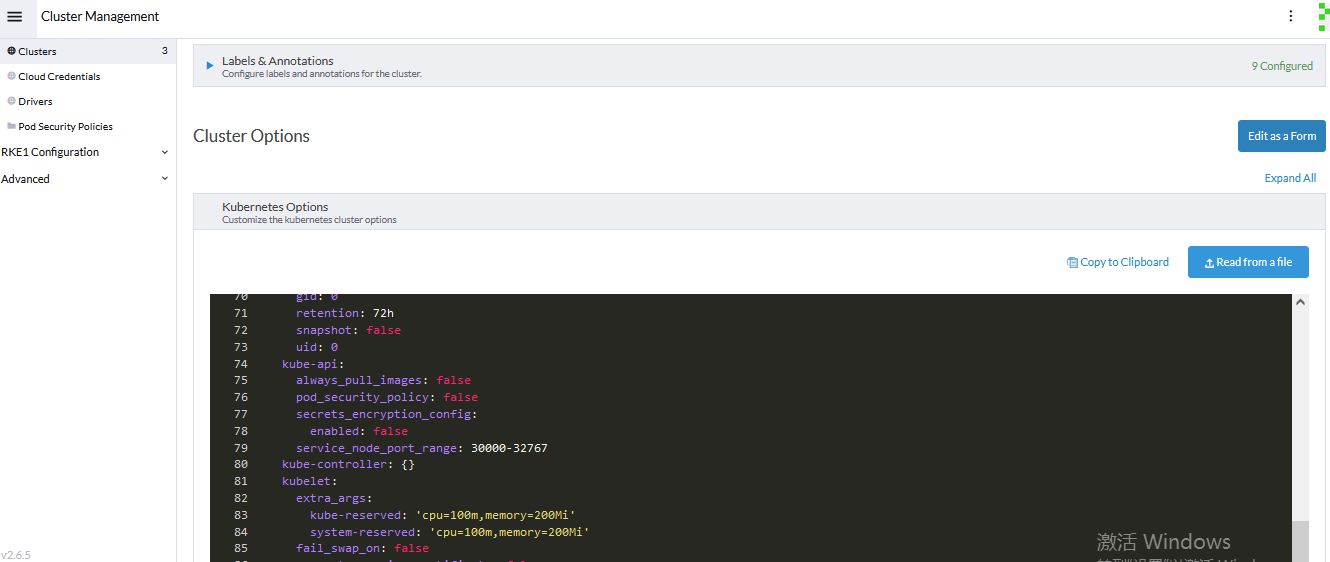

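Resource reservation:

(If kube-proxy runs in its default iptables mode, pinging a Service from inside a pod fails: the Service IP is virtual and the rules only handle the Service's declared ports, so ICMP gets no reply. DNS resolution and access to the Service ports work normally.)

Use the following template directly, change the relevant values, then run rke up --config …yaml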

nodes:
  - address: 10.10.10.4
    user: ops
    role:
      - controlplane
      - etcd
  - address: 10.10.10.5
    user: ops
    role:
      - worker

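# Defaults to false. When set to true, RKE does not raise an error if it finds an unsupported Docker version
ignore_docker_version: false

# Cluster-level SSH private key, used for any node that has no SSH settings of its own
ssh_key_path: /data/rke/privilege.key
#ssh_agent_auth: true

# Directory prefix for the Kubernetes-related files
prefix_path: /mnt/kubelet

# List of registry credentials.
# If you are using the Docker Hub registry, you can omit `url` or set it to `docker.io`.
# Setting `is_default` to `true` overrides the system default registry set in the global settings.
private_registries:
  - url: harbor.test.com
    user: devops
    password: 8EFunIanwJfxkHBssh9Rg0GWca
    is_default: true

# Bastion host configuration
#bastion_host:

# Set the name of the Kubernetes cluster
cluster_name: owntest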

# For RKE v0.3.0 and above, the map of Kubernetes versions and their system images is
# located here:
# https://github.com/rancher/kontainer-driver-metadata/blob/master/rke/k8s_rke_system_images.go
#
# In case the kubernetes_version and kubernetes image in
# system_images are defined, the system_images configuration
# will take precedence over kubernetes_version.
kubernetes_version: v1.20.9-rancher1-1

services:
  etcd:
    snapshot: true
    creation: 5m0s
    retention: 36h
  # Note for Rancher v2.0.5 and v2.0.6 users: If you are configuring
  # Cluster Options using a Config File when creating Rancher Launched
  # Kubernetes, the names of services should contain underscores
  # only: `kube_api`.
  kube-api:
    # IP range for any services created on Kubernetes
    # This must match the service_cluster_ip_range in kube-controller
    service_cluster_ip_range: 192.168.1.0/16
    # Expose a different port range for NodePort services
    service_node_port_range: 30000-32767
    pod_security_policy: false
    # Add additional arguments to the kubernetes API server
    # This WILL OVERRIDE any existing defaults
    extra_args:
      feature-gates: RemoveSelfLink=false
      # Enable audit log to stdout
      #audit-log-path: "-"
      # Increase number of delete workers
      #delete-collection-workers: 3
      # Set the level of log output to debug-level
      #v: 2
  # Note for Rancher 2 users: If you are configuring Cluster Options
  # using a Config File when creating Rancher Launched Kubernetes,
  # the names of services should contain underscores only:
  # `kube_controller`. This only applies to Rancher v2.0.5 and v2.0.6.
  kube-controller:
    # CIDR pool used to assign IP addresses to pods in the cluster
    cluster_cidr: 192.1.0.0/16
    # IP range for any services created on Kubernetes
    # This must match the service_cluster_ip_range in kube-api
    service_cluster_ip_range: 192.168.1.0/16
  kubelet:
    # Base domain for the cluster
    cluster_domain: cluster.local
    # IP address for the DNS service endpoint
    cluster_dns_server: 192.168.1.10
    # Fail if swap is on
    fail_swap_on: false
    # Set max pods to 250 instead of default 110
    extra_args:
      max-pods: 250
    # Optionally define additional volume binds to a service
    #extra_binds:
    #  - "/usr/libexec/kubernetes/kubelet-plugins:/usr/libexec/kubernetes/kubelet-plugins"
  kubeproxy:
    extra_args:
      proxy-mode: ipvs
      masquerade-all: true

# Currently, the only authentication strategy supported is x509.
# You can optionally create additional SANs (hostnames or IPs) to
# add to the API server PKI certificate.
# This is useful if you want to use a load balancer for the
# control plane servers.
authentication:
  strategy: x509
  sans:
    - ""

# Kubernetes authorization mode
# Use `mode: rbac` to enable RBAC
# Use `mode: none` to disable authorization
authorization:
  mode: rbac

# If you want to set a Kubernetes cloud provider, you specify
# the name and configuration
#cloud_provider:
#  name: aws

# Add-ons are deployed using Kubernetes jobs. RKE will give
# up on trying to get the job status after this timeout in seconds.
addon_job_timeout: 30

# Specify the network plug-in (canal, calico, flannel, weave, or none)
network:
  plugin: calico

# Specify the DNS provider (coredns or kube-dns)
dns:
  provider: coredns

# Currently only the nginx ingress provider is supported.
# To disable the ingress controller, set `provider: none`
# `node_selector` controls ingress placement and is optional
ingress:
  provider: nginx
  node_selector:
    app: ingress
  extra_envs:
    - name: TZ
      value: Asia/Shanghai

Removing RKE (cleanup)

df -h|grep kubelet |awk -F % '{print $2}'|xargs umount

rm /var/lib/kubelet/* -rf

rm /etc/kubernetes/* -rf

rm /var/lib/rancher/* -rf

rm /var/lib/etcd/* -rf

rm /var/lib/cni/* -rf

rm -rf /etc/ceph \
       /etc/cni \
       /opt/cni \
       /run/secrets/kubernetes.io \
       /run/calico \
       /run/flannel \
       /var/lib/calico \
       /var/lib/cni \
       /var/lib/kubelet \
       /var/log/containers \
       /var/log/pods \
       /var/run/calico

# Clean up leftover processes

port_list='80 443 6443 2376 2379 2380 8472 9099 10250 10254'

for port in $port_list

do

pid=$(netstat -atlnup|grep $port |awk '{print $7}'|awk -F '/' '{print $1}'|grep -v -|sort -rnk2|uniq)

if [[ -n $pid ]];then

kill -9 $pid

fi

done
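One caveat with the loop above: `grep $port` matches the number anywhere in the line, so port 80 also matches 8080, and any PID or address containing "80". The sketch below shows a stricter variant under stated assumptions: `pids_on_port` is a hypothetical helper name, and it expects `netstat -tlnup`-style lines on standard input with the local address in the fourth column.

```shell
# Hypothetical helper: print the PIDs bound to exactly the given local port.
# $1 = port; reads netstat -tlnup style output on stdin
pids_on_port() {
    awk -v port="$1" '
        {
            # Local address is field 4; the port follows the last ":"
            n = split($4, addr, ":")
            if (addr[n] != port) next
            # The PID/Program column looks like "1234/name"; take the first such field
            for (i = 5; i <= NF; i++) {
                if ($i ~ /^[0-9]+\//) {
                    split($i, p, "/")
                    print p[1]
                    break
                }
            }
        }' | sort -un
}

# Usage on a node (run as root so that netstat shows PIDs; xargs -r is GNU-specific):
#   netstat -tlnup | pids_on_port 6443 | xargs -r kill -9
```

Lines whose PID column is `-` (no owning process visible) produce no output, so nothing is passed to `kill` for them.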

pro_pid=$(ps -ef |grep -v grep |grep kube|awk '{print $2}')

if [[ -n $pro_pid ]];then

kill -9 $pro_pid

fi

iptables -F && iptables -t nat -F

ip link del flannel.1

rm -rf /var/etcd/

rm -rf /run/kubernetes/

docker rm -fv $(docker ps -aq)

docker volume rm $(docker volume ls -q)

rm -rf /etc/cni

rm -rf /opt/cni

systemctl restart docker

If you are using Kubernetes 1.24 or later, add enable_cri_dockerd: true to the configuration, because dockershim support has been removed
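The version rule above can be checked before running `rke up`. The following is a minimal sketch with hypothetical helper names (`needs_cri_dockerd`, `cri_dockerd_enabled`); it assumes version strings in the `vMAJOR.MINOR.PATCH-...` form used by `kubernetes_version` in the template, and that `enable_cri_dockerd` is written as a top-level key in cluster.yml. The version `v1.24.4-rancher1-1` in the usage comment is only an illustrative value.

```shell
# Hypothetical helper: succeed if the given Kubernetes version is 1.24 or later.
needs_cri_dockerd() {
    ver=${1#v}            # strip the leading "v"
    major=${ver%%.*}
    rest=${ver#*.}
    minor=${rest%%.*}
    [ "$major" -gt 1 ] || { [ "$major" -eq 1 ] && [ "$minor" -ge 24 ]; }
}

# Hypothetical helper: succeed if the config file sets enable_cri_dockerd: true.
cri_dockerd_enabled() {
    grep -Eq '^enable_cri_dockerd:[[:space:]]*true([[:space:]]|$)' "$1"
}

# Usage:
#   if needs_cri_dockerd v1.24.4-rancher1-1 && ! cri_dockerd_enabled cluster.yml; then
#       echo "add 'enable_cri_dockerd: true' to cluster.yml"
#   fi
```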
