Installing Prometheus + Grafana on Kubernetes (Part 2: prometheus-operator)


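Background

Installing prometheus-operator with helm should be a one-liner.

Unfortunately, one component of the bitnami/prometheus-operator chart uses port 8080, which was already taken by an application in my environment, and the chart does not switch to another port on its own. The stable chart is too old.

Prepare a Kubernetes environment beforehand and download the kube-prometheus release package. I use version 0.8.0 with Kubernetes v1.20.x. Each kube-prometheus release supports specific Kubernetes versions, so download the one that matches your cluster: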

https://github.com/prometheus-operator/kube-prometheus/releases

| kube-prometheus stack | Kubernetes 1.18 | Kubernetes 1.19 | Kubernetes 1.20 | Kubernetes 1.21 | Kubernetes 1.22 |
|---|---|---|---|---|---|
| release-0.6 | ✗ | ✔ | ✗ | ✗ | ✗ |
| release-0.7 | ✗ | ✔ | ✔ | ✗ | ✗ |
| release-0.8 | ✗ | ✗ | ✔ | ✔ | ✗ |
| release-0.9 | ✗ | ✗ | ✗ | ✔ | ✔ |
| HEAD | ✗ | ✗ | ✗ | ✔ | ✔ |

Installation

tar zxvf kube-prometheus-0.8.0.tar.gz

cd kube-prometheus-0.8.0/manifests/

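If your network can reach quay.io and k8s.gcr.io directly (for example through a proxy), skip the mirror substitutions below.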

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' setup/prometheus-operator-deployment.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' prometheus-prometheus.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' alertmanager-alertmanager.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' kube-state-metrics-deployment.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' node-exporter-daemonset.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' prometheus-adapter-deployment.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' blackbox-exporter-deployment.yaml

sed -i 's#k8s.gcr.io/kube-state-metrics/kube-state-metrics#bitnami/kube-state-metrics#g' kube-state-metrics-deployment.yaml
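The per-file sed commands above can be wrapped in a small helper. This is only a minimal sketch: it assumes a POSIX shell and a sed that accepts `-i.bak` (both GNU and BSD sed do), and the function name `replace_registry` is made up for illustration. It escapes the dots in the registry name so that `quay.io` is matched literally.

```shell
# Replace one image registry host with another in the given manifest files.
# Hypothetical helper; arguments: <from-host> <to-host> <file>...
replace_registry() {
  from="$1"; to="$2"; shift 2
  # Escape dots so the host name is matched literally, not as a regex.
  from_re=$(printf '%s' "$from" | sed 's/\./\\./g')
  for f in "$@"; do
    if [ -f "$f" ]; then
      sed -i.bak "s#${from_re}#${to}#g" "$f" && rm -f "$f.bak"
    else
      echo "skip: $f not found" >&2
    fi
  done
}

# Usage, run inside the manifests/ directory:
#   replace_registry quay.io quay.mirrors.ustc.edu.cn \
#     setup/prometheus-operator-deployment.yaml prometheus-prometheus.yaml
```

Running it a second time changes nothing, because the mirror host name no longer contains `quay.io`.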

Change the Service type of Prometheus (port 30090), Alertmanager (port 30093), and Grafana (port 30095) to NodePort. For Prometheus, edit prometheus-service.yaml:

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.26.0
    prometheus: k8s
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort  # added
  ports:
  - name: web
    port: 9090
    targetPort: web
    nodePort: 30090  # added
  selector:
    app: prometheus
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus

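Apply the same change to alertmanager-service.yaml and grafana-service.yaml.

Change the replica count (skip this if you need two or more replicas)

alertmanager-alertmanager.yaml prometheus-adapter-deployment.yaml prometheus-prometheus.yaml

In these files, wherever replicas is 2 or more, change it to 1:

replicas: 1  # changed
resources: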

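Configure the alert e-mail. For DingTalk or WeCom (企业微信) alerts, see these links:

https://blog.51cto.com/shoufu/2537258

https://blog.51cto.com/u_11970509/2904000

The settings live in alertmanager-secret.yaml and can also be changed after installation.

Changing them before installation is untested.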

stringData:
  alertmanager.yaml: |-
    global:
      resolve_timeout: 5m
      smtp_smarthost: 'smtp.163.com:465'
      smtp_from: 'xxx@163.com'
      smtp_auth_username: 'xxx@163.com'
      smtp_auth_password: 'xxxxZNKXFOWOIWQF'
      smtp_require_tls: false
    route:
      group_by: ['alertname']
      group_wait: 30s
      group_interval: 60s
      repeat_interval: 24h  # interval between repeated notifications
      receiver: 'mail'
    receivers:
    - name: 'mail'
      email_configs:
      - to: 'xxx@qq.com'
        send_resolved: true

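Changing the settings after installation is tested. Save the content under `alertmanager.yaml: |-` above (global, route, receivers) to a file named alertmanager.yaml, then recreate the secret:

# Delete the existing secret first
$ kubectl delete secret alertmanager-main -n monitoring
secret "alertmanager-main" deleted
$ kubectl create secret generic alertmanager-main --from-file=alertmanager.yaml -n monitoring
secret "alertmanager-main" created

Install prometheus-operator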

kubectl apply -f setup/

Check the status and wait until the pods are Running before continuing:

kubectl get pods -n monitoring

kubectl apply -f .

Check the status again and wait until all pods are Running before continuing:

kubectl get pods -n monitoring
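Instead of reading the pod list by eye, the check can be scripted. The sketch below assumes the default `kubectl get pods` table layout (STATUS in the third column); the function name `pods_not_running` is made up for illustration.

```shell
# Print the names of pods whose STATUS column is neither "Running" nor "Completed".
# Reads `kubectl get pods` table output on stdin; hypothetical helper.
pods_not_running() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1 }'
}

# Usage (requires cluster access); empty output means every pod is Running:
#   kubectl get pods -n monitoring | pods_not_running
```

A Running status does not guarantee that every container is ready. `kubectl wait --for=condition=Ready pods --all -n monitoring --timeout=300s` is a stricter alternative.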

Get the exposed ports

[root manifests]# kubectl get svc -n monitoring | grep NodePort
alertmanager-main   NodePort   10.101.19.108   <none>   9093:30093/TCP   10m
grafana             NodePort   10.101.15.228   <none>   3000:30095/TCP   10m
prometheus-k8s      NodePort   10.97.1.58      <none>   9090:30090/TCP   10m

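Uninstall command

kubectl delete --ignore-not-found=true -f manifests/ -f manifests/setup

Custom metrics API server (can be skipped)

The next step would be to install the custom metrics API server with helm. I think this step can be skipped, because the manifests already include prometheus-adapter.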

helm repo add stable http://mirror.azure.cn/kubernetes/charts

helm repo list

helm install prometheus-adapter stable/prometheus-adapter --namespace kube-system --set prometheus.url=http://prometheus-k8s.monitoring,prometheus.port=9090

Check the custom metrics API

[root ~]# kubectl get apiservices -n monitoring | grep metrics
v1beta1.custom.metrics.k8s.io   kube-system/prometheus-adapter   True   11h
v1beta1.metrics.k8s.io          monitoring/prometheus-adapter    True   15h

Grafana dashboards and testing

Open http://ip:30093/#/status to check the Alertmanager configuration.

Open http://ip:30090 to view Prometheus.

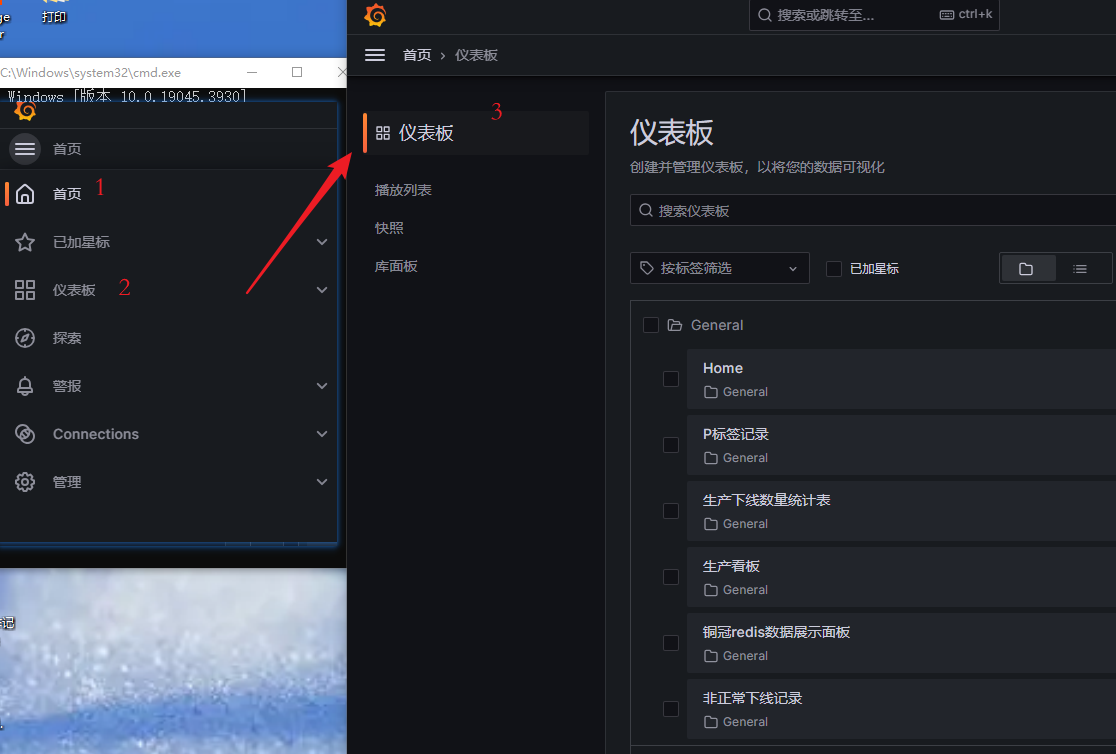

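Open http://ip:30095 to view the Grafana dashboards.

Import dashboard 13105 (an all-in-one overview) and 8919 (the familiar host dashboard); the dashboards bundled with the project are also available.

Send a test alert e-mail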

#!/usr/bin/env bash
alerts_message='[
  {
    "labels": {
      "alertname": "DiskRunningFull",
      "dev": "sda1",
      "instance": "example1",
      "msgtype": "testing"
    },
    "annotations": {
      "info": "The disk sda1 is running full",
      "summary": "please check the instance example1"
    }
  },
  {
    "labels": {
      "alertname": "DiskRunningFull",
      "dev": "sda2",
      "instance": "example1",
      "msgtype": "testing"
    },
    "annotations": {
      "info": "The disk sda2 is running full",
      "summary": "please check the instance example1",
      "runbook": "the following link http://test-url should be clickable"
    }
  }
]'
curl -XPOST -d"$alerts_message" http://127.0.0.1:30093/api/v1/alerts

Change the rules to alert on hosts only (custom rules; the rules bundled with the project can also be kept)

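1. Remove the default monitoring rules

List the default rules, then delete them.

This step leaves several of the bundled dashboards without graphs. If you do not use the bundled dashboards and alerts, that is not a big problem.

kubectl get PrometheusRule -n monitoring

kubectl delete PrometheusRule alertmanager-main-rules kube-prometheus-rules kube-state-metrics-rules kubernetes-monitoring-rules node-exporter-rules prometheus-k8s-prometheus-rules prometheus-operator-rules -n monitoring

A large collection of rules for reference:

https://awesome-prometheus-alerts.grep.to/rules

To verify the cleanup, list the PrometheusRule objects again.

2. Define custom rules: create bjgz.yaml (the name is arbitrary) with the content below, then apply it:

kubectl apply -f bjgz.yaml

apiVersion: monitoring.coreos.com/v1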

kind: PrometheusRule
metadata:
  labels:
    prometheus: k8s
    role: alert-rules
  name: host-rules
  namespace: monitoring
spec:
  groups:
  - name: host-status-alerts
    rules:
    - alert: HostDown
      expr: up == 0
      for: 1m
      labels:
        status: critical
      annotations:
        summary: "{{$labels.instance}}: host is down"
        description: "{{$labels.instance}}: target has been unreachable for more than 1 minute"
    - alert: HighCpuUsage
      expr: 100 - (avg(irate(node_cpu_seconds_total{mode="idle"}[5m])) by (instance) * 100) > 60
      for: 1m
      labels:
        status: warning
      annotations:
        summary: "{{$labels.instance}}: CPU usage is too high"
        description: "{{$labels.instance}}: CPU usage is above 60% (current: {{$value}}%)"
    - alert: HighMemoryUsage
      # used % = 100 - (free + buffers + cached) / total * 100
      expr: 100 - (node_memory_MemFree_bytes + node_memory_Buffers_bytes + node_memory_Cached_bytes) / node_memory_MemTotal_bytes * 100 > 80
      for: 1m
      labels:
        status: severe
      annotations:
        summary: "{{$labels.instance}}: memory usage is too high"
        description: "{{$labels.instance}}: memory usage is above 80% (current: {{$value}}%)"
    - alert: HighDiskIO
      expr: avg(irate(node_disk_io_time_seconds_total[1m])) by (instance) * 100 > 60
      for: 1m
      labels:
        status: severe
      annotations:
        summary: "{{$labels.instance}}: disk I/O utilization is too high"
        description: "{{$labels.instance}}: disk I/O utilization is above 60% (current: {{$value}}%)"
    - alert: HighNetworkReceive
      expr: ((sum(rate(node_network_receive_bytes_total{device!~'tap.*|veth.*|br.*|docker.*|virbr.*|lo'}[5m])) by (instance)) / 100) > 102400
      for: 1m
      labels:
        status: severe
      annotations:
        summary: "{{$labels.instance}}: inbound network bandwidth is too high"
        description: "{{$labels.instance}}: inbound network traffic has stayed above the alert threshold. RX value: {{$value}}"
    - alert: HighNetworkTransmit
      expr: ((sum(rate(node_network_transmit_bytes_total{device!~'tap.*|veth.*|br.*|docker.*|virbr.*|lo'}[5m])) by (instance)) / 100) > 102400
      for: 1m
      labels:
        status: severe
      annotations:
        summary: "{{$labels.instance}}: outbound network bandwidth is too high"
        description: "{{$labels.instance}}: outbound network traffic has stayed above the alert threshold. TX value: {{$value}}"
    - alert: TooManyTcpConnections
      expr: node_netstat_Tcp_CurrEstab > 1000
      for: 1m
      labels:
        status: severe
      annotations:
        summary: "{{$labels.instance}}: too many ESTABLISHED TCP connections"
        description: "{{$labels.instance}}: ESTABLISHED TCP connections exceed 1000 (current: {{$value}})"
    - alert: HighDiskUsage
      expr: 100 - (node_filesystem_free_bytes{fstype=~"ext4|xfs"} / node_filesystem_size_bytes{fstype=~"ext4|xfs"} * 100) > 80
      for: 1m
      labels:
        status: severe
      annotations:
        summary: "{{$labels.mountpoint}}: disk partition usage is too high"
        description: "{{$labels.instance}}: partition {{$labels.mountpoint}} is more than 80% full (current: {{$value}}%)"

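After a short while the new rules show up in the UI.

Install MySQL monitoring (MySQL and Redis both run outside the Kubernetes cluster)

For both the MySQL and the Redis monitoring below, once the scrape objects have been created, the matching scrape configuration is generated automatically after a short while. It can be seen at http://ip:30090/config, which shows prometheus.yml.

Create an account for the exporter

CREATE USER 'mysqld-exporter' IDENTIFIED BY '123456' WITH MAX_USER_CONNECTIONS 3;
GRANT PROCESS, REPLICATION CLIENT, REPLICATION SLAVE, SELECT ON *.* TO 'mysqld-exporter';
flush privileges;

Deploy mysqld-exporter: create mysqld-exporter.yaml

Some collectors, such as the --collect.perf_schema.* ones, may report errors and can be left out. Keeping them only produces more log output, which should be harmless.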

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysqld-exporter
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysqld-exporter
  template:
    metadata:
      labels:
        app: mysqld-exporter
    spec:
      containers:
      - name: mysqld-exporter
        image: prom/mysqld-exporter
        args:
        #- --collect.info_schema.tables
        #- --collect.info_schema.innodb_tablespaces
        #- --collect.info_schema.innodb_metrics
        #- --collect.global_status
        #- --collect.global_variables
        #- --collect.slave_status
        #- --collect.info_schema.processlist
        #- --collect.perf_schema.tablelocks
        #- --collect.perf_schema.eventsstatements
        #- --collect.perf_schema.eventsstatementssum
        #- --collect.perf_schema.eventswaits
        #- --collect.auto_increment.columns
        #- --collect.binlog_size
        #- --collect.perf_schema.tableiowaits
        #- --collect.perf_schema.indexiowaits
        #- --collect.info_schema.userstats
        #- --collect.info_schema.clientstats
        #- --collect.info_schema.tablestats
        #- --collect.info_schema.schemastats
        #- --collect.perf_schema.file_events
        #- --collect.perf_schema.file_instances
        #- --collect.perf_schema.replication_group_member_stats
        #- --collect.perf_schema.replication_applier_status_by_worker
        #- --collect.slave_hosts
        #- --collect.info_schema.innodb_cmp
        #- --collect.info_schema.innodb_cmpmem
        #- --collect.info_schema.query_response_time
        #- --collect.engine_tokudb_status
        #- --collect.engine_innodb_status
        ports:
        - containerPort: 9104
          protocol: TCP
        env:
        - name: DATA_SOURCE_NAME
          value: "user:password@(hostname:3306)/"
---
apiVersion: v1
kind: Service
metadata:
  name: mysqld-exporter
  namespace: monitoring
  labels:
    app: mysqld-exporter
spec:
  type: ClusterIP
  ports:
  - port: 9104
    protocol: TCP
    name: http-mysql
  selector:
    app: mysqld-exporter

Replace user:password@(hostname:3306) with the account created above and the address of your MySQL server.
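To avoid typos in the connection string, it can be assembled from its parts. This is a minimal sketch: the function name `build_dsn` and the address 10.0.0.5 are placeholders, and the output follows the `user:password@(hostname:3306)/` form used in the manifest above.

```shell
# Build a DATA_SOURCE_NAME value for mysqld-exporter.
# Hypothetical helper; arguments: <user> <password> <host> [port, default 3306]
build_dsn() {
  user="$1"; pass="$2"; host="$3"; port="${4:-3306}"
  printf '%s:%s@(%s:%s)/\n' "$user" "$pass" "$host" "$port"
}

# Usage (10.0.0.5 is a placeholder address):
#   build_dsn mysqld-exporter 123456 10.0.0.5
```

In a real deployment the password is better kept in a Kubernetes Secret than written into the Deployment manifest.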

Deploy the scrape configuration: create mysqld-exportercaiji.yaml

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    k8s-app: mysqld-exporter
  name: mysqld-exporter
  namespace: monitoring
spec:
  endpoints:
  - interval: 30s
    port: http-mysql
    relabelings:
    - sourceLabels:
      - __meta_kubernetes_service_name
      targetLabel: service_name
  jobLabel: mysqld-exporter
  namespaceSelector:
    matchNames:
    - monitoring
  selector:
    matchLabels:
      app: mysqld-exporter

curl http://ip:9104/metrics (no NodePort is exposed, so this only works from inside the cluster)
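To check the exporter from a workstation without exposing a NodePort, one option is `kubectl port-forward` plus a small filter over the metrics text. The sketch below assumes the Service name `mysqld-exporter` from the manifest above; the function name `metric_value` is made up for illustration, and it only matches metrics that carry no labels, such as `mysql_up`.

```shell
# Print the value of the first unlabeled metric with the given name.
# Reads Prometheus text-format metrics on stdin; hypothetical helper.
metric_value() {
  awk -v name="$1" '$1 == name { print $2; exit }'
}

# Usage (requires cluster access):
#   kubectl -n monitoring port-forward svc/mysqld-exporter 9104:9104 &
#   curl -s http://127.0.0.1:9104/metrics | metric_value mysql_up
```

A result of 1 for `mysql_up` means the exporter can reach MySQL with the configured account.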

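In Grafana, import dashboard 11329, 14934, 14969, or 11323; one of them should fit.

The alert rules can be appended to the custom rules above or written in a new YAML file. Here I append them to the custom rules.

This set of MySQL rules is fairly small; search online to add more as needed.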

    - alert: Mysql_Instance_Reboot
      expr: mysql_global_status_uptime < 180
      for: 2m
      labels:
        status: warning
      annotations:
        summary: "{{$labels.instance}}: Mysql_Instance_Reboot detected"
        description: "{{$labels.instance}}: MySQL instance restarted within the last 3 minutes (uptime: {{ $value }} seconds)"
    - alert: Mysql_High_QPS
      expr: rate(mysql_global_status_questions[5m]) > 500
      for: 2m
      labels:
        status: warning
      annotations:
        summary: "{{$labels.instance}}: Mysql_High_QPS detected"
        description: "{{$labels.instance}}: MySQL handles more than 500 operations per second (current value: {{ $value }})"
    - alert: Mysql_Too_Many_Connections
      expr: rate(mysql_global_status_connections[5m]) > 100
      for: 2m
      labels:
        status: warning
      annotations:
        summary: "{{$labels.instance}}: Mysql Too Many Connections detected"
        description: "{{$labels.instance}}: MySQL receives more than 100 connections per second (current value: {{ $value }})"
    - alert: Mysql_High_Recv_Rate
      # value is in KB/s, rounded to two decimals; 102400 KB/s = 100 MB/s
      expr: round(rate(mysql_global_status_bytes_received[5m]) / 1024 * 100) / 100 > 102400
      for: 2m
      labels:
        status: warning
      annotations:
        summary: "{{$labels.instance}}: Mysql_High_Recv_Rate detected"
        description: "{{$labels.instance}}: MySQL receive rate is above 100 MB/s (current value: {{ $value }} KB/s)"
    - alert: Mysql_High_Send_Rate
      expr: round(rate(mysql_global_status_bytes_sent[5m]) / 1024 * 100) / 100 > 102400
      for: 2m
      labels:
        status: warning
      annotations:
        summary: "{{$labels.instance}}: Mysql_High_Send_Rate detected"
        description: "{{$labels.instance}}: MySQL send rate is above 100 MB/s (current value: {{ $value }} KB/s)"
    - alert: Mysql_Too_Many_Slow_Query
      expr: rate(mysql_global_status_slow_queries[30m]) > 3
      for: 2m
      labels:
        status: warning
      annotations:
        summary: "{{$labels.instance}}: Mysql_Too_Many_Slow_Query detected"
        description: "{{$labels.instance}}: MySQL slow query rate is above 3 (current value: {{ $value }})"
    - alert: Mysql_Deadlock
      expr: mysql_global_status_innodb_deadlocks > 0
      for: 2m
      labels:
        status: warning
      annotations:
        summary: "{{$labels.instance}}: Mysql_Deadlock detected"
        description: "{{$labels.instance}}: MySQL deadlock was found (current value: {{ $value }})"
    - alert: Mysql_Too_Many_sleep_threads
      expr: mysql_global_status_threads_running / mysql_global_status_threads_connected * 100 < 3
      for: 2m
      labels:
        status: warning
      annotations:
        summary: "{{$labels.instance}}: Mysql_Too_Many_sleep_threads detected"
        description: "{{$labels.instance}}: only {{ $value }}% of connected threads are running, please clean up the sleeping threads"
    - alert: Mysql_innodb_Cache_insufficient
      expr: (mysql_global_status_innodb_page_size * on (instance) mysql_global_status_buffer_pool_pages{state="data"} + on (instance) mysql_global_variables_innodb_log_buffer_size + on (instance) mysql_global_variables_innodb_additional_mem_pool_size + on (instance) mysql_global_status_innodb_mem_dictionary + on (instance) mysql_global_variables_key_buffer_size + on (instance) mysql_global_variables_query_cache_size + on (instance) mysql_global_status_innodb_mem_adaptive_hash ) / on (instance) mysql_global_variables_innodb_buffer_pool_size * 100 > 80
      for: 2m
      labels:
        status: warning
      annotations:
        summary: "{{$labels.instance}}: Mysql_innodb_Cache_insufficient detected"
        description: "{{$labels.instance}}: MySQL InnoDB cache usage is above 80% (current value: {{ $value }})"

Explanation of the MySQL rules above (reference expressions; some thresholds differ from the rules above)

metric expr:
# Instance uptime in seconds; alert if the instance restarted within the last 3 minutes
- mysql_global_status_uptime < 180
# Queries per second
- rate(mysql_global_status_questions[5m]) > 500
# Connection rate
- rate(mysql_global_status_connections[5m]) > 200
# MySQL receive rate in Mbps (bytes/s * 8 / 1024 / 1024)
- rate(mysql_global_status_bytes_received[3m]) * 8 / 1024 / 1024 > 50
# MySQL send rate in Mbps
- rate(mysql_global_status_bytes_sent[3m]) * 8 / 1024 / 1024 > 100
# Slow queries
- rate(mysql_global_status_slow_queries[30m]) > 3
# Deadlocks
- rate(mysql_global_status_innodb_deadlocks[3m]) > 1
# Running threads are less than 30% of connected threads
- mysql_global_status_threads_running / mysql_global_status_threads_connected * 100 < 30
# InnoDB caches occupy more than 80% of the buffer pool size
- (mysql_global_status_innodb_page_size * on (instance) mysql_global_status_buffer_pool_pages{state="data"} + on (instance) mysql_global_variables_innodb_log_buffer_size + on (instance) mysql_global_variables_innodb_additional_mem_pool_size + on (instance) mysql_global_status_innodb_mem_dictionary + on (instance) mysql_global_variables_key_buffer_size + on (instance) mysql_global_variables_query_cache_size + on (instance) mysql_global_status_innodb_mem_adaptive_hash ) / on (instance) mysql_global_variables_innodb_buffer_pool_size * 100 > 80
# Write operation rate (insert, update, delete), for reference
sum(rate(mysql_global_status_commands_total{command=~"insert|update|delete",job=~".*mysql"}[5m])) without (command)
# Receive rate in KB/s
round(rate(mysql_global_status_bytes_received[5m]) / 1024 * 100) / 100 > 102400
# Send rate in KB/s
round(rate(mysql_global_status_bytes_sent[5m]) / 1024 * 100) / 100 > 102400

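Install Redis monitoring

Deploy redis-exporter by creating redis-exporter.yaml. I looked at the helm chart's YAML, but it has too many variables and I could not follow its documentation. Running helm install directly does work, but packaging a chart is a hassle, and as a beginner I prefer hard-coded manifests. The manifest below is modeled on the mysqld-exporter one above and on this Kafka monitoring article: https://www.tqwba.com/x_d/jishu/359387.html

Create and apply redis-exporter.yaml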

apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis-exporter
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis-exporter
  template:
    metadata:
      labels:
        app: redis-exporter
    spec:
      containers:
      - name: redis-exporter
        image: oliver006/redis_exporter:v1.3.4
        args: ["-redis.addr=redis://ip:6379","-redis.password=<password>"]
        ports:
        - containerPort: 9121
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: redis-exporter
  namespace: monitoring
  labels:
    app: redis-exporter
spec:
  type: ClusterIP
  ports:
  - port: 9121
    protocol: TCP
    name: http-redis
  selector:
    app: redis-exporter

This YAML looks a lot like mysqld-exporter.yaml, and that is because I copied its structure. A tool that generates Kubernetes YAML would be even better: when I wrote the file by hand I accidentally introduced a formatting error.

Deploy the scrape configuration: create redis-exportercaiji.yaml

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    k8s-app: redis-exporter
  name: redis-exporter
  namespace: monitoring
spec:
  endpoints:
  - interval: 30s
    port: http-redis
    relabelings:
    - sourceLabels:
      - __meta_kubernetes_service_name
      targetLabel: service_name
  jobLabel: redis-exporter
  namespaceSelector:
    matchNames:
    - monitoring
  selector:
    matchLabels:
      app: redis-exporter

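curl http://ip:9121/metrics (no NodePort is exposed, so this only works from inside the cluster)

Import dashboard 11835.

If redis_memory_max_bytes is 0, some panels show N/A. Some people work around this by editing the value in the panel.

I recommend changing the Redis configuration instead: set maxmemory (8096mb in my case) to roughly 60% to 80% of the host's memory. I have had a developer's infinite loop and overly long fields exhaust a host's memory, and crypto-mining malware can do the same. You can also choose an eviction policy that removes the oldest data once the limit is exceeded, or pick another policy.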
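The 60% to 80% guideline is easy to compute from the host's total memory. This is a minimal sketch using integer shell arithmetic; the function name `suggest_maxmemory_mb` is made up for illustration, and it expects the total in kB as reported by the MemTotal line of /proc/meminfo on Linux.

```shell
# Suggest a Redis maxmemory value in MB as a percentage of total host memory.
# Hypothetical helper; arguments: <total memory in kB> [percent, default 60]
suggest_maxmemory_mb() {
  total_kb="$1"; percent="${2:-60}"
  # kB * percent / 100 / 1024, folded into one integer division (rounds down)
  echo $(( total_kb * percent / 102400 ))
}

# Usage on Linux:
#   suggest_maxmemory_mb "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)" 80
```

For a host with 16 GiB of memory (16777216 kB) and 60%, this gives 9830, which would be written as `maxmemory 9830mb` in redis.conf.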

vi /etc/redis.conf

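# In short... if you have slaves attached it is suggested that you set a lower
# limit for maxmemory so that there is some free RAM on the system for slave
# output buffers (but this is not needed if the policy is 'noeviction').
#
# maxmemory <bytes>
maxmemory 8096mb

Redis alert rules: append them after the MySQL rules above, or create a new file following the format of the host rules. The steps are the same.

I think the first alert below is not strictly necessary: once the exporter has been added as a target, the host-status rule will also alert.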

    - alert: RedisDown
      expr: redis_up == 0
      for: 5m
      labels:
        status: error
      annotations:
        summary: "Redis down (instance {{ $labels.instance }})"
        description: "Redis instance is down\n  VALUE = {{ $value }}\n  LABELS: {{ $labels }}"
    - alert: ReplicationBroken
      expr: delta(redis_connected_slaves[1m]) < 0
      for: 5m
      labels:
        status: error
      annotations:
        summary: "Replication broken (instance {{ $labels.instance }})"
        description: "Redis instance lost a slave\n  VALUE = {{ $value }}\n  LABELS: {{ $labels }}"
    - alert: TooManyConnections
      expr: redis_connected_clients > 1000
      for: 5m
      labels:
        status: warning
      annotations:
        summary: "Too many connections (instance {{ $labels.instance }})"
        description: "Redis instance has too many connections\n  VALUE = {{ $value }}\n  LABELS: {{ $labels }}"
    - alert: RejectedConnections
      expr: increase(redis_rejected_connections_total[1m]) > 0
      for: 5m
      labels:
        status: error
      annotations:
        summary: "Rejected connections (instance {{ $labels.instance }})"
        description: "Some connections to Redis have been rejected\n  VALUE = {{ $value }}\n  LABELS: {{ $labels }}"

Monitoring domains with blackbox-exporter

vi blackbox-additional.yaml

kind: Probe
apiVersion: monitoring.coreos.com/v1
metadata:
  name: example-com-website
  namespace: monitoring
spec:
  interval: 60s
  module: http_2xx  # which blackbox module (protocol check) to use
  prober:
    url: blackbox-exporter.monitoring.svc.cluster.local:19115
  targets:
    staticConfig:
      static:
      - https://www.baidu.com
      - https://www.qq.com

kubectl apply -f blackbox-additional.yaml

For alert rules, search online.

Dashboards to choose from: 14928, 7587, 14603

Finally, the Prometheus targets page is at http://ip:30090/targets. The Grafana dashboards were already covered above, so I will not show them again.
