Classic deep learning paper "Deep Learning": a must-read for beginners! (deep learning, Yann LeCun)

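Deep learning

Yann LeCun, Yoshua Bengio & Geoffrey Hinton

Deep learning allows computational models that are composed of multiple processing layers to learn representations of data with multiple levels of abstraction. These methods have dramatically improved the state of the art in speech recognition, visual object recognition, object detection and many other domains such as drug discovery and genomics. Deep learning discovers intricate structure in large data sets by using the backpropagation algorithm to indicate how a machine should change the internal parameters that are used to compute the representation in each layer from the representation in the previous layer. Deep convolutional nets have brought about breakthroughs in processing images, video and speech, whereas recurrent nets have shone light on sequential data such as text and speech.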

Machine-learning technology powers many aspects of modern society: from web searches to content filtering on social networks to recommendations on e-commerce websites, and it is increasingly present in consumer products such as cameras and smartphones. Machine-learning systems are used to identify objects in images, transcribe speech into text, match news items, posts or products with users' interests, and select relevant results of search. Increasingly, these applications make use of a class of techniques called deep learning.

Conventional machine-learning techniques were limited in their ability to process natural data in their raw form. For decades, constructing a pattern-recognition or machine-learning system required careful engineering and considerable domain expertise to design a feature extractor that transformed the raw data (such as the pixel values of an image) into a suitable internal representation or feature vector from which the learning subsystem, often a classifier, could detect or classify patterns in the input.

Representation learning is a set of methods that allows a machine to be fed with raw data and to automatically discover the representations needed for detection or classification. Deep-learning methods are representation-learning methods with multiple levels of representation, obtained by composing simple but non-linear modules that each transform the representation at one level (starting with the raw input) into a representation at a higher, slightly more abstract level. With the composition of enough such transformations, very complex functions can be learned. For classification tasks, higher layers of representation amplify aspects of the input that are important for discrimination and suppress irrelevant variations. An image, for example, comes in the form of an array of pixel values, and the learned features in the first layer of representation typically represent the presence or absence of edges at particular orientations and locations in the image. The second layer typically detects motifs by spotting particular arrangements of edges, regardless of small variations in the edge positions. The third layer may assemble motifs into larger combinations that correspond to parts of familiar objects, and subsequent layers would detect objects as combinations of these parts. The key aspect of deep learning is that these layers of features are not designed by human engineers: they are learned from data using a general-purpose learning procedure.

Deep learning is making major advances in solving problems that have resisted the best attempts of the artificial intelligence community for many years. It has turned out to be very good at discovering intricate structures in high-dimensional data and is therefore applicable to many domains of science, business and government. In addition to beating records in image recognition1–4 and speech recognition5–7, it has beaten other machine-learning techniques at predicting the activity of potential drug molecules8, analysing particle accelerator data9,10, reconstructing brain circuits11, and predicting the effects of mutations in non-coding DNA on gene expression and disease12,13. Perhaps more surprisingly, deep learning has produced extremely promising results for various tasks in natural language understanding14, particularly topic classification, sentiment analysis, question answering15 and language translation16,17.

We think that deep learning will have many more successes in the near future because it requires very little engineering by hand, so it can easily take advantage of increases in the amount of available computation and data. New learning algorithms and architectures that are currently being developed for deep neural networks will only accelerate this progress.

Supervised learning

The most common form of machine learning, deep or not, is supervised learning. Imagine that we want to build a system that can classify images as containing, say, a house, a car, a person or a pet. We first collect a large data set of images of houses, cars, people and pets, each labelled with its category. During training, the machine is shown an image and produces an output in the form of a vector of scores, one for each category. We want the desired category to have the highest score of all categories, but this is unlikely to happen before training. We compute an objective function that measures the error (or distance) between the output scores and the desired pattern of scores. The machine then modifies its internal adjustable parameters to reduce this error. These adjustable parameters, often called weights, are real numbers that can be seen as ‘knobs’ that define the input–output function of the machine. In a typical deep-learning system, there may be hundreds of millions of these adjustable weights, and hundreds of millions of labelled examples with which to train the machine.
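The following minimal Python sketch makes the score vector and the objective function concrete. Everything in it is hypothetical: a linear scoring machine with made-up weights and features. The objective is half the squared error against a desired pattern of 1 for the correct category and 0 elsewhere. That is an assumed choice, since the paragraph does not fix one, though it has the same form as the cost in Figure 1d.

```python
# Hypothetical toy setup: 4 categories and a linear scoring machine over 3 input
# features. Every number below is made up for illustration.
CATEGORIES = ["house", "car", "person", "pet"]

def scores(weights, x):
    """Return one score per category: the dot product of that category's weight row with x."""
    return [sum(w_i * x_i for w_i, x_i in zip(row, x)) for row in weights]

def objective(output_scores, label_index):
    """Half the squared distance between the output scores and the desired
    pattern (1.0 for the correct category, 0.0 for all others)."""
    desired = [1.0 if k == label_index else 0.0 for k in range(len(output_scores))]
    return 0.5 * sum((s - d) ** 2 for s, d in zip(output_scores, desired))

weights = [[0.1, -0.2, 0.0],   # adjustable parameters ("knobs"), one row per category
           [0.3, 0.1, -0.1],
           [0.0, 0.2, 0.2],
           [-0.1, 0.0, 0.1]]
x = [1.0, 0.5, 2.0]            # made-up feature vector for one training image
label = CATEGORIES.index("pet")

s = scores(weights, x)
predicted = CATEGORIES[s.index(max(s))]
print(predicted, objective(s, label))
```

With these untrained weights the highest score goes to "person" rather than the desired "pet". Training would adjust `weights` to lower the objective.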

To properly adjust the weight vector, the learning algorithm computes a gradient vector that, for each weight, indicates by what amount the error would increase or decrease if the weight were increased by a tiny amount. The weight vector is then adjusted in the opposite direction to the gradient vector.
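This definition of the gradient can be checked numerically. Nudge each weight by a tiny amount, measure how much the error changes, then step against the resulting gradient vector. The sketch below is illustrative only: the two-weight function `error` and the learning rate are assumptions. Real deep-learning systems compute the gradient with backpropagation, not finite differences.

```python
def error(w):
    """Hypothetical error as a function of two weights (a bowl with its minimum at (3, -1))."""
    return (w[0] - 3.0) ** 2 + 2.0 * (w[1] + 1.0) ** 2

def numerical_gradient(f, w, eps=1e-6):
    """For each weight, estimate how much f changes per unit increase of that weight,
    using a tiny nudge eps in both directions (central difference)."""
    grad = []
    for i in range(len(w)):
        up, down = list(w), list(w)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad

w = [0.0, 0.0]
learning_rate = 0.1                                         # assumed step size
g = numerical_gradient(error, w)                            # approximately [-6.0, 4.0]
w_new = [wi - learning_rate * gi for wi, gi in zip(w, g)]   # move against the gradient
print(error(w), error(w_new))                               # 11.0, then about 6.48
```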

The objective function, averaged over all the training examples, can be seen as a kind of hilly landscape in the high-dimensional space of weight values. The negative gradient vector indicates the direction of steepest descent in this landscape, taking it closer to a minimum, where the output error is low on average.

In practice, most practitioners use a procedure called stochastic gradient descent (SGD). This consists of showing the input vector for a few examples, computing the outputs and the errors, computing the average gradient for those examples, and adjusting the weights accordingly. The process is repeated for many small sets of examples from the training set until the average of the objective function stops decreasing. It is called stochastic because each small set of examples gives a noisy estimate of the average gradient over all examples. This simple procedure usually finds a good set of weights surprisingly quickly when compared with far more elaborate optimization techniques18. After training, the performance of the system is measured on a different set of examples called a test set. This serves to test the generalization ability of the machine — its ability to produce sensible answers on new inputs that it has never seen during training.
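Here is a minimal sketch of the SGD procedure described above. It assumes a one-feature linear model with squared error as a stand-in for a deep network. The dataset, batch size, learning rate and epoch count are all made up. A fixed number of passes replaces the "until the average of the objective function stops decreasing" stopping rule. The held-out `test` points play the role of the test set.

```python
import random

def sgd_fit_line(train, lr=0.05, batch_size=4, epochs=200, seed=0):
    """Fit y ~ w*x + b by minibatch stochastic gradient descent on the
    squared error 0.5*(w*x + b - y)**2."""
    rng = random.Random(seed)
    data = list(train)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Average gradient over this small set of examples: a noisy
            # estimate of the average gradient over the whole training set.
            grad_w = sum((w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum((w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Made-up training data drawn from y = 2x + 1 with a little Gaussian noise.
noise = random.Random(42)
train = [(x / 10, 2 * (x / 10) + 1 + noise.gauss(0, 0.05)) for x in range(-20, 21)]
test = [(0.05, 1.1), (0.55, 2.1), (1.35, 3.7)]   # inputs never seen during training
w, b = sgd_fit_line(train)
test_mse = sum((w * x + b - y) ** 2 for x, y in test) / len(test)
print(w, b, test_mse)
```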

Many of the current practical applications of machine learning use linear classifiers on top of hand-engineered features. A two-class linear classifier computes a weighted sum of the feature vector components. If the weighted sum is above a threshold, the input is classified as belonging to a particular category.
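The two-class linear classifier fits in a few lines. The feature values, weights and threshold below are hypothetical, chosen only to show both outcomes.

```python
def linear_classify(features, weights, threshold):
    """Two-class linear classifier: return 1 (the particular category) if the
    weighted sum of the feature-vector components is above the threshold, else 0."""
    weighted_sum = sum(w * f for w, f in zip(weights, features))
    return 1 if weighted_sum > threshold else 0

# Hypothetical hand-engineered features and hand-chosen weights, for illustration only.
weights, threshold = [0.5, -1.0, 2.0], 0.3
print(linear_classify([1.0, 0.0, 0.0], weights, threshold))   # weighted sum 0.5 > 0.3 -> 1
print(linear_classify([0.0, 1.0, 0.0], weights, threshold))   # weighted sum -1.0 -> 0
```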

Since the 1960s we have known that linear classifiers can only carve their input space into very simple regions, namely half-spaces separated by a hyperplane19. But problems such as image and speech recognition require the input–output function to be insensitive to irrelevant variations of the input, such as variations in position, orientation or illumination of an object, or variations in the pitch or accent of speech, while being very sensitive to particular minute variations (for example, the difference between a white wolf and a breed of wolf-like white dog called a Samoyed). At the pixel level, images of two Samoyeds in different poses and in different environments may be very different from each other, whereas two images of a Samoyed and a wolf in the same position and on similar backgrounds may be very similar to each other. A linear classifier, or any other 'shallow' classifier operating on raw pixels could not possibly distinguish the latter two, while putting the former two in the same category. This is why shallow classifiers require a good feature extractor that solves the selectivity–invariance dilemma: one that produces representations that are selective to the aspects of the image that are important for discrimination, but that are invariant to irrelevant aspects such as the pose of the animal. To make classifiers more powerful, one can use generic non-linear features, as with kernel methods20, but generic features such as those arising with the Gaussian kernel do not allow the learner to generalize well far from the training examples21. The conventional option is to hand design good feature extractors, which requires a considerable amount of engineering skill and domain expertise. But this can all be avoided if good features can be learned automatically using a general-purpose learning procedure. This is the key advantage of deep learning.
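XOR is a classic toy illustration of the half-space limitation, not an example from the paper. No single weighted sum plus threshold over the raw inputs labels all four XOR points correctly. One hand-designed non-linear feature, the product of the two inputs, makes the classes linearly separable. The grid search below only spot-checks a finite set of weights; the general impossibility is a standard result. The feature and weights are assumptions chosen for illustration.

```python
import itertools

# The four XOR points: the label is 1 when exactly one input is 1.
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def classify(features, weights, threshold):
    return 1 if sum(w * f for w, f in zip(weights, features)) > threshold else 0

def separates(dataset, feature_map, weights, threshold):
    """True if this linear classifier labels every example correctly."""
    return all(classify(feature_map(x), weights, threshold) == y for x, y in dataset)

def raw_inputs(x):
    return x                          # the classifier sees the raw inputs only

def with_product_feature(x):
    return (x[0], x[1], x[0] * x[1])  # a hand-designed non-linear feature

grid = [v / 2 for v in range(-4, 5)]  # candidate values -2.0, -1.5, ..., 2.0
found_on_raw = any(separates(XOR, raw_inputs, (w1, w2), t)
                   for w1, w2, t in itertools.product(grid, repeat=3))
found_with_feature = separates(XOR, with_product_feature, (1.0, 1.0, -2.0), 0.5)
print(found_on_raw, found_with_feature)   # False True
```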

![Figure 1](https://img-blog.csdnimg.cn/20200804103756777.jpg?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L2RpYW9rdWkyMzEy,size_16,color_FFFFFF,t_70#pic_center)

![Figure 1](https://img-blog.csdnimg.cn/20200804103822527.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L2RpYW9rdWkyMzEy,size_16,color_FFFFFF,t_70#pic_center)

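Figure 1: Multilayer neural networks and backpropagation

a. A multilayer neural network (shown by the connected dots) can distort the input space to make the classes of data (examples of which are on the red and blue lines) linearly separable. Note how a regular grid in input space (left) is also transformed by the hidden units (middle). This is an illustrative example with only two input units, two hidden units and one output unit, but the networks used for object recognition or natural language processing contain tens or hundreds of thousands of units.

b. The chain rule of derivatives tells us how two small effects (that of a small change of $x$ on $y$, and that of $y$ on $z$) are composed. A small change $\Delta x$ in $x$ is first transformed into a small change $\Delta y$ in $y$ by being multiplied by $\partial y/\partial x$ (that is, the definition of the partial derivative). Similarly, the change $\Delta y$ creates a change $\Delta z$ in $z$. Substituting one equation into the other gives the chain rule: $\Delta x$ is turned into $\Delta z$ by multiplication by the product of $\partial y/\partial x$ and $\partial z/\partial y$. The same holds when $x$, $y$ and $z$ are vectors (and the derivatives are Jacobian matrices).

c. The equations used for computing the forward pass in a neural network with two hidden layers and one output layer, each constituting a module through which gradients can be backpropagated. At each layer, we first compute the total input $z$ to each unit, which is a weighted sum of the outputs of the units in the layer below. Then a non-linear function $f(\cdot)$ is applied to $z$ to get the output of the unit. For simplicity, bias terms are omitted. The non-linear functions used in neural networks include the rectified linear unit (ReLU) $f(z) = \max(z, 0)$, widely used in recent years, as well as the more conventional sigmoids, such as the hyperbolic tangent $f(z) = (\exp(z) - \exp(-z))/(\exp(z) + \exp(-z))$ and the logistic function $f(z) = 1/(1 + \exp(-z))$.

d. The equations used for computing the backward pass. At each hidden layer, we compute the error derivative with respect to the output of each unit, which is a weighted sum of the error derivatives with respect to the total inputs to the units in the layer above. We then convert the error derivative with respect to the output into the error derivative with respect to the input by multiplying it by the gradient of $f(z)$. At the output layer, the error derivative with respect to the output of a unit is computed by differentiating the cost function. This gives $y_l - t_l$ if the cost function for unit $l$ is $0.5(y_l - t_l)^2$, where $t_l$ is the target value. Once $\partial E/\partial z_k$ is known, the error derivative for the weight $w_{jk}$ on the connection from unit $j$ in the layer below is just $y_j \, \partial E/\partial z_k$.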
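The equations for panels b to d can be written out explicitly. This is a reconstruction from the caption's wording, because the equations themselves appear only in the figure image. The index convention is an assumption consistent with the caption's $w_{jk}$, $y_j$, $z_k$ and $y_l$: $i$ indexes inputs, $j$ and $k$ index the two hidden layers, $l$ indexes outputs, and $w_{ij}$ is the weight from unit $i$ to unit $j$.

$$
\begin{aligned}
\Delta z &= \frac{\partial z}{\partial y}\,\Delta y = \frac{\partial z}{\partial y}\,\frac{\partial y}{\partial x}\,\Delta x \\[4pt]
z_j &= \sum_i w_{ij}\,x_i, & y_j &= f(z_j) \\
z_k &= \sum_j w_{jk}\,y_j, & y_k &= f(z_k) \\
z_l &= \sum_k w_{kl}\,y_k, & y_l &= f(z_l) \\[4pt]
\frac{\partial E}{\partial y_l} &= y_l - t_l \quad \text{for } E = \sum_l 0.5\,(y_l - t_l)^2, & \frac{\partial E}{\partial z_l} &= \frac{\partial E}{\partial y_l}\,f'(z_l) \\
\frac{\partial E}{\partial y_k} &= \sum_l w_{kl}\,\frac{\partial E}{\partial z_l}, & \frac{\partial E}{\partial z_k} &= \frac{\partial E}{\partial y_k}\,f'(z_k) \\
\frac{\partial E}{\partial y_j} &= \sum_k w_{jk}\,\frac{\partial E}{\partial z_k}, & \frac{\partial E}{\partial z_j} &= \frac{\partial E}{\partial y_j}\,f'(z_j) \\[4pt]
\frac{\partial E}{\partial w_{jk}} &= y_j\,\frac{\partial E}{\partial z_k}
\end{aligned}
$$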

![Figure 2](https://img-blog.csdnimg.cn/20200804103905286.png#pic_center)

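Figure 2: Inside a convolutional network. The outputs (not the filters) of each layer of a typical convolutional network architecture applied to the image of a Samoyed dog. Each rectangular image is a feature map corresponding to the output for one of the learned features, detected at each of the image positions. Information flows bottom up, with lower-level features acting as oriented edge detectors, and a score is computed for each image class at the output. ReLU: rectified linear unit.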

A deep-learning architecture is a multilayer stack of simple modules, all (or most) of which are subject to learning, and many of which compute non-linear input–output mappings. Each module in the stack transforms its input to increase both the selectivity and the invariance of the representation. With multiple non-linear layers, say a depth of 5 to 20, a system can implement extremely intricate functions of its inputs that are simultaneously sensitive to minute details — distinguishing Samoyeds from white wolves — and insensitive to large irrelevant variations such as the background, pose, lighting and surrounding objects.

Backpropagation to train multilayer architectures

From the earliest days of pattern recognition22,23, the aim of researchers has been to replace hand-engineered features with trainable multilayer networks, but despite its simplicity, the solution was not widely understood until the mid 1980s. As it turns out, multilayer architectures can be trained by simple stochastic gradient descent. As long as the modules are relatively smooth functions of their inputs and of their internal weights, one can compute gradients using the backpropagation procedure. The idea that this could be done, and that it worked, was discovered independently by several different groups during the 1970s and 1980s24-27.

The backpropagation procedure to compute the gradient of an objective function with respect to the weights of a multilayer stack of modules is nothing more than a practical application of the chain rule for derivatives. The key insight is that the derivative (or gradient) of the objective with respect to the input of a module can be computed by working backwards from the gradient with respect to the output of that module (or the input of the subsequent module) (Fig. 1). The backpropagation equation can be applied repeatedly to propagate gradients through all modules, starting from the output at the top (where the network produces its prediction) all the way to the bottom (where the external input is fed). Once these gradients have been computed, it is straightforward to compute the gradients with respect to the weights of each module.
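The sketch below applies the chain-rule recipe by hand to a made-up network: 2 inputs, 2 tanh hidden units and 1 linear output. The sizes, weights, linear output unit and squared-error cost are all assumptions. It then checks every backpropagated derivative against a slow finite-difference estimate.

```python
import math

def forward(W, v, x):
    """W[i][j]: weight from input i to hidden unit j; v[j]: weight from hidden unit j to the output."""
    z_hidden = [sum(W[i][j] * x[i] for i in range(len(x))) for j in range(len(v))]
    y_hidden = [math.tanh(z) for z in z_hidden]
    y_out = sum(v[j] * y_hidden[j] for j in range(len(v)))   # linear output unit
    return y_hidden, y_out

def loss(W, v, x, target):
    return 0.5 * (forward(W, v, x)[1] - target) ** 2

def backprop(W, v, x, target):
    """Work backwards from dE/d(output) to the gradient for every weight."""
    y_hidden, y_out = forward(W, v, x)
    dE_dout = y_out - target                                  # derivative of 0.5*(y - t)^2
    dE_dv = [y_hidden[j] * dE_dout for j in range(len(v))]
    dE_dyh = [v[j] * dE_dout for j in range(len(v))]          # w.r.t. hidden outputs
    # tanh'(z) = 1 - tanh(z)^2 converts dE/dy_j into dE/dz_j
    dE_dzh = [dE_dyh[j] * (1.0 - y_hidden[j] ** 2) for j in range(len(v))]
    dE_dW = [[x[i] * dE_dzh[j] for j in range(len(v))] for i in range(len(x))]
    return dE_dW, dE_dv

def finite_difference(W, v, x, target, eps=1e-6):
    """Slow reference: nudge each weight up and down and measure the change in the loss."""
    dW = [[0.0] * len(v) for _ in x]
    for i in range(len(x)):
        for j in range(len(v)):
            W_up = [row[:] for row in W]
            W_down = [row[:] for row in W]
            W_up[i][j] += eps
            W_down[i][j] -= eps
            dW[i][j] = (loss(W_up, v, x, target) - loss(W_down, v, x, target)) / (2 * eps)
    dv = []
    for j in range(len(v)):
        v_up, v_down = v[:], v[:]
        v_up[j] += eps
        v_down[j] -= eps
        dv.append((loss(W, v_up, x, target) - loss(W, v_down, x, target)) / (2 * eps))
    return dW, dv

W = [[0.5, -0.3], [0.2, 0.8]]   # made-up weights
v = [1.0, -0.7]
x, target = [1.0, -2.0], 0.5
analytic = backprop(W, v, x, target)
numeric = finite_difference(W, v, x, target)
print(analytic)
print(numeric)
```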

Many applications of deep learning use feedforward neural network architectures (Fig. 1), which learn to map a fixed-size input (for example, an image) to a fixed-size output (for example, a probability for each of several categories). To go from one layer to the next, a set of units compute a weighted sum of their inputs from the previous layer and pass the result through a non-linear function. At present, the most popular non-linear function is the rectified linear unit (ReLU), which is simply the half-wave rectifier f(z) = max(z, 0). In past decades, neural nets used smoother non-linearities, such as tanh(z) or 1/(1 + exp(-z)), but the ReLU typically learns much faster in networks with many layers, allowing training of a deep supervised network without unsupervised pre-training28. Units that are not in the input or output layer are conventionally called hidden units. The hidden layers can be seen as distorting the input in a non-linear way so that categories become linearly separable by the last layer (Fig. 1).
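The three non-linearities named above can be written directly from their formulas. A single hidden layer of ReLU units is enough to show the "distortion" idea. The weights below are hand-set for illustration, not learned, and they use biases, which Figure 1 omits. They map the four XOR points so that a linear threshold on the hidden layer separates them, which no threshold on the raw inputs can do.

```python
import math

def relu(z):
    return max(z, 0.0)                       # half-wave rectifier

def tanh(z):
    # Textbook formula; math.tanh is the numerically safer choice for large |z|.
    return (math.exp(z) - math.exp(-z)) / (math.exp(z) + math.exp(-z))

def logistic(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weights, biases, f):
    """Each unit takes a weighted sum of the previous layer's outputs (plus a bias)
    and passes it through the non-linearity f."""
    return [f(sum(w * x for w, x in zip(row, inputs)) + b) for row, b in zip(weights, biases)]

# Hand-set weights (hypothetical, not learned) for two ReLU hidden units.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]

def xor_net(x):
    h = layer(x, HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    score = h[0] - 2.0 * h[1]                # purely linear read-out of the hidden layer
    return 1 if score > 0.5 else 0

print([xor_net(p) for p in [(0, 0), (0, 1), (1, 0), (1, 1)]])   # [0, 1, 1, 0]
```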

In the late 1990s, neural nets and backpropagation were largely forsaken by the machine-learning community and ignored by the computer-vision and speech-recognition communities. It was widely thought that learning useful, multistage, feature extractors with little prior knowledge was infeasible. In particular, it was commonly thought that simple gradient descent would get trapped in poor local minima — weight configurations for which no small change would reduce the average error.

In practice, poor local minima are rarely a problem with large networks. Regardless of the initial conditions, the system nearly always reaches solutions of very similar quality. Recent theoretical and empirical results strongly suggest that local minima are not a serious issue in general. Instead, the landscape is packed with a combinatorially large number of saddle points where the gradient is zero, and the surface curves up in most dimensions and curves down in the remainder29,30. The analysis seems to show that saddle points with only a few downward curving directions are present in very large numbers, but almost all of them have very similar values of the objective function. Hence, it does not much matter which of these saddle points the algorithm gets stuck at.
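A two-weight toy objective shows what a saddle point is; it is an assumption for illustration and far from a real network's landscape. At the origin of $E(w_1, w_2) = w_1^2 - w_2^2$ the gradient is zero, yet the surface curves up along one direction and down along the other. The sketch estimates the Hessian by finite differences. It reads the curvature directions off the signs of the Hessian's eigenvalues, using the closed form for a symmetric 2×2 matrix.

```python
import math

def E(w):
    return w[0] ** 2 - w[1] ** 2              # hypothetical objective with a saddle at (0, 0)

def gradient(f, w, eps=1e-5):
    g = []
    for i in range(len(w)):
        up, down = list(w), list(w)
        up[i] += eps
        down[i] -= eps
        g.append((f(up) - f(down)) / (2 * eps))
    return g

def hessian(f, w, eps=1e-4):
    """Second derivatives by central differences."""
    n = len(w)

    def shifted(i, di, j, dj):
        p = list(w)
        p[i] += di
        p[j] += dj
        return f(p)

    return [[(shifted(i, eps, j, eps) - shifted(i, eps, j, -eps)
              - shifted(i, -eps, j, eps) + shifted(i, -eps, j, -eps)) / (4 * eps * eps)
             for j in range(n)] for i in range(n)]

def eigenvalues_2x2_symmetric(H):
    mean = (H[0][0] + H[1][1]) / 2
    radius = math.sqrt(((H[0][0] - H[1][1]) / 2) ** 2 + H[0][1] ** 2)
    return mean - radius, mean + radius

origin = [0.0, 0.0]
# Zero gradient, but one negative (curves down) and one positive (curves up) eigenvalue.
print(gradient(E, origin), eigenvalues_2x2_symmetric(hessian(E, origin)))
```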

Interest in deep feedforward networks was revived around 2006 (refs 31-34) by a group of researchers brought together by the Canadian Institute for Advanced Research (CIFAR). The researchers introduced unsupervised learning procedures that could create layers of feature detectors without requiring labelled data. The objective in learning each layer of feature detectors was to be able to reconstruct or model the activities of feature detectors (or raw inputs) in the layer below. By 'pre-training' several layers of progressively more complex feature detectors using this reconstruction objective, the weights of a deep network could be initialized to sensible values. A final layer of output units could then be added to the top of the network and the whole deep system could be fine-tuned using standard backpropagation33-35. This worked remarkably well for recognizing handwritten digits or for detecting pedestrians, especially when the amount of labelled data was very limited36.
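Here is a minimal sketch of one greedy pre-training step. It assumes a tied-weight linear autoencoder trained by per-example gradient descent. The methods cited in this paragraph are richer (for example, restricted Boltzmann machines), and the data, sizes and learning rate here are made up. The layer learns feature detectors from unlabelled points purely by reconstructing its input. In the full procedure the learned `W` would initialize the first layer, an output layer would be added, and the whole stack would be fine-tuned with backpropagation; this sketch does not show those steps.

```python
import random

def encode(W, x):
    """Code h = W x (one row of W per feature detector)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def decode(W, h):
    """Reconstruction x_hat = W^T h (tied weights: the decoder reuses W)."""
    return [sum(W[b][a] * h[b] for b in range(len(W))) for a in range(len(W[0]))]

def reconstruction_error(W, data):
    total = 0.0
    for x in data:
        x_hat = decode(W, encode(W, x))
        total += 0.5 * sum((xh - xi) ** 2 for xh, xi in zip(x_hat, x))
    return total / len(data)

def pretrain_layer(data, n_hidden, lr=0.005, epochs=100, seed=0):
    """Learn one layer of feature detectors from unlabelled data by minimizing
    reconstruction error with per-example gradient descent."""
    rng = random.Random(seed)
    n_in = len(data[0])
    W = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_hidden)]
    for _ in range(epochs):
        for x in data:
            h = encode(W, x)
            r = [xh - xi for xh, xi in zip(decode(W, h), x)]   # reconstruction residual
            Wr = encode(W, r)                                   # residual sent back through W
            for b in range(n_hidden):
                for a in range(n_in):
                    # Gradient of 0.5*|W^T W x - x|^2: decoder term + encoder term.
                    W[b][a] -= lr * (r[a] * h[b] + Wr[b] * x[a])
    return W

# Made-up unlabelled data: 4-D points that actually lie on a 2-D subspace.
rng = random.Random(1)
data = []
for _ in range(50):
    p, q = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    data.append([p, p, q, q])

W = pretrain_layer(data, n_hidden=2)
print(reconstruction_error(W, data))
```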

The first major application of this pre-training approach was in speech recognition, and it was made possible by the advent of fast graphics processing units (GPUs) that were convenient to program37 and allowed researchers to train networks 10 or 20 times faster. In 2009, the approach was used to map short temporal windows of coefficients extracted from a sound wave to a set of probabilities for the various fragments of speech that might be represented by the frame in the centre of the window. It achieved record-breaking results on a standard speech recognition benchmark that used a small vocabulary38 and was quickly developed to give record-breaking results on a large vocabulary task39. By 2012, versions of the deep net from 2009 were being developed by many of the major speech groups6 and were already being deployed in Android phones. For smaller data sets, unsupervised pre-training helps to prevent overfitting40, leading to significantly better generalization when the number of labelled examples is small, or in a transfer setting where we have lots of examples for some ‘source’ tasks but very few for some ‘target’ tasks. Once deep learning had been rehabilitated, it turned out that the pre-training stage was only needed for small data sets.
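The input described here is a short temporal window of coefficients centred on the frame to be classified. It can be built by stacking each frame with its neighbours. In this sketch the window half-width `k`, the edge padding (repeating the first or last frame) and the coefficient values are all assumptions.

```python
def context_windows(frames, k):
    """For each frame t, concatenate frames t-k .. t+k into one input vector;
    the network's target would be the speech fragment at the centre frame t.
    Frames beyond either end are padded by repeating the first/last frame."""
    n = len(frames)
    windows = []
    for t in range(n):
        window = []
        for offset in range(-k, k + 1):
            idx = min(max(t + offset, 0), n - 1)
            window.extend(frames[idx])
        windows.append(window)
    return windows

# Made-up coefficients: 4 frames x 2 coefficients each.
frames = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8]]
windows = context_windows(frames, k=1)
print(windows[0])   # [0.1, 0.2, 0.1, 0.2, 0.3, 0.4]
print(windows[2])   # [0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
```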

There was, however, one particular type of deep, feedforward network that was much easier to train and generalized much better than networks with full connectivity between adjacent layers. This was the convolutional neural network (ConvNet)41,42. It achieved many practical successes during the period when neural networks were out of favour and it has recently been widely adopted by the computer-vision community.

Convolutional neural networks

ConvNets are designed to process data that come in the form of multiple arrays, for example a colour image composed of three 2D arrays containing pixel intensities in the three colour channels. Many data modalities are in the form of multiple arrays: 1D for signals and sequences, including language; 2D for images or audio spectrograms; and 3D for video or volumetric images. There are four key ideas behind ConvNets that take advantage of the properties of natural signals: local connections, shared weights, pooling and the use of many layers.

The architecture of a typical ConvNet (Fig. 2) is structured as a series of stages. The first few stages are composed of two types of layers: convolutional layers and pooling layers. Units in a convolutional layer are organized in feature maps, within which each unit is connected to local patches in the feature maps of the previous layer through a set of weights called a filter bank. The result of this local weighted sum is then passed through a non-linearity such as a ReLU. All units in a feature map share the same filter bank. Different feature maps in a layer use different filter banks. The reason for this architecture is twofold. First, in array data such as images, local groups of values are often highly correlated, forming distinctive local motifs that are easily detected. Second, the local statistics of images and other signals are invariant to location. In other words, if a motif can appear in one part of the image, it could appear anywhere, hence the idea of units at different locations sharing the same weights and detecting the same pattern in different parts of the array. Mathematically, the filtering operation performed by a feature map is a discrete convolution, hence the name.
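A feature map, as described above, is one filter bank slid over every position of the input with the same weights, followed by a ReLU. The sketch uses pure Python with a made-up image and a made-up 1×2 edge filter. Like most deep-learning libraries, it computes the feature map as a cross-correlation (no kernel flip). `convolve_valid` shows that flipping the kernel gives the textbook discrete convolution.

```python
def cross_correlate_valid(image, kernel):
    """Apply the same filter weights at every position where the kernel fits (no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def convolve_valid(image, kernel):
    """Discrete convolution = cross-correlation with the kernel flipped in both axes."""
    flipped = [row[::-1] for row in kernel[::-1]]
    return cross_correlate_valid(image, flipped)

def feature_map(image, kernel):
    """Local weighted sums with shared weights, then a ReLU non-linearity."""
    return [[max(v, 0) for v in row] for row in cross_correlate_valid(image, kernel)]

edge_filter = [[-1, 1]]                   # hypothetical dark-to-bright vertical-edge detector
image = [[0, 0, 1, 1],
         [0, 0, 1, 1]]
shifted = [[0, 1, 1, 1],
           [0, 1, 1, 1]]                  # the same edge one column to the left
print(feature_map(image, edge_filter))    # [[0, 1, 0], [0, 1, 0]]: edge found at column 1
print(feature_map(shifted, edge_filter))  # [[1, 0, 0], [1, 0, 0]]: same detector, column 0
```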

Although the role of the convolutional layer is to detect local conjunctions of features from the previous layer, the role of the pooling layer is to merge semantically similar features into one. Because the relative positions of the features forming a motif can vary somewhat, reliably detecting the motif can be done by coarse-graining the position of each feature. A typical pooling unit computes the maximum of a local patch of units in one feature map (or in a few feature maps). Neighbouring pooling units take input from patches that are shifted by more than one row or column, thereby reducing the dimension of the representation and creating an invariance to small shifts and distortions. Two or three stages of convolution, non-linearity and pooling are stacked, followed by more convolutional and fully-connected layers. Backpropagating gradients through a ConvNet is as simple as through a regular deep network, allowing all the weights in all the filter banks to be trained.
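The example below uses max pooling over 2×2 patches with a stride of 2, so neighbouring pooling units are shifted by two rows or columns. This halves each spatial dimension. It leaves the output unchanged when a feature moves slightly within a pooling region, though a shift across a region boundary would still change the result. The patch size, stride and feature maps are assumptions for illustration.

```python
def max_pool(fmap, size=2, stride=2):
    """Max over size x size patches, moving `stride` rows/columns between neighbouring units."""
    return [[max(fmap[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(fmap[0]) - size + 1, stride)]
            for r in range(0, len(fmap) - size + 1, stride)]

a = [[0, 0, 0, 0],
     [0, 9, 0, 0],
     [0, 0, 0, 3],
     [0, 0, 0, 0]]
b = [[9, 0, 0, 0],          # same features, each moved by one row/column
     [0, 0, 0, 0],
     [0, 0, 0, 0],
     [0, 0, 3, 0]]
print(max_pool(a), max_pool(b))   # both [[9, 0], [0, 3]]
```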

Deep neural networks exploit the property that many natural signals are compositional hierarchies, in which higher-level features are obtained by composing lower-level ones. In images, local combinations of edges form motifs, motifs assemble into parts, and parts form objects. Similar hierarchies exist in speech and text from sounds to phones, phonemes, syllables, words and sentences. The pooling allows representations to vary very little when elements in the previous layer vary in position and appearance.

The convolutional and pooling layers in ConvNets are directly inspired by the classic notions of simple cells and complex cells in visual neuroscience43, and the overall architecture is reminiscent of the LGN–V1–V2–V4–IT hierarchy in the visual cortex ventral pathway44. When ConvNet models and monkeys are shown the same picture, the activations of high-level units in the ConvNet explain half of the variance of random sets of 160 neurons in the monkey's inferotemporal cortex45. ConvNets have their roots in the neocognitron46, the architecture of which was somewhat similar, but did not have an end-to-end supervised-learning algorithm such as backpropagation. A primitive 1D ConvNet called a time-delay neural net was used for the recognition of phonemes and simple words47,48.

There have been numerous applications of convolutional networks going back to the early 1990s, starting with time-delay neural networks for speech recognition47 and document reading42. The document reading system used a ConvNet trained jointly with a probabilistic model that implemented language constraints. By the late 1990s this system was reading over 10% of all the cheques in the United States. A number of ConvNet-based optical character recognition and handwriting recognition systems were later deployed by Microsoft49. ConvNets were also experimented with in the early 1990s for object detection in natural images, including faces and hands50,51, and for face recognition52.

Image understanding with deep convolutional networks

Since the early 2000s, ConvNets have been applied with great success to the detection, segmentation and recognition of objects and regions in images. These were all tasks in which labelled data was relatively abundant, such as traffic sign recognition53, the segmentation of biological images54 particularly for connectomics55, and the detection of faces, text, pedestrians and human bodies in natural images36,50,51,56–58. A major recent practical success of ConvNets is face recognition59.

Importantly, images can be labelled at the pixel level, which will have applications in technology, including autonomous mobile robots and self-driving cars60,61. Companies such as Mobileye and NVIDIA are using such ConvNet-based methods in their upcoming vision systems for cars. Other applications gaining importance involve natural language understanding14 and speech recognition7.

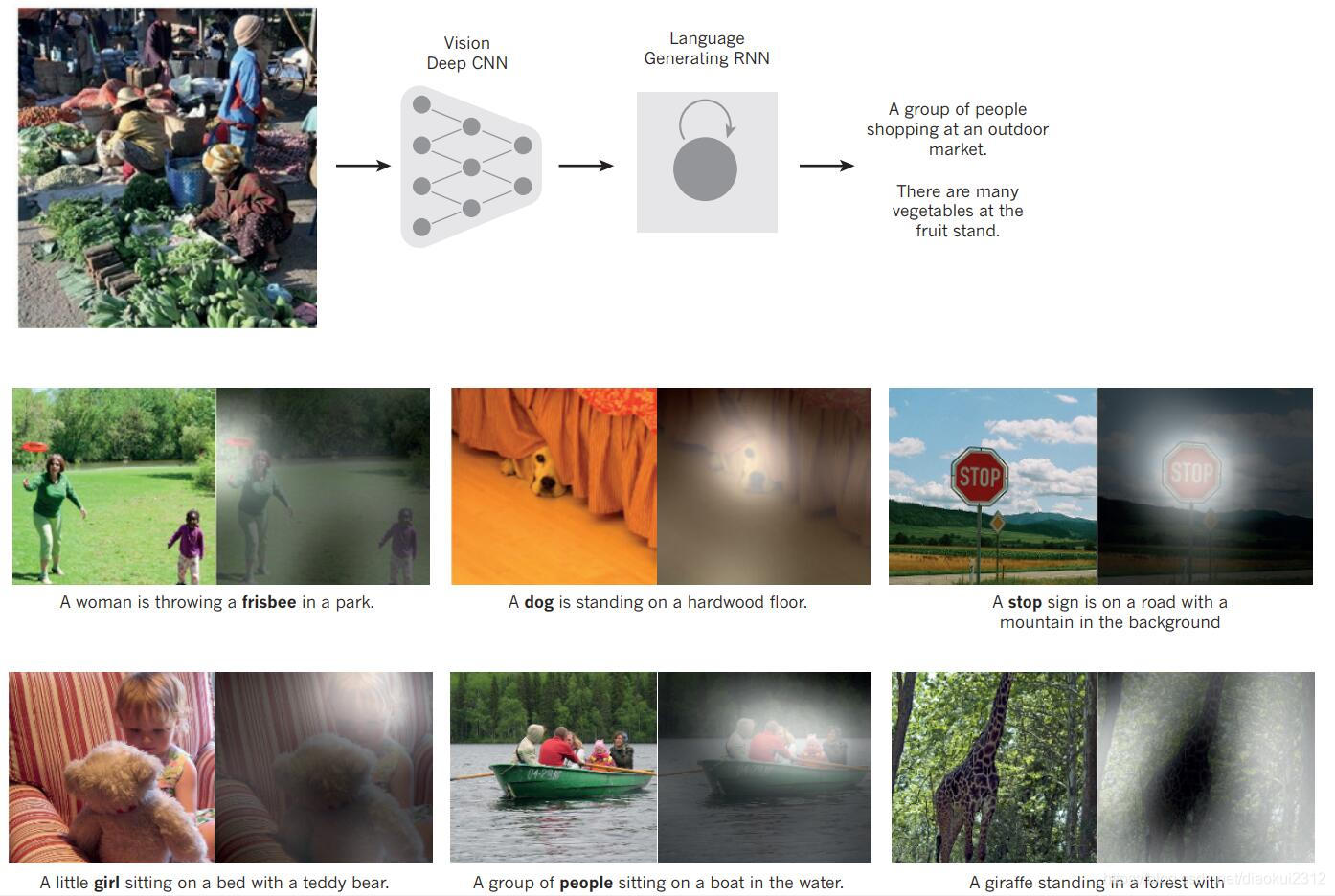

Despite these successes, ConvNets were largely forsaken by the mainstream computer-vision and machine-learning communities until the ImageNet competition in 2012. When deep convolutional networks were applied to a data set of about a million images from the web that contained 1,000 different classes, they achieved spectacular results, almost halving the error rates of the best competing approaches1. This success came from the efficient use of GPUs, ReLUs, a new regularization technique called dropout62, and techniques to generate more training examples by deforming the existing ones. This success has brought about a revolution in computer vision; ConvNets are now the dominant approach for almost all recognition and detection tasks4,58,59,63–65 and approach human performance on some tasks. A recent stunning demonstration combines ConvNets and recurrent net modules for the generation of image captions (Fig. 3).
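Two of the ingredients named above can be sketched in a few lines. The first is dropout, which randomly silences hidden units during training. The second is generating extra training examples by deforming existing ones, shown here with a horizontal flip. The sketch uses the common "inverted dropout" variant, where survivors are rescaled during training so nothing changes at test time. That is an implementation choice and not necessarily the exact formulation of ref. 62. The drop rate and the choice of a flip as the deformation are also assumptions.

```python
import random

def dropout(activations, p_drop, rng, training=True):
    """Inverted dropout: while training, zero each unit with probability p_drop and scale
    the survivors by 1/(1 - p_drop) so the expected activation is unchanged; at test time
    return the activations untouched."""
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def horizontal_flip(image):
    """A simple label-preserving deformation: mirror each row of pixels."""
    return [row[::-1] for row in image]

rng = random.Random(0)
hidden = [1.0] * 10000
dropped = dropout(hidden, p_drop=0.5, rng=rng)
print(sum(dropped) / len(dropped))               # close to 1.0 on average
print(horizontal_flip([[1, 2, 3], [4, 5, 6]]))   # [[3, 2, 1], [6, 5, 4]]
```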

Recent ConvNet architectures have 10 to 20 layers of ReLUs, hundreds of millions of weights, and billions of connections between units. Whereas training such large networks could have taken weeks only two years ago, progress in hardware, software and algorithm parallelization has reduced training times to a few hours.

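Figure 3: From image to text

Captions generated by a recurrent neural network (RNN) that takes, as extra input, the representation extracted by a deep convolutional neural network (CNN) from a test image, with the RNN trained to "translate" high-level representations of images into captions (top). Reproduced from ref. 102. When the RNN is given the ability to focus its attention on a different location in the input image as it generates each word (bold) (middle and bottom; the lighter patches were given more attention), we found that it exploits this to achieve better "translation" of images into captions.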

The performance of ConvNet-based vision systems has caused most major technology companies, including Google, Facebook, Microsoft, IBM, Yahoo!, Twitter and Adobe, as well as a quickly growing number of start-ups to initiate research and development projects and to deploy ConvNet-based image understanding products and services.

ConvNets are easily amenable to efficient hardware implementations in chips or field-programmable gate arrays [66,67]. A number of companies such as NVIDIA, Mobileye, Intel, Qualcomm and Samsung are developing ConvNet chips to enable real-time vision applications in smartphones, cameras, robots and self-driving cars.

Distributed representations and language processing

Deep-learning theory shows that deep nets have two different exponential advantages over classic learning algorithms that do not use distributed representations [21]. Both of these advantages arise from the power of composition and depend on the underlying data-generating distribution having an appropriate componential structure [40]. First, learning distributed representations enables generalization to new combinations of the values of learned features beyond those seen during training (for example, $2^n$ combinations are possible with $n$ binary features) [68,69]. Second, composing layers of representation in a deep net brings the potential for another exponential advantage [70] (exponential in the depth).
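A quick count shows where the first exponential advantage comes from. Here, n binary features can jointly take $2^n$ distinct values, whereas a local, one-of-n code with the same n units distinguishes only n cases. The sketch below only counts distinguishable patterns. It says nothing about whether a learner can actually exploit them; that depends on the componential-structure assumption stated above.

```python
from itertools import product


def distributed_codes(n):
    """Every pattern of n binary features: 2**n distinct codes."""
    return list(product((0, 1), repeat=n))


def one_hot_codes(n):
    """A local, one-of-n code: n units can tell apart only n inputs."""
    return [tuple(1 if i == j else 0 for i in range(n)) for j in range(n)]


for n in (3, 8, 16):
    print(f"n={n}: one-hot {len(one_hot_codes(n))} vs distributed {len(distributed_codes(n))}")
```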

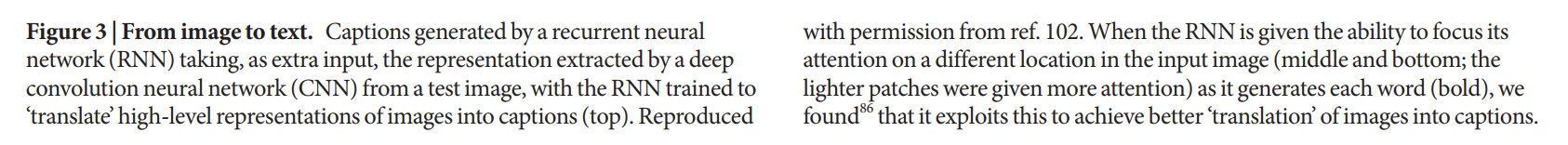

Figure 4: Visualizing the learned word vectors

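On the left is an illustration of word representations learned for language modelling, non-linearly projected to 2D for visualization using the t-SNE algorithm. On the right is a 2D representation of words learned by an English-to-French encoder–decoder recurrent neural network. One can observe that semantically similar words are mapped to nearby regions. The distributed representations of words are obtained by using backpropagation to jointly learn a representation for each word and a function that predicts a target quantity. Examples of such a target are the next word in a sequence (for language modelling) or a whole sequence of translated words (for machine translation).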

The hidden layers of a multilayer neural network learn to represent the network’s inputs in a way that makes it easy to predict the target outputs. This is nicely demonstrated by training a multilayer neural network to predict the next word in a sequence from a local context of earlier words [71]. Each word in the context is presented to the network as a one-of-N vector, that is, one component has a value of 1 and the rest are 0. In the first layer, each word creates a different pattern of activations, or word vectors (Fig. 4). In a language model, the other layers of the network learn to convert the input word vectors into an output word vector for the predicted next word, which can be used to predict the probability for any word in the vocabulary to appear as the next word. The network learns word vectors that contain many active components, each of which can be interpreted as a separate feature of the word, as was first demonstrated [27] in the context of learning distributed representations for symbols. These semantic features were not explicitly present in the input. They were discovered by the learning procedure as a good way of factorizing the structured relationships between the input and output symbols into multiple ‘micro-rules’. Learning word vectors turned out to also work very well when the word sequences come from a large corpus of real text and the individual micro-rules are unreliable [71]. When trained to predict the next word in a news story, for example, the learned word vectors for Tuesday and Wednesday are very similar, as are the word vectors for Sweden and Norway. Such representations are called distributed representations because their elements (the features) are not mutually exclusive and their many configurations correspond to the variations seen in the observed data. These word vectors are composed of learned features that were not determined ahead of time by experts, but automatically discovered by the neural network. Vector representations of words learned from text are now very widely used in natural language applications [14,17,72–76].
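The first-layer claim can be checked directly. Multiplying a one-of-N vector by the first-layer weight matrix simply selects one row of that matrix, and that row is the word vector. In the sketch below, the four-word vocabulary and the 3-dimensional vectors are hypothetical. They were set by hand to mimic the Tuesday/Wednesday and Sweden/Norway example; in a trained model they would be learned by backpropagation.

```python
import math

vocab = ["Tuesday", "Wednesday", "Sweden", "Norway"]
# Hypothetical 3-d word vectors, set by hand for illustration only;
# in a real language model these rows are learned by backpropagation.
E = [
    [0.9, 0.1, 0.0],   # Tuesday
    [0.8, 0.2, 0.1],   # Wednesday
    [0.0, 0.9, 0.8],   # Sweden
    [0.1, 0.8, 0.9],   # Norway
]


def one_of_n(index, n):
    """One component is 1, the rest are 0."""
    return [1.0 if i == index else 0.0 for i in range(n)]


def first_layer(x, E):
    """Row vector x times matrix E. With a one-of-N input this is just one row of E."""
    return [sum(x[i] * E[i][j] for i in range(len(E))) for j in range(len(E[0]))]


def cosine(a, b):
    """Cosine similarity: 1 for identical directions, 0 for orthogonal ones."""
    dot = sum(p * q for p, q in zip(a, b))
    return dot / (math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b)))


vec = {w: first_layer(one_of_n(i, len(vocab)), E) for i, w in enumerate(vocab)}
print(round(cosine(vec["Tuesday"], vec["Wednesday"]), 3))  # 0.984
print(round(cosine(vec["Tuesday"], vec["Sweden"]), 3))     # 0.083
```

In practice, frameworks implement this layer as a table lookup ("embedding") rather than an explicit matrix product, because the result is the same and the lookup is far cheaper.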

The issue of representation lies at the heart of the debate between the logic-inspired and the neural-network-inspired paradigms for cognition. In the logic-inspired paradigm, an instance of a symbol is something for which the only property is that it is either identical or non-identical to other symbol instances. It has no internal structure that is relevant to its use; and to reason with symbols, they must be bound to the variables in judiciously chosen rules of inference. By contrast, neural networks just use big activity vectors, big weight matrices and scalar non-linearities to perform the type of fast ‘intuitive’ inference that underpins effortless commonsense reasoning.

Before the introduction of neural language models [71], the standard approach to statistical modelling of language did not exploit distributed representations. Instead, it was based on counting frequencies of occurrences of short symbol sequences of length up to $N$ (called N-grams). The number of possible N-grams is on the order of $V^N$, where $V$ is the vocabulary size, so taking into account a context of more than a handful of words would require very large training corpora. N-grams treat each word as an atomic unit, so they cannot generalize across semantically related sequences of words. Neural language models can, because they associate each word with a vector of real-valued features, and semantically related words end up close to each other in that vector space (Fig. 4).
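For contrast, here is a minimal count-based bigram model (N = 2), sketched on a toy whitespace-tokenized corpus with no smoothing; both the corpus and the lack of smoothing are assumptions for illustration. It shows the two limitations described above. First, the table of possible N-grams grows as $V^N$. Second, an unseen pair gets zero probability even when a semantically similar pair was observed, because each word is an atomic unit.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for prev, word in zip(tokens, tokens[1:]):
        counts[prev][word] += 1
    return counts


def prob(counts, prev, word):
    """Maximum-likelihood estimate P(word | prev) = count(prev, word) / count(prev, *)."""
    following = counts.get(prev, Counter())
    total = sum(following.values())
    return following[word] / total if total else 0.0


corpus = "the cat sat on the mat the cat ran".split()  # hypothetical toy corpus
model = train_bigram(corpus)
print(prob(model, "the", "cat"))  # 2/3: two of the three words after "the" are "cat"
print(prob(model, "the", "dog"))  # 0.0: "dog" never followed "the", however similar to "cat"

V, N = 10_000, 3  # hypothetical vocabulary size and N-gram order
print(f"{V ** N:,} possible trigrams")  # 1,000,000,000,000 possible trigrams
```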

Recurrent neural networks

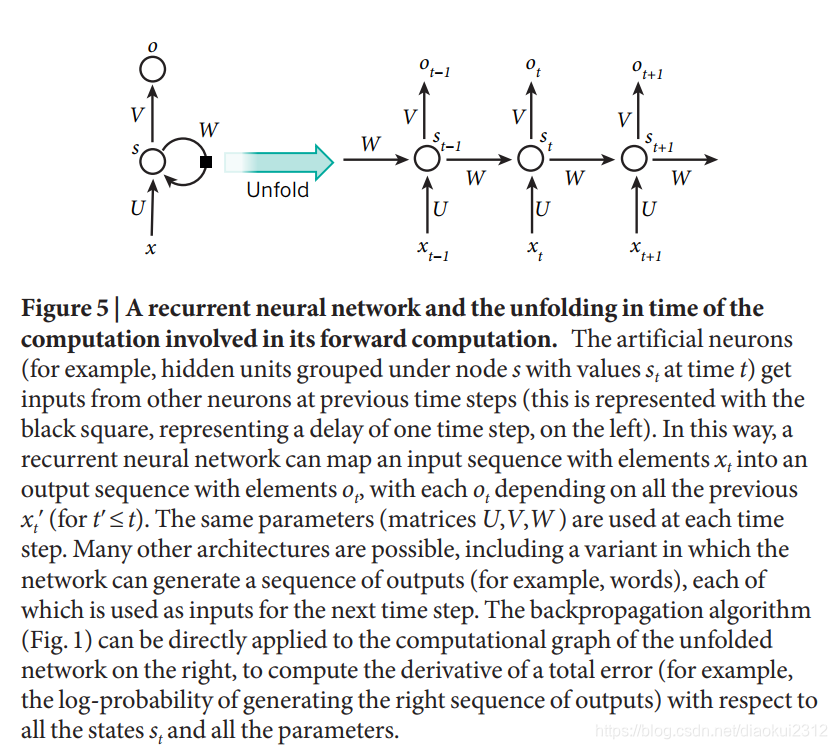

When backpropagation was first introduced, its most exciting use was for training recurrent neural networks (RNNs). For tasks that involve sequential inputs, such as speech and language, it is often better to use RNNs (Fig. 5). RNNs process an input sequence one element at a time, maintaining in their hidden units a ‘state vector’ that implicitly contains information about the history of all the past elements of the sequence. When we consider the outputs of the hidden units at different discrete time steps as if they were the outputs of different neurons in a deep multilayer network (Fig. 5, right), it becomes clear how we can apply backpropagation to train RNNs.
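The forward pass just described is sketched below in pure Python. The update rule $s_t = \tanh(U x_t + W s_{t-1})$, $o_t = V s_t$ and the choice of tanh are assumptions; this is one common form of a simple RNN. The matrix names U, W, V follow Fig. 5, but which connection each name labels is also our assumption. The loop reuses the same matrices at every step, so unrolling it yields a deep feedforward network whose layers share weights, which is why backpropagation applies.

```python
import math


def matvec(M, v):
    """Matrix-vector product for lists of lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rnn_forward(xs, U, W, V, s0):
    """Run a simple RNN over a sequence, one element at a time.

    s_t = tanh(U x_t + W s_{t-1})   hidden state: a summary of x_1..x_t
    o_t = V s_t                     output at step t
    The same U, W, V are reused at every step.
    """
    s, states, outputs = s0, [], []
    for x in xs:
        pre = [a + b for a, b in zip(matvec(U, x), matvec(W, s))]
        s = [math.tanh(p) for p in pre]
        states.append(s)
        outputs.append(matvec(V, s))
    return states, outputs


# Hypothetical weights: 1-d inputs, 2-d hidden state, 1-d outputs.
U = [[0.5], [-0.3]]
W = [[0.1, 0.2], [0.0, 0.4]]
V = [[1.0, -1.0]]
states, outputs = rnn_forward([[1.0], [0.0], [2.0]], U, W, V, s0=[0.0, 0.0])
print(outputs)
```

Changing only the third input leaves the first two outputs unchanged, since each $o_t$ depends only on the inputs up to step $t$.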

RNNs are very powerful dynamic systems, but training them has proved to be problematic because the backpropagated gradients either grow or shrink at each time step, so over many time steps they typically explode or vanish [77,78].
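The cause of exploding and vanishing gradients can be seen in the simplest possible case: a scalar linear recurrence $s_t = w\,s_{t-1} + x_t$, assumed here purely for illustration. Backpropagating from step $T$ to step 0 multiplies $T$ copies of the per-step derivative $w$, so the gradient is $w^T$. It shrinks towards zero when $|w| < 1$ and blows up when $|w| > 1$. In a real RNN, each factor is a Jacobian matrix that also includes the non-linearity's derivative, but the same multiplicative effect drives the problem.

```python
def gradient_through_time(w, steps):
    """d s_T / d s_0 for the scalar recurrence s_t = w * s_{t-1} + x_t.

    Backpropagation multiplies one factor ds_t/ds_{t-1} = w per time step,
    so the result is w ** steps.
    """
    grad = 1.0
    for _ in range(steps):
        grad *= w  # chain rule: one more step back in time
    return grad


for w in (0.9, 1.0, 1.1):
    row = ", ".join(f"T={T}: {gradient_through_time(w, T):.3g}" for T in (10, 50, 100))
    print(f"w={w}: {row}")
```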

Thanks to advances in their architecture [79,80] and ways of training them [81,82], RNNs have been found to be very good at predicting the next character in the text [83] or the next word in a sequence [75], but they can also be used for more complex tasks. For example, after reading an English sentence one word at a time, an English ‘encoder’ network can be trained so that the final state vector of its hidden units is a good representation of the thought expressed by the sentence. This thought vector can then be used as the initial hidden state of (or as extra input to) a jointly trained French ‘decoder’ network, which outputs a probability distribution for the first word of the French translation. If a particular first word is chosen from this distribution and provided as input to the decoder network, it will then output a probability distribution for the second word of the translation, and so on until a full stop is chosen [17,72,76]. Overall, this process generates sequences of French words according to a probability distribution that depends on the English sentence. This rather naive way of performing machine translation has quickly become competitive with the state-of-the-art. This raises serious doubts about whether understanding a sentence requires anything like the internal symbolic expressions that are manipulated by using inference rules. It is more compatible with the view that everyday reasoning involves many simultaneous analogies that each contribute plausibility to a conclusion [84,85].
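The word-by-word decoding loop can be sketched as follows. `TOY_DECODER` is a hypothetical, hand-written table standing in for a trained French decoder. Unlike a real decoder, it conditions only on the previous word, not on a hidden state initialised from the English thought vector, so it ignores the source sentence entirely. The part that mirrors the text is the loop itself: pick a word from the distribution, feed it back in, and stop at a full stop.

```python
import random

# Hypothetical stand-in for a trained decoder: maps the previously emitted
# word (or "<start>") to a distribution over the next French word. A real
# decoder would compute this from its hidden state, initialised from the
# encoder's thought vector.
TOY_DECODER = {
    "<start>": {"le": 0.7, "un": 0.3},
    "le": {"chat": 0.8, "chien": 0.2},
    "un": {"chat": 0.5, "chien": 0.5},
    "chat": {"dort": 0.9, ".": 0.1},
    "chien": {"dort": 0.6, ".": 0.4},
    "dort": {".": 1.0},
}


def decode(next_word_dist, rng, max_len=20, greedy=False):
    """Emit one word at a time, feeding each chosen word back in, until '.'."""
    words, prev = [], "<start>"
    for _ in range(max_len):
        dist = next_word_dist[prev]
        if greedy:
            word = max(dist, key=dist.get)  # most probable next word
        else:
            word = rng.choices(list(dist), weights=list(dist.values()))[0]  # sample
        words.append(word)
        if word == ".":
            break
        prev = word
    return words


print(decode(TOY_DECODER, random.Random(0), greedy=True))  # ['le', 'chat', 'dort', '.']
print(decode(TOY_DECODER, random.Random(0)))                # one sampled translation
```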

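Figure 5: The artificial neurons (for example, the hidden units grouped under node $s$, with values $s_t$ at time $t$) get inputs from other neurons at previous time steps. This is represented by the black square on the left, which denotes a delay of one time step. In this way, a recurrent neural network can map an input sequence with elements $x_t$ into an output sequence with elements $o_t$, with each $o_t$ depending on all the previous $x_{t'}$ (for $t' \le t$). The same parameters (matrices U, V, W) are used at each time step. Many other architectures are possible, including a variant in which the network generates a sequence of outputs (for example, words), each of which is used as input for the next time step. The backpropagation algorithm (Fig. 1) can be applied directly to the unfolded network on the right. There it computes the derivative of a total error (for example, the log-probability of generating the right sequence of outputs) with respect to all the states $s_t$ and all the parameters.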

Instead of translating the meaning of a French sentence into an English sentence, one can learn to ‘translate’ the meaning of an image into an English sentence (Fig. 3). The encoder here is a deep ConvNet that converts the pixels into an activity vector in its last hidden layer. The decoder is an RNN similar to the ones used for machine translation and neural language modelling. There has been a surge of interest in such systems recently (see examples mentioned in ref. 86).

RNNs, once unfolded in time (Fig. 5), can be seen as very deep feedforward networks in which all the layers share the same weights. Although their main purpose is to learn long-term dependencies, theoretical and empirical evidence shows that it is difficult to learn to store information for very long [78].

To correct for that, one idea is to augment the network with an explicit memory. The first proposal of this kind is the long short-term memory (LSTM) networks that use special hidden units, the natural behaviour of which is to remember inputs for a long time [79]. A special unit called the memory cell acts like an accumulator or a gated leaky neuron. It has a connection to itself at the next time step that has a weight of one, so it copies its own real-valued state and accumulates the external signal. However, this self-connection is multiplicatively gated by another unit that learns to decide when to clear the content of the memory.
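A single memory cell can be sketched with its gates supplied by hand instead of learned. The sketch assumes the commonly used form $c_t = f_t\,c_{t-1} + i_t\,x_t$. The self-connection has weight one, and the multiplicative gate $f_t$ decides whether the stored value is kept (1) or cleared (0). In a real LSTM, $f_t$ and the input gate $i_t$ are sigmoid units computed from the current input and the previous hidden state. The signal added to the cell is also a learned function of those inputs rather than the raw $x_t$.

```python
def memory_cell(inputs, input_gates, forget_gates, c0=0.0):
    """Run one memory cell with externally supplied gate values.

    c_t = f_t * c_{t-1} + i_t * x_t
    The self-connection has weight one, so f_t = 1 with i_t = 0 copies the
    state unchanged, f_t = 1 with i_t = 1 accumulates, and f_t = 0 clears it.
    """
    c, trace = c0, []
    for x, i, f in zip(inputs, input_gates, forget_gates):
        c = f * c + i * x
        trace.append(c)
    return trace


trace = memory_cell(
    inputs=[2.0, 0.0, 0.0, 3.0, 1.0, 0.0],
    input_gates=[1.0, 0.0, 0.0, 1.0, 0.0, 0.0],
    forget_gates=[1.0, 1.0, 1.0, 1.0, 0.0, 1.0],
)
print(trace)  # [2.0, 2.0, 2.0, 5.0, 0.0, 0.0]
```

The stored 2.0 survives two steps with no new input, the 3.0 is accumulated on top of it, and a single zero on the forget gate wipes the memory.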

LSTM networks have subsequently proved to be more effective than conventional RNNs, especially when they have several layers for each time step [87]. This has enabled an entire speech recognition system that goes all the way from acoustics to the sequence of characters in the transcription. LSTM networks or related forms of gated units are also currently used for the encoder and decoder networks that perform so well at machine translation [17,72,76].

Over the past year, several authors have made different proposals to augment RNNs with a memory module. Proposals include the Neural Turing Machine, in which the network is augmented by a ‘tape-like’ memory that the RNN can choose to read from or write to [88]. Another proposal is memory networks, in which a regular network is augmented by a kind of associative memory [89]. Memory networks have yielded excellent performance on standard question-answering benchmarks. The memory is used to remember the story about which the network is later asked to answer questions.
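An associative memory is read by content rather than by address. The sketch below shows one soft, content-based read in three steps:

1. Score each stored slot against a query with a dot product.
2. Turn the scores into weights with a softmax.
3. Return the weighted sum of the stored values.

This is a simplified illustration in the style of later end-to-end memory networks, not necessarily the exact mechanism of ref. 88 or ref. 89. The keys, values and query are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def memory_read(query, keys, values):
    """Content-based (associative) read.

    Each slot is weighted by the softmax of its key's dot product with the
    query; the result is the weighted sum of the stored values.
    """
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    read = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return weights, read


# Hypothetical memory: three stored "sentences" encoded as 3-d keys, each
# with a 2-d value to retrieve. The query is closest to the second key.
keys = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, read = memory_read([0.0, 5.0, 0.0], keys, values)
print([round(w, 3) for w in weights])  # [0.007, 0.987, 0.007]
```

Because every step is differentiable, the same backpropagation used elsewhere in the network can learn what to store and how to form queries.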

Beyond simple memorization, neural Turing machines and memory networks are being used for tasks that would normally require reasoning and symbol manipulation. Neural Turing machines can be taught ‘algorithms’. Among other things, they can learn to output a sorted list of symbols when their input consists of an unsorted sequence in which each symbol is accompanied by a real value that indicates its priority in the list [88]. Memory networks can be trained to keep track of the state of the world in a setting similar to a text adventure game. After reading a story, they can answer questions that require complex inference [90]. In one test example, the network is shown a 15-sentence version of The Lord of the Rings and correctly answers questions such as “where is Frodo now?” [89].

The future of deep learning

Unsupervised learning [91–98] had a catalytic effect in reviving interest in deep learning, but has since been overshadowed by the successes of purely supervised learning. Although we have not focused on it in this Review, we expect unsupervised learning to become far more important in the longer term. Human and animal learning is largely unsupervised: we discover the structure of the world by observing it, not by being told the name of every object.

Human vision is an active process that sequentially samples the optic array in an intelligent, task-specific way using a small, high-resolution fovea with a large, low-resolution surround. We expect much of the future progress in vision to come from systems that are trained end-to-end and combine ConvNets with RNNs that use reinforcement learning to decide where to look. Systems combining deep learning and reinforcement learning are in their infancy, but they already outperform passive vision systems [99] at classification tasks and produce impressive results in learning to play many different video games [100].

Natural language understanding is another area in which deep learning is poised to make a large impact over the next few years. We expect systems that use RNNs to understand sentences or whole documents will become much better when they learn strategies for selectively attending to one part at a time [76,86].

Ultimately, major progress in artificial intelligence will come about through systems that combine representation learning with complex reasoning. Although deep learning and simple reasoning have been used for speech and handwriting recognition for a long time, new paradigms are needed to replace rule-based manipulation of symbolic expressions by operations on large vectors [101].
