超详细!“看图说话”(Image Caption)项目实战

超详细!基于pytorch的“看图说话”(Image Caption)项目实战0.简介1.运行环境1.1 我的环境1.2 建立环境2.理论介绍3.运行项目3.1 项目结构3.2 数据准备3.2 开始训练3.3 报错及解决4.效果演示0.简介本文将介绍一个“看图说话”的项目实战,用的是git上一个大神的代码,首先放出来地址:https://github.com/sgrvinod/a-PyTorch-

超详细!基于pytorch的“看图说话”(Image Caption)项目实战

0.简介

本文将介绍一个“看图说话”的项目实战,用的是git上一个大神的代码,首先放出来地址:

https://github.com/sgrvinod/a-PyTorch-Tutorial-to-Image-Captioning

作者对项目的原理进行了比较详细的介绍,为了方便大家理解,我再将其中的关键内容翻译一遍,之后再对代码进行介绍。

1.运行环境

1.1 我的环境

在进行详细的介绍之前,先介绍一下我的运行环境,大家可以以此作为参考。在配置环境的时候需要根据自己的显卡和驱动来选择合适的pytorch版本,在这里贴出清华镜像的torch的地址,根据自己的实际情况去选择合适的版本。

https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/pytorch/linux-64/?C=S&O=A

在原项目中,作者所用的环境是torch0.4+python3.6.

于是我先在本地按照这个环境来配,装了pytorch0.4的cpu版本,和torchvision的cpu版本,然后跑了一下代码,亲测是可以正常运行的,直接train,不会报错。但是训练的速度实在是太慢了,一个epoch可能都要跑好几天,所以还是得用GPU去跑。

在配置GPU环境的时候,问题就出现了,服务器上的显卡比较新,只能用10.0以上版本的CUDA,然后再找一下cuda10.0对应的pytorch的版本,惊喜地发现,没有0.4版本的pytorch与之对应,只能装1.0以上的pytorch,这就麻烦了,因为pytorch不同版本之间是存在差异的,原代码极有可能跑不通了。

所以,如果你的显卡可以配9.0及以下的版本,那么恭喜你,会在接下来的内容中少遇到很多麻烦,如果只能用10.0以上的CUDA,也不要慌,跟着下面的步骤去做,应该也可以跑通。

既然用新版本,那不如做的干脆一点,直接上CUDA10.2,对应的pytorch的版本是pytorch-1.5.0-py3.6_cuda10.2.89_cudnn7.6.5_0和torchvision-0.6.0-py36_cu102,直接在清华镜像那里边搜索就可以了。

不管是哪个版本的pytorch,我都建议使用3.6版本的python(不过我也没有尝试其他版本)。

1.2 建立环境

显卡和驱动的问题不是本文介绍的重点,至于CUDA如何去装,还有怎么在服务器上使用多个版本的CUDA的问题,可以自行去百度,别人已经介绍的很清楚了。

首先conda create 一个新的环境,然后在这个新的环境里边安装我们需要的包。

最重要的当然是pytorch了。在清华镜像网站下载了相应的tar.bz2文件之后,先source activate激活你的pytorch环境,切换到你的压缩包所在目录,然后分别安装torch和torchvision

conda install pytorch-1.5.0-py3.6_cuda10.2.89_cudnn7.6.5_0.tar.bz2

conda install torchvision-0.6.0-py36_cu102.tar.bz2

然后继续安装项目需要的其他模块

conda install scipy==1.2.1

conda install nltk

conda install h5py

conda install tqdm

注意一下numpy和scipy的版本问题,版本过高的话在运行过程中可能会报错,在这里我采用的是numpy1.18和scipy1.2.1。

2.理论介绍

(理论部分暂时空着,有空了再补上)

3.运行项目

3.1 项目结构

首先来总体地看一下项目中的几个脚本的作用。

| 脚本 | 作用 |

|---|---|

| trian.py | 训练模型 |

| eval.py | 评估模型 |

| create_input_files.py | 生成输入数据 |

| caption.py | 使用束搜索读取图片并进行可视化 |

| datasets.py | 创建pytorch的DataSet类 |

| models.py | 定义模型结构 |

| utils.py | 各种辅助功能 |

3.2 数据准备

接下来需要为模型的训练准备数据集。模型需要的数据包括两部分,第一部分是图片数据集,第二部分是caption。在项目的主目录下新建两个文件夹,命名为images的文件夹用来储存图片,命名为caption的文件夹用来储存caption,当热你也可以放在别的地方,起别的名字,这个都无所谓。

首先看图片部分,本模型支持COCO、flickr8k、flickr30k三个数据集,作者的例子是在COCO数据集上进行的,COCO中的图片数量比较大,如果你电脑跑不动的话可以试试flickr,我也是在COCO数据集上训练的。git上提供了数据的下载链接,如果下载速度慢的话,可以试一下我的百度网盘链接:

训练集:https://pan.baidu.com/s/1RPSKaRH7vg03H1H7uErVZg

提取码:nnr9

验证集:https://pan.baidu.com/s/15oQtDhT0VWVizMXSbWbKqA

提取码:sx3k

flickr8k的百度云链接:https://pan.baidu.com/s/1q77r2KtxMzE74WNBkaAigw 提取码:1rh2

(我为了上传这个大文件专门开了一个月网盘会员,各位看官给我点个赞吧)

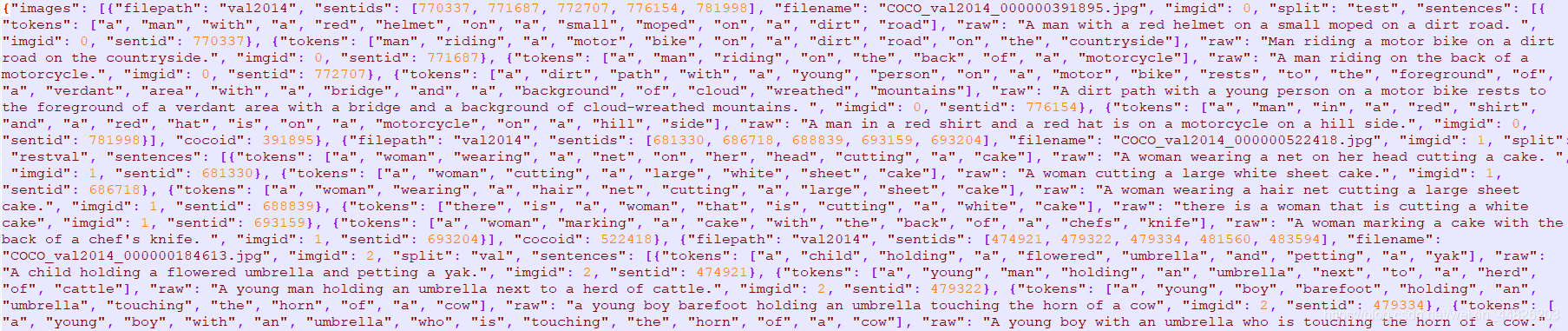

然后是caption。也就是用来描述每个图片中的内容的话。图片数据集里边的每一个图片,对应caption的json文件中的一行。比如下面这个是COCO数据集对应的caption:

caption数据我同样放在网盘里边了:https://pan.baidu.com/s/1tNAyFucFT0FJw1ebnAItuA 提取码:bcf3

接下来就可以制作输入模型所需的数据格式了。

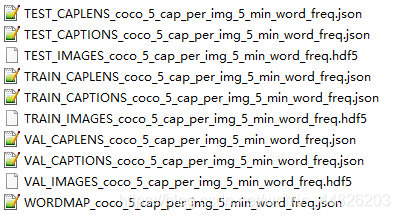

直接执行create_input_files.py这个脚本就可以(其实就是调用了utils里边的一个函数),函数的输入参数在这个脚本里边没有写,但是在utils里边是可以看到的。你可以先不用修改文件的命名格式,只需要指定数据所在的路径和输出路径就可以了。

然后就在你指定的路径下生成了一些json和hdf5文件,包括这些:

在这里注意一个小细节。最好是在与train环境相同的环境下去生成这些输入数据。我在windows下制作的数据,上传到服务器上之后好像不灵了,然后就又在服务器上生成了一套数据。

3.2 开始训练

模型可调参数如下:

| 参数 | 含义 |

|---|---|

| emb_dim | 词嵌入的维度 |

| attention_dim | attention层的维度 |

| decoder_dim | RNNdecoder的维度 |

| dropout | dropout的比率 |

| device | 在cpu还是gpu上训练 |

| cudnn.benchmark | 设置成False |

| start_epoch | 起始epoch |

| epochs | 总共训练多少epochs |

| batch_size | batch_size |

| workers | hdf5 数据加载 |

| encoder_lr | 学习率 |

| decoder_lr | 学习率 |

| grad_clip | 梯度裁剪 |

| alpha_c | 双向随机attention的正则项参数 |

| best_bleu4 | 当前最佳的BLEU4 |

| print_freq | 每多少张图片显示一次损失和准确率 |

| fine_tune_encoder | 是否fine_tune encoder |

| checkpoint | checkpoint的路径(如果继续之前的) |

设置好参数之后,用python执行train.py开始训练。建议一开始把各种维度设置的小一点,epoch设置的少一点。这个模型跑起来真的是太慢了。我直接把维度从512降到了64,结果还是跑的很慢。

在训练的过程中要连接网络,因为torchvision需要去下载预训练的模型resnet101,或者你可以在model.py中进行修改,使用其他CNN结构,如VGG。下载好的模型会保存在/root/.torch/models/resnet101路径下。

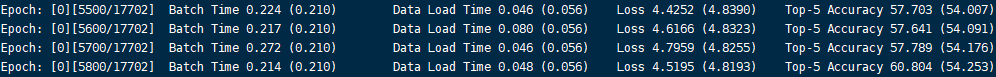

运行过程中是这样的:

如果报错了的话,可以参考下面遇到的错误,和响应的解决方法,希望能有帮助。

3.3 报错及解决

(1) EOFError: Ran out of input

这个错误是pytorch函数torch.utils.data.DataLoader中出现的,好像只有在windows下才会遇到。把脚本里的参数workers从1改成0就可以了。亲测linux环境下改成0也可以。

(2) OSError: Unable to open file (truncated file: eof = 20967661683, sblock->base_addr = 0, stored_eof = 22273132544)

文件读取错误,制作的数据集无法正常载入。应该是制作过程中断了,或者在不同操作系统下生成的文件造成的,或者可能是模型没有下载完整?(这个我不确定)。总之重新制作一下数据,就可以解决了。

(3)找不到GPU

在终端进入python环境,测试torch.cuda.is_available(),返回True,但是在运行脚本的过程中torch.cuda.is_available()返回False。这是因为你的pytorch版本要低于你电脑上CUDA的版本。

解决方法:重新安装正确版本的pytorch,或安装低版本的cuda driver。

(4)torch版本升级导致的不兼容问题

最担心的事情还是发生了,果然用1.0以上的版本去跑0.4的代码是会出问题的。网上查了一下,大部分都在介绍如何从torch0.3迁移到0.4,却很少有人介绍从0.4迁移到1.0。所以只能自己想办法修改了。

遇到的第一个问题:

Traceback (most recent call last):

File "train.py", line 333, in <module>

main()

File "train.py", line 118, in main

epoch=epoch)

File "train.py", line 181, in train

scores, _ = pack_padded_sequence(scores, decode_lengths, batch_first=True)

ValueError: too many values to unpack (expected 2)

看上去是返回值太多了?定位到anaconda\envs\caption\Lib\site-packages\torch\nn\utils\rnn.py中的pack_padded_sequence函数,对比一下新旧版本,就可以发现问题出在哪儿了。

在旧版本中,函数返回的是PackedSequence(data, batch_sizes),

而新版本中,返回的直接就是一个PackedSequence类,所以返回值只有一个。于是把原来的代码

scores, _ = pack_padded_sequence(scores, decode_lengths, batch_first=True)

targets, _ = pack_padded_sequence(targets, decode_lengths, batch_first=True)

改成

scores = pack_padded_sequence(scores, decode_lengths, batch_first=True)

targets = pack_padded_sequence(targets, decode_lengths, batch_first=True)

当然,如果不怕改崩了的话,也可以直接在rnn.py中把函数的返回值给改了,那样的话,相应的PackedSequence这个类也需要做出相应的修改。

继续运行,发现又报错了。遇到的第二个问题:

Traceback (most recent call last):

File "train.py", line 333, in <module>

main()

File "train.py", line 118, in main

epoch=epoch)

File "train.py", line 185, in train

loss = criterion(scores, targets)

File "/root/anaconda3/envs/pytorch/lib/python3.6/site-packages/torch/nn/modules/module.py", line 550, in __call__

result = self.forward(*input, **kwargs)

File "/root/anaconda3/envs/pytorch/lib/python3.6/site-packages/torch/nn/modules/loss.py", line 932, in forward

ignore_index=self.ignore_index, reduction=self.reduction)

File "/root/anaconda3/envs/pytorch/lib/python3.6/site-packages/torch/nn/functional.py", line 2317, in cross_entropy

return nll_loss(log_softmax(input, 1), target, weight, None, ignore_index, None, reduction)

File "/root/anaconda3/envs/pytorch/lib/python3.6/site-packages/torch/nn/functional.py", line 1535, in log_softmax

ret = input.log_softmax(dim)

AttributeError: 'PackedSequence' object has no attribute 'log_softmax'

没有log_softmax是怎么回事。这个问题一般都是由于数据类型错误造成的,在计算损失函数的过程中,传入 criterion的两个输入,应该都是tensor,再来看一下我们scores和targets的类型,发现是PackedSequence,再结合之前的脚本,就可以理解为什么报错了。

在这里,需要传入criterion的是这个PackedSequence类里边的数据,而不是类本身。刚才的修改,虽然把返回值数量的问题给解决了,但这个返回的scores,并不是我们想要的scores,我们需要的应该是它里边的data。

所以只需要把脚本中所有出现scores和targets的地方,改成scores.data,以及targets.data,就可以解决了。

验证一下,print出scores.data的类型,是tensor。

注意top5和val函数相关的地方也需要做出相同的修改。

下面我把我的train.py整个贴上来,以供参考

import time

import torch.backends.cudnn as cudnn

import torch.optim

import torch.utils.data

import torchvision.transforms as transforms

from torch import nn

from torch.nn.utils.rnn import pack_padded_sequence

from models import Encoder, DecoderWithAttention

from datasets import *

from utils import *

from nltk.translate.bleu_score import corpus_bleu

print(torch.cuda.is_available())

# Data parameters

data_folder = '*******'

# folder with data files saved by create_input_files.py

data_name = 'coco_5_cap_per_img_5_min_word_freq' # base name shared by data files

# Model parameters

emb_dim = 64 # dimension of word embeddings 512

attention_dim = 64 # dimension of attention linear layers 512

decoder_dim = 64 # dimension of decoder RNN 512

dropout = 0.5

device = torch.device("cuda" if torch.cuda.is_available() else "cpu") # sets device for model and PyTorch tensors /

cudnn.benchmark = False # set to true only if inputs to model are fixed size; otherwise lot of computational overhead T

# Training parameters

start_epoch = 0

epochs = 1 # number of epochs to train for (if early stopping is not triggered) 120

epochs_since_improvement = 0 # keeps track of number of epochs since there's been an improvement in validation BLEU

batch_size = 32 # 32

workers = 0 # for data-loading; right now, only 1 works with h5py 1

encoder_lr = 1e-3 # learning rate for encoder if fine-tuning 1e-4

decoder_lr = 4e-3 # learning rate for decoder 4e-4

grad_clip = 5. # clip gradients at an absolute value of

alpha_c = 1. # regularization parameter for 'doubly stochastic attention', as in the paper

best_bleu4 = 0. # BLEU-4 score right now

print_freq = 100 # print training/validation stats every __ batches

fine_tune_encoder = False # fine-tune encoder?

checkpoint = None # path to checkpoint, None if none

def main():

"""

Training and validation.

"""

global best_bleu4, epochs_since_improvement, checkpoint, start_epoch, fine_tune_encoder, data_name, word_map

# Read word map

word_map_file = os.path.join(data_folder, 'WORDMAP_' + data_name + '.json')

with open(word_map_file, 'r') as j:

word_map = json.load(j)

# Initialize / load checkpoint

if checkpoint is None:

decoder = DecoderWithAttention(attention_dim=attention_dim,

embed_dim=emb_dim,

decoder_dim=decoder_dim,

vocab_size=len(word_map),

dropout=dropout)

decoder_optimizer = torch.optim.Adam(params=filter(lambda p: p.requires_grad, decoder.parameters()),

lr=decoder_lr)

encoder = Encoder()

encoder.fine_tune(fine_tune_encoder)

encoder_optimizer = torch.optim.Adam(params=filter(lambda p: p.requires_grad, encoder.parameters()),

lr=encoder_lr) if fine_tune_encoder else None

else:

checkpoint = torch.load(checkpoint)

start_epoch = checkpoint['epoch'] + 1

epochs_since_improvement = checkpoint['epochs_since_improvement']

best_bleu4 = checkpoint['bleu-4']

decoder = checkpoint['decoder']

decoder_optimizer = checkpoint['decoder_optimizer']

encoder = checkpoint['encoder']

encoder_optimizer = checkpoint['encoder_optimizer']

if fine_tune_encoder is True and encoder_optimizer is None:

encoder.fine_tune(fine_tune_encoder)

encoder_optimizer = torch.optim.Adam(params=filter(lambda p: p.requires_grad, encoder.parameters()),

lr=encoder_lr)

# Move to GPU, if available

decoder = decoder.to(device)

encoder = encoder.to(device)

# Loss function

criterion = nn.CrossEntropyLoss().to(device)

# Custom dataloaders

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225])

train_loader = torch.utils.data.DataLoader(

CaptionDataset(data_folder, data_name, 'TRAIN', transform=transforms.Compose([normalize])),

batch_size=batch_size, shuffle=True, num_workers=workers, pin_memory=True)

val_loader = torch.utils.data.DataLoader(

CaptionDataset(data_folder, data_name, 'VAL', transform=transforms.Compose([normalize])),

batch_size=batch_size, shuffle=True, num_workers=workers, pin_memory=True)

# Epochs

for epoch in range(start_epoch, epochs):

# Decay learning rate if there is no improvement for 8 consecutive epochs, and terminate training after 20

if epochs_since_improvement == 20:

break

if epochs_since_improvement > 0 and epochs_since_improvement % 8 == 0:

adjust_learning_rate(decoder_optimizer, 0.8)

if fine_tune_encoder:

adjust_learning_rate(encoder_optimizer, 0.8)

# One epoch's training

train(train_loader=train_loader,

encoder=encoder,

decoder=decoder,

criterion=criterion,

encoder_optimizer=encoder_optimizer,

decoder_optimizer=decoder_optimizer,

epoch=epoch)

# One epoch's validation

recent_bleu4 = validate(val_loader=val_loader,

encoder=encoder,

decoder=decoder,

criterion=criterion)

# Check if there was an improvement

is_best = recent_bleu4 > best_bleu4

best_bleu4 = max(recent_bleu4, best_bleu4)

if not is_best:

epochs_since_improvement += 1

print("\nEpochs since last improvement: %d\n" % (epochs_since_improvement,))

else:

epochs_since_improvement = 0

# Save checkpoint

save_checkpoint(data_name, epoch, epochs_since_improvement, encoder, decoder, encoder_optimizer,

decoder_optimizer, recent_bleu4, is_best)

def train(train_loader, encoder, decoder, criterion, encoder_optimizer, decoder_optimizer, epoch):

"""

Performs one epoch's training.

:param train_loader: DataLoader for training data

:param encoder: encoder model

:param decoder: decoder model

:param criterion: loss layer

:param encoder_optimizer: optimizer to update encoder's weights (if fine-tuning)

:param decoder_optimizer: optimizer to update decoder's weights

:param epoch: epoch number

"""

decoder.train() # train mode (dropout and batchnorm is used)

encoder.train()

batch_time = AverageMeter() # forward prop. + back prop. time

data_time = AverageMeter() # data loading time

losses = AverageMeter() # loss (per word decoded)

top5accs = AverageMeter() # top5 accuracy

start = time.time()

# Batches

for i, (imgs, caps, caplens) in enumerate(train_loader):

data_time.update(time.time() - start)

# Move to GPU, if available

imgs = imgs.to(device)

caps = caps.to(device)

caplens = caplens.to(device)

# Forward prop.

imgs = encoder(imgs)

scores, caps_sorted, decode_lengths, alphas, sort_ind = decoder(imgs, caps, caplens)

# Since we decoded starting with <start>, the targets are all words after <start>, up to <end>

targets = caps_sorted[:, 1:]

# Remove timesteps that we didn't decode at, or are pads

# pack_padded_sequence is an easy trick to do this

scores = pack_padded_sequence(scores, decode_lengths, batch_first=True)

targets = pack_padded_sequence(targets, decode_lengths, batch_first=True)

# print(type(scores.data))

# print(type(targets.data))

# Calculate loss

loss = criterion(scores.data, targets.data)

# Add doubly stochastic attention regularization

loss += alpha_c * ((1. - alphas.sum(dim=1)) ** 2).mean()

# Back prop.

decoder_optimizer.zero_grad()

if encoder_optimizer is not None:

encoder_optimizer.zero_grad()

loss.backward()

# Clip gradients

if grad_clip is not None:

clip_gradient(decoder_optimizer, grad_clip)

if encoder_optimizer is not None:

clip_gradient(encoder_optimizer, grad_clip)

# Update weights

decoder_optimizer.step()

if encoder_optimizer is not None:

encoder_optimizer.step()

# Keep track of metrics

top5 = accuracy(scores.data, targets.data, 5)

losses.update(loss.item(), sum(decode_lengths))

top5accs.update(top5, sum(decode_lengths))

batch_time.update(time.time() - start)

start = time.time()

# Print status

if i % print_freq == 0:

print('Epoch: [{0}][{1}/{2}]\t'

'Batch Time {batch_time.val:.3f} ({batch_time.avg:.3f})\t'

'Data Load Time {data_time.val:.3f} ({data_time.avg:.3f})\t'

'Loss {loss.val:.4f} ({loss.avg:.4f})\t'

'Top-5 Accuracy {top5.val:.3f} ({top5.avg:.3f})'.format(epoch, i, len(train_loader),

batch_time=batch_time,

data_time=data_time, loss=losses,

top5=top5accs))

def validate(val_loader, encoder, decoder, criterion):

"""

Performs one epoch's validation.

:param val_loader: DataLoader for validation data.

:param encoder: encoder model

:param decoder: decoder model

:param criterion: loss layer

:return: BLEU-4 score

"""

decoder.eval() # eval mode (no dropout or batchnorm)

if encoder is not None:

encoder.eval()

batch_time = AverageMeter()

losses = AverageMeter()

top5accs = AverageMeter()

start = time.time()

references = list() # references (true captions) for calculating BLEU-4 score

hypotheses = list() # hypotheses (predictions)

# explicitly disable gradient calculation to avoid CUDA memory error

# solves the issue #57

with torch.no_grad():

# Batches

for i, (imgs, caps, caplens, allcaps) in enumerate(val_loader):

# Move to device, if available

imgs = imgs.to(device)

caps = caps.to(device)

caplens = caplens.to(device)

# Forward prop.

if encoder is not None:

imgs = encoder(imgs)

scores, caps_sorted, decode_lengths, alphas, sort_ind = decoder(imgs, caps, caplens)

# Since we decoded starting with <start>, the targets are all words after <start>, up to <end>

targets = caps_sorted[:, 1:]

# Remove timesteps that we didn't decode at, or are pads

# pack_padded_sequence is an easy trick to do this

scores_copy = scores.clone()

scores = pack_padded_sequence(scores, decode_lengths, batch_first=True)

targets = pack_padded_sequence(targets, decode_lengths, batch_first=True)

# Calculate loss

loss = criterion(scores.data, targets.data)

# Add doubly stochastic attention regularization

loss += alpha_c * ((1. - alphas.sum(dim=1)) ** 2).mean()

# Keep track of metrics

losses.update(loss.item(), sum(decode_lengths))

top5 = accuracy(scores.data, targets.data, 5)

top5accs.update(top5, sum(decode_lengths))

batch_time.update(time.time() - start)

start = time.time()

if i % print_freq == 0:

print('Validation: [{0}/{1}]\t'

'Batch Time {batch_time.val:.3f} ({batch_time.avg:.3f})\t'

'Loss {loss.val:.4f} ({loss.avg:.4f})\t'

'Top-5 Accuracy {top5.val:.3f} ({top5.avg:.3f})\t'.format(i, len(val_loader), batch_time=batch_time,

loss=losses, top5=top5accs))

# Store references (true captions), and hypothesis (prediction) for each image

# If for n images, we have n hypotheses, and references a, b, c... for each image, we need -

# references = [[ref1a, ref1b, ref1c], [ref2a, ref2b], ...], hypotheses = [hyp1, hyp2, ...]

# References

allcaps = allcaps[sort_ind] # because images were sorted in the decoder

for j in range(allcaps.shape[0]):

img_caps = allcaps[j].tolist()

img_captions = list(

map(lambda c: [w for w in c if w not in {word_map['<start>'], word_map['<pad>']}],

img_caps)) # remove <start> and pads

references.append(img_captions)

# Hypotheses

_, preds = torch.max(scores_copy, dim=2)

preds = preds.tolist()

temp_preds = list()

for j, p in enumerate(preds):

temp_preds.append(preds[j][:decode_lengths[j]]) # remove pads

preds = temp_preds

hypotheses.extend(preds)

assert len(references) == len(hypotheses)

# Calculate BLEU-4 scores

bleu4 = corpus_bleu(references, hypotheses)

print(

'\n * LOSS - {loss.avg:.3f}, TOP-5 ACCURACY - {top5.avg:.3f}, BLEU-4 - {bleu}\n'.format(

loss=losses,

top5=top5accs,

bleu=bleu4))

return bleu4

if __name__ == '__main__':

main()

4.效果演示

使用caption.py脚本,进行效果展示,会把attention的部分和提取出的caption都展示在图片上。

由于我这边服务器没有图形界面,所以我就只把提取出的所有words展示一下吧。如果你们有图形界面的话,展示的结果应该是作者那样的,逐个单词展示的。

我对caption.py做简单的修改,注释掉所有跟matplotlab和skimage相关的部分,然后在visualize_att函数中,把words打印出来就可以了。

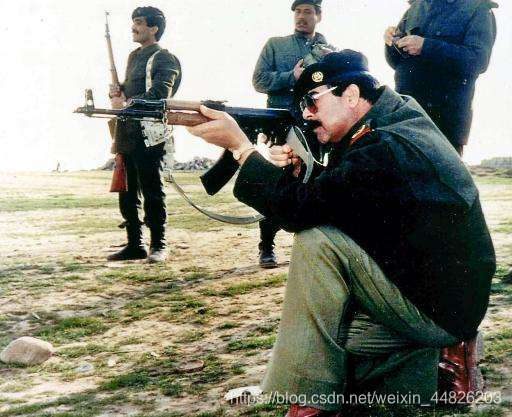

测试的一张图片如下,是百度图片里边随便搜出来的一张图。

输出结果:[’’, ‘a’, ‘group’, ‘of’, ‘people’, ‘standing’, ‘on’, ‘a’, ‘field’, ‘’]

一群人在一个地方站着。。。。这么大个人在这蹲着都看不到吗?

由于我现在只跑了一个epoch,而且模型里边embedding和attention的维度设置都很小,所以效果差也是理所当然的。接下来会修改一些参数,或者尝试torchvision中其他的预训练网络,如果效果有所改善的话,我可能会更新一下效果图(当然,大概率是懒得更新了)。

至此,模型‘看图说话’的就已经可以实现了,只是效果上还需要继续进行改进。

如果你觉得本文对你的学习和工作有所帮助的话,记得点赞、投币、收藏支持一下。那么我们下期再见。

更多推荐

已为社区贡献9条内容

已为社区贡献9条内容

所有评论(0)