Deploying Kubernetes with kubeadm


Installing Kubernetes with kubeadm

| Hostname | IP address | OS | Software versions |
|---|---|---|---|
| k8s-master | 192.168.136.200 | CentOS 7 | docker-ce 19.03.9, kubeadm.x86_64 0:1.18.3-0, kubectl.x86_64 0:1.18.3-0, kubelet.x86_64 0:1.18.3-0 |
| k8s-node1 | 192.168.136.201 | CentOS 7 | docker-ce 19.03.9, kubeadm.x86_64 0:1.18.3-0, kubectl.x86_64 0:1.18.3-0, kubelet.x86_64 0:1.18.3-0 |
| k8s-node2 | 192.168.136.202 | CentOS 7 | docker-ce 19.03.9, kubeadm.x86_64 0:1.18.3-0, kubectl.x86_64 0:1.18.3-0, kubelet.x86_64 0:1.18.3-0 |

I. Install a container runtime

To run containers in Pods, Kubernetes needs a container runtime. Any one of the following works; this article uses Docker.

Docker

CRI-O (Redhat)

Containerd

Other CRI runtimes: frakti

Installing Docker (on every node)

####Install the required packages

[root@k8s-master ~]# yum install -y yum-utils \

> device-mapper-persistent-data lvm2

####Add the official Docker repository

[root@k8s-master ~]# yum-config-manager \

> --add-repo \

> https://download.docker.com/linux/centos/docker-ce.repo

####Install docker-ce

[root@k8s-master ~]# yum install docker-ce docker-ce-cli containerd.io -y

####Create the /etc/docker directory

[root@k8s-master ~]# mkdir -p /etc/docker

####Create daemon.json in that directory to configure Docker

[root@k8s-master ~]# vim /etc/docker/daemon.json

Enter:

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

####Create a directory for systemd drop-in files that can override docker.service later

[root@k8s-master ~]# mkdir -p /etc/systemd/system/docker.service.d

####Reload systemd, then enable and start the Docker service

[root@k8s-master ~]# systemctl daemon-reload

[root@k8s-master ~]# systemctl enable docker

[root@k8s-master ~]# systemctl start docker
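The Docker steps above can be collected into a small script to run on every node. This is a minimal sketch, assuming CentOS 7, root privileges and the same Docker repository; `write_docker_daemon_json` and `install_docker` are hypothetical helper names, and the config directory is a parameter so the file-writing part can be tried in a scratch directory first.

```shell
# Hypothetical helpers: source this file, then run install_docker as root.

# Write daemon.json into the given directory (default: /etc/docker).
# native.cgroupdriver=systemd makes Docker use the systemd cgroup driver,
# which the Kubernetes docs recommend on systemd-based hosts.
write_docker_daemon_json() {
  local dir="${1:-/etc/docker}"
  mkdir -p "$dir"
  cat > "$dir/daemon.json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
EOF
}

# Install Docker CE from the official repository and start it (needs root + network).
install_docker() {
  yum install -y yum-utils device-mapper-persistent-data lvm2 || return 1
  yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo || return 1
  yum install -y docker-ce docker-ce-cli containerd.io || return 1
  write_docker_daemon_json /etc/docker
  mkdir -p /etc/systemd/system/docker.service.d
  systemctl daemon-reload
  systemctl enable docker
  systemctl start docker
  # Should print "Cgroup Driver: systemd".
  docker info 2>/dev/null | grep -i 'cgroup driver'
}
```

Keeping the JSON in a function rather than typing it into vim makes it identical on all three machines.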

II. Before you begin

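OS and hardware requirements

CentOS 7

2 GB or more of RAM per machine (give virtual machines 4 GB)

2 or more CPUs

Full network connectivity between all machines in the cluster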

1. Set the hostname of each machine

2. Make the hostnames resolvable from every machine via /etc/hosts

[root@k8s-master ~]# cat /etc/hosts

192.168.136.201 k8s-node1

192.168.136.200 k8s-master

192.168.136.202 k8s-node2

3. Make sure every node has a unique MAC address

4. Make sure every node has a unique product_uuid

[root@k8s-master ~]# cat /sys/class/dmi/id/product_uuid

CA014D56-6DD4-EC02-5CE7-E724D80C5CA1

[root@k8s-node1 ~]# cat /sys/class/dmi/id/product_uuid

03EE4D56-A573-1E40-BC73-6B71B7AE7082

[root@k8s-node2 ~]# cat /sys/class/dmi/id/product_uuid

E3024D56-A44A-7A83-2A6C-99365D310F84

5. Make sure the iptables tooling does not use the nftables backend (the newer firewall framework, which CentOS 8 uses)

6. Disable SELinux
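Part of the checklist above can be scripted. This is a sketch with hypothetical helper names; the IP/hostname pairs are the ones from the table at the top, and `disable_selinux` follows the approach of the official kubeadm install guide, which switches SELinux to permissive mode (`setenforce 0` plus an edit of /etc/selinux/config) rather than removing it.

```shell
# Set this machine's hostname, e.g. set_node_hostname k8s-node1 (needs root).
set_node_hostname() {
  hostnamectl set-hostname "$1"
}

# Append the cluster's IP/hostname pairs to a hosts file unless already present.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}" entry
  for entry in "192.168.136.200 k8s-master" \
               "192.168.136.201 k8s-node1" \
               "192.168.136.202 k8s-node2"; do
    grep -qxF "$entry" "$hosts_file" 2>/dev/null || echo "$entry" >> "$hosts_file"
  done
}

# Print this node's product_uuid and MAC addresses (product_uuid needs root).
show_node_ids() {
  echo "product_uuid $(cat /sys/class/dmi/id/product_uuid)"
  ip link show | awk '/link\/ether/ { print "mac " $2 }'
}

# Print every line that occurs more than once on stdin; no output means unique.
find_duplicates() {
  sort | uniq -d
}

# Put SELinux into permissive mode now and after reboots (needs root).
disable_selinux() {
  setenforce 0
  sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
}
```

For example, run `show_node_ids` on each node, concatenate the three outputs into one file and pipe it into `find_duplicates`; an empty result means the MAC addresses and product_uuid values are unique.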

Open the required ports

1. On the master, open the ports used by the control-plane components

[root@k8s-master ~]# firewall-cmd --add-port=6443/tcp --permanent

[root@k8s-master ~]# firewall-cmd --add-port=2379-2380/tcp --permanent

[root@k8s-master ~]# firewall-cmd --add-port=10250-10252/tcp --permanent

[root@k8s-master ~]# firewall-cmd --reload

2. On both nodes, open the ports used by the node components (shown for k8s-node1; repeat on k8s-node2)

[root@k8s-node1 ~]# firewall-cmd --add-port=10250/tcp --permanent

[root@k8s-node1 ~]# firewall-cmd --add-port=30000-32767/tcp --permanent

[root@k8s-node1 ~]# firewall-cmd --reload
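The two port lists above can be kept in one place so the master and the nodes are opened consistently. A minimal sketch, assuming firewalld is running; `k8s_ports` and `open_k8s_ports` are hypothetical names, and the ports are exactly the ones used above.

```shell
# Print the TCP ports (or ranges) a role needs, as listed above.
k8s_ports() {
  case "$1" in
    master) echo "6443/tcp 2379-2380/tcp 10250-10252/tcp" ;;
    node)   echo "10250/tcp 30000-32767/tcp" ;;
    *)      echo "usage: k8s_ports master|node" >&2; return 1 ;;
  esac
}

# Add the role's ports permanently, then reload firewalld (needs root).
open_k8s_ports() {
  local ports port
  ports="$(k8s_ports "$1")" || return 1
  for port in $ports; do
    firewall-cmd --permanent --add-port="$port" || return 1
  done
  firewall-cmd --reload
}
```

Run `open_k8s_ports master` on k8s-master and `open_k8s_ports node` on each worker; `firewall-cmd --list-ports` then shows what is open.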

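Swap must be disabled for the kubelet to work properly

Since Kubernetes 1.8, the kubelet refuses to start with its default configuration while swap is on (setting the --fail-swap-on flag to false makes it ignore swap). Therefore swap has to be turned off on every machine.

Turn off swap on every node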

[root@k8s-master ~]# vim /etc/fstab

Comment out the line that mounts the swap partition

[root@k8s-master ~]# swapoff -a
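Editing /etc/fstab by hand on several machines is easy to get wrong, so the sketch below comments out swap entries with sed instead. It assumes GNU sed and a standard fstab layout in which the filesystem type field reads `swap`; `comment_out_swap` and `disable_swap` are hypothetical names.

```shell
# Prefix "#" to every uncommented fstab line that has a whitespace-separated
# "swap" field; the path is a parameter so a copy can be tested first.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -i -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Turn swap off immediately and keep it off after a reboot (needs root).
disable_swap() {
  swapoff -a
  comment_out_swap /etc/fstab
  # The Swap line should now show a total of 0.
  free -h
}
```

Already-commented lines are left untouched, so running it twice is harmless.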

Tune kernel parameters

Required on all nodes

[root@k8s-master ~]# cat /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

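The br_netfilter module must be loaded

Required on all nodes

Load it now with modprobe, and list it in /etc/modules-load.d so it is loaded on every boot: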

[root@k8s-master ~]# modprobe br_netfilter

[root@k8s-master ~]# vim /etc/modules-load.d/k8s.conf

Contents:

br_netfilter
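The two files above, plus the commands that make them take effect, might look like this. A sketch with hypothetical helper names; `root` is a parameter only so the generated files can be inspected in a scratch directory. The `net.bridge.*` keys exist only once br_netfilter is loaded, so the module is loaded before `sysctl --system` reads the new file.

```shell
# Write the sysctl and modules-load files under the given root (default "/").
write_k8s_net_conf() {
  local root="${1:-/}"
  root="${root%/}"   # "/" becomes "", so the paths start with /etc
  mkdir -p "$root/etc/sysctl.d" "$root/etc/modules-load.d"
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
  echo br_netfilter > "$root/etc/modules-load.d/k8s.conf"
}

# Apply on the live system (needs root).
apply_k8s_net_conf() {
  write_k8s_net_conf /
  modprobe br_netfilter    # load now; modules-load.d handles later boots
  sysctl --system          # reload settings from all config dirs, incl. /etc/sysctl.d
  lsmod | grep br_netfilter
}
```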

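III. Install kubeadm, kubelet and kubectl

kubeadm: the command that bootstraps the cluster;

kubelet: the component that runs on every machine in the cluster and does things like starting Pods and containers;

kubectl: the command-line utility for talking to the cluster.

Add the Aliyun mirror repository

On all nodes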

[root@k8s-master ~]# cat /etc/yum.repos.d/k8s.repo

[mirrors.aliyun.com_kubernetes]

name=added from: https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

Install

On all nodes

[root@k8s-master ~]# yum install -y kubelet kubeadm kubectl

[root@k8s-master ~]# systemctl enable kubelet.service

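[root@k8s-master ~]# systemctl start kubelet.service

(The kubelet keeps restarting at this point because it has no configuration until kubeadm init or kubeadm join runs; ignore this for now.)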
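An unpinned `yum install` takes whatever is newest in the mirror, which may not match the versions in the table at the top. A sketch that writes the same repo file and pins the packages; the default version 1.18.3 comes from that table, and `write_k8s_repo`, `k8s_pkg_specs` and `install_k8s_tools` are hypothetical names.

```shell
# Write the Aliyun Kubernetes repo definition used above.
write_k8s_repo() {
  local repo_file="${1:-/etc/yum.repos.d/k8s.repo}"
  cat > "$repo_file" <<'EOF'
[mirrors.aliyun.com_kubernetes]
name=added from: https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
EOF
}

# Print yum package specs pinned to one version, e.g. kubelet-1.18.3.
k8s_pkg_specs() {
  echo "kubelet-$1 kubeadm-$1 kubectl-$1"
}

# Install the pinned tools and enable the kubelet (needs root + network).
install_k8s_tools() {
  local version="${1:-1.18.3}"
  write_k8s_repo /etc/yum.repos.d/k8s.repo
  # Left unquoted on purpose so the spec list splits into three packages.
  yum install -y $(k8s_pkg_specs "$version") || return 1
  systemctl enable kubelet
  systemctl start kubelet
}
```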

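IV. Bootstrap the cluster on the master

Run the bootstrap

The kubelet and kubectl versions should match the Kubernetes version being deployed, with a little skew allowed: the kubelet may be up to two minor versions older than kube-apiserver but never a newer minor version, and kubectl may differ by one minor version either way.

Because the Aliyun registry does not have the v1.18.3 images, the command below uses v1.18.1 instead.

The --pod-network-cidr value must match the "Network" value in the flannel YAML file used later; 10.244.0.0/16 is the flannel default.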

[root@k8s-master ~]# kubeadm init \

> --apiserver-advertise-address=192.168.136.200 \

> --kubernetes-version=v1.18.1 \

> --pod-network-cidr=10.244.0.0/16 \

> --service-cidr=10.1.0.0/16 \

> --image-repository registry.aliyuncs.com/google_containers
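As an alternative to the long flag list, the same settings can live in a kubeadm configuration file, which also lets the control-plane images be pulled before `kubeadm init` runs. This is a sketch assuming the `kubeadm.k8s.io/v1beta2` configuration API used by kubeadm 1.18; the helper names and the file names kubeadm-config.yaml and kubeadm-init.log are hypothetical, and the values are copied from the command above.

```shell
# Write a kubeadm config equivalent to the command-line flags above.
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.136.200
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.1
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.1.0.0/16
EOF
}

# Pre-pull the control-plane images, then bootstrap (on k8s-master, as root).
init_master() {
  write_kubeadm_config kubeadm-config.yaml
  kubeadm config images pull --config kubeadm-config.yaml || return 1
  # Keep the output: it contains the join command for the worker nodes.
  kubeadm init --config kubeadm-config.yaml 2>&1 | tee kubeadm-init.log
}
```

`kubeadm config images list --config kubeadm-config.yaml` shows which images would be pulled without pulling them.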

The output tells you what to run next (we will do that shortly):

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

The output ends with the join command, which contains a bootstrap token (valid for 24 hours):

kubeadm join 192.168.136.200:6443 --token cglndv.gk2eo9d6tcfg5m90 \

--discovery-token-ca-cert-hash sha256:e8ab6b97d4f0910227b3e654d07c29da8044dc172ca642a8d00b0824a183ee9a
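Because the token expires after 24 hours, it helps to keep the init output (for example `kubeadm init ... 2>&1 | tee kubeadm-init.log`; the log file name is hypothetical) and to know how to get a fresh join command later. In this sketch `extract_join_cmd` is a hypothetical helper that pulls the first `kubeadm join` command, with its backslash-continued lines, out of such a log; `kubeadm token create --print-join-command` is the kubeadm subcommand that creates a new token and prints a matching join command.

```shell
# Print the first "kubeadm join ..." command found in a saved init log,
# with backslash continuations joined onto one line.
extract_join_cmd() {
  awk '
    /^[ \t]*kubeadm join / { grab = 1; cmd = "" }
    grab {
      line = $0
      sub(/[ \t]*\\[ \t]*$/, "", line)   # drop the trailing backslash
      sub(/^[ \t]+/, "", line)           # drop the indentation
      cmd = (cmd == "" ? line : cmd " " line)
      if ($0 !~ /\\[ \t]*$/) { print cmd; exit }
    }
  ' "$1"
}

# On the master: create a new token and print a complete join command
# (useful once the original 24-hour token has expired).
new_join_cmd() {
  kubeadm token create --print-join-command
}
```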

List the Docker images that were pulled

[root@k8s-master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.18.1 4e68534e24f6 7 weeks ago 117MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.18.1 a595af0107f9 7 weeks ago 173MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.18.1 d1ccdd18e6ed 7 weeks ago 162MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.18.1 6c9320041a7b 7 weeks ago 95.3MB

registry.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 3 months ago 683kB

registry.aliyuncs.com/google_containers/coredns 1.6.7 67da37a9a360 4 months ago 43.8MB

registry.aliyuncs.com/google_containers/etcd 3.4.3-0 303ce5db0e90 7 months ago 288MB

Follow the instructions from the init output

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# chown $(id -u):$(id -g) $HOME/.kube/config

Check the status of the control-plane components

[root@k8s-master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

V. Install the flannel network

Download the YAML file

[root@k8s-master ~]# wget -c https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Edit the file as follows:

....(part of the file omitted)

  net-conf.json: |
    {
      "Network": "10.244.0.0/16", ##Pod network CIDR, must match --pod-network-cidr from cluster init (note only; JSON allows no comments, so do not copy it into the file)
      "Backend": {
        "Type": "vxlan"
      }
    }

....(part of the file omitted)

      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-amd64 ##image to pull
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        - --iface=ens33 ##on hosts with several NICs, name the one to use
        resources:
          requests:
            cpu: "100m"
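Because the flannel `Network` must equal the `--pod-network-cidr` given to `kubeadm init`, a quick check before `kubectl apply` can catch a mismatch. A sketch with hypothetical helper names, assuming GNU sed and that the manifest keeps `"Network": "<cidr>"` on one line as in the excerpt above.

```shell
# Print the first "Network" CIDR found in a flannel manifest.
flannel_network() {
  sed -n -E 's/.*"Network":[[:space:]]*"([^"]+)".*/\1/p' "$1" | head -n 1
}

# Compare the manifest's Network with the expected pod CIDR.
check_pod_cidr() {
  local manifest="$1" expected="$2" actual
  actual="$(flannel_network "$manifest")"
  if [ "$actual" = "$expected" ]; then
    echo "OK: flannel Network $actual matches --pod-network-cidr"
  else
    echo "MISMATCH: flannel Network '$actual' vs --pod-network-cidr '$expected'" >&2
    return 1
  fi
}
```

For example, `check_pod_cidr kube-flannel.yml 10.244.0.0/16` before applying the manifest.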

Apply the flannel manifest

[root@k8s-master ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

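##once these objects are created, the images start being pulled

Check the progress with the following command:

##wait until the kube-flannel-ds-amd64 counts reach 1 (one per node; only the master has joined so far)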

[root@k8s-master ~]# kubectl get ds -l app=flannel -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-flannel-ds-amd64 1 1 1 1 1 <none> 8m19s

kube-flannel-ds-arm 0 0 0 0 0 <none> 8m19s

kube-flannel-ds-arm64 0 0 0 0 0 <none> 8m19s

kube-flannel-ds-ppc64le 0 0 0 0 0 <none> 8m19s

kube-flannel-ds-s390x 0 0 0 0 0 <none> 8m19s
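Instead of re-running `kubectl get ds` by hand, the READY column can be compared with DESIRED, or kubectl can be asked to wait. In this sketch `ds_not_ready` is a hypothetical helper that reads the table printed above; `kubectl rollout status` is the standard kubectl way to wait for a DaemonSet rollout.

```shell
# Read "kubectl get ds" output on stdin and print every DaemonSet whose READY
# count (column 4) is still below DESIRED (column 2); no output means done.
ds_not_ready() {
  awk 'NR > 1 && $4 < $2 { print $1 " " $4 "/" $2 }'
}

# Block until the amd64 flannel DaemonSet is fully rolled out (up to 5 minutes).
wait_for_flannel() {
  kubectl -n kube-system rollout status daemonset/kube-flannel-ds-amd64 --timeout=300s
}
```

Usage: `kubectl get ds -l app=flannel -n kube-system | ds_not_ready` prints nothing once every DaemonSet is ready.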

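##wait until every Pod shows 1/1. kube-system is the namespace for objects created by the Kubernetes system itself.

It usually contains components such as kube-dns (CoreDNS in this cluster) and kube-proxy, plus add-ons such as fluentd, heapster or ingress controllers, depending on what is installed.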

[root@k8s-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-5mbw8 1/1 Running 0 51m

coredns-7ff77c879f-k24xw 1/1 Running 0 51m

etcd-k8s-master 1/1 Running 0 51m

kube-apiserver-k8s-master 1/1 Running 0 51m

kube-controller-manager-k8s-master 1/1 Running 0 51m

kube-flannel-ds-amd64-jdlsq 1/1 Running 0 8m10s

kube-proxy-jrwx9 1/1 Running 0 51m

kube-scheduler-k8s-master 1/1 Running 0 51m

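VI. Join the nodes to the cluster

Join both nodes to the cluster

##the token and discovery-token-ca-cert-hash are the values saved from the kubeadm init output above; run the same command on k8s-node2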

[root@k8s-node1 ~]# kubeadm join 192.168.136.200:6443 --token cglndv.gk2eo9d6tcfg5m90 \

> --discovery-token-ca-cert-hash sha256:e8ab6b97d4f0910227b3e654d07c29da8044dc172ca642a8d00b0824a183ee9a

The output ends with:

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Check the cluster state on the master

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 109m v1.18.3

k8s-node1 Ready <none> 54m v1.18.3

k8s-node2 Ready <none> 52m v1.18.3
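Once several nodes have joined, a small filter shows which ones are not Ready yet, and `kubectl wait` can block until they are. A sketch; `nodes_not_ready` and `wait_for_nodes` are hypothetical names.

```shell
# Read "kubectl get nodes" output on stdin and print nodes whose STATUS is
# not exactly "Ready" (e.g. NotReady, or Ready,SchedulingDisabled).
nodes_not_ready() {
  awk 'NR > 1 && $2 != "Ready" { print $1 " " $2 }'
}

# Wait up to 5 minutes for every node to report the Ready condition.
wait_for_nodes() {
  kubectl wait --for=condition=Ready node --all --timeout=300s
}
```

Usage: `kubectl get nodes | nodes_not_ready` prints nothing once all three nodes are Ready.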

##every Pod below should reach 1/1

[root@k8s-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-5mbw8 1/1 Running 0 65m

coredns-7ff77c879f-k24xw 1/1 Running 0 65m

etcd-k8s-master 1/1 Running 0 65m

kube-apiserver-k8s-master 1/1 Running 0 65m

kube-controller-manager-k8s-master 1/1 Running 0 65m

kube-flannel-ds-amd64-cm9n6 1/1 Running 0 10m

kube-flannel-ds-amd64-jdlsq 1/1 Running 0 22m

kube-flannel-ds-amd64-mbcvx 1/1 Running 2 8m50s

kube-proxy-jrwx9 1/1 Running 0 65m

kube-proxy-k597x 1/1 Running 0 10m

kube-proxy-wcnpw 1/1 Running 0 8m50s

kube-scheduler-k8s-master 1/1 Running 0 65m

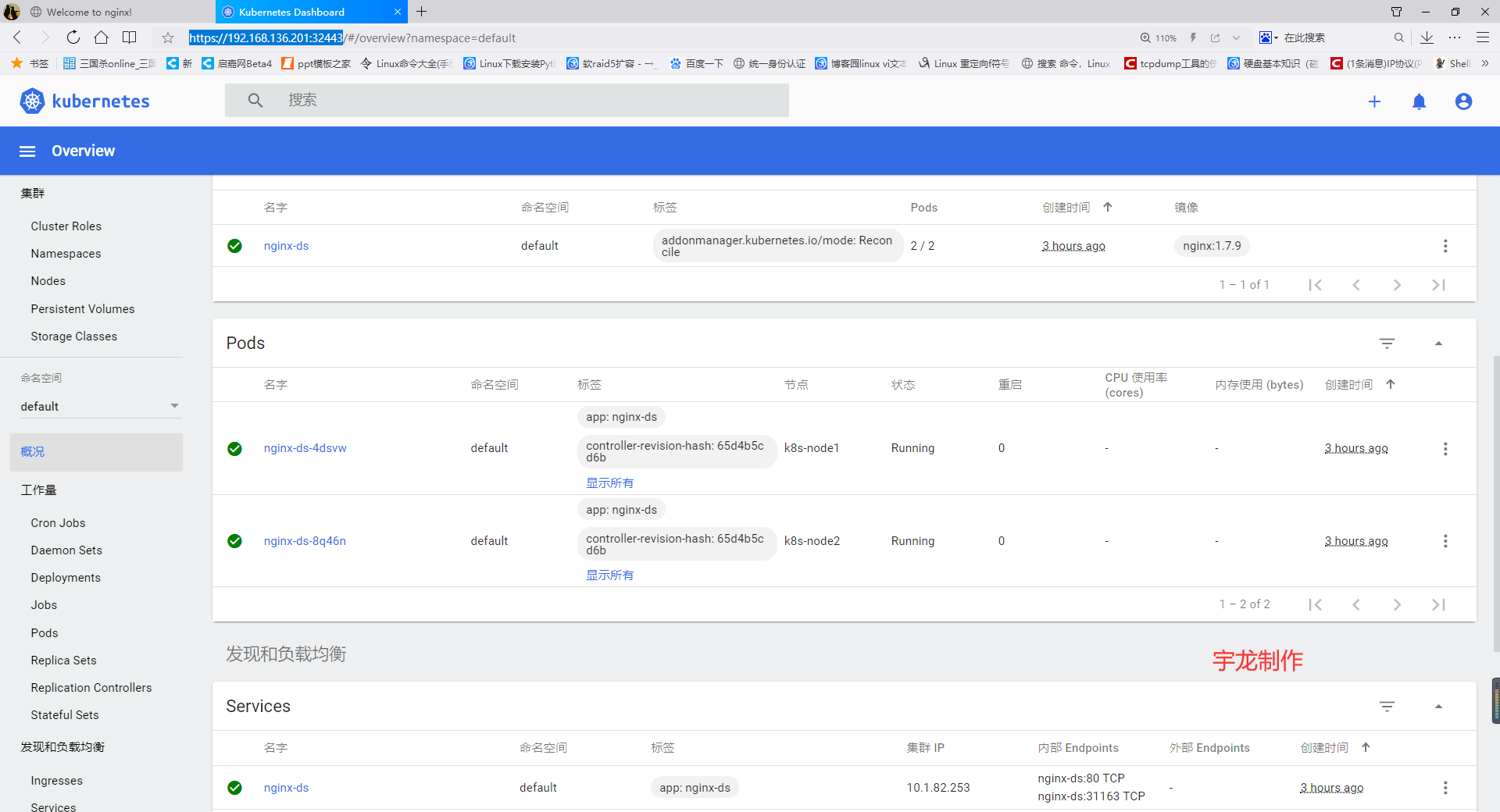

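VII. Test the cluster

Open UDP port 8472 (flannel's VXLAN traffic) in the firewall on every node (shown here on k8s-node2); the first command makes the rule permanent, the second applies it to the running firewall

This lets Pods in the different per-node subnets, such as 10.244.1.0/24 on k8s-node1, reach each other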

[root@k8s-node2 ~]# firewall-cmd --add-port=8472/udp --permanent

[root@k8s-node2 ~]# firewall-cmd --add-port=8472/udp

Create a test YAML file

[root@k8s-master ~]# vim nginx-ds.yml

apiVersion: v1
kind: Service
metadata:
  name: nginx-ds
  labels:
    app: nginx-ds
spec:
  type: NodePort
  selector:
    app: nginx-ds
  ports:
  - name: http
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ds
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      app: nginx-ds
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80

Apply the YAML file

[root@k8s-master ~]# kubectl apply -f nginx-ds.yml

service/nginx-ds created

daemonset.apps/nginx-ds created

[root@k8s-master ~]# kubectl get pods -o wide | grep nginx-ds

nginx-ds-4dsvw 1/1 Running 0 116s 10.244.1.2 k8s-node1 <none> <none>

nginx-ds-8q46n 1/1 Running 0 116s 10.244.2.2 k8s-node2 <none> <none>

[root@k8s-master ~]# kubectl get services -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 105m <none>

nginx-ds NodePort 10.1.82.253 <none> 80:31163/TCP 2m24s app=nginx-ds

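So the nginx-ds service has:

Service cluster IP: 10.1.82.253

Service port: 80

NodePort: 31163

Inside the cluster, reach it at the cluster IP 10.1.82.253 (port 80); from outside, use any node's address plus the NodePort, i.e. 192.168.136.xxx:31163.

The Pods run on the worker nodes, so open each node's address to check.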
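Checking the NodePort on every node can be scripted with curl. A sketch assuming curl on the machine running it and the node IPs from the table at the top; the nodePort is read from the Service instead of being hard-coded, since Kubernetes picked 31163 at random here. `nodeport_urls` and `check_nodeport` are hypothetical names.

```shell
# Print one URL per node IP for the given NodePort.
nodeport_urls() {
  local port="$1" ip
  shift
  for ip in "$@"; do
    echo "http://$ip:$port/"
  done
}

# Request the nginx-ds page from every node and print the HTTP status code
# (200 means nginx answered).
check_nodeport() {
  local port url
  port="$(kubectl get svc nginx-ds -o jsonpath='{.spec.ports[0].nodePort}')" || return 1
  for url in $(nodeport_urls "$port" 192.168.136.200 192.168.136.201 192.168.136.202); do
    echo "$url -> $(curl -s -o /dev/null -w '%{http_code}' "$url")"
  done
}
```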

VIII. Install the Dashboard UI

Download the official manifest first

[root@k8s-master ~]# wget -c https://raw.githubusercontent.com/kubernetes/dashboard/master/aio/deploy/recommended.yaml

Modify it as follows:

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 32443 ##access port
  selector:
    k8s-app: kubernetes-dashboard

....(part of the file omitted)

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin ##changed to the cluster administrator role (full cluster rights)
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

Install kubernetes-dashboard

[root@k8s-master ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

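Check the Pods

If any of them is not Running, either the YAML file is faulty or an image failed to download. If the file is at fault, get a fresh copy and apply it again. If an image is at fault, find the image name in the YAML file and pull it manually; be sure to pull it on the worker node where the Pod is scheduled, not on the master.

The Pod's logs are another place to look; the command is:

kubectl logs -n <namespace> <pod-name>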

[root@k8s-master ~]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-6b4884c9d5-mrlhl 1/1 Running 0 36m

kubernetes-dashboard-7bfbb48676-qcl6f 1/1 Running 0 36m

Get the login token

[root@k8s-master ~]# kubectl describe secret -n kubernetes-dashboard \

> $(kubectl get secret -n kubernetes-dashboard |grep \

> kubernetes-dashboard-token | awk '{print $1}') |grep token | awk '{print $2}'

Output:

kubernetes-dashboard-token-fjvpw

kubernetes.io/service-account-token ####the token is the long string below

eyJhbGciOiJSUzI1NiIsImtpZCI6IlljeGxGM0I1b1huMTd0Zkg0WnY4ZUdZWUZUekV0MzNIMmxWY21qcjlHVkEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1manZwdyIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImQzNjFhMmY1LWNlMmItNDI5NC04OTFjLWYxMzg1M2NhNzZlZSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.OpLgXaRTz-PtiMyGlwGbfUaZZenDzVrChnYsZ2a7jv3Iw8QwSjXUyCyb6ZtsO7T2mZ3wtUHPreXRNgCF5moR0mxRJGY7qiBZKNsbHslvRpCG9ZTbOnjSmX5Ux-OqDNDS0zmJ8TV5nqk7Aa4dd5lJOUJpALMYbAaBW7TxcwEtlJOxC__YPsK7yrsu8fceVOQhJV114z421CDOTC0ifLxrWdwEFV9LxqisbdjpV_UPRuIsnZBl1iPvvp-cN_TmRMqxc-yiCXwT0KaLVq6M18sCWBSb3oybPXeOIXjR6GBirjYRucX3QnFACQvzvprWVurdOvgrhh-xb0rteua1KEYT3w
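The `grep token | awk '{print $2}'` pipeline above also matches the secret's Name and Type lines, which is why the output contains two extra lines before the token. A sketch that prints only the token: it finds the secret whose name starts with `kubernetes-dashboard-token-` (the auto-created service-account token secret in this Kubernetes version) and base64-decodes its `token` field with a jsonpath query. The helper names are hypothetical.

```shell
# Read "kubectl get secret" output on stdin; print the first secret whose
# name starts with kubernetes-dashboard-token-.
dashboard_token_secret() {
  awk '$1 ~ /^kubernetes-dashboard-token-/ { print $1; exit }'
}

# Print only the decoded login token of the dashboard service account.
dashboard_token() {
  local ns=kubernetes-dashboard secret
  secret="$(kubectl get secret -n "$ns" | dashboard_token_secret)"
  [ -n "$secret" ] || { echo "no dashboard token secret found" >&2; return 1; }
  # .data.token is stored base64-encoded inside the Secret object.
  kubectl get secret -n "$ns" "$secret" -o jsonpath='{.data.token}' | base64 -d
  echo
}
```

`dashboard_token` prints just the string to paste into the Token field of the Dashboard login page.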

Open port 32443 in the firewall

[root@k8s-master ~]# firewall-cmd --add-port=32443/tcp --permanent

[root@k8s-master ~]# firewall-cmd --reload
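The firewall rule and the browser URL must use the same port as the `nodePort` of the dashboard Service, so the port can be read from the cluster instead of typed by hand. A sketch; `valid_nodeport` and `open_service_nodeport` are hypothetical names, and 30000-32767 is the default NodePort range (configurable with the kube-apiserver `--service-node-port-range` flag).

```shell
# Succeed if $1 is a number inside the default NodePort range 30000-32767.
valid_nodeport() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Read a Service's first nodePort, open it in firewalld and print it (needs root).
open_service_nodeport() {
  local ns="$1" svc="$2" port
  port="$(kubectl -n "$ns" get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}')" || return 1
  valid_nodeport "$port" || { echo "unexpected nodePort '$port'" >&2; return 1; }
  firewall-cmd --permanent --add-port="$port/tcp" && firewall-cmd --reload && echo "$port"
}
```

Usage: `open_service_nodeport kubernetes-dashboard kubernetes-dashboard` on the master.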

First check which node the dashboard runs on

[root@k8s-master ~]# kubectl get pods -n kubernetes-dashboard -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dashboard-metrics-scraper-6b4884c9d5-mrlhl 1/1 Running 0 20m 10.244.2.7 k8s-node2 <none> <none>

kubernetes-dashboard-7bfbb48676-qcl6f 1/1 Running 0 20m 10.244.1.8 k8s-node1 <none> <none>

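##it runs on k8s-node1, whose IP address is 192.168.136.201

Log in to the Dashboard UI

From a browser on the Windows machine, open: https://192.168.136.201:32443

Note: this is node1's address, but the NodePort is opened on every node, so any of the three node addresses works.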

