WEKA Algorithm Development: Notes on a Not-Very-Successful Genetic Attribute-Weighted Bayes Experiment


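1. Introduction to WEKA

WEKA is a data analysis and pattern recognition platform written in Java. It ships with sample data sets such as Iris and with a range of classification and clustering algorithms, including Bayesian classifiers and decision trees.

The figure below shows WEKA's main window. This project mainly used the Explorer.

Step 1: open a data set.

WEKA data sets use the .arff format.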
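For readers who have not seen the format, here is a minimal sketch of what an .arff file looks like. The relation name, attributes, and rows are made up for illustration and are not one of the data sets used in this post; only the `@relation` / `@attribute` / `@data` structure is the point.

```
% toy.arff (hypothetical example data)
@relation toy-weather

@attribute outlook {sunny, overcast, rainy}
@attribute windy {TRUE, FALSE}
@attribute play {yes, no}

@data
sunny,FALSE,no
overcast,TRUE,yes
rainy,FALSE,yes
```

All attributes here are nominal (a fixed set of values in braces), which is the case the counting-based naive Bayes code later in this post is written for. By convention the class attribute comes last.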

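Step 2: click Classify, choose an algorithm, and click Start to run the classification.

2. Developing your own algorithm in WEKA

WEKA already includes a number of classification and clustering algorithms, and we can build our own algorithms on top of them.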

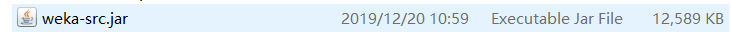

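- Step 1: find the weka-src.jar file in your WEKA installation directory.

Unpack it to get the source files, as shown below:

Open the source in a Java IDE (I use Eclipse), as shown below:

- Next, get ready to develop: first find where the new code belongs and create the file.

Prerequisite: inside the IDE, WEKA is launched by running GUIChooser.java.

It is located here: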

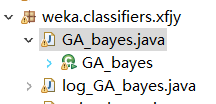

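Next we need to create a new classification algorithm, so create a new package under src/main/java and name it weka.classifiers.(a name of your choice). Mine is shown below (the lower one). Then create a Java file inside it; that file is your algorithm.

The last step in creating the file is to register the package: open GenericPropertiesCreator.props under weka.gui and add your package name to it.

After that, WEKA can find your classifier package once it starts.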
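As a rough sketch, the edited entry might look like the excerpt below. The list of existing packages is shortened here and is an assumption; it will be longer in your copy and can differ between WEKA versions. The only new line is the last one, which uses the package name `weka.classifiers.xfjy` from the listings in this post.

```
# weka/gui/GenericPropertiesCreator.props (excerpt, other entries omitted)
weka.classifiers.Classifier=\
 weka.classifiers.bayes,\
 weka.classifiers.functions,\
 weka.classifiers.trees,\
 weka.classifiers.xfjy
```

Note that every line except the last ends with `,\` so that the property value continues on the next line.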

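- Step 3: write the actual algorithm

This is of course the hardest step. The first thing to understand is that writing your own algorithm mainly means overriding the following two functions:

The first one builds the classifier. For naive Bayes, building the classifier only requires counting how often each class and each attribute value occurs, so a two-dimensional matrix plus a few arrays is enough to hold the parameters; nothing complicated. Its input is the data set, i.e. many samples.

The second function is used at evaluation time. Its input is one sample at a time, and its output is a normalized class distribution: not [0,1,0] but something like [0.2,0.6,0.2].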

public void buildClassifier(Instances instances) throws Exception

public double [] distributionForInstance(Instance instance) throws Exception
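The "two-dimensional matrix plus a few arrays" layout can be tried out without WEKA. The sketch below is plain Java written for this explanation (the class `FlatCountLayout` and its method names are hypothetical, not part of WEKA). It shows how a start offset per attribute maps an (attribute, value) pair to one column of a shared count row, and how a Laplace-smoothed estimate is formed from the counts.

```java
import java.util.Arrays;

/** Plain-Java illustration of the flattened count layout; not part of WEKA. */
final class FlatCountLayout {

    /** Start column of each attribute's block of values; the class attribute gets -1. */
    static int[] startOffsets(int[] numValues, int classIndex) {
        int[] start = new int[numValues.length];
        int total = 0;
        for (int i = 0; i < numValues.length; i++) {
            if (i == classIndex) {
                start[i] = -1;          // the class attribute has no columns of its own
            } else {
                start[i] = total;       // this attribute's values begin here
                total += numValues[i];  // reserve one column per possible value
            }
        }
        return start;
    }

    /** Column of a given (attribute, value) pair in the shared count row. */
    static int column(int[] start, int att, int value) {
        return start[att] + value;
    }

    /** Laplace-smoothed estimate: (count + 1) / (classCount + numValues). */
    static double smoothed(double count, double classCount, int numValues) {
        return (count + 1.0) / (classCount + numValues);
    }

    public static void main(String[] args) {
        // Four nominal attributes with 3, 3, 2, 2 values; the last entry is the class.
        int[] numValues = {3, 3, 2, 2, 2};
        int[] start = startOffsets(numValues, 4);
        System.out.println(Arrays.toString(start));   // prints [0, 3, 6, 8, -1]
        System.out.println(column(start, 2, 1));      // prints 7
        System.out.println(smoothed(2, 5, 3));        // prints 0.375
    }
}
```

In the listings in this post, `m_StartAttIndex` plays the role of `start`, and each row of `m_ClassAttCounts` is one such flattened count row per class.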

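Below is the naive Bayes code. It is a simplified version: the original WEKA implementation is more than 1000 lines, and this one is a little over 200.

The code came from my teacher. The MOOC course is MOOC-机器学习 (MOOC: Machine Learning); it has a chapter on WEKA that is worth a look if anything here is unclear.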

package weka.classifiers.xfjy;

import weka.core.*;

import weka.classifiers.*;

/**

* Implement the NB1 classifier.

*/

/* Make sure the class name below is the one you chose */

public class naive_bayes extends AbstractClassifier {

/** The number of class and each attribute value occurs in the dataset */

private double [][] m_ClassAttCounts;

/** The number of each class value occurs in the dataset */

private double [] m_ClassCounts;

/** The number of values for each attribute in the dataset */

private int [] m_NumAttValues;

/** The starting index of each attribute in the dataset */

private int [] m_StartAttIndex;

/** The number of values for all attributes in the dataset */

private int m_TotalAttValues;

/** The number of classes in the dataset */

private int m_NumClasses;

/** The number of attributes including class in the dataset */

private int m_NumAttributes;

/** The number of instances in the dataset */

private int m_NumInstances;

/** The index of the class attribute in the dataset */

private int m_ClassIndex;

public void buildClassifier(Instances instances) throws Exception {

// reset variable

m_NumClasses = instances.numClasses();

m_ClassIndex = instances.classIndex();

m_NumAttributes = instances.numAttributes();

m_NumInstances = instances.numInstances();

m_TotalAttValues = 0;

// allocate space for attribute reference arrays

m_StartAttIndex = new int[m_NumAttributes];

m_NumAttValues = new int[m_NumAttributes];

// set the starting index of each attribute and the number of values for

// each attribute and the total number of values for all attributes(not including class).

for(int i = 0; i < m_NumAttributes; i++) {

if(i != m_ClassIndex) {

m_StartAttIndex[i] = m_TotalAttValues;

m_NumAttValues[i] = instances.attribute(i).numValues();

m_TotalAttValues += m_NumAttValues[i];

}

else {

m_StartAttIndex[i] = -1;

m_NumAttValues[i] = m_NumClasses;

}

}

// allocate space for counts and frequencies

m_ClassCounts = new double[m_NumClasses];

m_ClassAttCounts = new double[m_NumClasses][m_TotalAttValues];

// Calculate the counts

for(int k = 0; k < m_NumInstances; k++) {

int classVal=(int)instances.instance(k).classValue();

m_ClassCounts[classVal] ++;

int[] attIndex = new int[m_NumAttributes];

for(int i = 0; i < m_NumAttributes; i++) {

if(i == m_ClassIndex){

attIndex[i] = -1;

}

else{

attIndex[i] = m_StartAttIndex[i] + (int)instances.instance(k).value(i);

m_ClassAttCounts[classVal][attIndex[i]]++;

}

}

}

}

/**

* Calculates the class membership probabilities for the given test instance

*

* @param instance the instance to be classified

* @return predicted class probability distribution

* @exception Exception if there is a problem generating the prediction

*/

public double [] distributionForInstance(Instance instance) throws Exception {

double [] probs = new double[m_NumClasses];

// store instance's att values in an int array

int[] attIndex = new int[m_NumAttributes];

for(int att = 0; att < m_NumAttributes; att++) {

if(att == m_ClassIndex)

attIndex[att] = -1;

else

attIndex[att] = m_StartAttIndex[att] + (int)instance.value(att);

}

// calculate probabilities for each possible class value

for(int classVal = 0; classVal < m_NumClasses; classVal++) {

probs[classVal]=(m_ClassCounts[classVal]+1.0)/(m_NumInstances+m_NumClasses);

for(int att = 0; att < m_NumAttributes; att++) {

if(attIndex[att]==-1) continue;

probs[classVal]*=(m_ClassAttCounts[classVal][attIndex[att]]+1.0)/(m_ClassCounts[classVal]+m_NumAttValues[att]);

}

}

Utils.normalize(probs);

return probs;

}

public static void main(String [] argv) {

runClassifier(new naive_bayes(), argv); /* use your own class name here */

}

}

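You can then modify the naive Bayes above into your own algorithm. My modification is an attribute-weighted naive Bayes whose weights are searched with a genetic algorithm... The results are really poor, though, so treat it as a rough starting point; you can write your own.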
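The weighting used in the listing below can be written compactly. The symbols are notation introduced here for illustration and do not appear in the code: $N$ is the number of training instances (`m_NumInstances`), $K$ the number of classes (`m_NumClasses`), $N_c$ the count of class $c$ (`m_ClassCounts`), $N_{c,x_i}$ the count of value $x_i$ of attribute $i$ within class $c$ (`m_ClassAttCounts`), $V_i$ the number of values of attribute $i$ (`m_NumAttValues`), and $w_i$ the weight of attribute $i$.

$$
\hat{P}(c \mid x) \;\propto\; \frac{N_c + 1}{N + K} \prod_{i=1}^{n} \left( \frac{N_{c,x_i} + 1}{N_c + V_i} \right)^{w_i}
$$

Setting every $w_i = 1$ gives back the plain naive Bayes of the first listing. The genetic algorithm only searches over the vector $(w_1, \dots, w_n)$; the counts are computed once and never change.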

package weka.classifiers.xfjy;

import weka.core.*;

import weka.classifiers.*;

/**

* Implement the NB1 classifier.

*/

public class 你的类名 extends AbstractClassifier { /* 你的类名 is a placeholder: substitute your own class name */

/** The number of class and each attribute value occurs in the dataset */

private double [][] m_ClassAttCounts;

/** The number of each class value occurs in the dataset */

private double [] m_ClassCounts;

/** The number of values for each attribute in the dataset */

private int [] m_NumAttValues;

/** The starting index of each attribute in the dataset */

private int [] m_StartAttIndex;

/** The number of values for all attributes in the dataset */

private int m_TotalAttValues;

/** The number of classes in the dataset */

private int m_NumClasses;

/** The number of attributes including class in the dataset */

private int m_NumAttributes;

/** The number of instances in the dataset */

private int m_NumInstances;

/** The index of the class attribute in the dataset */

private int m_ClassIndex;

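/* Population of weight vectors: one row per individual */

private double [][] m_WeightsPop;

/* Best individual of the current generation */

private double [] best;

/* Second parent (named second_best, but it is chosen at random) */

private double [] second_best;

/* Fitness of every individual in the population */

private double [] performance;

/* Best weight vector found overall */

private double [] final_best;

/* Child individual produced by crossover */

private double [] child_iter;

private Double final_best_fitness;

/* Population size */

public int m_pop_num =400;

/* Single-point crossover */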

public double[] cross(double[] iter1,double[] iter2) throws Exception{

double[] child = new double[iter1.length];

int point1 = (int)(Math.random()*(int)(iter1.length)/2)+ (int)(iter1.length/2);

//int point2 = point1 ;

for(int i =0;i<point1;i++) {

child[i] = iter1[i];

}

for(int j =point1;j<iter1.length;j++) {

child[j]=iter2[j];

}

//Utils.normalize(child);

return child;

}

public void buildClassifier(Instances instances) throws Exception {

// reset variable

m_NumClasses = instances.numClasses();

m_ClassIndex = instances.classIndex();

m_NumAttributes = instances.numAttributes();

m_NumInstances = instances.numInstances();

m_TotalAttValues = 0;

// allocate space for attribute reference arrays

m_StartAttIndex = new int[m_NumAttributes];

m_NumAttValues = new int[m_NumAttributes];

// set the starting index of each attribute and the number of values for

// each attribute and the total number of values for all attributes(not including class).

for(int i = 0; i < m_NumAttributes; i++) {

if(i != m_ClassIndex) {

m_StartAttIndex[i] = m_TotalAttValues;

m_NumAttValues[i] = instances.attribute(i).numValues(); /* number of possible values of this attribute */

m_TotalAttValues += m_NumAttValues[i]; /* running total of possible values over all attributes */

}

else {

m_StartAttIndex[i] = -1;

m_NumAttValues[i] = m_NumClasses;

}

}

// allocate space for counts and frequencies

m_ClassCounts = new double[m_NumClasses];

m_ClassAttCounts = new double[m_NumClasses][10000];

// Calculate the counts

for(int k = 0; k < m_NumInstances; k++) {

int classVal=(int)instances.instance(k).classValue();

m_ClassCounts[classVal] ++;

int[] attIndex = new int[m_NumAttributes];

for(int i = 0; i < m_NumAttributes; i++) {

if(i == m_ClassIndex){

attIndex[i] = -1;

}

else{

attIndex[i] = m_StartAttIndex[i] + (int)instances.instance(k).value(i);

m_ClassAttCounts[classVal][attIndex[i]]++;

}

}

}

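/* Scratch array that is normalized to generate each individual's weights */

double[] norm_array = new double[m_NumAttributes-1];

/* Initialize the weight population (seeding it from mutual information is left for later) */

m_WeightsPop = new double[m_pop_num][m_NumAttributes-1];

/* Build the weight population: m_pop_num random individuals */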

for(int num=0;num< m_pop_num;num++) {

for(int num2=0;num2<m_NumAttributes-1;num2++) {

double i = Math.random();

norm_array[num2] = i;

}

Utils.normalize(norm_array);

for(int num3=0;num3<m_NumAttributes-1;num3++) {

m_WeightsPop[num][num3] = norm_array[num3];

}

}

//============================== Hyperparameters and working buffers

final_best = new double[m_NumAttributes-1];

performance = new double[m_pop_num];

second_best = new double[m_NumAttributes-1];

best = new double[m_NumAttributes-1];

double [][] WeightsPop_tmp = new double[m_pop_num][m_NumAttributes-1];

child_iter = new double[m_NumAttributes];

final_best_fitness = Double.NEGATIVE_INFINITY;

//===========================================

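/* Main loop; be careful here */

for(int main_loop =0; main_loop<100;main_loop++) { // NOTE: the generation count should be moved to a field at the top

double [] probs = new double[m_NumClasses]; // predicted class distribution

double [] real = new double[m_NumClasses];

for(int i =0;i<m_NumClasses;i++) {real[i]=0;}

double error= 0; // accumulated score of one individual over the data set (named error, but higher is better)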

for(int p_num=0;p_num<m_pop_num;p_num++) {

error =0;

for(int num=0; num < m_NumInstances;num++) {

Instance ins = instances.instance(num);

probs = predict_tmp(ins,p_num);

int real_class = (int)ins.classValue();

real[real_class]= 1;

// error += Math.log(probs[real_class]/3);

error += Math.pow(probs[real_class], 2)/3;

for(int i =0;i<m_NumClasses;i++) {real[i]=0;}

}// end of the evaluation loop: accumulates error over all instances

performance[p_num] = error;

}// end of the loop over the population: fills performance

for(int new_pop =0;new_pop<m_pop_num;new_pop++) {

/* Find the best individual */

double max = Double.NEGATIVE_INFINITY;

int maxIndex1 = 0;

for (int i = 0; i < performance.length; i++) {

if (performance[i] > max) {

max = performance[i];

maxIndex1 = i;

}

}

for(int num=0;num<m_NumAttributes-1;num++) {

best[num] = m_WeightsPop[maxIndex1][num];

}

if (final_best_fitness < max) {

final_best = best.clone(); // copy, so that later writes to best do not silently change final_best

final_best_fitness = max;

}

/* Next pick the second parent; a random individual is fine */

int maxIndex2 =(int)(Math.random()*m_pop_num);

for(int num=0;num<m_NumAttributes-1;num++) {

second_best[num] = m_WeightsPop[maxIndex2][num];

}

/* Both parents are selected; next come crossover and mutation */

second_best = cross(best,second_best);

for(int i =0;i<m_NumAttributes-1;i++) {

child_iter[i] = second_best[i];

}

double child_performance=0;

double [] real2 = new double[m_NumClasses];

for(int i =0;i<m_NumClasses;i++) {real2[i]=0;}

for(int num=0; num < m_NumInstances;num++) {

Instance ins = instances.instance(num);

probs = predict_child(ins);

int real_class = (int)ins.classValue();

real2[real_class]=1;

// child_performance += Math.log(probs[real_class]/3);

child_performance += Math.pow(probs[real_class], 2)/3;

}// end of the evaluation loop: accumulates the child's score

if(child_performance > performance[maxIndex1]) {

for(int i=0;i<m_NumAttributes-1;i++) {

final_best[i]=second_best[i];

WeightsPop_tmp[new_pop][i] = second_best[i];

final_best_fitness = child_performance;

}

}

else {

int point1 = (int)(Math.random()*(m_NumAttributes-1)); // cast after multiplying; (int)Math.random() on its own is always 0

WeightsPop_tmp[new_pop][point1]+=0.05;

Utils.normalize(best);

for(int i=0;i<m_NumAttributes-1;i++) {

WeightsPop_tmp[new_pop][i] = best[i];

}

}

}// end of the loop that builds the new population

m_WeightsPop = WeightsPop_tmp;

/* The new population replaces the old one */

}// end of the main loop

}

public double [] predict_child(Instance instance) throws Exception {

double [] probs = new double[m_NumClasses];

// store instance's att values in an int array

int[] attIndex = new int[m_NumAttributes];

for(int att = 0; att < m_NumAttributes; att++) {

if(att == m_ClassIndex)

attIndex[att] = -1;

else

attIndex[att] = m_StartAttIndex[att] + (int)instance.value(att);

}

// calculate probabilities for each possible class value

for(int classVal = 0; classVal < m_NumClasses; classVal++) {

probs[classVal]=(m_ClassCounts[classVal]+1.0)/(m_NumInstances+m_NumClasses);

for(int att = 0; att < m_NumAttributes; att++) {

if(attIndex[att]==-1) continue;

probs[classVal]*=Math.pow((m_ClassAttCounts[classVal][attIndex[att]]+1.0)/(m_ClassCounts[classVal]+m_NumAttValues[att]),(child_iter[att]));

}

}

Utils.normalize(probs);

return probs;

}

public double [] distributionForInstance(Instance instance) throws Exception {

double [] probs = new double[m_NumClasses];

// store instance's att values in an int array

int[] attIndex = new int[m_NumAttributes];

for(int att = 0; att < m_NumAttributes; att++) {

if(att == m_ClassIndex)

attIndex[att] = -1;

else

attIndex[att] = m_StartAttIndex[att] + (int)instance.value(att);

}

// calculate probabilities for each possible class value

for(int classVal = 0; classVal < m_NumClasses; classVal++) {

probs[classVal]=(m_ClassCounts[classVal]+1.0)/(m_NumInstances+m_NumClasses);

for(int att = 0; att < m_NumAttributes; att++) {

if(attIndex[att]==-1) continue;

probs[classVal]*=Math.pow((m_ClassAttCounts[classVal][attIndex[att]]+1.0)/(m_ClassCounts[classVal]+m_NumAttValues[att]),(final_best[att]));

}

}

Utils.normalize(probs);

return probs;

}

public double [] predict_tmp(Instance instance,int pop_num) throws Exception {

double [] probs = new double[m_NumClasses];

// store instance's att values in an int array

int[] attIndex = new int[m_NumAttributes];

for(int att = 0; att < m_NumAttributes; att++) {

if(att == m_ClassIndex)

attIndex[att] = -1;

else

attIndex[att] = m_StartAttIndex[att] + (int)instance.value(att);

}

// calculate probabilities for each possible class value

for(int classVal = 0; classVal < m_NumClasses; classVal++) {

probs[classVal]=(m_ClassCounts[classVal]+1.0)/(m_NumInstances+m_NumClasses);

for(int att = 0; att < m_NumAttributes; att++) {

if(attIndex[att]==-1) continue;

probs[classVal]*=Math.pow((m_ClassAttCounts[classVal][attIndex[att]]+1.0)/(m_ClassCounts[classVal]+m_NumAttValues[att]),(m_WeightsPop[pop_num][att]));

}

}

Utils.normalize(probs);

return probs;

}

public static void main(String [] argv) {

runClassifier(new 你的类名(), argv);

}

}

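I tried cross-entropy as the fitness, but it still did not perform as well as MSE. Adding a mutation step actually made the results worse, so I removed it; after removing it, two consecutive generations end up exactly the same. Well, the design was flawed from the start. The prediction code could have been a single function, but I copied it three times... In the end the accuracy is around 80% in most runs, 95% in a few, and 98% in very few. Plain naive Bayes gets 95% (Iris, 66% training split). On Iris2D, however, it reaches 100% accuracy.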
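On the "copied three times" point: `predict_tmp`, `predict_child`, and `distributionForInstance` differ only in which weight vector they use, so they could share one helper that takes the weights as a parameter. The sketch below is a WEKA-free illustration of that idea under simplified assumptions: the class `WeightedNaiveBayesSketch` and its parameters are hypothetical, and the counts for the instance's observed attribute values are passed in directly rather than looked up from an `Instance`.

```java
/** Plain-Java sketch: one weighted prediction routine instead of three copies. */
final class WeightedNaiveBayesSketch {

    /**
     * @param classCounts number of training instances per class
     * @param condCounts  condCounts[c][a] = how often the instance's value of
     *                    attribute a was seen together with class c
     * @param numValues   number of possible values of each attribute
     * @param weights     one exponent per attribute
     * @return normalized class distribution
     */
    static double[] distribution(double[] classCounts, double[][] condCounts,
                                 int[] numValues, double[] weights) {
        int numClasses = classCounts.length;
        double total = 0;
        for (double c : classCounts) {
            total += c;
        }
        double[] probs = new double[numClasses];
        double sum = 0;
        for (int c = 0; c < numClasses; c++) {
            // Laplace-smoothed class prior
            probs[c] = (classCounts[c] + 1.0) / (total + numClasses);
            for (int a = 0; a < weights.length; a++) {
                // Laplace-smoothed conditional, raised to the attribute weight
                double p = (condCounts[c][a] + 1.0) / (classCounts[c] + numValues[a]);
                probs[c] *= Math.pow(p, weights[a]);
            }
            sum += probs[c];
        }
        for (int c = 0; c < numClasses; c++) {
            probs[c] /= sum;   // normalize so the entries sum to 1
        }
        return probs;
    }

    public static void main(String[] args) {
        double[] classCounts = {3, 1};
        double[][] condCounts = {{3}, {0}};
        int[] numValues = {2};
        double[] plain = distribution(classCounts, condCounts, numValues, new double[]{1.0});
        double[] damped = distribution(classCounts, condCounts, numValues, new double[]{0.5});
        System.out.println(plain[0] + " " + plain[1]);
        System.out.println(damped[0] + " " + damped[1]);
    }
}
```

With a helper like this, the three call sites in the listing above would pass `m_WeightsPop[p_num]`, `child_iter`, and `final_best` respectively, and a weight vector of all ones reproduces plain naive Bayes.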

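3. ~~Summary~~ Rant

Part of the CUG course project series

While most pattern recognition courses now use Python, our teacher insists on Java + WEKA. For writing naive Bayes the two are about the same, though; on reflection, Java + WEKA may even be simpler, since the skeleton is provided and you do not have to write the evaluation code yourself.

For course work as sloppy as mine, the teacher gave me 99 points... 99. For the first time I feel I do not deserve a grade.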
