How to group Wikipedia categories in Python?


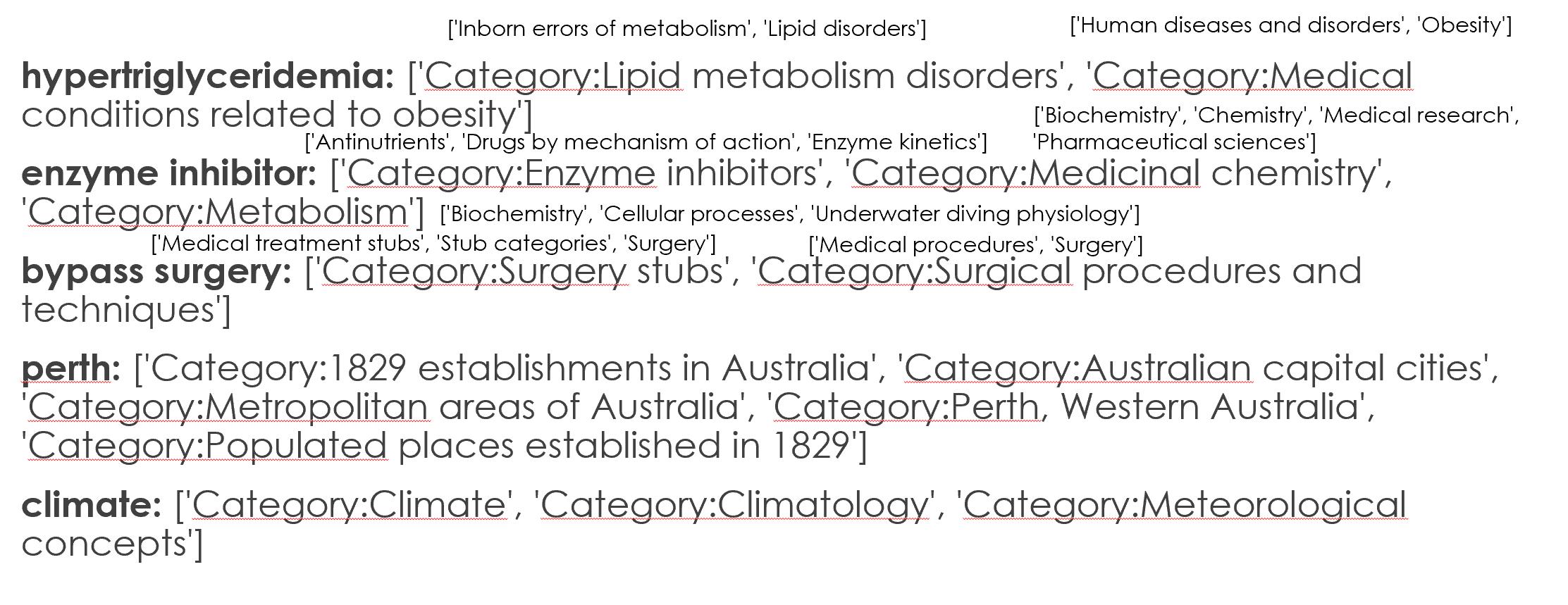

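For each concept in my dataset, I have stored the corresponding Wikipedia categories. For example, consider the following 5 concepts and their corresponding Wikipedia categories.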

- hypertriglyceridemia: ['Category:Lipid metabolism disorders', 'Category:Medical conditions related to obesity']
- enzyme inhibitor: ['Category:Enzyme inhibitors', 'Category:Medicinal chemistry', 'Category:Metabolism']
- bypass surgery: ['Category:Surgery stubs', 'Category:Surgical procedures and techniques']
- perth: ['Category:1829 establishments in Australia', 'Category:Australian capital cities', 'Category:Metropolitan areas of Australia', 'Category:Perth, Western Australia', 'Category:Populated places established in 1829']
- climate: ['Category:Climate', 'Category:Climatology', 'Category:Meteorological concepts']

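As you can see, the first three concepts belong to the medical domain (whereas the remaining two terms are not medical terms).

More precisely, I want to divide my concepts into medical and non-medical. However, it is very difficult to divide the concepts using the categories alone. For example, even though the two concepts enzyme inhibitor and bypass surgery are in the medical domain, their categories are very different from each other.

Therefore, I would like to know whether there is a way to obtain the parent category of the categories (e.g. the categories of enzyme inhibitor and bypass surgery belong to the medical parent category).

I am currently using pymediawiki and pywikibot. However, I am not restricted to those two libraries and am happy to have solutions using other libraries as well.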

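EDIT

As suggested by @IlmariKaronen, I am also using the categories of categories, and the results I got are as follows (the small font next to each category shows the categories of that category).

However, I still could not find a way to use these category details to decide whether a given term is medical or non-medical.

Moreover, as pointed out by @IlmariKaronen, using WikiProject details could be a possible approach. However, the Medicine WikiProject does not seem to contain all medical terms, so we would also need to check other WikiProjects.

**EDIT:** My current code for extracting categories from Wikipedia concepts is as follows. This can be done with either pywikibot or pymediawiki, as shown below.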

1. Using the library pymediawiki

```python
from mediawiki import MediaWiki

wikipedia = MediaWiki()
p = wikipedia.page('enzyme inhibitor')
print(p.categories)
```

2. Using the library `pywikibot`

```python
import pywikibot as pw

site = pw.Site('en', 'wikipedia')
print([
    cat.title()
    for cat in pw.Page(site, 'support-vector machine').categories()
    # Skip hidden maintenance categories
    if 'hidden' not in cat.categoryinfo
])
```

The categories of categories can be obtained in the same way, as shown in @IlmariKaronen's answer.
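One way to turn the categories of categories into a medical/non-medical signal is a depth-limited breadth-first walk up the category graph that checks whether a chosen category is reached. The sketch below is a hypothetical helper, not part of pywikibot or pymediawiki: the parent lookup is passed in as a function so it can be tried offline, and the target category (e.g. `Category:Medicine`) and the depth limit are assumptions you would have to tune.

```python
from collections import deque
from typing import Callable, Iterable


def reaches_category(
    start_categories: Iterable[str],
    target: str,
    get_parents: Callable[[str], Iterable[str]],
    max_depth: int = 3,
) -> bool:
    """Return True if `target` is reachable from `start_categories`
    by following parent-category links at most `max_depth` times."""
    seen = set(start_categories)
    queue = deque((category, 0) for category in seen)
    while queue:
        category, depth = queue.popleft()
        if category == target:
            return True
        if depth == max_depth:
            continue  # do not expand past the depth limit
        for parent in get_parents(category):
            if parent not in seen:  # the category graph contains cycles
                seen.add(parent)
                queue.append((parent, depth + 1))
    return False


# Hypothetical, hand-written parent links used only to exercise the function
toy_parents = {
    "Category:Enzyme inhibitors": ["Category:Medicinal chemistry"],
    "Category:Medicinal chemistry": ["Category:Medicine", "Category:Enzyme inhibitors"],
}
lookup = lambda title: toy_parents.get(title, [])
print(reaches_category(["Category:Enzyme inhibitors"], "Category:Medicine", lookup, max_depth=2))  # True
```

With pywikibot, the lookup could be `lambda title: [c.title() for c in pw.Category(site, title).categories()]`, reusing `site` from the snippet above. Keep `max_depth` small: the Wikipedia category graph is densely connected, so a deep walk reaches very general categories from almost anywhere.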

If you are looking for a longer list of concepts to test, I have listed more examples below.

['juvenile chronic arthritis', 'climate', 'alexidine', 'mouthrinse', 'sialosis', 'australia', 'artificial neural network', 'ricinoleic acid', 'bromosulfophthalein', 'myelosclerosis', 'hydrochloride salt', 'cycasin', 'aldosterone antagonist', 'fungal growth', 'describe', 'liver resection', 'coffee table', 'natural language processing', 'infratemporal fossa', 'social withdrawal', 'information retrieval', 'monday', 'menthol', 'overturn', 'prevailing', 'spline function', 'acinic cell carcinoma', 'furth', 'hepatic protein', 'blistering', 'prefixation', 'january', 'cardiopulmonary receptor', 'extracorporeal membrane oxygenation', 'clinodactyly', 'melancholic', 'chlorpromazine hydrochloride', 'level of evidence', 'washington state', 'cat', 'newyork', 'year elevan', 'trituration', 'gold alloy', 'hexoprenaline', 'second molar', 'novice', 'oxygen radical', 'subscription', 'ordinate', 'approximal', 'spongiosis', 'ribothymidine', 'body of evidence', 'vpb', 'porins', 'musculocutaneous']

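For a very long list, please check the link below. https://docs.google.com/document/d/1BYllMyDlw-Rb4uMh89VjLml2Bl9Y7oUlopM-Z4F6pN0/edit?usp=sharing

NOTE: I do not expect the solution to work 100% (if the proposed algorithm is able to detect many of the medical concepts, that is enough for me).

I am happy to provide more details if needed.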

Answer

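Solution overview

Okay, I would approach the problem from multiple directions. There are some great suggestions here, and if I were you I would use an ensemble of those approaches (majority voting: in your binary case, predict the label on which more than 50% of the classifiers agree).

I am thinking about the following approaches: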

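- Active learning (an example approach is provided by me below)
- MediaWiki backlinks, provided as an answer by @TavoGC
- SPARQL ancestor categories, provided by @Stanislav Kralin as a comment to your question, and/or parent categories provided by @Meena Nagarajan (those two could form an ensemble of their own based on their differences, but for that you would have to contact both creators and compare their results).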

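This way, 2 out of 3 approaches would have to agree that a certain concept is a medical one, which further minimizes the chance of an error.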
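The 2-out-of-3 rule is a plain majority vote over per-concept labels. A minimal sketch, assuming each method has already produced a list of True/False labels aligned with the same concept order (the method names below are placeholders):

```python
from typing import Dict, List, Sequence


def majority_vote(votes: Sequence[bool]) -> bool:
    """True if strictly more than half of the votes say 'medical'."""
    return sum(votes) * 2 > len(votes)


def ensemble_labels(predictions: Dict[str, Sequence[bool]]) -> List[bool]:
    """Combine the per-method labels concept by concept."""
    per_concept = zip(*predictions.values())  # one tuple of votes per concept
    return [majority_vote(votes) for votes in per_concept]


# Placeholder predictions for three concepts from three methods
predictions = {
    "active_learning": [True, False, True],
    "backlinks": [True, True, False],
    "ancestor_categories": [False, False, True],
}
print(ensemble_labels(predictions))  # [True, False, True]
```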

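While we're at it, I would argue against the approach presented by @anand_v.singh in this answer, because:

- the distance metric should not be Euclidean; cosine similarity is a much better metric (used by e.g. spaCy), as it does not take the magnitude of the vectors into account (and it shouldn't, that's how word2vec or GloVe were trained)
- if I understood correctly, many artificial clusters would be created, while we only need two: medicine and non-medicine. Furthermore, the centroid of the medicine cluster is not centered on medicine itself. This poses additional problems: say the centroid moves far away from medicine; then other words like computer or human (or any other word that, in your opinion, does not fit into medicine) might get into the cluster.
- it is hard to evaluate the results; moreover, the matter is strictly subjective. Furthermore, word vectors are hard to visualize and understand (casting them into lower dimensions [2D/3D] with PCA/t-SNE/similar for so many words would give us totally nonsensical results [yes, I have tried it: PCA gets around 5% explained variance for your longer dataset, which is really, really low]).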
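To make the first point concrete, here is a small NumPy sketch showing that cosine similarity ignores vector magnitude while Euclidean distance does not. The 3-dimensional vectors are made up for illustration and are not real embeddings.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def euclidean_distance(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.linalg.norm(a - b))


# Toy vectors, made up for illustration (not real word embeddings)
word = np.array([1.0, 2.0, 0.5])
same_direction = 3 * word  # identical direction, three times longer

print(cosine_similarity(word, same_direction))   # ~1.0: magnitude is ignored
print(euclidean_distance(word, same_direction))  # ~4.58: magnitude dominates
```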

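Based on the problems highlighted above, I have come up with a solution using [active learning](https://en.wikipedia.org/wiki/Active_learning_(machine_learning)), which is a rather forgotten approach to such problems.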

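Active learning approach

In this subset of machine learning, when we have a hard time coming up with an exact algorithm (like what it means for a term to be part of the medical category), we ask a human "expert" (who doesn't actually have to be an expert) to provide some answers.

Knowledge encoding

As anand_v.singh pointed out, word vectors are one of the most promising approaches, and I will use them here as well (differently though, and IMO in a much cleaner and easier fashion).

I am not going to repeat his points in my answer, so I will just add my two cents: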

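- Do not use contextualized word embeddings such as the current state of the art (e.g. BERT)
- Check how many of your concepts have no representation (e.g. are represented as a vector of zeros). This should be checked (it is checked in my code, with further discussion later on), and you may want to use the embedding in which most of them are present.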

Measuring similarity using spaCy

This class measures the similarity between medicine, encoded as spaCy's GloVe word vector, and every other concept.

```python
import typing

import numpy as np


class Similarity:
    def __init__(self, centroid, nlp, n_threads: int, batch_size: int):
        # In our case it will be medicine
        self.centroid = centroid

        # spaCy's Language model (english), which will be used to return similarity to
        # centroid of each concept
        self.nlp = nlp
        self.n_threads: int = n_threads
        self.batch_size: int = batch_size

        self.missing: typing.List[int] = []

    def __call__(self, concepts):
        concepts_similarity = []
        # nlp.pipe is faster for many documents and can work in parallel (not blocked by GIL)
        for i, concept in enumerate(
            self.nlp.pipe(
                concepts, n_threads=self.n_threads, batch_size=self.batch_size
            )
        ):
            # Doc.has_vector is True whenever the model ships vectors at all,
            # so check the norm: it is 0 when none of the words has a vector
            if concept.vector_norm:
                concepts_similarity.append(self.centroid.similarity(concept))
            else:
                # If document has no vector, it's assumed to be totally dissimilar to centroid
                concepts_similarity.append(-1)
                self.missing.append(i)

        return np.array(concepts_similarity)
```

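This code returns a number for each concept, measuring how similar it is to the centroid. Furthermore, it records the indices of concepts missing their representation. It might be called like this: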

```python
import json
import typing

import numpy as np
import spacy

nlp = spacy.load("en_vectors_web_lg")
centroid = nlp("medicine")

# concepts_new.txt holds a JSON list of concept strings
with open("concepts_new.txt") as f:
    concepts = json.load(f)

concepts_similarity = Similarity(centroid, nlp, n_threads=-1, batch_size=4096)(
    concepts
)
```

You can substitute your own data for concepts_new.txt.

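Look at spacy.load and notice that I have used en_vectors_web_lg. It consists of 685,000 unique word vectors (which is a lot) and may work out of the box for your case. You have to download it separately after installing spaCy; see the spaCy documentation on models for details (the code in this answer targets spaCy 2.x, where this model is available).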

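Additionally, you may want to use multiple centroid words, e.g. add words like disease or health and average their word vectors. I'm not sure whether that would affect your case positively, though.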
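Averaging the centroid words is straightforward once you have their vectors. With spaCy, `nlp("medicine disease health")` already does this, since a `Doc`'s vector defaults to the average of its token vectors. The self-contained sketch below shows the same idea with plain NumPy; the helper names and the three-dimensional vectors are made up for illustration.

```python
from typing import Dict, Sequence

import numpy as np


def averaged_centroid(words: Sequence[str], vectors: Dict[str, np.ndarray]) -> np.ndarray:
    """Mean vector of the centroid words that have a known vector."""
    known = [vectors[word] for word in words if word in vectors]
    if not known:
        raise ValueError("none of the centroid words has a vector")
    return np.mean(known, axis=0)


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Made-up toy vectors standing in for real word embeddings
toy_vectors = {
    "medicine": np.array([1.0, 0.0, 0.0]),
    "disease": np.array([0.0, 1.0, 0.0]),
    "health": np.array([1.0, 1.0, 0.0]),
}
centroid = averaged_centroid(["medicine", "disease", "health"], toy_vectors)
print(centroid)
print(cosine(centroid, toy_vectors["disease"]))
```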

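Another possibility might be to use multiple centroids and calculate the similarity between each concept and each of the centroids. In that case we might have a few thresholds, which would likely remove some false positives, but may miss some terms that one could consider similar to medicine. It would also complicate things considerably, so if your results are unsatisfactory, try the two options above first, and only if those fail as well consider this one; don't jump into this approach without prior thought.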
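One way to read the multiple-centroid idea is to give each centroid its own threshold and call a concept medical only if it clears all of them. That is stricter: fewer false positives, but more missed terms. A minimal sketch under that assumption; the helper name, centroid names, vectors and thresholds are all made up:

```python
from typing import Dict

import numpy as np


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def passes_all_centroids(
    concept: np.ndarray,
    centroids: Dict[str, np.ndarray],
    thresholds: Dict[str, float],
) -> bool:
    """True only if the concept is similar enough to every centroid."""
    return all(
        cosine(concept, vector) >= thresholds[name]
        for name, vector in centroids.items()
    )


# Made-up 2-dimensional centroids and per-centroid thresholds
centroids = {"medicine": np.array([1.0, 0.0]), "disease": np.array([0.8, 0.6])}
thresholds = {"medicine": 0.5, "disease": 0.5}

print(passes_all_centroids(np.array([1.0, 0.2]), centroids, thresholds))  # True
print(passes_all_centroids(np.array([0.0, 1.0]), centroids, thresholds))  # False
```

Replacing `all` with `any` gives the looser variant (more recall, more false positives).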

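Now we have a rough measure of concept similarity. But what does it mean that a certain concept has a positive similarity of 0.1 to medicine? Is it a concept one should classify as medical? Or is that already too far away?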

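Asking the expert

To get a threshold (below which terms will be considered non-medical), the easiest way is to ask a human to classify some of the concepts for us (and that is what active learning is about). Yes, I know this is a really simple form of active learning, but I would consider it as such anyway.

I have written a class with an sklearn-like interface that asks a human to classify concepts until the optimal threshold (or the maximum number of iterations) is reached.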

```python
import typing

import numpy as np


class ActiveLearner:
    def __init__(
        self,
        concepts,
        concepts_similarity,
        samples: int,
        max_steps: int,
        step: float = 0.05,
        change_multiplier: float = 0.7,
    ):
        # Sort concepts from the most to the least similar
        concepts_similarity = np.asarray(concepts_similarity)
        sorting_indices = np.argsort(-concepts_similarity)
        self.concepts = np.asarray(concepts)[sorting_indices]
        self.concepts_similarity = concepts_similarity[sorting_indices]

        self.samples: int = samples
        self.max_steps: int = max_steps
        self.step: float = step
        self.change_multiplier: float = change_multiplier

        # We don't have to ask experts for the same concepts
        self._checked_concepts: typing.Set[int] = set()
        # Minimum similarity between vectors is -1
        self._min_threshold: float = -1
        # Maximum similarity between vectors is 1
        self._max_threshold: float = 1

        # Let's start from the highest similarity to ensure minimum amount of steps
        self.threshold_: float = 1
```

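- `samples` describes how many examples will be shown to an expert during each iteration (it is a maximum; fewer are shown if samples were already presented or there are not enough of them to show).
- `step` is the drop of the threshold in each iteration (we start at 1, meaning perfect similarity).
- `change_multiplier`: if the expert answers that the concepts are not related (or mostly unrelated, as several of them are shown), `step` is multiplied by this float. It is used to pinpoint the exact threshold between `step` changes in every iteration.
- Concepts are sorted by their similarity (the more similar a concept is, the higher it is on the list).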

The method below asks the expert for an opinion, and the optimal threshold is then found based on their answers.

```python
    def _ask_expert(self, available_concepts_indices):
        # Shuffle the concepts above the threshold and drop those already shown to the expert
        candidates = [
            i
            for i in np.random.permutation(available_concepts_indices).tolist()
            if i not in self._checked_concepts
        ]
        # Show at most `samples` of them and remember only the ones actually shown
        concepts_to_show = candidates[: self.samples]
        self._checked_concepts.update(concepts_to_show)

        # Print message for an expert and concepts to be classified
        if concepts_to_show:
            print("\nAre those concepts related to medicine?\n")
            print(
                "\n".join(
                    f"{i}. {concept}"
                    for i, concept in enumerate(self.concepts[concepts_to_show])
                ),
                "\n",
            )
            return input("[y]es / [n]o / [any]quit ")
        # Nothing new to show: treat it as "yes" and keep lowering the threshold
        return "y"
```

An example question looks like this:

```
Are those concepts related to medicine?

0. anesthetic drug
1. child and adolescent psychiatry
2. tertiary care center
3. sex therapy
4. drug design
5. pain disorder
6. psychiatric rehabilitation
7. combined oral contraceptive
8. family practitioner committee
9. cancer family syndrome
10. social psychology
11. drug sale
12. blood system

[y]es / [n]o / [any]quit y
```

...and parsing the expert's answer:

```python
    # True - keep asking, False - stop the algorithm
    def _parse_expert_decision(self, decision) -> bool:
        if decision.lower() == "y":
            # You can't go higher as current threshold is related to medicine
            self._max_threshold = self.threshold_
            # Stop if lowering would reach a threshold already rejected by the expert
            if self.threshold_ - self.step <= self._min_threshold:
                return False
            # Lower the threshold
            self.threshold_ -= self.step
            return True

        if decision.lower() == "n":
            # You can't go lower than this, as current threshold is not related to medicine already
            self._min_threshold = self.threshold_
            # Shrink the step to pinpoint the exact spot
            self.step *= self.change_multiplier
            if self.threshold_ + self.step >= self._max_threshold:
                # Fall back to the lowest threshold the expert accepted
                self.threshold_ = self._max_threshold
                return False
            # Raise the threshold
            self.threshold_ += self.step
            return True

        return False
```

And finally, the complete code of ActiveLearner, which finds the optimal similarity threshold according to the expert:

```python
class ActiveLearner:
    def __init__(
        self,
        concepts,
        concepts_similarity,
        samples: int,
        max_steps: int,
        step: float = 0.05,
        change_multiplier: float = 0.7,
    ):
        # Sort concepts from the most to the least similar
        concepts_similarity = np.asarray(concepts_similarity)
        sorting_indices = np.argsort(-concepts_similarity)
        self.concepts = np.asarray(concepts)[sorting_indices]
        self.concepts_similarity = concepts_similarity[sorting_indices]

        self.samples: int = samples
        self.max_steps: int = max_steps
        self.step: float = step
        self.change_multiplier: float = change_multiplier

        # We don't have to ask experts for the same concepts
        self._checked_concepts: typing.Set[int] = set()
        # Minimum similarity between vectors is -1
        self._min_threshold: float = -1
        # Maximum similarity between vectors is 1
        self._max_threshold: float = 1

        # Let's start from the highest similarity to ensure minimum amount of steps
        self.threshold_: float = 1

    def _ask_expert(self, available_concepts_indices):
        # Shuffle the concepts above the threshold and drop those already shown to the expert
        candidates = [
            i
            for i in np.random.permutation(available_concepts_indices).tolist()
            if i not in self._checked_concepts
        ]
        # Show at most `samples` of them and remember only the ones actually shown
        concepts_to_show = candidates[: self.samples]
        self._checked_concepts.update(concepts_to_show)

        # Print message for an expert and concepts to be classified
        if concepts_to_show:
            print("\nAre those concepts related to medicine?\n")
            print(
                "\n".join(
                    f"{i}. {concept}"
                    for i, concept in enumerate(self.concepts[concepts_to_show])
                ),
                "\n",
            )
            return input("[y]es / [n]o / [any]quit ")
        # Nothing new to show: treat it as "yes" and keep lowering the threshold
        return "y"

    # True - keep asking, False - stop the algorithm
    def _parse_expert_decision(self, decision) -> bool:
        if decision.lower() == "y":
            # You can't go higher as current threshold is related to medicine
            self._max_threshold = self.threshold_
            # Stop if lowering would reach a threshold already rejected by the expert
            if self.threshold_ - self.step <= self._min_threshold:
                return False
            # Lower the threshold
            self.threshold_ -= self.step
            return True

        if decision.lower() == "n":
            # You can't go lower than this, as current threshold is not related to medicine already
            self._min_threshold = self.threshold_
            # Shrink the step to pinpoint the exact spot
            self.step *= self.change_multiplier
            if self.threshold_ + self.step >= self._max_threshold:
                # Fall back to the lowest threshold the expert accepted
                self.threshold_ = self._max_threshold
                return False
            # Raise the threshold
            self.threshold_ += self.step
            return True

        return False

    def fit(self):
        for _ in range(self.max_steps):
            available_concepts_indices = np.nonzero(
                self.concepts_similarity >= self.threshold_
            )[0]
            if available_concepts_indices.size != 0:
                decision = self._ask_expert(available_concepts_indices)
                if not self._parse_expert_decision(decision):
                    break
            else:
                # No concept is similar enough yet: lower the threshold without asking
                self.threshold_ -= self.step

        return self
```
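To see how `step` and `change_multiplier` interact without typing answers, the sketch below reimplements a simplified, non-interactive variant of the same search. It is not the class above: the human is replaced by a hypothetical `expert` callback that receives every concept at or above the current threshold (not a random subset), and the function returns the lowest threshold that was accepted. The similarities and labels in the example are made up.

```python
from typing import Callable, List, Sequence


def find_threshold(
    similarities: Sequence[float],
    expert: Callable[[List[int]], bool],
    step: float = 0.05,
    change_multiplier: float = 0.7,
    max_steps: int = 50,
) -> float:
    """Simplified threshold search driven by a simulated expert."""
    low, high = -1.0, 1.0  # last rejected / last accepted thresholds
    threshold = 1.0
    for _ in range(max_steps):
        shown = [i for i, s in enumerate(similarities) if s >= threshold]
        if expert(shown):  # an empty batch counts as "yes", like `_ask_expert`
            high = threshold  # accepted: try going lower
            if threshold - step <= low:
                break
            threshold -= step
        else:
            low = threshold  # rejected: go back up with a smaller step
            step *= change_multiplier
            if threshold + step >= high:
                break
            threshold += step
    return high


# Made-up similarities to "medicine" and the labels a simulated expert "knows"
similarities = [0.92, 0.71, 0.48, 0.27, 0.12]
is_medical = [True, True, True, False, False]

threshold = find_threshold(similarities, lambda shown: all(is_medical[i] for i in shown))
print(threshold)  # somewhere between 0.27 and 0.48
```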

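All in all, you have to answer some questions manually, but this approach is much more accurate in my opinion.

Furthermore, you don't have to go through all of the samples, just a small subset of them. You may decide how many samples constitute a medical term (should 40 medical samples and 10 non-medical samples shown still be considered medical?), which lets you fine-tune this approach to your preferences. If there is an outlier (say, 1 sample out of 50 is non-medical), I would consider the threshold to still be valid.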
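If you would rather have the expert label each shown concept individually instead of giving one y/n per batch (an assumption: `_ask_expert` above asks for a single answer), the tolerance described here becomes a ratio check. `batch_is_medical` and `min_ratio` are hypothetical names; the ratio is something you would pick yourself.

```python
from typing import Sequence


def batch_is_medical(labels: Sequence[bool], min_ratio: float = 0.9) -> bool:
    """Accept the batch if at least `min_ratio` of the shown concepts are medical."""
    if not labels:
        return True  # nothing to judge, mirrors `_ask_expert` returning "y"
    return sum(labels) / len(labels) >= min_ratio


one_outlier = [True] * 49 + [False]     # 1 non-medical sample out of 50
forty_ten = [True] * 40 + [False] * 10  # 40 medical, 10 non-medical

print(batch_is_medical(one_outlier))      # True  (0.98 >= 0.9)
print(batch_is_medical(forty_ten))        # False (0.8 < 0.9)
print(batch_is_medical(forty_ten, 0.75))  # True  (0.8 >= 0.75)
```

The boolean result can be mapped to the "y"/"n" string that `_parse_expert_decision` expects.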

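**Once again:** this approach should be mixed with the other ones in order to minimize the chance of misclassification.

Classifier

Once we have the threshold from the expert, classification is instantaneous. Here is a simple class for it: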

```python
class Classifier:
    def __init__(self, centroid, threshold: float):
        self.centroid = centroid
        self.threshold: float = threshold

    def predict(self, concepts_pipe):
        predictions = []
        for concept in concepts_pipe:
            # Similarity in [threshold, 1] means medical
            predictions.append(self.centroid.similarity(concept) >= self.threshold)
        return predictions
```

For completeness, here is the final source code:

```python
import json
import typing

import numpy as np
import spacy


class Similarity:
    def __init__(self, centroid, nlp, n_threads: int, batch_size: int):
        # In our case it will be medicine
        self.centroid = centroid

        # spaCy's Language model (english), which will be used to return similarity to
        # centroid of each concept
        self.nlp = nlp
        self.n_threads: int = n_threads
        self.batch_size: int = batch_size

        self.missing: typing.List[int] = []

    def __call__(self, concepts):
        concepts_similarity = []
        # nlp.pipe is faster for many documents and can work in parallel (not blocked by GIL)
        for i, concept in enumerate(
            self.nlp.pipe(
                concepts, n_threads=self.n_threads, batch_size=self.batch_size
            )
        ):
            # Doc.has_vector is True whenever the model ships vectors at all,
            # so check the norm: it is 0 when none of the words has a vector
            if concept.vector_norm:
                concepts_similarity.append(self.centroid.similarity(concept))
            else:
                # If document has no vector, it's assumed to be totally dissimilar to centroid
                concepts_similarity.append(-1)
                self.missing.append(i)

        return np.array(concepts_similarity)


class ActiveLearner:
    def __init__(
        self,
        concepts,
        concepts_similarity,
        samples: int,
        max_steps: int,
        step: float = 0.05,
        change_multiplier: float = 0.7,
    ):
        # Sort concepts from the most to the least similar
        concepts_similarity = np.asarray(concepts_similarity)
        sorting_indices = np.argsort(-concepts_similarity)
        self.concepts = np.asarray(concepts)[sorting_indices]
        self.concepts_similarity = concepts_similarity[sorting_indices]

        self.samples: int = samples
        self.max_steps: int = max_steps
        self.step: float = step
        self.change_multiplier: float = change_multiplier

        # We don't have to ask experts for the same concepts
        self._checked_concepts: typing.Set[int] = set()
        # Minimum similarity between vectors is -1
        self._min_threshold: float = -1
        # Maximum similarity between vectors is 1
        self._max_threshold: float = 1

        # Let's start from the highest similarity to ensure minimum amount of steps
        self.threshold_: float = 1

    def _ask_expert(self, available_concepts_indices):
        # Shuffle the concepts above the threshold and drop those already shown to the expert
        candidates = [
            i
            for i in np.random.permutation(available_concepts_indices).tolist()
            if i not in self._checked_concepts
        ]
        # Show at most `samples` of them and remember only the ones actually shown
        concepts_to_show = candidates[: self.samples]
        self._checked_concepts.update(concepts_to_show)

        # Print message for an expert and concepts to be classified
        if concepts_to_show:
            print("\nAre those concepts related to medicine?\n")
            print(
                "\n".join(
                    f"{i}. {concept}"
                    for i, concept in enumerate(self.concepts[concepts_to_show])
                ),
                "\n",
            )
            return input("[y]es / [n]o / [any]quit ")
        # Nothing new to show: treat it as "yes" and keep lowering the threshold
        return "y"

    # True - keep asking, False - stop the algorithm
    def _parse_expert_decision(self, decision) -> bool:
        if decision.lower() == "y":
            # You can't go higher as current threshold is related to medicine
            self._max_threshold = self.threshold_
            # Stop if lowering would reach a threshold already rejected by the expert
            if self.threshold_ - self.step <= self._min_threshold:
                return False
            # Lower the threshold
            self.threshold_ -= self.step
            return True

        if decision.lower() == "n":
            # You can't go lower than this, as current threshold is not related to medicine already
            self._min_threshold = self.threshold_
            # Shrink the step to pinpoint the exact spot
            self.step *= self.change_multiplier
            if self.threshold_ + self.step >= self._max_threshold:
                # Fall back to the lowest threshold the expert accepted
                self.threshold_ = self._max_threshold
                return False
            # Raise the threshold
            self.threshold_ += self.step
            return True

        return False

    def fit(self):
        for _ in range(self.max_steps):
            available_concepts_indices = np.nonzero(
                self.concepts_similarity >= self.threshold_
            )[0]
            if available_concepts_indices.size != 0:
                decision = self._ask_expert(available_concepts_indices)
                if not self._parse_expert_decision(decision):
                    break
            else:
                # No concept is similar enough yet: lower the threshold without asking
                self.threshold_ -= self.step

        return self


class Classifier:
    def __init__(self, centroid, threshold: float):
        self.centroid = centroid
        self.threshold: float = threshold

    def predict(self, concepts_pipe):
        predictions = []
        for concept in concepts_pipe:
            # Similarity in [threshold, 1] means medical
            predictions.append(self.centroid.similarity(concept) >= self.threshold)
        return predictions


if __name__ == "__main__":
    nlp = spacy.load("en_vectors_web_lg")
    centroid = nlp("medicine")

    # concepts_new.txt holds a JSON list of concept strings
    with open("concepts_new.txt") as f:
        concepts = json.load(f)

    concepts_similarity = Similarity(centroid, nlp, n_threads=-1, batch_size=4096)(
        concepts
    )

    learner = ActiveLearner(
        np.array(concepts), concepts_similarity, samples=20, max_steps=50
    ).fit()
    print(f"Found threshold {learner.threshold_}\n")

    classifier = Classifier(centroid, learner.threshold_)
    pipe = nlp.pipe(concepts, n_threads=-1, batch_size=4096)
    predictions = classifier.predict(pipe)
    print(
        "\n".join(
            f"{concept}: {label}"
            for concept, label in zip(concepts[20:40], predictions[20:40])
        )
    )
```

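After answering some questions, I got a threshold of 0.1 (everything in [-1, 0.1) is considered non-medical, while [0.1, 1] is considered medical) and the following results: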

```
kartagener s syndrome: True
summer season: True
taq: False
atypical neuroleptic: True
anterior cingulate: False
acute respiratory distress syndrome: True
circularity: False
mutase: False
adrenergic blocking drug: True
systematic desensitization: True
the turning point: True
9l: False
pyridazine: False
bisoprolol: False
trq: False
propylhexedrine: False
type 18: True
darpp 32: False
rickettsia conorii: False
sport shoe: True
```

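As you can see, this approach is far from perfect, so the last section describes possible improvements:

Possible improvements

As mentioned at the beginning, mixing my approach with the other answers would probably weed out ideas like sport shoe belonging to medicine, and the active learning approach would act more as a deciding vote in case of a draw between the two heuristics mentioned above.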

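We could create an active learning ensemble as well. Instead of one threshold, say 0.1, we would use several of them (either increasing or decreasing); let's say those are 0.1, 0.2, 0.3, 0.4, 0.5.

Let's say sport shoe gets, for each threshold, its respective True/False like this:

`True True False False False`

Taking a majority vote, we would mark it non-medical by 3 votes to 2. Furthermore, an overly strict threshold would be mitigated if the thresholds below it outvote it (the case where True/False looks like this: `True True True False False`).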
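The threshold ensemble can be sketched as one vote per threshold followed by a strict majority. The thresholds are the example values from the text; the function names and the similarity values are made up for illustration.

```python
from typing import List, Sequence


def threshold_votes(similarity: float, thresholds: Sequence[float]) -> List[bool]:
    """One vote per threshold: is the similarity at or above it?"""
    return [similarity >= threshold for threshold in thresholds]


def ensemble_is_medical(similarity: float, thresholds: Sequence[float]) -> bool:
    votes = threshold_votes(similarity, thresholds)
    return sum(votes) * 2 > len(votes)  # strict majority


thresholds = [0.1, 0.2, 0.3, 0.4, 0.5]
print(threshold_votes(0.25, thresholds))      # [True, True, False, False, False]
print(ensemble_is_medical(0.25, thresholds))  # False, 2 votes against 3
print(ensemble_is_medical(0.35, thresholds))  # True, 3 votes against 2
```

When every threshold is applied to the same similarity score, as here, the vote is equivalent to comparing against the median threshold (0.3 in this example), so the ensemble is most useful when the votes come from different similarity scores or separately trained learners.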

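The final possible improvement I came up with: in the code above I am using Doc vectors, which are the mean of the word vectors making up the concept. Say one word is missing (a vector consisting of zeros); in that case the concept would be pushed further away from the medicine centroid. You may not want that (as some niche medical terms [abbreviations like gpv or others] might be missing their representation), in which case you could average only those vectors that are non-zero.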
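Averaging only the non-zero word vectors could look like the sketch below. It works on a plain NumPy matrix with one row per word (`mean_of_nonzero` is a hypothetical helper); with spaCy you would build that matrix from `token.vector` for the tokens of a `Doc`. The example vectors are made up.

```python
import numpy as np


def mean_of_nonzero(word_vectors: np.ndarray) -> np.ndarray:
    """Average the rows (word vectors) that are not all zeros."""
    known = word_vectors[np.any(word_vectors != 0, axis=1)]
    if len(known) == 0:
        # No word has a representation: return a zero vector of the same size
        return np.zeros(word_vectors.shape[1])
    return known.mean(axis=0)


# Made-up 2-d vectors: the middle word is missing from the vocabulary
vectors = np.array([[1.0, 2.0], [0.0, 0.0], [3.0, 4.0]])
print(vectors.mean(axis=0))      # plain mean, pulled toward the origin
print(mean_of_nonzero(vectors))  # mean of the two known words only
```

The resulting vector can then be compared with the centroid's vector using cosine similarity, instead of calling `Doc.similarity`.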

I know this post is quite lengthy, so if you have any questions, post them below.
