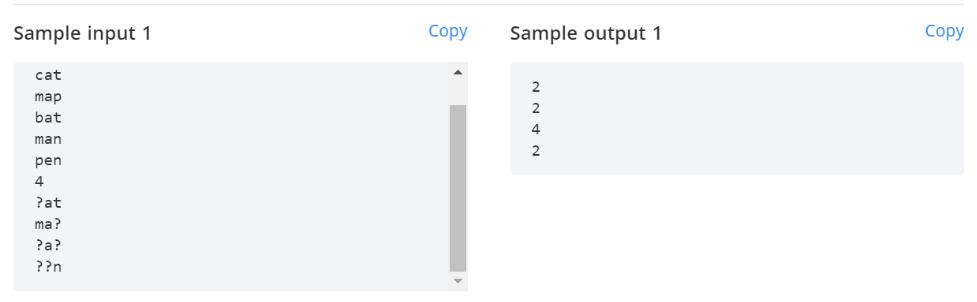

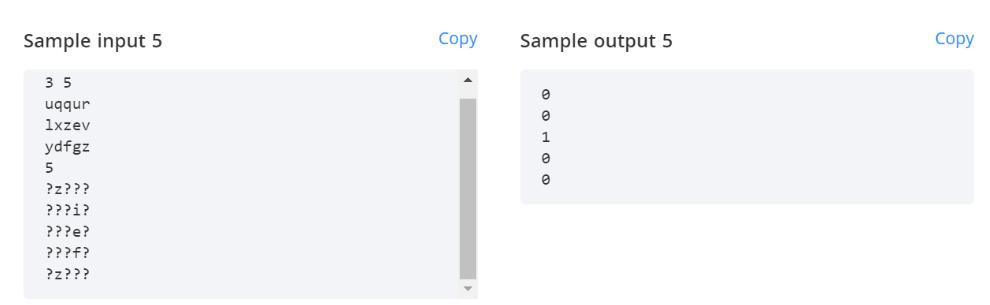

I got the following problem for the Google Coding Challenge which happened on 16th August 2020. I tried to solve it but couldn't.

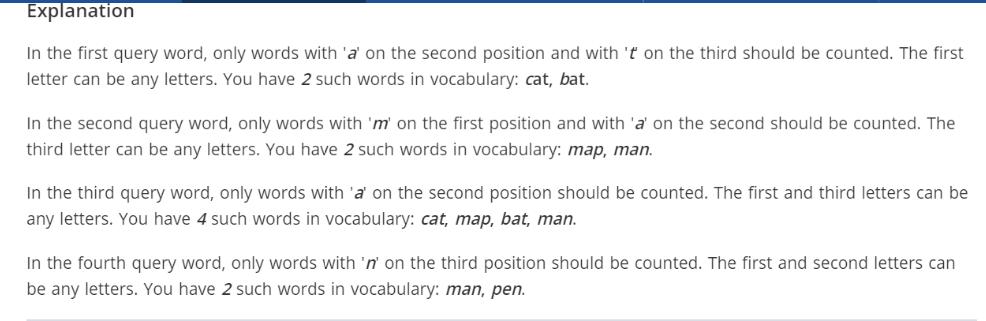

There are N words in a dictionary such that each word is of fixed length and M consists only of lowercase English letters, that is ('a', 'b', ...,'z')

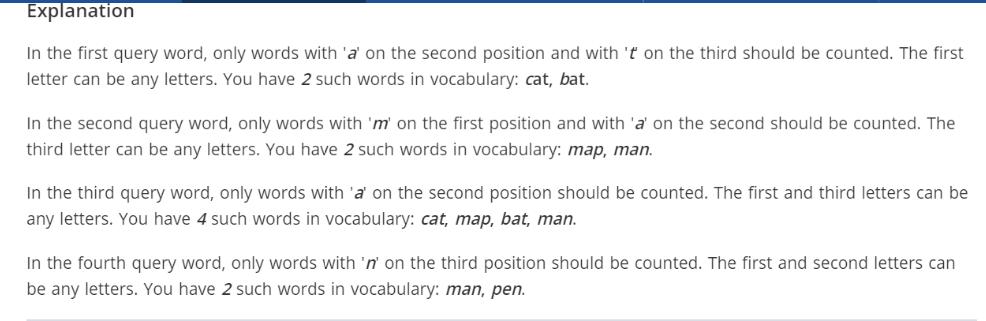

A query word is denoted by Q. The length of query word is M. These words contain lowercase English letters but at some places instead of a letter between 'a', 'b', ...,'z' there is '?'. Refer to the Sample input section to understand this case.

A match count of Q, denoted by match_count(Q) is the count of words that are in the dictionary and contain the same English letters(excluding a letter that can be in the position of ?) in the same position as the letters are there in the query word Q. In other words, a word in the dictionary can contain any letters at the position of '?' but the remaining alphabets must match with the query word.

You are given a query word Q and you are required to compute match_count.

Input Format

- The first line contains two space-separated integers

N and M denoting the number of words in the dictionary and length of each word respectively.

- The next

N lines contain one word each from the dictionary.

- The next line contains an integer Q denoting the number of query words for which you have to compute match_count.

- The next

Q lines contain one query word each.

Output Format

For each query word, print match_count for a specific word in a new line.

Constraints

1 <= N <= 5X10^4

1 <= M <= 7

1 <= Q <= 10^5

So, I got 30 minutes for this question and I could write the following code which is incorrect and hence didn't give the expected output.

def Solve(N, M, Words, Q, Query):

output = []

count = 0

for i in range(Q):

x = Query[i].split('?')

for k in range(N):

if x in Words:

count += 1

else:

pass

output.append(count)

return output

N, M = map(int , input().split())

Words = []

for _ in range(N):

Words.append(input())

Q = int(input())

Query = []

for _ in range(Q):

Query.append(input())

out = Solve(N, M, Words, Q, Query)

for x in out_:

print(x)

Can somebody help me with some pseudocode or algorithm which can solve this problem, please?

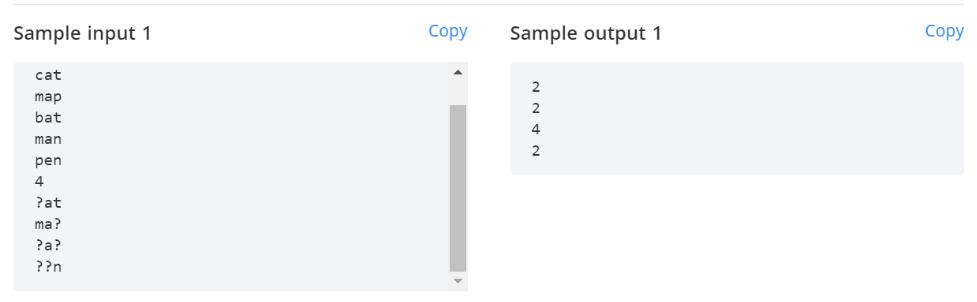

I guess my first try would have been to replace the ? with a . in the query, i.e. change ?at to .at, and then use those as regular expressions and match them against all the words in the dictionary, something as simple as this:

import re

for q in queries:

p = re.compile(q.replace("?", "."))

print(sum(1 for w in words if p.match(w)))

However, seeing the input sizes as N up to 5x104 and Q up to 105, this might be too slow, just as any other algorithm comparing all pairs of words and queries.

On the other hand, note that M, the number of letters per word, is constant and rather low. So instead, you could create Mx26 sets of words for all letters in all positions and then get the intersection of those sets.

from collections import defaultdict

from functools import reduce

M = 3

words = ["cat", "map", "bat", "man", "pen"]

queries = ["?at", "ma?", "?a?", "??n"]

sets = defaultdict(set)

for word in words:

for i, c in enumerate(word):

sets[i,c].add(word)

all_words = set(words)

for q in queries:

possible_words = (sets[i,c] for i, c in enumerate(q) if c != "?")

w = reduce(set.intersection, possible_words, all_words)

print(q, len(w), w)

In the worst case (a query that has a non-? letter that is common to most or all words in the dictionary) this may still be slow, but should be much faster in filtering down the words than iterating all the words for each query. (Assuming random letters in both words and queries, the set of words for the first letter will contain N/26 words, the intersection for the first two has N/26² words, etc.)

This could probably be improved a bit by taking the different cases into account, e.g. (a) if the query does not contain any ?, just check whether it is in the set (!) of words without creating all those intersections; (b) if the query is all-?, just return the set of all words; and (c) sort the possible-words-sets by size and start the intersection with the smallest sets first to reduce the size of temporarily created sets.

About time complexity: To be honest, I am not sure what time complexity this algorithm has. With N, Q, and M being the number of words, number of queries, and length of words and queries, respectively, creating the initial sets will have complexity O(N*M). After that, the complexity of the queries obviously depends on the number of non-? in the queries (and thus the number of set intersections to create), and the average size of the sets. For queries with zero, one, or M non-? characters, the query will execute in O(M) (evaluating the situation and then a single set/dict lookup), but for queries with two or more non-?-characters, the first set intersections will have on average complexity O(N/26), which strictly speaking is still O(N). (All following intersections will only have to consider N/26², N/26³ etc. elements and are thus negligible.) I don't know how this compares to The Trie Approach and would be very interested if any of the other answers could elaborate on that.

已为社区贡献126445条内容

已为社区贡献126445条内容

所有评论(0)