One of the biggest challenges I have faced while working on projects is that updating the tools on my work machine changes the state of the entire system and sometimes breaks something unrelated, which drives me crazy every time. As a fan of both software development and embedded systems, I decided to combine the two disciplines and use virtualization to solve this issue by building an isolated environment for embedded systems development.

The traditional way to do this is to create a virtual machine, install Linux, install the packages we need for cross-compiling, edit the code on the host machine, switch to the VM, compile, and repeat the process every time. This is slow, heavy, and no fun.

So why use Docker?

Because it offers an efficient, fast way to move applications across systems and machines. Containers share the host's kernel instead of booting a full guest operating system, so they are light and lean, letting us quickly containerize applications and run each one in its own isolated environment.

Background:

My goal is to set up an isolated, repeatable Docker environment for developing STM32 applications that bundles all the required tools and the automation we use, plus a directory shared between the host file system and the container, so that we no longer have to copy the code into a VM and restart it every time.

Once all of this is ready, we can quickly start a development environment on our machine without having to worry about finding, installing, and configuring project dependencies every time we need it.

Our final result features:

- Docker build files and an Ubuntu 14.04 LTS image;
- GNU ARM toolchain;
- GDB for debugging the application;
- OpenOCD as a GDB server;
- An example LED blink project for the STM32F4.

The project was originally cloned from here.

Let's start

Before we start, you must set up Docker; you can find the installation instructions here, on the official Docker website. All the code for this tutorial is available on GitHub, so go clone or fork the project now to have everything you need to build, compile, and modify it.
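A quick way to confirm the prerequisites is a small shell check. This is a minimal sketch; the function name `check_prereqs` is hypothetical, and it only verifies that commands such as `docker` and `git` are on the `PATH`, not that the Docker daemon is actually running.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which required commands are missing from PATH.
# Returns 0 when all are present, 1 otherwise.
check_prereqs() {
    local missing=()
    local cmd
    for cmd in "$@"; do
        # command -v succeeds only if the command can be found
        command -v "$cmd" >/dev/null 2>&1 || missing+=("$cmd")
    done
    if ((${#missing[@]} > 0)); then
        echo "missing: ${missing[*]}"
        return 1
    fi
    return 0
}
```

Run it as `check_prereqs docker git` before cloning the project; it prints the names of any tools you still need to install.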

Write Dockerfile:

Here is the Dockerfile for our development container:

```dockerfile
# The first thing we need to do is define which image we want to build from.
# Here we use the 14.04 LTS (long-term support) version of Ubuntu from Docker Hub:
FROM ubuntu:14.04

LABEL maintainer="Mohamed Ali May <https://github.com/maydali28>"

# Keep apt-get from asking interactive questions during the build
ARG DEBIAN_FRONTEND=noninteractive

# Point apt at the Ubuntu mirror list instead of the default sources
RUN mv /etc/apt/sources.list /etc/apt/sources.list.old
RUN echo 'deb mirror://mirrors.ubuntu.com/mirrors.txt trusty main restricted universe multiverse' >> /etc/apt/sources.list
RUN echo 'deb mirror://mirrors.ubuntu.com/mirrors.txt trusty-updates main restricted universe multiverse' >> /etc/apt/sources.list
RUN echo 'deb mirror://mirrors.ubuntu.com/mirrors.txt trusty-backports main restricted universe multiverse' >> /etc/apt/sources.list
RUN echo 'deb mirror://mirrors.ubuntu.com/mirrors.txt trusty-security main restricted universe multiverse' >> /etc/apt/sources.list
RUN apt-get update -q

# Install software requirements
RUN apt-get install --no-install-recommends -y software-properties-common build-essential git symlinks expect

# Install build dependencies: remove any distribution-packaged ARM toolchain,
# then install the GNU ARM Embedded toolchain from its PPA
RUN apt-get purge -y binutils-arm-none-eabi \
    gcc-arm-none-eabi \
    gdb-arm-none-eabi \
    libnewlib-arm-none-eabi
RUN add-apt-repository -y ppa:team-gcc-arm-embedded/ppa
RUN apt-get update -q
# Show which toolchain version apt will pick
RUN apt-cache policy gcc-arm-none-eabi
RUN apt-get install --no-install-recommends -y gcc-arm-embedded

# Install debugging dependencies: the libraries and tools needed to build OpenOCD
RUN apt-get install --no-install-recommends -y \
    libhidapi-hidraw0 \
    libusb-0.1-4 \
    libusb-1.0-0 \
    libhidapi-dev \
    libusb-1.0-0-dev \
    libusb-dev \
    libtool \
    make \
    automake \
    pkg-config \
    autoconf \
    texinfo

# Build and install OpenOCD from its repository,
# with support for ST-LINK, J-Link, FTDI and CMSIS-DAP adapters
RUN cd /usr/src/ \
    && git clone --depth 1 https://github.com/ntfreak/openocd.git \
    && cd openocd \
    && ./bootstrap \
    && ./configure --enable-stlink --enable-jlink --enable-ftdi --enable-cmsis-dap \
    && make -j"$(nproc)" \
    && make install

# Remove directories we no longer need
# (files deleted here still occupy space in the earlier layers)
RUN rm -rf /usr/src/openocd \
    && rm -rf /var/lib/apt/lists/

# OpenOCD talks to the chip through a USB debug adapter; these udev rules
# grant non-root users access to the supported adapters (ST-LINK, FTDI, ...)
RUN cp /usr/local/share/openocd/contrib/60-openocd.rules /etc/udev/rules.d/60-openocd.rules

# Create a directory for our project and declare it as a volume,
# so it can be shared between the host and the container
RUN mkdir -p /usr/src/app
VOLUME ["/usr/src/app"]
WORKDIR /usr/src/app

# 4444 is OpenOCD's default telnet port (its GDB server listens on 3333 by default)
EXPOSE 4444
```
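The four `echo` lines in the Dockerfile above follow one pattern: the release codename, an optional pocket suffix, and the same component list. The sketch below makes that pattern explicit by printing the same entries for any codename. It is illustrative only and is not used by the build; the function name `sources_list` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the apt source entries the Dockerfile writes
# to /etc/apt/sources.list, for a given Ubuntu codename (default: trusty).
sources_list() {
    local codename="${1:-trusty}"
    local components="main restricted universe multiverse"
    local suffix
    # "" is the release itself; the others are the updates/backports/security pockets
    for suffix in "" "-updates" "-backports" "-security"; do
        printf 'deb mirror://mirrors.ubuntu.com/mirrors.txt %s%s %s\n' \
            "$codename" "$suffix" "$components"
    done
}
```

For example, `sources_list trusty` prints exactly the four lines the Dockerfile appends, one per pocket.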

A Dockerfile is a set of instructions for building an image. Each instruction becomes a build step, and steps that change the file system (RUN, COPY, ADD) commit a new layer. If a layer hasn't changed, it doesn't need to be rebuilt the next time the build runs; the cached layer is reused instead. This layering is one of the reasons why Docker builds are so fast.

Let’s go through a brief overview of the instructions used in this Dockerfile:

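- FROM tells Docker which base image to start from. Every image is built on top of a base image; in this case it is the official Ubuntu image.
- WORKDIR sets the working directory for subsequent instructions and the default directory when the container starts.
- RUN executes arbitrary commands during the build. Here it downloads and installs GCC for ARM, GDB to debug our application, and finally OpenOCD as a GDB server.
- VOLUME declares `/usr/src/app` as a mount point whose data lives outside the container's union file system. It does not copy anything from the host on its own; the host folder is attached when the container is started with `docker run -v`.
- ARG defines a build-time variable. During the build it is visible to RUN commands as an environment variable, but unlike ENV it is not kept in the final image.
- EXPOSE documents which port the container listens on. It does not publish the port by itself; that is done with `-p` when the container is run.

Note that the Dockerfile uses many separate RUN instructions. Each one produces its own layer, so when a line changes, only that step and the ones after it are rebuilt, while the earlier layers come from the cache.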
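Since only some instructions add layers, it can be instructive to list them. According to Docker's documentation, only RUN, COPY and ADD add filesystem layers; the other instructions only record metadata. The sketch below, with the hypothetical function name `layer_instructions`, assumes instructions are written in upper case at the start of a line, as in our Dockerfile.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Dockerfile instructions (RUN, COPY, ADD)
# that create filesystem layers. Assumes upper-case instructions that start
# a line; continuation lines are skipped because they begin with other text.
layer_instructions() {
    local dockerfile="${1:-Dockerfile}"
    grep -E '^[[:space:]]*(RUN|COPY|ADD)[[:space:]]' "$dockerfile"
}
```

Piping the result into `wc -l` (for example `layer_instructions Dockerfile | wc -l`) gives the number of layer-producing steps on top of the base image.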

Build Docker image:

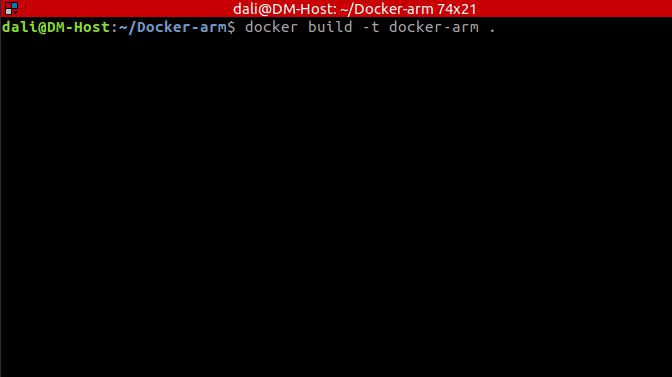

With the instructions ready, all that remains is to run the `docker build` command. Go to the directory that contains your Dockerfile and run the command below; the `-t` parameter sets the name of our image.

```shell
$ docker build -t docker-arm .
```
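If you rebuild often, a small wrapper keeps the tag and build context in one place. This is a sketch with a hypothetical `build_image` function; it runs the same `docker build -t NAME PATH` command as above, and its defaults assume you call it from the directory containing the Dockerfile.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around "docker build".
# $1: image tag (default: docker-arm), $2: build context (default: current dir)
build_image() {
    local tag="${1:-docker-arm}"
    local context="${2:-.}"
    # The build context must contain the Dockerfile
    if [[ ! -f "$context/Dockerfile" ]]; then
        echo "no Dockerfile in $context" >&2
        return 1
    fi
    docker build -t "$tag" "$context"
}
```

Calling `build_image` with no arguments is equivalent to the command above.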

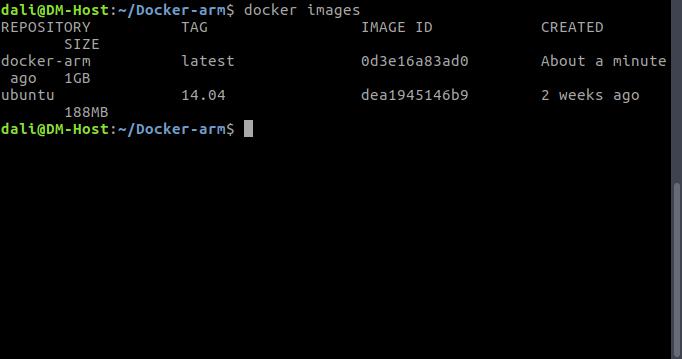

The image will now be listed by Docker:

```shell
$ docker images
```
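In a script, you can test for the image directly instead of reading the `docker images` table. `docker image inspect` exits with a non-zero status when the image does not exist; the function name `image_exists` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the named image exists locally.
# "docker image inspect" fails when the image is not found.
image_exists() {
    docker image inspect "${1:-docker-arm}" >/dev/null 2>&1
}
```

A typical use is `image_exists docker-arm || docker build -t docker-arm .`, which only builds when the image is missing.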

Run Docker container:

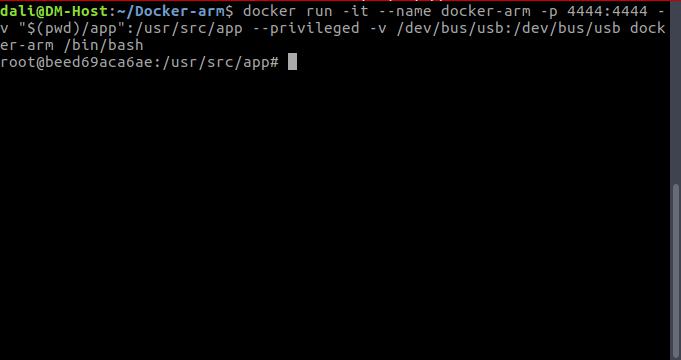

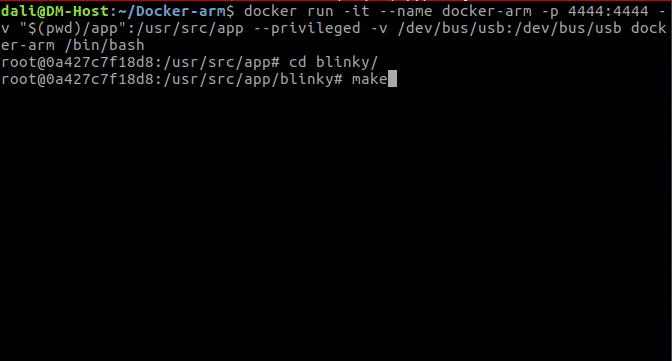

Running the image with `-it` gives you an interactive shell inside the container. The first `-v` (volume) option mounts the host folder `app` at `/usr/src/app`, so that directory lives outside the container's union file system and is directly accessible from the host. `--privileged` together with `-v /dev/bus/usb:/dev/bus/usb` gives the container root access to our USB devices, such as the board's debugger. The `-p` flag maps a port on the host to a port inside the container (4444 is OpenOCD's telnet port). Run the image you previously built:

```shell
$ docker run -it --name docker-arm -p 4444:4444 -v "$(pwd)/app":/usr/src/app --privileged -v /dev/bus/usb:/dev/bus/usb docker-arm /bin/bash
```
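The same `docker run` invocation can be kept in a script so that each flag is documented next to the others. This sketch (hypothetical `start_container` function) issues the same command as above and assumes it is run from the directory that contains the `app` folder.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper that starts the development container with the same
# flags as the command above. $1: host project folder (default: ./app)
start_container() {
    local host_dir="${1:-$(pwd)/app}"
    local args=(
        -it                            # interactive terminal (bash shell)
        --name docker-arm              # fixed name, reused by later commands
        -p 4444:4444                   # host port 4444 -> container port 4444 (OpenOCD telnet)
        -v "$host_dir":/usr/src/app    # share the project folder with the container
        --privileged                   # allow access to host devices
        -v /dev/bus/usb:/dev/bus/usb   # expose the host USB bus (debug adapter)
    )
    docker run "${args[@]}" docker-arm /bin/bash
}
```

Keeping the arguments in an array preserves quoting, so a project path containing spaces is still passed as a single `-v` value.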

You now have a bash shell inside the container.

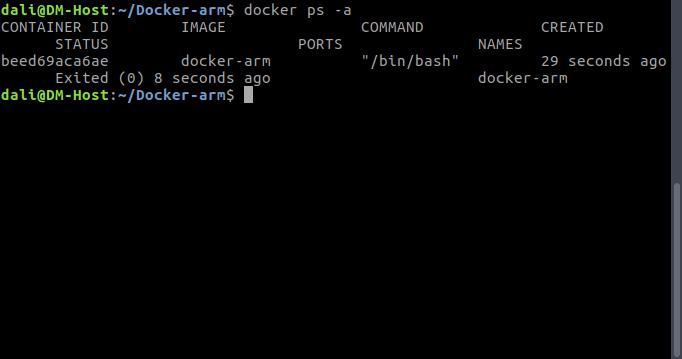

To list your containers, including stopped ones, run this from a terminal on the host:

```shell
$ docker ps -a
```
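Because the container was given the fixed name `docker-arm`, running the same `docker run` command again fails while that container still exists. A stopped container can instead be restarted with `docker start -ai`. The sketch below (hypothetical `resume_container` function) looks the name up in the `docker ps -a` listing and restarts it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reattach to an existing container by name.
resume_container() {
    local name="${1:-docker-arm}"
    # List the names of all containers, running or stopped, and match exactly
    if docker ps -a --format '{{.Names}}' | grep -qxF "$name"; then
        # -a attaches to the container's output, -i keeps stdin open for the shell
        docker start -ai "$name"
    else
        echo "no container named $name; create it with docker run first" >&2
        return 1
    fi
}
```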

Deploy our project and run it inside the Docker container:

To test our environment, I created an LED blink project for the STM32F407VG. You can clone the project from the GitHub repo and copy the contents of its app folder into the workspace where your Dockerfile is located. You will end up with a directory structure similar to the one below:

```text
$ tree -L 3
.
├── app
│   ├── blinky
│   │   ├── main.c
│   │   ├── Makefile
│   │   ├── stm32f4xx_conf.h
│   │   ├── stm32_flash.ld
│   │   └── system_stm32f4xx.c
│   └── STM32F4-Discovery_FW_V1.1.0
│       ├── _htmresc
│       ├── Libraries
│       ├── MCD-ST Liberty SW License Agreement 20Jul2011 v0.1.pdf
│       ├── Project
│       ├── Release_Notes.html
│       └── Utilities
├── Dockerfile
└── README.md
```
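Before starting the container, it can help to confirm that the workspace has this layout: the run command mounts `$(pwd)/app`, so started from any other directory, the container would see a different folder. A minimal sketch, assuming the file names shown in the tree; `check_workspace` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify the workspace layout shown above.
# $1: workspace root (default: current directory)
check_workspace() {
    local root="${1:-.}"
    local path status=0
    for path in Dockerfile app/blinky/Makefile app/blinky/main.c app/blinky/stm32_flash.ld; do
        if [[ ! -e "$root/$path" ]]; then
            echo "missing: $path"
            status=1
        fi
    done
    return "$status"
}
```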

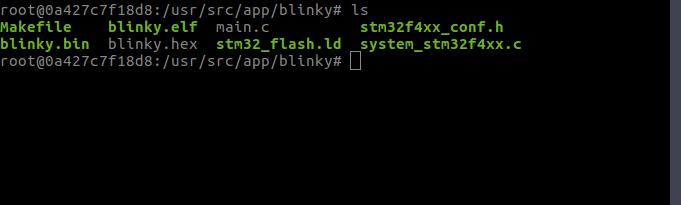

Next, compile the project inside the container with the ARM GCC toolchain:

```shell
$ cd blinky
$ make
```
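If you prefer to stay in a host terminal, the same build can be started in the running container with `docker exec`. This is a sketch assuming the container is named `docker-arm` and the project sits in `/usr/src/app/blinky`, as above; `build_in_container` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run "make" for a project inside the running container.
# $1: project folder under /usr/src/app (default: blinky)
# $2: container name (default: docker-arm)
build_in_container() {
    local project="${1:-blinky}"
    local container="${2:-docker-arm}"
    # make -C changes into the directory before reading its Makefile
    docker exec "$container" make -C "/usr/src/app/$project"
}
```

Because `/usr/src/app` is the shared volume, you can edit `main.c` on the host and rebuild this way without opening a shell in the container.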

The build produces new files: `main.elf`, `main.bin` and `main.hex`. You can list them with:

```shell
$ ls
```
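In a script, a quick check that the build produced the expected outputs can replace reading the `ls` listing. This sketch assumes the Makefile names them `main.elf`, `main.bin` and `main.hex`, as listed above; `check_artifacts` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm the build outputs exist and are non-empty.
# $1: build directory (default: current directory), $2: base name (default: main)
check_artifacts() {
    local dir="${1:-.}"
    local base="${2:-main}"
    local ext status=0
    for ext in elf bin hex; do
        # -s: file exists and has a size greater than zero
        if [[ ! -s "$dir/$base.$ext" ]]; then
            echo "missing or empty: $base.$ext"
            status=1
        fi
    done
    return "$status"
}
```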

After compiling the project, flash it onto the board with OpenOCD:

```shell
$ openocd -s "/usr/local/share/openocd/scripts" -f "interface/stlink-v2.cfg" -f "target/stm32f4x.cfg" -c "program main.elf verify reset exit"
```
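The flash command can be wrapped the same way, and the same OpenOCD configuration also serves as the GDB server listed in the features. This is a sketch assuming an ST-LINK/V2 on an STM32F4 board, OpenOCD's default GDB port 3333, and `arm-none-eabi-gdb` installed with the toolchain; the names `OPENOCD_ARGS`, `flash_elf` and `debug_elf` are hypothetical. `program ... verify reset exit` is the OpenOCD command used above, and `target extended-remote` is standard GDB.

```shell
#!/usr/bin/env bash
# Hypothetical helpers around OpenOCD for an STM32F4 with an ST-LINK/V2.
OPENOCD_ARGS=(
    -s /usr/local/share/openocd/scripts
    -f interface/stlink-v2.cfg
    -f target/stm32f4x.cfg
)

# Flash an ELF file, verify it, reset the chip and exit OpenOCD.
flash_elf() {
    local elf="${1:-main.elf}"
    openocd "${OPENOCD_ARGS[@]}" -c "program $elf verify reset exit"
}

# Start OpenOCD as a GDB server in the background (default GDB port: 3333),
# then attach arm-none-eabi-gdb, load the program and run until main.
debug_elf() {
    local elf="${1:-main.elf}"
    openocd "${OPENOCD_ARGS[@]}" &
    local server_pid=$!
    sleep 1  # give OpenOCD a moment to open its ports
    arm-none-eabi-gdb "$elf" \
        -ex 'target extended-remote :3333' \
        -ex 'monitor reset halt' \
        -ex 'load' \
        -ex 'break main' \
        -ex 'continue'
    # Stop the GDB server once the debugging session ends
    kill "$server_pid"
}
```

Inside the container, `flash_elf main.elf` is equivalent to the command above, and `debug_elf main.elf` opens a GDB session stopped at `main`.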

Conclusion

As you can see, it's easy to set up an embedded systems project with Docker: a single Dockerfile is all we need to configure our environment.

With this environment in place, we are ready to start developing applications.

See you next time.

FYI: this article originally appeared on my website.
