[Deployment] Grafana + Prometheus + K8s


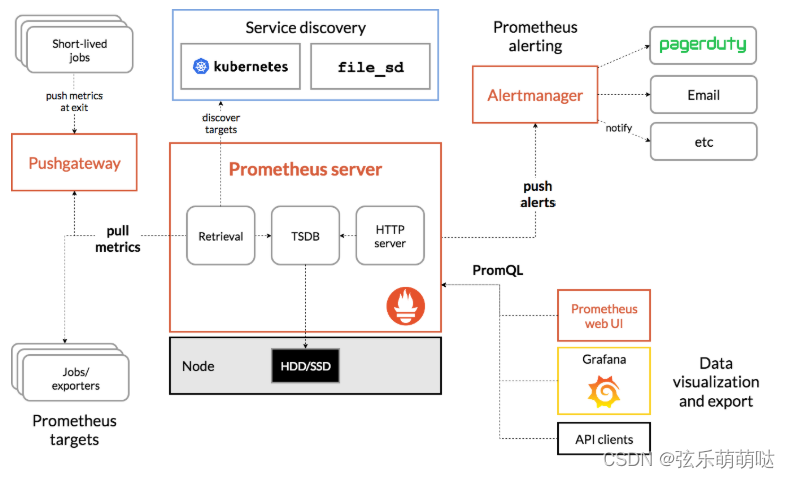

Deployment architecture diagram

1. Deploying Grafana

1.1 Create the namespace

apiVersion: v1
kind: Namespace
metadata:
  name: monitoring

1.2 Create Grafana

vim grafana.yaml

# Note: extensions/v1beta1 Ingress was removed in Kubernetes 1.22; newer clusters need networking.k8s.io/v1.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: grafana
  namespace: monitoring
spec:
  rules:
  - host: grafana.test.cn
    http:
      paths:
      - path: /
        backend:
          serviceName: grafana
          servicePort: 3000
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: monitoring
  labels:
    app: grafana
  annotations:
    prometheus.io/scrape: 'true'
    prometheus.io/path: '/metrics'
spec:
  selector:
    app: grafana
  ports:
  - name: grafana
    port: 3000
    protocol: TCP
    targetPort: 3000
---
kind: PersistentVolume
apiVersion: v1
metadata:
  # PersistentVolumes are cluster-scoped, so no namespace is set here
  name: pv-grafana
  labels:
    pv: pv-grafana
spec:
  capacity:
    storage: 5Gi
  nfs:
    server: 172.12.0.1
    path: /data/grafana
  accessModes:
  - ReadWriteMany
  volumeMode: Filesystem
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc-grafana
  namespace: monitoring
spec:
  accessModes:
  - ReadWriteMany
  selector:
    matchLabels:
      pv: pv-grafana
  resources:
    requests:
      storage: 5Gi
  volumeName: pv-grafana
  storageClassName: ''
  volumeMode: Filesystem
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: monitoring
  labels:
    app: grafana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      nodeSelector:
        kubernetes.io/hostname: k8s-worker5
      hostAliases:
      - ip: 172.13.2.2
        hostnames:
        - "kubelb"
      containers:
      - name: grafana
        image: harbor.test.cn/devops/grafana:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3000
          name: grafana
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
          requests:
            cpu: 100m
            memory: 100Mi
        env:
        - name: GF_AUTH_BASIC_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        # - name: GF_AUTH_ANONYMOUS_ORG_ROLE
        #   value: Admin
        - name: GF_DASHBOARDS_JSON_ENABLED
          value: "true"
        - name: GF_INSTALL_PLUGINS
          value: grafana-kubernetes-app,alexanderzobnin-zabbix-app
        - name: GF_SECURITY_ADMIN_USER
          valueFrom:
            secretKeyRef:
              name: grafana
              key: admin-username
        - name: GF_SECURITY_ADMIN_PASSWORD
          valueFrom:
            secretKeyRef:
              name: grafana
              key: admin-password
        readinessProbe:
          httpGet:
            path: /login
            port: 3000
          initialDelaySeconds: 10
          timeoutSeconds: 5
        volumeMounts:
        - name: grafana-storage
          mountPath: /var/lib/grafana
      volumes:
      - name: grafana-storage
        persistentVolumeClaim:
          claimName: pvc-grafana
      imagePullSecrets:
      - name: harborsecret
---
apiVersion: v1
kind: Secret
metadata:
  name: grafana
  namespace: monitoring
data:
  admin-password: YWRtaW4=
  admin-username: YWRtaW4=
type: Opaque

kubectl apply -f grafana.yaml
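The `data` fields of the `grafana` Secret hold base64-encoded values, not encrypted ones. The following is a minimal sketch (the helper names are illustrative and not part of the original manifests) for checking what the stored strings decode to and for producing the encoded form of a different password before editing the Secret:

```python
import base64


def encode_secret_value(plain: str) -> str:
    """Return the base64 form expected in a Secret's `data` field."""
    return base64.b64encode(plain.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Decode a value copied from a Secret's `data` field."""
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    # The value used for both admin-username and admin-password above
    print(decode_secret_value("YWRtaW4="))
    # Encoding is the reverse step, e.g. when choosing a stronger password
    print(encode_secret_value("admin"))
```

Because the manifest above decodes to the default `admin` / `admin` credentials, it is probably worth replacing both values before exposing Grafana through the Ingress.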

2. Deploying Prometheus

vim config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: monitoring
data:
  prometheus.yml: |
    global:
      scrape_interval: 10s
      scrape_timeout: 10s
      evaluation_interval: 10s
    alerting:
      alertmanagers:
      - static_configs:
        - targets:
          - prometheus-alert-center:8080
          # - alertmanager:9093
          # - 172.18.0.2:31080
    rule_files:
    - "/etc/prometheus-rules/*.rules"
    scrape_configs:
    - job_name: 'node-exporter'  # node-level performance metrics
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape, __meta_kubernetes_endpoint_port_name]
        regex: true;node-exporter
        action: keep
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: (.+)(?::\d+);(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
    - job_name: 'kube-apiservers'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        regex: default;kubernetes;https
        action: keep
    - job_name: 'kube-controller-manager'
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape, __meta_kubernetes_namespace, __meta_kubernetes_service_name]
        regex: true;kube-system;kube-controller-manager-prometheus-discovery
        action: keep
    - job_name: 'kube-scheduler'
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape, __meta_kubernetes_namespace, __meta_kubernetes_service_name]
        regex: true;kube-system;kube-scheduler-prometheus-discovery
        action: keep
    - job_name: 'kubelet'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: 172.16.6.2:8443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics
    - job_name: 'kubernetes-cadvisor'  # container- and Pod-level performance metrics
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: 172.16.6.2:8443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
      metric_relabel_configs:
      - source_labels: [id]
        action: replace
        regex: '^/machine\.slice/machine-rkt\\x2d([^\\]+)\\.+/([^/]+)\.service$'
        target_label: rkt_container_name
        replacement: '${2}-${1}'
      - source_labels: [id]
        action: replace
        regex: '^/system\.slice/(.+)\.service$'
        target_label: systemd_service_name
        replacement: '${1}'
    - job_name: 'kube-state-metrics'  # state of resource objects (Deployments, Pods, etc.)
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape, __meta_kubernetes_endpoint_port_name]
        regex: true;kube-state-metrics
        action: keep
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: (.+)(?::\d+);(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
    - job_name: 'kubernetes-service-http-probe'  # probe Service status over HTTP
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape, __meta_kubernetes_service_annotation_prometheus_io_http_probe]
        regex: true;true
        action: keep
      - source_labels: [__meta_kubernetes_service_name, __meta_kubernetes_namespace, __meta_kubernetes_service_annotation_prometheus_io_http_probe_port, __meta_kubernetes_service_annotation_prometheus_io_http_probe_path]
        action: replace
        target_label: __param_target
        regex: (.+);(.+);(.+);(.+)
        replacement: $1.$2:$3$4
      - target_label: __address__
        replacement: blackbox-exporter.monitoring:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_annotation_prometheus_io_app_info_(.+)
    - job_name: 'kubernetes-service-tcp-probe'  # probe Service status over TCP
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module: [tcp_connect]
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape, __meta_kubernetes_service_annotation_prometheus_io_tcp_probe]
        regex: true;true
        action: keep
      - source_labels: [__meta_kubernetes_service_name, __meta_kubernetes_namespace, __meta_kubernetes_service_annotation_prometheus_io_tcp_probe_port]
        action: replace
        target_label: __param_target
        regex: (.+);(.+);(.+)
        replacement: $1.$2:$3
      - target_label: __address__
        replacement: blackbox-exporter.monitoring:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_annotation_prometheus_io_app_info_(.+)
    - job_name: 'kubernetes-ingresses'  # probe Ingress status over HTTP
      kubernetes_sd_configs:
      - role: ingress
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_ingress_scheme, __address__, __meta_kubernetes_ingress_path]
        regex: (.+);(.+);(.+)
        replacement: ${1}://${2}${3}
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.monitoring:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_ingress_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_ingress_name]
        target_label: kubernetes_name
    - job_name: 'mysql-performance'
      scrape_interval: 1m
      static_configs:
      - targets: ['mysqld-exporter.monitoring:9104']
      params:
        collect[]:
        - global_status
        - perf_schema.tableiowaits
        - perf_schema.indexiowaits
        - perf_schema.tablelocks
    - job_name: 'redis'
      static_configs:
      - targets: ['redis-exporter.monitoring:9121']
    - job_name: 'RabbitMQ'
      static_configs:
      - targets: ['rabbitmq-exporter.monitoring:9419']
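The `replace` relabel rules in config.yaml are easier to follow with concrete values. The sketch below imitates that action in plain Python. It is not Prometheus code. The `relabel_replace` helper, the IP addresses, and the probe annotation values are hypothetical and chosen only for illustration. It relies on two documented behaviours: source label values are joined with `;`, and the regex is anchored at both ends.

```python
import re


def relabel_replace(source_values, regex, replacement, separator=";"):
    """Imitate a Prometheus `replace` relabel step.

    Returns the new target value, or None when the regex does not match,
    in which case Prometheus leaves the target label unchanged.
    """
    joined = separator.join(source_values)
    match = re.fullmatch(regex, joined)  # Prometheus anchors the whole regex
    if match is None:
        return None
    # Convert $1 / ${1} references into Python's \g<1> template syntax
    template = re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)
    return match.expand(template)


if __name__ == "__main__":
    # __address__ rewrite when a prometheus.io/port annotation is present
    print(relabel_replace(["10.244.1.5:8080", "9100"],
                          r"(.+)(?::\d+);(\d+)", "$1:$2"))
    # No port annotation: the joined value ends with ';' and nothing matches
    print(relabel_replace(["172.16.6.11:9100", ""],
                          r"(.+)(?::\d+);(\d+)", "$1:$2"))
    # kubelet job: node name becomes an API-server proxy path
    print(relabel_replace(["k8s-worker5"], r"(.+)",
                          "/api/v1/nodes/${1}/proxy/metrics"))
    # HTTP probe job: service, namespace, port and path form the probe target
    print(relabel_replace(["grafana", "monitoring", "3000", "/login"],
                          r"(.+);(.+);(.+);(.+)", "$1.$2:$3$4"))
```

The second call shows why the node-exporter Service, which has no `prometheus.io/port` annotation, keeps the discovered endpoint address as it is.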

vim rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: monitoring
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
- apiGroups: ["networking.k8s.io"]
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources:
  - configmaps
  verbs: ["get"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: monitoring

vim rules.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-rules
  namespace: monitoring
data:
  node.rules: |
    groups:
    - name: node
      rules:
      - alert: NodeDown
        expr: up == 0
        for: 3m
        labels:
          severity: critical
        annotations:
          summary: "{{ $labels.instance }}: down"
          description: "{{ $labels.instance }} has been down for more than 3m"
          value: "{{ $value }}"
      - alert: NodeCPUHigh
        expr: (1 - avg by (instance) (irate(node_cpu_seconds_total{mode="idle"}[5m]))) * 100 > 75
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "{{$labels.instance}}: High CPU usage"
          description: "{{$labels.instance}}: CPU usage is above 75%"
          value: "{{ $value }}"
      - alert: NodeCPUIowaitHigh
        expr: avg by (instance) (irate(node_cpu_seconds_total{mode="iowait"}[5m])) * 100 > 80
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "{{$labels.instance}}: High CPU iowait usage"
          description: "{{$labels.instance}}: CPU iowait usage is above 80%"
          value: "{{ $value }}"
      - alert: NodeMemoryUsageHigh
        expr: (1 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes) * 100 > 85
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "{{$labels.instance}}: High memory usage"
          description: "{{$labels.instance}}: Memory usage is above 85%"
          value: "{{ $value }}"
      - alert: NodeDiskRootLow
        expr: (1 - node_filesystem_avail_bytes{fstype=~"ext.*|xfs",mountpoint ="/"} / node_filesystem_size_bytes{fstype=~"ext.*|xfs",mountpoint ="/"}) * 100 > 80
        for: 10m
        labels:
          severity: warning
        annotations:
          summary: "{{$labels.instance}}: Low disk(the / partition) space"
          description: "{{$labels.instance}}: Disk(the / partition) usage is above 80%"
          value: "{{ $value }}"
      - alert: NodeDiskBootLow
        expr: (1 - node_filesystem_avail_bytes{fstype=~"ext.*|xfs",mountpoint ="/boot"} / node_filesystem_size_bytes{fstype=~"ext.*|xfs",mountpoint ="/boot"}) * 100 > 80
        for: 10m
        labels:
          severity: warning
        annotations:
          summary: "{{$labels.instance}}: Low disk(the /boot partition) space"
          description: "{{$labels.instance}}: Disk(the /boot partition) usage is above 80%"
          value: "{{ $value }}"
      - alert: NodeLoad5High
        expr: (node_load5) > (count by (instance) (node_cpu_seconds_total{mode='system'}) * 2)
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "{{$labels.instance}}: Load(5m) High"
          description: "{{$labels.instance}}: Load(5m) is 2 times the number of CPU cores"
          value: "{{ $value }}"
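To make the thresholds in rules.yaml concrete, the arithmetic behind three of the expressions can be reproduced outside Prometheus. This is only an illustrative sketch with made-up sample numbers. The function names are hypothetical, and the real rules additionally require the condition to hold for the whole `for:` duration before the alert fires.

```python
def cpu_usage_percent(idle_rates):
    """(1 - average idle rate across cores) * 100, as in NodeCPUHigh."""
    return (1 - sum(idle_rates) / len(idle_rates)) * 100


def memory_usage_percent(mem_available_bytes, mem_total_bytes):
    """(1 - MemAvailable / MemTotal) * 100, as in NodeMemoryUsageHigh."""
    return (1 - mem_available_bytes / mem_total_bytes) * 100


def load5_is_high(load5, cpu_cores):
    """NodeLoad5High: 5-minute load above twice the number of CPU cores."""
    return load5 > cpu_cores * 2


if __name__ == "__main__":
    GIB = 1024 ** 3
    # Four cores, each idle for a fraction of every second (hypothetical values)
    cpu = cpu_usage_percent([0.125, 0.25, 0.125, 0.25])
    print(cpu, cpu > 75)
    # 2 GiB available out of 16 GiB
    mem = memory_usage_percent(2 * GIB, 16 * GIB)
    print(mem, mem > 85)
    # Load of 9.0 on a 4-core node
    print(load5_is_high(9.0, 4))
```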

vim prometheus.yaml

# Note: extensions/v1beta1 Ingress was removed in Kubernetes 1.22; newer clusters need networking.k8s.io/v1.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: prometheus
  namespace: monitoring
spec:
  rules:
  - host: prometheus.test.cn
    http:
      paths:
      - path: /
        backend:
          serviceName: prometheus
          servicePort: 9090
---
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: monitoring
  labels:
    app: prometheus
  annotations:
    prometheus.io/scrape: 'true'
spec:
  selector:
    app: prometheus
  ports:
  - name: prometheus
    port: 9090
    protocol: TCP
    targetPort: 9090
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus
  namespace: monitoring
  labels:
    app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      name: prometheus
      labels:
        app: prometheus
    spec:
      serviceAccountName: prometheus
      containers:
      - name: prometheus
        image: harbor.test.cn/devops/prometheus:latest
        imagePullPolicy: IfNotPresent
        args:
        - '--storage.tsdb.path=/prometheus'
        - '--storage.tsdb.retention.time=30d'
        - '--config.file=/etc/prometheus/prometheus.yml'
        ports:
        - containerPort: 9090
        volumeMounts:
        - name: config
          mountPath: /etc/prometheus
        - name: rules
          mountPath: /etc/prometheus-rules
        - name: prometheus
          mountPath: /prometheus
      volumes:
      - name: config
        configMap:
          name: prometheus-config
      - name: rules
        configMap:
          name: prometheus-rules
      - name: prometheus
        emptyDir: {}
      imagePullSecrets:
      - name: harborsecret
      nodeSelector:
        kubernetes.io/hostname: cqprodk8slnx08
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
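Once the Deployment is running, one way to check that targets are being scraped is Prometheus's HTTP API (`/api/v1/query`). The sketch below only builds the request URL and parses a response body, so it runs without network access. The `https://prometheus.test.cn` base URL assumes the Ingress host above is served over HTTPS, and the JSON body is a hand-written sample rather than real output.

```python
import json
from urllib.parse import urlencode


def build_instant_query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def instances_in_result(response_body: str):
    """Return the `instance` label of every series in a vector result."""
    payload = json.loads(response_body)
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload.get('error', 'unknown error')}")
    return [series["metric"].get("instance", "") for series in payload["data"]["result"]]


# Hand-written sample shaped like an instant-query response (not real output)
SAMPLE_RESPONSE = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"instance": "172.16.6.11:9100", "job": "node-exporter"},
             "value": [1700000000, "0"]},
        ],
    },
})

if __name__ == "__main__":
    # Same expression as the NodeDown rule
    print(build_instant_query_url("https://prometheus.test.cn", "up == 0"))
    print(instances_in_result(SAMPLE_RESPONSE))
```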

3. Deploying alerting

vim PrometheusAlert.yaml

# apiVersion: v1
# kind: Namespace
# metadata:
#   name: monitoring
---
apiVersion: v1
data:
  app.conf: |
    #---------------------Global settings-----------------------
    appname = PrometheusAlert
    # Login user name
    login_user=prometheusalert
    # Login password
    login_password=prometheusalert
    # Listen address
    httpaddr = "0.0.0.0"
    # Listen port
    httpport = 8080
    runmode = dev
    # Enable JSON request bodies
    copyrequestbody = true
    # Alert message title
    title=ServerAlert
    # Link to the alerting platform
    GraylogAlerturl=https://alertmanager.test.cn/
    # Automatic alert suppression: for alerts from the same source, only the first alert at the highest severity is sent and the rest are muted. This reduces duplicate messages and prevents alert storms. 0 = off, 1 = on.
    silent=0
    # Log output: file or console
    logtype=file
    # Log file path
    logpath=logs/prometheusalertcenter.log
    # Convert Prometheus and Graylog alert times to CST (do not enable if they are already in CST)
    prometheus_cst_time=0
    # Database driver: sqlite3, mysql, or postgres. For mysql or postgres, uncomment db_host, db_port, db_user, db_password, and db_name.
    db_driver=mysql
    db_host=172.12.0.4
    db_port=3306
    db_user=xxx
    db_password=xxxxx
    db_name=prometheusalert
    #---------------------Feishu-----------------------
    # Enable the Feishu alert channel (several channels can be enabled at once). 0 = off, 1 = on.
    open-feishu=1
    # Default Feishu bot URL
    fsurl=https://xxxxx
    #---------------------Email settings-----------------------
    # Enable email
    open-email=1
    # Outgoing mail server address
    Email_host=smtp.exmail.qq.com
    # Outgoing mail server port
    Email_port=465
    # Email account
    Email_user=test@test.com
    # Email password
    Email_password=xxxxxx
    # Email subject
    Email_title=DevOps监控告警
    # Default recipient address
    Default_emails=test@test.com
  user.csv: |
    2024年2月3日,xxxxxx,xxx
kind: ConfigMap
metadata:
  name: prometheus-alert-center-conf
  namespace: monitoring
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: prometheus-alert-center
    alertname: prometheus-alert-center
  name: prometheus-alert-center
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus-alert-center
      alertname: prometheus-alert-center
  template:
    metadata:
      labels:
        app: prometheus-alert-center
        alertname: prometheus-alert-center
    spec:
      nodeSelector:
        node: k8s
      containers:
      - image: feiyu563/prometheus-alert
        name: prometheus-alert-center
        env:
        - name: TZ
          value: "Asia/Shanghai"
        ports:
        - containerPort: 8080
          name: http
        resources:
          limits:
            cpu: 200m
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: prometheus-alert-center-conf-map
          mountPath: /app/conf/app.conf
          subPath: app.conf
        - name: prometheus-alert-center-conf-map
          mountPath: /app/user.csv
          subPath: user.csv
      volumes:
      - name: prometheus-alert-center-conf-map
        configMap:
          name: prometheus-alert-center-conf
          items:
          - key: app.conf
            path: app.conf
          - key: user.csv
            path: user.csv
---
apiVersion: v1
kind: Service
metadata:
  labels:
    alertname: prometheus-alert-center
  name: prometheus-alert-center
  namespace: monitoring
  annotations:
    prometheus.io/scrape: 'true'
    prometheus.io/port: '8080'
spec:
  ports:
  - name: http
    port: 8080
    targetPort: http
    nodePort: 31080
  selector:
    app: prometheus-alert-center
  type: NodePort

4. K8s host monitoring

To collect host-level metrics such as CPU, memory, and disk usage, we can use Node Exporter.

vim node-exporter.yaml

apiVersion: v1
kind: Service
metadata:
  name: node-exporter
  namespace: monitoring
  labels:
    app: node-exporter
  annotations:
    prometheus.io/scrape: 'true'
spec:
  selector:
    app: node-exporter
  ports:
  - name: node-exporter
    port: 9100
    protocol: TCP
    targetPort: 9100
  clusterIP: None
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: monitoring
  labels:
    app: node-exporter
spec:
  selector:
    matchLabels:
      app: node-exporter
  template:
    metadata:
      name: node-exporter
      labels:
        app: node-exporter
    spec:
      containers:
      - name: node-exporter
        image: harbor.test.cn/devops/node-exporter:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9100
          hostPort: 9100
      imagePullSecrets:
      - name: harborsecret
      hostNetwork: true
      hostPID: true
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
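Node Exporter serves its metrics on port 9100 in the Prometheus text exposition format, which is what the `node-exporter` scrape job reads. As a rough illustration of that format, the simplified parser below handles only unlabelled samples. The `SAMPLE` text is hand-written for this example and is not real exporter output.

```python
SAMPLE = """\
# HELP node_load5 5m load average.
# TYPE node_load5 gauge
node_load5 0.42
node_memory_MemTotal_bytes 1.6777216e+10
node_cpu_seconds_total{cpu="0",mode="idle"} 12345.67
"""


def parse_simple_metrics(text: str) -> dict:
    """Parse unlabelled samples from Prometheus text exposition format.

    Comment lines (# HELP / # TYPE) and labelled series are skipped to keep
    the sketch short; a real client should use a proper parser.
    """
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, _, rest = line.partition(" ")
        if "{" in name or not rest:
            continue
        # The value is the first field; an optional timestamp may follow it
        samples[name] = float(rest.split()[0])
    return samples


if __name__ == "__main__":
    for metric, value in parse_simple_metrics(SAMPLE).items():
        print(metric, value)
```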

Visit https://grafana.test.cn/; an example screenshot follows.

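5. K8s cluster monitoring

Edit prometheus.yaml (as shown above), then enable the Kubernetes plugin under Plugins in Grafana and configure a Data Source. After that it is ready to use.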
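The data source can be configured in the Grafana UI. As an alternative sketch, the same settings can be sent to Grafana's HTTP API (`POST /api/datasources`). The code below only builds the JSON body. The data source name is arbitrary, and the URL `http://prometheus.monitoring:9090` is an assumption derived from the `prometheus` Service in the `monitoring` namespace defined earlier.

```python
import json


def prometheus_datasource_payload(name: str = "Prometheus",
                                  url: str = "http://prometheus.monitoring:9090") -> dict:
    """Build the JSON body for creating a Prometheus data source in Grafana."""
    return {
        "name": name,
        "type": "prometheus",
        "url": url,          # in-cluster address of the Prometheus Service (assumed)
        "access": "proxy",   # Grafana's backend proxies the queries
        "isDefault": True,
    }


if __name__ == "__main__":
    # This body would be POSTed to <grafana>/api/datasources with admin credentials
    print(json.dumps(prometheus_datasource_payload(), indent=2))
```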

6. Platform service monitoring

6.1 Service list

6.2 Example screenshots:
