k8s: Automatically Creating PVs with a StorageClass


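1. Automatically creating PVs with a StorageClass

This walkthrough uses NFS as the backing storage: an NFS server exports a directory, and a provisioner running in the cluster creates a PV inside that export for every PVC that requests the StorageClass.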

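1.1 Install NFS

Install the NFS server by running the script below with the directory to export and the subnet allowed to mount it: sh nfs_install.sh /mnt/data03 10.60.41.0/24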

nfs_install.sh

#!/bin/bash
### How to install it? ###
### Installs nfs-server. Takes two arguments: 1. the mount point (directory to export)  2. the subnet allowed to access nfs-server ###
### How to use it? ###
### On each client node run `yum -y install nfs-utils rpcbind`, then mount the nfs-server directory locally ###
### e.g. echo "192.168.0.20:/mnt/data01 /mnt/data01 nfs defaults 0 0" >> /etc/fstab && mount -a ###

mount_point=$1
subnet=$2

function nfs_server() {
    # Disable the firewall and SELinux so clients can reach the NFS ports
    systemctl stop firewalld
    systemctl disable firewalld
    setenforce 0
    # Match only the SELINUX= line; a looser pattern would also rewrite SELINUXTYPE=
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

    yum -y install nfs-utils rpcbind
    mkdir -p "$mount_point"
    # Export the directory read-write to the given subnet
    echo "$mount_point ${subnet}(rw,sync,no_root_squash)" >> /etc/exports
    systemctl start rpcbind && systemctl enable rpcbind
    systemctl restart nfs-server && systemctl enable nfs-server
    # nfsnobody is the anonymous NFS user on CentOS/RHEL 7
    chown -R nfsnobody:nfsnobody "$mount_point"
}

function usage() {
    echo "Require 2 arguments: [mount_point] [subnet]
eg: sh $0 /mnt/data01 192.168.10.0/24"
}

declare -i arg_nums
arg_nums=$#
if [ $arg_nums -eq 2 ]; then
    nfs_server
else
    usage
    exit 1
fi
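The script's header comment describes the client side only in prose. As a minimal sketch of those steps, the helpers below build the fstab entry and append it only if it is not already present. The function names `fstab_entry` and `add_fstab_entry` are hypothetical, introduced here for illustration, and the server address 10.60.41.248 is assumed from the provisioner manifest in section 1.2.

```shell
#!/bin/bash
# Hypothetical helpers (not part of nfs_install.sh) for the client-side mount.

# Print an fstab entry of the form: <server>:<path> <path> nfs defaults 0 0
fstab_entry() {
    local server="$1" export_path="$2"
    printf '%s:%s %s nfs defaults 0 0\n' "$server" "$export_path" "$export_path"
}

# Append the entry to an fstab file unless the exact line already exists.
# The second argument defaults to /etc/fstab.
add_fstab_entry() {
    local entry="$1" fstab="${2:-/etc/fstab}"
    grep -qxF -- "$entry" "$fstab" 2>/dev/null || printf '%s\n' "$entry" >> "$fstab"
}

# Usage on a client node, as root (address assumed from section 1.2):
#   yum -y install nfs-utils rpcbind
#   mkdir -p /mnt/data03
#   add_fstab_entry "$(fstab_entry 10.60.41.248 /mnt/data03)"
#   mount -a
```

Checking for the exact line first keeps repeated runs from stacking duplicate entries in /etc/fstab, which a plain `echo ... >>` would do.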

1.2 Create the NFS StorageClass

Apply the manifest: kubectl apply -f storageclass_deploy.yaml
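The manifest places its ServiceAccount, Role and RoleBinding in the devops namespace, and `kubectl apply` rejects namespaced objects whose namespace does not exist. The article does not show that namespace being created, so the following is a small sketch, assuming it may still be missing; `ensure_namespace` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: create a namespace only when it is not there yet.
ensure_namespace() {
    local ns="$1"
    kubectl get namespace "$ns" >/dev/null 2>&1 || kubectl create namespace "$ns"
}

# Usage before applying the manifest:
#   ensure_namespace devops
#   kubectl apply -f storageclass_deploy.yaml
```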

storageclass_deploy.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-provisioner
  namespace: devops
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nfs-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services", "endpoints"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: run-nfs-provisioner
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nfs-provisioner-runner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    namespace: devops
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: leader-locking-nfs-provisioner
  namespace: devops
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: leader-locking-nfs-provisioner
  namespace: devops
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: leader-locking-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    namespace: devops
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-provisioner
  namespace: devops  # same namespace as the ServiceAccount the pod runs as
spec:
  selector:
    matchLabels:
      app: nfs-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-provisioner
    spec:
      serviceAccountName: nfs-provisioner
      containers:
        - name: nfs-provisioner
          image: docker.io/gmoney23/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: example.com/nfs  # must match the StorageClass provisioner field
            - name: NFS_SERVER
              value: 10.60.41.248
            - name: NFS_PATH
              value: /mnt/data03
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.60.41.248
            path: /mnt/data03
---
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: nfs
provisioner: example.com/nfs

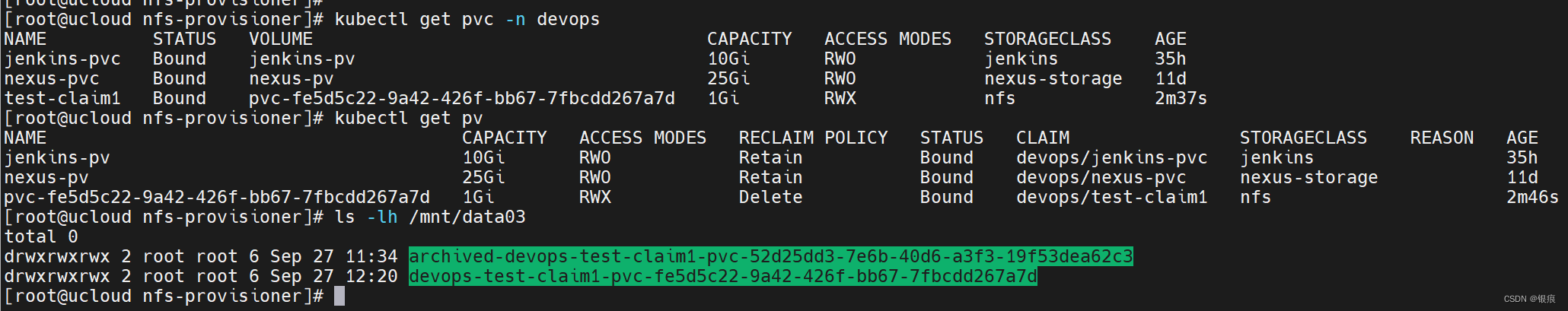
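Before testing with a PVC, it can help to confirm that the provisioner pod actually came up and that the StorageClass is registered. The sketch below assumes the Deployment runs in the devops namespace alongside its ServiceAccount (pass a different namespace as the first argument if yours differs); `verify_provisioner` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: wait for the provisioner rollout, then show the StorageClass.
verify_provisioner() {
    local ns="${1:-devops}"
    kubectl -n "$ns" rollout status deployment/nfs-provisioner --timeout=120s &&
        kubectl get storageclass nfs
}

# Usage:
#   verify_provisioner devops
```

If the rollout times out, `kubectl -n devops describe deployment nfs-provisioner` and the pod's events are the first places to look, for example for a missing ServiceAccount or an unreachable NFS export.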

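1.3 Test automatic PV creation

Apply the test manifest: kubectl apply -f test_auto_generate_pv.yaml

Once the PVC is created, the provisioner creates a matching PV automatically; its data lives in a new subdirectory under /mnt/data03/ on the NFS server.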

test_auto_generate_pv.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim1
  namespace: devops
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: devops  # a pod can only use a PVC from its own namespace
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx-pod
  template:
    metadata:
      labels:
        name: nginx-pod
        namespace: myapp  # plain label; pods are created in the Deployment's namespace
    spec:
      containers:
        - name: nginx
          image: docker.io/library/nginx:latest
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nfs-pvc
              mountPath: /usr/share/nginx/html
      volumes:
        - name: nfs-pvc
          persistentVolumeClaim:
            claimName: test-claim1
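To check the result from the command line, the sketch below reads the claim's phase and bound PV name, then prints the directory expected on the NFS server. It assumes the image follows the upstream nfs-client-provisioner convention of naming each directory `<namespace>-<pvcName>-<pvName>`; this third-party build may differ, so treat the printed path as a guess to verify with `ls /mnt/data03`. All three function names are hypothetical.

```shell
#!/bin/bash
# Hypothetical helpers for inspecting the test claim.

# Print the phase of a PVC (for example Pending or Bound).
pvc_phase() {
    kubectl -n "$2" get pvc "$1" -o jsonpath='{.status.phase}'
}

# Print the name of the PV bound to a PVC.
pvc_volume() {
    kubectl -n "$2" get pvc "$1" -o jsonpath='{.spec.volumeName}'
}

# Print the directory the PV is expected to use under the NFS export,
# assuming the <namespace>-<pvcName>-<pvName> naming convention.
expected_pv_dir() {
    local claim="$1" ns="$2" root="${3:-/mnt/data03}"
    printf '%s/%s-%s-%s\n' "$root" "$ns" "$claim" "$(pvc_volume "$claim" "$ns")"
}

# Usage:
#   pvc_phase test-claim1 devops
#   expected_pv_dir test-claim1 devops
```

A phase of Bound means the PV was provisioned. A claim stuck in Pending usually points to the provisioner pod not running or to a PROVISIONER_NAME that does not match the StorageClass.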
