k8s1: Passed three interview rounds at Meituan and four at Alibaba, and landed the offer


    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon
    stats socket /var/lib/haproxy/stats

defaults
    mode http
    log global
    option httplog
    option dontlognull
    option http-server-close
    option redispatch
    retries 3
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout http-keep-alive 10s
    timeout check 10s
    maxconn 3000

listen k8s-apiserver
    bind *:8443
    mode tcp
    timeout client 1h
    timeout connect 1h
    log global
    option tcplog
    balance roundrobin
    server master01 192.168.178.138:6443 check
    server master02 192.168.178.139:6443 check
    server master03 192.168.178.140:6443 check
    acl is_websocket hdr(Upgrade) -i WebSocket
    acl is_websocket hdr_beg(Host) -i ws
EOF

# Start haproxy
systemctl enable --now haproxy
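Before relying on the load balancer, it can help to confirm that every master really is listed as a backend. The snippet below is a minimal sketch, not part of the original setup: `list_backends` is a hypothetical helper name, and the path `/etc/haproxy/haproxy.cfg` is an assumption because the start of the `tee` command is not shown in this section.

```shell
# list_backends FILE
# Print "name address:port" for every `server` line in a haproxy config file.
list_backends() {
  awk '$1 == "server" { print $2, $3 }' "$1"
}

# Example call (the path is an assumption):
#   list_backends /etc/haproxy/haproxy.cfg
```

With the configuration above this should list master01, master02 and master03 on port 6443. HAProxy can also check the syntax of the file itself with `haproxy -c -f <file>`.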

# Install keepalived
yum -y install keepalived

# Configure keepalived

tee > /etc/keepalived/keepalived.conf <<EOF
global_defs {
    router_id 100
    vrrp_version 2
    vrrp_garp_master_delay 1
    vrrp_mcast_group4 224.0.0.18 # VRRP multicast group, must be identical on every keepalived node
}

vrrp_script chk_haproxy {
    script "/usr/bin/nc -nvz -w 2 127.0.0.1 8443"
    timeout 1
    interval 1 # check every 1 second
    fall 2 # require 2 failures for KO
    rise 2 # require 2 successes for OK
}

vrrp_instance lb-vips {
    state MASTER
    interface ens33 # name of the NIC that carries the VIP
    virtual_router_id 100
    priority 150
    advert_int 1
    nopreempt # only honored when the initial state is BACKUP
    track_script {
        chk_haproxy
    }
    authentication {
        auth_type PASS
        auth_pass blahblah
    }
    virtual_ipaddress {
        192.168.178.141/24 dev ens33 # VIP address, on the same NIC as "interface" above
    }
}
EOF

# Start keepalived
systemctl enable --now keepalived

# Check the VIP
ip a
journalctl -fu keepalived
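Reading through the full `ip a` output by eye gets tedious on three nodes. As a rough sketch (the helper name `has_vip` is made up for this example), the check can be reduced to a yes/no answer by filtering the one-line output of `ip -o -4 addr show`:

```shell
# has_vip VIP
# Read `ip -o -4 addr show` style output on stdin and succeed
# if VIP is bound to any interface on this node.
has_vip() {
  grep -Fq "inet $1/"
}

# Example call on a load-balancer node:
#   ip -o -4 addr show | has_vip 192.168.178.141 && echo "VIP is on this node"
```

Only one of the keepalived nodes should hold the VIP at any time; if none or several do, the `journalctl` output above is the place to look.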

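### Generate the default `kubeadm` configuration file kubeadm-config.yaml

Lines that were modified are marked with a # comment; adjust the details to match your own version.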

# Generate the default kubeadm configuration file
kubeadm config print init-defaults --component-configs KubeProxyConfiguration,KubeletConfiguration > kubeadm-config.yaml

# Complete configuration file after modification

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.178.138 # IP of the machine running kubeadm init
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: master01
  taints: null

---
controlPlaneEndpoint: 192.168.178.141:8443 # VIP
apiServer:
  timeoutForControlPlane: 4m0s
  extraArgs:
    authorization-mode: "Node,RBAC"
    enable-admission-plugins: "DefaultIngressClass,DefaultStorageClass,DefaultTolerationSeconds,LimitRanger,MutatingAdmissionWebhook,NamespaceLifecycle,PersistentVolumeClaimResize,PodSecurity,Priority,ResourceQuota,RuntimeClass,ServiceAccount,StorageObjectInUseProtection,TaintNodesByCondition,ValidatingAdmissionPolicy,ValidatingAdmissionWebhook" # admission control
    etcd-servers: https://master01:2379,https://master02:2379,https://master03:2379 # master nodes
  certSANs:
  - 192.168.178.141 # VIP address
  - 10.96.0.1 # first IP of the service CIDR
  - 127.0.0.1 # with multiple masters, allows quick debugging via localhost if the load balancer breaks
  - master01
  - master02
  - master03
  - kubernetes
  - kubernetes.default
  - kubernetes.default.svc
  - kubernetes.default.svc.cluster.local
  extraVolumes:
  - hostPath: /etc/localtime
    mountPath: /etc/localtime
    name: timezone
    readOnly: true
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
    serverCertSANs: # certificate distribution
    - master01
    - master02
    - master03
    peerCertSANs:
    - master01
    - master02
    - master03
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.29.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16 # must match the "Network" value in flannel's net-conf.json
scheduler:
  extraVolumes:
  - hostPath: /etc/localtime
    mountPath: /etc/localtime
    name: timezone
    readOnly: true

---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
bindAddressHardFail: false
clientConnection:
  acceptContentTypes: ""
  burst: 0
  contentType: ""
  kubeconfig: /var/lib/kube-proxy/kubeconfig.conf
  qps: 0
clusterCIDR: ""
configSyncPeriod: 0s
conntrack:
  maxPerCore: null
  min: null
  tcpBeLiberal: false
  tcpCloseWaitTimeout: null
  tcpEstablishedTimeout: null
  udpStreamTimeout: 0s
  udpTimeout: 0s
detectLocal:
  bridgeInterface: ""
  interfaceNamePrefix: ""
detectLocalMode: ""
enableProfiling: false
healthzBindAddress: ""
hostnameOverride: ""
iptables:
  localhostNodePorts: null
  masqueradeAll: false
  masqueradeBit: null
  minSyncPeriod: 0s
  syncPeriod: 0s
ipvs:
  excludeCIDRs: null
  minSyncPeriod: 0s
  scheduler: ""
  strictARP: false
  syncPeriod: 0s
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
kind: KubeProxyConfiguration
logging:
  flushFrequency: 0
  options:
    json:
      infoBufferSize: "0"
  verbosity: 0
metricsBindAddress: ""
mode: "ipvs" # IPVS mode
nftables:
  masqueradeAll: false
  masqueradeBit: null
  minSyncPeriod: 0s
  syncPeriod: 0s
nodePortAddresses: null
oomScoreAdj: null
portRange: ""
showHiddenMetricsForVersion: ""
winkernel:
  enableDSR: false
  forwardHealthCheckVip: false
  networkName: ""
  rootHnsEndpointName: ""
  sourceVip: ""

---
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: systemd # systemd cgroup driver
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
containerRuntimeEndpoint: ""
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMaximumGCAge: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
logging:
  flushFrequency: 0
  options:
    json:
      infoBufferSize: "0"
  verbosity: 0
memorySwap: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s
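The `10.96.0.1` entry in `certSANs` is not arbitrary: Kubernetes gives the first address of the service CIDR to the built-in `kubernetes` Service, so it has to follow `serviceSubnet`. If you pick a different `serviceSubnet`, the matching address can be worked out as below. This is only an illustrative sketch in plain shell arithmetic, and `first_service_ip` is a hypothetical helper name.

```shell
# first_service_ip CIDR
# Print the first address after the network address of an IPv4 CIDR,
# e.g. the address the `kubernetes` Service gets inside serviceSubnet.
first_service_ip() {
  addr="${1%/*}"      # part before the slash, e.g. 10.96.0.0
  prefix="${1#*/}"    # part after the slash, e.g. 12
  IFS=. read -r o1 o2 o3 o4 <<EOF
$addr
EOF
  n=$(( ($o1 << 24) | ($o2 << 16) | ($o3 << 8) | $o4 ))
  mask=$(( (4294967295 << (32 - $prefix)) & 4294967295 ))
  first=$(( ($n & $mask) + 1 ))
  echo "$(( ($first >> 24) & 255 )).$(( ($first >> 16) & 255 )).$(( ($first >> 8) & 255 )).$(( $first & 255 ))"
}

# Example call:
#   first_service_ip 10.96.0.0/12
```

For the `serviceSubnet` used in this article the result is `10.96.0.1`, which is the value listed in `certSANs`.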

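# Validate the configuration
kubeadm init --config kubeadm-config.yaml --dry-run

# Pre-pull the images
kubeadm config images pull --config kubeadm-config.yaml

# On the other nodes
kubeadm config images pull

# Initialize the cluster
kubeadm init --config kubeadm-config.yaml --upload-certs

The `--upload-certs` flag uploads the certificates shared by all control-plane instances to the cluster.

Wait for initialization to finish. The output looks like this: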

···

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 192.168.178.141:8443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:0bc9dd684a2e3e1417e85765ef826208d2acfdbc530b6d641bb7f09e3a7e069f \
    --control-plane --certificate-key 1c5a48c6d5ea3765c69f42458fda18381752a16618796be6798e117d4cc55ac3

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.178.141:8443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:0bc9dd684a2e3e1417e85765ef826208d2acfdbc530b6d641bb7f09e3a7e069f

···

# Join the other master nodes
[root@master02 ~]# kubeadm join 192.168.178.141:8443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:0bc9dd684a2e3e1417e85765ef826208d2acfdbc530b6d641bb7f09e3a7e069f --control-plane --certificate-key 1c5a48c6d5ea3765c69f42458fda18381752a16618796be6798e117d4cc55ac3

# Join the worker nodes
kubeadm join 192.168.178.141:8443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:0bc9dd684a2e3e1417e85765ef826208d2acfdbc530b6d641bb7f09e3a7e069f
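A note on the token used in these join commands: `abcdef.0123456789abcdef` is the placeholder that `kubeadm config print init-defaults` emits, so anything beyond a lab setup should replace it (`kubeadm token generate` prints a random one). kubeadm expects bootstrap tokens in the form `[a-z0-9]{6}.[a-z0-9]{16}`. A small sketch for checking that shape before pasting a token into the config, with `is_valid_token` as a hypothetical helper name:

```shell
# is_valid_token TOKEN
# Succeed if TOKEN has the kubeadm bootstrap-token shape:
# 6 lowercase alphanumerics, a dot, then 16 lowercase alphanumerics.
is_valid_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Example call:
#   is_valid_token abcdef.0123456789abcdef && echo "token format ok"
```

This checks the format only. Whether a token is still accepted by the cluster depends on its `ttl` (24h in the configuration above).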

# Check from a control-plane node
kubectl get nodes
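At this point the nodes will usually still report `NotReady`, because no pod network has been deployed yet. To re-check after each step without reading the table by hand, the output can be reduced to a count. This is a rough sketch and `count_not_ready` is a made-up helper name; it relies on the STATUS column being the second field of `kubectl get nodes --no-headers`.

```shell
# count_not_ready
# Read `kubectl get nodes --no-headers` output on stdin and print how many
# nodes have a STATUS other than exactly "Ready" (so "NotReady" and
# "Ready,SchedulingDisabled" are both counted).
count_not_ready() {
  awk 'NF && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Example call:
#   kubectl get nodes --no-headers | count_not_ready
```

A result of `0` after the network add-on is running means every node has become `Ready`.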

## 2: Network deployment

flannel in host-gw mode

# Download (for Kubernetes v1.17+)
wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

# Edit
vim kube-flannel.yml

  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "host-gw"
      }
    }
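The `Network` value here has to be the same range as `podSubnet` in kubeadm-config.yaml, otherwise pods get addresses that flannel does not route. A quick way to compare the two files before installing, written as a minimal sketch: the helper names are hypothetical, and the `sed` patterns assume the simple one-value-per-line layout shown in this article.

```shell
# pod_subnet FILE: print the podSubnet value from a kubeadm config file.
pod_subnet() {
  sed -n 's/^[[:space:]]*podSubnet:[[:space:]]*\([^[:space:]#]*\).*/\1/p' "$1" | head -n 1
}

# flannel_network FILE: print the "Network" value from kube-flannel.yml.
flannel_network() {
  sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# subnets_match KUBEADM_CONFIG FLANNEL_MANIFEST
# Succeed only if both values were found and are identical.
subnets_match() {
  [ -n "$(pod_subnet "$1")" ] && [ "$(pod_subnet "$1")" = "$(flannel_network "$2")" ]
}

# Example call:
#   subnets_match kubeadm-config.yaml kube-flannel.yml || echo "podSubnet and flannel Network differ"
```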

# Before installing, run the following commands
ip addr show
route -n

# Install
kubectl create -f kube-flannel.yml

# Check
kubectl get all -n kube-flannel

···

NAME                        READY   STATUS    RESTARTS   AGE
pod/kube-flannel-ds-4wl74   1/1     Running   0          17m
pod/kube-flannel-ds-r7j2n   1/1     Running   0          17m
pod/kube-flannel-ds-w79wg   1/1     Running   0          17m

自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。

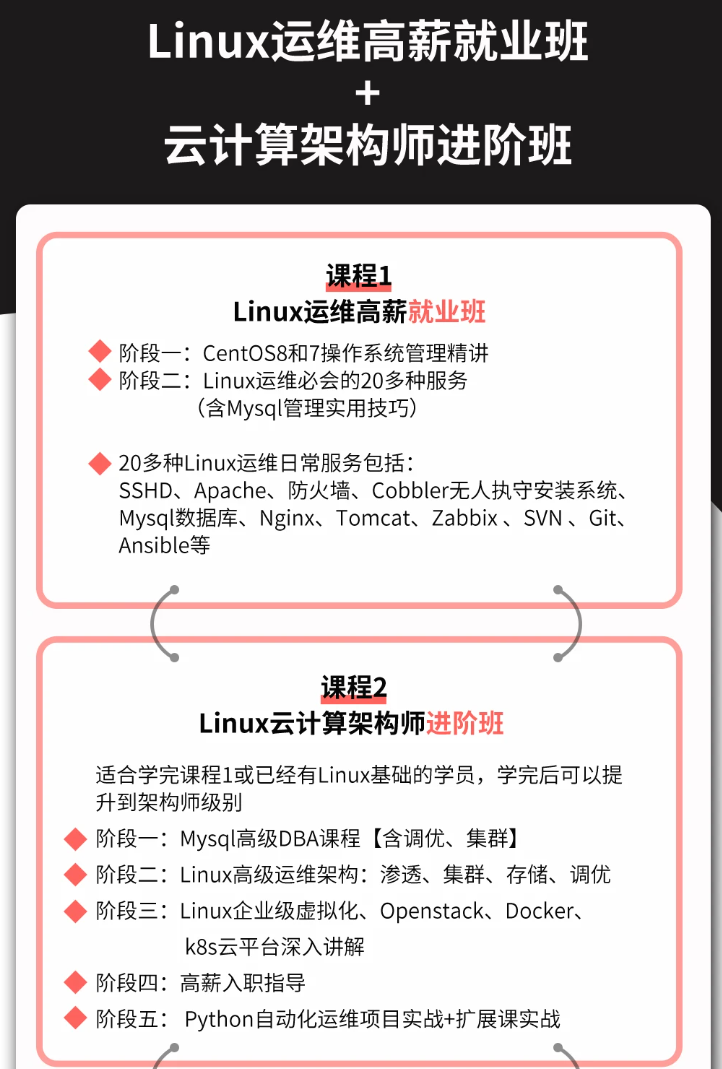

深知大多数Linux运维工程师,想要提升技能,往往是自己摸索成长或者是报班学习,但对于培训机构动则几千的学费,着实压力不小。自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

因此收集整理了一份《2024年Linux运维全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友,同时减轻大家的负担。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上Linux运维知识点,真正体系化!

由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新

如果你觉得这些内容对你有帮助,可以添加VX:vip1024b (备注Linux运维获取)

…(img-OJ5kJfoK-1712957036926)]

[外链图片转存中…(img-N0KqH91x-1712957036926)]

[外链图片转存中…(img-tHPZvXMR-1712957036926)]

[外链图片转存中…(img-Zph0Ef70-1712957036927)]

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上Linux运维知识点,真正体系化!

由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新

如果你觉得这些内容对你有帮助,可以添加VX:vip1024b (备注Linux运维获取)

[外链图片转存中…(img-XnaP6Ses-1712957036927)]

更多推荐

已为社区贡献11条内容

已为社区贡献11条内容

所有评论(0)