K8s Core Network Disaster-Recovery Deployment Plan


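1. Physical/virtual machine preparation (run on all three machines)

- 1 master + 2 nodes

- Ubuntu 18.04

- Switch the apt mirror: vim /etc/apt/sources.list

# Source-package mirrors are commented out by default to speed up apt update; uncomment them if needed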

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse

# Pre-release (proposed) repository; enabling it is not recommended

# deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-proposed main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-proposed main restricted universe multiverse

- Refresh the package index

sudo apt update

- Disable swap (run on all three machines)

sudo swapoff -a

- Install Docker

sudo apt install docker.io

- Change the hostname (on every machine)

- Master

vim /etc/hostname

Set it to: kubeedge-master

- Node-01

vim /etc/hostname

Set it to: kubeedge-edge01

- Node-02

vim /etc/hostname

Set it to: kubeedge-edge02
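`swapoff -a` only lasts until the next reboot, and the kubelet by default refuses to start while swap is active. A minimal sketch, assuming swap is declared in /etc/fstab, that comments out the swap entries so the setting persists. The helper name `disable_swap_in_fstab` is ours and not part of any tool.

```shell
#!/bin/bash
# Comment out every active swap entry in an fstab-style file.
# Hypothetical helper; the default path /etc/fstab is an assumption.
disable_swap_in_fstab() {
    local fstab="${1:-/etc/fstab}"
    local tmp
    tmp="$(mktemp)" || return 1
    # Prefix '# ' to lines that are not already comments and that
    # contain a whitespace-delimited "swap" field.
    sed -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/# /' "$fstab" > "$tmp" || return 1
    # Write back through the original file so its permissions are kept.
    cat "$tmp" > "$fstab" && rm -f "$tmp"
}
```

Run it as root after `swapoff -a`, for example `disable_swap_in_fstab /etc/fstab`, and keep a backup copy of the file first.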

2. Master node configuration

- Run the following commands in order

sudo apt-get update && sudo apt-get install -y apt-transport-https

apt install curl

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

- Then run the following command

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF

- Refresh the package index

apt-get update

- Install Kubernetes

apt install kubelet=1.22.15-00 kubectl=1.22.15-00 kubeadm=1.22.15-00

- Initialize the Master node (first run sudo su to become root)

kubeadm init --image-repository=registry.aliyuncs.com/google_containers --pod-network-cidr=10.244.0.0/16 --kubernetes-version=v1.22.15

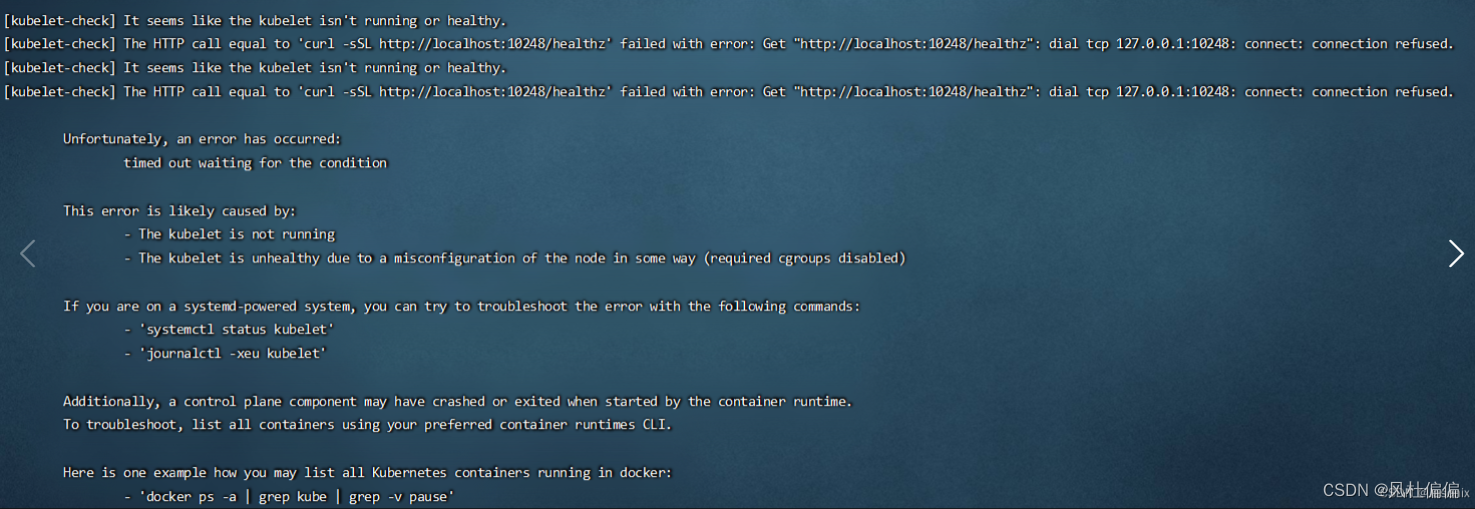

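- If initialization fails (the fix below addresses a cgroup-driver mismatch between Docker and the kubelet), run the following command:

vim /etc/docker/daemon.json

- Copy the following content into daemon.json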

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

- Then run the following commands:

systemctl daemon-reload

systemctl restart docker

systemctl restart kubelet

kubeadm reset

- Then re-run the init command:

kubeadm init --image-repository=registry.aliyuncs.com/google_containers --pod-network-cidr=10.244.0.0/16 --kubernetes-version=v1.22.15
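Before re-running `kubeadm init`, it can help to confirm that the systemd cgroup driver setting is really in place. A small sketch that checks the file edited above; the helper name `has_systemd_cgroup` is hypothetical, and the check is a plain text match rather than a JSON parse. On a live host, `docker info --format '{{.CgroupDriver}}'` reports the driver Docker is using.

```shell
#!/bin/bash
# Return 0 if the given daemon.json sets native.cgroupdriver=systemd.
# Hypothetical helper: a plain text match, not a full JSON parse.
has_systemd_cgroup() {
    local cfg="${1:-/etc/docker/daemon.json}"
    [ -r "$cfg" ] && grep -q '"native.cgroupdriver=systemd"' "$cfg"
}
```

For example, `has_systemd_cgroup && echo "cgroup driver configured"` prints the message only when the setting is present.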

- Then run the following commands:

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

export KUBECONFIG=/etc/kubernetes/admin.conf

- Finally, check that the Master came up successfully:

kubectl get pods -o wide --all-namespaces

- Configure the flannel network plugin on the Master node

- Create the file kube-flannel.yml and copy in the following content

vim ~/kube-flannel.yml

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - clustercidrs
  verbs:
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    k8s-app: flannel
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
    k8s-app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: docker.io/flannel/flannel-cni-plugin:v1.2.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: docker.io/flannel/flannel:v0.22.3
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: docker.io/flannel/flannel:v0.22.3
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

- Run the following command to start flannel

kubectl apply -f ~/kube-flannel.yml

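3. Worker node configuration

- Install kubelet, kubectl and kubeadm the same way as on the Master node

apt install kubelet=1.22.15-00 kubectl=1.22.15-00 kubeadm=1.22.15-00

- Then run the following command to join the node to the master (the command is printed after kubeadm init succeeds on the master; adjust it to your own output)

kubeadm join 192.168.112.64:6443 --token p4rdk9.a04jar6kywvnelpf --discovery-token-ca-cert-hash sha256:d407605d84f27512297f75c0dc13c133149086d1ce6788109144c8247675fac1

- After it succeeds, run the following on the master to confirm the node has joined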

kubectl get nodes -o wide
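The token printed by `kubeadm init` expires (after 24 hours by default); running `kubeadm token create --print-join-command` on the master prints a fresh join command. Before pasting values into a worker, a quick format check can catch copy errors. The sketch below uses a hypothetical helper, `check_join_args`; its patterns reflect the kubeadm token shape (6 characters, a dot, 16 characters) and a SHA-256 hex digest.

```shell
#!/bin/bash
# Validate the shape of a kubeadm bootstrap token and CA cert hash.
# Hypothetical helper; it checks format only, not validity on the cluster.
check_join_args() {
    local token="$1" ca_hash="$2"
    local token_re='^[a-z0-9]{6}\.[a-z0-9]{16}$'
    local hash_re='^sha256:[a-f0-9]{64}$'
    [[ "$token" =~ $token_re ]] || return 1
    [[ "$ca_hash" =~ $hash_re ]] || return 1
}
```

A passing check only means the strings are well formed; an expired token still has to be regenerated on the master.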

4. Core network deployment

- Network topology

- Load the core network image files on all worker nodes

tar -xzvf r2.2311.7_u22_cloud_cy.tar.gz

tar -xvf amf-r2.2311.7_u22_cloud_cy.tgz

cd amf-r2.2311.7_u22_cloud

docker load < amf_image_v2.2311.7.tar

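- The UPF image on the worker nodes must be replaced with upf-v2.tar (it needs the ifconfig command and iptables so that NAT rules inside the UPF can be added automatically from outside, which lets UEs reach data outside the 5G network domain)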

iptables -t nat -A POSTROUTING -s 10.2.1.0/24 -o eth0 -j SNAT --to $ipcommand

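- Load the other image files the same way; the final result looks like this:

- Then, on the Master node, run the following to deploy the redis service

docker run --name my-redis-container -d -p 10.0.0.2:6379:6379 redis

- Replace the IP (10.0.0.2) with the Master node's IP

- Install the NFS service, used to mount directories into the containers

- Run on all nodes

apt install nfs-kernel-server portmap

- Then run the following commands on the Master node:

mkdir /nfs/

vim /etc/exports

/nfs *(rw,sync,no_root_squash)    (note: add this line to /etc/exports)

exportfs -arv

- Check that NFS is running

showmount -e 192.168.112.64

- The expected output is shown in the figure below:

- Create the following files in the /nfs directory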

vim amfcfg.yaml

info:

version: 1.0.0

description: AMF initial local configuration

configuration:

instance: 0

amfName: AMF

tnlAssociationList:

- ngapIpList:

- 127.0.1.10

ngapSctpPort: 38412

weightFactor: 255

sctpOutStreams: 2

dscpEnabled: false

dscpValue: 48

relativeCapacity: 255

backupAmfEnabled: false

backupAmfName: AMF-BAK

managementAddr: 127.0.1.10

sbi:

scheme: http

registerIPv4: 127.0.1.10

registerIPv6:

bindingIPv4: 127.0.1.10

bindingIPv6:

port: 8080

tls:

minVersion: 1.2

maxVersion: 1.3

timeoutValue: 2

shortConnection: false

serviceNameList:

- namf-comm

- namf-evts

- namf-mt

- namf-loc

servedGuamiList:

- plmnId:

mcc: 460

mnc: 00

regionId: 1

setId: 1

pointer: 1

supportTaiList:

- plmnId:

mcc: 460

mnc: 00

tac:

- 4388

plmnSupportList:

- plmnId:

mcc: 460

mnc: 00

snssaiList:

- sst: 1

sd: 000001

equivalentPlmn:

enable: false

plmnList:

- mcc: 460

mnc: 01

overloadProtection:

enable: false

totalUeNum: 10000

cpuPercentage: 80

n1RateLimit:

enable: false

recvRateLimit: 20

minBackoffTimerVal: 900

maxBackoffTimerVal: 1800

n2RecvRateLimit:

enable: false

recvRateLimit: 20

overloadEnable: false

overloadAction: 1

overloadCheckTime: 10

n2SendRateLimit:

enable: false

sendRateLimit: 20

n8RateLimit:

enable: false

sendRateLimit: 20

abnormalSignalingControl:

enable: false

period: 1h

threshold: 10

authReject2NasCause: 7

sliceNotFound2NasCause: 7

no5gsSubscription2NasCause: 7

ratNotAllowed2NasCause: 27

noAvailableSlice2NasCause: 62

noAvailableAusf2NasCause: 15

checkSliceInTaEnabled: false

uePolicyEnabled: false

smsOverNasEnabled: false

statusReportEnabled: false

statusReportImsiPre: 4600001

dnnCorrectionEnabled: false

gutiReallocationInPRUEnabled: false

ueRadioCapabilityMatchEnabled: false

triggerInitCtxSetupForAllNASProc: true

supportFollowOnRequestIndication: false

supportAllAllowedNssai: false

abnormalSignalingControlEnabled: false

implicitUnsubscribeEnabled: true

supportRRCInactiveReport: false

optimizeSignalingProcedure: false

reAuthInServiceRequestProc: false

skipGetSubscribedNssai: false

supportDnnList:

- cmnet

sendDnnOiToSmf: false

nrfEnabled: false

pcfDiscoveryParameter: SUPI

nrfUri: http://127.0.1.60:8080

ausfUri: http://127.0.1.40:8080

udmUri: http://127.0.1.30:8080

smfUri: http://127.0.1.20:8080

pcfUri: http://127.0.1.50:8080

lmfUri: http://127.0.1.80:8080

nefUri: http://127.0.1.70:8080

httpManageCfg:

ipType: ipv4

ipv4: 127.0.1.10

ipv6:

port: 3030

scheme: http

oamConfig:

enable: true

ipType: ipv4

ipv4: 127.0.1.10

ipv6:

port: 3030

scheme: http

neConfig:

neId: 001

rmUid: 4400HX1AMF001

neName: AMF_001

dn: TN

vendorName:

province: GD

pvFlag: PNF

udsfEnabled: false

redisDb:

enable: false

netType: tcp

addr: 127.0.1.90:6379

security:

integrityOrder:

- algorithm: NIA2

priority: 1

cipheringOrder:

- algorithm: NEA0

priority: 1

debugLevel: trace

networkNameList:

- plmnId:

mcc: 460

mnc: 00

enable: false

codingScheme: 0

full: agt5GC

short: agt

timeZone: +08:00

nssaiInclusionModeList:

- plmnId:

mcc: 460

mnc: 00

mode: A

networkFeatureSupport5GS:

enable: true

length: 1

imsVoPS: 1

emc: 0

emf: 0

iwkN26: 0

mpsi: 0

emcN3: 0

mcsi: 0

drxVaule: 0

t3502Value: 720

configurationPriority: true

t3512Value: 3600

mobileReachabilityTimerValue: 3840

implicitDeregistrationTimerValue: 3840

non3gppDeregistrationTimerValue: 3240

n2HoldingTimerValue: 3

t3513:

enable: true

expireTime: 2s

maxRetryTimes: 2

t3522:

enable: true

expireTime: 2s

maxRetryTimes: 2

t3550:

enable: true

expireTime: 2s

maxRetryTimes: 2

t3555:

enable: true

expireTime: 2s

maxRetryTimes: 2

t3560:

enable: true

expireTime: 2s

maxRetryTimes: 2

t3565:

enable: true

expireTime: 2s

maxRetryTimes: 2

t3570:

enable: true

expireTime: 2s

maxRetryTimes: 2

purgeTimer:

enable: false

expireTime: 24h

vim ausfcfg.yaml

configuration:

sbi:

scheme: http

tls:

verifyClientCrt: true

verifyServerCrt: true

minVersion: "1.2"

maxVersion: "1.3"

registerIPv4: 127.0.1.40

bindingIPv4: 127.0.1.40

port: 8080

idleTimeout: 3

udmUri: http://127.0.1.30:8080

supiRanges:

- imsi-460000100080000~imsi-460000100080099

- imsi-460010100080000~imsi-460010100080099

plmnSupportList:

- mcc: "460"

mnc: "00"

- mcc: "460"

mnc: "01"

groupId: ausfGroup001

priority: 65535

capacity: 4096

logLevel: info

NFId: 101cfba8-c852-4d58-8824-3aadb32f9e31

intraUdmIPv4: 127.0.1.30

httpManageCfg:

ipType: ipv4

ipv4: 127.0.1.40

ipv6: ""

port: 3030

scheme: http

oamConfig:

enable: true

ipType: ipv4

ipv4: 127.0.1.40

ipv6: ""

port: 3030

scheme: http

neConfig:

neId: "001"

rmUid: 4400HX1AUSF001

neName: AUSF_001

dn: TN

vendorName: ""

province: GD

pvFlag: PNF

nrf:

enable: false

nrfUri: http://127.0.1.60:8080

telnet:

port: 4100

snmp:

bindingIPv4: 127.0.1.40

port: 4957

vim nef_conf.yaml

debuglogging:

file: /var/log/nef.log

level: error

httpcfg:

ip: 127.0.1.70

port: 8080

scheme: http

amf:

ip: 127.0.1.10:8080

pcf:

ip: 127.0.1.50:8080

udm:

ip: 127.0.1.30:8080

af:

ip: 192.168.18.174:8080

snmpSeqNo: 13750008

localSNMP:

ip: 127.0.1.70

port: 4957

amfSNMP:

ip: 127.0.1.10

port: 4957

ausfSNMP:

ip: 127.0.1.140

port: 4957

udmSNMP:

ip: 127.0.1.30

port: 4957

smfSNMP:

ip: 127.0.1.20

port: 4957

pcfSNMP:

ip: 127.0.1.50

port: 4957

upfSNMP:

ip: 127.0.1.100

port: 4957

nrfSNMP:

ip: 127.0.1.60

port: 4957

nssfSNMP:

ip: 192.168.8.10

port: 4957

imsSNMP:

ip: 192.168.8.11

port: 4957

vim pcfcfg.yaml

configuration:

sbi:

scheme: http

tls:

verifyClientCrt: true

verifyServerCrt: true

minVersion: 0x0303

maxVersion: 0x0304

registerIPv4: 127.0.1.50

bindingIPv4: 127.0.1.50

port: 8080

idleTimeout: 3

serviceList:

- serviceName: npcf-am-policy-control

- serviceName: npcf-ue-policy-control

- serviceName: npcf-smpolicycontrol

suppFeat: 3fff

- serviceName: npcf-policyauthorization

suppFeat: 3

supiRanges:

- imsi-001010100080000~imsi-001010100080099

plmnSupportList:

- mcc: 001

mnc: 01

logLevel: info

NFId: 88e0343e-8b79-11eb-bc48-5becbb2e9678

systemID: 0

httpManageCfg:

ipType: ipv4

ipv4: 127.0.1.50

ipv6: ''

port: 3030

scheme: http

oamConfig:

enable: true

ipType: ipv4

ipv4: 127.0.1.51

ipv6: ''

port: 3030

scheme: http

neConfig:

neId: '001'

rmUid: 4400HX1PCF001

neName: PCF_001

dn: TN

vendorName: ''

province: GD

pvFlag: PNF

nrf:

enable: false

nrfUri: http://127.0.1.60:8080

nef:

nefUri: http://127.0.1.70:8080

ueReport: false

amfUri: http://127.0.1.10:8080

redisDb:

netType: tcp

addr: 192.168.112.64:6379

gxServer:

enabled: false

netType: tcp

addr: 127.0.1.50:3868

host: pcrf.epc.mnc000.mcc460.3gppnetwork.org

realm: epc.mnc000.mcc460.3gppnetwork.org

rxServer:

enabled: false

netType: tcp

addr: 127.0.1.50:3867

host: pcrf.epc.mnc000.mcc460.3gppnetwork.org

realm: epc.mnc000.mcc460.3gppnetwork.org

ueReport:

enabled: false

mqUrl: amqp://rabbitmq:SpnMq0914@192.168.111.123:5672/

priAuthResQue: pushPriAuthResQueue

dataUsageQue: pushDataUsageQueue

userStatusQue: pushUserStatusQueue

vplmnCheatQue: pushVplmnCheatQueue

snmp:

bindingIPv4: 127.0.1.50

IPType: ipv4

telnet:

port: 4100

userNum: 8

vim smf_conf.yaml

localconfig:

debuglogging:

file: /var/log/smf.log

level: debug

gtpucfg:

ipv4: ""

ipv6: ""

httpcfg:

scheme: http

ip: 127.0.1.20

ipv6: ""

port: 8080

serveridletime: 0

serverauthclient: 0

clientskipverify: false

tlsminversion: ""

tlsmaxversion: ""

certexpirydays: 0

ischarging: true

ladndnn: ;

ladnreleasetimer: 10

n4interfacecfg:

iptype: ipv4

ipv4: 127.0.1.20

port: 8805

pcscfip:

ipv4:

default: 127.0.1.20

smfinstanceid: b00ea745-e25a-11ec-ae22-000c29a0c97a

snmpcfg:

ip: 127.0.1.20

port: 4957

systemid: 0

spgwcfg:

s11ipaddr: 127.0.1.20

localdiamcfg:

ipaddr: 127.0.1.20

hostname: smf

realmname: smf.com

gycfg:

enable: false

primrmtip: ""

primrmtport: 3868

secrmtip: ""

secrmtport: 3868

gxcfg:

enable: false

ischarge: false

primrmtip: 127.0.1.20

primrmtport: 3868

secrmtip: ""

secrmtport: 3868

telnetip: 127.0.1.20

uedns:

ipv4:

default: 114.114.114.114

secondary: 223.5.5.5

uemtu: 1400

upintegrityprotrate:

dlrate: 255

enable: false

ulrate: 255

localprofilecfg:

plmnlist: 460-00;460-01

snssailist:

- dnnlist: example-1;example-2;example-3

snssai:

sd: "000000"

sst: 1

tailist:

- plmnid:

mcc: "000"

mnc: "00"

tac: "000000"

tairangelist:

- plmnId:

mcc: "000"

mnc: "00"

tacRangeList:

- end: "000111"

pattern: ""

start: "000000"

- end: ""

pattern: ^111[0-9a-fA-F]{3}$

start: ""

nfconfig:

amf:

ip:

- 127.0.1.10:8080

nrfenable: false

nrf: 127.0.1.60:8080

secodarynrf: ""

nrfenabledisc: false

pcf:

ip:

- 127.0.1.50:8080

udm:

ip:

- 127.0.1.30:8080

nef: 127.0.1.70:8080

serviceareas:

- plmnId:

mcc: ""

mnc: ""

tac: ""

snssaiapncfg:

- apn: ""

snssai:

sst: 0

sd: ""

statusreport:

enable: false

traffrptperiod: 10

totaltraffrptthreshold: 0

upfconfig:

- id: upf-1

ip: 127.0.1.100:8805

uednnippool:

- dnn: test

ueippool:

ipv4:

- 10.10.13.0/24

ipv6:

- fd0d:10:1::/50

uestaticipv4enable: false

uestaticipv4rangebegin: 0.0.0.0

uestaticipv4rangeend: 0.0.0.0

uestaticipv6enable: false

uestaticipv6rangebegin: ""

uestaticipv6rangeend: ""

ueippool:

ipv4:

- 10.2.1.0/24

ipv6:

- null

uestaticipv4enable: false

uestaticipv4rangebegin: 10.2.1.200

uestaticipv4rangeend: 10.2.1.240

uestaticipv6enable: false

uestaticipv6rangebegin: ""

uestaticipv6rangeend: ""

upfdnaicfg:

- dnai: example

psaupfid: upf2-id

ulclupfid: upf3-id

upfselectcfg:

dnntaiiupfpsaupfmap:

- dnn: example

iupfid: upf3-id

psaupfid: upf4-id

tai: "46000666666"

dnntaisnssaiupfmap:

- dnn: example

snssai:

sd: "000000"

sst: 1

tai: "46000666666"

upfid:

- upf2-id

dnntaiupfmap:

- dnn: example

tai: "46000666666"

upfid:

- upf2-id

dnnupfmap:

- Dnn: cmnet

upfid:

- upf-1

- Dnn: ims

upfid:

- upf-1

staticueipv4upfmap:

- endipv4: 169.0.0.64

psaupfid: upf1-id

startipv4: 169.0.0.12

upfulclselectcfg:

dnntaisnssaiulclmap:

- dnn: example

psa1upfid: upf1-id

psa1upfsdf: permit out ip from any to assigned

psa2upfid: upf2-id

psa2upfsdf: permit out ip from 192.168.0.0/16 to assigned

snssai:

sd: "000000"

sst: 1

tai: "46000666666"

ulclupfid: upf3-id

dnntaiulclmap:

- dnn: example

psa1upfid: upf1-id

psa1upfsdf: permit out ip from any to assigned

psa2upfid: upf2-id

psa2upfsdf: permit out ip from 192.168.0.0/16 to assigned

tai: "46000666666"

ulclupfid: upf3-id

dnnulclmap:

- dnn: example

psa1upfid: upf1-id

psa1upfsdf: permit out ip from any to assigned

psa2upfid: upf2-id

psa2upfsdf: permit out ip from 192.168.0.0/16 to assigned

ulclupfid: upf2-id

vim udmcfg.yaml

configuration:

sbi:

scheme: http

tls:

verifyClientCrt: true

verifyServerCrt: true

minVersion: '1.2'

maxVersion: '1.3'

registerIPv4: 127.0.1.30

bindingIPv4: 127.0.1.30

port: 8080

idleTimeout: 3

serviceNameList:

- nudm-sdm

- nudm-uecm

- nudm-ueau

groupId: "0"

priority: 1

capacity: 4096

fqdn: udm.5gc.com

supiRanges: imsi-001010100080000~imsi-001010100080099

gpsiRanges: msisdn-69072000~msisdn-69072099

plmnList:

- mcc: 460

mnc: 00

logLevel: info

NFId: b4c9e3d4-b7f8-4906-aade-5a84940afe29

systemID: 0

intraN13: false

intraAusfIPv4: 127.0.1.40

httpManageCfg:

ipType: ipv4

ipv4: 127.0.1.30

ipv6: ''

port: 3030

scheme: http

oamConfig:

enable: true

ipType: ipv4

ipv4: 127.0.1.31

ipv6: ''

port: 3030

scheme: http

neConfig:

neId: '001'

rmUid: 4400HX1UDM001

neName: UDM_001

dn: TN

vendorName: ''

province: GD

pvFlag: PNF

nrf:

enable: false

nrfUri: http://127.0.1.60:8080

telnet:

port: 4100

ssh:

enable: false

port: 4101

snmp:

bindingIPv4: 127.0.1.30

port: 4957

redisDb:

netType: tcp

addr: 192.168.112.64:6379

s6aServer:

enable: false

netType: "sctp"

addr: 127.0.1.30:3868

host: hss.ims.mnc000.mcc460.3gppnetwork.org

realm: ims.mnc000.mcc460.3gppnetwork.org

cxServer:

enable: true

netType: "tcp"

addr: 127.0.1.30:3868

host: hss.ims.mnc000.mcc460.3gppnetwork.org

realm: ims.mnc000.mcc460.3gppnetwork.org

z1:

enable: false

z1Uri: "http://192.168.111.50:9999"

authorize:

enable: false

hours: 1024

sipNumberWithPlus: false

overloadProtection:

enable: false

cpuPercentage: 60

registerPerSec: 100

retryAfterSec: 30

trace:

enable: false

vim upf_conf.yaml

logger:

directory: /var/log

level: debug

size: 500

number: 9

cpu:

main: 1

#workers: 2, 3

upf:

pfcp:

- addr: 127.0.1.100

gtpu:

- addr: 127.0.1.101

subnet:

- addr: 10.2.0.0/16

#- addr: 2001:db8:cafe::1/48

created: 0

telnet:

- addr: 127.0.0.1

port: 4100

qer_enable: 0

local_switch_disable: 0

flow_reflect_enable: 0

#n9:

#- addr: 0.0.0.0

#n19:

#- addr: 0.0.0.0

vim getIp.sh (used by the UPF to read its NIC IP and set the NAT rule)

#!/bin/bash

ipcommand=`ifconfig eth0 | awk 'NR==2{print $2}'`

iptables -t nat -A POSTROUTING -s 10.2.1.0/24 -o eth0 -j SNAT --to $ipcommand
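getIp.sh depends on the line layout of `ifconfig` output, which differs between net-tools versions. A hedged alternative, assuming the UPF image also ships iproute2 (the source does not confirm this), parses the one-line format of `ip -o -4 addr show` instead. The helper name `first_ipv4` is ours.

```shell
#!/bin/bash
# Print the first IPv4 address found in `ip -o -4 addr show` output on stdin.
# Hypothetical helper; expects the third field to be "inet" and the
# fourth to be ADDRESS/PREFIX, as in the one-line (-o) output format.
first_ipv4() {
    awk '$3 == "inet" { split($4, a, "/"); print a[1]; exit }'
}
# Example use inside the UPF container (assumes iproute2 is installed):
#   ip_addr="$(ip -o -4 addr show dev eth0 | first_ipv4)"
#   [ -n "$ip_addr" ] && iptables -t nat -A POSTROUTING -s 10.2.1.0/24 -o eth0 -j SNAT --to "$ip_addr"
```

Guarding the iptables call with `[ -n "$ip_addr" ]` avoids installing a SNAT rule with an empty target when eth0 has no address yet.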

- The network functions that use redis (udm and pcf) need their redis address changed to the Master node's IP

- udmcfg.yaml

redisDb:

netType: tcp

addr: 192.168.112.64:6379

- pcfcfg.yaml

redisDb:

netType: tcp

addr: 192.168.112.64:6379

- Finally, create the YAML file for the Kubernetes deployment

vim ~/cmcc_5gc.yaml

---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cmii-core
  namespace: cmii
spec:
  #hostNetwork: true
  replicas: 1
  selector:
    matchLabels:
      app: core
  template:
    metadata:
      annotations:
        deployment.kubernetes.io/revision: '1'
      labels:
        app: core
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
              - key: node-role.kubernetes.io/agent
                operator: DoesNotExist
      containers:
      - name: amf
        securityContext:
          privileged: true
          capabilities:
            add: ["NET_ADMIN"]
        image: amf:2.2311.7
        command: ["/bin/sh", "-c"]
        args: ["amf -c /home/amfcfg.yaml"]
        ports:
        - containerPort: 38412
          hostPort: 38412
          protocol: SCTP
        volumeMounts:
        - mountPath: /home
          name: nf
      - name: smf
        securityContext:
          privileged: true
          capabilities:
            add: ["NET_ADMIN"]
        image: smf:2.2311.7
        command: ["/bin/sh", "-c"]
        args: ["mkdir /usr/local/etc/; mkdir /usr/local/etc/smf/; cp /home/smf_manage.yaml /usr/local/etc/smf; cp /home/smf_policy.yaml /usr/local/etc/smf; smf -c /home/smf_conf.yaml"]
        volumeMounts:
        - mountPath: /home
          name: nf
      - name: upf
        securityContext:
          privileged: true
          capabilities:
            add: ["NET_ADMIN"]
        image: upf:v2
        command: ["/bin/sh", "-c"]
        args: ["/home/getIp.sh & upf -c /home/upf_conf.yaml "]
        ports:
        - containerPort: 2152
          hostPort: 2152
          protocol: UDP
        volumeMounts:
        - mountPath: /home
          name: nf
      - name: ausf
        securityContext:
          privileged: true
          capabilities:
            add: ["NET_ADMIN"]
        image: ausf:2.2311.7
        command: ["/bin/sh", "-c"]
        args: ["ausf --ausfcfg /home/ausfcfg.yaml"]
        volumeMounts:
        - mountPath: /home
          name: nf
      - name: nef
        securityContext:
          privileged: true
          capabilities:
            add: ["NET_ADMIN"]
        image: nef:2.2311.7
        command: ["/bin/sh", "-c"]
        args: ["cp /home/nef_conf.yaml /usr/local/etc/nef/ ; GOTRACEBACK=crash /usr/local/bin/nef"]
        volumeMounts:
        - mountPath: /home
          name: nf
      - name: udm
        securityContext:
          privileged: true
          capabilities:
            add: ["NET_ADMIN"]
        image: udm:2.2311.7
        command: ["/bin/sh", "-c"]
        args: ["cp /home/udmcfg.yaml /usr/local/etc/udm/ ; GOTRACEBACK=crash /usr/local/bin/udm"]
        volumeMounts:
        - mountPath: /home
          name: nf
      - name: pcf
        securityContext:
          privileged: true
          capabilities:
            add: ["NET_ADMIN"]
        image: pcf:2.2311.7
        command: ["/bin/sh", "-c"]
        args: ["cp /home/pcfcfg.yaml /usr/local/etc/pcf/ ; GOTRACEBACK=crash /usr/local/bin/pcf"]
        volumeMounts:
        - mountPath: /home
          name: nf
      volumes:
      - name: nf
        nfs:
          server: 192.168.112.64
          path: /nfs
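The manifest places the Deployment in the `cmii` namespace, but none of the steps in this guide create that namespace, and `kubectl apply` rejects a namespaced object whose namespace is missing. A minimal sketch that creates it only when absent; the helper name `ensure_namespace` and the overridable `KUBECTL` variable are assumptions added for illustration.

```shell
#!/bin/bash
# Create a namespace only if it does not exist yet.
# KUBECTL can be overridden (for example with a wrapper); defaults to kubectl.
ensure_namespace() {
    local ns="$1"
    local kubectl_cmd="${KUBECTL:-kubectl}"
    "$kubectl_cmd" get namespace "$ns" >/dev/null 2>&1 \
        || "$kubectl_cmd" create namespace "$ns"
}
```

On the master, `ensure_namespace cmii` would be run once before applying the manifest.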

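- Deploy the core network

kubectl apply -f ~/cmcc_5gc.yaml

- Check the deployment from the Master node:

kubectl get pods -o wide --all-namespaces

- On the corresponding worker node, check the Docker containers:

docker ps

References:

Ubuntu: setting up an NFS server

KubeEdge environment setup (with flannel network plugin support)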

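5. K8s core network service monitoring script, run in the background (Master node)

vim sysMonitor.sh

Features:

1) Monitors the state of the core network Pod; when it is not Running, creates a new core network Pod

2) Reads the IP address of the created Pod and updates the amf and upf configuration files (amfcfg.yaml; upf_conf.yaml)

3) Sets NAT rules on the master node so that the Master node acts as the single entry point for the gNB base station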

#!/bin/bash

isConfIp=0

isIptables=0

while true

do

cmii=$(kubectl get pods -o wide -n cmii | grep 'cmii-core')

#echo $cmii

length=$(echo $cmii | wc -L)

flag=0

isSrv=0

ip=""

ip1=""

ip2=""

ipId=0

isRunning=0

node=""

if [[ $length -gt 130 ]]

then

echo ""

echo -E "More than one cmii-core service"

srv01_stat=$(echo $cmii | awk '{print $3}')

srv01_name=$(echo $cmii | awk '{print $1}')

ip1=$(echo $cmii | awk '{print $6}')

srv02_stat=$(echo $cmii | awk '{print $12}')

srv02_name=$(echo $cmii | awk '{print $10}')

ip2=$(echo $cmii | awk '{print $15}')

flag=1

else

if [[ $length -gt 0 ]]

then

echo ""

echo -E "One cmii-core service available"

srv_name=$(echo $cmii | awk '{print $1}')

srv_stat=$(echo $cmii | awk '{print $3}')

ip=$(echo $cmii | awk '{print $6}')

else

echo "No available cmii-core, trying to create a new one ..."

#cp /home/cmii/smf_conf.yaml /nfs/

kubectl apply -f /home/cmii/cmcc_5gc.yaml

continue

fi

fi

if [[ $flag -eq 0 ]]

then

if [[ $srv_stat =~ "ContainerCreating" ]]

then

echo "Creating a new cmii-core service ... (Please Wait...)"

isSrv=1

elif [[ $srv_stat =~ "Running" ]]

then

echo $srv_name "("$ip")" "is" $srv_stat

isSrv=1

ipId=0

isRunning=1

isConfIp=1

node=$(echo $cmii | awk '{print $9}')

elif [[ $srv_stat =~ "CrashLoopBackOff" || $srv_stat =~ "Error" || $srv_stat =~ "Terminating" || $srv_stat =~ "NotReady" ]]

then

isConfIp=0

if [[ $srv_stat =~ "CrashLoopBackOff" || $srv_stat =~ "Error" || $srv_stat =~ "NotReady" ]]

then

isSrv=1

ip=$(echo $cmii | awk '{print $8}')

ipId=0

fi

echo $srv_name "is abnormal"

#kubectl apply -f /home/cmii/cmcc_5gc.yaml

fi

else

echo $srv01_name "is" $srv01_stat

echo $srv02_name "is" $srv02_stat

if [[ $srv01_stat =~ "ContainerCreating" ]]

then

echo "Creating a new cmii-core service ... (Please Wait...)"

isSrv=1

elif [[ $srv01_stat =~ "Running" ]]

then

echo $srv01_name "is" $srv01_stat

isSrv=1

ipId=1

isRunning=1

isConfIp=1

node=$(echo $cmii | awk '{print $9}')

elif [[ $srv01_stat =~ "CrashLoopBackOff" || $srv01_stat =~ "Error" || $srv01_stat =~ "Terminating" || $srv01_stat =~ "NotReady" ]]

then

isConfIp=0

if [[ $srv01_stat =~ "CrashLoopBackOff" || $srv01_stat =~ "Error" || $srv01_stat =~ "NotReady" ]]

then

isSrv=1

ip1=$(echo $cmii | awk '{print $8}')

ipId=1

fi

echo $srv01_name "is abnormal"

#if [[ $isSrv -eq 0 ]]

#then

#echo $srv01_name "is abnormal, Please create a new one ..."

#kubectl apply -f /home/cmii/cmcc_5gc.yaml

#fi

fi

if [[ $srv02_stat =~ "ContainerCreating" ]]

then

echo "Creating a new cmii-core service ... (Please Wait...)"

isSrv=1

elif [[ $srv02_stat =~ "Running" ]]

then

echo $srv02_name "is" $srv02_stat

isSrv=1

ipId=2

isRunning=1

isConfIp=1

node=$(echo $cmii | awk '{print $9}')

elif [[ $srv02_stat =~ "CrashLoopBackOff" || $srv02_stat =~ "Error" || $srv02_stat =~ "Terminating" || $srv02_stat =~ "NotReady" ]]

then

isConfIp=0

if [[ $srv02_stat =~ "CrashLoopBackOff" || $srv02_stat =~ "Error" || $srv02_stat =~ "NotReady" ]]

then

isSrv=1

ip2=$(echo $cmii | awk '{print $8}')

ipId=2

fi

echo $srv02_name "is abnormal"

#if [[ $isSrv -eq 0 ]]

#then

# echo $srv02_name "is abnormal, Please create a new one ..."

# kubectl apply -f /home/cmii/cmcc_5gc.yaml

#fi

fi

fi

if [[ $isSrv -eq 0 ]]

then

echo "No available cmii-core, trying to create a new one ..."

#cp /home/cmii/smf_conf.yaml /nfs/

kubectl apply -f /home/cmii/cmcc_5gc.yaml

fi

if [[ $isConfIp -eq 0 ]]

then

ipF=""

#isConfIp=1

if [[ $ipId -eq 0 ]]

then

ipF=$ip

fi

if [[ $ipId -eq 1 ]]

then

ipF=$ip1

fi

if [[ $ipId -eq 2 ]]

then

ipF=$ip2

fi

echo "change ip to"

echo $ipF

cp /home/cmii/amfcfg.yaml /home/cmii/amfcfg-tmp.yaml

#cp /home/cmii/smf_conf.yaml /home/cmii/smf_conf-tmp.yaml

cp /home/cmii/upf_conf.yaml /home/cmii/upf_conf-tmp.yaml

sed -i "s/@AMFIP@/$ipF/g" /home/cmii/amfcfg-tmp.yaml

#sed -i "s/@AMFIP@/$ipF/g" /home/cmii/smf_conf-tmp.yaml

sed -i "s/@N3IP@/$ipF/g" /home/cmii/upf_conf-tmp.yaml

cp /home/cmii/amfcfg-tmp.yaml /nfs/amfcfg.yaml

#cp /home/cmii/smf_conf-tmp.yaml /nfs/smf_conf.yaml

cp /home/cmii/upf_conf-tmp.yaml /nfs/upf_conf.yaml

fi

if [[ -n $node ]]

then

#if [[ $isIptables -eq 1 ]]

#then

# continue

#fi

echo $node

iptablesRet=$(iptables -L -t nat --line-number | grep sctp)

if [[ $iptablesRet == "" ]]

then

if [[ $node == "kubeedge-edge01" ]]

then

#iptables -D OUTPUT 5 -t nat

iptables -t nat -A OUTPUT -d 192.168.112.64 -p sctp -j DNAT --to-destination 192.168.112.65

fi

if [[ $node == "kubeedge-edge02" ]]

then

#iptables -D OUTPUT 5 -t nat

iptables -t nat -A OUTPUT -d 192.168.112.64 -p sctp -j DNAT --to-destination 192.168.112.168

fi

else

ipp=$(echo $iptablesRet | awk '{print $7}')

pa="to:"

ippp=${ipp#*${pa}}

if [[ $node == "kubeedge-edge01" ]]

then

if [[ "192.168.112.65" == $ippp ]]

then

echo "iptables is ready ..."

else

iptables -D OUTPUT 5 -t nat

iptables -t nat -A OUTPUT -d 192.168.112.64 -p sctp -j DNAT --to-destination 192.168.112.65

fi

fi

if [[ $node == "kubeedge-edge02" ]]

then

if [[ "192.168.112.168" == $ippp ]]

then

echo "iptables is ready ..."

else

iptables -D OUTPUT 5 -t nat

iptables -t nat -A OUTPUT -d 192.168.112.64 -p sctp -j DNAT --to-destination 192.168.112.168

fi

fi

fi

isIptables=1

else

echo "?"

fi

sleep 2

done
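The loop above infers how many Pods exist from the character length of the `kubectl` output (`wc -L` greater than 130) and reads fields by fixed position, which shifts when the RESTARTS column contains text such as `3 (5m ago)`. A sketch of a sturdier approach, assuming the same `cmii` namespace: ask kubectl for explicit columns and process one Pod per line. `-o custom-columns` and `--no-headers` are standard kubectl options; the helper name `first_running_pod` is hypothetical. Note that `.status.phase` reports values such as Running or Pending, not container-level reasons like CrashLoopBackOff.

```shell
#!/bin/bash
# Read lines of "NAME PHASE IP NODE" on stdin and print the IP and node
# of the first Pod whose phase is Running; return 1 if there is none.
first_running_pod() {
    awk '$2 == "Running" { print $3, $4; found = 1; exit }
         END { exit !found }'
}
# Example use on the master (assumes kubectl is configured):
#   kubectl get pods -n cmii --no-headers \
#     -o custom-columns=NAME:.metadata.name,PHASE:.status.phase,IP:.status.podIP,NODE:.spec.nodeName \
#     | first_running_pod
```

Because each Pod is handled on its own line, the same function works whether one, two or more cmii-core Pods are listed.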

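- Run the script in the background (the nohup command follows the two configuration templates below); as listed, the loop sleeps 2 seconds between checks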

- /home/cmii/amfcfg.yaml (configuration template; sysMonitor.sh reads the Pod IP and fills it into this file)

info:

version: 1.0.0

description: AMF initial local configuration

configuration:

instance: 0

amfName: AMF

tnlAssociationList:

- ngapIpList:

- @AMFIP@

ngapSctpPort: 38412

weightFactor: 255

sctpOutStreams: 2

dscpEnabled: false

dscpValue: 48

relativeCapacity: 255

backupAmfEnabled: false

backupAmfName: AMF-BAK

managementAddr: 127.0.1.10

sbi:

scheme: http

registerIPv4: 127.0.1.10

registerIPv6:

bindingIPv4: 127.0.1.10

bindingIPv6:

port: 8080

tls:

minVersion: 1.2

maxVersion: 1.3

timeoutValue: 2

shortConnection: false

serviceNameList:

- namf-comm

- namf-evts

- namf-mt

- namf-loc

servedGuamiList:

- plmnId:

mcc: 460

mnc: 00

regionId: 1

setId: 1

pointer: 1

supportTaiList:

- plmnId:

mcc: 460

mnc: 00

tac:

- 4388

plmnSupportList:

- plmnId:

mcc: 460

mnc: 00

snssaiList:

- sst: 1

sd: 000001

equivalentPlmn:

enable: false

plmnList:

- mcc: 460

mnc: 01

overloadProtection:

enable: false

totalUeNum: 10000

cpuPercentage: 80

n1RateLimit:

enable: false

recvRateLimit: 20

minBackoffTimerVal: 900

maxBackoffTimerVal: 1800

n2RecvRateLimit:

enable: false

recvRateLimit: 20

overloadEnable: false

overloadAction: 1

overloadCheckTime: 10

n2SendRateLimit:

enable: false

sendRateLimit: 20

n8RateLimit:

enable: false

sendRateLimit: 20

abnormalSignalingControl:

enable: false

period: 1h

threshold: 10

authReject2NasCause: 7

sliceNotFound2NasCause: 7

no5gsSubscription2NasCause: 7

ratNotAllowed2NasCause: 27

noAvailableSlice2NasCause: 62

noAvailableAusf2NasCause: 15

checkSliceInTaEnabled: false

uePolicyEnabled: false

smsOverNasEnabled: false

statusReportEnabled: false

statusReportImsiPre: 4600001

dnnCorrectionEnabled: false

gutiReallocationInPRUEnabled: false

ueRadioCapabilityMatchEnabled: false

triggerInitCtxSetupForAllNASProc: true

supportFollowOnRequestIndication: false

supportAllAllowedNssai: false

abnormalSignalingControlEnabled: false

implicitUnsubscribeEnabled: true

supportRRCInactiveReport: false

optimizeSignalingProcedure: false

reAuthInServiceRequestProc: false

skipGetSubscribedNssai: false

supportDnnList:

- cmnet

sendDnnOiToSmf: false

nrfEnabled: false

pcfDiscoveryParameter: SUPI

nrfUri: http://127.0.1.60:8080

ausfUri: http://127.0.1.40:8080

udmUri: http://127.0.1.30:8080

smfUri: http://127.0.1.20:8080

pcfUri: http://127.0.1.50:8080

lmfUri: http://127.0.1.80:8080

nefUri: http://127.0.1.70:8080

httpManageCfg:

ipType: ipv4

ipv4: @AMFIP@

ipv6:

port: 3030

scheme: http

oamConfig:

enable: false

ipType: ipv4

ipv4: 127.0.1.10

ipv6:

port: 3030

scheme: http

neConfig:

neId: 001

rmUid: 4400HX1AMF001

neName: AMF_001

dn: TN

vendorName:

province: GD

pvFlag: PNF

udsfEnabled: false

redisDb:

enable: false

netType: tcp

addr: 127.0.1.90:6379

security:

integrityOrder:

- algorithm: NIA2

priority: 1

cipheringOrder:

- algorithm: NEA0

priority: 1

debugLevel: trace

networkNameList:

- plmnId:

mcc: 460

mnc: 00

enable: false

codingScheme: 0

full: agt5GC

short: agt

timeZone: +08:00

nssaiInclusionModeList:

- plmnId:

mcc: 460

mnc: 00

mode: A

networkFeatureSupport5GS:

enable: true

length: 1

imsVoPS: 1

emc: 0

emf: 0

iwkN26: 0

mpsi: 0

emcN3: 0

mcsi: 0

drxVaule: 0

t3502Value: 720

configurationPriority: true

t3512Value: 3600

mobileReachabilityTimerValue: 3840

implicitDeregistrationTimerValue: 3840

non3gppDeregistrationTimerValue: 3240

n2HoldingTimerValue: 3

t3513:

enable: true

expireTime: 2s

maxRetryTimes: 2

t3522:

enable: true

expireTime: 2s

maxRetryTimes: 2

t3550:

enable: true

expireTime: 2s

maxRetryTimes: 2

t3555:

enable: true

expireTime: 2s

maxRetryTimes: 2

t3560:

enable: true

expireTime: 2s

maxRetryTimes: 2

t3565:

enable: true

expireTime: 2s

maxRetryTimes: 2

t3570:

enable: true

expireTime: 2s

maxRetryTimes: 2

purgeTimer:

enable: false

expireTime: 24h

- /home/cmii/upf_conf.yaml (configuration template; sysMonitor.sh reads the pod IP and fills it into this file)

logger:

directory: /var/log

level: debug

size: 500

number: 9

cpu:

main: 1

#workers: 2, 3

upf:

pfcp:

- addr: 127.0.1.100

gtpu:

- addr: @N3IP@

subnet:

- addr: 10.2.1.0/24

#- addr: 2001:db8:cafe::1/48

created: 0

telnet:

- addr: 127.0.0.1

port: 4100

qer_enable: 0

local_switch_disable: 0

flow_reflect_enable: 0

#n9:

#- addr: 0.0.0.0

#n19:

#- addr: 0.0.0.0

nohup /home/cmii/sysMonitor.sh > /dev/null 2>&1 &
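The templates above carry placeholders such as @AMFIP@ and @N3IP@, which sysMonitor.sh later replaces with the pod IP. A minimal sketch of that substitution step is shown here. The function name render_template and the KEY=VALUE calling convention are illustrative assumptions, not part of the original scripts. The output is written next to the destination first and then renamed, so a container reading the NFS share is less likely to see a half-written file.

```shell
#!/bin/bash
# render_template SRC DST KEY=VALUE [KEY=VALUE ...]
# Hypothetical helper: copies SRC to DST, replacing every @KEY@ with VALUE.
# Assumes values contain none of the characters '|', '&' or '\'.
render_template() {
    local src=$1 dst=$2 tmp script="" pair key value
    shift 2
    [ "$#" -gt 0 ] || { echo "usage: render_template SRC DST KEY=VALUE..." >&2; return 1; }
    for pair in "$@"; do
        key=${pair%%=*}       # text before the first '='
        value=${pair#*=}      # text after the first '='
        script="${script}s|@${key}@|${value}|g;"
    done
    tmp="${dst}.tmp.$$"       # temporary file on the same filesystem as DST
    sed "$script" "$src" > "$tmp" && mv "$tmp" "$dst"
}

# Example with the paths used in this article:
# render_template /home/cmii/upf_conf.yaml /nfs/upf_conf.yaml N3IP=10.244.6.68
# render_template /home/cmii/amfcfg.yaml   /nfs/amfcfg.yaml   AMFIP=10.244.6.68
```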

6. Deployment verification with UERANSIM

- UERANSIM source repository (see the link for build and installation instructions)

git clone -b docker_support https://github.com/orion-belt/UERANSIM.git

- UERANSIM gNB configuration file (config/oaicn5g-gnb.yaml)

mcc: '460' # Mobile Country Code value

mnc: '00' # Mobile Network Code value (2 or 3 digits)

nci: '0x000000010' # NR Cell Identity (36-bit)

idLength: 32 # NR gNB ID length in bits [22...32]

tac: 4388 # Tracking Area Code

linkIp: 127.0.0.1 # gNB's local IP address for Radio Link Simulation (Usually same with local IP)

ngapIp: 192.168.112.64 # gNB's local IP address for N2 Interface (Usually same with local IP)

gtpIp: 192.168.112.64 # gNB's local IP address for N3 Interface (Usually same with local IP)

# List of AMF address information

amfConfigs:

- address: 192.168.112.64

port: 38412

# List of supported S-NSSAIs by this gNB

slices:

- sst: 222

sd: 123

# Indicates whether or not SCTP stream number errors should be ignored.

ignoreStreamIds: true

- UERANSIM UE configuration file (config/oaicn5g-ue.yaml)

# IMSI number of the UE. IMSI = [MCC|MNC|MSIN] (In total 15 digits)

supi: 'imsi-460002072701114'

# Mobile Country Code value of HPLMN

mcc: '460'

# Mobile Network Code value of HPLMN (2 or 3 digits)

mnc: '00'

# Permanent subscription key

key: '1DBD7E06EDAE124327FEED313672A125'

# Operator code (OP or OPC) of the UE

op: '27E5240241BA683F35AC172A863FBC76'

# This value specifies the OP type and it can be either 'OP' or 'OPC'

opType: 'OPC'

# Authentication Management Field (AMF) value

amf: '8000'

# IMEI number of the device. It is used if no SUPI is provided

imei: '356938035643803'

# IMEISV number of the device. It is used if no SUPI and IMEI is provided

imeiSv: '0035609204079514'

# List of gNB IP addresses for Radio Link Simulation

gnbSearchList:

- 127.0.0.1

# UAC Access Identities Configuration

uacAic:

mps: false

mcs: false

# UAC Access Control Class

uacAcc:

normalClass: 0

class11: false

class12: false

class13: false

class14: false

class15: false

# Initial PDU sessions to be established

sessions:

- type: 'IPv4'

apn: 'cmnet'

slice:

sst: 1

sd: 1

# Configured NSSAI for this UE by HPLMN

configured-nssai:

- sst: 1

sd: 1

# Default Configured NSSAI for this UE

default-nssai:

- sst: 1

sd: 1

# Supported integrity algorithms by this UE

integrity:

IA1: true

IA2: true

IA3: true

# Supported encryption algorithms by this UE

ciphering:

EA1: true

EA2: true

EA3: true

# Integrity protection maximum data rate for user plane

integrityMaxRate:

uplink: 'full'

downlink: 'full'

- Test results
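For reference, a verification run with the two configuration files above might look like the sketch below. It assumes UERANSIM has been built with its standard build so that the binaries are under build/, and that the UE tunnel interface gets the usual name uesimtun0. The function name ueransim_plan, the clone location and the ping target are placeholders chosen for illustration. The function only prints the commands, so each one can be run in its own terminal.

```shell
#!/bin/bash
# ueransim_plan DIR PING_TARGET
# Hypothetical helper: prints, in order, the commands for one verification run.
ueransim_plan() {
    local dir=$1 target=$2
    # 1. Start the simulated gNB (connects to the AMF over N2/SCTP port 38412).
    echo "$dir/build/nr-gnb -c $dir/config/oaicn5g-gnb.yaml"
    # 2. Start the simulated UE (needs root to create the TUN interface).
    echo "sudo $dir/build/nr-ue -c $dir/config/oaicn5g-ue.yaml"
    # 3. Send user-plane traffic through the PDU session.
    echo "ping -c 4 -I uesimtun0 $target"
}

# Example (the clone path and the target address are assumptions):
# ueransim_plan "$HOME/UERANSIM" 10.2.1.1
```

If registration and PDU session establishment succeed, the UE log should report that the uesimtun0 interface is up, and the ping should get replies through the UPF.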

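7. Testing with a commercial base station and terminal

- Base station IP: 192.168.112.251

- UPF IP: 10.244.6.68

- IP of the host running the UPF: 192.168.112.65

- Option 1: the base station and the host communicate through the gateway of a layer-3 switch. Static routes must then be configured on both the base station and the switch:

On the base station:

On the switch:

Note: some commercial base stations encapsulate GTP-U packets with a header checksum of 0, and such packets may be dropped at the switch gateway. In that case, consider Option 2.

- Option 2: the base station and the host communicate directly over layer-2 switching, without passing through the gateway. In this case only the static route above needs to be added on the base station.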
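The exact route commands depend on the vendor CLI of the base station and the switch, and they are not reproduced in this article. As a rough sketch only, the same idea in Linux iproute2 syntax is a host route to the UPF pod IP. The helper name static_route_cmd is hypothetical. Using the UPF host (192.168.112.65) as the next hop is an assumption that fits Option 2 and the switch side of Option 1. On the base station in Option 1 the next hop would be the switch gateway instead.

```shell
#!/bin/bash
# static_route_cmd DEST NEXTHOP
# Hypothetical helper: prints a host route towards DEST via NEXTHOP
# in Linux iproute2 syntax (vendor equipment will use its own CLI).
static_route_cmd() {
    local dest=$1 nexthop=$2
    echo "ip route add ${dest}/32 via ${nexthop}"
}

# Route to the UPF pod IP via the host that runs the UPF:
# static_route_cmd 10.244.6.68 192.168.112.65
```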

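8. Deploying and testing IMS in the k8s cloud environment

- Load the IMS image files on every node (run ims_image_load.sh directly):

- Edit the k8s deployment file cmcc_5gc.yaml so that it reads as follows: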

vim cmcc_5gc.yaml

---

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

kind: Deployment

metadata:

name: cmii-core

namespace: cmii

spec:

#hostNetwork: true

replicas: 1

selector:

matchLabels:

app: core

template:

metadata:

annotations:

deployment.kubernetes.io/revision: '1'

labels:

app: core

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

- key: node-role.kubernetes.io/agent

operator: DoesNotExist

containers:

- name: rtproxy

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: rtproxy:1.2.9

command: ["/bin/sh", "-c"]

args: ["rtproxy --interface=127.0.1.120 --listen-ng=12221 --listen-cli=9900 --timeout=60 --silent-timeout=3600 --port-min=30000 --port-max=50000 --pidfile=/var/run/ngcp-rtpengine-daemon.pid --no-redis-required --foreground"]

volumeMounts:

- mountPath: /home

name: nf

- name: amf

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: amf:2.2311.7

command: ["/bin/sh", "-c"]

args: ["amf -c /home/amfcfg.yaml"]

ports:

- containerPort: 38412

hostPort: 38412

protocol: SCTP

volumeMounts:

- mountPath: /home

name: nf

- name: smf

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: smf:2.2311.7

command: ["/bin/sh", "-c"]

args: ["smf -c /home/smf_conf.yaml"]

#args: ["mkdir usr/local/etc/; mkdir usr/local/etc/smf/; cp /home/smf_manage.yaml /usr/local/etc/smf; cp /home/smf_policy.yaml /usr/local/etc/smf; smf -c /home/smf_conf.yaml"]

volumeMounts:

- mountPath: /home

name: nf

- name: upf

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: upf:v2

command: ["/bin/sh", "-c"]

args: ["/home/getIp.sh & upf -c /home/upf_conf.yaml "]

ports:

- containerPort: 2152

hostPort: 2152

protocol: UDP

volumeMounts:

- mountPath: /home

name: nf

- name: ausf

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: ausf:2.2311.7

command: ["/bin/sh", "-c"]

args: ["ausf --ausfcfg /home/ausfcfg.yaml"]

volumeMounts:

- mountPath: /home

name: nf

- name: nef

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: nef:2.2311.7

command: ["/bin/sh", "-c"]

args: ["cp /home/nef_conf.yaml /usr/local/etc/nef/ ; GOTRACEBACK=crash /usr/local/bin/nef"]

volumeMounts:

- mountPath: /home

name: nf

- name: udm

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: udm:2.2311.7

command: ["/bin/sh", "-c"]

args: ["cp /home/udmcfg.yaml /usr/local/etc/udm/ ; GOTRACEBACK=crash /usr/local/bin/udm"]

volumeMounts:

- mountPath: /home

name: nf

- name: pcf

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: pcf:2.2311.7

command: ["/bin/sh", "-c"]

args: ["cp /home/pcfcfg.yaml /usr/local/etc/pcf/ ; GOTRACEBACK=crash /usr/local/bin/pcf"]

volumeMounts:

- mountPath: /home

name: nf

- name: pcscf

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: ims:2.2311.7

command: ["/bin/sh", "-c"]

args: ["cp /home/hosts /etc/hosts; cp /home/ims/protocols /etc/protocols ; cp -r /home/ims /usr/local/etc/; ims -f /usr/local/etc/ims/pcscf/main.cfg -DD"]

volumeMounts:

- mountPath: /home

name: nf

- name: icscf

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: ims:2.2311.7

command: ["/bin/sh", "-c"]

args: ["cp /home/hosts /etc/hosts; cp /home/ims/protocols /etc/protocols ; cp -r /home/ims /usr/local/etc/; ims -f /usr/local/etc/ims/icscf/main.cfg -DD"]

volumeMounts:

- mountPath: /home

name: nf

- name: scscf

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: ims:2.2311.7

command: ["/bin/sh", "-c"]

args: ["cp /home/hosts /etc/hosts; cp /home/ims/protocols /etc/protocols ; cp -r /home/ims /usr/local/etc/; ims -f /usr/local/etc/ims/scscf/main.cfg -DD"]

volumeMounts:

- mountPath: /home

name: nf

- name: bgcf

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: ims:2.2311.7

command: ["/bin/sh", "-c"]

args: ["cp /home/hosts /etc/hosts; cp /home/ims/protocols /etc/protocols ; cp -r /home/ims /usr/local/etc/; ims -f /usr/local/etc/ims/bgcf/main.cfg -DD"]

volumeMounts:

- mountPath: /home

name: nf

- name: mmtel

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: ims:2.2311.7

command: ["/bin/sh", "-c"]

args: ["cp /home/hosts /etc/hosts; cp /home/ims/protocols /etc/protocols ; cp -r /home/ims /usr/local/etc/; ims -f /usr/local/etc/ims/mmtel/main.cfg -DD"]

volumeMounts:

- mountPath: /home

name: nf

- name: mrf

securityContext:

privileged: true

capabilities:

add: ["NET_ADMIN"]

image: mrf:2.2311.7

command: ["/bin/sh", "-c"]

args: ["cp -r /home/ims /usr/local/etc/; mrf /usr/local/etc/ims/mrf"]

volumeMounts:

- mountPath: /home

name: nf

volumes:

- name: nf

nfs:

server: 192.168.112.64

path: /nfs

- Modify sysMonitor.sh so that it also updates the IMS configuration

vim sysMonitor.sh

#!/bin/bash

isConfIp=0

isIptables=0

while true

do

cmii=$(kubectl get pods -o wide -n cmii | grep 'cmii-core')

#echo $cmii

length=$(echo $cmii | wc -L)

flag=0

isSrv=0

ip=""

ip1=""

ip2=""

ipId=0

isRunning=0

node=""

if [[ $length -gt 130 ]]

then

echo ""

echo -E "More than one cmii-core service"

srv01_stat=$(echo $cmii | awk '{print $3}')

srv01_name=$(echo $cmii | awk '{print $1}')

ip1=$(echo $cmii | awk '{print $6}')

srv02_stat=$(echo $cmii | awk '{print $12}')

srv02_name=$(echo $cmii | awk '{print $10}')

ip2=$(echo $cmii | awk '{print $15}')

flag=1

else

if [[ $length -gt 0 ]]

then

echo ""

echo -E "One cmii-core service available"

srv_name=$(echo $cmii | awk '{print $1}')

srv_stat=$(echo $cmii | awk '{print $3}')

ip=$(echo $cmii | awk '{print $6}')

else

echo "No available cmii-core, trying to create a new one ..."

#cp /home/cmii/smf_conf.yaml /nfs/

kubectl apply -f /home/cmii/cmcc_5gc.yaml

continue

fi

fi

if [[ $flag -eq 0 ]]

then

if [[ $srv_stat =~ "ContainerCreating" ]]

then

echo "Creating a new cmii-core service ... (Please Wait...)"

isSrv=1

elif [[ $srv_stat =~ "Running" ]]

then

echo $srv_name "("$ip")" "is" $srv_stat

isSrv=1

ipId=0

isRunning=1

isConfIp=1

node=$(echo $cmii | awk '{print $9}')

elif [[ $srv_stat =~ "CrashLoopBackOff" || $srv_stat =~ "Error" || $srv_stat =~ "Terminating" || $srv_stat =~ "NotReady" ]]

then

isConfIp=0

if [[ $srv_stat =~ "CrashLoopBackOff" || $srv_stat =~ "Error" || $srv_stat =~ "NotReady" ]]

then

isSrv=1

ip=$(echo $cmii | awk '{print $8}')

ipId=0

fi

echo $srv_name "is abnormal"

#kubectl apply -f /home/cmii/cmcc_5gc.yaml

fi

else

echo $srv01_name "is" $srv01_stat

echo $srv02_name "is" $srv02_stat

if [[ $srv01_stat =~ "ContainerCreating" ]]

then

echo "Creating a new cmii-core service ... (Please Wait...)"

isSrv=1

elif [[ $srv01_stat =~ "Running" ]]

then

echo $srv01_name "is" $srv01_stat

isSrv=1

ipId=1

isRunning=1

isConfIp=1

node=$(echo $cmii | awk '{print $9}')

elif [[ $srv01_stat =~ "CrashLoopBackOff" || $srv01_stat =~ "Error" || $srv01_stat =~ "Terminating" || $srv01_stat =~ "NotReady" ]]

then

isConfIp=0

if [[ $srv01_stat =~ "CrashLoopBackOff" || $srv01_stat =~ "Error" || $srv01_stat =~ "NotReady" ]]

then

isSrv=1

ip1=$(echo $cmii | awk '{print $8}')

ipId=1

fi

echo $srv01_name "is abnormal"

#if [[ $isSrv -eq 0 ]]

#then

#echo $srv01_name "is abnormal, Please create a new one ..."

#kubectl apply -f /home/cmii/cmcc_5gc.yaml

#fi

fi

if [[ $srv02_stat =~ "ContainerCreating" ]]

then

echo "Creating a new cmii-core service ... (Please Wait...)"

isSrv=1

elif [[ $srv02_stat =~ "Running" ]]

then

echo $srv02_name "is" $srv02_stat

isSrv=1

ipId=2

isRunning=1

isConfIp=1

node=$(echo $cmii | awk '{print $9}')

elif [[ $srv02_stat =~ "CrashLoopBackOff" || $srv02_stat =~ "Error" || $srv02_stat =~ "Terminating" || $srv02_stat =~ "NotReady" ]]

then

isConfIp=0

if [[ $srv02_stat =~ "CrashLoopBackOff" || $srv02_stat =~ "Error" || $srv02_stat =~ "NotReady" ]]

then

isSrv=1

ip2=$(echo $cmii | awk '{print $8}')

ipId=2

fi

echo $srv02_name "is abnormal"

#if [[ $isSrv -eq 0 ]]

#then

# echo $srv02_name "is abnormal, Please create a new one ..."

# kubectl apply -f /home/cmii/cmcc_5gc.yaml

#fi

fi

fi

if [[ $isSrv -eq 0 ]]

then

echo "No available cmii-core, trying to create a new one ..."

#cp /home/cmii/smf_conf.yaml /nfs/

kubectl apply -f /home/cmii/cmcc_5gc.yaml

fi

if [[ $isConfIp -eq 0 ]]

then

ipF=""

#isConfIp=1

if [[ $ipId -eq 0 ]]

then

ipF=$ip

fi

if [[ $ipId -eq 1 ]]

then

ipF=$ip1

fi

if [[ $ipId -eq 2 ]]

then

ipF=$ip2

fi

echo "change ip to"

echo $ipF

cp /home/cmii/amfcfg.yaml /home/cmii/amfcfg-tmp.yaml

#cp /home/cmii/smf_conf.yaml /home/cmii/smf_conf-tmp.yaml

cp /home/cmii/upf_conf.yaml /home/cmii/upf_conf-tmp.yaml

sed -i "s/@AMFIP@/$ipF/g" /home/cmii/amfcfg-tmp.yaml

#sed -i "s/@AMFIP@/$ipF/g" /home/cmii/smf_conf-tmp.yaml

sed -i "s/@N3IP@/$ipF/g" /home/cmii/upf_conf-tmp.yaml

cp /home/cmii/amfcfg-tmp.yaml /nfs/amfcfg.yaml

#cp /home/cmii/smf_conf-tmp.yaml /nfs/smf_conf.yaml

cp /home/cmii/upf_conf-tmp.yaml /nfs/upf_conf.yaml

/nfs/ims/tools/modipplmn.sh $ipF 460 00

fi

if [[ -n $node ]]

then

#if [[ $isIptables -eq 1 ]]

#then

# continue

#fi

echo $node

iptablesRet=$(iptables -L -t nat --line-number | grep sctp)

if [[ $iptablesRet == "" ]]

then

if [[ $node == "kubeedge-edge01" ]]

then

#iptables -D OUTPUT 5 -t nat

iptables -t nat -A OUTPUT -d 192.168.112.64 -p sctp -j DNAT --to-destination 192.168.112.65

fi

if [[ $node == "kubeedge-edge02" ]]

then

#iptables -D OUTPUT 5 -t nat

iptables -t nat -A OUTPUT -d 192.168.112.64 -p sctp -j DNAT --to-destination 192.168.112.168

fi

else

ipp=$(echo $iptablesRet | awk '{print $7}')

pa="to:"

ippp=${ipp#*${pa}}

if [[ $node == "kubeedge-edge01" ]]

then

if [[ "192.168.112.65" == $ippp ]]

then

echo "iptables is ready ..."

else

iptables -D OUTPUT 5 -t nat

iptables -t nat -A OUTPUT -d 192.168.112.64 -p sctp -j DNAT --to-destination 192.168.112.65

fi

fi

if [[ $node == "kubeedge-edge02" ]]

then

if [[ "192.168.112.168" == $ippp ]]

then

echo "iptables is ready ..."

else

iptables -D OUTPUT 5 -t nat

iptables -t nat -A OUTPUT -d 192.168.112.64 -p sctp -j DNAT --to-destination 192.168.112.168

fi

fi

fi

isIptables=1

else

echo "?"

fi

sleep 2

done
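The script above detects pods by the width of the kubectl output (wc -L) and by fixed awk column numbers, which shifts whenever a column such as RESTARTS or AGE changes width or format. A sturdier way to obtain the same fields, sketched here under the assumption that the pods carry the label app=core as in cmcc_5gc.yaml, is to ask kubectl for exactly the fields needed through its jsonpath output. The function names are illustrative. Note that .status.phase is coarser than the STATUS column: a pod whose containers are in CrashLoopBackOff can still report the phase Running.

```shell
#!/bin/bash
# Print one line per cmii-core pod: name<TAB>phase<TAB>podIP<TAB>node
list_core_pods() {
    kubectl get pods -n cmii -l app=core \
        -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.phase}{"\t"}{.status.podIP}{"\t"}{.spec.nodeName}{"\n"}{end}'
}

# Read such a listing on stdin and print "podIP node" for the first pod
# that is Running and already has an IP. Returns non-zero if there is none.
first_running_pod() {
    awk -F '\t' '
        $2 == "Running" && $3 != "" { print $3, $4; found = 1; exit }
        END { exit !found }
    '
}

# Example:
# if info=$(list_core_pods | first_running_pod); then
#     ipF=${info%% *}; node=${info#* }
# fi
```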

- Copy the IMS configuration files to /nfs so that they are loaded by the containerized IMS functions in k8s:

cp -r /home/cmii/5gc/ims-r2.2311.7_u22_cloud_cy/conf/ims/ /nfs

- Modify the script shipped with IMS: /nfs/ims/tools/modipplmn.sh

#!/bin/bash

# ./modipplmn.sh 192.168.1.123 001 01

NEWIP=$1

MCC=$2

MNC=$3

MNCLEN=`expr length $MNC`

#echo $MNCLEN

MNCSTR=`sed -n '/IMS_DOMAIN/p' /nfs/ims/vars.cfg | awk '{print $3}'| awk -F '.' '{print $2}'`

MCCSTR=`sed -n '/IMS_DOMAIN/p' /nfs/ims/vars.cfg | awk '{print $3}'| awk -F '.' '{print $3}'`

#echo $MNCSTR $MCCSTR

if [ $MNCLEN = 2 ];then

DSTPLMN=mnc0$MNC.mcc$MCC

elif [ $MNCLEN = 3 ];then

DSTPLMN=mnc$MNC.mcc$MCC

else

echo "Usage: $0 ip_address mcc mnc"

exit 1

fi

#echo $DSTPLMN

OLDIP=`sed -n '/IMS_IP /p' /nfs/ims/vars.cfg | awk -F '"' '{print $2}'`

echo $OLDIP

##mcc=460

##mnc=00

sed -i "s/mcc=${MCCSTR:3}/mcc=$MCC/g" /nfs/ims/vars.cfg

sed -i "s/mnc=${MNCSTR:4}/mnc=$MNC/g" /nfs/ims/vars.cfg

sed -i "s/$OLDIP/$NEWIP/g" /nfs/ims/vars.cfg

sed -i "s/$MNCSTR.$MCCSTR/$DSTPLMN/g" /nfs/ims/vars.cfg

#sed -i "s/$OLDIP/$NEWIP/g" /etc/bind/agt-ims.dnszone

#sed -i "s/$OLDIP/$NEWIP/g" /etc/default/ngcp-rtpengine-daemon

#sed -i "s/$OLDIP/$NEWIP/g" /usr/local/etc/rtproxy/rtproxy.conf

sed -i "s/$OLDIP/$NEWIP/g" /nfs/ims/scscf/dispatcher.list

sed -i "s/$OLDIP/$NEWIP/g" /nfs/ims/mmtel/dispatcher.list

#sed -i "s/$OLDIP/$NEWIP/g" /nfs/ims/n5/conf/n5_profile

sed -i "s/$OLDIP/$NEWIP/g" /nfs/ims/mrf/mrf_param.conf

#sed -i "s/$OLDIP/$NEWIP/g" /etc/hosts

#sed -i "s/$MNCSTR.$MCCSTR/$DSTPLMN/g" /etc/bind/agt-ims.dnszone

#sed -i "s/$MNCSTR.$MCCSTR/$DSTPLMN/g" /etc/bind/named.conf

sed -i "s/$MNCSTR.$MCCSTR/$DSTPLMN/g" /nfs/ims/scscf/domain.list

sed -i "s/$MNCSTR.$MCCSTR/$DSTPLMN/g" /nfs/ims/pcscf/pcscf.xml

sed -i "s/$MNCSTR.$MCCSTR/$DSTPLMN/g" /nfs/ims/icscf/icscf.xml

sed -i "s/$MNCSTR.$MCCSTR/$DSTPLMN/g" /nfs/ims/scscf/scscf.xml

sed -i "s/$MNCSTR.$MCCSTR/$DSTPLMN/g" /nfs/ims/mrf/mrf_param.conf

#sed -i "s/$MNCSTR.$MCCSTR/$DSTPLMN/g" /nfs/ims/n5/conf/n5_profile

#sed -i "s/$MNCSTR.$MCCSTR/$DSTPLMN/g" /nfs/ims/n5/conf/fd_server.conf

#echo $MNCSTR

#echo $MCCSTR

#echo $DSTPLM

cp /home/cmii/hosts-tmp /nfs/hosts

#sed -i "s/$MNCSTR.$MCCSTR/$DSTPLMN/g" /nfs/hosts

sed -i "s/@NEWIP@/$NEWIP/g" /nfs/hosts

sed -i "s/$MNCSTR.$MCCSTR/$DSTPLMN/g" /nfs/iwf/iwf_conf.yaml

sed -i "s/$OLDIP/$NEWIP/g" /nfs/iwf/iwf_conf.yaml

echo "config successfully"

#systemd-resolve --flush-caches

#service bind9 restart

#service n5 restart
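The core of modipplmn.sh is building the PLMN part of the IMS domain, where a two-digit MNC is left-padded to three digits (460/00 becomes mnc000.mcc460, as in ims.mnc000.mcc460.3gppnetwork.org). That rule can be isolated in a small function, sketched below. The name plmn_domain is an illustrative choice and not part of the IMS tooling.

```shell
#!/bin/bash
# plmn_domain MCC MNC
# Prints the "mncXXX.mccYYY" label used in 3gppnetwork.org domain names.
# A 2-digit MNC is padded with a leading zero; anything else is rejected.
plmn_domain() {
    local mcc=$1 mnc=$2
    case $mnc in
        [0-9][0-9])      mnc="0$mnc" ;;
        [0-9][0-9][0-9]) ;;
        *) echo "mnc must be 2 or 3 digits" >&2; return 1 ;;
    esac
    echo "mnc${mnc}.mcc${mcc}"
}

# Example:
# echo "ims.$(plmn_domain 460 00).3gppnetwork.org"
```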

- Modify /etc/hosts inside the IMS containers so that the IMS components can reach each other by domain name

vim /home/cmii/hosts-tmp

127.0.0.1 localhost

127.0.1.1 kubeedge-master

127.0.1.30 hss.ims.mnc000.mcc460.3gppnetwork.org hss

@NEWIP@ ims.mnc000.mcc460.3gppnetwork.org ims

@NEWIP@ pcscf.ims.mnc000.mcc460.3gppnetwork.org pcscf

@NEWIP@ icscf.ims.mnc000.mcc460.3gppnetwork.org icscf

@NEWIP@ scscf.ims.mnc000.mcc460.3gppnetwork.org scscf

@NEWIP@ mmtel.ims.mnc000.mcc460.3gppnetwork.org mmtel

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

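- Test result: the phone shows the HD indicator

- Test result, packet capture: SIP protocol

9. Automated IMS configuration

- Network topology

- Configuring SIM card information in the core network (it only needs to be added once; it is presumably persisted to redis)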

1. docker exec -it [upf_container_id] /bin/sh

2. apt-get install telnet

3. telnet 127.0.1.30 4100

4. Log in with username admin and password admin

5. add authdat:imsi=460003000100051,ki=00112233445566778899aabbccddeeff,amf=8000,algo=0,opc=11111111111111111111111111111111

6. add udmuser:imsi=460003000100051,msisdn=12307550051,sm_data=1-000001&cmnet&ims,eps_flag=1,kdc_flag=0

7. Query an existing SIM card entry: dsp udmuser:imsi=46000xxxxx
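When several SIM cards have to be added, the two add commands from steps 5 and 6 can be generated rather than typed. The sketch below only reuses the command syntax shown in those steps. The function name subscriber_cmds is hypothetical, and the fixed values (amf=8000, algo=0, sm_data, eps_flag, kdc_flag) are copied from the example rather than taken from any product documentation.

```shell
#!/bin/bash
# subscriber_cmds IMSI MSISDN KI OPC
# Hypothetical helper: prints the two provisioning commands for one SIM card,
# ready to be pasted into the telnet session from step 3.
subscriber_cmds() {
    local imsi=$1 msisdn=$2 ki=$3 opc=$4
    case $imsi in
        *[!0-9]*|"") echo "imsi must be numeric" >&2; return 1 ;;
    esac
    [ "${#imsi}" -eq 15 ] || { echo "imsi must have 15 digits" >&2; return 1; }
    echo "add authdat:imsi=${imsi},ki=${ki},amf=8000,algo=0,opc=${opc}"
    echo "add udmuser:imsi=${imsi},msisdn=${msisdn},sm_data=1-000001&cmnet&ims,eps_flag=1,kdc_flag=0"
}

# Example with the values from steps 5 and 6:
# subscriber_cmds 460003000100051 12307550051 \
#     00112233445566778899aabbccddeeff 11111111111111111111111111111111
```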

- The files smf_conf.yaml, as.yaml, pcscf.xml, pcscf.cfg and hosts need to be modified, as follows:

vim smf_conf.yaml

vim as.yaml

vim pcscf.xml

vim pcscf.cfg

vim hosts

- Modify sysMonitor.sh so that placeholders such as @NEWIP@ and @PCSCF@ in the files above are replaced with the actual IP

vim sysMonitor.sh

#!/bin/bash

isConfIp=0

isIptables=0

isRestarted=0

while true

do

cmii=$(kubectl get pods -o wide -n cmii | grep 'cmii-core')

#echo $cmii

length=$(echo $cmii | wc -L)

flag=0

isSrv=0

ip=""

ip1=""

ip2=""

ipId=0

isRunning=0

node=""

if [[ $length -gt 130 ]]

then

echo ""

echo -E "More than one cmii-core service"

srv01_stat=$(echo $cmii | awk '{print $3}')

srv01_name=$(echo $cmii | awk '{print $1}')

ip1=$(echo $cmii | awk '{print $6}')

srv02_stat=$(echo $cmii | awk '{print $12}')

srv02_name=$(echo $cmii | awk '{print $10}')

ip2=$(echo $cmii | awk '{print $15}')

flag=1

else

if [[ $length -gt 0 ]]

then

echo ""

echo -E "One cmii-core service available"

srv_name=$(echo $cmii | awk '{print $1}')

srv_stat=$(echo $cmii | awk '{print $3}')

ip=$(echo $cmii | awk '{print $6}')

else

echo "No available cmii-core, trying to create a new one ..."

#cp /home/cmii/smf_conf.yaml /nfs/

kubectl apply -f /home/cmii/cmcc_5gc.yaml

continue

fi

fi

if [[ $flag -eq 0 ]]

then

if [[ $srv_stat =~ "ContainerCreating" ]]

then

echo "Creating a new cmii-core service ... (Please Wait...)"

isSrv=1

elif [[ $srv_stat =~ "Running" ]]

then

echo $srv_name "("$ip")" "is" $srv_stat

isSrv=1

ipId=0

isRunning=1

isConfIp=1

node=$(echo $cmii | awk '{print $9}')

elif [[ $srv_stat =~ "CrashLoopBackOff" || $srv_stat =~ "Error" || $srv_stat =~ "Terminating" || $srv_stat =~ "NotReady" ]]

then

isConfIp=0

isRunning=0

if [[ $srv_stat =~ "CrashLoopBackOff" || $srv_stat =~ "Error" || $srv_stat =~ "NotReady" ]]

then

isSrv=1

ip=$(echo $cmii | awk '{print $8}')

ipId=0

fi

echo $srv_name "is abnormal"

#kubectl apply -f /home/cmii/cmcc_5gc.yaml

fi

else

echo $srv01_name "is" $srv01_stat

echo $srv02_name "is" $srv02_stat

if [[ $srv01_stat =~ "ContainerCreating" ]]

then

echo "Creating a new cmii-core service ... (Please Wait...)"

isSrv=1

elif [[ $srv01_stat =~ "Running" ]]

then

echo $srv01_name "is" $srv01_stat

isSrv=1

ipId=1

isRunning=1

isConfIp=1

node=$(echo $cmii | awk '{print $9}')

elif [[ $srv01_stat =~ "CrashLoopBackOff" || $srv01_stat =~ "Error" || $srv01_stat =~ "Terminating" || $srv01_stat =~ "NotReady" ]]

then

isConfIp=0

isRunning=0

if [[ $srv01_stat =~ "CrashLoopBackOff" || $srv01_stat =~ "Error" || $srv01_stat =~ "NotReady" ]]

then

isSrv=1

ip1=$(echo $cmii | awk '{print $8}')

ipId=1

fi

echo $srv01_name "is abnormal"

#if [[ $isSrv -eq 0 ]]

#then

#echo $srv01_name "is abnormal, Please create a new one ..."

#kubectl apply -f /home/cmii/cmcc_5gc.yaml

#fi

fi

if [[ $srv02_stat =~ "ContainerCreating" ]]

then

echo "Creating a new cmii-core service ... (Please Wait...)"

isSrv=1

elif [[ $srv02_stat =~ "Running" ]]

then

echo $srv02_name "is" $srv02_stat

isSrv=1

ipId=2

isRunning=1

isConfIp=1

node=$(echo $cmii | awk '{print $9}')

elif [[ $srv02_stat =~ "CrashLoopBackOff" || $srv02_stat =~ "Error" || $srv02_stat =~ "Terminating" || $srv02_stat =~ "NotReady" ]]

then

isConfIp=0

isRunning=0

if [[ $srv02_stat =~ "CrashLoopBackOff" || $srv02_stat =~ "Error" || $srv02_stat =~ "NotReady" ]]

then

isSrv=1

ip2=$(echo $cmii | awk '{print $8}')

ipId=2

fi

echo $srv02_name "is abnormal"

#if [[ $isSrv -eq 0 ]]

#then

# echo $srv02_name "is abnormal, Please create a new one ..."

# kubectl apply -f /home/cmii/cmcc_5gc.yaml

#fi

fi

fi

if [[ $isSrv -eq 0 ]]

then

echo "No available cmii-core, trying to create a new one ..."

#cp /home/cmii/smf_conf.yaml /nfs/

kubectl apply -f /home/cmii/cmcc_5gc.yaml

fi

if [[ $isConfIp -eq 0 ]]

then

ipF=""

#isConfIp=1

if [[ $ipId -eq 0 ]]

then

ipF=$ip

fi

if [[ $ipId -eq 1 ]]

then

ipF=$ip1

fi

if [[ $ipId -eq 2 ]]

then

ipF=$ip2

fi

echo "change ip to"

echo $ipF

ipS="127.0.1.121"

cp /home/cmii/amfcfg.yaml /home/cmii/amfcfg-tmp.yaml

cp /home/cmii/smf_conf.yaml /home/cmii/smf_conf-tmp.yaml

cp /home/cmii/upf_conf.yaml /home/cmii/upf_conf-tmp.yaml

cp /home/cmii/pcscf.xml /home/cmii/pcscf-tmp.xml

cp /home/cmii/pcscf.cfg /home/cmii/pcscf-tmp.cfg

cp /home/cmii/as.yaml /home/cmii/as-tmp.yaml

cp /home/cmii/hosts /home/cmii/hosts-tmp

sed -i "s/@AMFIP@/$ipF/g" /home/cmii/amfcfg-tmp.yaml

sed -i "s/@NEWIP@/$ipF/g" /home/cmii/smf_conf-tmp.yaml

sed -i "s/@NEWIP@/$ipF/g" /home/cmii/pcscf-tmp.xml

sed -i "s/@NEWIP@/$ipF/g" /home/cmii/pcscf-tmp.cfg

sed -i "s/@NEWIP@/$ipS/g" /home/cmii/as-tmp.yaml

sed -i "s/@N3IP@/$ipF/g" /home/cmii/upf_conf-tmp.yaml

sed -i "s/@PCSCF@/$ipF/g" /home/cmii/hosts-tmp

cp /home/cmii/amfcfg-tmp.yaml /nfs/amfcfg.yaml

cp /home/cmii/smf_conf-tmp.yaml /nfs/smf_conf.yaml

cp /home/cmii/upf_conf-tmp.yaml /nfs/upf_conf.yaml

cp /home/cmii/pcscf-tmp.xml /nfs/ims/pcscf/pcscf.xml

cp /home/cmii/pcscf-tmp.cfg /nfs/ims/pcscf/pcscf.cfg

cp /home/cmii/as-tmp.yaml /nfs/udm/as.yaml

#/nfs/ims/tools/modipplmn.sh 127.0.1.333 460 00

/nfs/ims/tools/modipplmn.sh $ipS 460 00

fi

if [[ -n $node ]]

then

#if [[ $isIptables -eq 1 ]]

#then

# continue

#fi

echo $node

iptablesRet=$(iptables -L -t nat --line-number | grep sctp)

if [[ $iptablesRet == "" ]]

then

if [[ $node == "kubeedge-edge01" ]]

then

#iptables -D OUTPUT 5 -t nat

iptables -t nat -A OUTPUT -d 192.168.112.64 -p sctp -j DNAT --to-destination 192.168.112.65

fi

if [[ $node == "kubeedge-edge02" ]]

then

#iptables -D OUTPUT 5 -t nat

iptables -t nat -A OUTPUT -d 192.168.112.64 -p sctp -j DNAT --to-destination 192.168.112.168

fi

else

ipp=$(echo $iptablesRet | awk '{print $7}')

pa="to:"

ippp=${ipp#*${pa}}

if [[ $node == "kubeedge-edge01" ]]

then

if [[ "192.168.112.65" == $ippp ]]

then

echo "iptables is ready ..."

else

iptables -D OUTPUT 5 -t nat

iptables -t nat -A OUTPUT -d 192.168.112.64 -p sctp -j DNAT --to-destination 192.168.112.65

fi

fi

if [[ $node == "kubeedge-edge02" ]]

then

if [[ "192.168.112.168" == $ippp ]]

then

echo "iptables is ready ..."

else

iptables -D OUTPUT 5 -t nat

iptables -t nat -A OUTPUT -d 192.168.112.64 -p sctp -j DNAT --to-destination 192.168.112.168

fi

fi

fi

isIptables=1

else

echo "?"

fi

if [[ $isRunning -eq 1 ]]

then

if [[ $isRestarted -eq 0 ]]

then

echo "Restart containers ..."

if [[ $node == "kubeedge-edge01" ]]

then

sshpass -p 'cmii' ssh -t cmii@192.168.112.65 'sudo /home/cmii/restartC.sh'

isRestarted=1

fi

if [[ $node == "kubeedge-edge02" ]]

then

sshpass -p 'cmii' ssh -t cmii@192.168.112.168 'sudo /home/cmii/restartC.sh'

isRestarted=1

fi

fi

fi

sleep 1

done
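The iptables part of the loop deletes rule number 5 of the OUTPUT chain by position, which removes the wrong rule if the chain order ever changes. A possible alternative, sketched here, checks for the exact rule with iptables -C and deletes stale SCTP DNAT rules by their specification. The function name ensure_sctp_dnat and the IPT variable (used to swap in a stub for a dry run) are assumptions for illustration. The sketch also assumes that iptables -S prints the destination as an address with a /32 suffix.

```shell
#!/bin/bash
# IPT can be overridden for a dry run (hypothetical knob).
IPT=${IPT:-iptables}

# ensure_sctp_dnat VIP TARGET
# Make sure locally generated SCTP traffic to VIP is redirected to TARGET,
# and that no older redirect for VIP is left behind.
ensure_sctp_dnat() {
    local vip=$1 target=$2
    # -C succeeds only if exactly this rule already exists.
    if "$IPT" -t nat -C OUTPUT -d "$vip" -p sctp -j DNAT --to-destination "$target" 2>/dev/null; then
        echo "iptables is ready ..."
        return 0
    fi
    # Delete any existing SCTP rule for VIP by specification, not by number.
    "$IPT" -t nat -S OUTPUT 2>/dev/null | grep -F -- "-d $vip/32 -p sctp" |
    while read -r _ spec; do
        # $spec is the listed rule without its leading "-A";
        # word splitting is intended here.
        "$IPT" -t nat -D $spec
    done
    "$IPT" -t nat -A OUTPUT -d "$vip" -p sctp -j DNAT --to-destination "$target"
}

# Example, matching the addresses in sysMonitor.sh:
# [[ $node == "kubeedge-edge01" ]] && ensure_sctp_dnat 192.168.112.64 192.168.112.65
# [[ $node == "kubeedge-edge02" ]] && ensure_sctp_dnat 192.168.112.64 192.168.112.168
```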

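- After the pod is running, the smf container inside it must be restarted for the modified configuration to take effect

- Deploy the script restartC.sh on the corresponding node host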

vim restartC.sh

#!/bin/bash

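# Names of the containers to match

container_names=("smf")

# Run docker ps and save the result in a variable

output=$(docker ps --format "{{.Names}}")

# Iterate over the list of container names

for name in "${container_names[@]}"; do

# Use grep to pick the containers whose names contain the given string

matching_containers=$(echo "${output}" | grep -E "${name}")

# If matching containers were found, restart them one by one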

if [ -n "${matching_containers}" ]; then

IFS=$'\n' read -rd '' -a containers_to_restart <<<"${matching_containers}"

for container in "${containers_to_restart[@]}"; do

docker restart "${container}"

echo "Container ${container} has been restarted."

done

else

echo "No container containing '${name}' found."

fi

done
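Matching on a substring of the container name also restarts any unrelated container whose name happens to contain "smf". As a sketch of a narrower match, docker ps can filter on the label that the kubelet normally attaches to each container when Docker is the runtime (io.kubernetes.container.name). Whether that label is present on a given node is an assumption, and the function name restart_k8s_container is illustrative.

```shell
#!/bin/bash
# restart_k8s_container NAME
# Restart every running container whose Kubernetes container name is NAME.
restart_k8s_container() {
    local name=$1 names c
    names=$(docker ps --filter "label=io.kubernetes.container.name=${name}" --format '{{.Names}}')
    if [ -z "$names" ]; then
        echo "No container named '${name}' found." >&2
        return 1
    fi
    printf '%s\n' "$names" | while IFS= read -r c; do
        docker restart "$c" >/dev/null && echo "Container ${c} has been restarted."
    done
}

# Example:
# restart_k8s_container smf
```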

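- Note that the redis connection address in mmtel.cfg must also be changed

- Final result:

- Note: the P-CSCF IP address configured in the SMF must not be 127.x.x.x. This address is the destination the UE ultimately uses for SIP registration. If it is set to 127.x.x.x, the packets cannot travel from the UE over the air interface to the network side, and a capture on the network side shows no SIP packets at all.

Appendix: packet capture of the terminal's VoNR voice call signaling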
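A small guard in the automation can catch this mistake before the configuration is written. The sketch below tests whether an address lies in 127.0.0.0/8. The function name is_loopback_ipv4 is illustrative, and the check is a plain pattern match rather than a full IPv4 validation.

```shell
#!/bin/bash
# is_loopback_ipv4 ADDR
# Returns 0 if ADDR looks like an IPv4 loopback address (127.x.x.x).
is_loopback_ipv4() {
    case $1 in
        127.*.*.*) return 0 ;;
        *)         return 1 ;;
    esac
}

# Example: refuse to write a loopback P-CSCF address into smf_conf.yaml
# if is_loopback_ipv4 "$ipF"; then
#     echo "P-CSCF address $ipF is a loopback address, not reachable by the UE" >&2
# fi
```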
